02-VGNet Learning Notes

- The following are personal learning notes for reference only

- 1. What percentage of the parameters are less for three 3*3 convolutions in VGG than for one 7*7 convolution?(Assuming both input and output channels are C)

- 2. What is the difference between VGG-16 and VGG-19?

- 3. What are your inspirations after reading this paper?

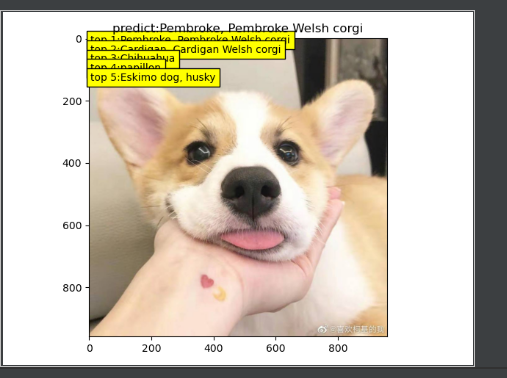

- 4. Find a picture from the Internet, execute vgg16, observe the category of top5 output, and take a screenshot of the output

The following are personal learning notes for reference only

1. What percentage of the parameters are less for three 3*3 convolutions in VGG than for one 7*7 convolution?(Assuming both input and output channels are C)

1. For three 33 convolution layers, the parameter is 333C^2;

For a 77 convolution layer, the parameter is 7 * 7C^2.

The parameter reduction from the former is (49-27)/49 = 44.89%

2. What is the difference between VGG-16 and VGG-19?

1. On the network structure, VGG-19 adds a 3*3 convolution layer to the 3rd, 4th and 5th blocks of VGG-16, respectively.

2. Under the same training and testing conditions, the accuracy of VGG-19 is slightly higher than that of VGG-16.

3. What are your inspirations after reading this paper?

1. Enlightenment on the experimental methods of the paper

- In the process of experimentation, to train an optimal model, it is not possible to get one at a time. Contrast experiments are needed.

- As shown in Table3-Table6 in VGG, we compare the results of experiments with several models, analyze the association and hidden information, and then adjust the network.

- Effective models are gradually left behind in the experiment to further analyze the factors affecting accuracy

2. Use multi-model fusion to improve accuracy

3. Using three 33 convolution cores instead of one 77 convolution core reduces many parameters

4. Find a picture from the Internet, execute vgg16, observe the category of top5 output, and take a screenshot of the output

---------------------------------------------------------------- Layer (type) Output Shape Param # ================================================================ Conv2d-1 [-1, 64, 224, 224] 1,792 ReLU-2 [-1, 64, 224, 224] 0 Conv2d-3 [-1, 64, 224, 224] 36,928 ReLU-4 [-1, 64, 224, 224] 0 MaxPool2d-5 [-1, 64, 112, 112] 0 Conv2d-6 [-1, 128, 112, 112] 73,856 ReLU-7 [-1, 128, 112, 112] 0 Conv2d-8 [-1, 128, 112, 112] 147,584 ReLU-9 [-1, 128, 112, 112] 0 MaxPool2d-10 [-1, 128, 56, 56] 0 Conv2d-11 [-1, 256, 56, 56] 295,168 ReLU-12 [-1, 256, 56, 56] 0 Conv2d-13 [-1, 256, 56, 56] 590,080 ReLU-14 [-1, 256, 56, 56] 0 Conv2d-15 [-1, 256, 56, 56] 590,080 ReLU-16 [-1, 256, 56, 56] 0 MaxPool2d-17 [-1, 256, 28, 28] 0 Conv2d-18 [-1, 512, 28, 28] 1,180,160 ReLU-19 [-1, 512, 28, 28] 0 Conv2d-20 [-1, 512, 28, 28] 2,359,808 ReLU-21 [-1, 512, 28, 28] 0 Conv2d-22 [-1, 512, 28, 28] 2,359,808 ReLU-23 [-1, 512, 28, 28] 0 MaxPool2d-24 [-1, 512, 14, 14] 0 Conv2d-25 [-1, 512, 14, 14] 2,359,808 ReLU-26 [-1, 512, 14, 14] 0 Conv2d-27 [-1, 512, 14, 14] 2,359,808 ReLU-28 [-1, 512, 14, 14] 0 Conv2d-29 [-1, 512, 14, 14] 2,359,808 ReLU-30 [-1, 512, 14, 14] 0 MaxPool2d-31 [-1, 512, 7, 7] 0 AdaptiveAvgPool2d-32 [-1, 512, 7, 7] 0 Linear-33 [-1, 4096] 102,764,544 ReLU-34 [-1, 4096] 0 Dropout-35 [-1, 4096] 0 Linear-36 [-1, 4096] 16,781,312 ReLU-37 [-1, 4096] 0 Dropout-38 [-1, 4096] 0 Linear-39 [-1, 1000] 4,097,000 ================================================================ Total params: 138,357,544 Trainable params: 138,357,544 Non-trainable params: 0 ---------------------------------------------------------------- Input size (MB): 0.57 Forward/backward pass size (MB): 218.78 Params size (MB): 527.79 Estimated Total Size (MB): 747.15 ---------------------------------------------------------------- img: Little Koki.jpg is: Pembroke, Pembroke Welsh corgi 263 n02113023 Dog, Pembroke, Pembroke Welsh corgi time consuming:0.90s