When developing high-concurrency systems, three tools are commonly used to protect the system: caching, degradation, and rate limiting.

- Caching: speeds up access and increases the load the system can handle. It is often described as a silver bullet against high-concurrency traffic;

- Degradation: when a service fails or starts to hurt the performance of the core flow, it is temporarily disabled and re-enabled once the peak has passed or the problem is fixed;

- Rate limiting: some scenarios cannot be solved by caching or degradation, such as scarce resources (flash sales, rush purchases), write services (comments, orders), and frequent complex queries (the last few pages of comments). These scenarios need a way to limit the concurrency or the number of requests.

1 Solutions to the service avalanche effect

- Service degradation: under high concurrency, avoid keeping users waiting and return a friendly error message to the client directly.

- Circuit breaking: under high concurrency, once the service reaches the maximum load it can bear, further access is rejected directly and the service is degraded.

- Service isolation: isolate services from one another so that a failure in one does not cascade into the others.

- Service rate limiting: under high concurrency, once the service can no longer cope, apply a rate limiting mechanism (counter (sliding window counting), leaky bucket algorithm, token bucket (RateLimiter)).

2 High-concurrency rate limiting solutions

High-concurrency rate limiting solutions include rate limiting algorithms (token bucket, leaky bucket, counter) and application-layer rate limiting (Nginx).

3 Rate limiting algorithms

Common rate limiting algorithms include the token bucket and the leaky bucket. A counter can also be used to implement rate limiting.

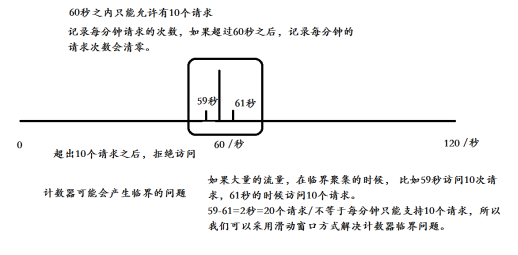

3.1 Counter algorithm

3.1.1 Counter

The counter is the simplest rate limiting algorithm and the easiest to implement. For example, suppose an interface must not receive more than 10 requests in one minute. We set up a counter at the start and add 1 to it for each request. If the counter exceeds 10 and the request arrives within 1 minute of the first request, there are too many requests and the request is rejected. If the request arrives more than 1 minute after the first request, the counter is reset and a new window begins.

The counter has a boundary problem. Suppose 10 requests arrive at 59s and another 10 arrive at 61s. Each one-minute window sees only 10 requests, so neither batch is limited, yet 20 requests were served within 2 seconds, twice the intended limit. The sliding window method solves this boundary problem.

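import java.util.concurrent.ExecutorService;

import java.util.concurrent.Executors;

import java.util.concurrent.atomic.AtomicInteger;

/**

* Function description: a hand-written fixed-window counter <br>

*/

public class LimitService {

private final int limitCount = 60; // Maximum number of requests allowed per window

private final AtomicInteger atomicInteger = new AtomicInteger(0); // Number of requests in the current window

private long start = System.currentTimeMillis(); // Start time of the current window, in milliseconds

private final long interval = 60 * 1000L; // Window length: 60 seconds, expressed in milliseconds

// synchronized so that resetting the window and counting a request happen atomically

public synchronized boolean acquire() {

long newTime = System.currentTimeMillis();

if (newTime > (start + interval)) {

// The previous window has ended: start a new one and reset the counter

start = newTime;

atomicInteger.set(0);

}

// Count this request, then allow it only while the window total stays within the limit

return atomicInteger.incrementAndGet() <= limitCount;

}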

static LimitService limitService = new LimitService();

public static void main(String[] args) {

ExecutorService newCachedThreadPool = Executors.newCachedThreadPool();

for (int i = 1; i < 100; i++) {

final int tempI = i;

newCachedThreadPool.execute(new Runnable() {

public void run() {

if (limitService.acquire()) {

System.out.println("You're not restricted,Logic can be accessed normally i:" + tempI);

} else {

System.out.println("You've been restricted i:" + tempI);

}

}

});

}

}

}

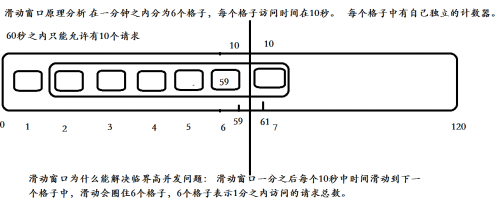

3.1.2 Sliding window counting

Sliding window counting is used in many scenarios, such as rate limiting to prevent a system avalanche. Compared with the simple counter, the sliding window is smoother and automatically evens out spikes.

The sliding window works as follows: on every request, check whether the total number of requests in the previous N time slices exceeds the configured threshold; if it does not, add 1 to the request count of the current time slice.

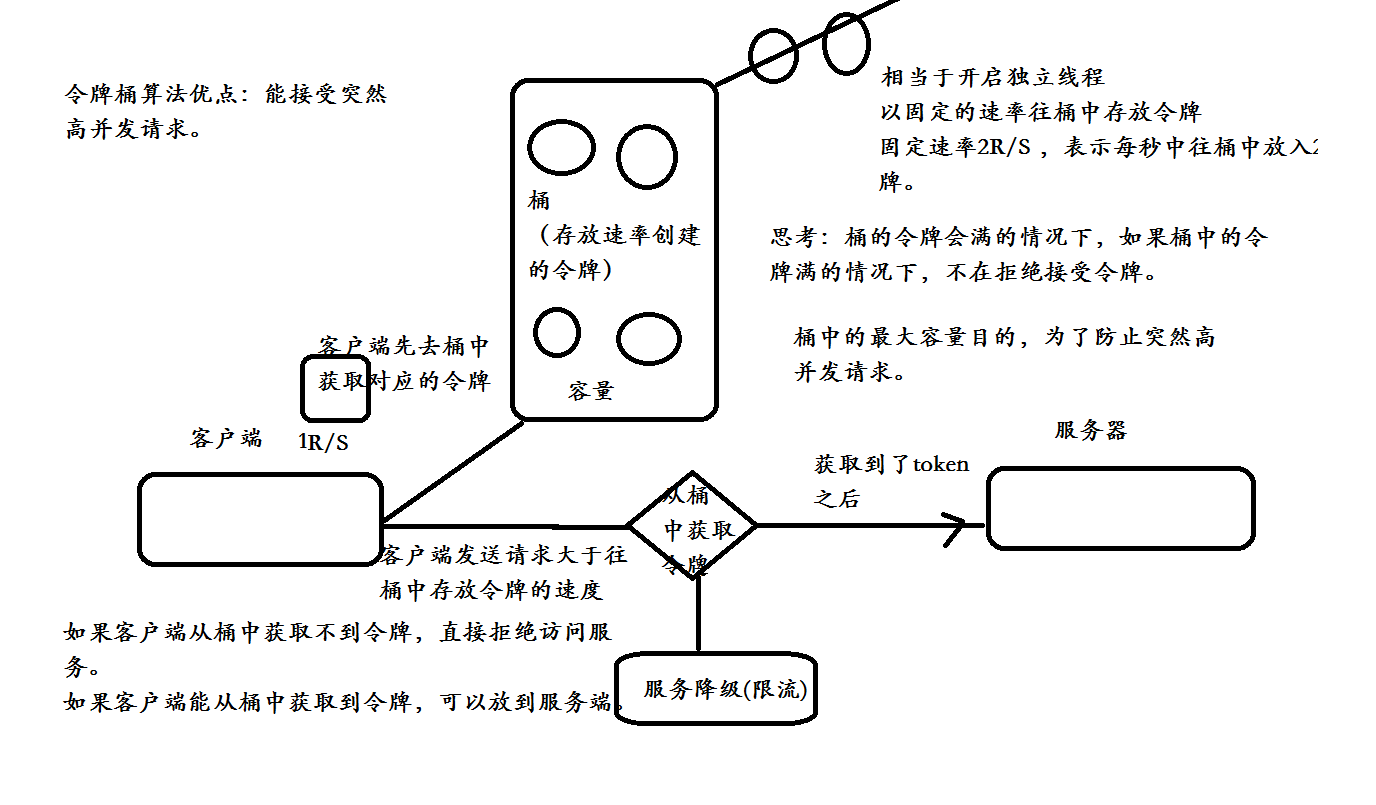
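The sliding window described above can be sketched roughly as follows. This is a minimal illustration, not the implementation behind the figure: the class name `SlidingWindowLimiter`, its constructor parameters, and the choice to pass the current time in as an argument (so the behaviour is easy to reproduce) are all assumptions made for this example.

```java
import java.util.Arrays;

/** Sliding-window counter: the window is split into equal time slices (hypothetical sketch). */
final class SlidingWindowLimiter {
    private final int limit;        // maximum requests allowed across the whole window
    private final long sliceMillis; // length of one time slice in milliseconds
    private final int[] counts;     // request count of each slice (used as a ring buffer)
    private final long[] sliceIds;  // which time slice each slot currently holds

    SlidingWindowLimiter(int limit, long windowMillis, int slices) {
        this.limit = limit;
        this.sliceMillis = windowMillis / slices; // assumes windowMillis divides evenly
        this.counts = new int[slices];
        this.sliceIds = new long[slices];
        Arrays.fill(sliceIds, -1L); // -1 marks a slot that has never been used
    }

    /** Returns true if the request arriving at nowMillis is allowed. */
    synchronized boolean tryAcquire(long nowMillis) {
        long currentId = nowMillis / sliceMillis;
        int slot = (int) (currentId % counts.length);
        if (sliceIds[slot] != currentId) {
            // The slot still holds a slice that has slid out of the window: reuse it
            sliceIds[slot] = currentId;
            counts[slot] = 0;
        }
        // Sum the slices that are still inside the window ending at the current slice
        long oldestId = currentId - counts.length + 1;
        int total = 0;
        for (int i = 0; i < counts.length; i++) {
            if (sliceIds[i] >= oldestId) {
                total += counts[i];
            }
        }
        if (total >= limit) {
            return false; // threshold reached: reject without counting the request
        }
        counts[slot]++;
        return true;
    }

    boolean tryAcquire() {
        return tryAcquire(System.currentTimeMillis());
    }
}
```

With a limit of 10 per minute and 6 slices, 10 requests at 59s fill the window, so a request at 61s is still rejected because the slice containing 59s has not yet slid out. More slices give a smoother limit at the cost of a little more bookkeeping.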

3.2 Token bucket algorithm

The token bucket algorithm uses a bucket of fixed capacity that stores tokens, with tokens added to the bucket at a fixed rate. It works as follows:

- Assuming a limit of 2 r/s, one token is added to the bucket every 500 milliseconds;

- The bucket holds at most b tokens. When the bucket is full, newly added tokens are discarded;

- When a packet of n bytes arrives, n tokens are removed from the bucket and the packet is sent to the network;

- If the bucket holds fewer than n tokens, no tokens are removed and the packet is throttled (either discarded or held in a buffer).

Advantage of the token bucket algorithm:

It can absorb sudden bursts of highly concurrent requests.
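The four rules above can be sketched as a small class. This is only an illustration under stated assumptions: `TokenBucket`, `tryAcquire`, and `refillIntervalMillis` are hypothetical names, the bucket is assumed to start full, and the current time is passed in as a parameter so the behaviour is reproducible. Tokens are credited lazily when a request arrives rather than by a background timer.

```java
/** Token bucket: tokens accumulate at a fixed rate up to a fixed capacity (hypothetical sketch). */
final class TokenBucket {
    private final long capacity;             // b: maximum number of tokens the bucket holds
    private final long refillIntervalMillis; // one token is added every this many milliseconds
    private long tokens;                     // tokens currently in the bucket
    private long lastRefillMillis;           // time up to which tokens have been credited

    TokenBucket(long capacity, long refillIntervalMillis, long nowMillis) {
        this.capacity = capacity;
        this.refillIntervalMillis = refillIntervalMillis;
        this.tokens = capacity; // assumption: the bucket starts full
        this.lastRefillMillis = nowMillis;
    }

    /** Removes n tokens and returns true, or removes nothing and returns false. */
    synchronized boolean tryAcquire(long n, long nowMillis) {
        refill(nowMillis);
        if (tokens < n) {
            return false; // not enough tokens: the request is throttled
        }
        tokens -= n;
        return true;
    }

    /** Credits the tokens that became due since the last refill, capped at capacity. */
    private void refill(long nowMillis) {
        long newTokens = (nowMillis - lastRefillMillis) / refillIntervalMillis;
        if (newTokens > 0) {
            tokens = Math.min(capacity, tokens + newTokens); // tokens beyond capacity are discarded
            lastRefillMillis += newTokens * refillIntervalMillis;
        }
    }
}
```

For the 2 r/s example, `new TokenBucket(4, 500, now)` allows a burst of up to 4 requests at once and then one further request every 500 milliseconds.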

3.2.1 Implementing token bucket rate limiting with RateLimiter

RateLimiter is a token-bucket-based implementation class provided by Guava. It makes rate limiting easy and lets you adjust the token generation rate to suit the actual state of the system.

Typical uses include: limiting rush purchases to keep the system from crashing; limiting the number of calls to an interface or service within a given time (for example, some third-party services limit how often a user may call them); and limiting network speed, that is, how many bytes may be uploaded or downloaded per unit of time.

Let's look at a simple example. First, add the Guava Maven dependency.

<dependencies>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-web</artifactId>

</dependency>

<dependency>

<groupId>com.google.guava</groupId>

<artifactId>guava</artifactId>

<version>25.1-jre</version>

</dependency>

</dependencies>

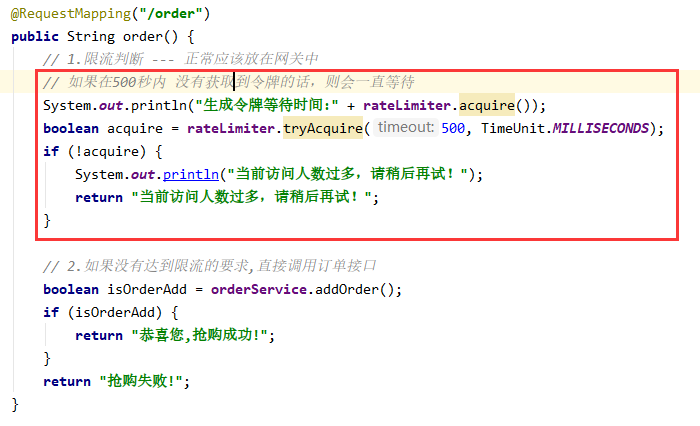

Implement token bucket algorithm using RateLimiter:

package com.snow.limit.controller;

import com.google.common.util.concurrent.RateLimiter;

import com.snow.limit.service.OrderService;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.web.bind.annotation.RequestMapping;

import org.springframework.web.bind.annotation.RestController;

import java.util.concurrent.TimeUnit;

/**

* Function Description: use RateLimiter to realize token bucket algorithm

*

*/

@RestController

public class IndexController {

@Autowired

private OrderService orderService;

// Explanation: 1.0 means that 1 token is generated every second and stored in the bucket

RateLimiter rateLimiter = RateLimiter.create(1.0);

// Order request

@RequestMapping("/order")

public String order() {

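// 1. Rate limiting check - normally this belongs in the gateway

// Demo only: acquire() blocks until a token is available and returns the seconds spent waiting.

// It consumes a token itself, so remove this line in real code.

System.out.println("Generate token wait time:" + rateLimiter.acquire());

// Wait at most 500 milliseconds for a token; if none is obtained in that time, give up and degrade

boolean acquire = rateLimiter.tryAcquire(500, TimeUnit.MILLISECONDS);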

if (!acquire) {

System.out.println("There are too many visitors at present. Please try again later!");

return "There are too many visitors at present. Please try again later!";

}

// 2. The request was not rate limited, so call the order interface directly

boolean isOrderAdd = orderService.addOrder();

if (isOrderAdd) {

return "Congratulations,Successful rush purchase!";

}

return "Rush purchase failed!";

}

}

Test: under highly concurrent access, the console prints:

Generate token wait time:0.0

db....Operating order table database...

Generate token wait time:0.902096

There are too many visitors at present. Please try again later!

Generate token wait time:1.80186

There are too many visitors at present. Please try again later!

Generate token wait time:2.701986

There are too many visitors at present. Please try again later!

Generate token wait time:3.601859

There are too many visitors at present. Please try again later!

Generate token wait time:4.501148

There are too many visitors at present. Please try again later!

Generate token wait time:5.40092

There are too many visitors at present. Please try again later!

Generate token wait time:6.300638

There are too many visitors at present. Please try again later!

Generate token wait time:7.2005

There are too many visitors at present. Please try again later!

Generate token wait time:8.10059

There are too many visitors at present. Please try again later!

RateLimiter enables service degradation

3.2.2 Why service rate limiting should be implemented

Examples: flash-sale rush purchases, service security, the avalanche effect, and so on.

It filters out sudden surges in traffic.

The ultimate purpose of rate limiting is to protect services.

Rate limiting algorithms:

- Counter algorithm (drawback of the traditional counter: the boundary problem, solved by the sliding window counter)

- Token bucket algorithm

- Leaky bucket algorithm

- Application-layer rate limiting (Nginx, part of operations configuration)

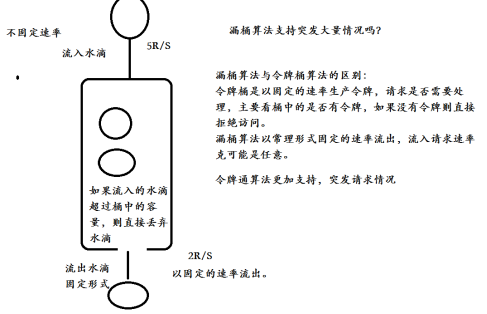

3.3 Leaky bucket algorithm

The leaky bucket as a meter can be used for traffic shaping and traffic policing. The algorithm works as follows:

- A leaky bucket of fixed capacity lets water drops flow out at a constant fixed rate;

- If the bucket is empty, no drops flow out;

- Water can flow into the bucket at any rate;

- If the incoming water exceeds the capacity of the bucket, the excess overflows (is discarded), and the capacity of the bucket remains unchanged.
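A rough sketch of the leaky bucket used as a meter, following the four rules above. The names `LeakyBucket`, `tryAccept`, and `leakIntervalMillis` are hypothetical, each request is assumed to add exactly one drop, and the current time is passed in so the behaviour is reproducible. Leaking is computed lazily when a request arrives instead of by a background thread.

```java
/** Leaky bucket as a meter: drops leak out at a constant rate (hypothetical sketch). */
final class LeakyBucket {
    private final long capacity;           // maximum number of drops the bucket can hold
    private final long leakIntervalMillis; // one drop leaks out every this many milliseconds
    private long water;                    // drops currently in the bucket
    private long lastLeakMillis;           // time up to which leaking has been applied

    LeakyBucket(long capacity, long leakIntervalMillis, long nowMillis) {
        this.capacity = capacity;
        this.leakIntervalMillis = leakIntervalMillis;
        this.water = 0; // assumption: the bucket starts empty
        this.lastLeakMillis = nowMillis;
    }

    /** Adds one drop for the request; returns false if the bucket would overflow. */
    synchronized boolean tryAccept(long nowMillis) {
        leak(nowMillis);
        if (water >= capacity) {
            return false; // overflow: the drop is discarded
        }
        water++;
        return true;
    }

    /** Removes the drops that have leaked out since the last call; never goes below empty. */
    private void leak(long nowMillis) {
        long leaked = (nowMillis - lastLeakMillis) / leakIntervalMillis;
        if (leaked > 0) {
            water = Math.max(0, water - leaked);
            lastLeakMillis += leaked * leakIntervalMillis;
        }
    }
}
```

Unlike the token bucket, the bucket here fills as requests arrive and drains at a fixed rate, so once it is full the accepted rate cannot exceed the leak rate.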

Comparison between the token bucket and the leaky bucket:

- The token bucket adds tokens to the bucket at a fixed rate. Whether a request is processed depends on whether the bucket holds enough tokens. When the number of tokens drops to zero, new requests are rejected;

- The leaky bucket lets requests flow out at a constant fixed rate, while requests may flow in at any rate. When the accumulated incoming requests reach the bucket capacity, new incoming requests are rejected;

- The token bucket limits the average inflow rate and allows a certain degree of burst traffic: requests are processed as long as tokens are available, and 3 or 4 tokens can be taken at a time;

- The leaky bucket limits the outflow to a constant rate (the outflow rate is a fixed constant, for example always 1, never 1 this time and 2 the next), which smooths a bursty inflow;

- In short, the token bucket allows a certain degree of burst, while the main purpose of the leaky bucket is to smooth the inflow rate;

The two algorithms can share the same implementation with the direction reversed. For the same parameters, the rate limiting effect is the same.

In addition, counters are sometimes used for rate limiting, mainly to limit total concurrency, such as the concurrency of a database connection pool, a thread pool, or a flash sale. Once the total number of global requests, or the total number of requests in a given period, reaches the configured threshold, further requests are limited. This is a simple, crude limit on total quantity, not a limit on average rate.

To recap the leaky bucket: a bucket of fixed capacity lets water drops flow out at a constant rate.

- If there are no drops in the bucket, none flow out;

- If the incoming drops exceed the capacity of the bucket, they overflow, and the requests they represent are denied access: the service degradation method is called directly, and the capacity of the bucket does not change.

3.4 Difference between the leaky bucket algorithm and the token bucket algorithm

The main difference is that the leaky bucket algorithm strictly limits the data transmission rate, while the token bucket algorithm limits the average data transmission rate and also allows some degree of burst transmission.

In the token bucket algorithm, as long as there are tokens in the bucket, data may be transmitted in bursts until the user-configured threshold is reached. It is therefore suitable for traffic with burst characteristics.

- Token bucket: allows a certain degree of burst

- Leaky bucket: smooths the inflow rate

4 A hand-written RateLimiter rate limiting annotation framework

Add an annotation to the methods that need rate limiting.

A gateway generally intercepts all interfaces, whereas we only need rate limiting on flash-sale interfaces and other high-traffic interfaces.

Implementation idea of the RateLimiter rate limiting annotation framework:

- Define a custom annotation

- Integrate Spring AOP

- Perform service degradation in around advice

4.1 adding dependencies

<dependencies>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-web</artifactId>

</dependency>

<dependency>

<groupId>com.google.guava</groupId>

<artifactId>guava</artifactId>

<version>25.1-jre</version>

</dependency>

<!-- springboot integration AOP -->

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-aop</artifactId>

</dependency>

</dependencies>

4.2 user defined annotation

package com.snow.limit.annotation;

import java.lang.annotation.Documented;

import java.lang.annotation.ElementType;

import java.lang.annotation.Retention;

import java.lang.annotation.RetentionPolicy;

import java.lang.annotation.Target;

/**

* Function description: custom service rate limiting annotation, modelled on RateLimiter <br>

*

*/

@Target({ ElementType.METHOD })

@Retention(RetentionPolicy.RUNTIME)

@Documented

public @interface ExtRateLimiter {

/**

* Number of tokens added to the token bucket per second

*/

double permitsPerSecond();

/**

* In the specified number of milliseconds, if no token is obtained, the service degradation process will be carried out directly

*/

long timeout();

}

4.3 Wrapping RateLimiter

The custom annotation wraps a RateLimiter instance. Usage on a controller method:

// Add 1 token to the token bucket per second; wait at most 500 milliseconds for a token

@ExtRateLimiter(permitsPerSecond = 1.0, timeout = 500)

@RequestMapping("/findIndex")

public String findIndex() {

System.out.println("findIndex" + System.currentTimeMillis());

return "findIndex" + System.currentTimeMillis();

}

4.4 writing AOP

package com.snow.limit.aop;

import java.io.IOException;

import java.io.PrintWriter;

import java.lang.reflect.Method;

import java.util.Map;

import java.util.concurrent.ConcurrentHashMap;

import java.util.concurrent.TimeUnit;

import javax.servlet.http.HttpServletRequest;

import javax.servlet.http.HttpServletResponse;

import com.snow.limit.annotation.ExtRateLimiter;

import org.aspectj.lang.ProceedingJoinPoint;

import org.aspectj.lang.annotation.Around;

import org.aspectj.lang.annotation.Aspect;

import org.aspectj.lang.annotation.Pointcut;

import org.aspectj.lang.reflect.MethodSignature;

import org.springframework.stereotype.Component;

import org.springframework.web.context.request.RequestContextHolder;

import org.springframework.web.context.request.ServletRequestAttributes;

import com.google.common.util.concurrent.RateLimiter;

/**

* Function description: use AOP around advice to intercept all Spring MVC requests and check whether the request method carries @ExtRateLimiter <br>

* 1. Check whether the request method carries @ExtRateLimiter <br>

* 2. If the method carries the @ExtRateLimiter annotation <br>

* 3. Use reflection to read the parameters of the @ExtRateLimiter annotation on the method <br>

* 4. Call the native RateLimiter code to create a token bucket <br>

* 5. If acquiring a token times out, call the service degradation method directly (you need to define it yourself) <br>

* 6. If a token is obtained, proceed directly to the actual request method <br>

* AOP can be configured in two ways: with annotations or with XML <br>

*

*/

@Aspect

@Component

public class RateLimiterAop {

// One RateLimiter (token bucket) per request URI, so identical requests share the same bucket

private Map<String, RateLimiter> rateHashMap = new ConcurrentHashMap<>();

/**

* Define pointcuts to intercept classes under the com.snow.limit.controller package

*/

@Pointcut("execution(public * com.snow.limit.controller.*.*(..))")

public void rlAop() {

}

/**

* Use AOP around advice to intercept all Spring MVC requests and check whether the request method carries the ExtRateLimiter annotation

*

* @param proceedingJoinPoint

* @return

* @throws Throwable

*/

@Around("rlAop()")

public Object doBefore(ProceedingJoinPoint proceedingJoinPoint) throws Throwable {

// 1. Get the method intercepted by AOP

Method sinatureMethod = getSinatureMethod(proceedingJoinPoint);

if (sinatureMethod == null) {

// No method could be resolved, so there is nothing to rate limit

return null;

}

// 2. Use the reflection mechanism of java to obtain the parameters of custom annotations on the interception method

ExtRateLimiter extRateLimiter = sinatureMethod.getDeclaredAnnotation(ExtRateLimiter.class);

if (extRateLimiter == null) {

// Directly into the actual request method

return proceedingJoinPoint.proceed();

}

double permitsPerSecond = extRateLimiter.permitsPerSecond(); // Get permitsPerSecond parameter

long timeout = extRateLimiter.timeout(); // Get timeout parameter

// 3. Look up or create the RateLimiter for this request, so that each request URI maps to exactly one RateLimiter

// For example /index -> RateLimiter, /order -> RateLimiter; the map key is the request URI

String requestURI = getRequestURI();

// computeIfAbsent is atomic, so concurrent first requests cannot create two limiters for the same URI

RateLimiter rateLimiter = rateHashMap.computeIfAbsent(requestURI, uri -> RateLimiter.create(permitsPerSecond));

// 4. Try to obtain a token within the timeout. If none is obtained, call the local service degradation method and do not enter the actual request method.

boolean tryAcquire = rateLimiter.tryAcquire(timeout, TimeUnit.MILLISECONDS);

if (!tryAcquire) {

// service degradation

fallback();

return null;

}

// 5. A token was obtained within the timeout, so proceed directly to the actual request method.

return proceedingJoinPoint.proceed();

}

private void fallback() throws IOException {

System.out.println("Enter \"service degradation\", please try again later!");

// Get response in AOP programming

ServletRequestAttributes attributes = (ServletRequestAttributes) RequestContextHolder.getRequestAttributes();

HttpServletResponse response = attributes.getResponse();

response.setHeader("Content-type", "text/html;charset=UTF-8");

// try-with-resources closes the writer even if writing fails

try (PrintWriter writer = response.getWriter()) {

writer.println("Enter \"service degradation\", please try again later!");

}

}

// Gets the URI of the request

private String getRequestURI() {

return getRequest().getRequestURI();

}

// Get request

private HttpServletRequest getRequest() {

ServletRequestAttributes attributes = (ServletRequestAttributes) RequestContextHolder.getRequestAttributes();

return attributes.getRequest();

}

// Get the method of AOP interception

private Method getSinatureMethod(ProceedingJoinPoint proceedingJoinPoint) {

MethodSignature signature = (MethodSignature) proceedingJoinPoint.getSignature();

// Get the method of AOP interception

Method method = signature.getMethod();

return method;

}

}

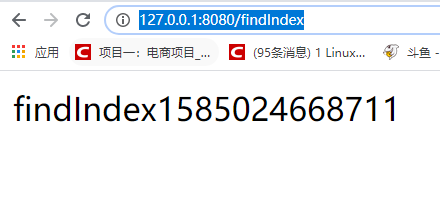

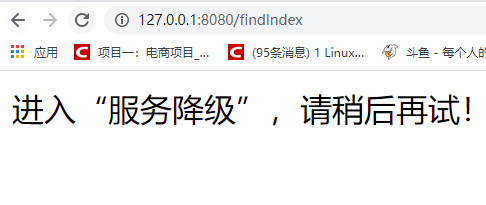

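4.5 Running result

Start the service and open http://127.0.0.1:8080/findIndex in a browser. When the page is refreshed quickly, requests that cannot obtain a token within 500 milliseconds receive the service degradation message.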

5 Rate limiting in detail

5.1 Limiting total concurrency / connections / requests

Every application system has a limit on the concurrency or number of requests it can handle; that is, there is always a TPS/QPS threshold. Beyond that threshold, the system stops responding to user requests or responds very slowly. It is therefore best to add overload protection so that a flood of requests cannot overwhelm the system.

If you have used Tomcat, its Connector configuration has the following parameters:

- acceptCount: if all Tomcat threads are busy, new connections are placed in a queue. Once the queue is full, further connections are rejected;

- maxConnections: the maximum number of connections at any instant. Connections beyond this number are queued;

- maxThreads: the maximum number of threads Tomcat can start to process requests. If the volume of requests far exceeds the maximum number of threads, the server may appear to hang.

See the official documentation for detailed configuration. MySQL (for example, max_connections) and Redis (for example, tcp-backlog) have similar settings that limit the number of connections.

5.2 Limiting total resources

If a resource is scarce (such as database connections or threads) and may be used by several systems, each application's use of it needs to be limited. Pooling techniques such as connection pools and thread pools limit the total amount of a resource. For example, if each application is allocated 100 database connections, an application can use at most 100 of them; beyond that limit it either waits or throws an exception.
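The "wait or throw an exception" behaviour can be illustrated without a real connection pool. The sketch below swaps in a `java.util.concurrent.Semaphore` as a stand-in for the pool; `ResourceLimiter` and `withResource` are hypothetical names invented for this example, not part of any pooling library.

```java
import java.util.concurrent.Semaphore;
import java.util.concurrent.TimeUnit;
import java.util.function.Supplier;

/** Caps how many callers may hold a scarce resource at once (hypothetical sketch). */
final class ResourceLimiter {
    private final Semaphore permits;

    ResourceLimiter(int maxResources) {
        this.permits = new Semaphore(maxResources);
    }

    /** Waits up to waitMillis for a free resource, runs the work, then returns the resource. */
    <T> T withResource(long waitMillis, Supplier<T> work) {
        boolean acquired;
        try {
            acquired = permits.tryAcquire(waitMillis, TimeUnit.MILLISECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while waiting for a resource", e);
        }
        if (!acquired) {
            // Limit exceeded and the wait timed out: fail instead of overloading the resource
            throw new IllegalStateException("no resource available within " + waitMillis + " ms");
        }
        try {
            return work.get();
        } finally {
            permits.release(); // always give the resource back
        }
    }
}
```

A real connection pool or thread pool applies the same idea internally: a fixed number of slots, a bounded wait, and an error when the wait runs out.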

5.3 Limiting the total concurrency / requests of an interface

If an interface may receive unexpected bursts of access and too much access could bring it down, as with a rush-purchase business, the total concurrency or number of requests of that interface needs to be limited. Because the granularity is fine, a separate threshold can be set for each interface. AtomicLong in Java can be used for this kind of rate limiting.
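A minimal sketch of limiting the in-flight requests of one interface with AtomicLong. The class name `ConcurrencyLimiter`, the `call` method, and the use of a fallback supplier for rejected requests are assumptions made for this example.

```java
import java.util.concurrent.atomic.AtomicLong;
import java.util.function.Supplier;

/** Rejects calls once too many are already in flight (hypothetical sketch). */
final class ConcurrencyLimiter {
    private final AtomicLong inFlight = new AtomicLong(0); // requests currently being processed
    private final long maxConcurrent;                      // threshold for this interface

    ConcurrencyLimiter(long maxConcurrent) {
        this.maxConcurrent = maxConcurrent;
    }

    /** Runs task if the interface is below its threshold, otherwise runs fallback. */
    <T> T call(Supplier<T> task, Supplier<T> fallback) {
        try {
            if (inFlight.incrementAndGet() > maxConcurrent) {
                return fallback.get(); // over the limit: reject or degrade
            }
            return task.get();
        } finally {
            inFlight.decrementAndGet(); // undo the increment whether or not the task ran
        }
    }
}
```

Each interface would hold its own limiter instance, for example `limiter.call(() -> placeOrder(), () -> "sold out")`, where `placeOrder` stands for whatever business method is being protected.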

This approach suits services where rate limiting does no harm to the business, or services that need overload protection. In a rush purchase, for example, users are either put in a queue or told the item is out of stock, and both outcomes are acceptable to them. Some open platforms also limit the number of trial calls a user may make to an interface, which can be implemented the same way. This is crude rate limiting with no smoothing, so whether to use it depends on the actual situation.

5.4 Limiting the number of requests to an interface in a time window

This limits the number of requests within a time window, for when you want to cap the number of requests or calls to an interface or service per second, minute, or day. For example, some basic services are called by many other systems: the commodity detail page service calls the basic commodity service, and we worry that a large volume of updates could bring the basic service down. In that case the number of calls per second or per minute needs to be limited, which can be done with one counter per time window.
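One possible way to count requests per time window is to keep a counter keyed by the current second. The sketch below uses only the JDK; `PerSecondLimiter` and its method names are hypothetical, and the current time is passed in so the behaviour is reproducible.

```java
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.AtomicLong;

/** Allows at most limitPerSecond requests in each one-second window (hypothetical sketch). */
final class PerSecondLimiter {
    private final long limitPerSecond;
    // Key: the second (epoch seconds) a request falls into; value: requests counted in that second
    private final ConcurrentHashMap<Long, AtomicLong> counters = new ConcurrentHashMap<>();

    PerSecondLimiter(long limitPerSecond) {
        this.limitPerSecond = limitPerSecond;
    }

    boolean tryAcquire(long nowMillis) {
        long second = nowMillis / 1000;
        // Drop counters of earlier seconds so the map does not grow without bound
        counters.keySet().removeIf(key -> key < second);
        long count = counters.computeIfAbsent(second, key -> new AtomicLong()).incrementAndGet();
        return count <= limitPerSecond;
    }

    boolean tryAcquire() {
        return tryAcquire(System.currentTimeMillis());
    }
}
```

Changing the divisor from 1000 to 60 000 would give a per-minute window. Like the fixed-window counter in section 3.1.1, this still has the boundary problem.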

5.5 Smoothly limiting the number of requests to an interface

The rate limiting methods above do not handle sudden bursts well: a burst of requests arriving in an instant may all be allowed, which can cause problems. In some scenarios, therefore, bursts need to be shaped into a steady rate of processing (for example, at 5 r/s one request is processed every 200 milliseconds, which smooths the rate). Two algorithms fit this scenario: the token bucket and the leaky bucket. The Guava framework provides a token bucket implementation that can be used directly.

Guava RateLimiter provides two token bucket implementations: smooth bursty rate limiting (SmoothBursty) and smooth warm-up rate limiting (SmoothWarmingUp).

5.6 Access layer rate limiting

The access layer usually refers to the entry point of request traffic. Its main purposes are load balancing, illegal request filtering, request aggregation, caching, degradation, rate limiting, A/B testing, quality-of-service monitoring, and so on. For more, see the author's "developing high-performance Web applications using Nginx+Lua(OpenResty)".

For rate limiting at the Nginx access layer, Nginx ships two modules: the connection limiting module ngx_http_limit_conn_module and the request limiting module ngx_http_limit_req_module, which implements the leaky bucket algorithm. For more complex rate limiting scenarios, you can also use the lua-resty-limit-traffic module provided by OpenResty.

limit_conn limits the total number of network connections for a given key, so you can limit by dimensions such as IP or domain name. limit_req limits the average request rate for a given key and has two modes: smooth mode (delay) and burst-allowing mode (nodelay).

5.7 ngx_http_limit_conn_module

limit_conn limits the total number of network connections for a given key. You can limit the total connections per IP, or the total connections to a domain name based on the service domain name. Note that not every connection is counted: a connection is counted only when Nginx is processing a request on it and the whole request header has already been read.