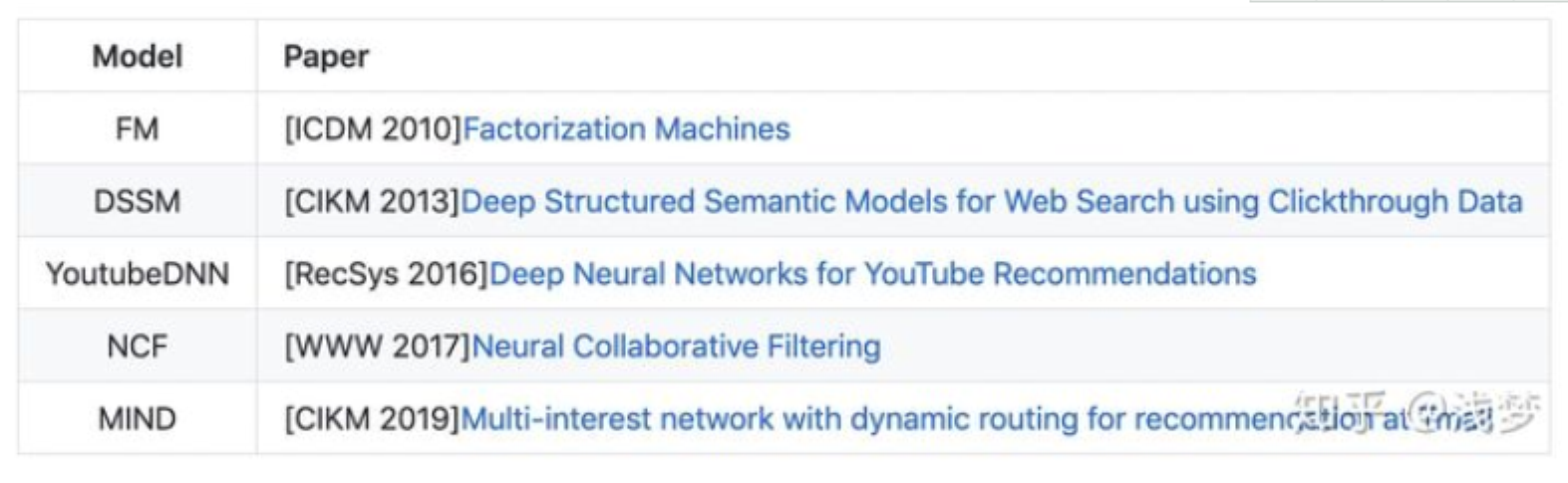

The open-source project DeepMatch implements several mainstream deep matching algorithms for recall (candidate retrieval) and can quickly export user and item vectors for approximate nearest neighbor (ANN) retrieval. It is well suited to quick experiments and learning, and it saves algorithm engineers a lot of manual work!
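Here is a concrete version of "export user and item vectors for ANN retrieval". This minimal sketch assumes the exported vectors are plain NumPy arrays and that relevance is the inner product. It does exact brute-force search. A production system would load the same vectors into an ANN index (Faiss is one common choice). `top_k_items` is a hypothetical helper, not part of DeepMatch.

```python
import numpy as np


def top_k_items(user_vecs: np.ndarray, item_vecs: np.ndarray, k: int) -> np.ndarray:
    """Hypothetical helper: for each user, the indices of the k items with the highest inner product.

    Exact brute-force search, standing in for an ANN index. Requires k <= number of items.
    """
    scores = user_vecs @ item_vecs.T                          # (n_users, n_items)
    # argpartition on -scores moves the k largest scores into the first k columns (unordered)
    top = np.argpartition(-scores, k - 1, axis=1)[:, :k]
    # order those k candidates by descending score
    order = np.argsort(-np.take_along_axis(scores, top, axis=1), axis=1)
    return np.take_along_axis(top, order, axis=1)


user_vecs = np.array([[1.0, 0.0], [0.0, 1.0]])
item_vecs = np.array([[0.9, 0.1], [0.1, 0.8], [0.5, 0.5], [-1.0, 0.0]])
print(top_k_items(user_vecs, item_vecs, 2).tolist())  # [[0, 2], [1, 2]]
```

Because users and items live in the same vector space, item vectors can be indexed offline, and only the user vector has to be computed at request time.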

Today, mainstream recommendation and advertising systems follow a two-stage architecture: recall, then ranking. The recall module retrieves a variety of relevant candidates from a very large candidate pool. The ranking module then uses user preferences and context information to produce an ordered list of the items the user is most likely to be interested in.
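The division of labor between the two stages can be shown with a toy pipeline. In this sketch, each recall channel is a hypothetical function that returns candidate ids. `rank_score` stands in for a ranking model such as a CTR predictor. None of these names come from DeepMatch or DeepCTR.

```python
from typing import Callable, Dict, Iterable, List


def two_stage_recommend(
    recall_channels: Iterable[Callable[[str], List[str]]],
    rank_score: Callable[[str, str], float],
    user: str,
    n: int,
) -> List[str]:
    """Hypothetical toy recall -> ranking pipeline (not DeepMatch code)."""
    # Stage 1: recall. Merge candidates from every channel, de-duplicated, first-seen order kept.
    candidates: Dict[str, None] = {}
    for channel in recall_channels:
        for item in channel(user):
            candidates.setdefault(item, None)
    # Stage 2: ranking. Only the small candidate set is scored, never the whole item pool.
    ranked = sorted(candidates, key=lambda item: rank_score(user, item), reverse=True)
    return ranked[:n]


def vector_recall(user: str) -> List[str]:
    return ["a", "b", "c"]


def popular_recall(user: str) -> List[str]:
    return ["c", "d"]


toy_ctr = {"a": 0.1, "b": 0.4, "c": 0.3, "d": 0.2}
print(two_stage_recommend([vector_recall, popular_recall], lambda u, i: toy_ctr[i], "u1", 2))
# ['b', 'c']
```

Recall only has to be cheap and broad. The more expensive ranking model can afford a richer score because it sees only a few candidates per request.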

As deep learning has become widespread, more and more deep learning algorithms have been applied in industry. After graduating and joining a company last year, I was fortunate to help build the recommendation system for a new business and to work on improving its user experience and business metrics. On the recall side, I also explored vector-based recall and obtained some gains.

During my postgraduate studies, I developed DeepCTR (https://github.com/shenweichen/DeepCTR), a deep-learning-based click-through rate (CTR) prediction library, out of personal interest. Over time it has been adopted and recognized by a number of users. I have also applied its algorithms to my own business and achieved notable gains.

Compared with the various CTR prediction models used in ranking, my understanding of the recall module still has many gaps. I took this as a learning opportunity and built the DeepMatch project together with several enthusiastic and talented collaborators. I hope it helps you!

DeepMatch can be installed from PyPI with `pip install -U deepmatch`. The following is a brief introduction to its usage.

```python
import pandas as pd
# Older DeepCTR versions; newer versions expose these in deepctr.feature_column
from deepctr.inputs import SparseFeat, VarLenSparseFeat
# preprocess.py is the helper script in DeepMatch's examples directory
from preprocess import gen_data_set, gen_model_input
from sklearn.preprocessing import LabelEncoder
from tensorflow.python.keras import backend as K
from tensorflow.python.keras.models import Model

from deepmatch.models import *
from deepmatch.utils import sampledsoftmaxloss

# Using MovieLens as an example: a 200-row sample is used to demonstrate the workflow
data = pd.read_csv("./movielens_sample.txt")
sparse_features = ["movie_id", "user_id",
                   "gender", "age", "occupation", "zip", ]
SEQ_LEN = 50   # maximum length of a user's behavior sequence
negsample = 0  # negatives per positive sample; 0 generates positives only

# 1. Label-encode the features, then use `gen_data_set` and `gen_model_input`
#    to generate feature data that includes each user's historical behavior sequence
features = ['user_id', 'movie_id', 'gender', 'age', 'occupation', 'zip']
feature_max_idx = {}
for feature in features:
    lbe = LabelEncoder()
    # +1 keeps index 0 free for sequence padding
    data[feature] = lbe.fit_transform(data[feature]) + 1
    # vocabulary size of the feature's embedding table
    feature_max_idx[feature] = data[feature].max() + 1

user_profile = data[["user_id", "gender", "age", "occupation", "zip"]].drop_duplicates('user_id')
item_profile = data[["movie_id"]].drop_duplicates('movie_id')
user_profile.set_index("user_id", inplace=True)
user_item_list = data.groupby("user_id")['movie_id'].apply(list)

train_set, test_set = gen_data_set(data, negsample)
train_model_input, train_label = gen_model_input(train_set, user_profile, SEQ_LEN)
test_model_input, test_label = gen_model_input(test_set, user_profile, SEQ_LEN)
```
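Step 1 turns each user's clicks into samples of the form (history, next item). The simplified sketch below shows the idea. It is not DeepMatch's `preprocess.py`, and its behavior may differ from it. It assumes a DataFrame with `user_id`, `movie_id` and `timestamp` columns. It sorts each user's clicks by time and uses every earlier click as a training target, holding out the last one for testing. Histories are stored most recent first and zero-padded to `seq_len`. `build_samples` and `_to_arrays` are hypothetical names.

```python
from typing import Dict, List, Tuple

import numpy as np
import pandas as pd

Sample = Tuple[int, List[int], int]  # (user_id, history, target movie_id)


def _to_arrays(samples: List[Sample], seq_len: int) -> Dict[str, np.ndarray]:
    """Pack samples into model-input arrays; history is right-padded with id 0."""
    hist = np.zeros((len(samples), seq_len), dtype=np.int64)
    for row, (_, h, _) in enumerate(samples):
        hist[row, :len(h)] = h
    return {
        "user_id": np.array([s[0] for s in samples]),
        "movie_id": np.array([s[2] for s in samples]),
        "hist_movie_id": hist,
        "hist_len": np.array([len(s[1]) for s in samples]),
    }


def build_samples(df: pd.DataFrame, seq_len: int) -> Tuple[Dict[str, np.ndarray], Dict[str, np.ndarray]]:
    """Hypothetical leave-last-out split (a simplification, not DeepMatch's gen_data_set)."""
    train: List[Sample] = []
    test: List[Sample] = []
    for user_id, group in df.sort_values("timestamp").groupby("user_id"):
        items = group["movie_id"].tolist()
        for i in range(1, len(items)):
            hist = items[:i][::-1][:seq_len]  # most recent first, truncated to seq_len
            sample = (user_id, hist, items[i])
            (test if i == len(items) - 1 else train).append(sample)
    return _to_arrays(train, seq_len), _to_arrays(test, seq_len)


toy = pd.DataFrame({"user_id": [1, 1, 1, 2, 2],
                    "movie_id": [10, 11, 12, 20, 21],
                    "timestamp": [3, 1, 2, 5, 6]})
train_in, test_in = build_samples(toy, seq_len=3)
print(train_in["hist_movie_id"].tolist())  # [[11, 0, 0]]
print(test_in["hist_movie_id"].tolist())   # [[12, 11, 0], [20, 0, 0]]
```

Padding with 0 only works because step 1 shifts every encoded id by +1, so no real movie is assigned id 0.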

```python
# 2. Configure the feature columns required by the model definition,
#    mainly each feature's name and the vocabulary size of its embedding table
embedding_dim = 16

user_feature_columns = [SparseFeat('user_id', feature_max_idx['user_id'], embedding_dim),
                        SparseFeat("gender", feature_max_idx['gender'], embedding_dim),
                        SparseFeat("age", feature_max_idx['age'], embedding_dim),
                        SparseFeat("occupation", feature_max_idx['occupation'], embedding_dim),
                        SparseFeat("zip", feature_max_idx['zip'], embedding_dim),
                        # history shares the movie_id embedding table and is mean-pooled
                        # over its first hist_len positions (maximum length SEQ_LEN)
                        VarLenSparseFeat(SparseFeat('hist_movie_id', feature_max_idx['movie_id'], embedding_dim,
                                                    embedding_name="movie_id"), SEQ_LEN, 'mean', 'hist_len'),
                        ]

item_feature_columns = [SparseFeat('movie_id', feature_max_idx['movie_id'], embedding_dim)]
```
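In the configuration above, `hist_movie_id` is declared with `embedding_name="movie_id"`, so it reuses the item embedding table. The `'mean'` combiner together with the `'hist_len'` length feature averages only the valid positions of the history. The NumPy sketch below shows roughly what that pooling computes. It is an illustration under that reading, not DeepCTR's actual Keras layer. `masked_mean_pool` is a hypothetical name, and row 0 of the table is assumed to be the padding id.

```python
import numpy as np


def masked_mean_pool(table: np.ndarray, hist_ids: np.ndarray, hist_len: np.ndarray) -> np.ndarray:
    """Hypothetical helper: mean of the embeddings of the first hist_len ids in each row."""
    emb = table[hist_ids]                                          # (batch, seq_len, dim)
    positions = np.arange(hist_ids.shape[1])                       # (seq_len,)
    mask = (positions[None, :] < hist_len[:, None])[..., None]     # (batch, seq_len, 1)
    summed = (emb * mask).sum(axis=1)                              # padding positions contribute 0
    return summed / np.maximum(hist_len, 1)[:, None]               # guard against empty histories


# One shared table: row i is the embedding of movie id i (row 0 = padding)
movie_table = np.array([[0.0, 0.0], [1.0, 2.0], [3.0, 4.0], [5.0, 6.0]])
hist_ids = np.array([[1, 2, 0], [3, 0, 0]])
hist_len = np.array([2, 1])
print(masked_mean_pool(movie_table, hist_ids, hist_len).tolist())  # [[2.0, 3.0], [5.0, 6.0]]
```

Sharing one table means a movie gets the same vector whether it appears in a user's history or as a candidate item. That keeps the user-side and item-side representations in the same space for retrieval.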