Jet separation system of Shipborne water gun

Project background introduction

According to the Russian satellite news agency, a ship flying the Vietnamese flag suddenly broke into the waters of southern China and ignored the expulsion warning of our maritime police ship. In this case, we had to use shipborne water guns to drive away the Vietnamese maritime police ship and launch several jamming bombs. More than three minutes later, the radar of the Vietnamese ship immediately failed. In short, once the Vietnamese ship is not guided by radar, it will not be able to distinguish its course, just like a headless fly running around at sea. After the radar recovered, the Vietnamese marine police ship immediately accelerated to flee the sea area.

Marine police recruits encounter suspicious ships on their first maritime patrol. How to deal with it?

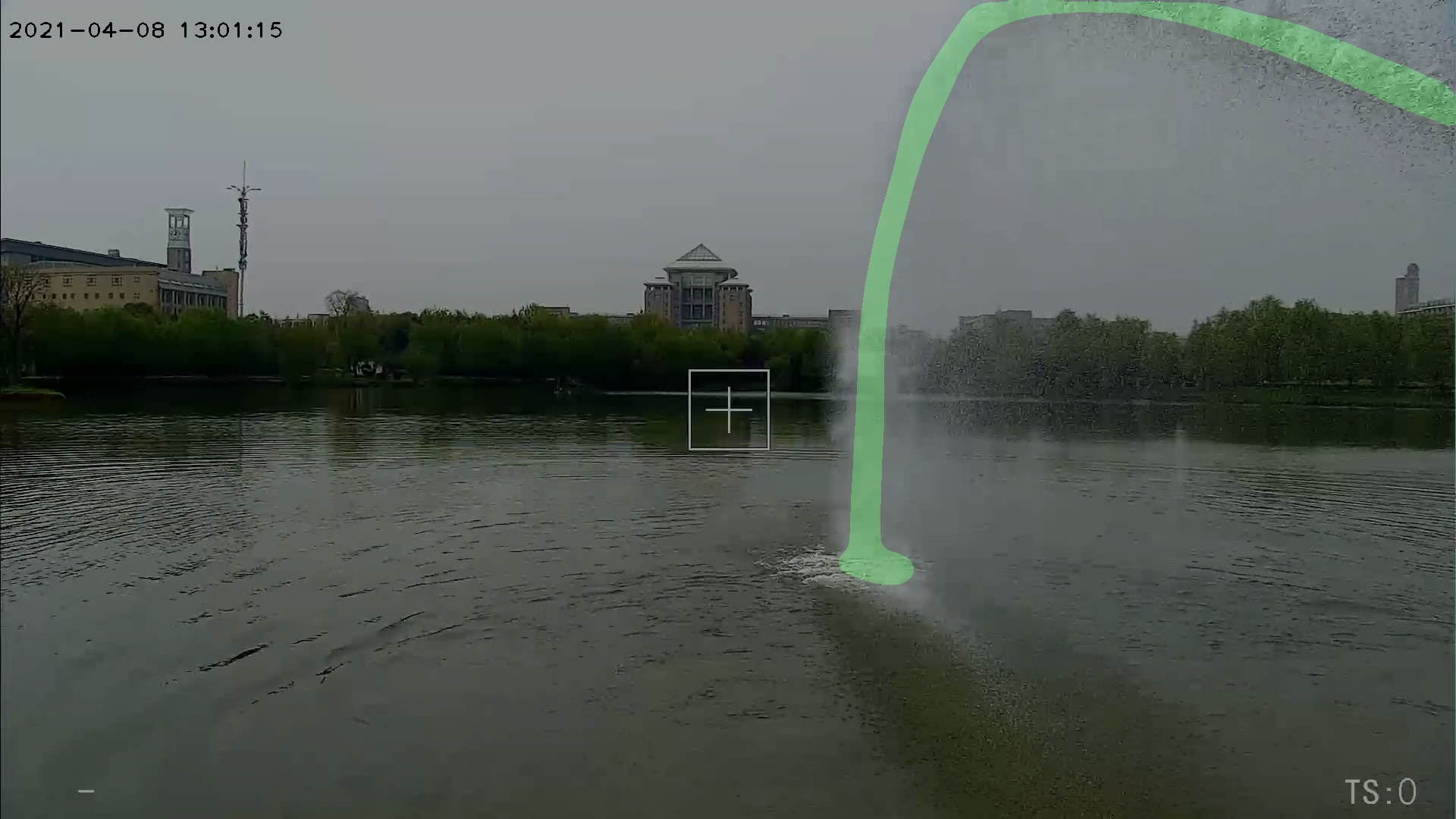

China's sea areas are often invaded by foreign ships. In the face of foreign ship invasion, Chinese maritime police often use shipborne water guns to drive away. However, the water mist formed by the water cannon is large, which will seriously interfere with the maritime police's judgment of whether the water cannon hits the target or not.

The shipborne water gun jet segmentation system uses semantic segmentation technology to extract and segment the water mist of water gun jet, and intelligently judge whether the water gun hits the target according to the shape of water mist, so as to realize the automatic control of Shipborne water gun.

Data set introduction

The data set is made and used by our school's experimental team. Because it involves experimental secrets, it cannot be made public. Please understand.

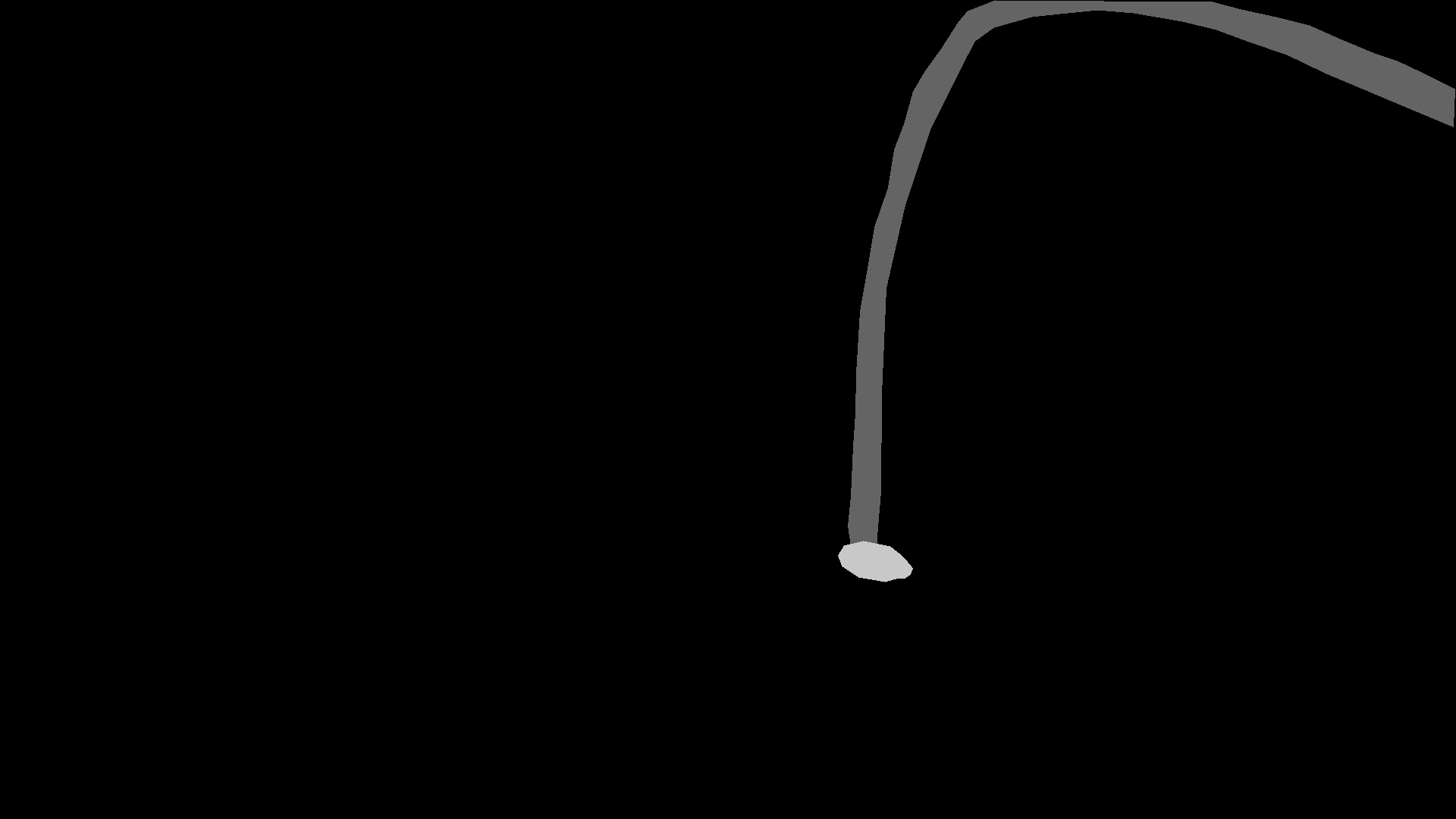

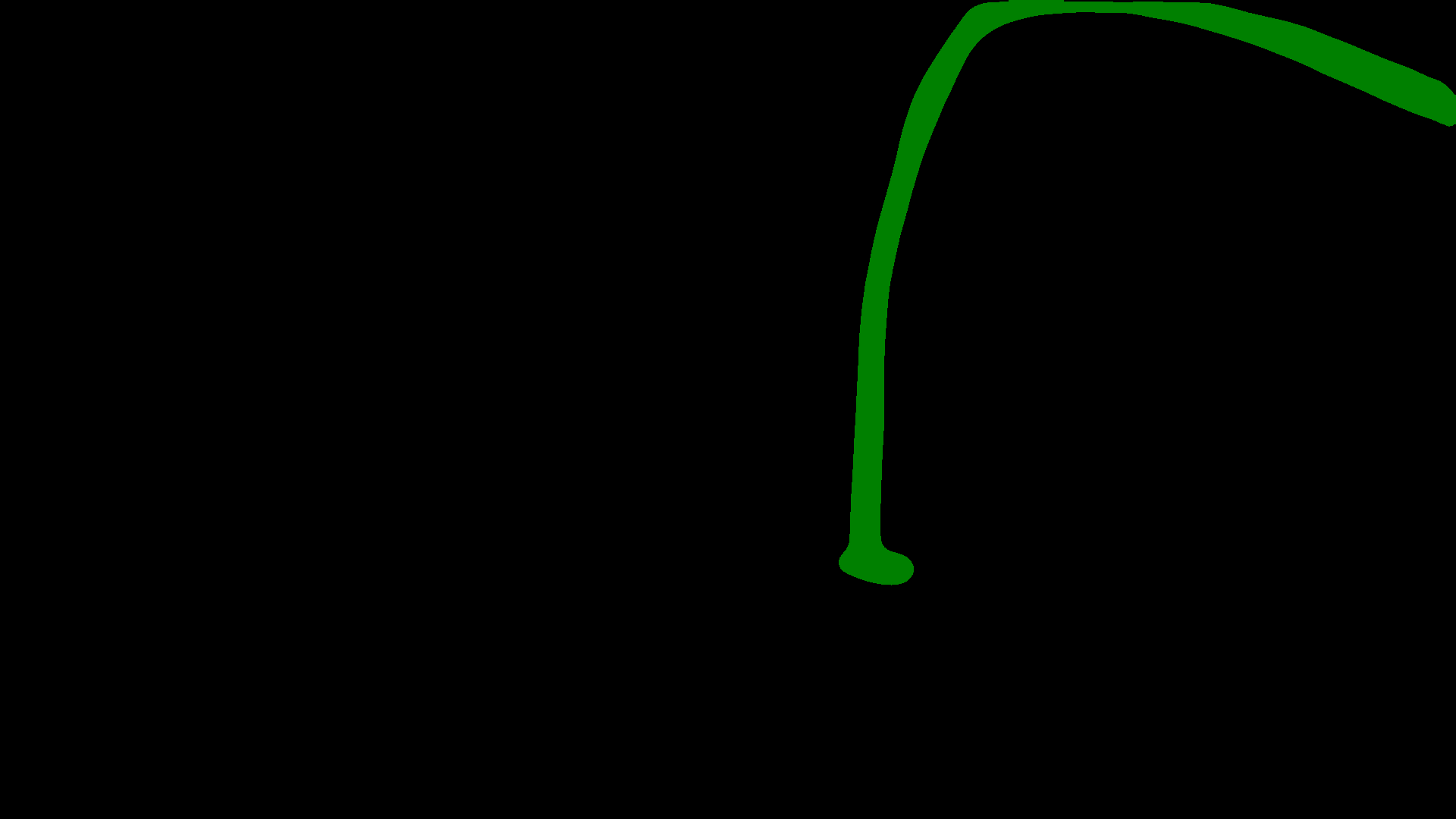

The data set was labeled with LabelMe, and 563 training sets and 141 verification sets were randomly divided according to the ratio of 8:2. The sample diagram is as follows:

Quickly Start

Model training using PaddleSeg

Using u2net_cityscapes_1024x512_160k.yml for quick training, the code is as follows:

python train.py \

--config configs/u2net/u2net_cityscapes_1024x512_160k.yml \

--batch_size 1 \

--do_eval \

--use_vdl \

--save_interval 100 \

--save_dir output

Since nVidia gtx1080 is used locally and the video memory is only 8G, you can manually modify cityscapes The configuration parameters of YML are:

train_dataset:

type: OpticDiscSeg

dataset_root: Your dataset path

transforms:

- type: Resize

target_size: [512, 256]

- type: RandomHorizontalFlip

- type: RandomDistort

brightness_range: 0.4

contrast_range: 0.4

saturation_range: 0.4

- type: Normalize

mode: train

val_dataset:

type: OpticDiscSeg

dataset_root: Your dataset path

transforms:

- type: Normalize

- type: Resize

target_size: [512, 256]

mode: val

model prediction

%cd PaddleSeg/

/home/aistudio/PaddleSeg

!python predict.py --config configs/u2net/u2net_cityscapes_1024x512_160k.yml --model_path ../data/data122309/model.pdparams --image_path ../work/1_1.jpg --save_dir output/result --custom_color 0 0 0

2021-12-19 11:21:51 [INFO]

---------------Config Information---------------

batch_size: 4

iters: 160000

loss:

coef:

- 1

- 1

- 1

- 1

- 1

- 1

- 1

types:

- type: CrossEntropyLoss

lr_scheduler:

end_lr: 0

learning_rate: 0.01

power: 0.9

type: PolynomialDecay

model:

num_classes: 2

pretrained: null

type: U2Net

optimizer:

momentum: 0.9

type: sgd

weight_decay: 4.0e-05

train_dataset:

dataset_root: ../data/rowdata

mode: train

transforms:

- target_size:

- 512

- 256

type: Resize

- type: RandomHorizontalFlip

- brightness_range: 0.4

contrast_range: 0.4

saturation_range: 0.4

type: RandomDistort

- type: Normalize

type: OpticDiscSeg

val_dataset:

dataset_root: ../data/rowdata

mode: val

transforms:

- type: Normalize

- target_size:

- 512

- 256

type: Resize

type: OpticDiscSeg

------------------------------------------------

W1219 11:21:51.328089 762 device_context.cc:447] Please NOTE: device: 0, GPU Compute Capability: 7.0, Driver API Version: 10.1, Runtime API Version: 10.1

W1219 11:21:51.328135 762 device_context.cc:465] device: 0, cuDNN Version: 7.6.

2021-12-19 11:21:55 [INFO] Number of predict images = 1

2021-12-19 11:21:55 [INFO] Loading pretrained model from ../data/data122309/model.pdparams

2021-12-19 11:21:56 [INFO] There are 686/686 variables loaded into U2Net.

2021-12-19 11:21:56 [INFO] Start to predict...

1/1 [==============================] - 0s 272ms/step

!python predict_video.py --config configs/u2net/u2net_cityscapes_1024x512_160k.yml --model_path ../data/data122309/model.pdparams --video_path ../work/2.mp4 --save_dir output/result --custom_color 0 0 0

Connecting to https://paddleseg.bj.bcebos.com/dataset/optic_disc_seg.zip

Downloading optic_disc_seg.zip

[==================================================] 100.00%

Uncompress optic_disc_seg.zip

[==================================================] 100.00%

2021-12-19 11:33:25 [INFO]

---------------Config Information---------------

batch_size: 4

iters: 160000

loss:

coef:

- 1

- 1

- 1

- 1

- 1

- 1

- 1

types:

- type: CrossEntropyLoss

lr_scheduler:

end_lr: 0

learning_rate: 0.01

power: 0.9

type: PolynomialDecay

model:

num_classes: 2

pretrained: null

type: U2Net

optimizer:

momentum: 0.9

type: sgd

weight_decay: 4.0e-05

train_dataset:

dataset_root: ../data/rowdata

mode: train

transforms:

- target_size:

- 512

- 256

type: Resize

- type: RandomHorizontalFlip

- brightness_range: 0.4

contrast_range: 0.4

saturation_range: 0.4

type: RandomDistort

- type: Normalize

type: OpticDiscSeg

val_dataset:

dataset_root: ../data/rowdata

mode: val

transforms:

- type: Normalize

- target_size:

- 512

- 256

type: Resize

type: OpticDiscSeg

------------------------------------------------

W1219 11:33:25.326017 238 device_context.cc:447] Please NOTE: device: 0, GPU Compute Capability: 7.0, Driver API Version: 11.0, Runtime API Version: 10.1

W1219 11:33:25.326063 238 device_context.cc:465] device: 0, cuDNN Version: 7.6.

2021-12-19 11:33:29 [INFO] Loading pretrained model from ../data/data122309/model.pdparams

2021-12-19 11:33:29 [INFO] There are 686/686 variables loaded into U2Net.

2021-12-19 11:33:29 [INFO] Start to predict...

2021-12-19 11:34:15 [INFO] finist

Effect display and summary improvement

The 58200 iteration model is used, and the mIOU is 0.8542. It can be seen that the effect is still very good. Use a fire water monitor fire fighting video in the network to test, and it is found that the effect is slightly poor. It should be that the model is more fitted and generalized, which will be much better. In the later stage, the project can also be moved to the field of fire fighting, so as to make the people's fire fighting cause more convenient and intelligent.

Please click here View the basic usage of this environment

Please click here for more detailed instructions.