2021SC@SDUSC hbase source code analysis (V) HLog analysis

HLog

By default, every write operation (put, update, or delete) is first appended to the HLog and then written to the MemStore. In most cases the HLog is never read. However, if a RegionServer crashes, any data that was written to the MemStore but not yet flushed to disk is lost, and the HLog must be replayed to recover it. HBase master-slave replication also depends on the HLog: the master cluster ships its HLog entries to the slave cluster, which replays them locally to replicate the data between clusters.
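
To make the write-ahead ordering concrete, here is a minimal in-memory sketch, assuming a single region with string rows and values. WriteAheadSketch and its methods are hypothetical illustrations, not HBase APIs: each write is appended to a log list before it touches the MemStore map, so a simulated crash can be undone by replaying the log in order.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.NavigableMap;
import java.util.TreeMap;

// Hypothetical in-memory model of the HLog write path (not HBase code):
// each mutation is appended to the log before it is applied to the MemStore.
final class WriteAheadSketch {
  // One log record: a monotonically increasing sequence id plus the edit.
  static final class LogRecord {
    final long sequenceId;
    final String row;
    final String value; // null marks a delete

    LogRecord(long sequenceId, String row, String value) {
      this.sequenceId = sequenceId;
      this.row = row;
      this.value = value;
    }
  }

  final List<LogRecord> log = new ArrayList<>();          // stands in for the HLog file
  NavigableMap<String, String> memStore = new TreeMap<>(); // lost on a crash
  private long nextSequenceId = 1;

  void put(String row, String value) { write(row, value); }

  void delete(String row) { write(row, null); }

  private void write(String row, String value) {
    log.add(new LogRecord(nextSequenceId++, row, value)); // 1. append to the log first
    apply(memStore, row, value);                          // 2. then update the MemStore
  }

  // Simulates a RegionServer crash before the MemStore was flushed.
  void crash() { memStore = new TreeMap<>(); }

  // Rebuilds the MemStore by replaying every record in append order.
  void replay() {
    NavigableMap<String, String> rebuilt = new TreeMap<>();
    for (LogRecord r : log) {
      apply(rebuilt, r.row, r.value);
    }
    memStore = rebuilt;
  }

  private static void apply(NavigableMap<String, String> store, String row, String value) {
    if (value == null) {
      store.remove(row);
    } else {
      store.put(row, value);
    }
  }
}
```

Replaying in append order matters: a later record for the same row (including a delete) must overwrite an earlier one, which is why the sketch keeps the log as an ordered list.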

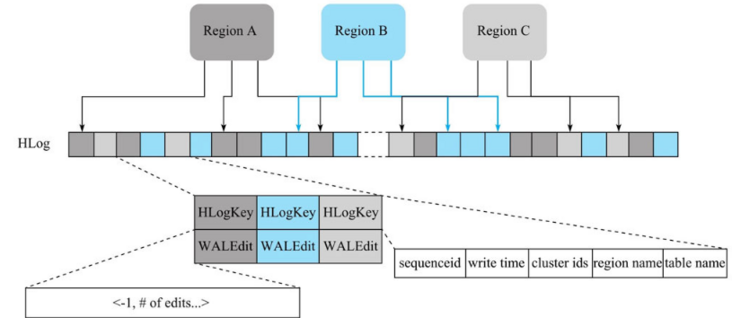

HLog structure

HLog log format

A complete WAL record consists of a WALKey, which carries the record's metadata, and a WALEdit holding the KeyValues written by the operation.

```java
@InterfaceAudience.Private
protected void init(final byte[] encodedRegionName,
                    final TableName tablename,
                    long logSeqNum,
                    final long now,
                    List<UUID> clusterIds,
                    long nonceGroup,
                    long nonce,
                    MultiVersionConcurrencyControl mvcc,
                    NavigableMap<byte[], Integer> replicationScope,
                    Map<String, byte[]> extendedAttributes) {
  this.sequenceId = logSeqNum;                 // sequence id of this edit
  this.writeTime = now;                        // time the edit was written
  this.clusterIds = clusterIds;                // clusters that have already seen this edit (replication)
  this.encodedRegionName = encodedRegionName;  // region the edit belongs to
  this.tablename = tablename;
  this.nonceGroup = nonceGroup;                // nonceGroup + nonce identify a client operation,
  this.nonce = nonce;                          // so a retried operation can be de-duplicated
  this.mvcc = mvcc;
  if (logSeqNum != NO_SEQUENCE_ID) {
    setSequenceId(logSeqNum);
  }
  this.replicationScope = replicationScope;    // replication scope per column family
  this.extendedAttributes = extendedAttributes;
}
```

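The method above, from the WALKeyImpl class, initializes the WALKey of a log record: it stores the sequence id, write time, encoded region name, table name, cluster ids, nonces, MVCC instance, replication scope, and extended attributes.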
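
A simplified, hypothetical model of one WAL record may help. The classes below keep only a few of the fields set by init() and are not HBase's own types. Because every key carries the encoded region name, an interleaved log can be regrouped per region, which is the basic idea behind splitting a failed RegionServer's log during recovery.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical, simplified model of a WAL record: one key (metadata) plus the
// KeyValues of a single edit. Field names mirror a subset of WALKeyImpl.init(...).
final class WalRecordSketch {
  static final class Key {
    final String encodedRegionName;
    final String tableName;
    final long sequenceId;
    final long writeTime;

    Key(String encodedRegionName, String tableName, long sequenceId, long writeTime) {
      this.encodedRegionName = encodedRegionName;
      this.tableName = tableName;
      this.sequenceId = sequenceId;
      this.writeTime = writeTime;
    }
  }

  static final class KeyValue {
    final String row;
    final String family;
    final String qualifier;
    final long timestamp;
    final String value;

    KeyValue(String row, String family, String qualifier, long timestamp, String value) {
      this.row = row;
      this.family = family;
      this.qualifier = qualifier;
      this.timestamp = timestamp;
      this.value = value;
    }
  }

  // One log record: the key plus every cell written by that operation.
  static final class Entry {
    final Key key;
    final List<KeyValue> cells;

    Entry(Key key, List<KeyValue> cells) {
      this.key = key;
      this.cells = Collections.unmodifiableList(new ArrayList<>(cells));
    }
  }

  // Groups entries by region while preserving log (sequence) order.
  static Map<String, List<Entry>> groupByRegion(List<Entry> entries) {
    Map<String, List<Entry>> byRegion = new LinkedHashMap<>();
    for (Entry e : entries) {
      byRegion.computeIfAbsent(e.key.encodedRegionName, k -> new ArrayList<>()).add(e);
    }
    return byRegion;
  }
}
```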

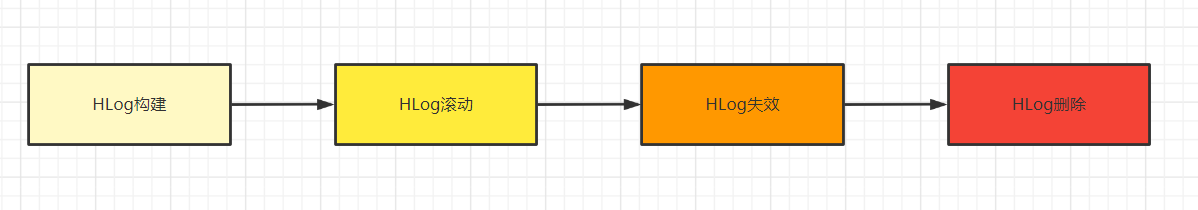

HLog life cycle

(1) HLog construction

Every HBase write operation (put, update, or delete) first appends a record to the current HLog file.

(2) HLog rolling

The RegionServer starts a thread that periodically rolls the HLog, closing the current file and creating a new one (the period is set by 'hbase.regionserver.logroll.period', 1 hour by default). As a result, many small HLog files accumulate on each RegionServer. HBase does this because a single HLog would otherwise keep growing as more data is written. Once the MemStore data covered by an HLog has been flushed to disk, that HLog is no longer needed and can be removed, and small files are much easier to delete than one large one.

The roll period is read in the roller base class, AbstractWALRoller. MasterRegionWALRoller.create(), which builds the roller for the master's local region, shows how both the roll period and the roll size are configured:

```java
// AbstractWALRoller: the roll period defaults to 3600000 ms (1 hour)
protected static final String WAL_ROLL_PERIOD_KEY = "hbase.regionserver.logroll.period";

this.rollPeriod = conf.getLong(WAL_ROLL_PERIOD_KEY, 3600000);

// MasterRegionWALRoller
static MasterRegionWALRoller create(String name, Configuration conf, Abortable abortable,
    FileSystem fs, Path walRootDir, Path globalWALRootDir, String archivedWALSuffix,
    long rollPeriodMs, long flushSize) {
  // we can not run with wal disabled, so force set it to true.
  conf.setBoolean(WALFactory.WAL_ENABLED, true);
  // we do not need this feature, so force disable it.
  conf.setBoolean(AbstractFSWALProvider.SEPARATE_OLDLOGDIR, false);
  conf.setLong(WAL_ROLL_PERIOD_KEY, rollPeriodMs);
  // make the roll size the same with the flush size, as we only have one region here
  conf.setLong(WALUtil.WAL_BLOCK_SIZE, flushSize * 2);
  conf.setFloat(AbstractFSWAL.WAL_ROLL_MULTIPLIER, 0.5f);
  return new MasterRegionWALRoller(name, conf, abortable, fs, walRootDir, globalWALRootDir,
      archivedWALSuffix);
}
```
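
Judging from the comment in create(), the roll size is the WAL block size multiplied by the roll multiplier, so flushSize * 2 * 0.5 = flushSize. The sketch below combines that size trigger with the time-based period. RollPolicySketch is a hypothetical class, and treating the two triggers as a simple OR is an assumption made for illustration, not a copy of HBase's roll logic.

```java
// Hypothetical roll policy (not HBase code): roll when the roll period has
// elapsed since the last roll, or when the current file reaches
// walBlockSize * rollMultiplier bytes.
final class RollPolicySketch {
  final long rollPeriodMs;
  final long rollSizeBytes;

  RollPolicySketch(long rollPeriodMs, long walBlockSize, float rollMultiplier) {
    this.rollPeriodMs = rollPeriodMs;
    // Size threshold, e.g. (flushSize * 2) * 0.5 == flushSize as in create().
    this.rollSizeBytes = (long) (walBlockSize * rollMultiplier);
  }

  boolean shouldRoll(long nowMs, long lastRollMs, long currentFileBytes) {
    boolean periodElapsed = nowMs - lastRollMs >= rollPeriodMs; // time-based trigger
    boolean fileTooLarge = currentFileBytes >= rollSizeBytes;   // size-based trigger
    return periodElapsed || fileTooLarge;
  }
}
```

For example, with a hypothetical flushSize of 128 MiB the block size becomes 256 MiB, so the file rolls once it reaches 128 MiB or once the roll period (3600000 ms here) has passed, whichever comes first.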

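Periodic background tasks on the master, including the log cleaner, are implemented as subclasses of ScheduledChore. Its run() method is invoked once per period: it skips the run if the start time was missed, stops the chore if it has been cancelled, and otherwise calls initialChore() the first time and chore() on every later run: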

```java
@Override
public void run() {
  updateTimeTrackingBeforeRun();
  if (missedStartTime() && isScheduled()) {
    onChoreMissedStartTime();
    LOG.info("Chore: {} missed its start time", getName());
  } else if (stopper.isStopped() || !isScheduled()) {
    cancel(false);
    cleanup();
    LOG.info("Chore: {} was stopped", getName());
  } else {
    try {
      long start = 0;
      if (LOG.isDebugEnabled()) {
        start = System.nanoTime();
      }
      if (!initialChoreComplete) {
        initialChoreComplete = initialChore();
      } else {
        chore();
      }
      if (LOG.isDebugEnabled() && start > 0) {
        long end = System.nanoTime();
        LOG.debug("{} execution time: {} ms.", getName(),
          TimeUnit.NANOSECONDS.toMillis(end - start));
      }
    } catch (Throwable t) {
      LOG.error("Caught error", t);
      if (this.stopper.isStopped()) {
        cancel(false);
        cleanup();
      }
    }
  }
}
```
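
The dispatch in run() can be imitated with the JDK's ScheduledExecutorService. MiniChore below is a hypothetical stand-in for ScheduledChore, not HBase code: it runs initialChore() once, then chore() on every later period, and catches Throwable the way run() does.

```java
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.ScheduledFuture;
import java.util.concurrent.TimeUnit;

// Hypothetical, minimal chore built on the JDK scheduler (not HBase's ScheduledChore).
abstract class MiniChore implements Runnable {
  private boolean initialChoreComplete = false;

  // Runs once before the first chore(); returning false retries it next period.
  protected boolean initialChore() {
    return true;
  }

  // The periodic work, e.g. scanning a directory for deletable files.
  protected abstract void chore();

  @Override
  public void run() {
    try {
      if (!initialChoreComplete) {
        initialChoreComplete = initialChore();
      } else {
        chore();
      }
    } catch (Throwable t) {
      // Swallow the error so that the next period still runs.
      System.err.println("Caught error: " + t);
    }
  }

  // Runs this chore every periodMs milliseconds, starting immediately.
  ScheduledFuture<?> schedule(ScheduledExecutorService pool, long periodMs) {
    return pool.scheduleAtFixedRate(this, 0, periodMs, TimeUnit.MILLISECONDS);
  }
}
```

Catching the error matters with scheduleAtFixedRate: if a periodic task throws, the executor suppresses all of its later runs, so one failed pass would otherwise stop the chore for good. A chore would be started with something like `chore.schedule(Executors.newSingleThreadScheduledExecutor(), 60_000)`.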

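Once scheduled, the chore's chore() method runs periodically. Note that the listing below is actually HbckChore.chore(), another master chore that follows the same pattern; LogCleaner itself inherits chore() from CleanerChore, which walks the oldWALs directory with traverseAndDelete.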

```java
protected synchronized void chore() {
  if (isDisabled() || isRunning()) {
    LOG.warn("hbckChore is either disabled or is already running. Can't run the chore");
    return;
  }
  regionInfoMap.clear();
  disabledTableRegions.clear();
  splitParentRegions.clear();
  orphanRegionsOnRS.clear();
  orphanRegionsOnFS.clear();
  inconsistentRegions.clear();
  checkingStartTimestamp = EnvironmentEdgeManager.currentTime();
  running = true;
  try {
    loadRegionsFromInMemoryState();
    loadRegionsFromRSReport();
    try {
      loadRegionsFromFS(scanForMergedParentRegions());
    } catch (IOException e) {
      LOG.warn("Failed to load the regions from filesystem", e);
    }
    saveCheckResultToSnapshot();
  } catch (Throwable t) {
    LOG.warn("Unexpected", t);
  }
  running = false;
}
```

(3) HLog expiration

Once the data in the MemStore has been flushed to disk, the corresponding log records are no longer needed. For ease of handling, HBase always expires logs one whole file at a time. To decide whether an HLog file has expired, it only needs to check whether the data behind every record in the file has been flushed; if so, the file is considered expired. An expired log file is moved from the WALs directory to the oldWALs directory. Note that the system does not delete the HLog at this point.
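
The expiry rule can be expressed with sequence ids. The sketch below is a simplified, hypothetical model, not HBase's implementation: for each region with records in the file it keeps the highest sequence id written there, and the file counts as expired only once every such region has flushed up to that id.

```java
import java.util.Map;

// Hypothetical expiry check for one HLog file (not HBase code).
final class WalExpirySketch {
  /**
   * @param maxSeqIdInFileByRegion highest sequence id each region wrote into this file
   * @param flushedSeqIdByRegion   highest sequence id each region has flushed to disk
   */
  static boolean isExpired(Map<String, Long> maxSeqIdInFileByRegion,
                           Map<String, Long> flushedSeqIdByRegion) {
    for (Map.Entry<String, Long> e : maxSeqIdInFileByRegion.entrySet()) {
      Long flushed = flushedSeqIdByRegion.get(e.getKey());
      // No flush recorded for this region, or the flush has not reached the
      // file's last edit for it: the file is still needed for recovery.
      if (flushed == null || flushed < e.getValue()) {
        return false;
      }
    }
    return true; // every record in the file is already persisted
  }
}
```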

(4) HLog deletion

In the background, the master starts a thread that periodically checks every expired log file in the oldWALs directory (the interval is set by 'hbase.master.cleaner.interval', 1 minute by default), confirms whether each file can be deleted, and then deletes it. Two main conditions are checked:

• Whether the HLog file is still needed for master-slave replication. If the cluster replicates data through the HLog, the cleaner must confirm that replication no longer uses the file before deleting it.

• Whether the HLog file has been in the oldWALs directory for longer than 10 minutes. To manage the HLog life cycle more flexibly, the system lets you set a TTL for log files (parameter 'hbase.master.logcleaner.ttl', 10 minutes by default). By default, therefore, an HLog file stays in oldWALs for at least 10 minutes before it can be deleted.
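
The two conditions can be modeled as a chain of checks that must all agree before a file is deleted. OldWalCleanerSketch, its fields, and the use of a set of file names to represent replication state are hypothetical simplifications; the real checks are performed by HBase's own cleaner classes.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Set;
import java.util.function.Predicate;

// Hypothetical model of the deletion conditions for files in oldWALs (not HBase code).
final class OldWalCleanerSketch {
  static final class OldWal {
    final String name;
    final long movedAtMs; // when the file landed in oldWALs (e.g. its modification time)

    OldWal(String name, long movedAtMs) {
      this.name = name;
      this.movedAtMs = movedAtMs;
    }
  }

  // Condition: the file is older than the TTL (cf. hbase.master.logcleaner.ttl).
  static Predicate<OldWal> olderThanTtl(long nowMs, long ttlMs) {
    return f -> nowMs - f.movedAtMs > ttlMs;
  }

  // Condition: replication no longer needs the file.
  static Predicate<OldWal> notNeededByReplication(Set<String> stillQueuedForReplication) {
    return f -> !stillQueuedForReplication.contains(f.name);
  }

  // A file is deletable only if every check in the chain agrees.
  static List<OldWal> deletable(List<OldWal> files, List<Predicate<OldWal>> chain) {
    List<OldWal> result = new ArrayList<>();
    for (OldWal f : files) {
      boolean allAgree = true;
      for (Predicate<OldWal> check : chain) {
        if (!check.test(f)) {
          allAgree = false;
          break;
        }
      }
      if (allAgree) {
        result.add(f);
      }
    }
    return result;
  }
}
```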

The check-and-delete method in CleanerChore lists all files under the oldWALs directory and deletes those that pass the checks:

```java
private void traverseAndDelete(Path dir, boolean root, CompletableFuture<Boolean> result) {
  try {
    // List every entry in the directory (the oldWALs root on the first call)
    List<FileStatus> allPaths = Arrays.asList(fs.listStatus(dir));
    List<FileStatus> subDirs =
        allPaths.stream().filter(FileStatus::isDirectory).collect(Collectors.toList());
    List<FileStatus> files =
        allPaths.stream().filter(FileStatus::isFile).collect(Collectors.toList());

    // Run the files in this directory through checkAndDeleteFiles
    boolean allFilesDeleted =
        files.isEmpty() || deleteAction(() -> checkAndDeleteFiles(files), "files", dir);

    // Traverse each subdirectory asynchronously on the cleaner pool
    List<CompletableFuture<Boolean>> futures = new ArrayList<>();
    if (!subDirs.isEmpty()) {
      sortByConsumedSpace(subDirs);
      subDirs.forEach(subDir -> {
        CompletableFuture<Boolean> subFuture = new CompletableFuture<>();
        pool.execute(() -> traverseAndDelete(subDir.getPath(), false, subFuture));
        futures.add(subFuture);
      });
    }

    // When all subdirectories are done, delete this directory if everything
    // inside it was deleted (the root directory itself is never deleted)
    FutureUtils.addListener(
        CompletableFuture.allOf(futures.toArray(new CompletableFuture[futures.size()])),
        (voidObj, e) -> {
          if (e != null) {
            result.completeExceptionally(e);
            return;
          }
          try {
            boolean allSubDirsDeleted = futures.stream().allMatch(CompletableFuture::join);
            boolean deleted = allFilesDeleted && allSubDirsDeleted && isEmptyDirDeletable(dir);
            if (deleted && !root) {
              deleted = deleteAction(() -> fs.delete(dir, false), "dir", dir);
            }
            result.complete(deleted);
          } catch (Exception ie) {
            result.completeExceptionally(ie);
          }
        });
  } catch (Exception e) {
    LOG.debug("Failed to traverse and delete the path: {}", dir, e);
    result.completeExceptionally(e);
  }
}
```
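
Stripped of the thread pool and CompletableFuture plumbing, traverseAndDelete is a post-order walk. The single-threaded sketch below uses java.nio.file on a local directory instead of HDFS; TraverseSketch and its deletable predicate are hypothetical and stand in for checkAndDeleteFiles and the cleaner chain.

```java
import java.io.IOException;
import java.nio.file.DirectoryStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.ArrayList;
import java.util.List;
import java.util.function.Predicate;

// Hypothetical, single-threaded version of the traverseAndDelete idea (not HBase code).
final class TraverseSketch {
  // Returns true if everything under dir was deleted.
  static boolean traverseAndDelete(Path dir, boolean root, Predicate<Path> deletable)
      throws IOException {
    // Snapshot the children first so we never delete while iterating the stream.
    List<Path> children = new ArrayList<>();
    try (DirectoryStream<Path> entries = Files.newDirectoryStream(dir)) {
      for (Path p : entries) {
        children.add(p);
      }
    }
    boolean allDeleted = true;
    for (Path p : children) {
      if (Files.isDirectory(p)) {
        // Non-short-circuit &= so every subdirectory is still visited.
        allDeleted &= traverseAndDelete(p, false, deletable);
      } else if (deletable.test(p)) {
        Files.delete(p);
      } else {
        allDeleted = false; // a file is being kept, so this directory must stay
      }
    }
    // Like the original, never delete the root directory itself.
    if (allDeleted && !root) {
      Files.delete(dir);
    }
    return allDeleted;
  }
}
```

Unlike the original, this version recurses on the calling thread. HBase instead hands each subdirectory to a pool and combines the results with CompletableFuture.allOf, which lets a large oldWALs tree be cleaned in parallel.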

traverseAndDelete calls checkAndDeleteFiles(files), which decides which files to delete and deletes them. Files whose names fail validation are deleted unconditionally; every other file must be marked deletable by each cleaner in cleanersChain. Its input is the list of files in the directory currently being traversed, and it returns true only if all of them were deleted:

```java
private boolean checkAndDeleteFiles(List<FileStatus> files) {
  if (files == null) {
    return true;
  }

  // first check to see if the path is valid
  List<FileStatus> validFiles = Lists.newArrayListWithCapacity(files.size());
  List<FileStatus> invalidFiles = Lists.newArrayList();
  for (FileStatus file : files) {
    if (validate(file.getPath())) {
      validFiles.add(file);
    } else {
      LOG.warn("Found a wrongly formatted file: " + file.getPath() + " - will delete it.");
      invalidFiles.add(file);
    }
  }

  Iterable<FileStatus> deletableValidFiles = validFiles;
  // check each of the cleaners for the valid files
  for (T cleaner : cleanersChain) {
    if (cleaner.isStopped() || this.getStopper().isStopped()) {
      LOG.warn("A file cleaner" + this.getName() + " is stopped, won't delete any more files in:"
          + this.oldFileDir);
      return false;
    }

    Iterable<FileStatus> filteredFiles = cleaner.getDeletableFiles(deletableValidFiles);

    // trace which cleaner is holding on to each file
    if (LOG.isTraceEnabled()) {
      ImmutableSet<FileStatus> filteredFileSet = ImmutableSet.copyOf(filteredFiles);
      for (FileStatus file : deletableValidFiles) {
        if (!filteredFileSet.contains(file)) {
          LOG.trace(file.getPath() + " is not deletable according to:" + cleaner);
        }
      }
    }

    deletableValidFiles = filteredFiles;
  }

  Iterable<FileStatus> filesToDelete = Iterables.concat(invalidFiles, deletableValidFiles);
  return deleteFiles(filesToDelete) == files.size();
}
```

deleteFiles then removes each selected file and returns the number of files actually deleted:

```java
protected int deleteFiles(Iterable<FileStatus> filesToDelete) {
  int deletedFileCount = 0;
  for (FileStatus file : filesToDelete) {
    Path filePath = file.getPath();
    LOG.trace("Removing {} from archive", filePath);
    try {
      boolean success = this.fs.delete(filePath, false);
      if (success) {
        deletedFileCount++;
      } else {
        LOG.warn("Attempted to delete:" + filePath
            + ", but couldn't. Run cleaner chain and attempt to delete on next pass.");
      }
    } catch (IOException e) {
      e = e instanceof RemoteException ?
          ((RemoteException) e).unwrapRemoteException() : e;
      LOG.warn("Error while deleting: " + filePath, e);
    }
  }
  return deletedFileCount;
}
```

Summary

HLog deletion is carried out by a background thread that runs periodically and deletes a file only when all of the cleaner conditions are met.

HLog files produced by the write path are not kept in the system permanently: once the data they cover has been flushed, they expire and are moved to oldWALs, and they are deleted after their TTL has passed and replication no longer needs them.