From the moment a finger touches the screen until a MotionEvent reaches an Activity or View, what exactly happens in between? Where do Android touch events come from, and how are they delivered? This article walks through the whole process at an intuitive level. The goal is to build an overall picture, not to analyze every line of source code.

Android Touch Event Model

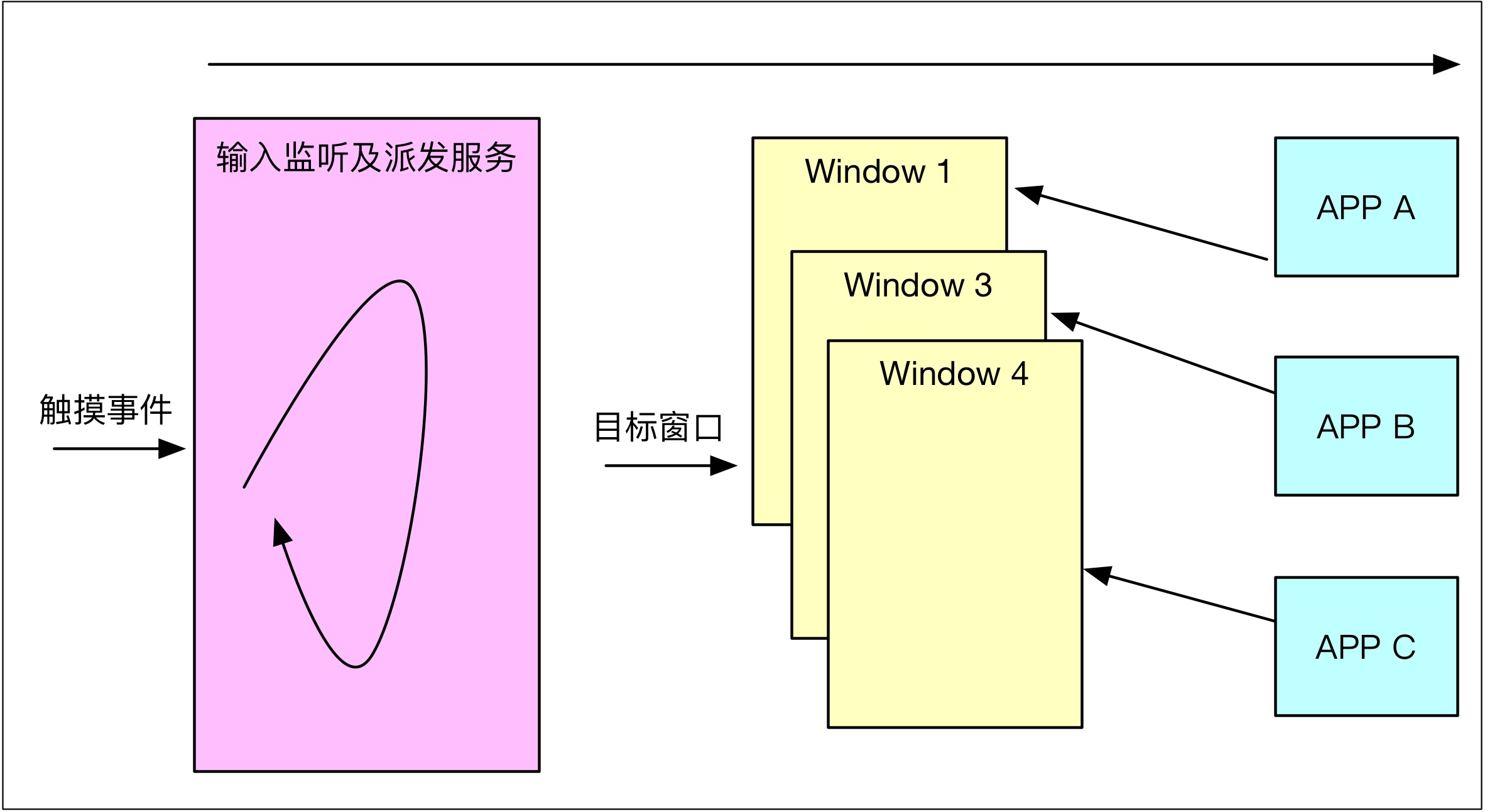

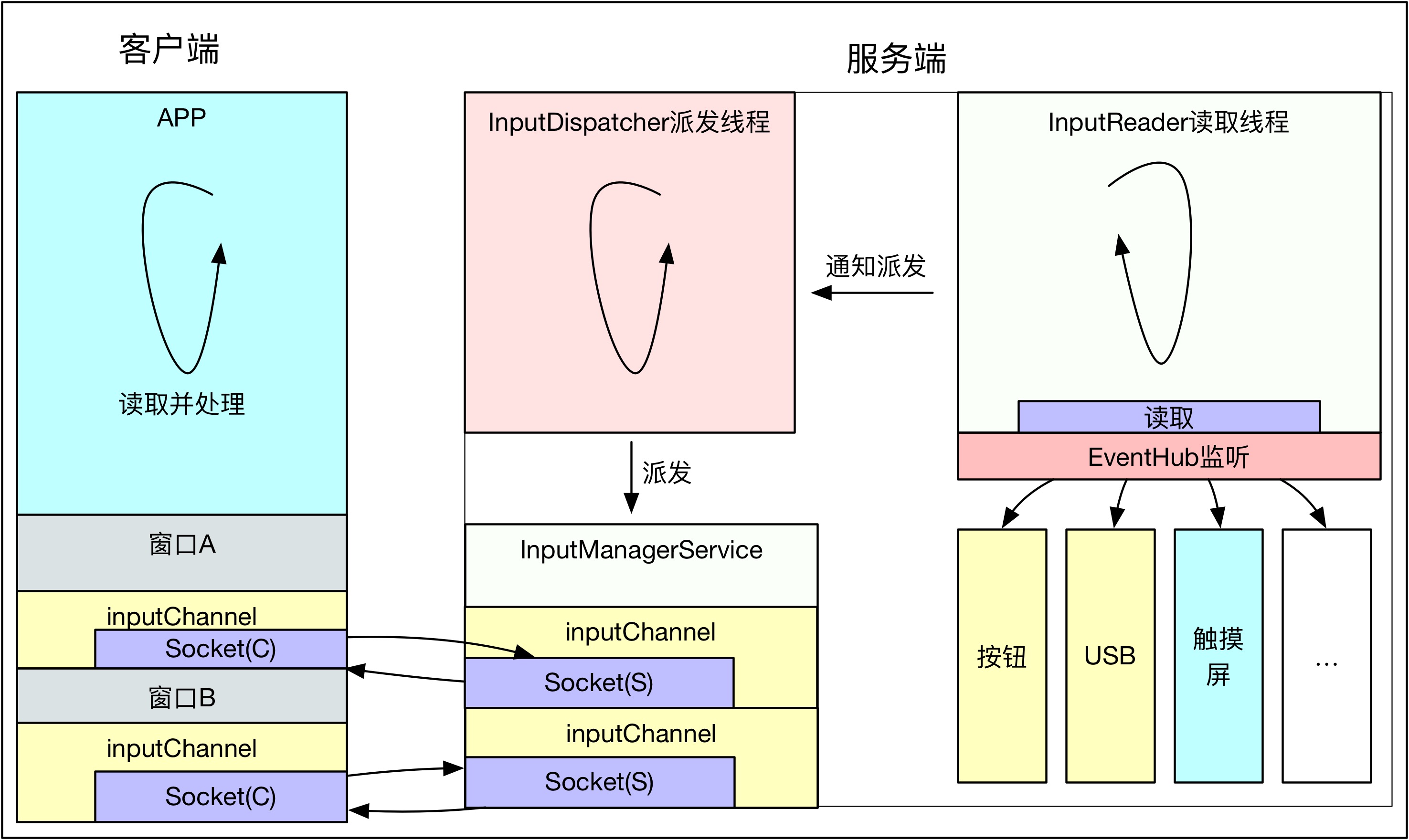

Before a touch event can be delivered to a window, it has to be captured. That raises three questions. First, some thread must continuously monitor the screen and capture each touch event as it occurs. Second, there must be a way to find the target window: several app windows may be visible to the user at the same time, so the system has to decide which window the event should actually be delivered to. Third, how does the target window consume the event?

InputManagerService is the service Android uses to handle all kinds of user input. It is a Binder service entity, but its role as a Binder service is fairly small: over Binder it mainly exposes information about input devices. It is instantiated when the system_server process starts and is registered with ServiceManager:

```java
private void startOtherServices() {
    ...
    inputManager = new InputManagerService(context);
    wm = WindowManagerService.main(context, inputManager,
            mFactoryTestMode != FactoryTest.FACTORY_TEST_LOW_LEVEL,
            !mFirstBoot, mOnlyCore);
    ServiceManager.addService(Context.WINDOW_SERVICE, wm);
    ServiceManager.addService(Context.INPUT_SERVICE, inputManager);
    ...
}
```

InputManagerService and WindowManagerService are created and registered almost at the same time, and the two are closely coupled: handling a touch event involves both services. The clearest evidence, visible in the code above, is that WindowManagerService is handed a direct reference to InputManagerService. In this model, InputManagerService is mainly responsible for collecting touch events, while WindowManagerService is responsible for finding the target window. Next, let's look at how InputManagerService collects touch events.

How to capture touch events

InputManagerService starts a dedicated thread to read touch events. The native setup begins in the NativeInputManager constructor, which creates an EventHub and an InputManager:

```cpp
NativeInputManager::NativeInputManager(jobject contextObj,
        jobject serviceObj, const sp<Looper>& looper) :
        mLooper(looper), mInteractive(true) {
    ...
    sp<EventHub> eventHub = new EventHub();
    mInputManager = new InputManager(eventHub, this, this);
}
```

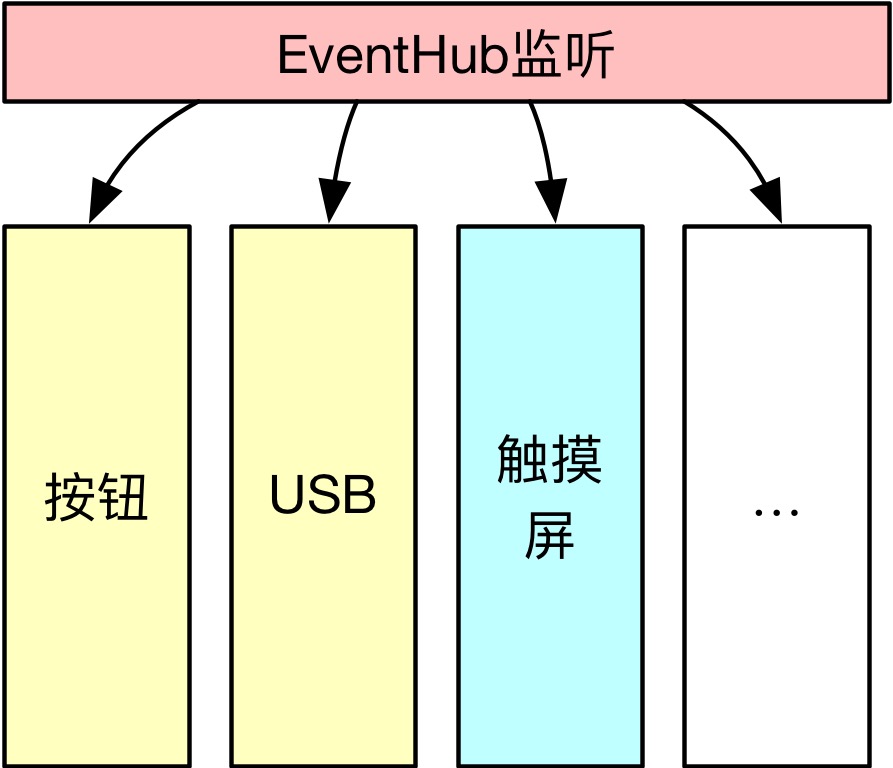

The EventHub uses the Linux inotify and epoll mechanisms to monitor device events, including devices being plugged in or removed as well as touch and key events. It acts as a hub for the various input devices, mainly the device nodes under /dev/input, such as /dev/input/event0. Input events can be monitored and retrieved through EventHub's getEvents():

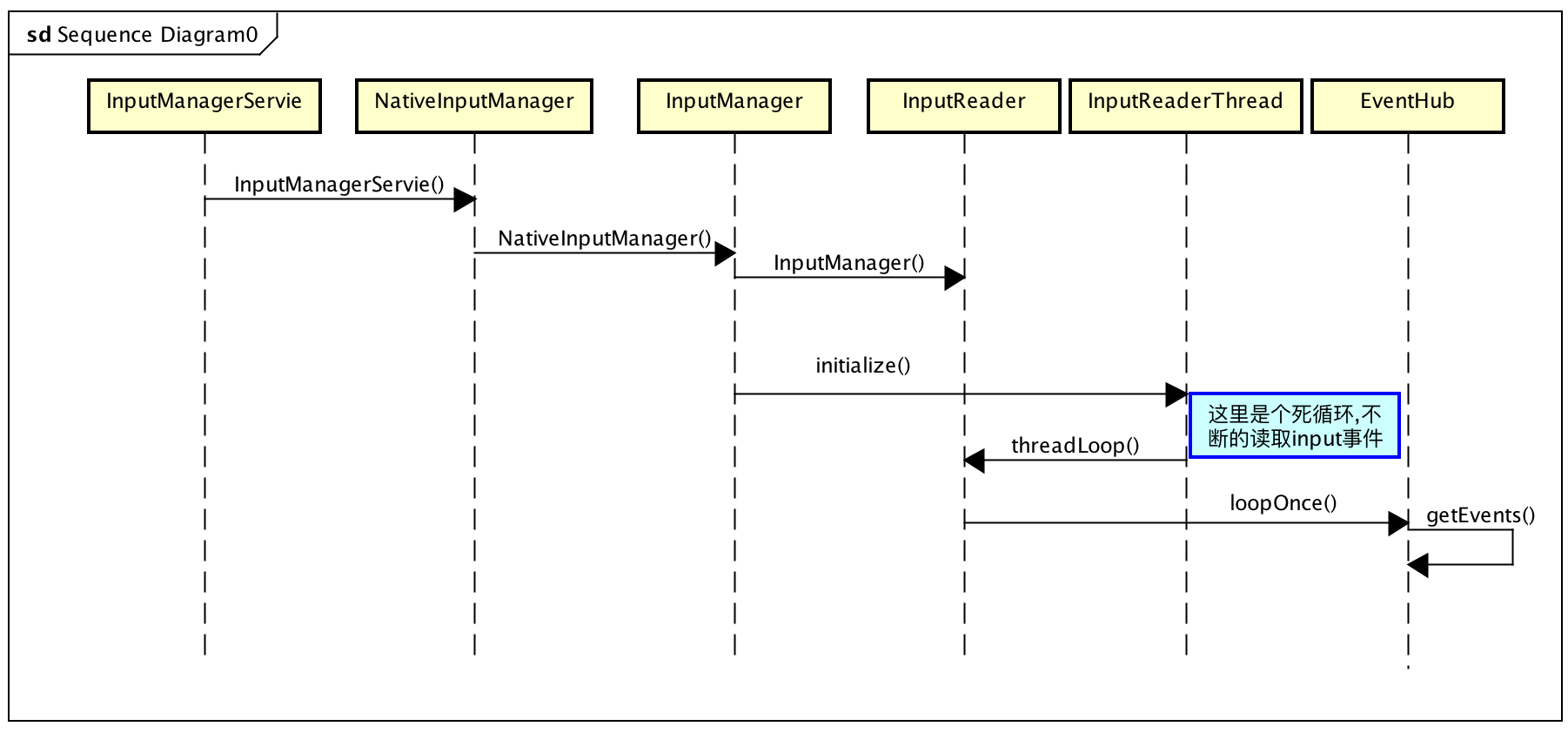
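The monitoring step can be illustrated with a minimal, Linux-only sketch of the epoll part of EventHub: register each device fd, then block in `epoll_wait()` until one of them becomes readable. The helper names `createDeviceEpoll` and `waitForReadableDevices` are hypothetical, the inotify handling of hot-plugged devices is omitted, and a pipe can stand in for a /dev/input/eventX node, since opening real device nodes normally requires system privileges.

```cpp
#include <sys/epoll.h>
#include <unistd.h>
#include <vector>

// Hypothetical helper: create an epoll instance that watches every fd in
// `fds` for readability, roughly what EventHub does per /dev/input node.
int createDeviceEpoll(const std::vector<int>& fds) {
    int epfd = epoll_create1(EPOLL_CLOEXEC);
    if (epfd < 0) return -1;
    for (int fd : fds) {
        epoll_event ev{};
        ev.events = EPOLLIN;
        ev.data.fd = fd;  // remember which "device" woke us up
        if (epoll_ctl(epfd, EPOLL_CTL_ADD, fd, &ev) < 0) {
            close(epfd);
            return -1;
        }
    }
    return epfd;
}

// Block for up to timeoutMs and return the fds that have data to read,
// analogous to the waiting step inside EventHub::getEvents().
std::vector<int> waitForReadableDevices(int epfd, int timeoutMs) {
    epoll_event events[16];
    int n = epoll_wait(epfd, events, 16, timeoutMs);
    std::vector<int> ready;
    for (int i = 0; i < n; ++i) {  // n is -1 on error, so the loop is skipped
        if (events[i].events & EPOLLIN) ready.push_back(events[i].data.fd);
    }
    return ready;
}
```

A single thread can therefore sleep on many devices at once and wake only when one of them actually has input, which is what lets the reader thread loop cheaply.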

Constructing the InputManager creates an InputReader object and an InputReaderThread loop thread. The main job of this loop thread is to fetch input events through EventHub's getEvents():

```cpp
InputManager::InputManager(
        const sp<EventHubInterface>& eventHub,
        const sp<InputReaderPolicyInterface>& readerPolicy,
        const sp<InputDispatcherPolicyInterface>& dispatcherPolicy) {
    // Performs event dispatching
    mDispatcher = new InputDispatcher(dispatcherPolicy);
    // Performs event reading
    mReader = new InputReader(eventHub, readerPolicy, mDispatcher);
    initialize();
}

void InputManager::initialize() {
    mReaderThread = new InputReaderThread(mReader);
    mDispatcherThread = new InputDispatcherThread(mDispatcher);
}

bool InputReaderThread::threadLoop() {
    mReader->loopOnce();
    return true;
}

void InputReader::loopOnce() {
    int32_t oldGeneration;
    int32_t timeoutMillis;
    bool inputDevicesChanged = false;
    Vector<InputDeviceInfo> inputDevices;
    {
        ...
        // Wait for and read events
        size_t count = mEventHub->getEvents(timeoutMillis, mEventBuffer, EVENT_BUFFER_SIZE);
        ...
        // Process the events
        processEventsLocked(mEventBuffer, count);
        ...
    }
    ...
    // Notify the dispatcher
    mQueuedListener->flush();
}
```

After this step the input events have been read: getEvents() fills a buffer of RawEvent records, processEventsLocked() does the preliminary processing on them, and flush() finally sends a notification asking for the results to be dispatched. That solves the problem of reading events; the rest of the article focuses on how they are dispatched.
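The preliminary processing can be pictured with a simplified, single-pointer sketch: the kernel reports a touch as a series of evdev records (one per changed axis) closed by a `SYN_REPORT` marker, and the reader accumulates them into one higher-level event per frame. The `RawEvent` struct here is only loosely modeled on AOSP's; `MotionArgs` and `TouchAccumulator` are hypothetical names, and the real InputReader mappers handle multi-touch slots, tracking IDs, and calibration, all omitted here. The constant values match Linux's `<linux/input-event-codes.h>`.

```cpp
#include <cstdint>
#include <vector>

// Linux evdev constants (same values as <linux/input-event-codes.h>).
constexpr int32_t kEvSyn = 0x00;
constexpr int32_t kEvAbs = 0x03;
constexpr int32_t kSynReport = 0;
constexpr int32_t kAbsMtPositionX = 0x35;
constexpr int32_t kAbsMtPositionY = 0x36;

// Loosely modeled on the records EventHub::getEvents() hands back.
struct RawEvent {
    int64_t when;
    int32_t deviceId;
    int32_t type;
    int32_t code;
    int32_t value;
};

// Hypothetical "cooked" event passed on to the dispatcher.
struct MotionArgs {
    int64_t eventTime;
    int32_t x;
    int32_t y;
};

// Single-pointer accumulator: axis updates are buffered until a SYN_REPORT
// closes the frame, then exactly one MotionArgs is emitted for that frame.
class TouchAccumulator {
public:
    void process(const RawEvent& ev, std::vector<MotionArgs>& out) {
        if (ev.type == kEvAbs) {
            if (ev.code == kAbsMtPositionX) { x_ = ev.value; dirty_ = true; }
            if (ev.code == kAbsMtPositionY) { y_ = ev.value; dirty_ = true; }
        } else if (ev.type == kEvSyn && ev.code == kSynReport && dirty_) {
            out.push_back(MotionArgs{ev.when, x_, y_});
            dirty_ = false;
        }
    }

private:
    int32_t x_ = 0;      // last known X (the kernel omits unchanged axes)
    int32_t y_ = 0;      // last known Y
    bool dirty_ = false; // true if any axis changed since the last frame
};
```

Because the kernel only reports axes whose values changed, the accumulator keeps the last known coordinate, so a frame carrying only a new Y value still yields a complete (x, y) pair.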

Dispatching events

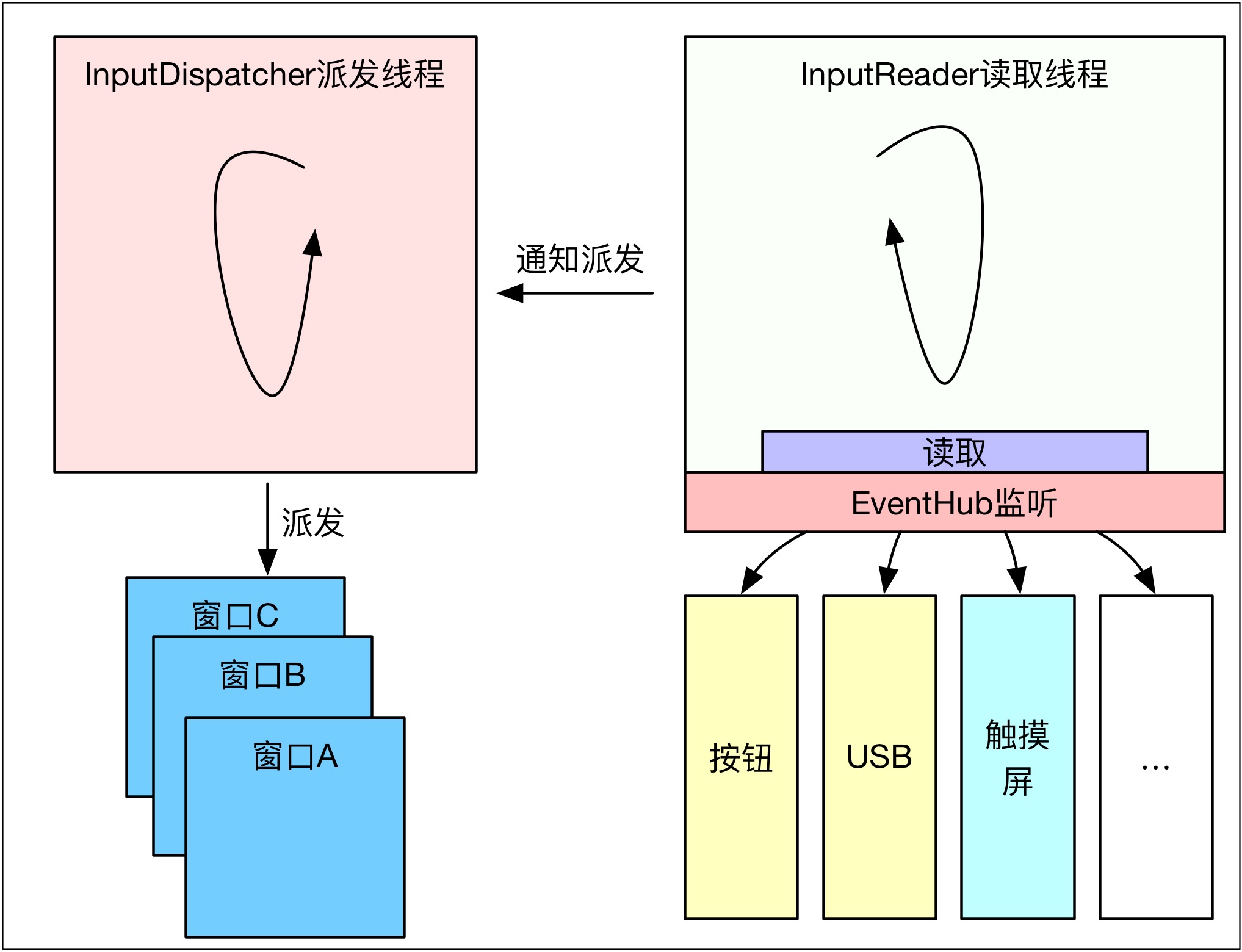

Constructing the InputManager creates not only an event reader thread but also an event dispatcher thread. Events could in principle be dispatched directly on the reader thread, but that would lengthen every read cycle and delay the reading of subsequent events. So once an event has been read, the reader simply notifies the dispatcher thread and lets it do the processing. This keeps the reader thread responsive and helps prevent events from being lost. That is the InputManager model shown in the figure above.

InputReader's mQueuedListener is a QueuedInputListener that wraps the InputDispatcher object, so mQueuedListener->flush() delivers the queued notifications to InputDispatcher, telling it that events have been read and can be dispatched. InputDispatcherThread is a typical Looper thread: the native Looper implements a Handler-style message-processing model. When an input event arrives, the thread wakes up and processes it; once it is done, it goes back to sleep and waits. The simplified code is as follows:

```cpp
bool InputDispatcherThread::threadLoop() {
    mDispatcher->dispatchOnce();
    return true;
}

void InputDispatcher::dispatchOnce() {
    nsecs_t nextWakeupTime = LONG_LONG_MAX;
    {
        // Once woken up, process pending input events
        if (!haveCommandsLocked()) {
            dispatchOnceInnerLocked(&nextWakeupTime);
        }
        ...
    }
    nsecs_t currentTime = now();
    int timeoutMillis = toMillisecondTimeoutDelay(currentTime, nextWakeupTime);
    // Sleep until the next input event arrives (or the timeout expires)
    mLooper->pollOnce(timeoutMillis);
}
```
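The reader-to-dispatcher hand-off can be sketched as a producer-consumer queue: the reader thread enqueues an event and returns immediately, and the dispatcher thread sleeps until something arrives. This is an illustration under assumptions, not AOSP code: `EventQueue` is a hypothetical name, and a `std::condition_variable` stands in for the Looper wake-up that the real InputDispatcher uses.

```cpp
#include <condition_variable>
#include <deque>
#include <mutex>
#include <optional>
#include <utility>

// Hypothetical stand-in for the reader -> dispatcher hand-off. The real
// InputDispatcher wakes its Looper; a condition variable plays that role here.
template <typename Event>
class EventQueue {
public:
    // Reader thread: enqueue and return at once so it can keep polling devices.
    void push(Event ev) {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            queue_.push_back(std::move(ev));
        }
        cv_.notify_one();  // wake the sleeping dispatcher thread
    }

    // Dispatcher thread: sleep until an event arrives or the queue is closed.
    // Returns std::nullopt only once the queue is closed and fully drained.
    std::optional<Event> pop() {
        std::unique_lock<std::mutex> lock(mutex_);
        cv_.wait(lock, [this] { return !queue_.empty() || closed_; });
        if (queue_.empty()) return std::nullopt;
        Event ev = std::move(queue_.front());
        queue_.pop_front();
        return ev;
    }

    // Stop accepting waits; pending events can still be drained.
    void close() {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            closed_ = true;
        }
        cv_.notify_all();
    }

private:
    std::mutex mutex_;
    std::condition_variable cv_;
    std::deque<Event> queue_;
    bool closed_ = false;
};
```

Keeping `push()` short (one lock, one append, one notify) is the point of the design: the reader thread never waits on dispatching work, which is the argument for using two threads in the first place.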

That is the dispatcher thread model. dispatchOnceInnerLocked() contains the actual dispatch logic; here we follow just one branch, touch events:

```cpp
void InputDispatcher::dispatchOnceInnerLocked(nsecs_t* nextWakeupTime) {
    ...
    case EventEntry::TYPE_MOTION: {
        MotionEntry* typedEntry = static_cast<MotionEntry*>(mPendingEvent);
        ...
        done = dispatchMotionLocked(currentTime, typedEntry,
                &dropReason, nextWakeupTime);
        break;
    }
    ...
}

bool InputDispatcher::dispatchMotionLocked(
        nsecs_t currentTime, MotionEntry* entry, DropReason* dropReason,
        nsecs_t* nextWakeupTime) {
    ...
    Vector<InputTarget> inputTargets;
    bool conflictingPointerActions = false;
    int32_t injectionResult;
    if (isPointerEvent) {
        // Key point 1: find the target window(s)
        injectionResult = findTouchedWindowTargetsLocked(currentTime,
                entry, inputTargets, nextWakeupTime, &conflictingPointerActions);
    } else {
        injectionResult = findFocusedWindowTargetsLocked(currentTime,
                entry, inputTargets, nextWakeupTime);
    }
    ...
    // Key point 2: dispatch
    dispatchEventLocked(currentTime, entry, inputTargets);
    return true;
}
```

As the code shows, for touch events the target window is first found by findTouchedWindowTargetsLocked(), and the event is then sent to that window by dispatchEventLocked(). Next, let's see how the target window is found and how the window list is maintained.

How to Find the Target Window for Touch Events

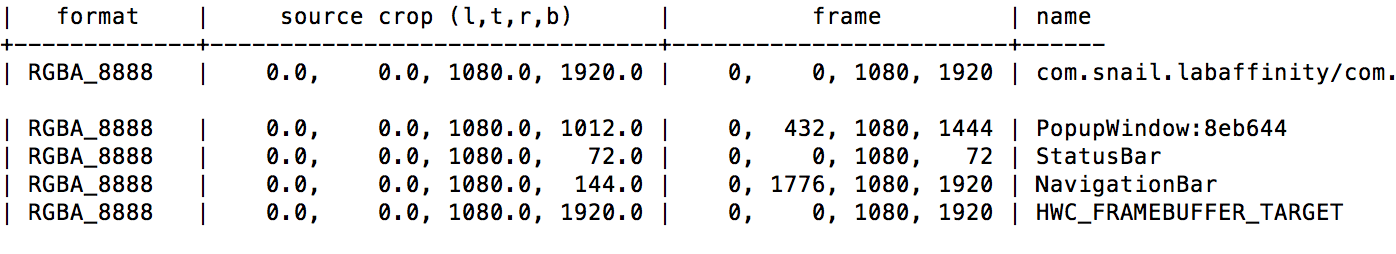

Android can support multiple displays at the same time. Each display is abstracted as a DisplayContent object, which internally maintains a WindowList recording all windows on that display: the status bar, the navigation bar, application windows, sub-windows, and so on. For touch events, what matters most are the visible windows. You can inspect how the visible windows are organized with `adb shell dumpsys SurfaceFlinger`.

So how is it decided whether a touch event belongs to the status bar, the navigation bar, or an application window? This is where DisplayContent's WindowList comes in. Because DisplayContent holds information about every window, the system can determine which window should receive the event from the event's location and the windows' attributes. In practice this is more complicated than one sentence suggests: it also depends on each window's state, transparency, split-screen information, and so on. Here is a simplified look at the process, just enough to build an intuitive understanding:

```cpp
int32_t InputDispatcher::findTouchedWindowTargetsLocked(nsecs_t currentTime,
        const MotionEntry* entry, Vector<InputTarget>& inputTargets,
        nsecs_t* nextWakeupTime, bool* outConflictingPointerActions) {
    ...
    sp<InputWindowHandle> newTouchedWindowHandle;
    bool isTouchModal = false;
    // Walk through all windows
    size_t numWindows = mWindowHandles.size();
    for (size_t i = 0; i < numWindows; i++) {
        sp<InputWindowHandle> windowHandle = mWindowHandles.itemAt(i);
        const InputWindowInfo* windowInfo = windowHandle->getInfo();
        if (windowInfo->displayId != displayId) {
            continue; // wrong display
        }
        int32_t flags = windowInfo->layoutParamsFlags;
        if (windowInfo->visible) {
            if (!(flags & InputWindowInfo::FLAG_NOT_TOUCHABLE)) {
                isTouchModal = (flags & (InputWindowInfo::FLAG_NOT_FOCUSABLE
                        | InputWindowInfo::FLAG_NOT_TOUCH_MODAL)) == 0;
                // Found the target window
                if (isTouchModal || windowInfo->touchableRegionContainsPoint(x, y)) {
                    newTouchedWindowHandle = windowHandle;
                    break; // found touched window, exit window loop
                }
            }
            ...
        }
    }
    ...
}
```

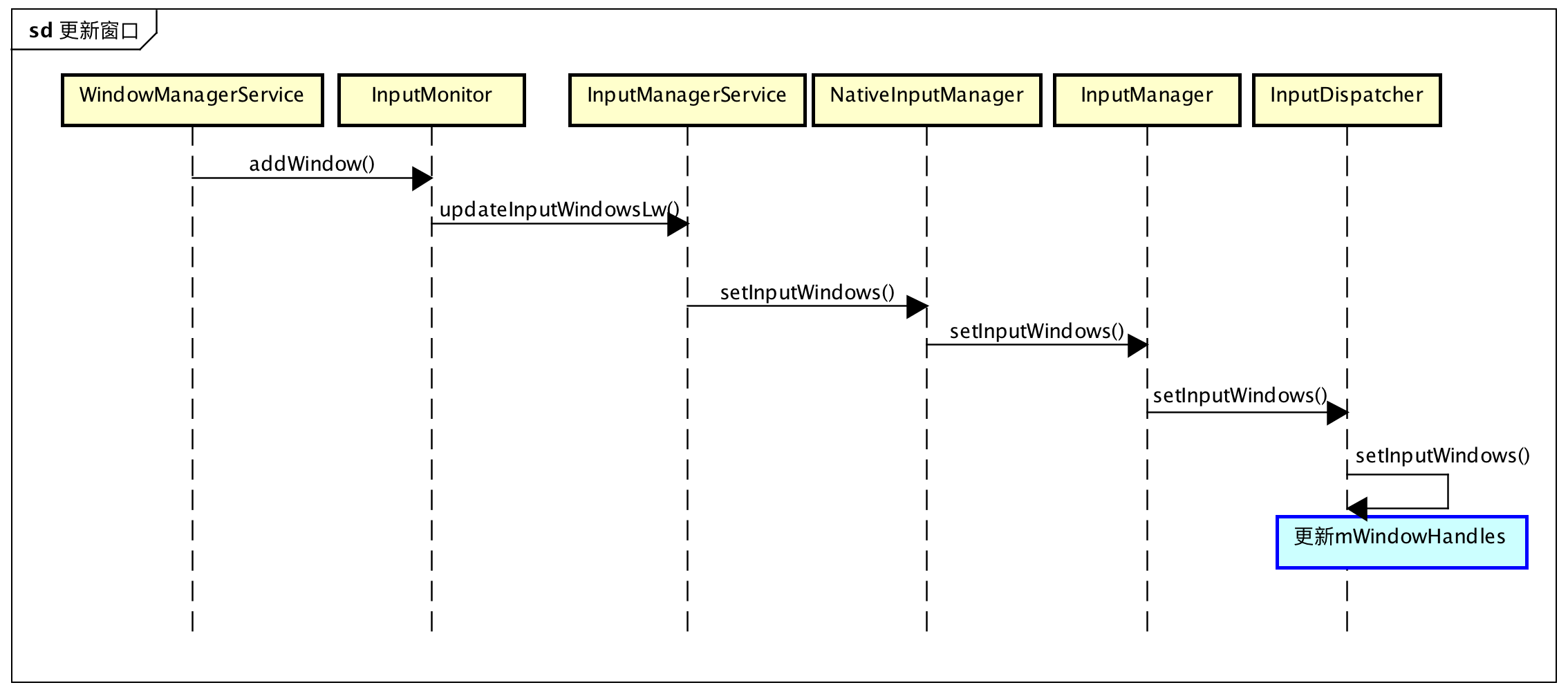
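The core of that loop can be reduced to a small, self-contained sketch. It assumes the windows are ordered front to back (index 0 is topmost) and models each touchable region as a single rectangle; `WindowInfo`, `Rect`, and `findTouchedWindow` are simplified stand-ins, not AOSP types. The flag values are those of `android.view.WindowManager.LayoutParams`.

```cpp
#include <cstddef>
#include <cstdint>
#include <string>
#include <vector>

// Flag values as defined in android.view.WindowManager.LayoutParams.
constexpr uint32_t FLAG_NOT_FOCUSABLE   = 0x00000008;
constexpr uint32_t FLAG_NOT_TOUCHABLE   = 0x00000010;
constexpr uint32_t FLAG_NOT_TOUCH_MODAL = 0x00000020;

struct Rect {
    int left, top, right, bottom;
    bool contains(int x, int y) const {
        return x >= left && x < right && y >= top && y < bottom;
    }
};

// Simplified stand-in for InputWindowInfo: one rectangle as touchable region.
struct WindowInfo {
    std::string name;
    int displayId;
    bool visible;
    uint32_t layoutParamsFlags;
    Rect touchableRegion;
};

// Walks windows front to back and returns the index of the first one that
// should receive a touch at (x, y) on displayId, or -1 if none does.
int findTouchedWindow(const std::vector<WindowInfo>& windows,
                      int displayId, int x, int y) {
    for (std::size_t i = 0; i < windows.size(); ++i) {
        const WindowInfo& w = windows[i];
        if (w.displayId != displayId) continue;  // wrong display
        if (!w.visible) continue;
        uint32_t flags = w.layoutParamsFlags;
        if (flags & FLAG_NOT_TOUCHABLE) continue;
        // A focusable window without FLAG_NOT_TOUCH_MODAL claims the touch
        // even when the point lies outside its bounds.
        bool isTouchModal =
            (flags & (FLAG_NOT_FOCUSABLE | FLAG_NOT_TOUCH_MODAL)) == 0;
        if (isTouchModal || w.touchableRegion.contains(x, y)) {
            return static_cast<int>(i);
        }
    }
    return -1;
}
```

The modal case is worth noticing: such a window shadows every window below it, which is why a small dialog can block touches to the Activity behind it unless it sets FLAG_NOT_TOUCH_MODAL.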

mWindowHandles holds all the windows, and findTouchedWindowTargetsLocked() picks the target window from it. The full rules are complex; in short, the target is chosen based on the touch position, the windows' Z order, and other window attributes. Interested readers can dig into the details themselves. What matters here is where mWindowHandles comes from and how it is kept up to date as windows are added or removed. This involves interaction with WindowManagerService: mWindowHandles is set in InputDispatcher::setInputWindows():

```cpp
void InputDispatcher::setInputWindows(
        const Vector<sp<InputWindowHandle> >& inputWindowHandles) {
    ...
    mWindowHandles = inputWindowHandles;
    ...
}
```

Who calls this function? The real entry point is InputMonitor in WindowManagerService, which ends up calling InputDispatcher::setInputWindows() indirectly. These calls are mainly triggered by window changes, such as a window being added, updated, or removed. addWindow() is one example: after a window is added, WMS asks InputMonitor to update the input windows, and the new window list is pushed down to InputDispatcher.

From this flow, we can see why WindowManagerService and InputManagerService complement each other. That settles how the target window is found; next is how the event is sent to it.

How to send events to the target window

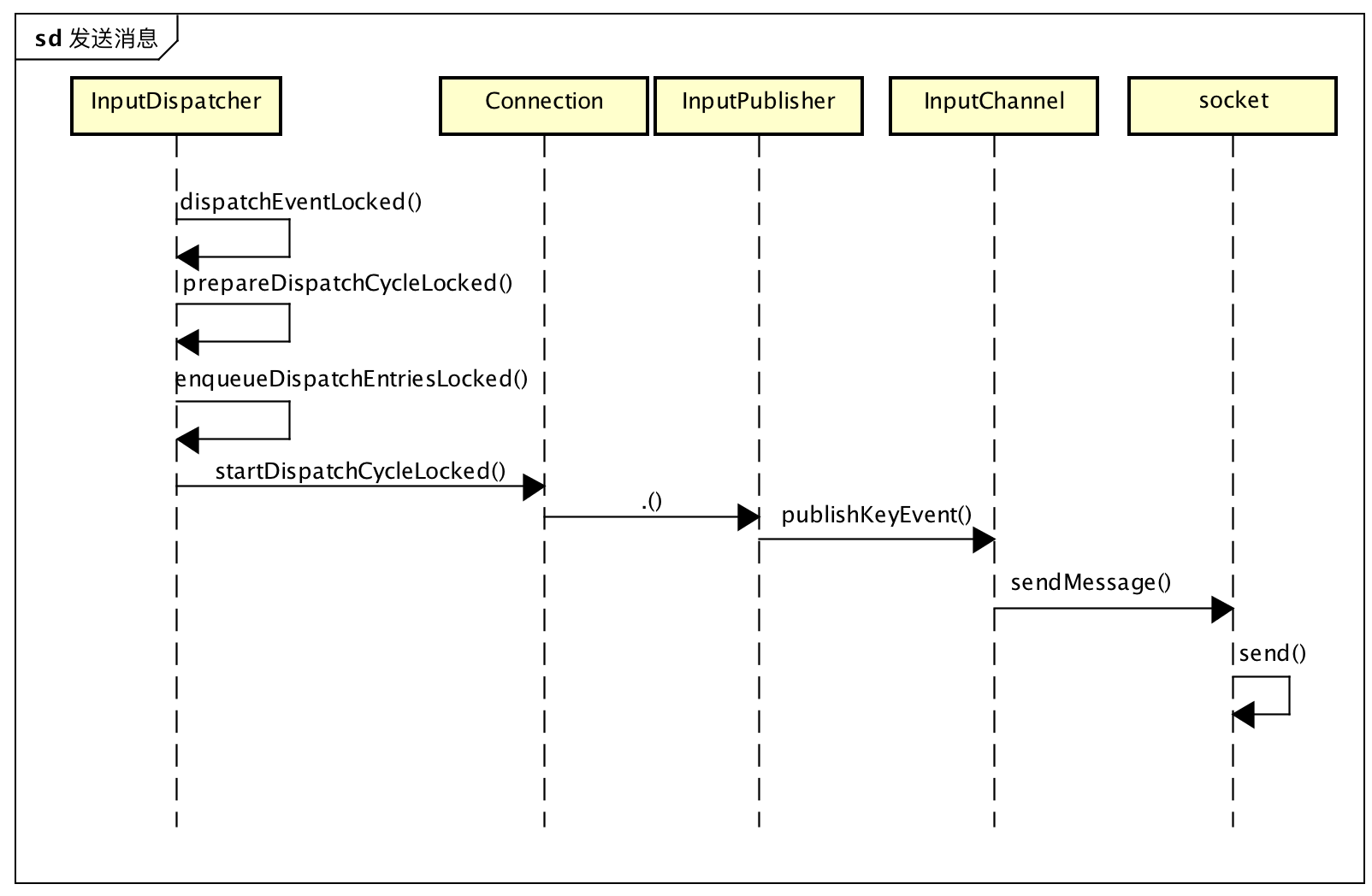

Once the target window has been found and the event has been wrapped, what remains is notifying the target window. The obvious problem is that all of the logic so far runs in the system_server process, while the window to be notified lives in the app's process. So how does the notification cross processes? You might instinctively think of Binder, since it is the most widely used IPC mechanism in Android, but input events are not delivered over Binder: newer versions use a socket, while older versions used a pipe.

```cpp
void InputDispatcher::dispatchEventLocked(nsecs_t currentTime,
        EventEntry* eventEntry, const Vector<InputTarget>& inputTargets) {
    pokeUserActivityLocked(eventEntry);
    for (size_t i = 0; i < inputTargets.size(); i++) {
        const InputTarget& inputTarget = inputTargets.itemAt(i);
        ssize_t connectionIndex = getConnectionIndexLocked(inputTarget.inputChannel);
        if (connectionIndex >= 0) {
            sp<Connection> connection = mConnectionsByFd.valueAt(connectionIndex);
            prepareDispatchCycleLocked(currentTime, connection, eventEntry, &inputTarget);
        } else {
        }
    }
}
```

Following the code down layer by layer, you will find that it eventually calls InputChannel's sendMessage(), which sends the event to the app side over the socket.

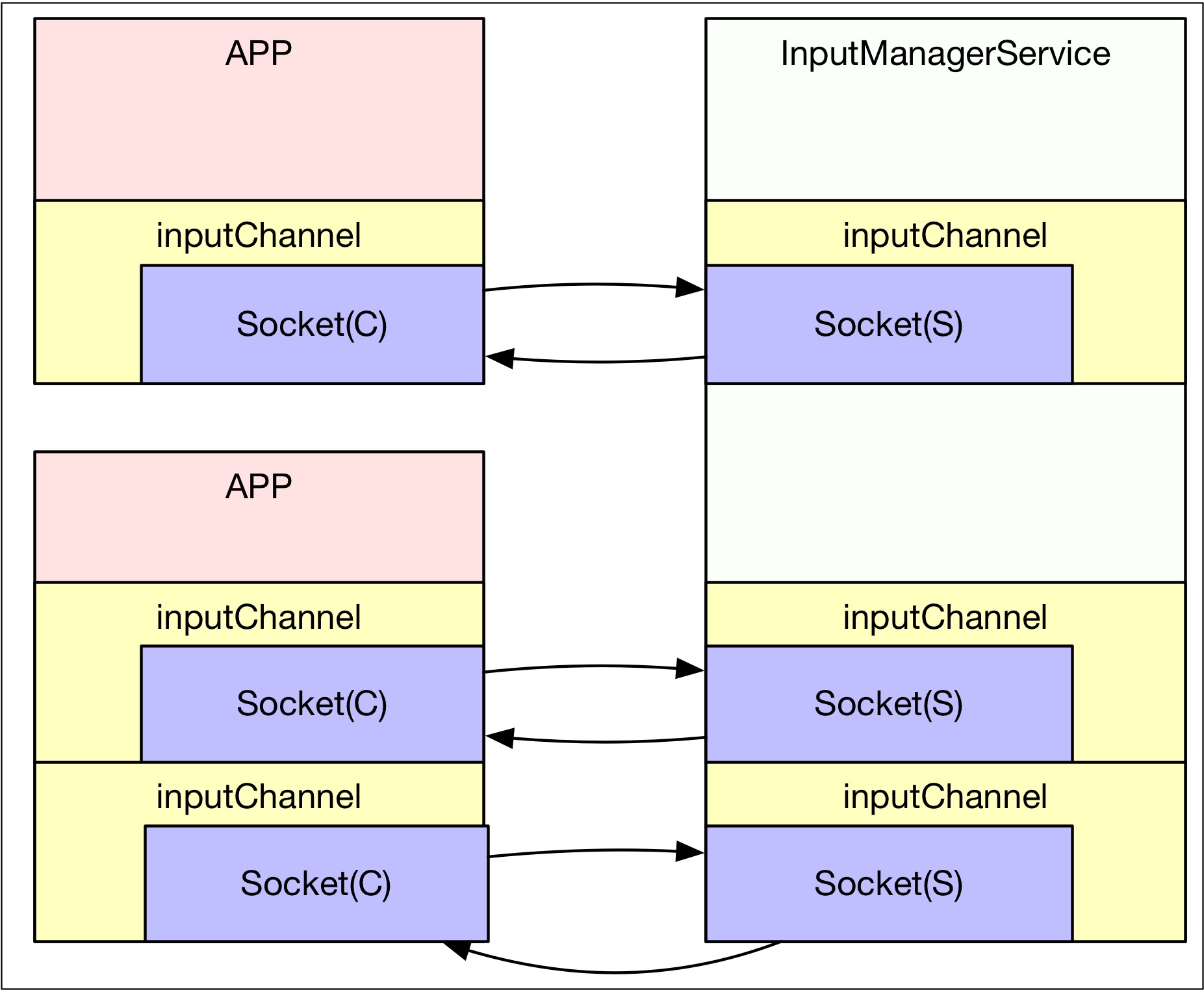
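A minimal sketch of what sending boils down to: one fixed-size message per `send()` on a `SOCK_SEQPACKET` socket. That socket type preserves message boundaries, so the receiver always reads whole messages, never half of one or two glued together. `TouchMessage` and the two helpers are hypothetical and far simpler than AOSP's `InputMessage`; the non-blocking `MSG_DONTWAIT | MSG_NOSIGNAL` flags and the `EINTR` retry are this sketch's choices for avoiding blocking and `SIGPIPE`.

```cpp
#include <cerrno>
#include <cstdint>
#include <sys/socket.h>
#include <sys/types.h>

// Hypothetical, much-simplified message; the real InputMessage carries many
// more fields (pointer coordinates, meta state, timestamps, ...).
struct TouchMessage {
    uint32_t seq;
    int32_t action;
    float x;
    float y;
};

// Send one message as one SOCK_SEQPACKET record. Retries on EINTR; returns
// false on any other failure (e.g. EAGAIN when the peer's buffer is full).
bool sendTouchMessage(int fd, const TouchMessage& msg) {
    ssize_t n;
    do {
        n = send(fd, &msg, sizeof(msg), MSG_DONTWAIT | MSG_NOSIGNAL);
    } while (n < 0 && errno == EINTR);
    return n == static_cast<ssize_t>(sizeof(msg));
}

// Receive exactly one record without blocking. Returns false if nothing is
// pending (EAGAIN) or the record has an unexpected size.
bool receiveTouchMessage(int fd, TouchMessage& out) {
    ssize_t n;
    do {
        n = recv(fd, &out, sizeof(out), MSG_DONTWAIT);
    } while (n < 0 && errno == EINTR);
    return n == static_cast<ssize_t>(sizeof(out));
}
```

Usage is symmetric: the dispatcher side writes into one end of a `socketpair(AF_UNIX, SOCK_SEQPACKET, 0, sv)` and the app side reads from the other.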

Where does this socket come from? More precisely, how is the pair of sockets used by the two ends created? The answer again involves WindowManagerService. When the app asks WMS to add a window, an InputChannel is created along with it, and adding a window always goes through ViewRootImpl's setView():

ViewRootImpl

```java
public void setView(View view, WindowManager.LayoutParams attrs, View panelParentView) {
    ...
    requestLayout();
    if ((mWindowAttributes.inputFeatures
            & WindowManager.LayoutParams.INPUT_FEATURE_NO_INPUT_CHANNEL) == 0) {
        // Create the InputChannel container
        mInputChannel = new InputChannel();
    }
    try {
        mOrigWindowType = mWindowAttributes.type;
        mAttachInfo.mRecomputeGlobalAttributes = true;
        collectViewAttributes();
        // Add the window and request the socket input channel
        res = mWindowSession.addToDisplay(mWindow, mSeq, mWindowAttributes,
                getHostVisibility(), mDisplay.getDisplayId(),
                mAttachInfo.mContentInsets, mAttachInfo.mStableInsets,
                mAttachInfo.mOutsets, mInputChannel);
    } ...
    // Start listening on the input channel
    if (mInputChannel != null) {
        if (mInputQueueCallback != null) {
            mInputQueue = new InputQueue();
            mInputQueueCallback.onInputQueueCreated(mInputQueue);
        }
        mInputEventReceiver = new WindowInputEventReceiver(mInputChannel,
                Looper.myLooper());
    }
    ...
}
```

In IWindowSession.aidl, the InputChannel parameter is declared `out`, which means the server side fills it in. So how does the server, WMS, fill it in?

```java
public int addWindow(Session session, IWindow client, int seq,
        WindowManager.LayoutParams attrs, int viewVisibility, int displayId,
        Rect outContentInsets, Rect outStableInsets, Rect outOutsets,
        InputChannel outInputChannel) {
    ...
    if (outInputChannel != null && (attrs.inputFeatures
            & WindowManager.LayoutParams.INPUT_FEATURE_NO_INPUT_CHANNEL) == 0) {
        String name = win.makeInputChannelName();
        // Key point 1: create the communication channel
        InputChannel[] inputChannels = InputChannel.openInputChannelPair(name);
        // Kept locally (server side)
        win.setInputChannel(inputChannels[0]);
        // Handed to the app side
        inputChannels[1].transferTo(outInputChannel);
        // Register the channel together with the window
        mInputManager.registerInputChannel(win.mInputChannel, win.mInputWindowHandle);
    }
    ...
}
```

WMS first creates a socketpair as a full-duplex channel and wraps its two ends in the server-side and client-side InputChannels. It then has InputManager bind the input channel to the current window, so the system knows which window communicates over which channel. Finally, outInputChannel is returned to the app over Binder. Below is the code that creates the socketpair:

```cpp
status_t InputChannel::openInputChannelPair(const String8& name,
        sp<InputChannel>& outServerChannel, sp<InputChannel>& outClientChannel) {
    int sockets[2];
    if (socketpair(AF_UNIX, SOCK_SEQPACKET, 0, sockets)) {
        status_t result = -errno;
        ...
        return result;
    }

    int bufferSize = SOCKET_BUFFER_SIZE;
    setsockopt(sockets[0], SOL_SOCKET, SO_SNDBUF, &bufferSize, sizeof(bufferSize));
    setsockopt(sockets[0], SOL_SOCKET, SO_RCVBUF, &bufferSize, sizeof(bufferSize));
    setsockopt(sockets[1], SOL_SOCKET, SO_SNDBUF, &bufferSize, sizeof(bufferSize));
    setsockopt(sockets[1], SOL_SOCKET, SO_RCVBUF, &bufferSize, sizeof(bufferSize));

    // Fill in the server-side InputChannel
    String8 serverChannelName = name;
    serverChannelName.append(" (server)");
    outServerChannel = new InputChannel(serverChannelName, sockets[0]);

    // Fill in the client-side InputChannel
    String8 clientChannelName = name;
    clientChannelName.append(" (client)");
    outClientChannel = new InputChannel(clientChannelName, sockets[1]);
    return OK;
}
```

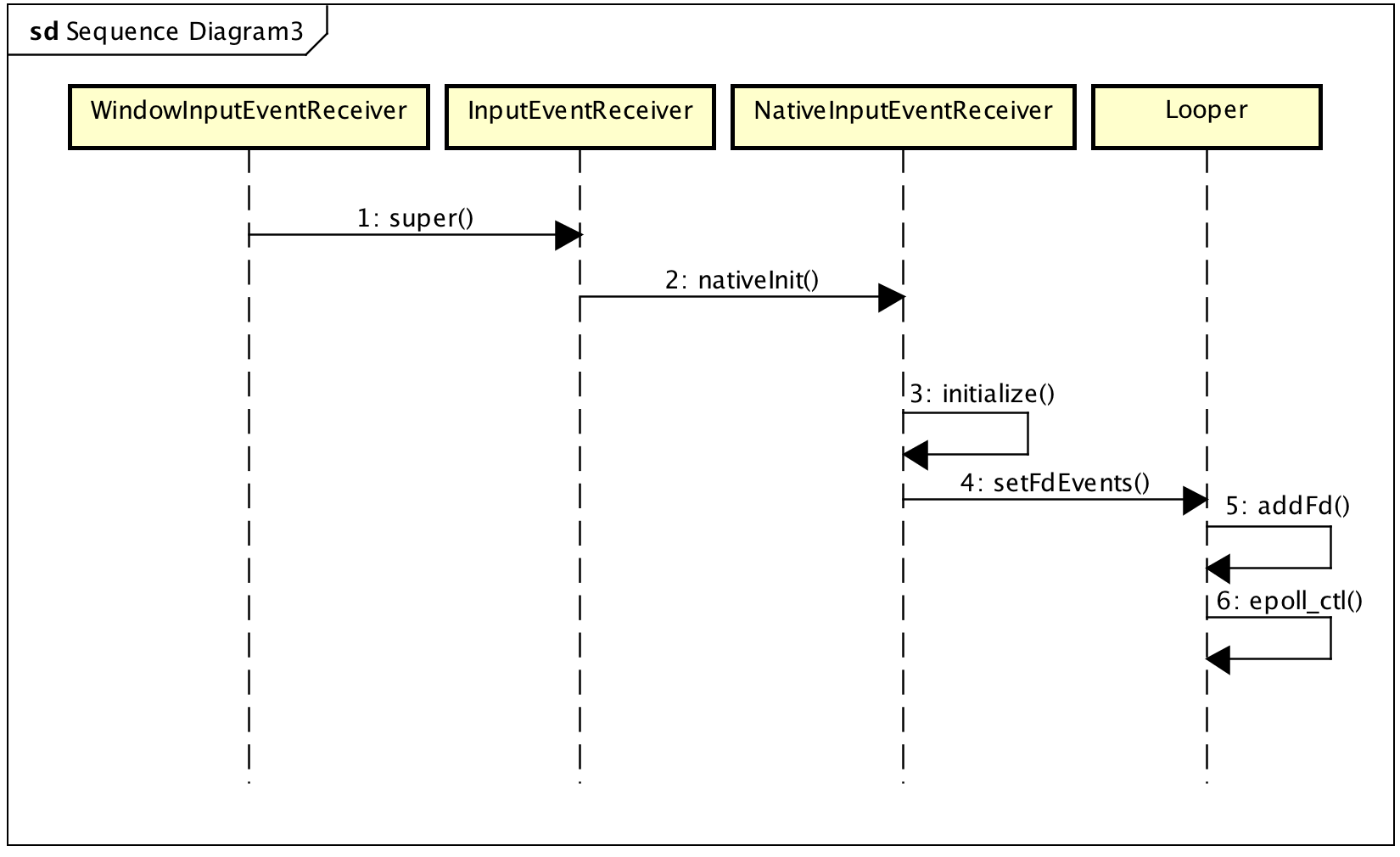

Creating and accessing the socketpair is ultimately based on file descriptors, so WMS has to return an fd to the app through Binder. The details belong to Binder itself: in essence, the Binder driver translates the fd from one process into the other at the kernel level. Once the window has been added successfully, the socketpair has been created and handed to the app. The channel is not yet fully established, however, because the app side must also actively listen on it so that it is notified when a message arrives (see the channel model figure).
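Binder's fd translation happens inside the Binder driver, but the same idea, the kernel installing a duplicate of a descriptor in the receiving process's fd table, can be demonstrated with Unix-domain sockets and `SCM_RIGHTS` ancillary data. To be clear, this is a different mechanism from Binder, used here purely as an analogy; `sendFd` and `receiveFd` are hypothetical helper names.

```cpp
#include <cstring>
#include <sys/socket.h>
#include <sys/types.h>
#include <sys/uio.h>
#include <unistd.h>  // close()/read()/write() for callers of these helpers

// Send `fdToSend` across a Unix-domain socket as SCM_RIGHTS ancillary data.
// The kernel installs a duplicate of the descriptor in the receiver's table.
bool sendFd(int sock, int fdToSend) {
    char dummy = 'F';  // at least one byte of normal data must be sent
    iovec iov{&dummy, 1};
    alignas(cmsghdr) char control[CMSG_SPACE(sizeof(int))];
    std::memset(control, 0, sizeof(control));

    msghdr msg{};
    msg.msg_iov = &iov;
    msg.msg_iovlen = 1;
    msg.msg_control = control;
    msg.msg_controllen = sizeof(control);

    cmsghdr* cmsg = CMSG_FIRSTHDR(&msg);
    cmsg->cmsg_level = SOL_SOCKET;
    cmsg->cmsg_type = SCM_RIGHTS;
    cmsg->cmsg_len = CMSG_LEN(sizeof(int));
    std::memcpy(CMSG_DATA(cmsg), &fdToSend, sizeof(int));

    return sendmsg(sock, &msg, 0) == 1;
}

// Receive a descriptor sent by sendFd(); returns the new local fd or -1.
int receiveFd(int sock) {
    char dummy;
    iovec iov{&dummy, 1};
    alignas(cmsghdr) char control[CMSG_SPACE(sizeof(int))];

    msghdr msg{};
    msg.msg_iov = &iov;
    msg.msg_iovlen = 1;
    msg.msg_control = control;
    msg.msg_controllen = sizeof(control);

    if (recvmsg(sock, &msg, 0) != 1) return -1;
    cmsghdr* cmsg = CMSG_FIRSTHDR(&msg);
    if (cmsg == nullptr || cmsg->cmsg_level != SOL_SOCKET ||
        cmsg->cmsg_type != SCM_RIGHTS) {
        return -1;
    }
    int fd;
    std::memcpy(&fd, CMSG_DATA(cmsg), sizeof(int));
    return fd;
}
```

The received descriptor usually has a different number from the original, yet it refers to the same open file, which is the property the app relies on when it uses the client-side InputChannel fd it got back from WMS.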

On the app side, listening works by adding the socket to the epoll set of the Looper thread. When a message arrives, the Looper thread is woken up and reads the event. In the code, opening the channel goes hand in hand with creating the WindowInputEventReceiver.

When data arrives, the Looper looks up the listener registered for that fd, a NativeInputEventReceiver, and calls its handleEvent() to process the event:

```cpp
int NativeInputEventReceiver::handleEvent(int receiveFd, int events, void* data) {
    ...
    if (events & ALOOPER_EVENT_INPUT) {
        JNIEnv* env = AndroidRuntime::getJNIEnv();
        status_t status = consumeEvents(env, false /*consumeBatches*/, -1, NULL);
        mMessageQueue->raiseAndClearException(env, "handleReceiveCallback");
        return status == OK || status == NO_MEMORY ? 1 : 0;
    }
    ...
}
```
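The register-then-call-back pattern can be sketched as a tiny epoll loop that maps each fd to a callback, which is roughly the role the Looper plays for NativeInputEventReceiver. `MiniLooper` is a hypothetical class, not Android's Looper API; it only borrows the convention visible in handleEvent() above, where returning 1 keeps the fd registered and 0 removes it.

```cpp
#include <functional>
#include <map>
#include <sys/epoll.h>
#include <unistd.h>
#include <utility>

// Hypothetical miniature of the native Looper: fds are registered with a
// callback, and pollOnce() invokes the callback of every readable fd.
// Returning 0 from a callback unregisters its fd; returning 1 keeps it.
class MiniLooper {
public:
    using Callback = std::function<int(int fd)>;

    MiniLooper() : epfd_(epoll_create1(EPOLL_CLOEXEC)) {}
    ~MiniLooper() { if (epfd_ >= 0) close(epfd_); }
    MiniLooper(const MiniLooper&) = delete;
    MiniLooper& operator=(const MiniLooper&) = delete;

    bool addFd(int fd, Callback cb) {
        epoll_event ev{};
        ev.events = EPOLLIN;
        ev.data.fd = fd;
        if (epoll_ctl(epfd_, EPOLL_CTL_ADD, fd, &ev) < 0) return false;
        callbacks_[fd] = std::move(cb);
        return true;
    }

    // Waits up to timeoutMs; returns the number of callbacks invoked.
    int pollOnce(int timeoutMs) {
        epoll_event events[8];
        int n = epoll_wait(epfd_, events, 8, timeoutMs);
        int invoked = 0;
        for (int i = 0; i < n; ++i) {
            int fd = events[i].data.fd;
            auto it = callbacks_.find(fd);
            if (it == callbacks_.end()) continue;
            ++invoked;
            if (it->second(fd) == 0) {  // callback asked to be removed
                epoll_ctl(epfd_, EPOLL_CTL_DEL, fd, nullptr);
                callbacks_.erase(it);
            }
        }
        return invoked;
    }

private:
    int epfd_;
    std::map<int, Callback> callbacks_;
};
```

The app's main thread sleeps in the equivalent of `pollOnce()` and is only woken when the dispatcher writes to the socket, which is why input handling costs nothing while the screen is idle.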

The events are then read in full, wrapped into Java-layer objects, and passed up to the Java layer for the corresponding callbacks:

```cpp
status_t NativeInputEventReceiver::consumeEvents(JNIEnv* env,
        bool consumeBatches, nsecs_t frameTime, bool* outConsumedBatch) {
    ...
    for (;;) {
        uint32_t seq;
        InputEvent* inputEvent;
        // Read the next event from the channel
        status_t status = mInputConsumer.consume(&mInputEventFactory,
                consumeBatches, frameTime, &seq, &inputEvent);
        ...
        // Handle touch events
        case AINPUT_EVENT_TYPE_MOTION: {
            MotionEvent* motionEvent = static_cast<MotionEvent*>(inputEvent);
            if ((motionEvent->getAction() & AMOTION_EVENT_ACTION_MOVE) && outConsumedBatch) {
                *outConsumedBatch = true;
            }
            inputEventObj = android_view_MotionEvent_obtainAsCopy(env, motionEvent);
            break;
        }
        ...
        // Call back into the Java layer
        if (inputEventObj) {
            env->CallVoidMethod(receiverObj.get(),
                    gInputEventReceiverClassInfo.dispatchInputEvent, seq, inputEventObj);
            env->DeleteLocalRef(inputEventObj);
        }
        ...
    }
}
```

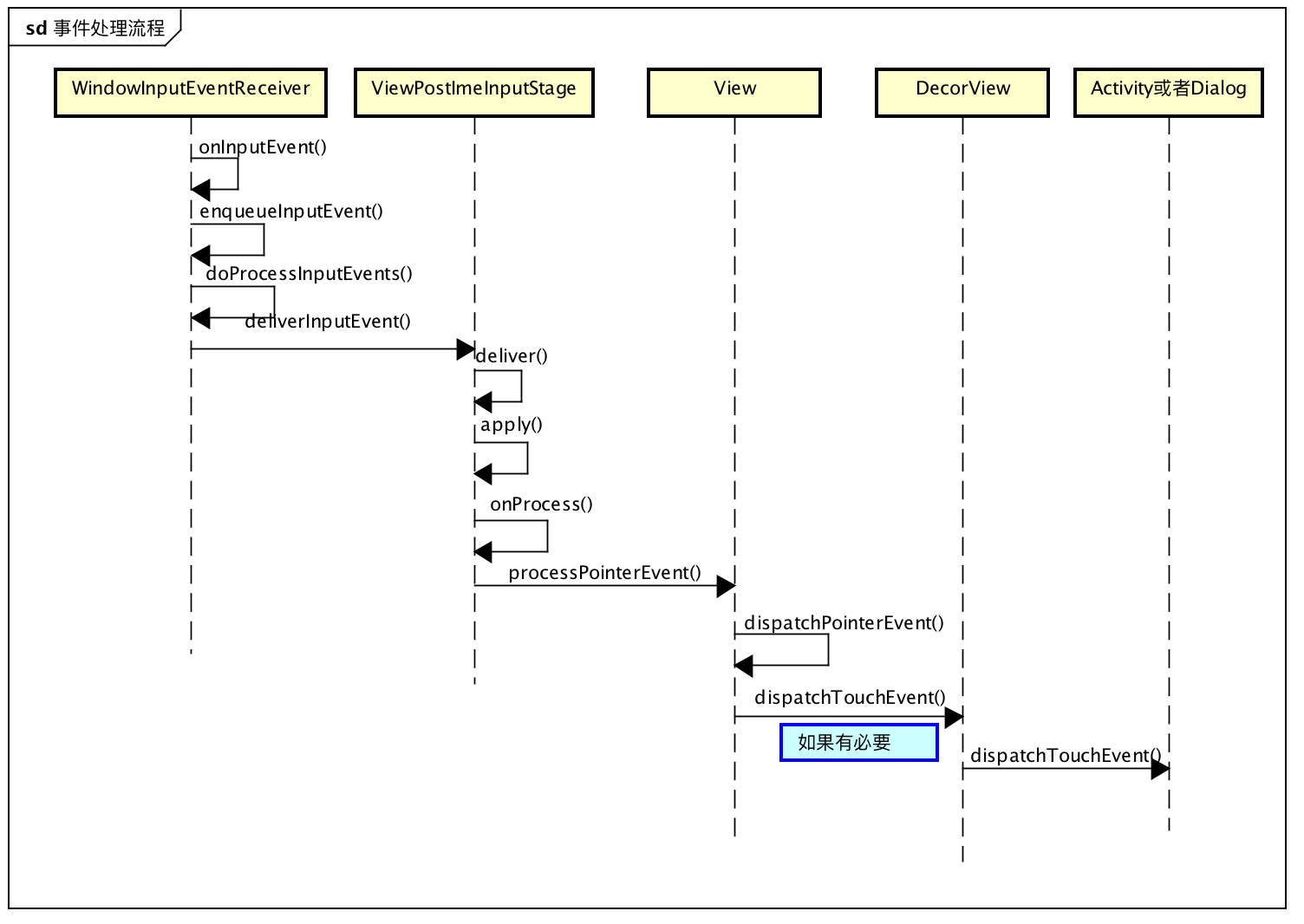

In the end, the touch event is wrapped as a Java InputEvent and handed to InputEventReceiver's dispatchInputEvent() (here on its subclass, WindowInputEventReceiver), which brings us back to the familiar Java world.

Event Processing in the Target Window

Finally, a brief look at how events are processed: how does an Activity or Dialog receive touch events, and how does it handle them? Put simply, ViewRootImpl passes the event to its root view, and which View ultimately consumes it depends on the view hierarchy. In Activity and Dialog, the root view is the DecorView, which overrides View's dispatchTouchEvent() and hands the event to the Window.Callback object (the Activity or Dialog itself). How View and ViewGroup consume the event from there is the View system's own logic.

Summary

Putting all the stages and modules together, the overall flow is roughly:

- The user touches the screen.

- InputManagerService's reader thread captures the event, preprocesses it, and hands it to the dispatcher thread.

- The dispatcher thread finds the target window.

- The event is sent to the target window over a socket.

- The app's Looper thread is woken up.

- The target window, through its View hierarchy, handles the event.
