Introduction to MySQL Binlog

- What is binlog?

A binary log that records SQL statements that make or potentially change to data and is saved on disk as a result.

- What is the role of binlog?

There are three main uses:

-

Data replication (master-slave synchronization)

Mysql's Master-Slave protocol enables Slave to achieve data consistency by listening on binlog s for data replication

data recovery

Recovering data through the mysqlbinlog tool

-

Binlog variable

-

log_bin (Binlog switch, using show variables like'log_bin'; view)

- binlog_format (Binlog log format, using show variables like'binlog_format'; view)

There are three types of log formats:

- ROW, keeps only the details of the record being modified, and does not record the context-related information of the SQL statement.(It can clearly record the modification details of each row of data, does not need to record context-related information, so there will be no problem that procedure, function and trigger calls can not be copied accurately under certain circumstances, can be copied under any circumstances, and can speed up the efficiency of replaying logs from libraries to ensure the efficiency of replaying logs fromConsistency of database data)

- STATEMENT, each SQL that modifies data is recorded.(Records only the details and context of the execution statement, avoids recording changes for each line, and can significantly reduce the amount of binlog logs, save IO, and improve performance when there are more modification records than ROW types.It can also be used for real-time restore, and the master-slave version can be different and the slave version can be higher than the master version)

- MIXED, a mix of the two above

-

-

Binlog Management

- show master logs; view a list of logs for all binlog s

- show master status; see the name of the last binlog log number and the location of the last event technology

- Flush logs; refresh the binlog, and now a new number of binlog log files will be generated

- reset master; empty all binlog logs

-

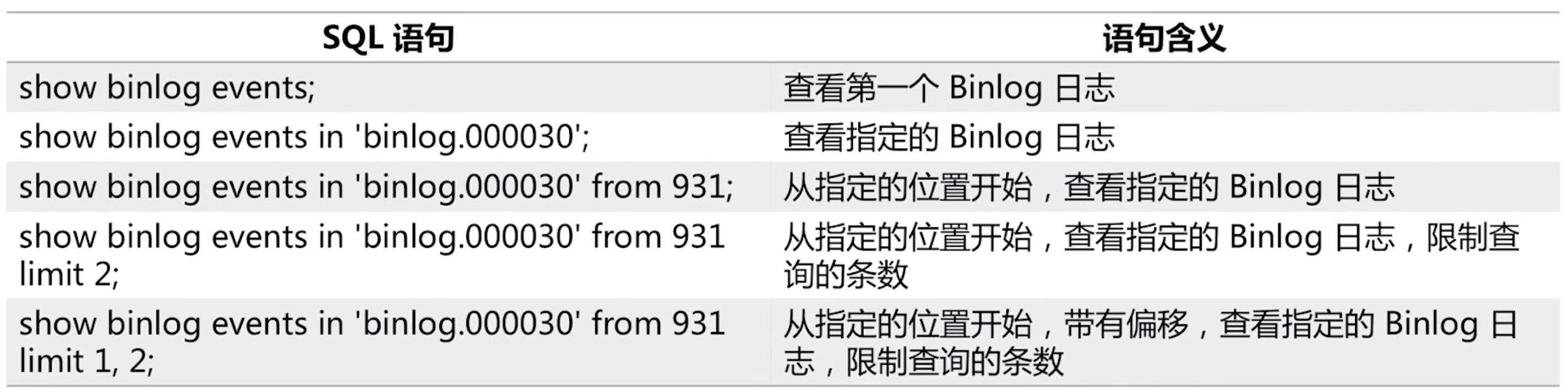

Binlog-related SQL show binlog events [in'log_name'][from position][limit [offset,]row_count]

-

Common Binlog event s

- QUERY - Data independent operations, begin, drop table, truncate table, and so on

- TABLE_MAP - Records the table information for the next operation, storing the database name and table name

- XID - Mark Transaction Commit

- WRITE_ROWS insert data, insert operation

- UPDATE_ROWS update Data, update Operation

- DELETE_ROWS Delete Data, the delete operation

Event contains header and data, which provides information such as when an event was created, which server, etc. The data section provides specific information about the event, such as modifications to the specific data.

Tip: binlog does not record column names of data tables

In the next implementation, we will wrap our system into a fake Mysql Slave and listen on the binlog using the open source tool mysql-binlog-connector-java.

Open source tool mysql-binlog-connector-java

-

Tool Source: Github Portal

-

Component Use

1. Adding dependency

<!-- binlog Log Listener,Resolve Open Source Tool Class Library --> <dependency> <groupId>com.github.shyiko</groupId> <artifactId>mysql-binlog-connector-java</artifactId> <version>0.18.1</version> </dependency>

2. Create a test interface

package com.sxzhongf.ad.service; import com.github.shyiko.mysql.binlog.BinaryLogClient; import com.github.shyiko.mysql.binlog.event.DeleteRowsEventData; import com.github.shyiko.mysql.binlog.event.EventData; import com.github.shyiko.mysql.binlog.event.UpdateRowsEventData; import com.github.shyiko.mysql.binlog.event.WriteRowsEventData; import java.io.IOException; /** * BinlogServiceTest for Test Mysql binlog monitoring * {@code * Mysql8 Connection prompt Client does not support authentication protocol requested by server; consider upgrading MySQL client solution * USE mysql; * ALTER USER 'root'@'localhost' IDENTIFIED WITH mysql_native_password BY 'password'; * FLUSH PRIVILEGES; * } * * @author <a href="mailto:magicianisaac@gmail.com">Isaac.Zhang | If initial </a> */ public class BinlogServiceTest { /** * --------Update----------- * UpdateRowsEventData{tableId=90, includedColumnsBeforeUpdate={0, 1, 2, 3, 4, 5, 6, 7}, includedColumns={0, 1, 2, 3, 4, 5, 6, 7}, rows=[ * {before=[11, 10, Test Bin Log, 1, Tue Jun 25 08:00:00 CST 2019, Tue Jun 25 08:00:00 CST 2019, Tue Jun 25 08:00:00 CST 2019, Tue Jun 25 08:00:00 CST 2019], after=[11, 10, zhangpan test Binlog, 1, Tue Jun 25 08:00:00 CST 2019, Tue Jun 25 08:00:00 CST 2019, Tue Jun 25 08:00:00 CST 2019, Tue Jun 25 08:00:00 CST 2019]} * ]} * * --------Insert----------- * WriteRowsEventData{tableId=91, includedColumns={0, 1, 2, 3, 4, 5, 6, 7}, rows=[ * [10, 11, ad unit test binlog, 1, 0, 1236.7655, Thu Jun 27 08:00:00 CST 2019, Thu Jun 27 08:00:00 CST 2019] * ]} */ public static void main(String[] args) throws IOException { // //Construct BinaryLogClient to populate mysql link information BinaryLogClient client = new BinaryLogClient("127.0.0.1", 3306, "root", "12345678" ); //Set the file and location of the Binlog to read, otherwise the client will read Binlog from Header and listen on it // client.setBinlogFilename("binlog.000035"); // client.setBinlogPosition(); //Register listeners for clients to monitor and parse Binlog //Evet is the Binlog change information that is monitored. Evet contains header & Data client.registerEventListener(event -> { EventData data = event.getData(); if (data instanceof UpdateRowsEventData) { System.out.println("--------Update-----------"); System.out.println(data.toString()); } else if (data instanceof WriteRowsEventData) { System.out.println("--------Insert-----------"); System.out.println(data.toString()); } else if (data instanceof DeleteRowsEventData) { System.out.println("--------Delete-----------"); System.out.println(data.toString()); } }); client.connect(); } }Function:

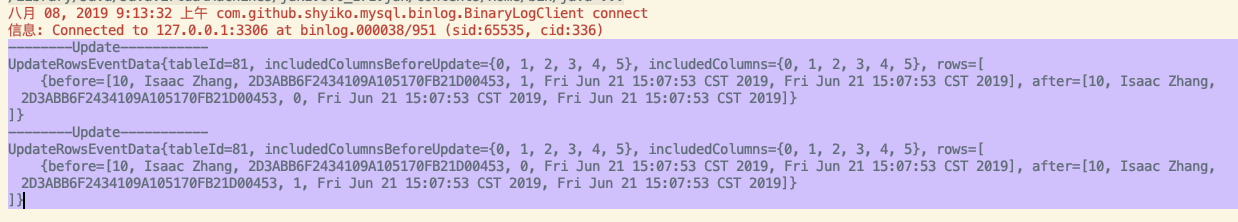

August 08, 2019:13:32 AM com.github.shyiko.mysql.binlog.BinaryLogClient connect Information: Connected to 127.0.0.1:3306 at binlog.000038/951 (sid:65535, cid:336) ...

Execute sql update ad_user set user_status=1 where user_id=10;

What we need to know is that our goal is to monitor changes to Mysql tables and parse them into the format we want, our java objects.Based on the monitoring results we have seen above, we know the general content of the returned information. Now that we have learned to simply use the BinaryLogClient to listen on the binlog, we need to define a listener to implement our own business content.

Because we only need content from Events, we only need to customize a listener to implement our business by implementing the com.github.shyiko.mysql.binlog.BinaryLogClient.EventListener interface.Determine if and how the current event needs to be handled by the content of the event.

Construct template file for parsing binlog

The fundamental reason why we listen for binlog to construct incremental data is to decouple our advertising delivery system from our advertising retrieval system business. Since there are no databases and data table dependencies defined in our retrieval system, we get us by defining a template file and parsing the template fileThe required database and table information, because binlog monitoring does not distinguish which database and which table information, we can use a template to specify the part we want to listen on.

{

"database": "advertisement",

"tableList": [

{

"tableName": "ad_plan",

"level": 2,

"insert": [

{

"column": "plan_id"

},

{

"column": "user_id"

},

{

"column": "plan_status"

},

{

"column": "start_date"

},

{

"column": "end_date"

}

],

"update": [

{

"column": "plan_id"

},

{

"column": "user_id"

},

{

"column": "plan_status"

},

{

"column": "start_date"

},

{

"column": "end_date"

}

],

"delete": [

{

"column": "plan_id"

}

]

},

{

"tableName": "ad_unit",

"level": 3,

"insert": [

{

"column": "unit_id"

},

{

"column": "unit_status"

},

{

"column": "position_type"

},

{

"column": "plan_id"

}

],

"update": [

{

"column": "unit_id"

},

{

"column": "unit_status"

},

{

"column": "position_type"

},

{

"column": "plan_id"

}

],

"delete": [

{

"column": "unit_id"

}

]

},

{

"tableName": "ad_creative",

"level": 2,

"insert": [

{

"column": "creative_id"

},

{

"column": "type"

},

{

"column": "material_type"

},

{

"column": "height"

},

{

"column": "width"

},

{

"column": "audit_status"

},

{

"column": "url"

}

],

"update": [

{

"column": "creative_id"

},

{

"column": "type"

},

{

"column": "material_type"

},

{

"column": "height"

},

{

"column": "width"

},

{

"column": "audit_status"

},

{

"column": "url"

}

],

"delete": [

{

"column": "creative_id"

}

]

},

{

"tableName": "relationship_creative_unit",

"level": 3,

"insert": [

{

"column": "creative_id"

},

{

"column": "unit_id"

}

],

"update": [

],

"delete": [

{

"column": "creative_id"

},

{

"column": "unit_id"

}

]

},

{

"tableName": "ad_unit_district",

"level": 4,

"insert": [

{

"column": "unit_id"

},

{

"column": "province"

},

{

"column": "city"

}

],

"update": [

],

"delete": [

{

"column": "unit_id"

},

{

"column": "province"

},

{

"column": "city"

}

]

},

{

"tableName": "ad_unit_hobby",

"level": 4,

"insert": [

{

"column": "unit_id"

},

{

"column": "hobby_tag"

}

],

"update": [

],

"delete": [

{

"column": "unit_id"

},

{

"column": "hobby_tag"

}

]

},

{

"tableName": "ad_unit_keyword",

"level": 4,

"insert": [

{

"column": "unit_id"

},

{

"column": "keyword"

}

],

"update": [

],

"delete": [

{

"column": "unit_id"

},

{

"column": "keyword"

}

]

}

]

}In the template file above, a database is specified as advertisement, so you can easily add more than one listening library.Below the database, we listen to CUD operations on several tables and the field information needed for each operation.

-

Implementation Template - > Java Entity

- Define the entity corresponding to the template file

@Data @AllArgsConstructor @NoArgsConstructor public class BinlogTemplate { //Single Database Correspondence private String database; //Multi-table private List<JsonTable> tableList; }- table information in the corresponding json

/** * JsonTable for Used to represent the corresponding table information in template.json * * @author <a href="mailto:magicianisaac@gmail.com">Isaac.Zhang | If initial </a> */ @Data @AllArgsConstructor @NoArgsConstructor public class JsonTable { private String tableName; private Integer level; private List<Column> insert; private List<Column> update; private List<Column> delete; @Data @AllArgsConstructor @NoArgsConstructor public static class Column { private String columnName; } }- Read the corresponding table information object (the primary purpose is to map the field index to the field name)

@Data @AllArgsConstructor @NoArgsConstructor public class TableTemplate { private String tableName; private String level; //Operation Type->Multiple Columns private Map<OperationTypeEnum, List<String>> opTypeFieldSetMap = new HashMap<>(); /** * Binlog A transformation mapping for field index - > field name in log * Because the updated column name is not displayed in the binlog, it only shows the index of the field, so we need to implement a conversion */ private Map<Integer, String> posMap = new HashMap<>(); }- Parsing template files to java objects

@Data public class ParseCustomTemplate { private String database; /** * key -> TableName * value -> {@link TableTemplate} */ private Map<String, TableTemplate> tableTemplateMap; public static ParseCustomTemplate parse(BinlogTemplate _template) { ParseCustomTemplate template = new ParseCustomTemplate(); template.setDatabase(_template.getDatabase()); for (JsonTable jsonTable : _template.getTableList()) { String name = jsonTable.getTableName(); Integer level = jsonTable.getLevel(); TableTemplate tableTemplate = new TableTemplate(); tableTemplate.setTableName(name); tableTemplate.setLevel(level.toString()); template.tableTemplateMap.put(name, tableTemplate); //Column information corresponding to traversal operation type Map<OperationTypeEnum, List<String>> operationTypeListMap = tableTemplate.getOpTypeFieldSetMap(); for (JsonTable.Column column : jsonTable.getInsert()) { getAndCreateIfNeed( OperationTypeEnum.ADD, operationTypeListMap, ArrayList::new ).add(column.getColumnName()); } for (JsonTable.Column column : jsonTable.getUpdate()) { getAndCreateIfNeed( OperationTypeEnum.UPDATE, operationTypeListMap, ArrayList::new ).add(column.getColumnName()); } for (JsonTable.Column column : jsonTable.getDelete()) { getAndCreateIfNeed( OperationTypeEnum.DELETE, operationTypeListMap, ArrayList::new ).add(column.getColumnName()); } } return template; } /** * Get the object from the Map, create one if it doesn't exist */ private static <T, R> R getAndCreateIfNeed(T key, Map<T, R> map, Supplier<R> factory) { return map.computeIfAbsent(key, k -> factory.get()); } }- Resolve a transformation mapping for field index - > field name

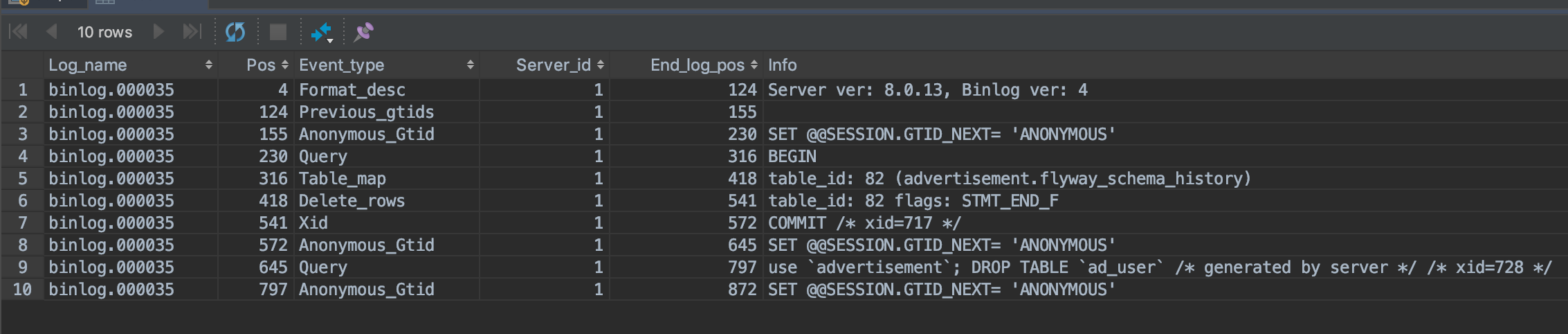

First, let's look at the specific log information for binlog:

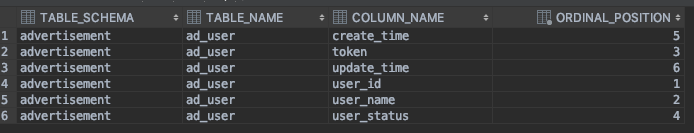

--------Insert----------- WriteRowsEventData{tableId=91, includedColumns={0, 1, 2, 3, 4, 5, 6, 7}, rows=[ [10, 11, ad unit test binlog, 1, 0, 1236.7655, Thu Jun 27 08:00:00 CST 2019, Thu Jun 27 08:00:00 CST 2019] --------Update----------- UpdateRowsEventData{tableId=81, includedColumnsBeforeUpdate={0, 1, 2, 3, 4, 5}, includedColumns={0, 1, 2, 3, 4, 5}, rows=[ {before=[10, Isaac Zhang, 2D3ABB6F2434109A105170FB21D00453, 0, Fri Jun 21 15:07:53 CST 2019, Fri Jun 21 15:07:53 CST 2019], after=[10, Isaac Zhang, 2D3ABB6F2434109A105170FB21D00453, 1, Fri Jun 21 15:07:53 CST 2019, Fri Jun 21 15:07:53 CST 2019]}As you can see, includedColumns only contains {0, 1, 2, 3, 4, 5} location information in the log, so how can we know which field it represents? Next, we will implement this mapping relationship. Before we do so, let's first query the database for the specific location of the fields in our table:

sql> SELECT table_schema,table_name,column_name,ordinal_position FROM information_schema.COLUMNS WHERE TABLE_SCHEMA = 'advertisement' AND TABLE_NAME='ad_user'

We can see that ordinal_position corresponds to 1-6, but the binlog log index we listened to above is 0-5, so we can see the relationship.

We started coding the implementation, and we used JdbcTemplate to query the database information:

@Slf4j @Component public class TemplateHolder { private ParseCustomTemplate template; private final JdbcTemplate jdbcTemplate; private String SQL_SCHEMA = "SELECT TABLE_SCHEMA,TABLE_NAME,COLUMN_NAME,ORDINAL_POSITION FROM information_schema.COLUMNS " + "WHERE TABLE_SCHEMA = ? AND TABLE_NAME = ?"; @Autowired public TemplateHolder(JdbcTemplate jdbcTemplate) { this.jdbcTemplate = jdbcTemplate; } /** * Data information needs to be loaded when the container is loaded */ @PostConstruct private void init() { loadJSON("template.json"); } /** * Provide external loading services */ public TableTemplate getTable(String tableName) { return template.getTableTemplateMap().get(tableName); } /** * Load binlog json file for listening */ private void loadJSON(String path) { ClassLoader classLoader = Thread.currentThread().getContextClassLoader(); InputStream inputStream = classLoader.getResourceAsStream(path); try { BinlogTemplate binlogTemplate = JSON.parseObject( inputStream, Charset.defaultCharset(), BinlogTemplate.class ); this.template = ParseCustomTemplate.parse(binlogTemplate); loadMeta(); } catch (IOException ex) { log.error((ex.getMessage())); throw new RuntimeException("fail to parse json file"); } } /** * Loading meta information * Mapping relationships using table indexes to column names */ private void loadMeta() { for (Map.Entry<String, TableTemplate> entry : template.getTableTemplateMap().entrySet()) { TableTemplate table = entry.getValue(); List<String> updateFields = table.getOpTypeFieldSetMap().get( OperationTypeEnum.UPDATE ); List<String> insertFields = table.getOpTypeFieldSetMap().get( OperationTypeEnum.ADD ); List<String> deleteFields = table.getOpTypeFieldSetMap().get( OperationTypeEnum.DELETE ); jdbcTemplate.query(SQL_SCHEMA, new Object[]{ template.getDatabase(), table.getTableName() }, (rs, i) -> { int pos = rs.getInt("ORDINAL_POSITION"); String colName = rs.getString("COLUMN_NAME"); if ((null != updateFields && updateFields.contains(colName)) || (null != insertFields && insertFields.contains(colName)) || (null != deleteFields && deleteFields.contains(colName))) { table.getPosMap().put(pos - 1, colName); } return null; } ); } } }-

Listen for binlog implementation

- Define the java objects that Event parses to convert

@Data public class BinlogRowData { private TableTemplate tableTemplate; private EventType eventType; private List<Map<String, String>> before; private List<Map<String, String>> after; }- Define binlog client BinaryLogClient

/** * CustomBinlogClient for Customize Binlog Client * * @author <a href="mailto:magicianisaac@gmail.com">Isaac.Zhang | If initial </a> * @since 2019/6/27 */ @Slf4j @Component public class CustomBinlogClient { private BinaryLogClient client; private final BinlogConfig config; private final AggregationListener listener; @Autowired public CustomBinlogClient(BinlogConfig config, AggregationListener listener) { this.config = config; this.listener = listener; } public void connect() { new Thread(() -> { client = new BinaryLogClient( config.getHost(), config.getPort(), config.getUsername(), config.getPassword() ); if (!StringUtils.isEmpty(config.getBinlogName()) && !config.getPosition().equals(-1L)) { client.setBinlogFilename(config.getBinlogName()); client.setBinlogPosition(config.getPosition()); } try { log.info("connecting to mysql start..."); client.connect(); log.info("connecting to mysql done!"); } catch (IOException e) { e.printStackTrace(); } }).start(); } public void disconnect() { try { log.info("disconnect to mysql start..."); client.disconnect(); log.info("disconnect to mysql done!"); } catch (IOException e) { e.printStackTrace(); } } }- Register event listener com.github.shyiko.mysql.binlog.BinaryLogClient.EventListener with client

/** * Ilistener for Extend different implementations for later * * @author <a href="mailto:magicianisaac@gmail.com">Isaac.Zhang | If initial </a> */ public interface Ilistener { void register(); void onEvent(BinlogRowData eventData); }- Listen for Binlog, collect mysql binlog datas

@Slf4j @Component public class AggregationListener implements BinaryLogClient.EventListener { private String dbName; private String tbName; private Map<String, Ilistener> listenerMap = new HashMap<>(); @Autowired private TemplateHolder templateHolder; private String genKey(String dbName, String tbName) { return dbName + ":" + tbName; } /** * Implement registration information based on tables */ public void register(String dbName, String tbName, Ilistener listener) { log.info("register : {}-{}", dbName, tbName); this.listenerMap.put(genKey(dbName, tbName), listener); } @Override public void onEvent(Event event) { EventType type = event.getHeader().getEventType(); log.info("Event type: {}", type); //Before adding or deleting a database, there must be a binlog for table_map event if (type == EventType.TABLE_MAP) { TableMapEventData data = event.getData(); this.tbName = data.getTable(); this.dbName = data.getDatabase(); return; } //EXT_UPDATE_ROWS is a type above Mysql 8 if (type != EventType.EXT_UPDATE_ROWS && type != EventType.EXT_WRITE_ROWS && type != EventType.EXT_DELETE_ROWS ) { return; } // Check that table and database names are populated correctly if (StringUtils.isEmpty(dbName) || StringUtils.isEmpty(tbName)) { log.error("Meta data got error. tablename:{},database:{}", tbName, dbName); return; } //Find out which listeners are sensitive to the corresponding Datasheet String key = genKey(this.dbName, this.tbName); Ilistener ilistener = this.listenerMap.get(key); if (null == ilistener) { log.debug("skip {}", key); } log.info("trigger event:{}", type.name()); try { BinlogRowData rowData = convertEventData2BinlogRowData(event.getData()); if (null == rowData) { return; } rowData.setEventType(type); ilistener.onEvent(rowData); } catch (Exception e) { e.printStackTrace(); log.error(e.getMessage()); } finally { this.dbName = ""; this.tbName = ""; } } /** * Resolve mapping of Binlog data to Java entity objects * * @param data binlog * @return java object */ private BinlogRowData convertEventData2BinlogRowData(EventData data) { TableTemplate tableTemplate = templateHolder.getTable(tbName); if (null == tableTemplate) { log.warn("table {} not found.", tbName); return null; } List<Map<String, String>> afterMapList = new ArrayList<>(); for (Serializable[] after : getAfterValues(data)) { Map<String, String> afterMap = new HashMap<>(); int columnLength = after.length; for (int i = 0; i < columnLength; ++i) { //Remove the column name corresponding to the current location String colName = tableTemplate.getPosMap().get(i); //If not, the column is not required if (null == colName) { log.debug("ignore position: {}", i); continue; } String colValue = after[i].toString(); afterMap.put(colName, colValue); } afterMapList.add(afterMap); } BinlogRowData binlogRowData = new BinlogRowData(); binlogRowData.setAfter(afterMapList); binlogRowData.setTableTemplate(tableTemplate); return binlogRowData; } /** * Get post-change data for different events * Add & Delete Pre-change data assumed to be empty */ private List<Serializable[]> getAfterValues(EventData eventData) { if (eventData instanceof WriteRowsEventData) { return ((WriteRowsEventData) eventData).getRows(); } if (eventData instanceof UpdateRowsEventData) { return ((UpdateRowsEventData) eventData).getRows() .stream() .map(Map.Entry::getValue) .collect(Collectors.toList() ); } if (eventData instanceof DeleteRowsEventData) { return ((DeleteRowsEventData) eventData).getRows(); } return Collections.emptyList(); } }- Parse the binlog data object BinlogRowData for subsequent processing of incremental indexes

/** * MysqlRowData for Simplify {@link BinlogRowData} to facilitate incremental indexing * * @author <a href="mailto:magicianisaac@gmail.com">Isaac.Zhang | If initial </a> */ @Data @AllArgsConstructor @NoArgsConstructor public class MysqlRowData { //When implementing multiple data, you need to pass the database name //private String database; private String tableName; private String level; private OperationTypeEnum operationTypeEnum; private List<Map<String, String>> fieldValueMap = new ArrayList<>(); }Because we need to convert Binlog EventType to our OperationTypeEnum operation type, we add a conversion method to OperationTypeEnum:

public enum OperationTypeEnum { ... public static OperationTypeEnum convert(EventType type) { switch (type) { case EXT_WRITE_ROWS: return ADD; case EXT_UPDATE_ROWS: return UPDATE; case EXT_DELETE_ROWS: return DELETE; default: return OTHER; } } }We also need to define a java class for each column name contained in a table to facilitate our later CUD operations on the table:

package com.sxzhongf.ad.mysql.constant; import java.util.HashMap; import java.util.Map; /** * Constant for java class for column names to facilitate our later CUD operations on tables * * @author <a href="mailto:magicianisaac@gmail.com">Isaac.Zhang | If initial </a> */ public class Constant { private static final String DATABASE_NAME = "advertisement"; public static class AD_PLAN_TABLE_INFO { public static final String TABLE_NAME = "ad_plan"; public static final String COLUMN_PLAN_ID = "plan_id"; public static final String COLUMN_USER_ID = "user_id"; public static final String COLUMN_PLAN_STATUS = "plan_status"; public static final String COLUMN_START_DATE = "start_date"; public static final String COLUMN_END_DATE = "end_date"; } public static class AD_CREATIVE_TABLE_INFO { public static final String TABLE_NAME = "ad_creative"; public static final String COLUMN_CREATIVE_ID = "creative_id"; public static final String COLUMN_TYPE = "type"; public static final String COLUMN_MATERIAL_TYPE = "material_type"; public static final String COLUMN_HEIGHT = "height"; public static final String COLUMN_WIDTH = "width"; public static final String COLUMN_AUDIT_STATUS = "audit_status"; public static final String COLUMN_URL = "url"; } public static class AD_UNIT_TABLE_INFO { public static final String TABLE_NAME = "ad_unit"; public static final String COLUMN_UNIT_ID = "unit_id"; public static final String COLUMN_UNIT_STATUS = "unit_status"; public static final String COLUNN_POSITION_TYPE = "position_type"; public static final String COLUNN_PLAN_ID = "plan_id"; } public static class RELATIONSHIP_CREATIVE_UNIT_TABLE_INFO { public static final String TABLE_NAME = "relationship_creative_unit"; public static final String COLUMN_CREATIVE_ID = "creative_id"; public static final String COLUMN_UNIT_ID = "unit_id"; } public static class AD_UNIT_DISTRICT_TABLE_INFO { public static final String TABLE_NAME = "ad_unit_district"; public static final String COLUMN_UNIT_ID = "unit_id"; public static final String COLUMN_PROVINCE = "province"; public static final String COLUMN_CITY = "city"; } public static class AD_UNIT_KEYWORD_TABLE_INFO { public static final String TABLE_NAME = "ad_unit_keyword"; public static final String COLUMN_UNIT_ID = "unit_id"; public static final String COLUMN_KEYWORD = "keyword"; } public static class AD_UNIT_HOBBY_TABLE_INFO { public static final String TABLE_NAME = "ad_unit_hobby"; public static final String COLUMN_UNIT_ID = "unit_id"; public static final String COLUMN_HOBBY_TAG = "hobby_tag"; } //Key ->table name //Value ->database name public static Map<String, String> table2db; static { table2db = new HashMap<>(); table2db.put(AD_PLAN_TABLE_INFO.TABLE_NAME, DATABASE_NAME); table2db.put(AD_CREATIVE_TABLE_INFO.TABLE_NAME, DATABASE_NAME); table2db.put(AD_UNIT_TABLE_INFO.TABLE_NAME, DATABASE_NAME); table2db.put(RELATIONSHIP_CREATIVE_UNIT_TABLE_INFO.TABLE_NAME, DATABASE_NAME); table2db.put(AD_UNIT_DISTRICT_TABLE_INFO.TABLE_NAME, DATABASE_NAME); table2db.put(AD_UNIT_HOBBY_TABLE_INFO.TABLE_NAME, DATABASE_NAME); table2db.put(AD_UNIT_KEYWORD_TABLE_INFO.TABLE_NAME, DATABASE_NAME); } }