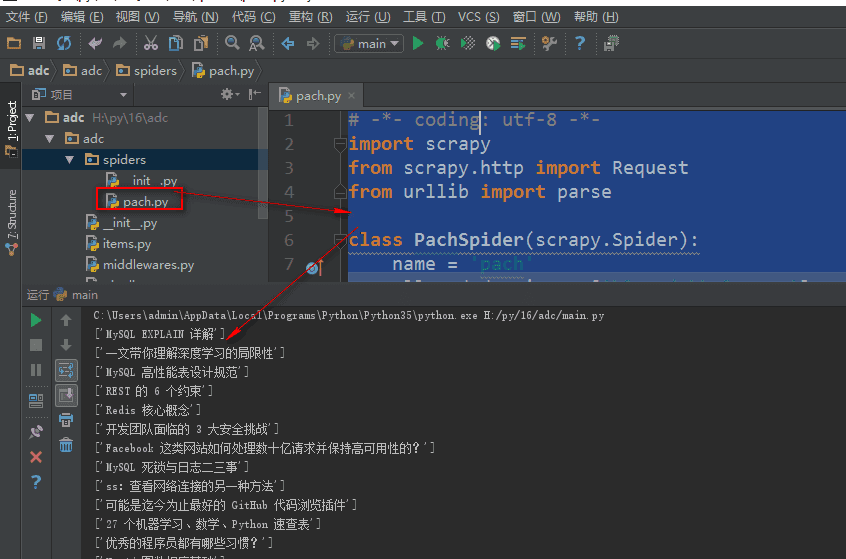

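Write the spider file to loop through the list pages and their articles

Request() adds the given URL to the downloader so that the page gets downloaded. It is usually called with two parameters, of which only url is strictly required:

Parameters:

url='url' (the address to download)

callback=page handler (the function that processes the downloaded page; if it is omitted, Scrapy falls back to the spider's parse() method)

A Request must be handed back to the engine with yield Request(...); a Request that is created but never yielded is not scheduled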

The parse.urljoin() function from the urllib library joins URLs automatically. If the second argument is a relative path, it is resolved against the first argument (the base URL); if it is already an absolute URL, it is returned unchanged
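A quick way to see how urljoin() behaves is to try it on a few inputs. The sketch below uses only the standard library; the relative paths and the example.com address are made up for illustration.

```python
from urllib import parse

base = "http://blog.jobbole.com/all-posts/"

# A root-relative path replaces the whole path of the base URL.
root_relative = parse.urljoin(base, "/112345/")
# A plain relative path is resolved against the directory of the base URL.
relative = parse.urljoin(base, "page/2/")
# An absolute URL is returned unchanged.
absolute = parse.urljoin(base, "http://example.com/post/1/")

print(root_relative)
print(relative)
print(absolute)
```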

    # -*- coding: utf-8 -*-
    import scrapy
    from scrapy.http import Request  # Request hands a URL to the downloader
    from urllib import parse  # parse module from the urllib library


    class PachSpider(scrapy.Spider):
        name = 'pach'
        allowed_domains = ['blog.jobbole.com']  # domains the spider is allowed to crawl
        start_urls = ['http://blog.jobbole.com/all-posts/']  # starting URL

        def parse(self, response):
            """
            Get the article URLs on the list page and hand them to the downloader
            """
            # Get the article URLs on the current list page
            lb_url = response.xpath('//a[@class="archive-title"]/@href').extract()
            for i in lb_url:
                # urljoin() resolves a relative article URL against the current page URL
                # print(parse.urljoin(response.url, i))
                # Hand each article URL to the downloader; the response goes to parse_wzhang
                yield Request(url=parse.urljoin(response.url, i), callback=self.parse_wzhang)

            # Get the next list page URL and feed it back into parse() to keep looping
            x_lb_url = response.xpath('//a[@class="next page-numbers"]/@href').extract()
            if x_lb_url:
                yield Request(url=parse.urljoin(response.url, x_lb_url[0]), callback=self.parse)

        def parse_wzhang(self, response):
            # Get the article title
            title = response.xpath('//div[@class="entry-header"]/h1/text()').extract()
            print(title)

Besides the URL, Request() can also pass a custom dictionary to the callback through its meta parameter; the callback reads it back from response.meta

    # -*- coding: utf-8 -*-
    import scrapy
    from scrapy.http import Request  # Request hands a URL to the downloader
    from urllib import parse  # parse module from the urllib library
    from adc.items import AdcItem  # item class that receives the scraped data


    class PachSpider(scrapy.Spider):
        name = 'pach'
        allowed_domains = ['blog.jobbole.com']  # domains the spider is allowed to crawl
        start_urls = ['http://blog.jobbole.com/all-posts/']  # starting URL

        def parse(self, response):
            """
            Get the article URLs on the list page and hand them to the downloader
            """
            # Get the article blocks on the current list page with a CSS selector
            lb = response.css('div .post.floated-thumb')
            # print(lb)
            for i in lb:
                lb_url = i.css('.archive-title ::attr(href)').extract_first('')  # article URL in the block
                # print(lb_url)
                lb_img = i.css('.post-thumb img ::attr(src)').extract_first('')  # article thumbnail in the block
                # print(lb_img)
                # Hand the article URL to the downloader and pass the thumbnail URL along in meta
                yield Request(url=parse.urljoin(response.url, lb_url),
                              meta={'lb_img': parse.urljoin(response.url, lb_img)},
                              callback=self.parse_wzhang)

            # Get the next list page URL and feed it back into parse() to keep looping
            x_lb_url = response.css('.next.page-numbers ::attr(href)').extract_first('')
            if x_lb_url:
                yield Request(url=parse.urljoin(response.url, x_lb_url), callback=self.parse)

        def parse_wzhang(self, response):
            title = response.css('.entry-header h1 ::text').extract()  # article title
            # print(title)
            tp_img = response.meta.get('lb_img', '')  # value passed through meta; get() avoids a KeyError
            # print(tp_img)
            shjjsh = AdcItem()  # instantiate the item class
            shjjsh['title'] = title  # fill the item fields declared in items.py
            shjjsh['img'] = tp_img
            yield shjjsh  # hand the item to the pipelines.py processing module

Using Scrapy's built-in image downloader

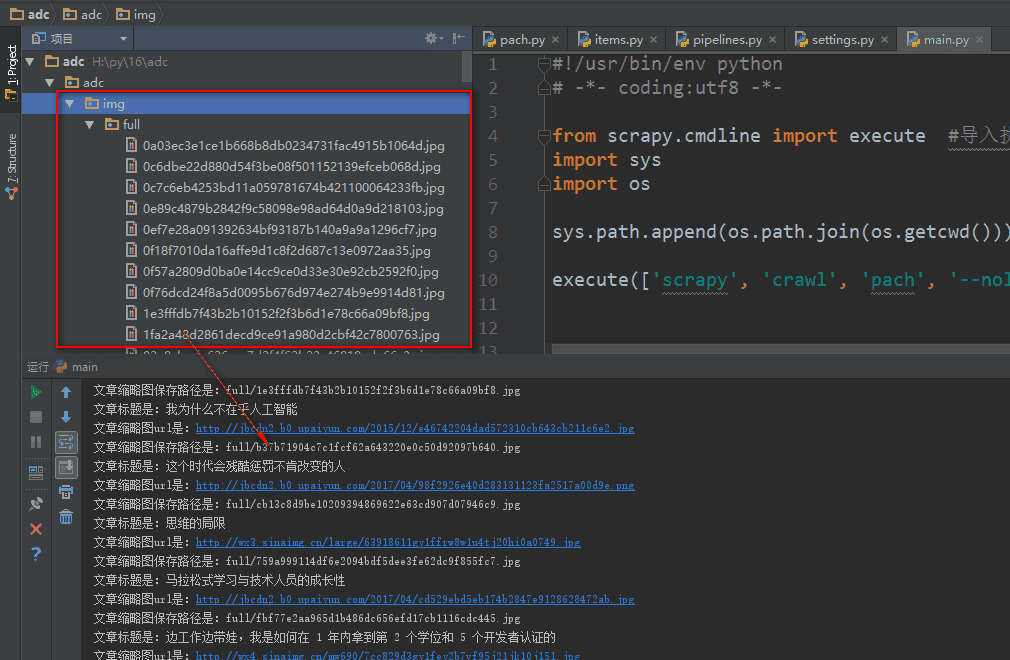

Scrapy ships with a built-in image downloader, scrapy.pipelines.images.ImagesPipeline, which downloads images to local storage after the spider has extracted their URLs

Step 1: after the spider extracts the image URL, it fills it into the container class defined in items.py

Spider file:

    # -*- coding: utf-8 -*-
    import scrapy
    from scrapy.http import Request  # Request hands a URL to the downloader
    from urllib import parse  # parse module from the urllib library
    from adc.items import AdcItem  # item class that receives the scraped data


    class PachSpider(scrapy.Spider):
        name = 'pach'
        allowed_domains = ['blog.jobbole.com']  # domains the spider is allowed to crawl
        start_urls = ['http://blog.jobbole.com/all-posts/']  # starting URL

        def parse(self, response):
            """
            Get the article URLs on the list page and hand them to the downloader
            """
            # Get the article blocks on the current list page with a CSS selector
            lb = response.css('div .post.floated-thumb')
            # print(lb)
            for i in lb:
                lb_url = i.css('.archive-title ::attr(href)').extract_first('')  # article URL in the block
                # print(lb_url)
                lb_img = i.css('.post-thumb img ::attr(src)').extract_first('')  # article thumbnail in the block
                # print(lb_img)
                # Hand the article URL to the downloader and pass the thumbnail URL along in meta
                yield Request(url=parse.urljoin(response.url, lb_url),
                              meta={'lb_img': parse.urljoin(response.url, lb_img)},
                              callback=self.parse_wzhang)

            # Get the next list page URL and feed it back into parse() to keep looping
            x_lb_url = response.css('.next.page-numbers ::attr(href)').extract_first('')
            if x_lb_url:
                yield Request(url=parse.urljoin(response.url, x_lb_url), callback=self.parse)

        def parse_wzhang(self, response):
            title = response.css('.entry-header h1 ::text').extract()  # article title
            # print(title)
            tp_img = response.meta.get('lb_img', '')  # value passed through meta; get() avoids a KeyError
            # print(tp_img)
            shjjsh = AdcItem()  # instantiate the item class
            shjjsh['title'] = title  # fill the item fields declared in items.py
            shjjsh['img'] = [tp_img]  # must be a list: ImagesPipeline iterates over the URLs in this field
            yield shjjsh  # hand the item to the pipelines.py processing module

Step 2: define the container class in items.py to receive the data filled in by the spider

    # -*- coding: utf-8 -*-
    # Define here the models for your scraped items
    #
    # See documentation in:
    # http://doc.scrapy.org/en/latest/topics/items.html
    import scrapy

    # items.py receives the data scraped by the spider; it acts as a container file


    class AdcItem(scrapy.Item):  # container class for the information the spider collects
        title = scrapy.Field()  # article title
        img = scrapy.Field()  # thumbnail URLs
        img_tplj = scrapy.Field()  # path where the image was saved

Step 3: use Scrapy's built-in image downloader in pipelines.py

1. Import the built-in image downloader first

2. Define a custom image downloader class that inherits from Scrapy's built-in ImagesPipeline class

3. Override the item_completed() method of the ImagesPipeline class to get the path where each downloaded image was saved

4. In the settings.py file, register the custom image downloader class and set the path where images are saved

    # -*- coding: utf-8 -*-
    # Define your item pipelines here
    #
    # Don't forget to add your pipeline to the ITEM_PIPELINES setting
    # See: http://doc.scrapy.org/en/latest/topics/item-pipeline.html
    from scrapy.pipelines.images import ImagesPipeline  # built-in image downloader


    class AdcPipeline(object):  # data processing class
        def process_item(self, item, spider):
            # process_item() receives each item yielded by the spider
            print('The title of the article is: ' + item['title'][0])
            print('The article thumbnail URL is: ' + item['img'][0])
            # Path filled in by the image downloader; stays empty if the download failed
            print('The path where the thumbnail was saved is: ' + item.get('img_tplj', ''))
            return item


    class imgPipeline(ImagesPipeline):  # custom image downloader inheriting from ImagesPipeline
        def item_completed(self, results, item, info):
            # results is a list of (ok, value) tuples, one per image URL
            for ok, value in results:
                # print(ok)
                if ok:  # on failure, value is an error object and has no 'path'
                    item['img_tplj'] = value['path']  # save path, relative to IMAGES_STORE
            return item  # pass the item on to the next pipeline
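The results argument of item_completed() is a list of two-element tuples: a success flag and, on success, a dictionary describing the downloaded file. The sketch below shows that shape in plain Python. The sample values and the saved_paths() helper are hypothetical, and a plain Exception stands in for the failure object Scrapy would supply.

```python
# Hypothetical `results` value, shaped like the list that ImagesPipeline
# passes to item_completed(): one (ok, value) tuple per image URL.
results = [
    (True, {"url": "http://example.com/a.png",
            "path": "full/0a1b2c.jpg",
            "checksum": "d41d8cd9"}),
    # Scrapy supplies a Twisted Failure here; a plain Exception stands in for it.
    (False, Exception("download failed")),
]


def saved_paths(results):
    """Return the storage paths of the images that downloaded successfully."""
    return [value["path"] for ok, value in results if ok]


paths = saved_paths(results)
print(paths)
```

Filtering on the success flag first is what keeps the lookup of 'path' from failing on entries whose download did not succeed.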

Note: once the custom image downloader is defined, register it in the settings.py file and set the path where images are saved

IMAGES_URLS_FIELD names the item field that holds the image URLs to download, that is, the field declared in items.py that receives them

IMAGES_STORE sets the directory where downloaded images are saved


    import os

    # Configure item pipelines
    # See http://scrapy.readthedocs.org/en/latest/topics/item-pipeline.html
    ITEM_PIPELINES = {
        'adc.pipelines.AdcPipeline': 300,  # register AdcPipeline; the number is its execution priority
        'adc.pipelines.imgPipeline': 1,  # register the custom image downloader; lower numbers run first
    }
    IMAGES_URLS_FIELD = 'img'  # item field that holds the image URLs, as declared in items.py
    lujin = os.path.abspath(os.path.dirname(__file__))  # directory that contains settings.py
    IMAGES_STORE = os.path.join(lujin, 'img')  # directory where images are saved
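The effect of the priority numbers and of the path settings can be checked in plain Python. This is only an illustrative sketch: sorting the dictionary by value mimics the rule that lower numbers run first, and project_dir is a hypothetical stand-in for os.path.dirname(__file__).

```python
import os

# Same shape as the ITEM_PIPELINES setting: class path -> priority.
ITEM_PIPELINES = {
    "adc.pipelines.AdcPipeline": 300,
    "adc.pipelines.imgPipeline": 1,
}

# Lower numbers run first, so sorting by value gives the execution order.
order = sorted(ITEM_PIPELINES, key=ITEM_PIPELINES.get)
print(order)

# Hypothetical project directory standing in for os.path.dirname(__file__).
project_dir = os.path.join(os.sep, "tmp", "adc")
images_store = os.path.join(project_dir, "img")
print(images_store)
```

With these numbers the image downloader handles each item before AdcPipeline does, which is why AdcPipeline can print the saved path.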