Note: This method is for quick experience or small data volume, not suitable for large data volume production environment.

Environmental preparation:

- Centos7

- Nutch2.2.1

- JAVA1.8

- ant1.9.14

- HBase 0.90.4 (stand-alone version)

- solr7.7

Relevant download address:

Links: https://pan.baidu.com/s/1Tut2CcKoJ9-G-HBq8zexMQ Extraction code: v75v

Start installation

-

Default installation of jdk, ant (in fact, decompression configuration of environment variables will not be able to Baidu once)

-

Install hbase stand-alone version

- Download decompression

wget http://archive.apache.org/dist/hbase/hbase-0.90.4/hbase-0.90.4.tar.gz tar zxf hbase-0.90.4.tar.gz # Or use the package I provided directly - To configure

<configuration> <property> <name>hbase.rootdir</name> <value>/data/hbase</value> </property> <property> <name>hbase.zookeeper.property.dataDir</name> <value>/data/zookeeper</value> </property> </configuration>Description: The hbase.rootdir directory is used to store information about HBase. The default value is / tmp/hbase-${user.name}/hbase; the hbase.zookeeper.property.dataDir directory is used to store information about zookeeper (HBase has zookeeper built in), and the default value is / tmp/hbase-${user.name}/zookeeper. 3. boot

./bin/start-hbase.sh

- Download decompression

-

solr installation configuration

- Download and install

wget https://mirrors.cnnic.cn/apache/lucene/solr/7.7.2/solr-7.7.2-src.tgz tar -zxvf solr-7.7.2-src.tgz ./bin/solr start -force //start-up- Add core reference https://blog.csdn.net/weixin_39082031/article/details/78924909

After adding, remember to restart start transposition restart

-

Nutch Edit Installation (don't forget the pre-ant configuration)

- download

wget http://archive.apache.org/dist/nutch/2.2.1/apache-nutch-2.2.1-src.tar.gz tar zxf apache-nutch-2.2.1-src.tar.gz- Configuration modification

conf/nutch-site.xml

<property> <name>storage.data.store.class</name> <value>org.apache.gora.hbase.store.HBaseStore</value> <description>Default class for storing data</description> </property>ivy/ivy.xml

<!-- Uncomment this to use HBase as Gora backend. --> <dependency org="org.apache.gora" name="gora-hbase" rev="0.3" conf="*->default" />conf/gora.properties

gora.datastore.default=org.apache.gora.hbase.store.HBaseStore-

Compile ant runtime Here is particularly slow, you can optimize the speed of ivy by Baidu, you can also download it like this. If you encounter failure, you can download the package yourself and put it in the wrong path.

After success: generate two directories, runtime and build. The following configuration file modifications are all changed to the following files in runtime/local

-

Adding seed url

#In the directory you want to store mkdir /data/urls vim seed.txt #Add url to grab http://www.dxy.cn/- Setting url filtering rules (optional)

#Comment out this line # skip URLs containing certain characters as probable queries, etc. #-[?*!@=] # accept anything else #Comment out this line #+. +^http:\/\/heart\.dxy\.cn\/article\/[0-9]+$- Configure the agent name (must be configured or errors will be reported)

<property> <name>http.agent.name</name> <value>My Nutch Spider</value> </property> -

The last step is to configure Solr to support nutch stored data structure (schema), modify / data/solr-7.7.2/server/solr/jkj_core/conf/managed-schema file, and restart Solr

Add a new configuration section (just put it in < schema>)

<!-- New field for nutch start--> <fieldType name="url" class="solr.TextField" positionIncrementGap="100"> <analyzer> <tokenizer class="solr.StandardTokenizerFactory"/> <filter class="solr.LowerCaseFilterFactory"/> <filter class="solr.WordDelimiterFilterFactory" generateWordParts="1" generateNumberParts="1"/> </analyzer> </fieldType> <fieldType name="date" class="solr.TrieDateField" omitNorms="true" precisionStep="0" positionIncrementGap="0"/> <!-- A Trie based date field for faster date range queries and date faceting. --> <fieldType name="tdate" class="solr.TrieDateField" omitNorms="true" precisionStep="6" positionIncrementGap="0"/> <!-- core fields --> <field name="batchId" type="string" stored="true" indexed="false"/> <field name="digest" type="string" stored="true" indexed="false"/> <field name="boost" type="pfloat" stored="true" indexed="false"/> <!-- fields for index-basic plugin --> <field name="host" type="url" stored="false" indexed="true"/> <field name="url" type="url" stored="true" indexed="true" required="true"/> <field name="orig" type="url" stored="true" indexed="true" /> <!-- stored=true for highlighting, use term vectors and positions for fast highlighting --> <field name="content" type="text_general" stored="true" indexed="true"/> <field name="title" type="text_general" stored="true" indexed="true"/> <field name="cache" type="string" stored="true" indexed="false"/> <field name="tstamp" type="date" stored="true" indexed="false"/> <!-- catch-all field --> <field name="text" type="text_general" stored="false" indexed="true" multiValued="true"/> <!-- fields for index-anchor plugin --> <field name="anchor" type="text_general" stored="true" indexed="true" multiValued="true"/> <!-- fields for index-more plugin --> <field name="type" type="string" stored="true" indexed="true" multiValued="true"/> <field name="contentLength" type="string" stored="true" indexed="false"/> <field name="lastModified" type="date" stored="true" indexed="false"/> <field name="date" type="tdate" stored="true" indexed="true"/> <!-- fields for languageidentifier plugin --> <field name="lang" type="string" stored="true" indexed="true"/> <!-- fields for subcollection plugin --> <field name="subcollection" type="string" stored="true" indexed="true" multiValued="true"/> <!-- fields for feed plugin (tag is also used by microformats-reltag)--> <field name="author" type="string" stored="true" indexed="true"/> <field name="tag" type="string" stored="true" indexed="true" multiValued="true"/> <field name="feed" type="string" stored="true" indexed="true"/> <field name="publishedDate" type="date" stored="true" indexed="true"/> <field name="updatedDate" type="date" stored="true" indexed="true"/> <!-- fields for creativecommons plugin --> <field name="cc" type="string" stored="true" indexed="true" multiValued="true"/> <!-- fields for tld plugin --> <field name="tld" type="string" stored="false" indexed="false"/> <!-- New field for nutch end--> -

Start nutch grab

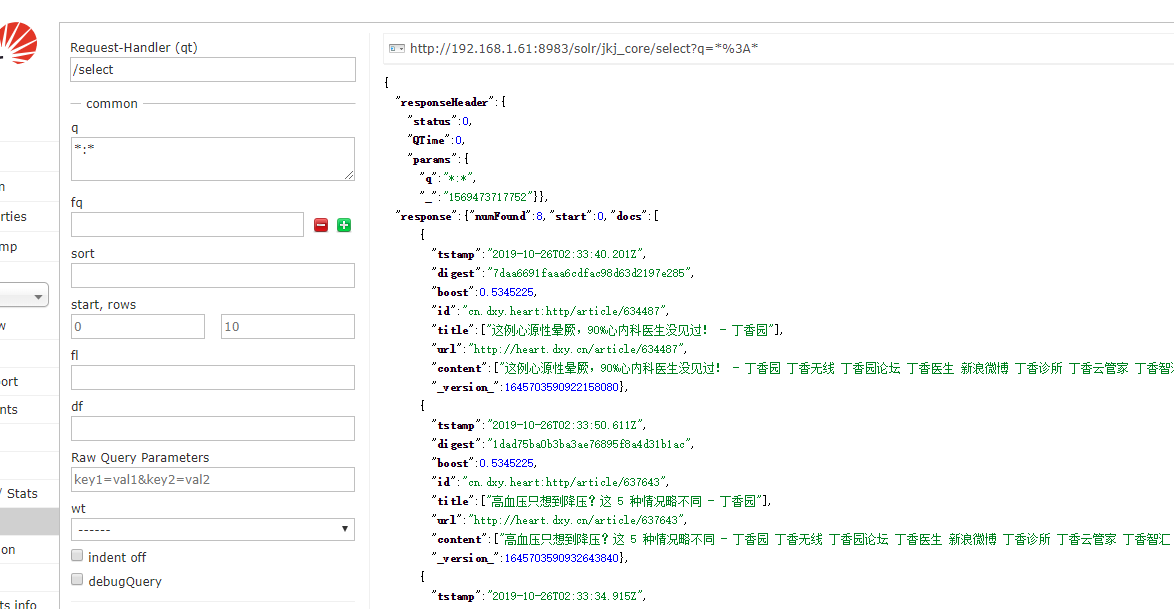

# The bin directory is Runtime / bin under nutch ./bin/crawl ~/urls/ jkj http://192.168.1.61:8983/solr/jkj_core 2 ~/urls/ It's the directory where I store the crawled files. jkj It's my designated store in hbase Medium id(As you can understand, create tables automatically http://192.168.1.61:8983/solr/jkj_core solr creates the address of collection 2 For grabbing depth

7. View the results through solr or hbase