1. Preparations (the libraries used are available from the download link at the end)

1.1. Install thrift

cmd>pip install thrift

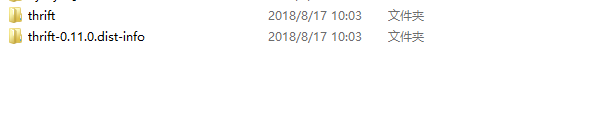

I use Anaconda3, so pip installs the package into the Lib/site-packages directory of the Anaconda3 installation. If you don't use Anaconda3, you can put the two folders directly into the Lib/site-packages directory of your Python installation.
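To find out which Lib/site-packages directory your interpreter actually uses (Anaconda3 or a plain Python install), you can ask Python itself. This is a minimal sketch using the standard sysconfig module. site_packages_dir and has_package are hypothetical helper names and are not part of thrift.

```python
import sysconfig
from pathlib import Path
from typing import Optional


def site_packages_dir() -> Path:
    """Hypothetical helper: the site-packages directory of the running interpreter."""
    # "purelib" is where pip puts pure-Python packages such as thrift,
    # e.g. <Anaconda3>\Lib\site-packages on Windows.
    return Path(sysconfig.get_paths()["purelib"])


def has_package(name: str, root: Optional[Path] = None) -> bool:
    """Hypothetical helper: True if a folder called `name` exists under `root`."""
    # Defaults to the interpreter's site-packages directory.
    base = site_packages_dir() if root is None else root
    return (base / name).is_dir()


if __name__ == "__main__":
    print("site-packages:", site_packages_dir())
    print("thrift folder present:", has_package("thrift"))
```

Run it with the same interpreter you will use for the HBase script. With several Pythons installed, pip and the script can easily end up pointing at different site-packages directories.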

1.2. Copy the hbase folder to the current project directory. The folder is available from the download link at the end, and it contains the library Python uses to connect to HBase.
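After copying the folder, you can check that Python can import the generated modules from the project directory before you run the full script. This is a minimal sketch built on importlib.util.find_spec. module_available is a hypothetical helper. The com.py.spark.hbase package path is the one the script in 1.5 imports from, so adjust it if your folder sits somewhere else.

```python
import importlib.util


def module_available(dotted_name: str) -> bool:
    """Hypothetical helper: True if `dotted_name` is importable from sys.path."""
    try:
        # find_spec imports parent packages first, so a missing parent
        # raises ModuleNotFoundError instead of returning None.
        return importlib.util.find_spec(dotted_name) is not None
    except ModuleNotFoundError:
        return False


if __name__ == "__main__":
    # Run from the project root so the project directory is on sys.path.
    for name in ("thrift",
                 "com.py.spark.hbase.THBaseService",
                 "com.py.spark.hbase.ttypes"):
        print(name, "->", "ok" if module_available(name) else "missing")
```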

1.3. Start the hbase cluster

1.4. Start HBase's Thrift server so that third-party applications can communicate with HBase

$>hbase-daemon.sh start thrift2

View the web UI at http://s10:9095 (9095 is the web UI port; 9090 is the RPC port)
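Before running the Python code, it helps to confirm that the thrift2 server is actually listening. This is a minimal sketch that uses only the standard socket module. port_open is a hypothetical helper. The host s10 and ports 9090 and 9095 are the ones from this setup.

```python
import socket


def port_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Hypothetical helper: True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and unresolvable host names.
        return False
```

For example, port_open("s10", 9090) should return True once hbase-daemon.sh start thrift2 has brought the server up. False usually means the server is not running or a firewall blocks the port.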

1.5. Code:

# -*- coding: utf-8 -*-

# Operate HBase from Python through its Thrift2 server

# Thrift's Python runtime (installed with pip install thrift)
from thrift.transport import TSocket
from thrift.transport import TTransport
from thrift.protocol import TBinaryProtocol

# Import the Thrift-generated HBase modules (the hbase folder from step 1.2)
from com.py.spark.hbase import THBaseService
from com.py.spark.hbase.ttypes import TColumn, TColumnValue, TGet, TPut, TDelete, TScan

# HBase Thrift server address: s10:9090
transport = TSocket.TSocket('s10', 9090)
transport = TTransport.TBufferedTransport(transport)
protocol = TBinaryProtocol.TBinaryProtocol(transport)
client = THBaseService.Client(protocol)

# Open the connection and prepare to transfer data
transport.open()
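The script opens the transport with a bare transport.open() call. If a later call raises an exception, the socket stays open. This is a minimal sketch of a context manager that always calls close(). opened is a hypothetical helper. It only assumes that the transport object has open() and close() methods, which thrift's transport classes provide.

```python
from contextlib import contextmanager


@contextmanager
def opened(transport):
    """Hypothetical helper: open a Thrift transport and always close it afterwards."""
    transport.open()
    try:
        yield transport
    finally:
        # Runs even if a get/put/scan inside the with-block raises.
        transport.close()
```

To use it, wrap the client calls in `with opened(transport):` instead of calling transport.open() directly.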

# get query
table = b'ns1:t1'  # table name (namespace ns1, table t1)
rowkey = b'row9998'  # row key
col_id = TColumn(b"f1", b"id")
col_name = TColumn(b"f1", b"name")
col_age = TColumn(b"f1", b"age")
cols = [col_id, col_name, col_age]
get = TGet(rowkey, cols)  # create the get object
res = client.get(table, get)

# HBase returns the cells sorted by column (f1:age, f1:id, f1:name),
# not in the order they were listed in cols
for cell in res.columnValues:
    print(bytes.decode(cell.family))
    print(bytes.decode(cell.qualifier))
    print(bytes.decode(cell.value))
    print(cell.timestamp)
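HBase returns the cells sorted by column rather than in the order of cols, so it can be convenient to look values up by name instead of by position. This is a minimal sketch that flattens a result into a dict keyed by "family:qualifier". result_to_dict is a hypothetical helper. It assumes the values are UTF-8 text, as in this example table, and it only relies on the columnValues, family, qualifier and value attributes used above.

```python
from types import SimpleNamespace


def result_to_dict(result, encoding="utf-8"):
    """Hypothetical helper: {"family:qualifier": value} from a TResult-like object."""
    row = {}
    for cell in result.columnValues:
        key = f"{cell.family.decode(encoding)}:{cell.qualifier.decode(encoding)}"
        row[key] = cell.value.decode(encoding)
    return row


if __name__ == "__main__":
    # Stand-in objects with the same attribute names as the generated TResult/TColumnValue.
    fake = SimpleNamespace(row=b"row9998", columnValues=[
        SimpleNamespace(family=b"f1", qualifier=b"age", value=b"25", timestamp=0),
        SimpleNamespace(family=b"f1", qualifier=b"id", value=b"9998", timestamp=0),
    ])
    print(result_to_dict(fake))
```

With the real client, result_to_dict(res)["f1:name"] then reads the name column regardless of where it sits in res.columnValues.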

# put operation
table = b'ns1:t1'
row = b'row10000'
v1 = TColumnValue(b'f1', b'id', b'10000')
v2 = TColumnValue(b'f1', b'name', b'zpx')
v3 = TColumnValue(b'f1', b'age', b'25')
vals = [v1, v2, v3]
put = TPut(row, vals)
client.put(table, put)
print("put ok")
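HBase stores every row key, column name and value as raw bytes, which is why the put writes b'25' rather than the integer 25. This is a minimal sketch that builds those byte triples from an ordinary dict. to_bytes and row_to_cells are hypothetical helpers. Like the example above, they store numbers as their decimal text, which is not the 4-byte binary form that Java's Bytes.toBytes(int) produces.

```python
def to_bytes(value, encoding="utf-8"):
    """Hypothetical helper: bytes pass through, anything else is stored as its text."""
    if isinstance(value, bytes):
        return value
    return str(value).encode(encoding)


def row_to_cells(family, mapping, encoding="utf-8"):
    """Hypothetical helper: {"qualifier": value} -> [(family, qualifier, value), ...].

    Each triple matches the positional arguments of TColumnValue(family, qualifier, value).
    """
    fam = to_bytes(family, encoding)
    return [(fam, to_bytes(q, encoding), to_bytes(v, encoding)) for q, v in mapping.items()]
```

For example, `vals = [TColumnValue(*c) for c in row_to_cells("f1", {"id": 10000, "name": "zpx", "age": 25})]` builds the same list as v1, v2 and v3 above.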

# delete
table = b'ns1:t1'
rowkey = b'row10000'
col_id = TColumn(b"f1", b"id")
col_name = TColumn(b"f1", b"name")
col_age = TColumn(b"f1", b"age")
cols = [col_id, col_name, col_age]
# Construct the delete object (only the listed columns of the row are deleted)
delete = TDelete(rowkey, cols)
client.deleteSingle(table, delete)  # returns nothing on success
print("delete ok")
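deleteSingle returns nothing, so the script cannot tell from the call alone whether the data is gone. This is a minimal sketch that follows the delete with an existence check. It assumes the generated THBaseService client exposes exists(table, tget), as the HBase Thrift2 IDL defines it. delete_and_check is a hypothetical helper. Because the delete above names three columns, exists still reports True if the row has other columns. A TDelete built with only the row key removes the whole row.

```python
def delete_and_check(client, table, tdelete, tget):
    """Hypothetical helper: run deleteSingle, then confirm nothing is left for `tget`.

    `tget` should address the same row (and, optionally, the same columns) as `tdelete`.
    Returns True when client.exists() no longer finds data for `tget`.
    """
    client.deleteSingle(table, tdelete)
    return not client.exists(table, tget)
```

With the real client this would be called as `delete_and_check(client, table, TDelete(rowkey, cols), TGet(rowkey, cols))`.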

# scan
table = b'ns1:t1'
startRow = b'row9900'  # inclusive
stopRow = b'row9998'  # exclusive
col_id = TColumn(b"f1", b"id")
col_name = TColumn(b"f1", b"name")
col_age = TColumn(b"f1", b"age")
cols = [col_id, col_name, col_age]
scan = TScan(startRow=startRow, stopRow=stopRow, columns=cols)
# Fetch at most 100 rows in a single call
r = client.getScannerResults(table, scan, 100)
for x in r:
    print("====================")
    # columnValues[2] is f1:name when all three columns are present,
    # because cells come back sorted by qualifier (age, id, name)
    print(bytes.decode(x.columnValues[2].family))
    print(bytes.decode(x.columnValues[2].qualifier))
    print(bytes.decode(x.columnValues[2].value))
    print(x.columnValues[2].timestamp)

# Close the connection when done
transport.close()
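getScannerResults(table, scan, 100) returns at most 100 rows in one call, so rows beyond that in a larger range are silently left out. This is a minimal sketch that pages through a scan instead. It uses the scanner-id calls openScanner, getScannerRows and closeScanner from the HBase Thrift2 IDL, and it assumes getScannerRows returns an empty list once the scanner is exhausted. iter_scan is a hypothetical helper. Check the method names against the generated THBaseService in your hbase folder.

```python
def iter_scan(client, table, tscan, batch=100):
    """Hypothetical helper: yield every TResult of a scan, `batch` rows per round trip.

    Flow: openScanner -> getScannerRows (until an empty list) -> closeScanner.
    """
    scanner_id = client.openScanner(table, tscan)
    try:
        while True:
            rows = client.getScannerRows(scanner_id, batch)
            if not rows:
                break
            yield from rows
    finally:
        # Release the server-side scanner, even if the caller stops iterating early.
        client.closeScanner(scanner_id)
```

Usage mirrors the loop above: `for x in iter_scan(client, table, scan): ...`. The scanner is opened lazily, on the first iteration.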

Download link (hbase folder and tool libraries): https://pan.baidu.com/s/1dY56Sbo_ZZ3lwlRiP5nAw password: akky