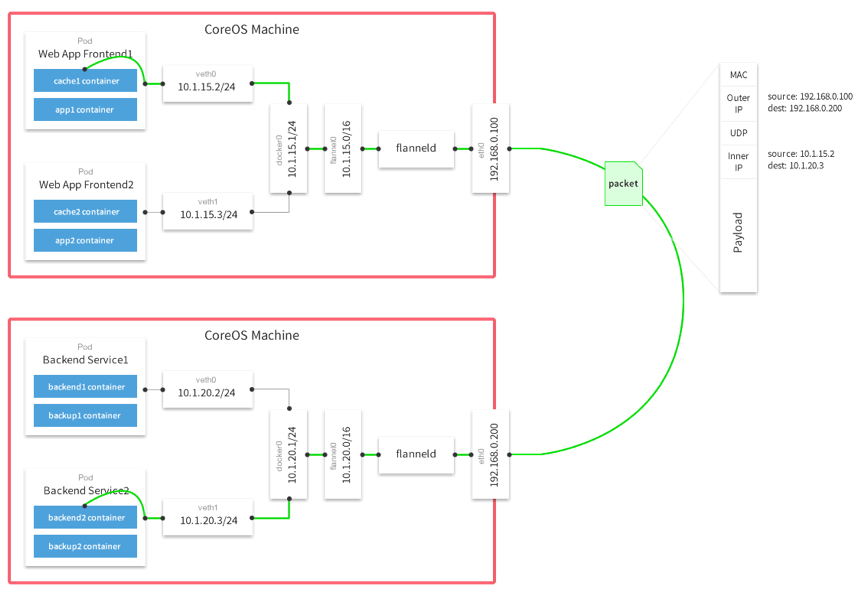

Flannel is an open-source CNI network plug-in from CoreOS. The figure below, taken from the flannel official website, shows how a packet is encapsulated, transmitted and decapsulated. The docker0 bridges on the two machines are in different subnets: 10.1.20.1/24 and 10.1.15.1/24. When the Web App Frontend1 pod (10.1.15.2) connects to the Backend Service2 pod (10.1.20.3) on another host, the packet is sent from host 192.168.0.100 to host 192.168.0.200. The inner container packet is encapsulated in a UDP packet of the host, and the outer headers carry the host's IP and MAC addresses. This is a classic overlay network. Container IPs are internal addresses that cannot be routed between hosts, so container-to-container traffic has to be carried over the host network.

flannel supports several backends, such as vxlan, UDP, host-gw, ipip, gce and alicloud. In vxlan mode the kernel does the encapsulation. In UDP mode the user-space flanneld process does it, so UDP mode performs somewhat worse. host-gw is a host-gateway mode: the route to the container subnet on another host uses that host's NIC address as its gateway. This is very similar to Calico. The difference is that Calico announces routes through BGP, whereas host-gw distributes them through the central etcd. host-gw routes packets directly, with no overlay encapsulation or decapsulation, so its performance is relatively high. Its biggest drawback is that all hosts must be on the same layer-2 network. The next hop of every route has to be a directly reachable neighbor, otherwise packets cannot get through.
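The host-gw idea can be made concrete with a minimal Go sketch of the routes a node would derive from the leases in etcd: one route per remote container subnet, with the remote host's IP as the gateway. The `lease` type, the `hostGWRoutes` helper and the 192.168.0.0/24 host segment are assumptions for illustration only; the figure shows just the two host addresses. flannel itself installs real routes through netlink rather than returning strings. The layer-2 requirement is modeled crudely as "the gateway must lie inside the local host subnet".

```go
package main

import (
	"fmt"
	"net"
)

// lease is a hypothetical, simplified view of what each node publishes in
// etcd: its container subnet and the address of its host NIC.
type lease struct {
	Subnet   string // e.g. "10.1.20.0/24"
	PublicIP string // e.g. "192.168.0.200"
}

// hostGWRoutes builds one "subnet via hostIP" route per remote lease.
// Every next hop must sit on the local layer-2 segment (localNet);
// otherwise host-gw cannot reach it and an error is returned.
func hostGWRoutes(localNet string, leases []lease) ([]string, error) {
	_, segment, err := net.ParseCIDR(localNet)
	if err != nil {
		return nil, err
	}
	var routes []string
	for _, l := range leases {
		gw := net.ParseIP(l.PublicIP)
		if gw == nil || !segment.Contains(gw) {
			return nil, fmt.Errorf("next hop %s is not on local segment %s", l.PublicIP, localNet)
		}
		routes = append(routes, fmt.Sprintf("%s via %s", l.Subnet, l.PublicIP))
	}
	return routes, nil
}

func main() {
	// Host 192.168.0.100 learning about host 192.168.0.200 from the figure.
	routes, err := hostGWRoutes("192.168.0.0/24", []lease{{Subnet: "10.1.20.0/24", PublicIP: "192.168.0.200"}})
	if err != nil {
		panic(err)
	}
	fmt.Println(routes)
}
```

No encapsulation step appears anywhere. This is why host-gw is fast, and also why it fails as soon as a peer host is more than one layer-2 hop away.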

In production, vxlan is the most commonly used mode. We first look at how it works, and then walk through the implementation in the source code.

Installation is simple and consists of two main steps.

Step 1: install flannel

Install it with yum install flannel, or run it as a Kubernetes DaemonSet, and configure the etcd address that flannel uses.

Step 2: configure the cluster network

```shell
curl -L http://etcdurl:2379/v2/keys/flannel/network/config -XPUT -d value="{\"Network\":\"172.16.0.0/16\",\"SubnetLen\":24,\"Backend\":{\"Type\":\"vxlan\",\"VNI\":1}}"
```

Then start flanneld on each node.
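The value written by the curl command is plain JSON, and hand-escaping it is error-prone. Below is a minimal Go sketch that builds the same value with `encoding/json`. The `NetworkConfig` and `BackendConfig` types and the `buildVxlanConfig` helper are hypothetical; they only mirror the keys in the command and are not flannel's own types.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// BackendConfig mirrors the "Backend" object in the etcd value.
// Hypothetical type: flannel parses this JSON with its own types.
type BackendConfig struct {
	Type string `json:"Type"`
	VNI  int    `json:"VNI"`
}

// NetworkConfig mirrors the document stored at /flannel/network/config.
type NetworkConfig struct {
	Network   string        `json:"Network"`
	SubnetLen int           `json:"SubnetLen"`
	Backend   BackendConfig `json:"Backend"`
}

// buildVxlanConfig renders the JSON value written to etcd for a vxlan network.
func buildVxlanConfig(network string, subnetLen, vni int) (string, error) {
	cfg := NetworkConfig{
		Network:   network,
		SubnetLen: subnetLen,
		Backend:   BackendConfig{Type: "vxlan", VNI: vni},
	}
	b, err := json.Marshal(cfg)
	if err != nil {
		return "", err
	}
	return string(b), nil
}

func main() {
	s, err := buildVxlanConfig("172.16.0.0/16", 24, 1)
	if err != nil {
		panic(err)
	}
	fmt.Println(s)
	// A /16 split into /24 subnets contains 1<<(24-16) = 256 subnets.
	fmt.Println(1 << (24 - 16))
}
```

The printed JSON is the same string that is passed as `value=` in the curl command. Each node gets one /24, so this /16 holds 256 subnets, which bounds the number of nodes.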

I. Working principle

1. How container addresses are assigned

A Docker container gets its IP address from docker0. flannel allocates a subnet to each machine and configures it on docker0. When a container starts, it picks an unused IP from that subnet. So how does flannel change the docker0 subnet?

First, look at the flannel systemd unit file /usr/lib/systemd/system/flanneld.service:

```
[Service]
Type=notify
EnvironmentFile=/etc/sysconfig/flanneld
ExecStart=/usr/bin/flanneld-start $FLANNEL_OPTIONS
ExecStartPost=/opt/flannel/mk-docker-opts.sh -k DOCKER_NETWORK_OPTIONS -d /run/flannel/docker
```

The unit sets flannel's environment file and start script. Its ExecStartPost step runs mk-docker-opts.sh, which generates /run/flannel/docker with the following contents:

```
DOCKER_OPT_BIP="--bip=10.251.81.1/24"
DOCKER_OPT_IPMASQ="--ip-masq=false"
DOCKER_OPT_MTU="--mtu=1450"
DOCKER_NETWORK_OPTIONS=" --bip=10.251.81.1/24 --ip-masq=false --mtu=1450"
```

This file is in turn loaded by the Docker unit file /usr/lib/systemd/system/docker.service:

```
[Service]
Type=notify
NotifyAccess=all
EnvironmentFile=-/run/flannel/docker
EnvironmentFile=-/etc/sysconfig/docker
```

This is how the docker0 bridge gets the subnet that flannel assigned.
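mk-docker-opts.sh is a shell script. The Go sketch below restates its job: read flannel's subnet.env (its format appears later in this article) and turn it into `DOCKER_NETWORK_OPTIONS`. The helper names are hypothetical, and the rule for `--ip-masq` is an assumption suggested by the sample output. When flannel masquerades traffic itself (`FLANNEL_IPMASQ=true`), Docker's own masquerading is turned off.

```go
package main

import (
	"bufio"
	"fmt"
	"strings"
)

// parseEnv reads KEY=VALUE lines in the format of /run/flannel/subnet.env.
func parseEnv(content string) map[string]string {
	env := make(map[string]string)
	sc := bufio.NewScanner(strings.NewReader(content))
	for sc.Scan() {
		line := strings.TrimSpace(sc.Text())
		if k, v, ok := strings.Cut(line, "="); ok {
			env[k] = v
		}
	}
	return env
}

// dockerNetworkOptions builds the DOCKER_NETWORK_OPTIONS value.
// Assumption: when flannel masquerades itself (FLANNEL_IPMASQ=true),
// Docker's --ip-masq is switched off so traffic is not NATed twice.
func dockerNetworkOptions(env map[string]string) string {
	ipMasq := "true"
	if env["FLANNEL_IPMASQ"] == "true" {
		ipMasq = "false"
	}
	return fmt.Sprintf(" --bip=%s --ip-masq=%s --mtu=%s",
		env["FLANNEL_SUBNET"], ipMasq, env["FLANNEL_MTU"])
}

func main() {
	env := parseEnv("FLANNEL_NETWORK=10.254.0.0/16\nFLANNEL_SUBNET=10.254.44.1/24\nFLANNEL_MTU=1450\nFLANNEL_IPMASQ=true\n")
	// Prints DOCKER_NETWORK_OPTIONS=" --bip=10.254.44.1/24 --ip-masq=false --mtu=1450"
	fmt.Printf("DOCKER_NETWORK_OPTIONS=%q\n", dockerNetworkOptions(env))
}
```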

Our development environment has three machines, which were assigned the following subnets:

host-139.245 10.254.44.1/24

host-139.246 10.254.60.1/24

host-139.247 10.254.50.1/24

2. How containers communicate

The previous section showed how each container gets its IP. How do containers on different hosts communicate? Take the most common backend, vxlan, as an example. Three pieces of state are key: a route, an ARP entry and an FDB entry. We look at the role of each in the order a packet is sent. A packet from a container first goes through docker0. Does it then leave directly through the host network, or is it encapsulated by vxlan and forwarded? The routing table on each machine decides:

```
# ip route show dev flannel.1
10.254.50.0/24 via 10.254.50.0 onlink
10.254.60.0/24 via 10.254.60.0 onlink
```

Each host has a route to the subnets of the other two machines. These are onlink routes. The onlink flag tells the kernel to treat the gateway as directly reachable on the link, even though there is no connected route to the gateway's subnet. Without it, Linux refuses to add a route whose gateway lies in a different subnet. With these routes in place, packets destined for containers on other hosts are handed to the flannel.1 device.

flannel.1 is a virtual VXLAN device that encapsulates the packets. The next question is: what is the MAC address of the gateway? The gateway is only reachable through an onlink route, so the kernel resolves its MAC address from the ARP table of flannel.1:

```
# ip neigh show dev flannel.1
10.254.50.0 lladdr ba:10:0e:7b:74:89 PERMANENT
10.254.60.0 lladdr 92:f3:c8:b2:6e:f0 PERMANENT
```

This gives the MAC address of each gateway, which is used to build the inner Ethernet frame.

One last question: what is the destination IP of the encapsulated packet? In other words, which host should it be sent to? Standard VXLAN finds out by broadcasting (flooding) the first packet and learning from the reply. flannel takes a shortcut instead and writes the forwarding database (FDB) entries directly:

```
# bridge fdb show dev flannel.1
92:f3:c8:b2:6e:f0 dst 10.100.139.246 self permanent
ba:10:0e:7b:74:89 dst 10.100.139.247 self permanent
```

This maps each VTEP MAC address to the destination host IP of the outer packet.
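Together, the ARP and FDB lookups form a two-step resolution: gateway IP to VTEP MAC, then VTEP MAC to remote host IP. The following minimal Go sketch uses plain maps filled with the entries shown above. Those entries are presumably from host-139.245, since it holds routes to the other two subnets. The maps and the `resolve` helper are illustrative stand-ins for the kernel tables, not flannel code.

```go
package main

import "fmt"

// Entries copied from the `ip neigh` and `bridge fdb` output above.
var arpTable = map[string]string{ // gateway IP -> VTEP MAC
	"10.254.50.0": "ba:10:0e:7b:74:89",
	"10.254.60.0": "92:f3:c8:b2:6e:f0",
}

var fdbTable = map[string]string{ // VTEP MAC -> remote host IP
	"92:f3:c8:b2:6e:f0": "10.100.139.246",
	"ba:10:0e:7b:74:89": "10.100.139.247",
}

// resolve returns the inner destination MAC and the outer destination IP
// for a packet whose onlink next hop is gw.
func resolve(gw string) (innerMAC, outerIP string, err error) {
	mac, ok := arpTable[gw]
	if !ok {
		return "", "", fmt.Errorf("no ARP entry for %s", gw)
	}
	ip, ok := fdbTable[mac]
	if !ok {
		return "", "", fmt.Errorf("no FDB entry for %s", mac)
	}
	return mac, ip, nil
}

func main() {
	mac, ip, err := resolve("10.254.60.0")
	if err != nil {
		panic(err)
	}
	fmt.Println(mac, ip) // 92:f3:c8:b2:6e:f0 10.100.139.246
}
```

A packet for 10.254.60.x is therefore framed for VTEP 92:f3:c8:b2:6e:f0 and sent in UDP to 10.100.139.246, which is host-139.246.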

Note also that both the ARP and the FDB entries are PERMANENT. This means they are written and maintained explicitly rather than learned. Normally ARP discovers neighbors by broadcasting. When a reply arrives, the kernel marks the peer REACHABLE. Once the reachable time expires without confirmation, the entry becomes STALE. It then goes through DELAY and PROBE, and if probing fails it is marked FAILED. This ARP background matters because older versions of flannel did not use the approach described above. They used temporary ARP entries whose state was real (REACHABLE, and therefore subject to aging). As a result, if flanneld was down for longer than the reachable timeout, the network of the containers on that machine was interrupted. Briefly, in earlier versions (0.7.x), the kernel first sent ARP requests to obtain the peer's MAC address. After

/proc/sys/net/ipv4/neigh/$NIC/ucast_solicit

attempts without an answer, the ARP request was handed to user space, up to

/proc/sys/net/ipv4/neigh/$NIC/app_solicit

times. Older versions of flannel relied on exactly this feature and set:

```
# cat /proc/sys/net/ipv4/neigh/flannel.1/app_solicit
3
```
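The neighbor life cycle described above is a small state machine. The Go sketch below models it in a deliberately simplified form. The state and event names are illustrative, and the real kernel NUD logic has more states (e.g. INCOMPLETE) and timers. A confirmation from any learned state is treated as returning to REACHABLE. The sketch shows why learned entries eventually fail when nobody answers, while PERMANENT entries, which current flannel writes, never age.

```go
package main

import "fmt"

// nudState is a simplified subset of the kernel's neighbor (NUD) states.
type nudState int

const (
	Reachable nudState = iota
	Stale
	Delay
	Probe
	Failed
	Permanent
)

func (s nudState) String() string {
	return [...]string{"REACHABLE", "STALE", "DELAY", "PROBE", "FAILED", "PERMANENT"}[s]
}

// event is something that can happen to a neighbor entry.
type event int

const (
	ReachableTimeout event = iota // reachable time expired without confirmation
	TrafficSent                   // a packet is sent to a STALE neighbor
	DelayTimeout                  // no confirmation arrived while in DELAY
	ProbesExhausted               // every probe went unanswered
	Confirmed                     // a reply (or other confirmation) arrived
)

// next applies one event to a state. PERMANENT entries never age; learned
// entries walk REACHABLE -> STALE -> DELAY -> PROBE -> FAILED unless they
// are confirmed again.
func next(s nudState, e event) nudState {
	if s == Permanent {
		return Permanent
	}
	if e == Confirmed {
		return Reachable
	}
	switch {
	case s == Reachable && e == ReachableTimeout:
		return Stale
	case s == Stale && e == TrafficSent:
		return Delay
	case s == Delay && e == DelayTimeout:
		return Probe
	case s == Probe && e == ProbesExhausted:
		return Failed
	}
	return s
}

func main() {
	s := Reachable
	for _, e := range []event{ReachableTimeout, TrafficSent, DelayTimeout, ProbesExhausted} {
		s = next(s, e)
		fmt.Println(s) // STALE, DELAY, PROBE, FAILED
	}
}
```

In the old flannel design, the confirmation that keeps an entry alive ultimately depends on flanneld answering from user space. If flanneld is gone, the chain runs through to FAILED.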

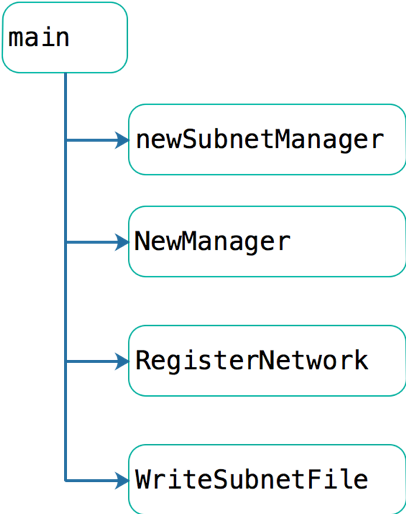

In this way, flanneld received the L3MISS messages that the kernel sent to user space. It looked up the MAC address for the requested IP in etcd and installed it as a REACHABLE entry. It follows that if the flanneld process exited, communication between containers would be interrupted, which is worth keeping in mind.

The startup process of flanneld is as follows:

On startup, flanneld first calls newSubnetManager, which creates the backend data store. Two stores are currently supported. etcd is the default. If flanneld is started with the --kube-subnet-mgr flag, the Kubernetes API is used to store the data instead.

The specific code is as follows:

```go
func newSubnetManager() (subnet.Manager, error) {
	if opts.kubeSubnetMgr {
		return kube.NewSubnetManager(opts.kubeApiUrl, opts.kubeConfigFile)
	}

	cfg := &etcdv2.EtcdConfig{
		Endpoints: strings.Split(opts.etcdEndpoints, ","),
		Keyfile:   opts.etcdKeyfile,
		Certfile:  opts.etcdCertfile,
		CAFile:    opts.etcdCAFile,
		Prefix:    opts.etcdPrefix,
		Username:  opts.etcdUsername,
		Password:  opts.etcdPassword,
	}

	// Attempt to renew the lease for the subnet specified in the subnetFile
	prevSubnet := ReadCIDRFromSubnetFile(opts.subnetFile, "FLANNEL_SUBNET")

	return etcdv2.NewLocalManager(cfg, prevSubnet)
}
```

Through the SubnetManager, flanneld reads the network configuration stored in etcd at deployment time, mainly the backend type and the network range. For vxlan, it then creates the corresponding backend manager through NewManager. This uses a simple factory pattern: each backend first registers its constructor from an init function.

For vxlan:

```go
func init() {
	backend.Register("vxlan", New)
}
```

For udp:

```go
func init() {
	backend.Register("udp", New)
}
```

The other backends work the same way. The constructors are registered in a map, so flanneld can start the backend that matches the mode configured in etcd.
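The factory-by-registration pattern fits in a few lines of Go. The sketch below is self-contained and is not flannel's code. `Backend`, `BackendCtor`, `Register` and `NewBackend` are hypothetical, trimmed-down names. flannel's real constructors take more arguments, such as the subnet manager, and each backend registers from its own package.

```go
package main

import "fmt"

// Backend is a hypothetical, trimmed-down stand-in for flannel's backend
// interface; only a name is kept for illustration.
type Backend interface {
	Name() string
}

// BackendCtor builds a backend. flannel's real constructors take more
// arguments, which are omitted here.
type BackendCtor func() (Backend, error)

var constructors = make(map[string]BackendCtor)

// Register records a constructor under a backend type name.
func Register(name string, ctor BackendCtor) {
	constructors[name] = ctor
}

// NewBackend looks up the constructor for the configured "Backend.Type".
func NewBackend(backendType string) (Backend, error) {
	ctor, ok := constructors[backendType]
	if !ok {
		return nil, fmt.Errorf("unknown backend type: %s", backendType)
	}
	return ctor()
}

type vxlanBackend struct{}

func (vxlanBackend) Name() string { return "vxlan" }

type udpBackend struct{}

func (udpBackend) Name() string { return "udp" }

// In flannel each backend lives in its own package with its own init();
// here both registrations share one init for brevity.
func init() {
	Register("vxlan", func() (Backend, error) { return vxlanBackend{}, nil })
	Register("udp", func() (Backend, error) { return udpBackend{}, nil })
}

func main() {
	be, err := NewBackend("vxlan")
	if err != nil {
		panic(err)
	}
	fmt.Println(be.Name()) // vxlan
}
```

Because registration happens in `init`, linking a backend package into the binary is enough to make it selectable. The core code never needs a switch over backend names.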

3. Registering the network

RegisterNetwork first creates the flannel.<VNI> device. The VNI defaults to 1, hence the name flannel.1. It then registers a lease with etcd and obtains the corresponding subnet. One detail here: older versions of flannel got a new subnet every time they started. Newer versions look through the leases already registered in etcd, find the previously allocated subnet and keep using it.
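Subnet reuse can be sketched as a two-step allocation: prefer the previous subnet if it is still valid and not leased to anyone else, otherwise take a free one. `allocateSubnet` below is a hypothetical helper for IPv4 only. It simply scans for the first free subnet; flannel's real allocator may choose differently. The previous subnet would come from FLANNEL_SUBNET in subnet.env, as in the `ReadCIDRFromSubnetFile` call shown earlier.

```go
package main

import (
	"encoding/binary"
	"fmt"
	"net"
)

// allocateSubnet is a hypothetical helper. It reuses prev (the subnet this
// host held before restarting) when that subnet is still inside network and
// not leased to another host; otherwise it returns the first free subnet.
func allocateSubnet(network string, subnetLen int, taken map[string]bool, prev string) (string, error) {
	_, ipnet, err := net.ParseCIDR(network)
	if err != nil {
		return "", err
	}
	ones, bits := ipnet.Mask.Size()
	if bits != 32 || subnetLen < ones || subnetLen > 32 {
		return "", fmt.Errorf("bad subnet length %d for %s", subnetLen, network)
	}
	// 1. Prefer the previous subnet, so containers keep their addresses.
	if prev != "" {
		if _, p, err := net.ParseCIDR(prev); err == nil {
			pOnes, _ := p.Mask.Size()
			if pOnes == subnetLen && ipnet.Contains(p.IP) && !taken[p.String()] {
				return p.String(), nil
			}
		}
	}
	// 2. Otherwise scan the network for the first subnet nobody holds.
	base := uint64(binary.BigEndian.Uint32(ipnet.IP.To4()))
	step := uint64(1) << uint(32-subnetLen)
	count := uint64(1) << uint(subnetLen-ones)
	for i := uint64(0); i < count; i++ {
		ip := make(net.IP, net.IPv4len)
		binary.BigEndian.PutUint32(ip, uint32(base+i*step))
		cand := (&net.IPNet{IP: ip, Mask: net.CIDRMask(subnetLen, 32)}).String()
		if !taken[cand] {
			return cand, nil
		}
	}
	return "", fmt.Errorf("no free /%d left in %s", subnetLen, network)
}

func main() {
	// Subnets held by host-139.246 and host-139.247.
	taken := map[string]bool{"10.254.50.0/24": true, "10.254.60.0/24": true}
	s, err := allocateSubnet("10.254.0.0/16", 24, taken, "10.254.44.1/24")
	if err != nil {
		panic(err)
	}
	fmt.Println(s) // 10.254.44.0/24
}
```

After a restart, host-139.245 gets 10.254.44.0/24 back, so its containers' addresses stay valid.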

Finally, WriteSubnetFile writes the local subnet file:

```
# cat /run/flannel/subnet.env
FLANNEL_NETWORK=10.254.0.0/16
FLANNEL_SUBNET=10.254.44.1/24
FLANNEL_MTU=1450
FLANNEL_IPMASQ=true
```

Docker's network settings are derived from this file. Careful readers may notice that the MTU is not the standard Ethernet 1500. VXLAN encapsulation takes 50 bytes of every packet: the inner Ethernet header (14), the VXLAN header (8), the UDP header (8) and the outer IPv4 header (20). That leaves 1500 - 50 = 1450.
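The MTU arithmetic and the file format can be checked with a short Go sketch. `subnetEnv` is a hypothetical helper that only reproduces the output format shown above; it is not flannel's WriteSubnetFile. It assumes an IPv4 underlay with a 1500-byte host MTU.

```go
package main

import "fmt"

// Byte counts for an IPv4 VXLAN tunnel.
const (
	innerEthernet = 14 // inner Ethernet header, carried inside the tunnel
	vxlanHeader   = 8
	udpHeader     = 8
	outerIPv4     = 20
	vxlanOverhead = innerEthernet + vxlanHeader + udpHeader + outerIPv4 // 50
)

// subnetEnv renders a subnet.env in the format shown above, deriving the
// flannel MTU from the host NIC MTU.
func subnetEnv(network, subnet string, hostMTU int, ipMasq bool) string {
	return fmt.Sprintf("FLANNEL_NETWORK=%s\nFLANNEL_SUBNET=%s\nFLANNEL_MTU=%d\nFLANNEL_IPMASQ=%t\n",
		network, subnet, hostMTU-vxlanOverhead, ipMasq)
}

func main() {
	fmt.Print(subnetEnv("10.254.0.0/16", "10.254.44.1/24", 1500, true))
}
```

With a 1500-byte host MTU this reproduces the subnet.env above line for line, including FLANNEL_MTU=1450.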

After startup, flanneld keeps watching etcd. When a flannel node joins or changes, the other nodes update their three tables (route, ARP and FDB) dynamically. The main processing happens in handleSubnetEvents:

```go
func (nw *network) handleSubnetEvents(batch []subnet.Event) {
	...
		switch event.Type {
		// A new subnet was added (a new host joined)
		case subnet.EventAdded:
			...
			// Update the routing table
			if err := netlink.RouteReplace(&directRoute); err != nil {
				log.Errorf("Error adding route to %v via %v: %v", sn, attrs.PublicIP, err)
				continue
			}

			// Add the ARP entry
			log.V(2).Infof("adding subnet: %s PublicIP: %s VtepMAC: %s", sn, attrs.PublicIP, net.HardwareAddr(vxlanAttrs.VtepMAC))
			if err := nw.dev.AddARP(neighbor{IP: sn.IP, MAC: net.HardwareAddr(vxlanAttrs.VtepMAC)}); err != nil {
				log.Error("AddARP failed: ", err)
				continue
			}

			// Add the FDB entry; roll back the ARP entry if this fails
			if err := nw.dev.AddFDB(neighbor{IP: attrs.PublicIP, MAC: net.HardwareAddr(vxlanAttrs.VtepMAC)}); err != nil {
				log.Error("AddFDB failed: ", err)
				if err := nw.dev.DelARP(neighbor{IP: event.Lease.Subnet.IP, MAC: net.HardwareAddr(vxlanAttrs.VtepMAC)}); err != nil {
					log.Error("DelARP failed: ", err)
				}
				continue
			}

		// A subnet was removed (a host left)
		case subnet.EventRemoved:
			// Delete the route
			if err := netlink.RouteDel(&directRoute); err != nil {
				log.Errorf("Error deleting route to %v via %v: %v", sn, attrs.PublicIP, err)
			} else {
				log.V(2).Infof("removing subnet: %s PublicIP: %s VtepMAC: %s", sn, attrs.PublicIP, net.HardwareAddr(vxlanAttrs.VtepMAC))

				// Delete the ARP entry
				if err := nw.dev.DelARP(neighbor{IP: sn.IP, MAC: net.HardwareAddr(vxlanAttrs.VtepMAC)}); err != nil {
					log.Error("DelARP failed: ", err)
				}

				// Delete the FDB entry
				if err := nw.dev.DelFDB(neighbor{IP: attrs.PublicIP, MAC: net.HardwareAddr(vxlanAttrs.VtepMAC)}); err != nil {
					log.Error("DelFDB failed: ", err)
				}

				if err := netlink.RouteDel(&vxlanRoute); err != nil {
					log.Errorf("failed to delete vxlanRoute (%s -> %s): %v", vxlanRoute.Dst, vxlanRoute.Gw, err)
				}
			}
		default:
			log.Error("internal error: unknown event type: ", int(event.Type))
		}
	}
}
```

In this way, every node notices when any host joins or leaves the flannel network and updates its local kernel forwarding tables accordingly.
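To see the add/remove bookkeeping without netlink, the following Go sketch applies the same three updates (route, ARP, FDB) to in-memory maps. The `Event` and `tables` types are hypothetical, flattened stand-ins for flannel's subnet events and the kernel tables. The onlink gateway is taken as the subnet's network address, matching the `ip route` and `ip neigh` output earlier. The ARP rollback on FDB failure from the real code is omitted.

```go
package main

import (
	"fmt"
	"net"
)

type EventType int

const (
	EventAdded EventType = iota
	EventRemoved
)

// Event is a hypothetical, flattened version of flannel's subnet event:
// a remote node's container subnet plus its host IP and VTEP MAC.
type Event struct {
	Type     EventType
	Subnet   string // e.g. "10.254.60.0/24"
	PublicIP string // e.g. "10.100.139.246"
	VtepMAC  string // e.g. "92:f3:c8:b2:6e:f0"
}

// tables holds in-memory stand-ins for the route, ARP and FDB tables.
type tables struct {
	routes map[string]string // subnet -> onlink gateway (subnet network address)
	arp    map[string]string // gateway IP -> VTEP MAC
	fdb    map[string]string // VTEP MAC -> remote host IP
}

func newTables() *tables {
	return &tables{routes: map[string]string{}, arp: map[string]string{}, fdb: map[string]string{}}
}

// handle applies a batch of events, mirroring the add/remove logic of
// handleSubnetEvents against maps instead of netlink.
func (t *tables) handle(batch []Event) {
	for _, ev := range batch {
		_, sn, err := net.ParseCIDR(ev.Subnet)
		if err != nil {
			fmt.Println("skipping bad subnet:", ev.Subnet)
			continue
		}
		gw := sn.IP.String()
		switch ev.Type {
		case EventAdded:
			t.routes[sn.String()] = gw
			t.arp[gw] = ev.VtepMAC
			t.fdb[ev.VtepMAC] = ev.PublicIP
		case EventRemoved:
			delete(t.routes, sn.String())
			delete(t.arp, gw)
			delete(t.fdb, ev.VtepMAC)
		default:
			fmt.Println("unknown event type:", int(ev.Type))
		}
	}
}

func main() {
	t := newTables()
	node := Event{Type: EventAdded, Subnet: "10.254.60.0/24", PublicIP: "10.100.139.246", VtepMAC: "92:f3:c8:b2:6e:f0"}
	t.handle([]Event{node})
	fmt.Println(t.routes, t.arp, t.fdb)
	node.Type = EventRemoved
	t.handle([]Event{node})
	fmt.Println(len(t.routes), len(t.arp), len(t.fdb)) // 0 0 0
}
```

Adding host-139.246 produces exactly the three entries seen in the earlier `ip route`, `ip neigh` and `bridge fdb` output, and removing it clears all three.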

Author: Chen Xiaoyu

Source: Yixin Institute of Technology