I have been around for more than 20 years. When I was a child, a birthday at KFC was the biggest treat I knew. Now that I've grown up, KFC has become part of my daily routine: if I'm hungry when I pass its door after work, I go in for a meal, and in the morning I stop by for a cup of coffee. It's fast, tasty, and filling, and there seems to be one everywhere. Now whenever I'm hungry, KFC is what I crave.

Environment

Python 3.6

PyCharm

requests

csv

General approach of a web crawler

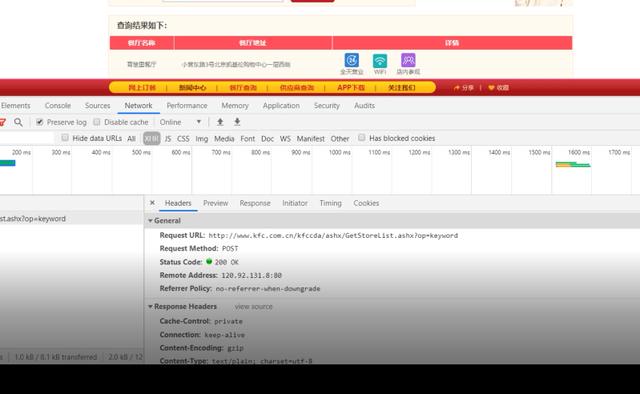

1. Determine the URL to crawl and the request headers

2. Send the request: use requests to simulate a browser, send the request, and get the response data

3. Parse data

4. Save data
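As a rough sketch (not the author's code), the four steps can be wired together as plain functions. `crawl`, `fetch`, `save`, and `fake_fetch` are hypothetical names; `fetch` stands in for the `requests.post(...).json()` call so the flow can be tried without network access, and the store record it returns is made up.

```python
def crawl(keyword, fetch, save):
    """Run the four crawler steps for one keyword; returns the number of rows saved."""
    # 1. Target URL and form parameters (values taken from step 1 of this article)
    url = 'http://www.kfc.com.cn/kfccda/ashx/GetStoreList.ashx?op=keyword'
    form = {'cname': '', 'pid': '', 'keyword': keyword, 'pageIndex': '1', 'pageSize': '10'}
    # 2. Send the request; fetch() must return the decoded JSON as a dict
    json_data = fetch(url, form)
    # 3. Parse: keep only the fields of interest
    rows = [[s['storeName'], s['cityName'], s['addressDetail'], s['pro']]
            for s in json_data.get('Table1', [])]
    # 4. Save the rows
    save(rows)
    return len(rows)


# Offline stand-in for the HTTP call, returning an invented page with one store
def fake_fetch(url, form):
    return {'Table1': [{'storeName': 'Example', 'cityName': form['keyword'],
                        'addressDetail': 'No. 1 Example Road', 'pro': 'details'}]}


saved = []
print(crawl('Beijing', fake_fetch, saved.extend))  # 1
```

Against the real site, `fetch` could be `lambda url, form: requests.post(url, headers=headers, data=form, timeout=3).json()`.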

Steps

1. Determine the URL to crawl and the request headers

Start with the stores in Beijing:

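```python
base_url = 'http://www.kfc.com.cn/kfccda/ashx/GetStoreList.ashx?op=keyword'
headers = {
    'user-agent': 'Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 '
                  '(KHTML, like Gecko) Chrome/79.0.3945.117 Safari/537.36'
}
# Form fields sent with the POST request; pageIndex/pageSize control pagination
data = {
    'cname': '',
    'pid': '',
    'keyword': 'Beijing',  # the site is Chinese, so the keyword is probably expected in Chinese ('北京')
    'pageIndex': '1',
    'pageSize': '10',
}
```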
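Only `keyword` and `pageIndex` change from one request to the next, so a small helper can build this form for any city and page. `build_form` is a hypothetical name; the field names and the string values are copied from the dictionary above.

```python
def build_form(keyword, page_index=1, page_size=10):
    """Return the POST form for one page of store results (hypothetical helper)."""
    return {
        'cname': '',
        'pid': '',
        'keyword': keyword,
        # The form above sends numbers as strings, so convert them here
        'pageIndex': str(page_index),
        'pageSize': str(page_size),
    }


# Form for page 2 of the Beijing results
print(build_form('Beijing', page_index=2))
```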

2. Send the request: use requests to simulate a browser, send the request, and get the response data

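```python
import requests

response = requests.post(url=base_url, headers=headers, data=data)
json_data = response.json()  # the endpoint answers with JSON
# pprint.pprint(json_data)  # uncomment (after `import pprint`) to inspect the raw response
```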
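When a dict is passed as `data=`, requests sends it as an `application/x-www-form-urlencoded` body. The standard library's `urllib.parse.urlencode` produces the same kind of encoding, which is handy for comparing your request with the one shown in the browser's developer tools. A minimal sketch:

```python
from urllib.parse import urlencode

data = {'cname': '', 'pid': '', 'keyword': 'Beijing', 'pageIndex': '1', 'pageSize': '10'}

# Form encoding of the same kind requests applies to a dict passed as data=
body = urlencode(data)
print(body)  # cname=&pid=&keyword=Beijing&pageIndex=1&pageSize=10

# Non-ASCII keywords are percent-encoded as UTF-8
print(urlencode({'keyword': '北京'}))  # keyword=%E5%8C%97%E4%BA%AC
```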

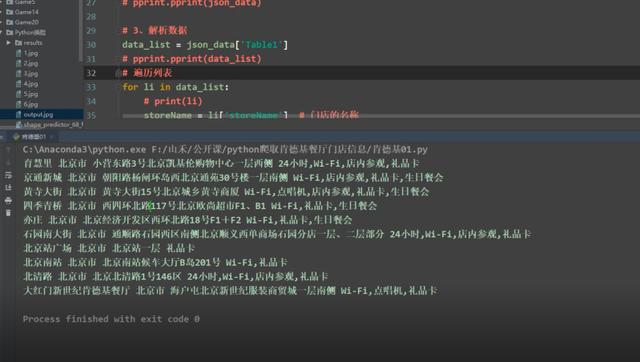

3. Parse data

```python
data_list = json_data['Table1']
# pprint.pprint(data_list)
# Loop over the stores and extract the fields we need
for ls in data_list:
    storeName = ls['storeName'] + 'Restaurant'  # restaurant name
    cityName = ls['cityName']  # city
    addressDetail = ls['addressDetail']  # street address
    pro = ls['pro']  # store details
    # print(storeName, cityName, addressDetail, pro)
```
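A slightly more defensive version of this parsing step collects the rows into a list and uses `dict.get`, so one missing field does not stop the whole crawl. `parse_stores` is a hypothetical helper, the field names come from the code above, and the sample record is invented for illustration.

```python
def parse_stores(json_data):
    """Turn one page of results into (name, city, address, details) tuples."""
    rows = []
    # 'Table1' holds the store records; an empty or unexpected page yields no rows
    for ls in json_data.get('Table1', []):
        rows.append((
            ls.get('storeName', ''),
            ls.get('cityName', ''),
            ls.get('addressDetail', ''),
            ls.get('pro', ''),
        ))
    return rows


# Invented sample shaped like the response used above
sample = {'Table1': [{'storeName': 'Example', 'cityName': 'Beijing',
                      'addressDetail': 'No. 1 Example Road', 'pro': 'details'}]}
print(parse_stores(sample))
```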

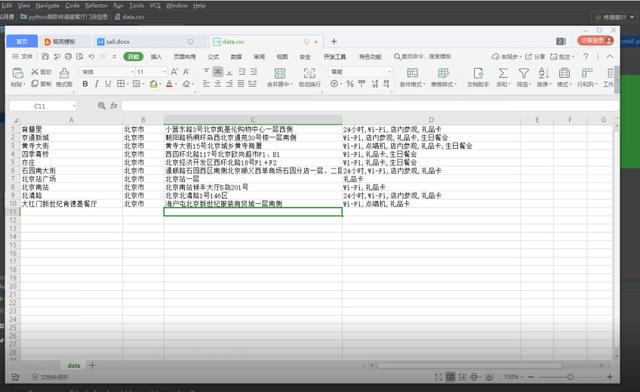

4. Save data

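```python
import csv

# Runs inside the `for ls in data_list:` loop from step 3
print('Crawling:', storeName)
# newline='' lets the csv module control line endings (no blank rows on Windows);
# encoding='utf-8' keeps Chinese store names intact on any OS
with open('data.csv', 'a', newline='', encoding='utf-8') as csvfile:
    csvwriter = csv.writer(csvfile, delimiter=',')  # delimiter=',' sets the CSV field separator
    csvwriter.writerow([storeName, cityName, addressDetail, pro])  # write one store as one row
```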
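Opening the file once and handing all rows to `csv.writer(...).writerows` avoids reopening `data.csv` for every store. A sketch under that assumption: `write_rows` is a hypothetical helper, and `io.StringIO` stands in for the real file so the output can be inspected.

```python
import csv
import io


def write_rows(rows, csvfile):
    """Write every row through one csv.writer (hypothetical helper)."""
    csvwriter = csv.writer(csvfile, delimiter=',')
    csvwriter.writerows(rows)


# With a real file, open it once per page or per run, in append mode:
# with open('data.csv', 'a', newline='', encoding='utf-8') as f:
#     write_rows(rows, f)

buf = io.StringIO()
write_rows([('Example', 'Beijing', 'No. 1, Example Road', 'details')], buf)
print(repr(buf.getvalue()))  # 'Example,Beijing,"No. 1, Example Road",details\r\n'
```

The csv module quotes any field that contains the delimiter, as the address above shows, so commas inside addresses do not shift the columns.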

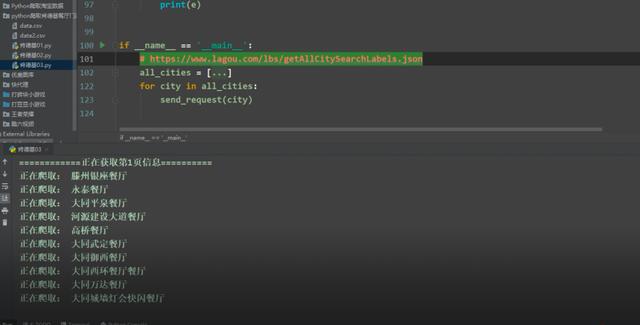

5. Crawl all 315 cities in China

Fetch the store data for the 315 cities listed on Lagou (lagou.com):
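In the English city list used in this step, several different Chinese cities share one spelling ('Fuzhou', 'Suzhou', 'Taizhou', and 'Yulin' each appear twice), so the same keyword would be queried twice and its stores written twice. A hypothetical helper that drops repeats while keeping the original order:

```python
def unique_keywords(cities):
    """Return the keywords with surrounding spaces stripped and repeats removed, in order."""
    # dict keys are unique and keep insertion order (guaranteed from Python 3.7;
    # on CPython 3.6 this holds as an implementation detail)
    return list(dict.fromkeys(name.strip() for name in cities))


print(unique_keywords(['Fuzhou', 'Fuyang', 'Fuzhou', 'Alxa League ']))
# ['Fuzhou', 'Fuyang', 'Alxa League']
```

If you switch back to the original Chinese names, the cities are distinct again and nothing is dropped.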

```python
# coding:utf-8
import csv
import random
import time

import requests

BASE_URL = 'http://www.kfc.com.cn/kfccda/ashx/GetStoreList.ashx?op=keyword'
HEADERS = {
    'user-agent': 'Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 '
                  '(KHTML, like Gecko) Chrome/79.0.3945.117 Safari/537.36'
}

# Free HTTP proxies collected when this article was written. Most are probably offline by now:
# replace them with working proxies, or remove the proxies= argument in send_request().
PROXY_ADDRS = [
    '1.199.31.213:9999', '182.46.197.33:9999', '58.18.133.101:56210', '175.44.108.123:9999',
    '123.52.97.90:9999', '182.92.233.137:8118', '223.242.225.42:9999', '113.194.28.84:9999',
    '113.194.30.115:9999', '113.195.19.41:9999', '144.123.69.123:9999', '27.192.168.202:9000',
    '163.204.244.179:9999', '112.84.53.197:9999', '117.69.13.69:9999', '1.197.203.214:9999',
    '125.108.111.22:9000', '171.35.169.69:9999', '171.15.173.234:9999', '171.13.103.52:9999',
    '183.166.97.201:9999', '60.2.44.182:44990', '58.253.158.21:9999', '47.94.89.87:3128',
    '60.13.42.235:9999', '60.216.101.46:32868', '117.90.137.91:9000', '123.169.164.163:9999',
    '123.169.162.230:9999', '125.108.119.189:9000', '163.204.246.68:9999', '223.100.166.3:36945',
    '113.195.18.134:9999', '163.204.245.50:9999', '125.108.79.50:9000', '163.125.220.205:8118',
    '1.198.73.246:9999', '175.44.109.51:9999', '121.232.194.47:9000', '113.194.30.27:9999',
    '129.28.183.30:8118', '123.169.165.73:9999', '120.83.99.190:9999', '175.42.128.48:9999',
    '123.101.212.223:9999', '60.190.250.120:8080', '125.94.44.129:1080', '118.112.195.91:9999',
    '110.243.5.163:9999', '118.89.91.108:8888', '125.122.199.13:9000', '171.11.28.248:9999',
    '211.152.33.24:39406', '59.62.35.130:9000', '123.163.96.124:9999',
]
# requests looks proxies up by the lower-case URL scheme, so the key must be 'http' ('HTTP' is ignored)
ip = [{'http': addr} for addr in PROXY_ADDRS]


def get_page(keyword):
    """Return how many result pages (10 stores each) exist for a keyword; 0 on failure."""
    data = {'cname': '', 'pid': '', 'keyword': keyword, 'pageIndex': '1', 'pageSize': '10'}
    try:
        response = requests.post(url=BASE_URL, headers=HEADERS, data=data, timeout=3)
        json_data = response.json()
        page = json_data['Table'][0]['rowcount']  # total number of matching stores
        # Round up: a partial last page still counts as a page
        if page % 10 > 0:
            page_num = page // 10 + 1
        else:
            page_num = page // 10
        return page_num
    except Exception as e:
        print(e)
        return 0  # lets send_request() skip this keyword instead of failing on range(None + 1)


def send_request(keyword):
    """Download every result page for one keyword and append the stores to data5.csv."""
    page_num = get_page(keyword)
    try:
        for page in range(1, page_num + 1):
            print('============ Getting page {} ============'.format(page))
            data = {'cname': '', 'pid': '', 'keyword': keyword,
                    'pageIndex': str(page), 'pageSize': '10'}
            response = requests.post(url=BASE_URL, headers=HEADERS, data=data,
                                     proxies=random.choice(ip), timeout=3)
            json_data = response.json()
            # pprint.pprint(json_data)
            time.sleep(0.4)
            # 3. Parse data
            data_list = json_data['Table1']
            # 4. Save data: open the file once per page instead of once per store
            with open('data5.csv', 'a', newline='', encoding='utf-8') as csvfile:
                csvwriter = csv.writer(csvfile, delimiter=',')  # delimiter=',' sets the CSV separator
                for ls in data_list:
                    storeName = ls['storeName'] + 'Restaurant'  # restaurant name
                    cityName = ls['cityName']  # city
                    addressDetail = ls['addressDetail']  # street address
                    pro = ls['pro']  # store details
                    print('Crawling:', storeName)
                    csvwriter.writerow([storeName, cityName, addressDetail, pro])
            time.sleep(0.2)
    except Exception as e:
        print(e)


if __name__ == '__main__':
    # City list from https://www.lagou.com/lbs/getAllCitySearchLabels.json
    # These are English renderings of the Chinese city names. The KFC site is Chinese, so the
    # endpoint most likely needs the original Chinese keywords; a few entries ('doy', 'Nao',
    # 'ynz', 'CiH', ...) are garbled translations that probably match nothing.
    all_cities = [
        'Anyang', 'Anqing', 'Anshan', 'Macao SAR', 'Anshun', 'Altay', 'Ankang', 'Aksu',
        'Aba Tibetan and Qiang Autonomous Prefecture', 'Alxa League',
        'Beijing', 'Baoding', 'Bengbu', 'Binzhou', 'Baotou', 'Baoji', 'Beihai', 'Bozhou', 'Baise',
        'Bijie', 'Bazhong', 'Benxi', 'Bayingolin', 'Bayannur', 'Bortala', 'Baoshan', 'Baicheng',
        'Baishan', 'Chengdu', 'Changsha', 'Chongqing', 'Changchun', 'Changzhou', 'Cangzhou',
        'Chifeng', 'Chenzhou', 'Chaozhou', 'Changde', 'Chaoyang', 'Chizhou', 'Chuzhou', 'Chengde',
        'Changji', 'Chuxiong', 'Chongzuo', 'Dongguan', 'Dalian', 'Dezhou', 'Deyang', 'Daqing',
        'doy', 'Datong', 'Dazhou', 'Dali', 'Dehong', 'Dandong', 'Dingxi', 'Danzhou', 'Diqing',
        'Ezhou', 'Enshi', 'Ordos', 'Foshan', 'Fuzhou', 'Fuyang', 'Fuzhou', 'Fushun', 'Fuxin',
        'Fangchenggang', 'Guangzhou', 'Guiyang', 'Guilin', 'Ganzhou', 'Guangyuan', 'Guigang',
        "Guang'an", 'Guyuan', 'Ganzi Tibetan Autonomous Prefecture', 'Hangzhou', 'Hefei',
        'Huizhou', 'Harbin', 'Haikou', 'Hohhot', 'Handan', 'city in Hunan', 'Huzhou', 'Huaian',
        'overseas', 'Heze', 'Hengshui', 'Heyuan', 'Huaihua', 'Huanggang', 'Huangshi', 'Huangshan',
        'Huaibei', 'Huainan', 'Huludao', 'Hulun Buir', 'Hanzhong', 'Honghe', 'Hezhou', 'Hechi',
        'Hebi', 'Hegang', 'Haidong', 'Hami', "Ji'nan", 'Jinhua', 'Jiaxing', 'Jining', 'Jiangmen',
        'Jinzhong', 'Jilin', 'Jiujiang', 'Jieyang', 'Jiaozuo', 'Jingzhou', 'Jinzhou', 'Jingmen',
        "Ji'an", 'Jingdezhen', 'Jincheng', 'Jiamusi', 'Jiuquan', 'Jiyuan', 'Kunming', 'Kaifeng',
        'Karamay', 'Kashgar', 'Lanzhou', 'Linyi', 'Langfang', 'Luoyang', 'city in Guangxi',
        "Lu'an", 'Liaocheng', 'Lianyungang', 'Lvliang', 'Luzhou', 'Lhasa', 'Lishui', 'Leshan',
        'Longyan', 'Linfen', 'Luohe', 'Liupanshui', 'Liangshan Yi Autonomous Prefecture',
        'Lijiang', 'Loudi', 'Laiwu', 'Liaoyuan', 'Longnan', 'Linxia', 'Laibin', 'Mianyang',
        'Maoming', "Ma'anshan", 'Meizhou', 'Mudanjiang', 'Meishan', 'Nanjing', 'Ningbo',
        'Nanchang', 'Nanning', 'Nantong', 'Nanyang', 'Nao', 'Ningde', 'Nanping', 'Neijiang',
        'Putian', 'Puyang', 'Pingxiang', 'Pingdingshan', 'Panjin', 'Panzhihua', 'Pingliang',
        "Pu'er", 'Qingdao', 'Quanzhou', 'Qingyuan', 'Qinhuangdao', 'Qujing', 'Quzhou', 'Qiqihar',
        'Southwest Guizhou', 'Qiannan', 'Qinzhou', 'Qiandongnan', 'Qingyang', 'Qitaihe', 'Rizhao',
        'Shenzhen', 'Shanghai', 'Suzhou', 'Shenyang', 'Shijiazhuang', 'Shaoxing', 'Shantou',
        'Suqian', 'Shangqiu', 'Sanya', 'Shangrao', 'Suzhou', 'Shaoyang', 'Shiyan', 'Suining',
        'Shaoguan', 'Sanmenxia', 'Shanwei', 'Suizhou', 'Sansha', 'Sanming', 'Suihua', 'Shizuishan',
        'Siping', 'Shuozhou', 'Shangluo', 'Songyuan', 'Tianjin', 'Taiyuan', 'Tangshan', 'Taizhou',
        "Tai'an", 'Taizhou', 'Tianshui', 'Tongliao', 'Tongling', 'Taiwan', 'Tongren', 'Tongchuan',
        'Tieling', 'Tuscaloosa', 'Tianmen', 'make well-connected', 'Wuhan', 'Wuxi', 'Wenzhou',
        'Weifang', 'Urumqi', 'Wuhu', 'Weihai', 'Wuzhou', 'Weinan', 'Wuzhong', 'Ulanchab',
        'Wenshan', 'Wuhai', "Xi'an", 'Xiamen', 'Xuzhou', 'Xinxiang', 'Xining', 'Xianyang',
        'Xuchang', 'Xingtai', 'Xiaogan', 'Xiangyang', 'Hong Kong Special Administrative Region',
        'Xiangtan', 'Xinyang', 'Xinzhou', 'Xianning', 'Xuancheng', 'Xishuangbanna',
        'Xiangxi Tujia and Miao Autonomous Prefecture', 'Xinyu', "Xing'an League", 'Yantai',
        'Yangzhou', 'Yinchuan', 'ynz', 'Yichun', 'Yueyang', 'Yichang', 'Yangjiang', 'Yuxi',
        'Yulin', 'Yiyang', 'Yuncheng', 'Yibin', 'Yulin', 'Yunfu', 'Yingkou', 'Yongzhou', "Yan'an",
        'Yingtan', 'Ili', 'Yanbian', 'Yangquan', "Ya'an", 'Zhengzhou', 'Zhuhai', 'Zhongshan',
        'Zhuzhou', 'Zibo', 'Zunyi', 'Zhanjiang', 'Zhaoqing', 'Zhenjiang', 'Zhangjiakou', 'Zhoukou',
        'Zhumadian', 'Zhangzhou', 'Zaozhuang', 'CiH', 'Zhaotong', 'Zhoushan', 'Ziyang', 'Zhangye',
        'Zigong', 'Zhongwei', 'Zhangjiajie',
    ]
    for city in all_cities:
        send_request(city)
```
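Two small pieces of the script can be factored out and checked on their own: the page count is a ceiling division of `rowcount` by the page size, and proxy dictionaries need lower-case scheme keys, because requests looks proxies up by the URL's scheme (`'http'`). `page_count` and `normalize_proxies` are hypothetical helper names, sketched under those assumptions:

```python
import random


def page_count(rowcount, page_size=10):
    """Pages needed to hold `rowcount` stores: ceiling division without floats."""
    return -(-rowcount // page_size)


def normalize_proxies(pool):
    """Lower-case each proxy's scheme key so requests can match it, e.g. 'HTTP' -> 'http'."""
    return [{scheme.lower(): addr for scheme, addr in entry.items()} for entry in pool]


print(page_count(25))  # 3
pool = normalize_proxies([{'HTTP': '1.199.31.213:9999'}, {'HTTP': '182.46.197.33:9999'}])
proxies = random.choice(pool)  # e.g. pass to requests.post(..., proxies=proxies)
```

`-(-n // size)` gives the same result as the `if page % 10 > 0` branch in `get_page`, in a single expression.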
