Best Practices for Testing a Production Oracle RAC Cluster (Post-Installation Test Process for Oracle 11g/12c/18c/19c RAC)

1. Oracle RAC cluster test background

A large manufacturing company has built a new ERP business system. The database for this system runs on an Oracle RAC architecture (RHEL 7 + Oracle 11gR2 RAC), and the deployment was completed according to the build plan provided by Fengge.

Before the RAC cluster database of this ERP system goes live, we need to run a set of functional tests on the RAC cluster. This test method applies to Oracle 11g/12c as well as Oracle 18c/19c. The QQ group for technical discussion of this article is 189070296.

2. Introduction to Oracle RAC clusters

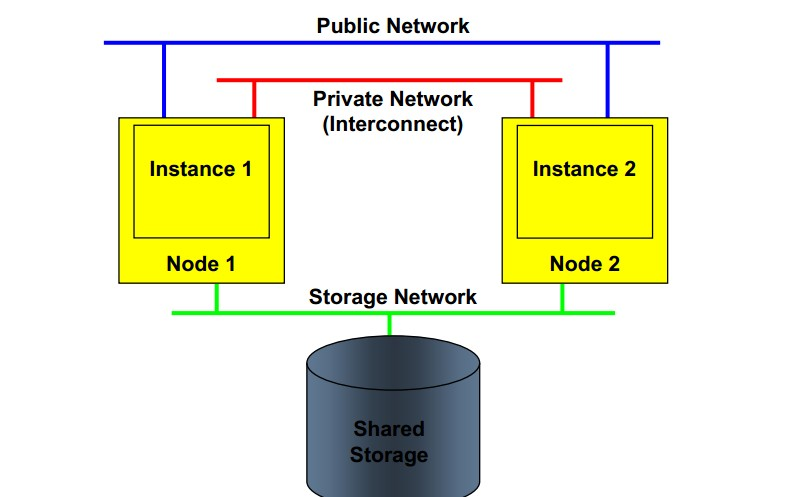

Before testing, Fengge will briefly introduce Oracle RAC. Oracle Real Application Clusters (RAC) is the clustering technology of Oracle 9i and later; before Oracle 9i it was called OPS (Oracle Parallel Server). Oracle RAC is available in Oracle 9i, 10g, 11g and 12c (including 18c and 19c). In an Oracle RAC environment, Oracle provides both the cluster software (CRS/Grid Infrastructure) and the storage management software (ASM, Automatic Storage Management), and multiple nodes share a single database.

The Oracle RAC architecture is as follows:

In the physical architecture of Oracle RAC, the hardware mainly consists of the following parts:

Servers; shared storage devices (storage array, fibre channel switches, HBA cards, fibre-optic cables); network devices (network switches, fibre channel switches, network cables).

1) Server

These servers are called "database servers" or "database hosts"; in RAC terms, each one is also called a "node". All nodes should have the same configuration (CPU, memory, etc.).

2) Network equipment

Each server has at least two physical network cards: one for private communication between the hosts and one for external public communication. If more network cards are available, they can be bonded to provide NIC redundancy.

The network card used for private communication is called the private NIC, and its IP address is the private IP.

The network card used for public communication is called the public NIC, and its IP address is the public IP.

3) Shared storage device

Shared storage is the core of the RAC architecture.

Each server needs at least one HBA (or two, for redundancy) for its shared storage connections.

The storage can be connected directly with fibre-optic cables or through a fibre-optic switch; a fibre-optic switch is recommended.

RAC is a typical "multi-instance, single-database" architecture: one database is shared and accessed by all nodes in parallel.

Data files, control files, parameter files, online redo log files, and even archived log files are all placed on shared storage so that every node can access them at the same time. Because the I/O performance requirements are relatively high, the storage is generally connected over fibre-optic cable.

In addition, Oracle RAC provides two cluster capabilities: HA (high availability) and LB (load balancing).

1) Failover:

If any node in the cluster fails, users are not affected: sessions connected to the failed node are automatically moved to a healthy node without the user noticing.

2) Load balancing:

The workload is distributed evenly across the nodes of the cluster to improve overall throughput.
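To make the two capabilities concrete, here is a toy model, not Oracle's real routing algorithm (server-side load balancing in RAC routes connections using load information that instances register with the listeners). It assumes hypothetical node names `node1` and `node2`: new connections rotate across healthy nodes (load balancing), and a failed node is simply skipped so its share moves to the survivors (failover).

```python
from itertools import cycle


def route_connections(nodes, down, n_connections):
    """Toy model of RAC connection placement (NOT Oracle's actual algorithm).

    nodes:         ordered list of node names, e.g. ["node1", "node2"] (hypothetical)
    down:          set of node names that have failed
    n_connections: number of new connections to place

    Load balancing: connections rotate across all healthy nodes.
    Failover: a failed node is skipped, so its share moves to the survivors.
    """
    healthy = [n for n in nodes if n not in down]
    if not healthy:
        raise RuntimeError("no healthy node left in the cluster")
    rotation = cycle(healthy)
    return [next(rotation) for _ in range(n_connections)]


if __name__ == "__main__":
    print(route_connections(["node1", "node2"], set(), 4))
    # ['node1', 'node2', 'node1', 'node2']
    print(route_connections(["node1", "node2"], {"node1"}, 3))
    # ['node2', 'node2', 'node2']
```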

3. Oracle RAC cluster functional tests

| No. | Test item | Test method | Expected result | Test result |
|---|---|---|---|---|
| 1 | Check the database version and patch level | `select * from v$version;` | Expected Oracle version (11g/12c/18c/19c) | Normal or not: |
| 2 | Database startup and shutdown | `startup`<br>`shutdown immediate` | Database starts and shuts down normally | Normal or not: |
| 3 | Logical backup | `exp`, `expdp` | Export succeeds | Normal or not: |
| 4 | Character set | `select name,value$ from props$ where name like '%CHARACTERSET%';` | ZHS16GBK, UTF8 | Normal or not: |
| 5 | Create / drop tablespace | `create tablespace fgedudata01 datafile '+fgedudata1' size 10m autoextend off;`<br>`drop tablespace fgedudata01 including contents and datafiles;` | Created successfully; dropped successfully | Normal or not: |
| 6 | Create / drop user | `create user fgedu identified by test default tablespace fgedudata01 temporary tablespace temp;`<br>`drop user fgedu cascade;` | Created successfully; dropped successfully | Normal or not: |
| 7 | Create / drop table | `create table fgedu.itpux (name varchar2(10), id number);`<br>`drop table fgedu.itpux;` | Created successfully; dropped successfully | Normal or not: |
| 8 | Insert / delete data | `insert into fgedu.itpux values ('itpux01', 1);`<br>`commit;`<br>`delete from fgedu.itpux;`<br>`commit;` | Insert succeeds; delete succeeds | Normal or not: |
| 9 | Client connection to the database | `sqlplus "sys/oracle@itpuxdb as sysdba"` | Connection succeeds | Normal or not: |
| 10 | Enable archive log mode | `alter system set db_recovery_file_dest_size=200G scope=spfile;`<br>`alter system set db_recovery_file_dest='+dgrecover' scope=spfile;`<br>`srvctl stop database -d fgerpdb`<br>`sqlplus "/ as sysdba"`<br>`startup mount;`<br>`alter database archivelog;`<br>`shutdown immediate`<br>`srvctl start database -d fgerpdb` | Database is in ARCHIVELOG mode | Normal or not: |
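Test item 10 is the only multi-step procedure in the table, and the order matters. The sketch below writes it out as an ordered runbook, assuming the database name `fgerpdb`, the disk group `+dgrecover` and the 200G size from the table; the helper name `archivelog_steps` is hypothetical. It sets the recovery area size before the destination (the order Oracle documents), mounts only one instance, and finishes with a hedged verification step using `archive log list`.

```python
def archivelog_steps(db_unique_name, recovery_dest, recovery_size):
    """Return (tool, command) pairs for enabling ARCHIVELOG mode on a RAC database.

    Hypothetical helper; names come from the caller, e.g. fgerpdb / +dgrecover.
    The recovery area size is set before the destination. Only one instance
    is mounted; srvctl restarts all instances at the end.
    """
    return [
        ("sqlplus", f"alter system set db_recovery_file_dest_size={recovery_size} scope=spfile;"),
        ("sqlplus", f"alter system set db_recovery_file_dest='{recovery_dest}' scope=spfile;"),
        ("shell", f"srvctl stop database -d {db_unique_name}"),  # stops all instances
        ("shell", 'sqlplus "/ as sysdba"'),                      # on one node only
        ("sqlplus", "startup mount;"),
        ("sqlplus", "alter database archivelog;"),
        ("sqlplus", "shutdown immediate"),
        ("shell", f"srvctl start database -d {db_unique_name}"),  # starts all instances
        ("shell", 'sqlplus "/ as sysdba"'),
        ("sqlplus", "archive log list"),                          # verify the log mode
    ]


if __name__ == "__main__":
    for tool, command in archivelog_steps("fgerpdb", "+dgrecover", "200G"):
        print(f"[{tool:7}] {command}")
```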

4. Oracle RAC cluster load and failover tests

| No | OracleRAC test content | OracleRAC test method | OracleRAC correct results | OracleRAC test results |

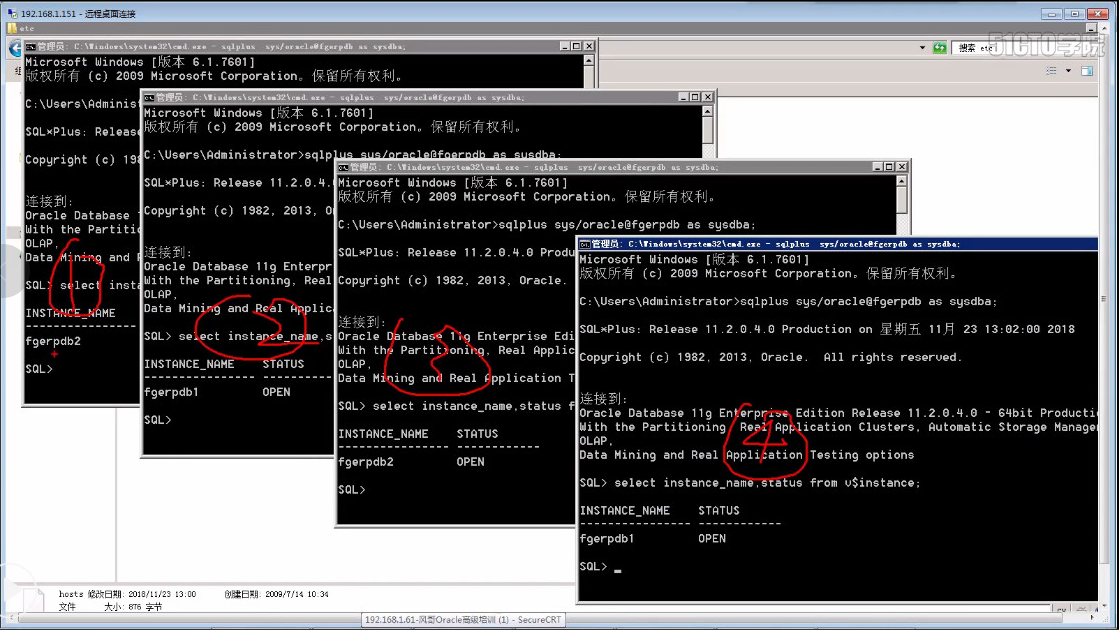

| 1 | Client connection database (RAC mode) | sqlplus "sys/itpux123@itpuxdb as sysdba"; | The connection is successful, and each connection may be distributed to different instances | Normal or not: |

| select instance_name from v$instance; | ||||

| 2 | CRS normal startup and shutdown | crsctl start crs | Can start and shut down normally | Normal or not: |

| crsctl stop crs | ||||

| 3 | Lost network connection (public network) | Unplug the network cable of node i public network card | The instance of this node is normal. vip drifts to node 2, listener,ons,network service offline. The connection originally connected to node 1 is automatically connected to node 2 | Normal or not: |

| 4 | Network connection recovery (public network) | Plug back the network cable of node i public network card | vip drifts back to node 1, listener,ons,network service and automatically onlien, crs RESOURCES return to normal | Normal or not: |

| 5 | Lost network connection (private network) | Unplug the network cable of node i private network card | After node 2 is restarted, the crs resource is offline and vip drifts to node 1. The connection originally connected to node 2 is automatically connected to node 1 | Normal or not: |

| 6 | Network connection recovery (private network) | Plug back the network cable of node i private network card, and use crsctl start crs to start crs | The vip of node 2 drifts back to node 2, and the crs resource of node 2 returns to normal | Normal or not: |

| 7 | Lost network connection (public network) | Unplug the network cable of node 2 public network card | The instance of this node is normal. vip drifts to node 1, listener,ons,network service offline. The connection originally connected to node 2 is automatically connected to node 1 | Normal or not: |

| 8 | Network connection recovery (public network) | Plug back the network cable of node 2 public network card | vip drifts back to node 2. listener,ons,network services automatically restore online and crs resources to normal | Normal or not: |

| 9 | Lost network connection (private network) | Unplug the network cable of private network card of node 2 | After node 2 is restarted, the crs resource is offline and vip drifts to node 1. The connection originally connected to node 2 is automatically connected to node 1 | Normal or not: |

| 10 | Network connection recovery (private network) | Plug back the network cable of private network card of node 2, and use crsctl start crs to start crs | The vip of node 2 drifts back to node 2, and the crs resource of node 2 returns to normal | Normal or not: |

| 11 | load balancing | Open multiple database connections | Multiple connections should be distributed between two nodes | Normal or not: |

| 12 | Transparent failover | Using RAC to connect to database | Connection is not interrupted, query continues and automatically switches to another instance | Normal or not: |

| select instance_name from v$instance; | ||||

| After closing the current instance | ||||

| select instance_name from v$instance; | ||||

| 13 | Normal maintenance, normal shutdown node 1 | Crsctl stop crs | Scan vip,vip drifts to node 2, and the connection originally connected to node 1 is automatically connected to node 2 | Normal or not: |

| 14 | Normal maintenance, normal shutdown node 2 | Crsctl stop crs | vip drifts to node 1, and the connection originally connected to node 2 is automatically connected to node 1 | Normal or not: |
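Items 5 and 9 expect node 2 to restart no matter which node's private cable is pulled. The reasoning, sketched below under the assumption of a two-node 11gR2 cluster, is that losing the interconnect splits the cluster into two equal halves, and Oracle Clusterware then keeps the half that contains the lowest node number (later releases can weigh the halves differently). The function name is hypothetical; it only encodes that rule so the expected outcome can be checked before pulling cables.

```python
def expected_after_interconnect_loss(node_numbers):
    """Predict which node is evicted when the private interconnect breaks.

    Assumption: a two-node 11gR2 cluster, where two equal-size sub-clusters
    are resolved in favour of the one holding the lowest node number.
    Which cable was unplugged does not matter: both nodes stop receiving
    each other's network heartbeats.
    """
    if len(node_numbers) != 2:
        raise ValueError("this sketch only models a two-node cluster")
    survivor, evicted = min(node_numbers), max(node_numbers)
    return {
        "survivor": survivor,                # keeps running
        "evicted": evicted,                  # restarted by the clusterware
        "vip_of_evicted_node_on": survivor,  # VIP fails over to the survivor
    }


if __name__ == "__main__":
    print(expected_after_interconnect_loss([1, 2]))
    # {'survivor': 1, 'evicted': 2, 'vip_of_evicted_node_on': 1}
```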

For item 1 in the test list: client connection to the database (RAC load balancing test).

On the Oracle client, configure tnsnames.ora with the SCAN name (or its IP address), as shown below:

```
fgerpdb =
  (DESCRIPTION =
    (ADDRESS_LIST =
      (ADDRESS = (PROTOCOL = TCP)(HOST = www.fgedu.net.cn)(PORT = 1521))
    )
    (CONNECT_DATA =
      (SERVER = DEDICATED)
      (SERVICE_NAME = fgerpdb)
    )
  )
```
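To judge items 1 and 11, open several new connections through this alias, record the `select instance_name from v$instance;` result of each one, and tally them. The sketch below assumes the results were collected into a list; the instance names `fgerpdb1` and `fgerpdb2` are hypothetical. A lopsided split over a handful of connections is not automatically a failure, since server-side balancing routes by instance load, but an instance that never receives a connection is worth investigating.

```python
from collections import Counter


def summarize_distribution(instance_names, expected_instances):
    """Count how many test connections landed on each instance.

    instance_names:     values returned by "select instance_name from v$instance;"
                        for each new connection (collected by hand or by a script)
    expected_instances: instances that should receive connections
                        (hypothetical names such as fgerpdb1, fgerpdb2)
    Returns (counts, missing); missing lists instances that got no connection.
    """
    counts = Counter(instance_names)
    missing = [name for name in expected_instances if counts[name] == 0]
    return counts, missing


if __name__ == "__main__":
    observed = ["fgerpdb1", "fgerpdb2", "fgerpdb1", "fgerpdb2", "fgerpdb2"]
    counts, missing = summarize_distribution(observed, ["fgerpdb1", "fgerpdb2"])
    print(dict(counts))         # {'fgerpdb1': 2, 'fgerpdb2': 3}
    print("missing:", missing)  # missing: []
```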

The final Oracle RAC test results are as follows:

For item 12 in the test list: client connection to the database (RAC transparent application failover test).

Configure the client tnsnames.ora as follows:

```
fgerpdbtaf =
  (DESCRIPTION =
    (ADDRESS = (PROTOCOL = TCP)(HOST = www.fgedu.net.cn)(PORT = 1521))
    (LOAD_BALANCE = YES)
    (CONNECT_DATA =
      (SERVER = DEDICATED)
      (SERVICE_NAME = fgerpdb)
      (FAILOVER_MODE =
        (TYPE = SELECT)(METHOD = BASIC)(RETRIES = 180)(DELAY = 5)
      )
    )
  )
```

Use `tnsping fgerpdbtaf` to test connectivity.

Once the connection succeeds, test transparent failover as described in item 12.
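The `RETRIES` and `DELAY` values in `FAILOVER_MODE` determine how long a TAF client keeps trying to reconnect: with `RETRIES = 180` and `DELAY = 5`, that is roughly 180 × 5 = 900 seconds, or 15 minutes, which should comfortably cover an instance shutdown during item 12. The sketch below shows that arithmetic and rebuilds the same one-line entry from parameters; the helper names are hypothetical and the host, port and service come from the entry above.

```python
def taf_retry_window_seconds(retries, delay):
    """Approximate time TAF keeps trying to reconnect: RETRIES attempts, DELAY seconds apart."""
    return retries * delay


def taf_entry(alias, host, port, service, retries=180, delay=5):
    """Build a one-line tnsnames.ora TAF entry with the same layout as fgerpdbtaf.

    Hypothetical helper for generating test aliases; parentheses are balanced.
    """
    return (
        f"{alias} = (DESCRIPTION = (ADDRESS = (PROTOCOL = TCP)(HOST = {host})(PORT = {port}))"
        f" (LOAD_BALANCE = YES) (CONNECT_DATA = (SERVER = DEDICATED) (SERVICE_NAME = {service})"
        f" (FAILOVER_MODE = (TYPE = SELECT)(METHOD = BASIC)(RETRIES = {retries})(DELAY = {delay}))))"
    )


if __name__ == "__main__":
    print(taf_retry_window_seconds(180, 5))  # 900 (seconds, i.e. 15 minutes)
    print(taf_entry("fgerpdbtaf", "www.fgedu.net.cn", 1521, "fgerpdb"))
```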

5. Oracle RAC cluster maintenance commands

5.1 Common Oracle RAC command-line tools

Fengge recommends becoming familiar with the following common commands, which you will use frequently in daily work.

```
$ srvctl -h
Usage: srvctl [-V]
Usage: srvctl add database -d <db_unique_name> -o <oracle_home> [-m <domain_name>] [-p <spfile>] [-r {PRIMARY | PHYSICAL_STANDBY | LOGICAL_STANDBY | SNAPSHOT_STANDBY}] [-s <start_options>] [-t <stop_options>] [-n <db_name>] [-y {AUTOMATIC | MANUAL}] [-g "<serverpool_list>"] [-x <node_name>] [-a "<diskgroup_list>"]
Usage: srvctl config database [-d <db_unique_name> [-a] ]
Usage: srvctl start database -d <db_unique_name> [-o <start_options>]
Usage: srvctl stop database -d <db_unique_name> [-o <stop_options>] [-f]
Usage: srvctl status database -d <db_unique_name> [-f] [-v]
Usage: srvctl enable database -d <db_unique_name> [-n <node_name>]
Usage: srvctl disable database -d <db_unique_name> [-n <node_name>]
Usage: srvctl modify database -d <db_unique_name> [-n <db_name>] [-o <oracle_home>] [-u <oracle_user>] [-m <domain>] [-p <spfile>] [-r {PRIMARY | PHYSICAL_STANDBY | LOGICAL_STANDBY | SNAPSHOT_STANDBY}] [-s <start_options>] [-t <stop_options>] [-y {AUTOMATIC | MANUAL}] [-g "<serverpool_list>" [-x <node_name>]] [-a "<diskgroup_list>"|-z]
Usage: srvctl remove database -d <db_unique_name> [-f] [-y]
Usage: srvctl getenv database -d <db_unique_name> [-t "<name_list>"]
Usage: srvctl setenv database -d <db_unique_name> {-t <name>=<val>[,<name>=<val>,...] | -T <name>=<val>}
Usage: srvctl unsetenv database -d <db_unique_name> -t "<name_list>"
Usage: srvctl add instance -d <db_unique_name> -i <inst_name> -n <node_name> [-f]
Usage: srvctl start instance -d <db_unique_name> {-n <node_name> [-i <inst_name>] | -i <inst_name_list>} [-o <start_options>]
Usage: srvctl stop instance -d <db_unique_name> {-n <node_name> | -i <inst_name_list>} [-o <stop_options>] [-f]
Usage: srvctl status instance -d <db_unique_name> {-n <node_name> | -i <inst_name_list>} [-f] [-v]
Usage: srvctl enable instance -d <db_unique_name> -i "<inst_name_list>"
Usage: srvctl disable instance -d <db_unique_name> -i "<inst_name_list>"
Usage: srvctl modify instance -d <db_unique_name> -i <inst_name> { -n <node_name> | -z }
Usage: srvctl remove instance -d <db_unique_name> [-i <inst_name>] [-f] [-y]
Usage: srvctl add service -d <db_unique_name> -s <service_name> {-r "<preferred_list>" [-a "<available_list>"] [-P {BASIC | NONE | PRECONNECT}] | -g <server_pool> [-c {UNIFORM | SINGLETON}] } [-k <net_num>] [-l [PRIMARY][,PHYSICAL_STANDBY][,LOGICAL_STANDBY][,SNAPSHOT_STANDBY]] [-y {AUTOMATIC | MANUAL}] [-q {TRUE|FALSE}] [-x {TRUE|FALSE}] [-j {SHORT|LONG}] [-B {NONE|SERVICE_TIME|THROUGHPUT}] [-e {NONE|SESSION|SELECT}] [-m {NONE|BASIC}] [-z <failover_retries>] [-w <failover_delay>]
Usage: srvctl add service -d <db_unique_name> -s <service_name> -u {-r "<new_pref_inst>" | -a "<new_avail_inst>"}
Usage: srvctl config service -d <db_unique_name> [-s <service_name>] [-a]
Usage: srvctl enable service -d <db_unique_name> -s "<service_name_list>" [-i <inst_name> | -n <node_name>]
Usage: srvctl disable service -d <db_unique_name> -s "<service_name_list>" [-i <inst_name> | -n <node_name>]
Usage: srvctl status service -d <db_unique_name> [-s "<service_name_list>"] [-f] [-v]
Usage: srvctl modify service -d <db_unique_name> -s <service_name> -i <old_inst_name> -t <new_inst_name> [-f]
Usage: srvctl modify service -d <db_unique_name> -s <service_name> -i <avail_inst_name> -r [-f]
Usage: srvctl modify service -d <db_unique_name> -s <service_name> -n -i "<preferred_list>" [-a "<available_list>"] [-f]
Usage: srvctl modify service -d <db_unique_name> -s <service_name> [-c {UNIFORM | SINGLETON}] [-P {BASIC|PRECONNECT|NONE}] [-l [PRIMARY][,PHYSICAL_STANDBY][,LOGICAL_STANDBY][,SNAPSHOT_STANDBY]] [-y {AUTOMATIC | MANUAL}][-q {true|false}] [-x {true|false}] [-j {SHORT|LONG}] [-B {NONE|SERVICE_TIME|THROUGHPUT}] [-e {NONE|SESSION|SELECT}] [-m {NONE|BASIC}] [-z <integer>] [-w <integer>]
Usage: srvctl relocate service -d <db_unique_name> -s <service_name> {-i <old_inst_name> -t <new_inst_name> | -c <current_node> -n <target_node>} [-f]
       Specify instances for an administrator-managed database, or nodes for a policy managed database
Usage: srvctl remove service -d <db_unique_name> -s <service_name> [-i <inst_name>] [-f]
Usage: srvctl start service -d <db_unique_name> [-s "<service_name_list>" [-n <node_name> | -i <inst_name>] ] [-o <start_options>]
Usage: srvctl stop service -d <db_unique_name> [-s "<service_name_list>" [-n <node_name> | -i <inst_name>] ] [-f]
Usage: srvctl add nodeapps { { -n <node_name> -A <name|ip>/<netmask>/[if1[|if2...]] } | { -S <subnet>/<netmask>/[if1[|if2...]] } } [-p <portnum>] [-m <multicast-ip-address>] [-e <eons-listen-port>] [-l <ons-local-port>] [-r <ons-remote-port>] [-t <host>[:<port>][,<host>[:<port>]...]] [-v]
Usage: srvctl config nodeapps [-a] [-g] [-s] [-e]
Usage: srvctl modify nodeapps {[-n <node_name> -A <new_vip_address>/<netmask>[/if1[|if2|...]]] | [-S <subnet>/<netmask>[/if1[|if2|...]]]} [-m <multicast-ip-address>] [-p <multicast-portnum>] [-e <eons-listen-port>] [ -l <ons-local-port> ] [-r <ons-remote-port> ] [-t <host>[:<port>][,<host>[:<port>]...]] [-v]
Usage: srvctl start nodeapps [-n <node_name>] [-v]
Usage: srvctl stop nodeapps [-n <node_name>] [-f] [-r] [-v]
Usage: srvctl status nodeapps
Usage: srvctl enable nodeapps [-v]
Usage: srvctl disable nodeapps [-v]
Usage: srvctl remove nodeapps [-f] [-y] [-v]
Usage: srvctl getenv nodeapps [-a] [-g] [-s] [-e] [-t "<name_list>"]
Usage: srvctl setenv nodeapps {-t "<name>=<val>[,<name>=<val>,...]" | -T "<name>=<val>"}
Usage: srvctl unsetenv nodeapps -t "<name_list>" [-v]
Usage: srvctl add vip -n <node_name> -k <network_number> -A <name|ip>/<netmask>/[if1[|if2...]] [-v]
Usage: srvctl config vip { -n <node_name> | -i <vip_name> }
Usage: srvctl disable vip -i <vip_name> [-v]
Usage: srvctl enable vip -i <vip_name> [-v]
Usage: srvctl remove vip -i "<vip_name_list>" [-f] [-y] [-v]
Usage: srvctl getenv vip -i <vip_name> [-t "<name_list>"]
Usage: srvctl start vip { -n <node_name> | -i <vip_name> } [-v]
Usage: srvctl stop vip { -n <node_name> | -i <vip_name> } [-f] [-r] [-v]
Usage: srvctl status vip { -n <node_name> | -i <vip_name> }
Usage: srvctl setenv vip -i <vip_name> {-t "<name>=<val>[,<name>=<val>,...]" | -T "<name>=<val>"}
Usage: srvctl unsetenv vip -i <vip_name> -t "<name_list>" [-v]
Usage: srvctl add asm [-l <lsnr_name>]
Usage: srvctl start asm [-n <node_name>] [-o <start_options>]
Usage: srvctl stop asm [-n <node_name>] [-o <stop_options>] [-f]
Usage: srvctl config asm [-a]
Usage: srvctl status asm [-n <node_name>] [-a]
Usage: srvctl enable asm [-n <node_name>]
Usage: srvctl disable asm [-n <node_name>]
Usage: srvctl modify asm [-l <lsnr_name>]
Usage: srvctl remove asm [-f]
Usage: srvctl getenv asm [-t <name>[, ...]]
Usage: srvctl setenv asm -t "<name>=<val> [,...]" | -T "<name>=<value>"
Usage: srvctl unsetenv asm -t "<name>[, ...]"
Usage: srvctl start diskgroup -g <dg_name> [-n "<node_list>"]
Usage: srvctl stop diskgroup -g <dg_name> [-n "<node_list>"] [-f]
Usage: srvctl status diskgroup -g <dg_name> [-n "<node_list>"] [-a]
Usage: srvctl enable diskgroup -g <dg_name> [-n "<node_list>"]
Usage: srvctl disable diskgroup -g <dg_name> [-n "<node_list>"]
Usage: srvctl remove diskgroup -g <dg_name> [-f]
Usage: srvctl add listener [-l <lsnr_name>] [-s] [-p "[TCP:]<port>[, ...][/IPC:<key>][/NMP:<pipe_name>][/TCPS:<s_port>] [/SDP:<port>]"] [-o <oracle_home>] [-k <net_num>]
Usage: srvctl config listener [-l <lsnr_name>] [-a]
Usage: srvctl start listener [-l <lsnr_name>] [-n <node_name>]
Usage: srvctl stop listener [-l <lsnr_name>] [-n <node_name>] [-f]
Usage: srvctl status listener [-l <lsnr_name>] [-n <node_name>]
Usage: srvctl enable listener [-l <lsnr_name>] [-n <node_name>]
Usage: srvctl disable listener [-l <lsnr_name>] [-n <node_name>]
Usage: srvctl modify listener [-l <lsnr_name>] [-o <oracle_home>] [-p "[TCP:]<port>[, ...][/IPC:<key>][/NMP:<pipe_name>][/TCPS:<s_port>] [/SDP:<port>]"] [-u <oracle_user>] [-k <net_num>]
Usage: srvctl remove listener [-l <lsnr_name> | -a] [-f]
Usage: srvctl getenv listener [-l <lsnr_name>] [-t <name>[, ...]]
Usage: srvctl setenv listener [-l <lsnr_name>] -t "<name>=<val> [,...]" | -T "<name>=<value>"
Usage: srvctl unsetenv listener [-l <lsnr_name>] -t "<name>[, ...]"
Usage: srvctl add scan -n <scan_name> [-k <network_number> [-S <subnet>/<netmask>[/if1[|if2|...]]]]
Usage: srvctl config scan [-i <ordinal_number>]
Usage: srvctl start scan [-i <ordinal_number>] [-n <node_name>]
Usage: srvctl stop scan [-i <ordinal_number>] [-f]
Usage: srvctl relocate scan -i <ordinal_number> [-n <node_name>]
Usage: srvctl status scan [-i <ordinal_number>]
Usage: srvctl enable scan [-i <ordinal_number>]
Usage: srvctl disable scan [-i <ordinal_number>]
Usage: srvctl modify scan -n <scan_name>
Usage: srvctl remove scan [-f] [-y]
Usage: srvctl add scan_listener [-l <lsnr_name_prefix>] [-s] [-p [TCP:]<port>[/IPC:<key>][/NMP:<pipe_name>][/TCPS:<s_port>] [/SDP:<port>]]
Usage: srvctl config scan_listener [-i <ordinal_number>]
Usage: srvctl start scan_listener [-n <node_name>] [-i <ordinal_number>]
Usage: srvctl stop scan_listener [-i <ordinal_number>] [-f]
Usage: srvctl relocate scan_listener -i <ordinal_number> [-n <node_name>]
Usage: srvctl status scan_listener [-i <ordinal_number>]
Usage: srvctl enable scan_listener [-i <ordinal_number>]
Usage: srvctl disable scan_listener [-i <ordinal_number>]
Usage: srvctl modify scan_listener {-u|-p [TCP:]<port>[/IPC:<key>][/NMP:<pipe_name>][/TCPS:<s_port>] [/SDP:<port>]}
Usage: srvctl remove scan_listener [-f] [-y]
Usage: srvctl add srvpool -g <pool_name> [-l <min>] [-u <max>] [-i <importance>] [-n "<server_list>"]
Usage: srvctl config srvpool [-g <pool_name>]
Usage: srvctl status srvpool [-g <pool_name>] [-a]
Usage: srvctl status server -n "<server_list>" [-a]
Usage: srvctl relocate server -n "<server_list>" -g <pool_name> [-f]
Usage: srvctl modify srvpool -g <pool_name> [-l <min>] [-u <max>] [-i <importance>] [-n "<server_list>"]
Usage: srvctl remove srvpool -g <pool_name>
Usage: srvctl add oc4j [-v]
Usage: srvctl config oc4j
Usage: srvctl start oc4j [-v]
Usage: srvctl stop oc4j [-f] [-v]
Usage: srvctl relocate oc4j [-n <node_name>] [-v]
Usage: srvctl status oc4j [-n <node_name>]
Usage: srvctl enable oc4j [-n <node_name>] [-v]
Usage: srvctl disable oc4j [-n <node_name>] [-v]
Usage: srvctl modify oc4j -p <oc4j_rmi_port> [-v]
Usage: srvctl remove oc4j [-f] [-v]
Usage: srvctl start home -o <oracle_home> -s <state_file> -n <node_name>
Usage: srvctl stop home -o <oracle_home> -s <state_file> -n <node_name> [-t <stop_options>] [-f]
Usage: srvctl status home -o <oracle_home> -s <state_file> -n <node_name>
Usage: srvctl add filesystem -d <volume_device> -v <volume_name> -g <dg_name> [-m <mountpoint_path>] [-u <user>]
Usage: srvctl config filesystem -d <volume_device>
Usage: srvctl start filesystem -d <volume_device> [-n <node_name>]
Usage: srvctl stop filesystem -d <volume_device> [-n <node_name>] [-f]
Usage: srvctl status filesystem -d <volume_device>
Usage: srvctl enable filesystem -d <volume_device>
Usage: srvctl disable filesystem -d <volume_device>
Usage: srvctl modify filesystem -d <volume_device> -u <user>
Usage: srvctl remove filesystem -d <volume_device> [-f]
Usage: srvctl start gns [-v] [-l <log_level>] [-n <node_name>]
Usage: srvctl stop gns [-v] [-n <node_name>] [-f]
Usage: srvctl config gns [-v] [-a] [-d] [-k] [-m] [-n <node_name>] [-p] [-s] [-V]
Usage: srvctl status gns -n <node_name>
Usage: srvctl enable gns [-v] [-n <node_name>]
Usage: srvctl disable gns [-v] [-n <node_name>]
Usage: srvctl relocate gns [-v] [-n <node_name>] [-f]
Usage: srvctl add gns [-v] -d <domain> -i <vip_name|ip> [-k <network_number> [-S <subnet>/<netmask>[/<interface>]]]
srvctl modify gns [-v] [-f] [-l <log_level>] [-d <domain>] [-i <ip_address>] [-N <name> -A <address>] [-D <name> -A <address>] [-c <name> -a <alias>] [-u <alias>] [-r <address>] [-V <name>] [-F <forwarded_domains>] [-R <refused_domains>] [-X <excluded_interfaces>]
Usage: srvctl remove gns [-f] [-d <domain_name>]

$ ./crsctl -h
Usage:
  crsctl add       - add a resource, type or other entity
  crsctl check     - check a service, resource or other entity
  crsctl config    - output autostart configuration
  crsctl debug     - obtain or modify debug state
  crsctl delete    - delete a resource, type or other entity
  crsctl disable   - disable autostart
  crsctl enable    - enable autostart
  crsctl get       - get an entity value
  crsctl getperm   - get entity permissions
  crsctl lsmodules - list debug modules
  crsctl modify    - modify a resource, type or other entity
  crsctl query     - query service state
  crsctl pin       - Pin the nodes in the nodelist
  crsctl relocate  - relocate a resource, server or other entity
  crsctl replace   - replaces the location of voting files
  crsctl setperm   - set entity permissions
  crsctl set       - set an entity value
  crsctl start     - start a resource, server or other entity
  crsctl status    - get status of a resource or other entity
  crsctl stop      - stop a resource, server or other entity
  crsctl unpin     - unpin the nodes in the nodelist
  crsctl unset     - unset a entity value, restoring its default
```
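After each cable-pull test it helps to confirm which resources did not come back. The sketch below parses the output of `crsctl status resource` (without `-t`), assuming the 11gR2 layout of `KEY=VALUE` lines per resource with blank lines between resources; verify that layout on your release before relying on it. It flags resources whose `TARGET` includes ONLINE but whose `STATE` still shows OFFLINE, UNKNOWN or INTERMEDIATE, so resources that are intentionally offline (`TARGET=OFFLINE`) are ignored. The function names are hypothetical, and the `__main__` part assumes `crsctl` is on the `PATH`.

```python
import subprocess


def parse_crsctl_status(text):
    """Parse `crsctl status resource` output (without -t) into a list of dicts.

    Assumes each resource is printed as a block of KEY=VALUE lines and that
    blocks are separated by blank lines.
    """
    resources, current = [], {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line:                      # a blank line ends the current block
            if current:
                resources.append(current)
                current = {}
            continue
        key, sep, value = line.partition("=")
        if sep:                           # ignore lines without "="
            current[key.strip()] = value.strip()
    if current:                           # the last block may not end with a blank line
        resources.append(current)
    return resources


def unhealthy(resources):
    """Names of resources that should be ONLINE but are not fully ONLINE."""
    bad = ("OFFLINE", "UNKNOWN", "INTERMEDIATE")
    return [
        r.get("NAME", "?")
        for r in resources
        if "ONLINE" in r.get("TARGET", "") and any(b in r.get("STATE", "") for b in bad)
    ]


if __name__ == "__main__":
    out = subprocess.run(["crsctl", "status", "resource"],
                         capture_output=True, text=True, check=True).stdout
    print(unhealthy(parse_crsctl_status(out)))
```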

5.2 Daily maintenance commands for the Oracle RAC cluster

The following are common operations that Fengge recommends you remember; you will use them frequently in daily work.

```
# 1. Stop the database on all nodes, then start it on all nodes
srvctl stop database -d fgedu -o immediate
srvctl start database -d fgedu

# 2. Stop all ASM disk groups used by the database, then start them again
srvctl stop diskgroup -g crs
srvctl stop diskgroup -g dgsystem
srvctl stop diskgroup -g fgedudata1
srvctl stop diskgroup -g dgrecover
srvctl start diskgroup -g crs
srvctl start diskgroup -g dgsystem
srvctl start diskgroup -g fgedudata1
srvctl start diskgroup -g dgrecover

# 3. Stop and start the listener on each node
srvctl stop listener -n fgerp61
srvctl stop listener -n fgerp62
srvctl start listener -n fgerp61
srvctl start listener -n fgerp62

# 4. Stop and start the SCAN listeners
srvctl stop scan_listener
srvctl start scan_listener

# 5. Stop and start the SCAN VIPs
srvctl stop scan
srvctl start scan

# 6. Stop and start the node applications (node VIP, network, ONS, etc.) on each node
srvctl stop nodeapps -n fgerp62
srvctl stop nodeapps -n fgerp61
srvctl start nodeapps -n fgerp62
srvctl start nodeapps -n fgerp61

# 7. Stop and start CRS (run as root); "crsctl stop crs" shuts down all Grid processes on the local node
crsctl stop crs
crsctl start crs

# 8. Check CRS resource status
crsctl status resource -t
crs_stat -t          # older command, deprecated in 11gR2

# 9. Manage ASM disk groups with asmcmd: view disk group capacity
asmcmd lsdg
```
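Steps 3 and 6 repeat the same `srvctl` command once per node. If you script these checks, a small builder avoids typos in node names; the sketch below assumes the node names `fgerp61`/`fgerp62` from the commands above and only covers components whose 11gR2 `srvctl` syntax accepts `-n <node_name>` (such as `listener` and `nodeapps`). The helper names are hypothetical, it prints commands as a dry run by default, and `shlex.join` needs Python 3.8 or later.

```python
import shlex
import subprocess


def per_node_commands(action, component, nodes):
    """Build one srvctl command per node, e.g. ["srvctl", "stop", "listener", "-n", "fgerp61"].

    Hypothetical helper: only meaningful for components that accept
    "-n <node_name>" in 11gR2 srvctl, such as listener and nodeapps.
    """
    if action not in ("start", "stop"):
        raise ValueError("action must be 'start' or 'stop'")
    return [["srvctl", action, component, "-n", node] for node in nodes]


def run_commands(commands, execute=False):
    """Print every command; run it only when execute=True (dry run by default)."""
    for cmd in commands:
        print(shlex.join(cmd))
        if execute:
            subprocess.run(cmd, check=True)  # stop at the first failing command


if __name__ == "__main__":
    run_commands(per_node_commands("stop", "listener", ["fgerp61", "fgerp62"]))
    # srvctl stop listener -n fgerp61
    # srvctl stop listener -n fgerp62
```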

If you already have an Oracle RAC cluster environment, you can test it following the procedure above. If you do not, you can refer to Fengge's Oracle RAC course, Production environment Linux+Oracle 11gR2 RAC cluster installation configuration and maintenance (https://edu.51cto.com/course/3733.html), to build an Oracle RAC cluster environment for learning and testing.