1, Background

Durid is a widely used database connection pool, which has superior performance, powerful monitoring mechanism, and also supports expansion through the mechanism of filter.

Druid has a StatFilter for slow sql recording, but I found some shortcomings in using it:

- The print log of this Filter is ERROR level. When the system monitors the ERROR log, alarms may be triggered frequently,

- The judgment threshold can only be set in the configuration file, and dynamic adjustment is not supported,

- Only log printing is realized, but subsequent statistics and other functions are not available

So we try to use a custom Filter to record slow Sql, and realize dynamic switch and threshold adjustment.

In addition to implementing specific methods, custom filters need to be added to the filter chain of DruidDataSource. In general, you need to manually add to the filter collection of dataSource. In the spring boot environment, as long as the custom filter is declared as Component, it can be automatically assembled into the FilterChain without additional configuration.

Reference article:

Custom Druid interceptor

Nacos quick start: start Nacos server (console service)

Nacos quick start: integrating spring boot for configuration management and service discovery

2, Custom Druid Filter

1. Main steps:

- Inherits the FilterEventAdapter class and implements four methods, and then intercepts all types of sql execution:

- statementExecuteUpdateAfter()

- statementExecuteQueryAfter()

- statementExecuteAfter()

- statementExecuteBatchAfter()

- Declare two properties: logSwitch, slowSqlMillis, and add the comments of nacos for dynamic configuration

- Add a new method, judge the execution time after the execution of sql, record the sql statements and parameters (here mainly refer to the code in StatFilter), and print them as warn logs

- It can also be expanded to send sql records to mq, es, or other databases for subsequent statistical monitoring

- Open the Druid's own StatFilter:

- application.propertis: spring.datasource.druid.filter.stat.enabled=true

2. Complete code:

@Slf4j @Component public class CustomDruidStatLogFilter extends FilterEventAdapter { private static final Pattern LINE_BREAK = Pattern.compile("\n"); private static final Pattern BLANKS = Pattern.compile(" +"); private static final String BLANK = " "; /** * On state * (This annotation indicates that this attribute is a nacos dynamic attribute. It corresponds to the value of logSwitch in the configuration. It is true by default and supports dynamic refresh.) */ @NacosValue(value = "${logSwitch:true}", autoRefreshed = true) private boolean logSwitch; /** * Slow sql judgment threshold (MS) */ @NacosValue(value = "${slowSqlMillis:100}", autoRefreshed = true) private long slowSqlMillis; @Override protected void statementExecuteUpdateAfter(StatementProxy statement, String sql, int updateCount) { internalAfterStatementExecute(statement); } @Override protected void statementExecuteQueryAfter(StatementProxy statement, String sql, ResultSetProxy resultSet) { internalAfterStatementExecute(statement); } @Override protected void statementExecuteAfter(StatementProxy statement, String sql, boolean firstResult) { internalAfterStatementExecute(statement); } @Override protected void statementExecuteBatchAfter(StatementProxy statement, int[] result) { internalAfterStatementExecute(statement); } /** Core record method: judge slow record sql */ private void internalAfterStatementExecute(StatementProxy statement) { if (logSwitch) { if (statement.getSqlStat() != null) { long nanos = System.nanoTime() - statement.getLastExecuteStartNano(); long millis = nanos / (1000 * 1000); if (millis >= slowSqlMillis) { String slowParameters = buildSlowParameters(statement); String sql = statement.getLastExecuteSql(); sql = LINE_BREAK.matcher(sql).replaceAll(BLANK); sql = BLANKS.matcher(sql).replaceAll(BLANK); // Print the log. It can also be saved by using database and other methods for subsequent statistics log.warn("slow sql [" + millis + "] millis, sql: [" + sql + "], params: " + slowParameters); } } } } /** Assembly query parameters */ private String buildSlowParameters(StatementProxy statement) { JSONWriter out = new JSONWriter(); out.writeArrayStart(); for (int i = 0, parametersSize = statement.getParametersSize(); i < parametersSize; ++i) { JdbcParameter parameter = statement.getParameter(i); if (i != 0) { out.writeComma(); } if (parameter == null) { continue; } Object value = parameter.getValue(); if (value == null) { out.writeNull(); } else if (value instanceof String) { String text = (String) value; if (text.length() > 100) { out.writeString(text.substring(0, 97) + "..."); } else { out.writeString(text); } } else if (value instanceof Number) { out.writeObject(value); } else if (value instanceof java.util.Date) { out.writeObject(value); } else if (value instanceof Boolean) { out.writeObject(value); } else if (value instanceof InputStream) { out.writeString("<InputStream>"); } else if (value instanceof NClob) { out.writeString("<NClob>"); } else if (value instanceof Clob) { out.writeString("<Clob>"); } else if (value instanceof Blob) { out.writeString("<Blob>"); } else { out.writeString('<' + value.getClass().getName() + '>'); } } out.writeArrayEnd(); return out.toString(); } }

3, Test effect

1. Preparation

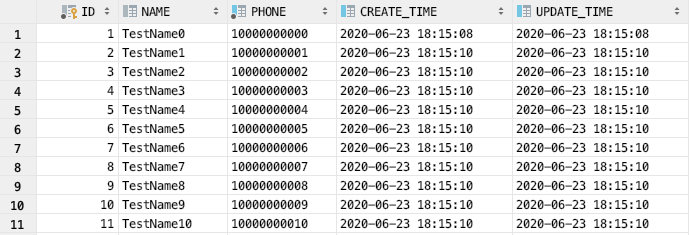

1.1 table building

It mainly saves the NAME and PHONE fields, where PHONE creates the index

CREATE TABLE `USER`( `ID` INT(20) NOT NULL AUTO_INCREMENT COMMENT 'Primary key ID', `PHONE` VARCHAR(60) NOT NULL DEFAULT '' COMMENT 'cell-phone number', `NAME` VARCHAR(60) NOT NULL DEFAULT '' COMMENT 'full name', `CREATE_TIME` TIMESTAMP NOT NULL DEFAULT CURRENT_TIMESTAMP COMMENT 'Creation time', `UPDATE_TIME` TIMESTAMP NOT NULL DEFAULT CURRENT_TIMESTAMP COMMENT 'Update time', PRIMARY KEY (`ID`), KEY `idx_phone` (`PHONE`) ) ENGINE = InnoDB DEFAULT CHARSET = utf8mb4 COMMENT ='User table'

1.2 initialization data

Insert 50000 pieces of data into the USER table.

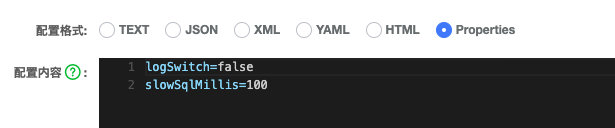

1.3 initialize nacos configuration

Publish the configuration through the nacos console:

[failed to transfer the pictures in the external chain. The source station may have anti-theft chain mechanism. It is recommended to save the pictures and upload them directly (img-5i77tcwv-1593395663229) (/ users / wangkai / library / Application Support / typora user images / image-202006228202207915. PNG))

1.4 query code

The test uses mybatis plus to query

Use the scheduled task to query the USER table with random name and phone every 5s

@Component @Slf4j public class QueryDataJob { @Autowired private UserMapper userMapper; /** Use random name query */ @Scheduled(fixedRate = 5000) public void queryByName() { int randomIndex = RandomUtil.randomInt(0, 100000); String randomName = "TestName" + randomIndex; User query = new User(); query.setName(randomName); List<User> result = userMapper.select(query); log.info("query by name,result:{}", JSONUtil.toJsonStr(result)); } /** Use random mobile number (with index) to query */ @Scheduled(fixedRate = 5000) public void queryByPhone(){ int randomIndex = RandomUtil.randomInt(0, 100000); String randomName = "TestName" + randomIndex; User query = new User(); query.setPhone(randomName); List<User> result = userMapper.select(query); log.info("query by phone,result:{}", JSONUtil.toJsonStr(result)); } }

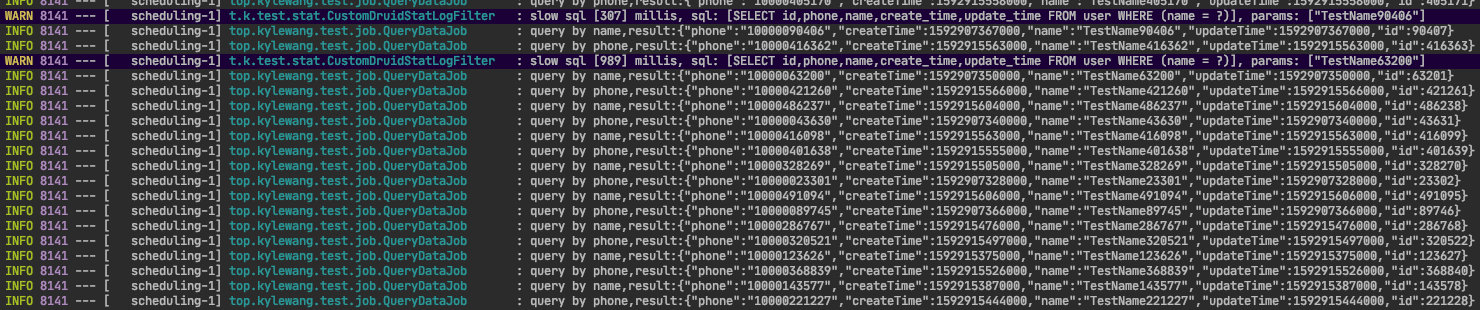

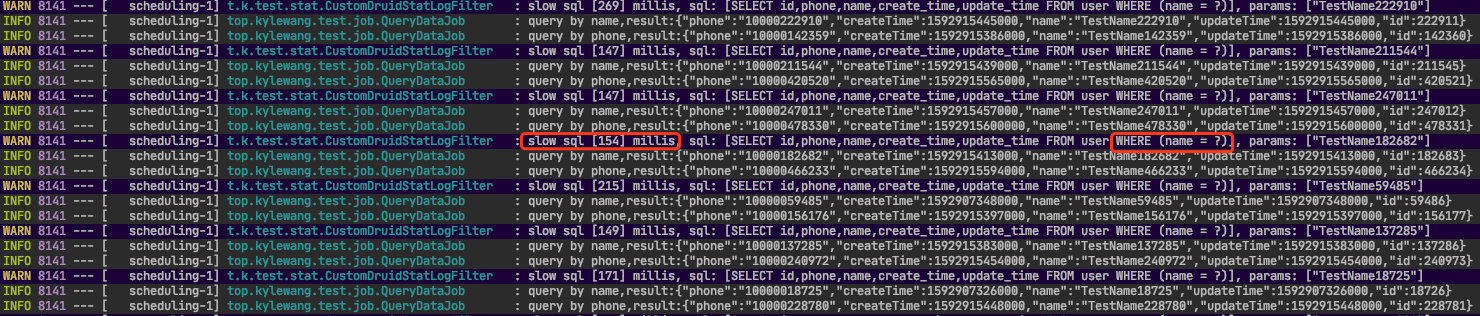

2. Slow SQL record

When using name query, there is no index, and the execution time of sql is longer than 100ms. It is recorded through the warn log of CustomDruidStatLogFilter:

3. Dynamic adjustment

Try adjusting the nacos configuration to change the recording results:

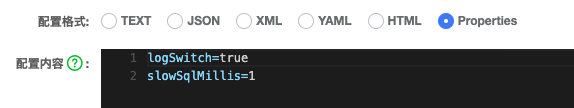

3.1 on / off

In the nacos console, set logSwitch to false:

After the configuration is published, slow sql records will no longer print logs, indicating that the dynamic adjustment of logSwitch configuration takes effect:

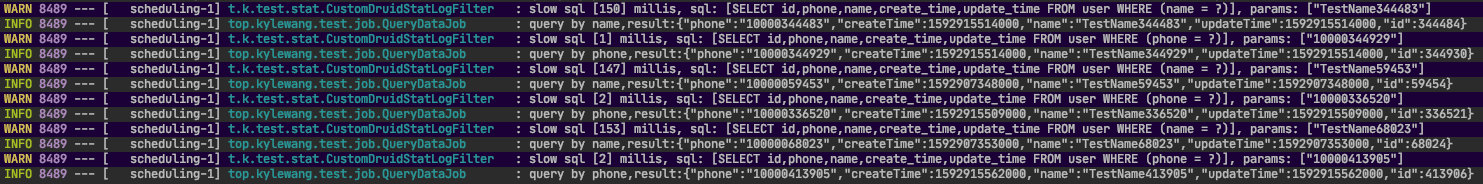

3.2 adjust judgment threshold

Turn on the logSwitch and set the judgment threshold to 1ms:

Both queries are recorded and the threshold dynamic adjustment takes effect:

All tests completed