8. Ribbon load balancing service call

8.1 Overview

1. What is Ribbon

Spring Cloud Ribbon is a set of client-side load balancing tools based on Netflix Ribbon.

Ribbon is an open-source project released by Netflix. Its main job is to provide client-side software load balancing algorithms and service calls. The Ribbon client component offers a full set of configuration items, such as connection timeout and retry. Put simply, you list all the machines behind the load balancer (LB) in the configuration file, and Ribbon automatically connects to them according to a rule (simple polling, random selection, and so on). Ribbon also makes it easy to implement a custom load balancing algorithm.

2. Official website information

https://github.com/Netflix/ribbon

Ribbon is also in maintenance mode

The intended replacement is Spring Cloud LoadBalancer, developed by the Spring Cloud team itself.

3. What Ribbon can do

3.1 LB (load balancing)

Load balancing distributes user requests evenly across multiple service instances in order to achieve high availability (HA) for the system. Common load balancers include the software Nginx and LVS and the hardware F5.

The difference between Ribbon's local load balancing client and Nginx's server-side load balancing

- Nginx is server-side load balancing. All client requests are handed to Nginx, and Nginx forwards them. In other words, the load balancing is done by the server.

- Ribbon is local (client-side) load balancing. When the consumer calls a microservice interface, Ribbon fetches the list of registered service instances from the registry and caches it locally in the JVM, so the choice of which instance to call remotely is made locally, inside the consumer process.

Centralized LB

An independent LB facility (either hardware, such as F5, or software, such as Nginx) sits between the service consumer and the service provider, and that facility is responsible for forwarding each request to a service provider according to some policy.

In-process LB

The LB logic is integrated into the consumer. The consumer learns from the service registry which addresses are available, and then selects a suitable server from those addresses itself.

Ribbon is an in-process LB: it is just a class library integrated into the consumer process, through which the consumer obtains the address of a service provider.
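The in-process idea can be sketched in plain Java. This is only an illustration under stated assumptions: the class `InProcessLbSketch` and its `refresh` and `choose` methods are hypothetical names invented here, not part of Ribbon, and the registry is simulated by passing in a list of addresses.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.List;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.AtomicInteger;

/** Hypothetical in-process load balancer; all names are illustrative, not Ribbon's API. */
final class InProcessLbSketch {
    // Local cache of "service name -> known addresses", as if copied from the registry.
    private final Map<String, List<String>> cachedRegistry = new ConcurrentHashMap<>();
    // One request counter per service, used for simple polling.
    private final Map<String, AtomicInteger> counters = new ConcurrentHashMap<>();

    /** Simulates refreshing the local cache from the service registry. */
    void refresh(String serviceName, List<String> addresses) {
        cachedRegistry.put(serviceName, Collections.unmodifiableList(new ArrayList<>(addresses)));
    }

    /** Picks an address inside the consumer process; no central proxy is involved. */
    String choose(String serviceName) {
        List<String> addresses = cachedRegistry.get(serviceName);
        if (addresses == null || addresses.isEmpty()) {
            throw new IllegalStateException("No known instances for " + serviceName);
        }
        AtomicInteger counter = counters.computeIfAbsent(serviceName, k -> new AtomicInteger());
        int index = Math.floorMod(counter.getAndIncrement(), addresses.size());
        return addresses.get(index);
    }

    public static void main(String[] args) {
        InProcessLbSketch lb = new InProcessLbSketch();
        lb.refresh("CLOUD-PAYMENT-SERVICE", Arrays.asList("127.0.0.1:8001", "127.0.0.1:8002"));
        for (int i = 0; i < 4; i++) {
            System.out.println(lb.choose("CLOUD-PAYMENT-SERVICE"));
        }
    }
}
```

The point of the sketch is that the address is chosen from the locally cached list; with a centralized LB such as Nginx, that choice would be made by a separate facility instead.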

8.2 Ribbon load balancing demonstration

1. Architecture description

Ribbon works in two steps:

- First, it chooses a Eureka server, giving priority to servers in the same region that carry less load.

- Then, according to the policy specified by the user, it selects one address from the service registration list fetched from that server.

Ribbon provides a variety of strategies, such as polling, random selection, and weighting by response time.

Summary: Ribbon is a client-side software load balancing component. It can be combined with any client that needs to send requests; the combination with Eureka is just one example.

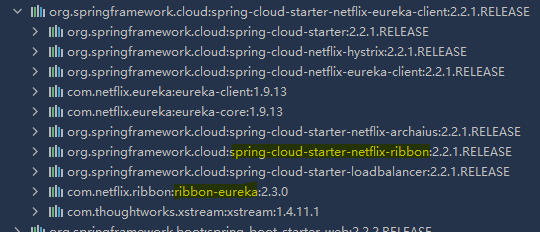

2. pom

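The spring-cloud-starter-netflix-eureka-client package already includes the Ribbon dependencies, so no separate Ribbon dependency is needed.

3. Using RestTemplate

Because PAYMENT_URL below is a service name rather than a host and port, the RestTemplate bean injected here must be declared with @LoadBalanced so that Ribbon can resolve the name to an instance.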

@RestController

@Slf4j

public class OrderController {

//private static final String PAYMENT_URL = "http://localhost:8001";

private static final String PAYMENT_URL = "http://CLOUD-PAYMENT-SERVICE";

private final RestTemplate restTemplate;

@Autowired

public OrderController(RestTemplate restTemplate) {

this.restTemplate = restTemplate;

}

/**

* The return object is the object converted from the data in the response entity, which can be basically understood as JSON

* @param payment

* @return

*/

@GetMapping("/consumer/payment/create")

public CommonResult<Payment> create(Payment payment) {

log.info("payment = {}", JSON.toJSONString(payment));

return restTemplate.postForObject(PAYMENT_URL + "/payment/create", payment, CommonResult.class);

}

@GetMapping("/consumer/payment/get/{id}")

public CommonResult<Payment> getPayment(@PathVariable("id") Long id) {

return restTemplate.getForObject(PAYMENT_URL + "/payment/get/" + id, CommonResult.class);

}

@GetMapping("/consumer/payment/getEntity/{id}")

public CommonResult<Payment> getPayment2(@PathVariable("id") Long id) {

ResponseEntity<CommonResult> forEntity = restTemplate.getForEntity(PAYMENT_URL + "/payment/get/" + id,

CommonResult.class);

if (forEntity.getStatusCode().is2xxSuccessful()) {

log.info("{} {} {}", forEntity.getStatusCode(), forEntity.getHeaders(), forEntity.getBody());

return forEntity.getBody();

}

return new CommonResult<>(444, "operation failed");

}

/**

* The returned object is the ResponseEntity object, which contains some important information in the response, such as response header, response status code, response body, etc

* @param payment

* @return

*/

@GetMapping("/consumer/payment/createEntity")

public CommonResult<Payment> create2(Payment payment) {

ResponseEntity<CommonResult> postForEntity = restTemplate.postForEntity(

PAYMENT_URL + "/payment/create", payment, CommonResult.class);

if (postForEntity.getStatusCode().is2xxSuccessful()){

log.info("{} {} {}", postForEntity.getStatusCode(), postForEntity.getHeaders(), postForEntity.getBody());

return postForEntity.getBody();

}

return new CommonResult<>(444, "operation failed");

}

}

8.3 Ribbon core component IRule

IRule: selects a service to access from the service list according to a specific algorithm

- com.netflix.loadbalancer.RoundRobinRule: polling (round robin)

- com.netflix.loadbalancer.RandomRule: random selection

- com.netflix.loadbalancer.RetryRule: first picks a service using the RoundRobinRule policy; if that fails, it retries within the specified time until an available service is obtained

- com.netflix.loadbalancer.WeightedResponseTimeRule: an extension of RoundRobinRule; the faster an instance responds, the greater its selection weight and the more likely it is to be chosen

- com.netflix.loadbalancer.BestAvailableRule: first filters out services whose circuit breaker has tripped because of repeated access failures, then selects the service with the fewest concurrent requests

- com.netflix.loadbalancer.AvailabilityFilteringRule: first filters out failed instances, then selects among the instances with lower concurrency

- com.netflix.loadbalancer.ZoneAvoidanceRule: the default rule; it selects a server by weighing the performance of the zone where the server is located together with the server's availability
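To make the idea of weighting by response time more concrete, here is a minimal sketch of one way such weights could be computed. It is not Ribbon's actual implementation: the class `ResponseTimeWeightSketch`, its methods, and the sample response times are all assumptions made for illustration. Each server's weight is taken as the sum of all average response times minus its own, so faster servers cover a larger slice of the random range.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Random;

/** Hypothetical sketch of response-time weighting; not Ribbon's actual implementation. */
final class ResponseTimeWeightSketch {

    /**
     * Builds cumulative weights. Each server's weight is
     * (sum of all average response times) - (its own average response time).
     */
    static List<Double> cumulativeWeights(double[] avgResponseMillis) {
        double total = 0.0;
        for (double t : avgResponseMillis) {
            total += t;
        }
        List<Double> cumulative = new ArrayList<>();
        double soFar = 0.0;
        for (double t : avgResponseMillis) {
            soFar += total - t;
            cumulative.add(soFar);
        }
        return cumulative;
    }

    /** Returns the index of the first server whose cumulative weight is greater than the point. */
    static int pick(List<Double> cumulative, double point) {
        for (int i = 0; i < cumulative.size(); i++) {
            if (point < cumulative.get(i)) {
                return i;
            }
        }
        return cumulative.size() - 1;
    }

    public static void main(String[] args) {
        double[] avgMillis = {100.0, 300.0}; // assumed sample data: server 0 is faster
        List<Double> cumulative = cumulativeWeights(avgMillis);
        double max = cumulative.get(cumulative.size() - 1);
        Random random = new Random();
        int[] hits = new int[avgMillis.length];
        for (int i = 0; i < 10_000; i++) {
            hits[pick(cumulative, random.nextDouble() * max)]++;
        }
        System.out.println("fast server hits: " + hits[0] + ", slow server hits: " + hits[1]);
    }
}
```

With the assumed averages of 100 ms and 300 ms, the cumulative weights are 300 and 400, so the faster server should receive roughly three quarters of the picks.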

How to replace the default rule?

Modify cloud-consumer-order80

Pay attention to configuration details

- The official document clearly gives a warning:

- The custom configuration class must not be placed in the package scanned by @ComponentScan or in any of its sub-packages. Otherwise the custom configuration class is shared by all Ribbon clients, and the per-client customization is lost.

Create a new package com.zzx.rule, a sibling of the com.zzx.springcloud package

Create a new rule class, MySelfRule

package com.zzx.rule;

import com.netflix.loadbalancer.IRule;

import com.netflix.loadbalancer.RandomRule;

import org.springframework.context.annotation.Bean;

import org.springframework.context.annotation.Configuration;

/**

* @description: Custom load balancing routing rule class

*/

@Configuration

public class MySelfRule {

/**

* Define rules as random

* @return

*/

@Bean

public IRule getRule(){

return new RandomRule();

}

}

Add the @RibbonClient annotation to the main startup class

package com.zzx.springcloud;

import com.zzx.rule.MySelfRule;

import org.springframework.boot.SpringApplication;

import org.springframework.boot.autoconfigure.SpringBootApplication;

import org.springframework.cloud.netflix.eureka.EnableEurekaClient;

import org.springframework.cloud.netflix.ribbon.RibbonClient;

@SpringBootApplication

@EnableEurekaClient

@RibbonClient(name = "CLOUD-PAYMENT-SERVICE", configuration = MySelfRule.class)

public class OrderMain80 {

public static void main(String[] args) {

SpringApplication.run(OrderMain80.class, args);

}

}

Test with Chrome

The background log shows that the two microservices on ports 8001 and 8002 are now accessed in random order.

8.4 Ribbon load balancing algorithm

Principle

Load balancing algorithm: (number of requests to the REST interface) % (total number of servers in the cluster) = index of the server actually called. The request count restarts from 1 every time the service is restarted.

For example:

List[0] instances = 127.0.0.1:8002

List[1] instances = 127.0.0.1:8001

8001 and 8002 form a cluster of two machines, so the cluster size is 2. According to the polling algorithm:

When the total number of requests is 1: 1 % 2 = 1, so the index is 1 and the service address obtained is 127.0.0.1:8001

When the total number of requests is 2: 2 % 2 = 0, so the index is 0 and the service address obtained is 127.0.0.1:8002

When the total number of requests is 3: 3 % 2 = 1, so the index is 1 and the service address obtained is 127.0.0.1:8001

And so on
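The arithmetic above can be checked with a few lines of plain Java. This is a small sketch of the formula only, not of Ribbon itself; the class name `PollingFormulaDemo` and the method `indexFor` are made up for the example, and the instance list mirrors the one in the text (index 0 is 8002, index 1 is 8001).

```java
import java.util.Arrays;
import java.util.List;

/** Worked example of the polling formula: index = request count % cluster size. */
final class PollingFormulaDemo {

    /** Returns the list index used for the given request number. */
    static int indexFor(int requestCount, int serverCount) {
        return requestCount % serverCount;
    }

    public static void main(String[] args) {
        List<String> instances = Arrays.asList("127.0.0.1:8002", "127.0.0.1:8001");
        for (int request = 1; request <= 4; request++) {
            int index = indexFor(request, instances.size());
            System.out.println("request " + request + " -> index " + index + " -> " + instances.get(index));
        }
    }
}
```

Running it shows requests 1 and 3 going to 127.0.0.1:8001 and requests 2 and 4 going to 127.0.0.1:8002, which matches the sequence worked out by hand.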

Source code

//

// Source code recreated from a .class file by IntelliJ IDEA

// (powered by FernFlower decompiler)

//

package com.netflix.loadbalancer;

import com.netflix.client.config.IClientConfig;

import java.util.List;

import java.util.concurrent.atomic.AtomicInteger;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

public class RoundRobinRule extends AbstractLoadBalancerRule {

private AtomicInteger nextServerCyclicCounter;

private static final boolean AVAILABLE_ONLY_SERVERS = true;

private static final boolean ALL_SERVERS = false;

private static Logger log = LoggerFactory.getLogger(RoundRobinRule.class);

// Initialize the cycle counter nextServerCyclicCounter with an initial value of 0

public RoundRobinRule() {

this.nextServerCyclicCounter = new AtomicInteger(0);

}

public RoundRobinRule(ILoadBalancer lb) {

this();

this.setLoadBalancer(lb);

}

public Server choose(ILoadBalancer lb, Object key) {

if (lb == null) {

log.warn("no load balancer");

return null;

} else {

Server server = null;

int count = 0;

while(true) {

// count is the number of attempts made so far; at most 10 attempts are made

if (server == null && count++ < 10) {

// Get the servers that are currently reachable (alive)

List<Server> reachableServers = lb.getReachableServers();

// Get all known servers

List<Server> allServers = lb.getAllServers();

int upCount = reachableServers.size();

int serverCount = allServers.size();

if (upCount != 0 && serverCount != 0) {

// Compute the next server index: increment the counter and take it modulo the number of servers

int nextServerIndex = this.incrementAndGetModulo(serverCount);

// Get service based on server index

server = (Server)allServers.get(nextServerIndex);

/**

* If the selected server is null, call Thread.yield(): the current thread moves from the "running" state

* back to the "ready" state so that other waiting threads of the same priority can run. This is only a hint:

* there is no guarantee that another thread of the same priority actually gets to run after yield(),

* and the current thread may be scheduled again immediately and continue running.

*/

if (server == null) {

Thread.yield();

} else {

// If it is not null, judge whether the service is alive and ready. If so, return the service

// Otherwise, set the service to null and proceed to the next loop

if (server.isAlive() && server.isReadyToServe()) {

return server;

}

server = null;

}

continue;

}

log.warn("No up servers available from load balancer: " + lb);

return null;

}

if (count >= 10) {

log.warn("No available alive servers after 10 tries from load balancer: " + lb);

}

return server;

}

}

}

// Atomically increment nextServerCyclicCounter modulo the number of servers, using a compare-and-set loop

private int incrementAndGetModulo(int modulo) {

int current;

int next;

do {

current = this.nextServerCyclicCounter.get();

next = (current + 1) % modulo;

} while(!this.nextServerCyclicCounter.compareAndSet(current, next));

return next;

}

public Server choose(Object key) {

return this.choose(this.getLoadBalancer(), key);

}

public void initWithNiwsConfig(IClientConfig clientConfig) {

}

}
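The compare-and-set loop in incrementAndGetModulo is what keeps the counter correct when several threads choose a server at the same time. The following standalone sketch reproduces only that loop so it can be run without Ribbon; the class `CasCounterDemo` and its `run` helper are hypothetical names, and the thread and call counts are arbitrary.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.concurrent.atomic.AtomicIntegerArray;

/** Standalone demo of the compare-and-set loop; class and method names are illustrative. */
final class CasCounterDemo {
    private final AtomicInteger counter = new AtomicInteger(0);

    /** Same shape as incrementAndGetModulo: retry until no other thread changed the counter. */
    int next(int modulo) {
        int current;
        int next;
        do {
            current = counter.get();
            next = (current + 1) % modulo;
        } while (!counter.compareAndSet(current, next));
        return next;
    }

    /** Starts the given number of threads, each calling next() repeatedly, and counts hits per index. */
    static AtomicIntegerArray run(int servers, int threads, int callsPerThread) {
        CasCounterDemo demo = new CasCounterDemo();
        AtomicIntegerArray hits = new AtomicIntegerArray(servers);
        CountDownLatch done = new CountDownLatch(threads);
        for (int t = 0; t < threads; t++) {
            new Thread(() -> {
                for (int i = 0; i < callsPerThread; i++) {
                    hits.incrementAndGet(demo.next(servers));
                }
                done.countDown();
            }).start();
        }
        try {
            done.await();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("Interrupted while waiting for workers", e);
        }
        return hits;
    }

    public static void main(String[] args) {
        AtomicIntegerArray hits = run(2, 4, 1000);
        System.out.println("index 0: " + hits.get(0) + ", index 1: " + hits.get(1));
    }
}
```

Because every successful compareAndSet advances the counter by exactly one step, the 4000 calls split evenly between the two indexes no matter how the threads interleave. A plain `counter = (counter + 1) % modulo` on a non-atomic field would not give that guarantee.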