1. First, create two files

<1> The tasks file (conventionally named tasks.py; the name must match the -A tasks option used to start the worker)

tasks.py

```python
import time

from celery import Celery

# app = Celery('tasks')

# Configure Celery's result backend (Redis db 3) and broker (Redis db 2)
app = Celery('celery_name',
             backend='redis://127.0.0.1:6379/3',
             broker='redis://127.0.0.1:6379/2')

# app.config_from_object('config')  # alternatively, load settings from config.py in this directory (or a module of your own)


@app.task(name='func')  # register the task; the name argument (a string) is the task name
def func(x, y):
    time.sleep(3)
    print('Already running..')
    return x + y
```

<2> The second file is the entry point that dispatches the asynchronous tasks

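celery_test_main.py

```python
import time

from tasks import func

# Queue 100 tasks, one per second; delay() returns immediately without waiting for the result
for i in range(100):
    func.delay(2, i)
    time.sleep(1)
```

2. Start the worker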

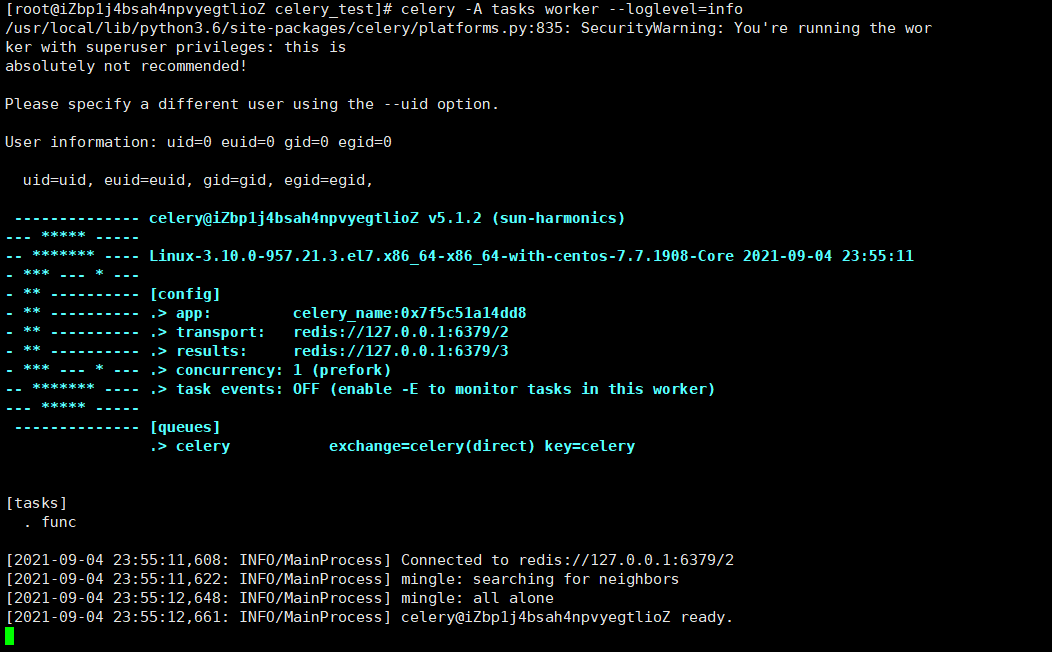

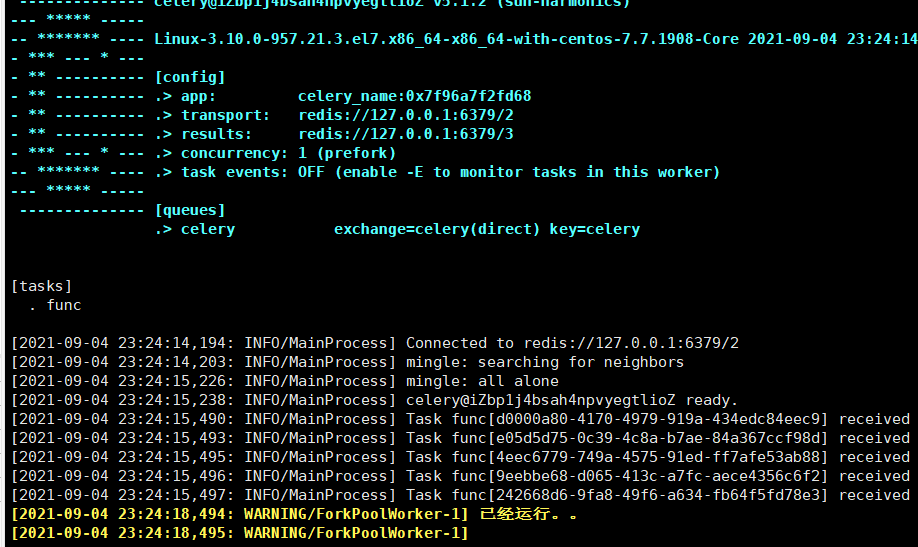

Run the worker from the directory that contains tasks.py:

```shell
celery -A tasks worker --loglevel=info
```

3. Run celery_test_main.py to add tasks

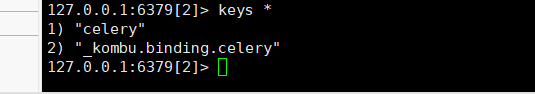

Here is what Redis database 2 (the broker) looks like:

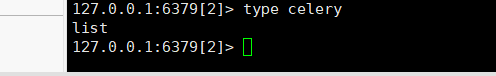

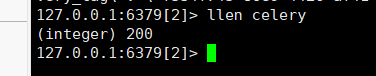

The tasks are stored under the `celery` key, which is a Redis list.

The list can hold many tasks. Each element is a string containing one JSON-encoded task message, for example:

```json
{
    "body": "W1syLCA5OV0sIHt9LCB7ImNhbGxiYWNrcyI6IG51bGwsICJlcnJiYWNrcyI6IG51bGwsICJjaGFpbiI6IG51bGwsICJjaG9yZCI6IG51bGx9XQ==",
    "content-encoding": "utf-8",
    "content-type": "application/json",
    "headers": {
        "lang": "py",
        "task": "func",
        "id": "3fb1edf9-9b4e-4671-a1a4-c9a23b68a2b8",
        "shadow": null,
        "eta": null,
        "expires": null,
        "group": null,
        "group_index": null,
        "retries": 0,
        "timelimit": [null, null],
        "root_id": "3fb1edf9-9b4e-4671-a1a4-c9a23b68a2b8",
        "parent_id": null,
        "argsrepr": "(2, 99)",
        "kwargsrepr": "{}",
        "origin": "gen15587@iZbp1j4bsah4npvyegtlioZ",
        "ignore_result": false
    },
    "properties": {
        "correlation_id": "3fb1edf9-9b4e-4671-a1a4-c9a23b68a2b8",
        "reply_to": "3bfd1eca-d23d-3bfe-8ce9-29f2dcbc27be",
        "delivery_mode": 2,
        "delivery_info": {
            "exchange": "",
            "routing_key": "celery"
        },
        "priority": 0,
        "body_encoding": "base64",
        "delivery_tag": "46650cc0-690c-43f1-b97d-6881ba9b358f"
    }
}
```
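The `body` field is base64-encoded (`"body_encoding": "base64"`) and, once decoded, is JSON (`"content-type": "application/json"`). A minimal sketch that decodes the body above using only the standard library; the three-part layout (positional args, keyword args, an options dict) is, as I understand it, Celery's task message protocol version 2.

```python
import base64
import json

# The "body" value copied from the message above
body = "W1syLCA5OV0sIHt9LCB7ImNhbGxiYWNrcyI6IG51bGwsICJlcnJiYWNrcyI6IG51bGwsICJjaGFpbiI6IG51bGwsICJjaG9yZCI6IG51bGx9XQ=="

# base64 -> bytes -> UTF-8 text -> JSON list of [args, kwargs, embed]
args, kwargs, embed = json.loads(base64.b64decode(body).decode("utf-8"))

print(args)    # [2, 99]  (matches "argsrepr": "(2, 99)")
print(kwargs)  # {}
print(embed)   # {'callbacks': None, 'errbacks': None, 'chain': None, 'chord': None}
```

So this particular message is the call func(2, 99) from the last loop iteration of celery_test_main.py.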

4. Once all the tasks have been executed, the `celery` list no longer exists (Redis deletes a list key as soon as its last element is removed).

5. If you stop the Celery worker and run celery_test_main.py, tasks are still added to Redis database 2, and you can see them pile up.

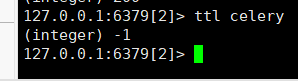
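The backlog can also be inspected from Python. A minimal sketch, assuming the redis-py package and the default queue name `celery`; `pending_tasks` is a hypothetical helper that accepts any object with an `lrange` method, so it can be tried without a server.

```python
import json
from typing import List, Tuple


def pending_tasks(client, queue: str = "celery") -> Tuple[int, List[str]]:
    """Return the number of waiting messages and their task names.

    `client` is assumed to be a redis-py client connected to the broker
    database (db 2 here), or any object with a compatible lrange() method.
    """
    raw_messages = client.lrange(queue, 0, -1)  # the whole list; fine for a small test queue
    names = [json.loads(raw)["headers"]["task"] for raw in raw_messages]
    return len(raw_messages), names


# Usage against the broker from this article (requires a running Redis):
# import redis
# count, names = pending_tasks(redis.Redis(host="127.0.0.1", port=6379, db=2))
# print(count, names[:3])
```

If only the count matters, `client.llen("celery")` (the Redis LLEN command) avoids fetching every message.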

The queued tasks do not appear to expire.

6. When you restart the worker, the queued tasks that had not run yet are executed normally.
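The message shown earlier carries `"expires": null`, which would explain why the backlog survives: no expiry was requested. Celery lets the caller set one per call, e.g. `func.apply_async((2, i), expires=60)`, and a worker will not execute a message after its expiry time. The check can be sketched as below; `is_expired` is a hypothetical helper, not Celery code, and it assumes the header is either null or a timezone-aware ISO 8601 timestamp.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional


def is_expired(headers: dict, now: Optional[datetime] = None) -> bool:
    """Return True if the message's 'expires' header lies in the past.

    Assumes the header is None or a timezone-aware ISO 8601 string.
    """
    expires = headers.get("expires")
    if expires is None:  # default: no expiry, so the message can wait indefinitely
        return False
    now = now or datetime.now(timezone.utc)
    return datetime.fromisoformat(expires) <= now


# The headers shown above have "expires": null, so the message never expires
print(is_expired({"expires": None}))  # False

# A message sent two minutes ago with expires=60, i.e. it expired a minute ago
stamp = (datetime.now(timezone.utc) - timedelta(minutes=1)).isoformat()
print(is_expired({"expires": stamp}))  # True
```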

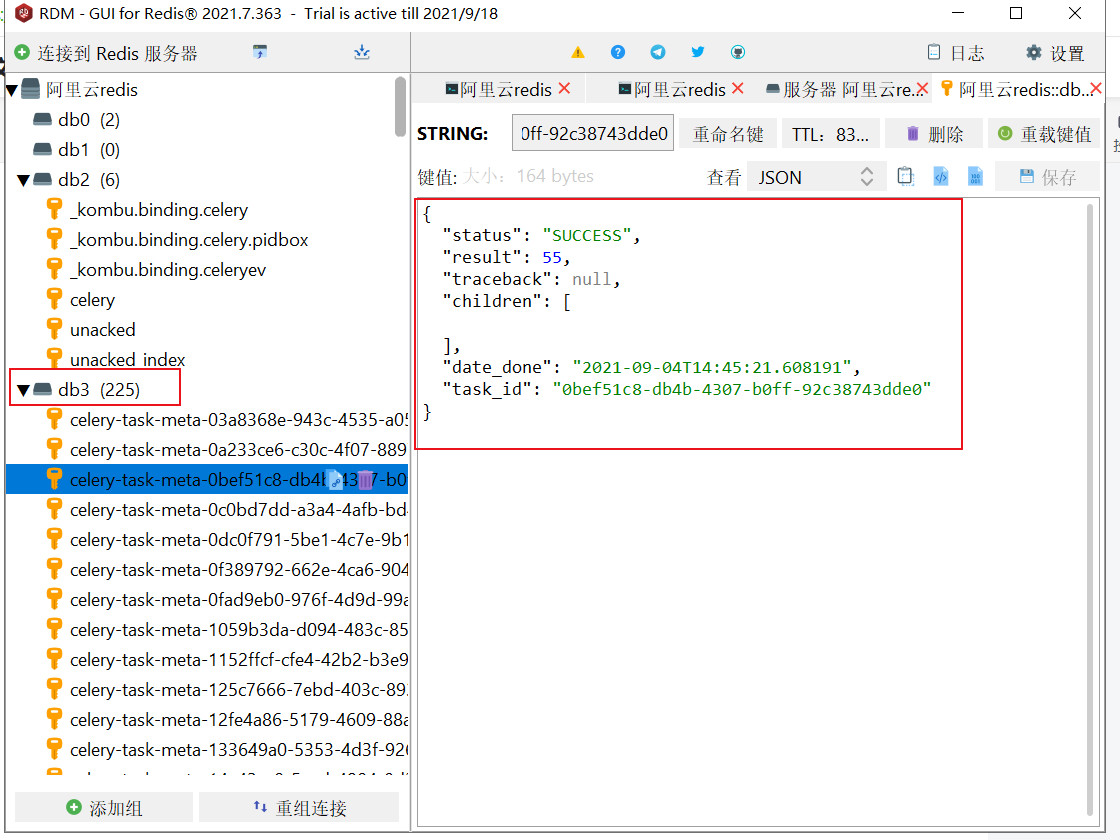

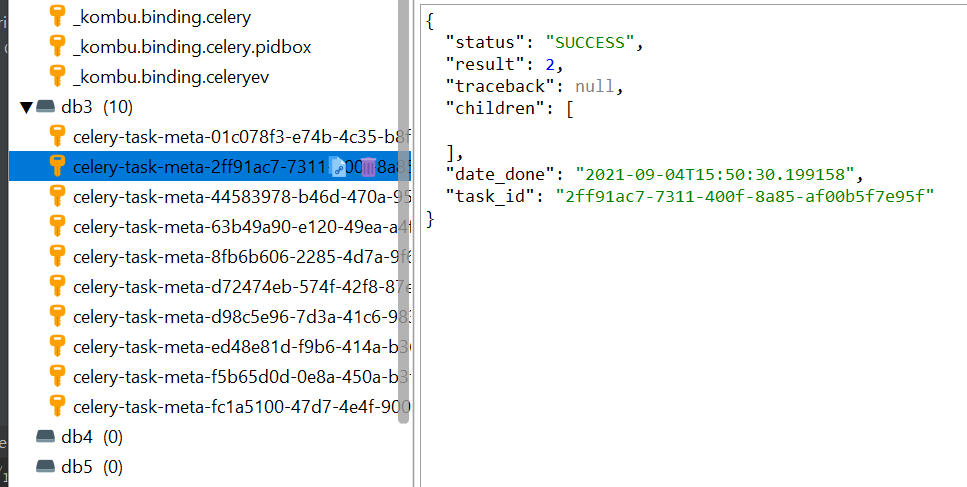

7. This test uses Redis database 3 to store task results. The stored data looks like this:

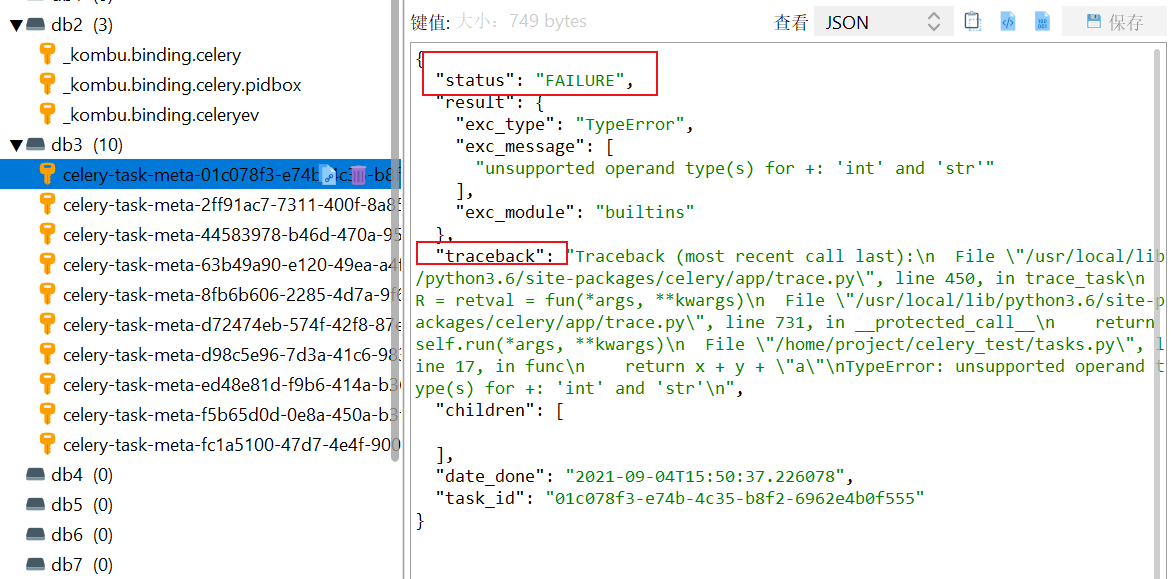

Each record shows whether the task succeeded, when it finished, and its task_id.

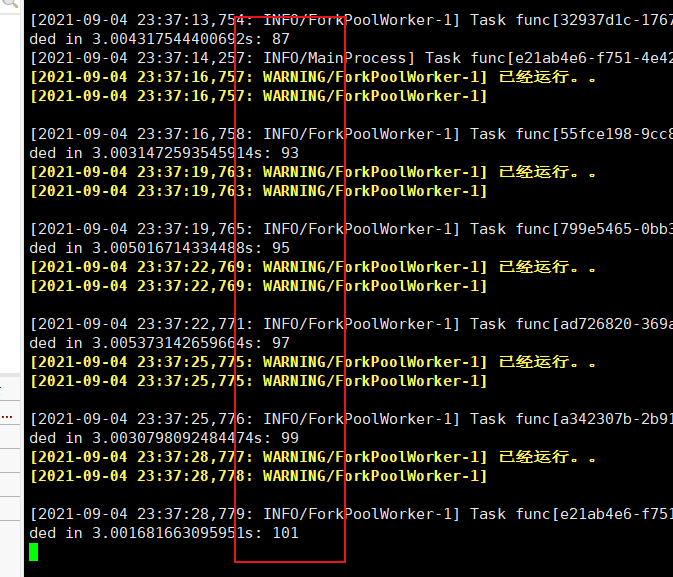
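These records can also be read directly. As far as I know, Celery's Redis result backend stores each result as JSON under the key `celery-task-meta-<task_id>`, with fields including `status`, `result`, `traceback`, `date_done` and `task_id`, and by default expires it after one day (the `result_expires` setting). A minimal sketch; `read_result` is a hypothetical helper that takes a `get` function (for example the `get` method of a redis-py client on db 3) so it can be tried without a server.

```python
import json
from typing import Callable, Optional

RESULT_KEY_PREFIX = "celery-task-meta-"  # key prefix used by Celery's Redis result backend


def read_result(get: Callable[[str], Optional[bytes]], task_id: str) -> Optional[dict]:
    """Return id, status, result and completion time for one task, or None."""
    raw = get(RESULT_KEY_PREFIX + task_id)
    if raw is None:  # not finished yet, result ignored, or already expired
        return None
    meta = json.loads(raw)
    return {field: meta.get(field) for field in ("task_id", "status", "result", "date_done")}


# Usage against the result database from this article (requires a running Redis):
# import redis
# r = redis.Redis(host="127.0.0.1", port=6379, db=3)
# print(read_result(r.get, "3fb1edf9-9b4e-4671-a1a4-c9a23b68a2b8"))
```

In application code the usual route is the `AsyncResult` returned by `func.delay(...)`, whose `.status` and `.get()` read the same record.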

8. You can open another terminal and start a second worker with the same command.

With two workers running, the queue drains faster and each worker executes different tasks. No task is executed twice: whichever worker takes a task off the queue is the one that runs it.
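Why no task runs twice: taking a message off the list removes it, so in normal operation only one consumer receives it (as I understand it, kombu's Redis transport pushes with LPUSH and workers pop with BRPOP). The following is a simplified in-process model of that behaviour, with `queue.Queue` standing in for the Redis list and two threads standing in for the two worker processes; it is an illustration, not how Celery is implemented.

```python
import queue
import threading
import time
from collections import Counter

broker = queue.Queue()  # stands in for the Redis list "celery"
for i in range(20):
    broker.put((2, i))  # like func.delay(2, i)

executed = []  # (worker name, args, result) for every task that ran
lock = threading.Lock()


def worker(name: str) -> None:
    while True:
        try:
            x, y = broker.get_nowait()  # removes the item, so no other worker can get it
        except queue.Empty:
            return  # queue drained
        time.sleep(0.01)  # pretend to do some work
        with lock:
            executed.append((name, (x, y), x + y))


threads = [threading.Thread(target=worker, args=(f"worker{n}",)) for n in (1, 2)]
for t in threads:
    t.start()
for t in threads:
    t.join()

runs_per_task = Counter(args for _, args, _ in executed)
print(len(executed), max(runs_per_task.values()))  # 20 1
print(Counter(name for name, _, _ in executed))  # the split between workers varies per run
```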

9. What if the task fails?

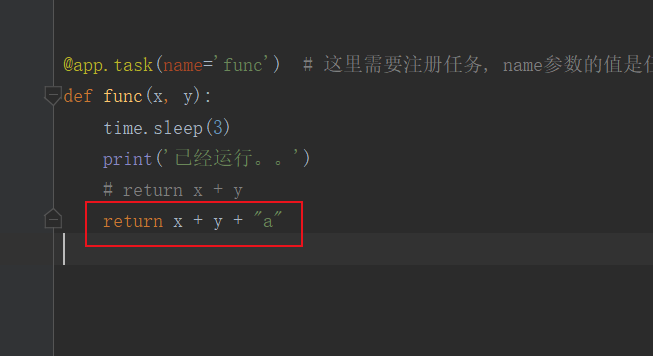

<1> Modify the code to introduce a deliberate error

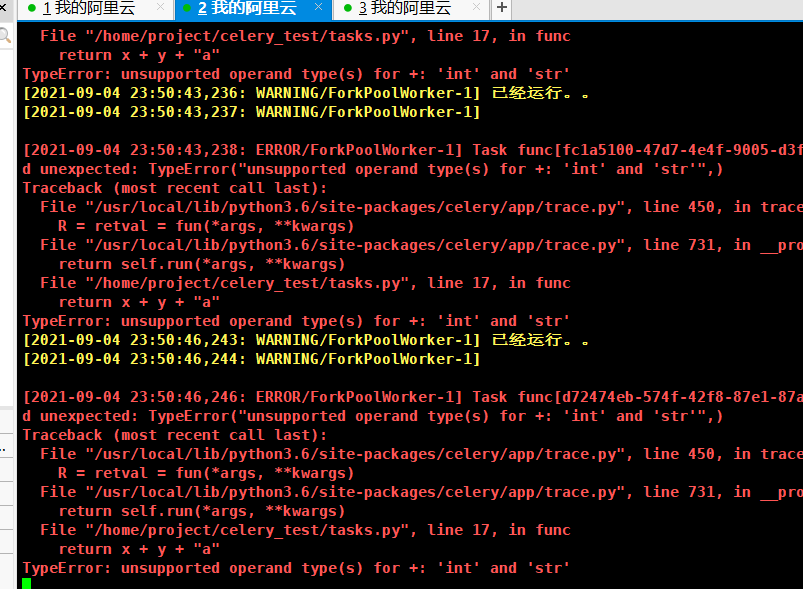

After the modification, the tasks still run successfully. Does the worker need to be restarted? Let's try restarting one of the workers.

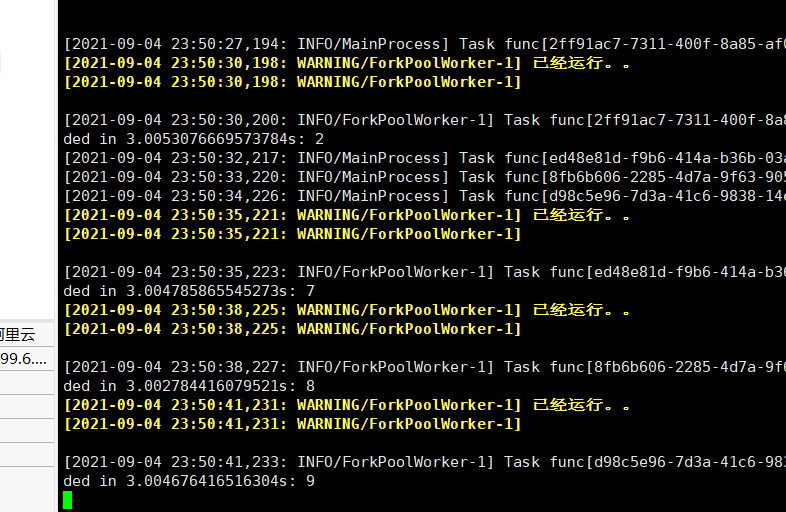

After restarting one worker, only the restarted worker fails its tasks; the worker that was not restarted still executes its tasks successfully.

The result database (Redis database 3) contains both failures and successes. So after modifying a task, you need to restart the worker; otherwise the change does not take effect.
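Why the restart matters: as far as I understand, a worker imports tasks.py once when it starts and keeps running that code, so later edits to the file are invisible to it. The sketch below is a standard-library analogy rather than Celery code: a hypothetical module `demo_tasks` is imported, its file is then rewritten, and the old function keeps running until the module is reloaded, which stands in for restarting the worker.

```python
import importlib
import sys
import tempfile
from pathlib import Path

sys.dont_write_bytecode = True  # so a cached .pyc cannot hide the edit below

# A hypothetical stand-in for tasks.py, written to a temporary directory
workdir = Path(tempfile.mkdtemp())
module_file = workdir / "demo_tasks.py"
module_file.write_text("def func(x, y):\n    return x + y\n")

sys.path.insert(0, str(workdir))
importlib.invalidate_caches()
demo_tasks = importlib.import_module("demo_tasks")  # the "worker" loads the code at startup

before = demo_tasks.func(2, 3)

# Edit the file on disk, like introducing the deliberate error while the worker runs
module_file.write_text("def func(x, y):\n    raise ValueError('deliberate error')\n")
unchanged = demo_tasks.func(2, 3)  # still the old code: the module is not re-read


def outcome() -> str:
    try:
        demo_tasks.func(2, 3)
        return "ok"
    except ValueError:
        return "error"


importlib.reload(demo_tasks)  # roughly what restarting the worker achieves
after = outcome()

print(before, unchanged, after)  # 5 5 error
```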