1. Docker network

Docker's networking features are still relatively limited.

After Docker is installed, three networks are created automatically: bridge, host and none. You can list them with docker network ls.

1.1. Bridge network

Prerequisite:

Stop the registry that was started with docker compose in the previous section:

docker-compose stop

Install bridge-utils, which provides the brctl command for viewing Linux bridges:

yum install bridge-utils -y

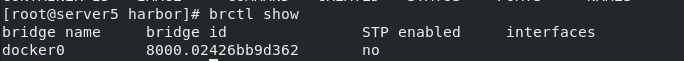

brctl show

docker run -d --name demo nginx

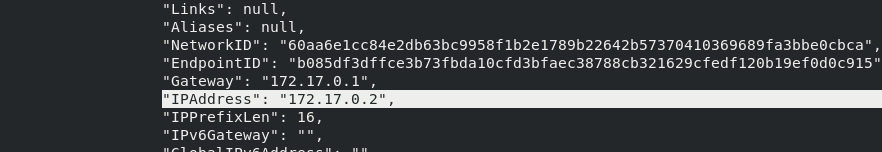

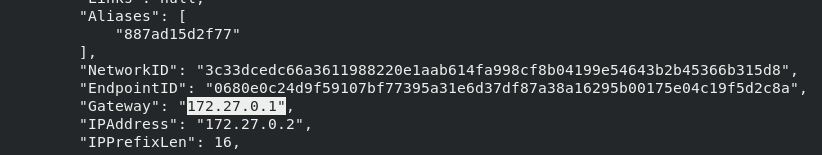

Run docker inspect demo to view the container's details. Its IP address is 172.17.0.2.

docker run -it --rm busybox


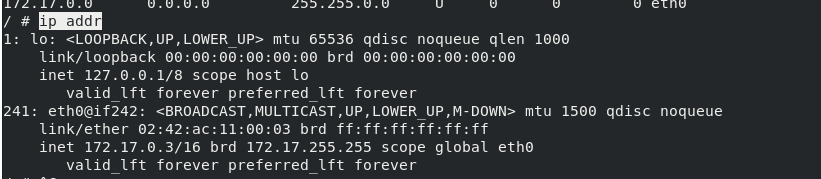

ip addr    # inside the busybox container the IP is 172.17.0.3
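The two small shell helpers below read the address without scanning the full output. This is a sketch under stated assumptions. `container_ip` and `first_ipv4` are hypothetical names. `container_ip` assumes it runs on the Docker host. `first_ipv4` assumes the `inet ADDR/PREFIX` line format that `ip addr` prints.

```shell
# Hypothetical helper (run on the Docker host): print the IPv4 address of every
# network the container is attached to, separated by spaces.
container_ip() {
    docker inspect -f '{{range .NetworkSettings.Networks}}{{.IPAddress}} {{end}}' "$1"
}

# Hypothetical helper (run inside a container): read `ip addr` output on stdin
# and print the first non-loopback IPv4 address, without the /prefix.
first_ipv4() {
    awk '$1 == "inet" && $2 !~ /^127\./ { split($2, a, "/"); print a[1]; exit }'
}
```

Usage: run `container_ip demo` on the host, or `ip addr | first_ipv4` inside the busybox container.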

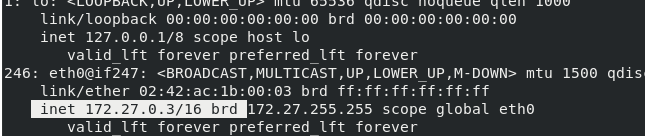

1.2. Host network mode

docker rm -f demo    # remove the container from 1.1 first, because the name demo is reused
docker run -d --name demo --network host nginx

Checking the listening ports (for example with netstat -antlp) shows that the nginx container now occupies port 80 on the host itself.

With --network host, the container uses the host's network stack directly. It therefore takes port 80 of the virtual machine, and no other nginx can listen on port 80 at the same time.

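A host-mode container binds directly on the host, so you can check for a port conflict before starting a second nginx. The helper below is a sketch, and its name `port_in_use` is hypothetical. It reads `netstat -antlp` output and assumes the usual column layout: local address in column 4, state in column 6.

```shell
# Hypothetical helper: succeed if some process is LISTENing on the given TCP port.
# Reads `netstat -antlp` output on stdin.
port_in_use() {
    awk -v p="$1" '
        $1 ~ /^tcp/ && $6 == "LISTEN" {
            n = split($4, a, ":")      # handles both 0.0.0.0:80 and :::80
            if (a[n] == p) found = 1
        }
        END { exit (found ? 0 : 1) }'
}
```

Usage: `netstat -antlp | port_in_use 80 && echo "port 80 is already taken"`.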

1.3. None network mode

docker run -it --rm --network none busybox

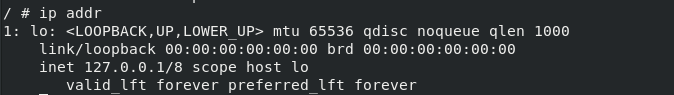

Only the lo (loopback) interface exists, so the container has no network connectivity beyond itself.

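The helper below lists interfaces by name, which makes the difference between network modes easy to see. It is a sketch with a hypothetical name, `list_ifaces`. It assumes `ip addr` header lines of the form `N: name: <FLAGS>` and strips the `@ifNNN` peer suffix that veth interfaces show.

```shell
# Hypothetical helper: print one interface name per line from `ip addr` output on stdin.
list_ifaces() {
    awk -F': ' '/^[0-9]+: / { name = $2; sub(/@.*/, "", name); print name }'
}
```

Usage: in a `--network none` container, `ip addr | list_ifaces` should print only `lo`.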

2. Custom network

2.1. Create a custom bridge

Using a custom (user-defined) network is recommended because it lets you control which containers can communicate with each other. It also resolves container names to IP addresses automatically via DNS.

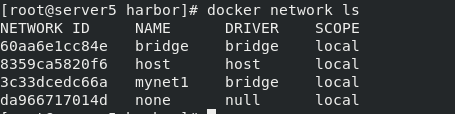

Create a bridge network. docker network ls shows its driver as bridge:

docker network create mynet1    # uses the bridge driver by default
or
docker network create -d bridge mynet1
docker network ls

Test:

docker run -d --name demo1 --network mynet1 nginx

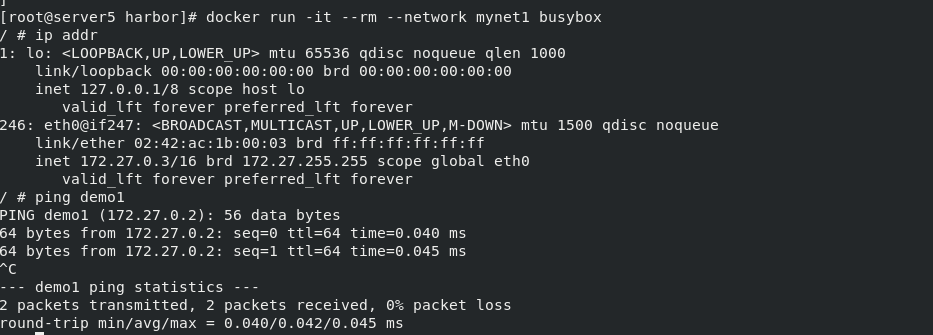

docker run -it --rm --network mynet1 busybox

ping demo1

ping demo1 succeeds directly, which shows that a user-defined network provides name resolution for its containers.

IP addresses are still allocated automatically, increasing in the order in which containers start, so a container's address can change. Pinging by name, however, always resolves to the current address.

2.2. User-defined network segment

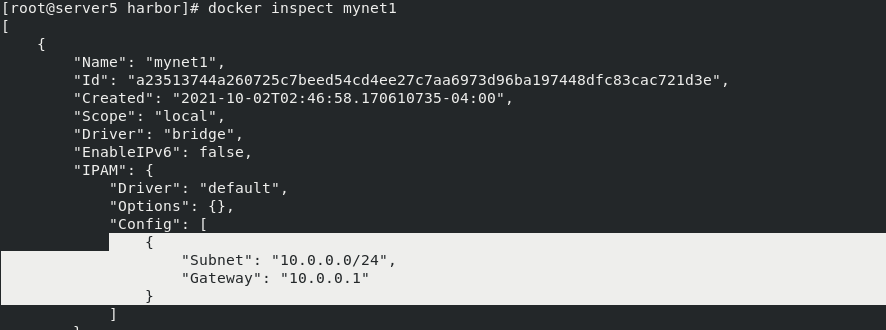

docker network rm mynet1
docker network create -d bridge --subnet 10.0.0.0/24 --gateway 10.0.0.1 mynet1

docker inspect mynet1 ## view information
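The --gateway address, and any --ip assigned later, must fall inside --subnet. Below is a minimal POSIX shell sketch for checking this before running the commands (IPv4 only). `ip_to_int` and `in_subnet` are hypothetical helpers, not Docker commands.

```shell
# Hypothetical helper: convert a dotted IPv4 address to an integer, e.g. 10.0.0.3 -> 167772163.
ip_to_int() {
    old_ifs=$IFS; IFS=.
    set -- $1                    # split the address on dots into $1..$4
    IFS=$old_ifs
    echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# Hypothetical helper: succeed if address $1 lies inside CIDR subnet $2 (e.g. 10.0.0.0/24).
in_subnet() {
    net=${2%/*}
    bits=${2#*/}
    mask=$(( bits == 0 ? 0 : (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
    [ $(( $(ip_to_int "$1") & mask )) -eq $(( $(ip_to_int "$net") & mask )) ]
}
```

Usage: `in_subnet 10.0.0.50 10.0.0.0/24 && docker run -d --network mynet1 --ip 10.0.0.50 nginx`.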

2.3. Specify the IP manually

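Use the --ip parameter to assign a fixed address to a container. This only works on a user-defined network that was created with an explicit --subnet, and the address must lie inside that subnet.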

docker run -d --name web1 --network mynet1 nginx

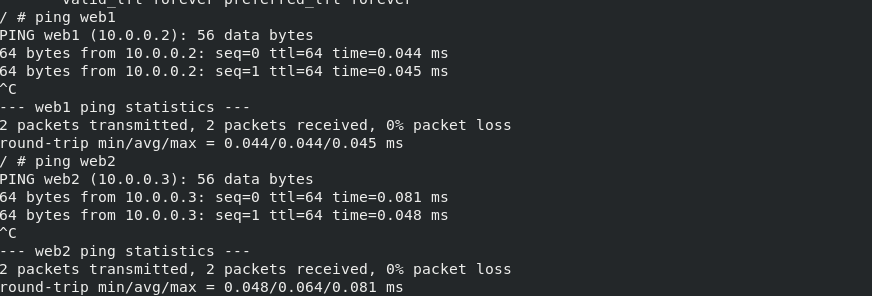

docker run -it --rm --network mynet1 busybox

/ # ip addr
254: eth0@if255: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue
link/ether 02:42:0a:00:00:03 brd ff:ff:ff:ff:ff:ff
inet 10.0.0.3/24 brd 10.0.0.255 scope global eth0
valid_lft forever preferred_lft forever

/ # ping web1
PING 10.0.0.1 (10.0.0.2): 56 data bytes
64 bytes from 10.0.0.2: seq=0 ttl=64 time=0.043 ms
64 bytes from 10.0.0.2: seq=1 ttl=64 time=0.042 ms
--- 10.0.0.1 ping statistics ---
2 packets transmitted, 2 packets received, 0% packet loss
round-trip min/avg/max = 0.042/0.042/0.043 ms

cat /etc/hosts

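Container names are resolved automatically. On a user-defined network this is done by Docker's embedded DNS server (127.0.0.11), so other containers do not need to appear in /etc/hosts.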

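To confirm which resolver a container is configured with, the sketch below prints the nameserver entries from /etc/resolv.conf and checks for the embedded DNS address 127.0.0.11. The names `nameservers` and `uses_embedded_dns` are hypothetical. The optional file argument exists only to make the helpers easy to test.

```shell
# Hypothetical helper: print each nameserver listed in a resolv.conf file.
nameservers() {
    awk '$1 == "nameserver" { print $2 }' "${1:-/etc/resolv.conf}"
}

# Hypothetical helper: succeed if Docker's embedded DNS server is the configured resolver.
uses_embedded_dns() {
    nameservers "$1" | grep -qx '127\.0\.0\.11'
}
```

Usage: inside a container on mynet1, `uses_embedded_dns && echo "names resolved by Docker"`.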

You can also ping the gateway

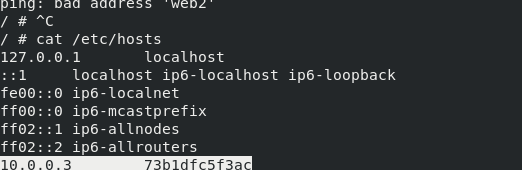

2.4. Using two network interfaces to communicate across network segments

Containers attached to different bridges cannot communicate with each other.

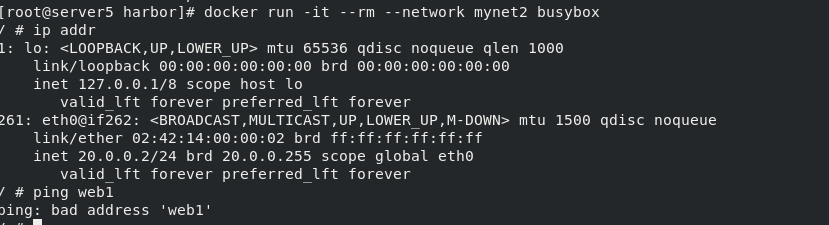

docker network create -d bridge --subnet 20.0.0.0/24 --gateway 20.0.0.1 mynet2

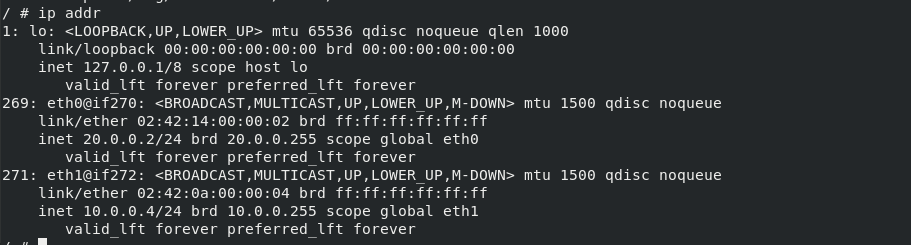

docker run -it --rm --network mynet2 busybox

From this container, pinging the containers on mynet1 fails.


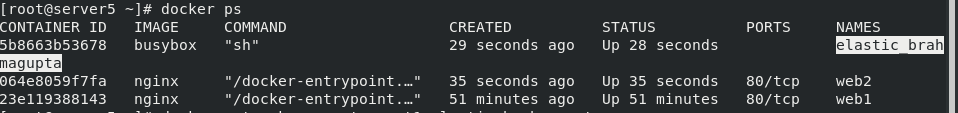

docker ps    # look up the name of the busybox container, here elastic_brahmagupta
docker network connect mynet1 elastic_brahmagupta

ping web1/web2

success


This works because docker network connect adds a second interface to the busybox container, with an address in mynet1's 10.0.0.0/24 segment.
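To see the effect of docker network connect, the sketch below prints one "network ip" line per attached network using a docker inspect Go template. It then compares two such listings to find a shared network. Both helper names are hypothetical, and `container_networks` needs a running Docker daemon.

```shell
# Hypothetical helper (needs Docker): print "network ip" for each network of a container.
container_networks() {
    docker inspect -f '{{range $name, $net := .NetworkSettings.Networks}}{{$name}} {{$net.IPAddress}}{{"\n"}}{{end}}' "$1"
}

# Hypothetical helper: given two files of "network ip" lines, print the network
# names present in both. Two containers can talk directly only if this is non-empty.
shared_networks() {
    awk 'NR == FNR { seen[$1] = 1; next } ($1 in seen) { print $1 }' "$1" "$2"
}
```

Usage: `container_networks elastic_brahmagupta > a.txt; container_networks web1 > b.txt; shared_networks a.txt b.txt`.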

3. Docker container communication

Besides communicating by IP address, containers can also communicate by container name.

Automatic DNS resolution of container names is only available on user-defined networks.

Use --network container:web1 when creating a container to make it join web1's network stack.

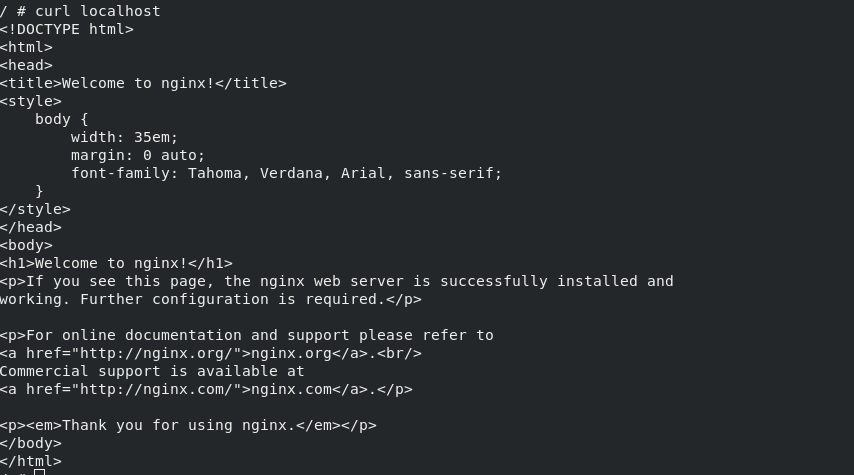

docker run -it --rm --network container:web1 busyboxplus

In this mode the two containers share one network stack, so they can communicate quickly and efficiently over localhost.

curl localhost    # answered by the nginx running in web1

--link can also be used to link two containers (Docker documents it as a legacy feature).

--link format:

--link <name>:<alias>

name is the name of the source container, and alias is the name under which the source container is known inside the linking container.

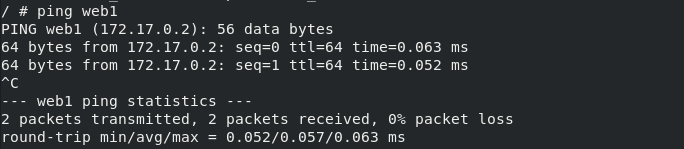

docker run -d --name web1 nginx
docker run -it --rm --link web1:nginx busyboxplus

ping web1

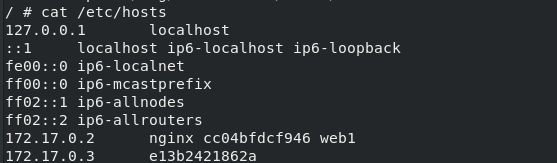

cat /etc/hosts


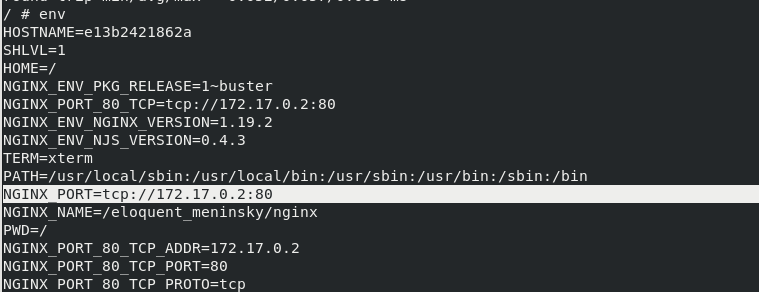

env
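Besides /etc/hosts entries, --link injects environment variables into the linking container, such as NGINX_PORT_80_TCP_ADDR for the alias nginx. The sketch below extracts the linked address from `env` output. The name `link_addr` is hypothetical. It also assumes that a "-" in the alias is mapped to "_" in the variable name.

```shell
# Hypothetical helper: print the linked container's address from `env` output on stdin.
# $1 = link alias (e.g. nginx), $2 = exposed TCP port (e.g. 80)
link_addr() {
    # Upper-case the alias; the "-" -> "_" mapping is an assumption.
    prefix=$(printf '%s' "$1" | tr '[:lower:]' '[:upper:]' | sed 's/-/_/g')
    awk -F= -v k="${prefix}_PORT_$2_TCP_ADDR" '$1 == k { print $2; exit }'
}
```

Usage: inside the busyboxplus container, `env | link_addr nginx 80`.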

Open another terminal on server5 and run:

docker stop web1
docker run -d --name web2 nginx
docker start web1

Pinging again shows that web1's IP has changed (from 172.17.0.2 to 172.17.0.4). Both web1 and the alias nginx still ping successfully, which shows that the name resolution is updated when the IP changes.

Checking /etc/hosts again shows that Docker has updated the entry automatically:

127.0.0.1 localhost
::1 localhost ip6-localhost ip6-loopback
fe00::0 ip6-localnet
ff00::0 ip6-mcastprefix
ff02::1 ip6-allnodes
ff02::2 ip6-allrouters
172.17.0.4 nginx cc04bfdcf946 web1
172.17.0.3 01239d8f8819
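A lookup in this file works line by line: the first line whose hostname columns contain the name provides the IP. The sketch below mimics that lookup. `hosts_lookup` is a hypothetical name, and the optional second argument exists only for testing.

```shell
# Hypothetical helper: print the IP for a name from a hosts file (default /etc/hosts).
# Comment lines are skipped; columns 2..NF are the names for the IP in column 1.
hosts_lookup() {
    awk -v n="$1" '$1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) { print $1; exit } }' "${2:-/etc/hosts}"
}
```

Usage: inside the linked container, `hosts_lookup nginx` and `hosts_lookup web1` both return web1's current address.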

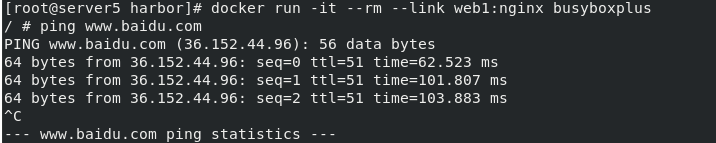

3.1. Container accessing the external network

A container can also access the external network. This requires IP forwarding to be enabled on the host:

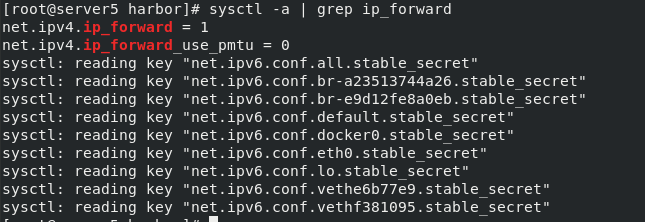

sysctl -a | grep ip_forward
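The check can also be scripted. Below is a minimal sketch with the hypothetical name `ip_forward_enabled`. By default it reads the live kernel value, and the optional file argument is only for testing.

```shell
# Hypothetical helper: succeed if IPv4 forwarding is enabled on the host.
ip_forward_enabled() {
    [ "$(cat "${1:-/proc/sys/net/ipv4/ip_forward}")" = "1" ]
}
```

Usage: `ip_forward_enabled || sysctl -w net.ipv4.ip_forward=1`.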

For example, we can ping baidu.com from inside the container:


docker run -it --rm --link web1:nginx busybox ping baidu.com

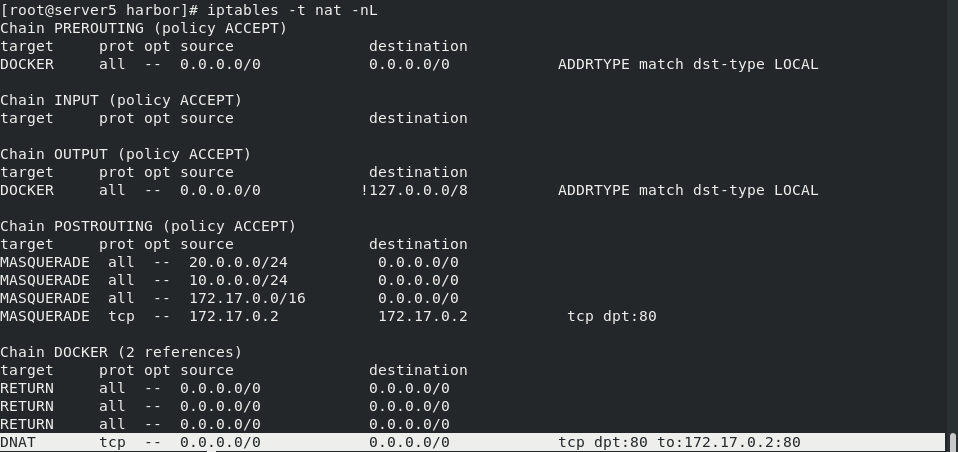

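3.2. External network accessing the container

A container reaches the Internet through iptables SNAT (the MASQUERADE rule in the POSTROUTING chain of the nat table).

In the other direction, the Internet reaches a container through port mapping.

The -p option specifies the port mapping, in the form -p hostPort:containerPort.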

Delete the previous web1 container first, then publish port 80:

docker run -d --name web1 -p 80:80 nginx

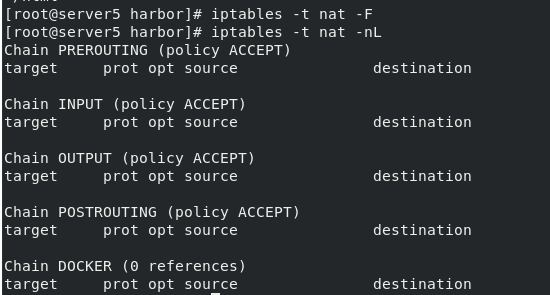

iptables -t nat -nL

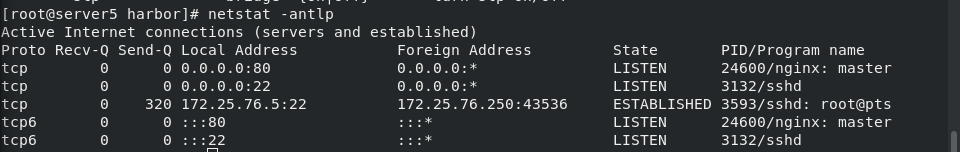

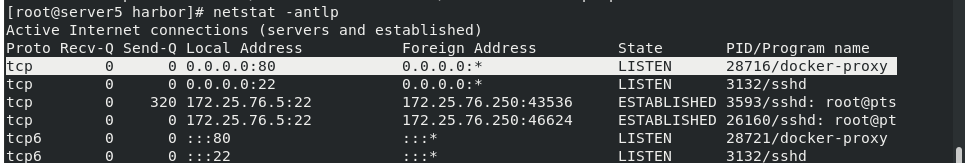

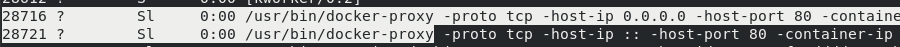

netstat -antlp    # shows docker-proxy listening on port 80

3.2.1. Principle

External access to a container relies on both docker-proxy and iptables DNAT:

When the host accesses its own containers, iptables DNAT is used.

When external hosts access a container, or containers access each other, docker-proxy is used.
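The DNAT half of the mechanism can be inspected directly. The sketch below assumes the `iptables -nL` listing format, in which a DNAT rule ends with `dpt:PORT to:IP:PORT`, as in the DOCKER chain of the nat table. The name `dnat_map` is hypothetical.

```shell
# Hypothetical helper: turn DNAT rules from `iptables -t nat -nL DOCKER` (stdin)
# into "hostport -> containerip:port" lines.
dnat_map() {
    awk '$1 == "DNAT" {
        dport = ""; to = ""
        for (i = 2; i <= NF; i++) {
            if ($i ~ /^dpt:/) dport = substr($i, 5)   # text after "dpt:"
            if ($i ~ /^to:/)  to = substr($i, 4)      # text after "to:"
        }
        if (dport != "" && to != "") print dport " -> " to
    }'
}
```

Usage: `iptables -t nat -nL DOCKER | dnat_map` lists which container each published port is forwarded to.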

Test:

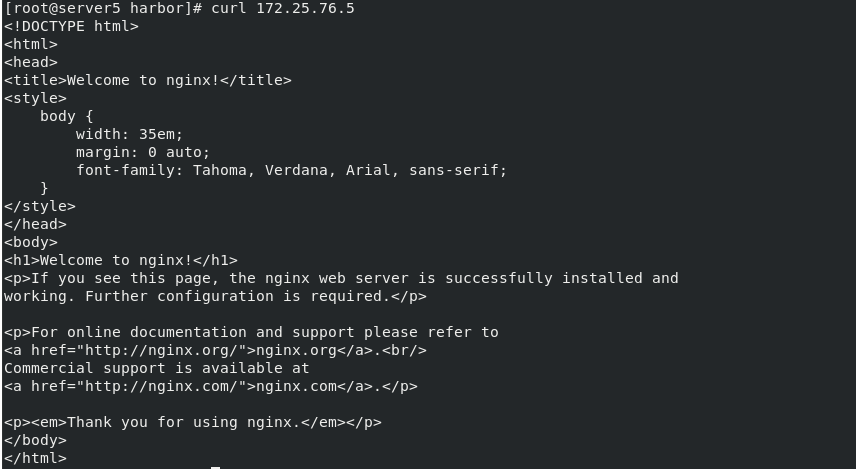

curl 172.25.76.5

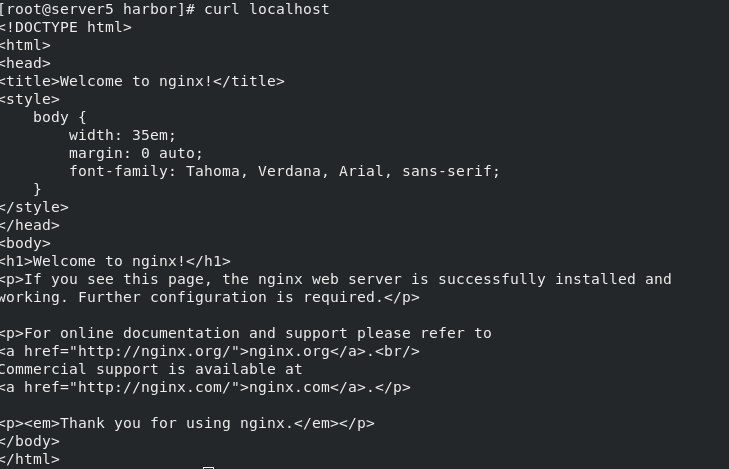

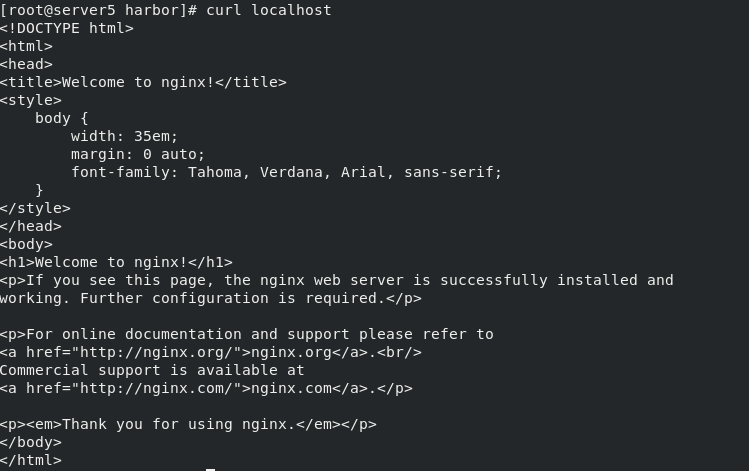

curl localhost

Now flush the rules in the nat table and test again:

iptables -t nat -F
iptables -t nat -nL

curl localhost


It still works, because docker-proxy is still running.


Once the docker-proxy processes are killed as well, access fails:

kill -9 28716 28721    # the docker-proxy PIDs shown by netstat -antlp

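The PIDs can also be taken from `netstat -antlp` instead of being copied by hand. This is a sketch with a hypothetical name, `docker_proxy_pids`. It assumes column 7 holds `PID/Program name` for listening sockets.

```shell
# Hypothetical helper: print the unique PIDs of listening docker-proxy processes
# from `netstat -antlp` output on stdin.
docker_proxy_pids() {
    awk '$1 ~ /^tcp/ && $6 == "LISTEN" && $7 ~ /\/docker-proxy$/ { split($7, a, "/"); print a[1] }' | sort -u
}
```

Usage: `netstat -antlp | docker_proxy_pids | xargs -r kill -9`.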

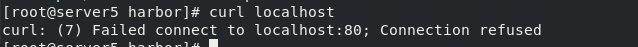

Access it again:

When Docker is restarted, the iptables rules are regenerated:

systemctl restart docker
iptables -t nat -I POSTROUTING -s 172.17.0.0/16 -j MASQUERADE
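To avoid inserting a duplicate MASQUERADE rule, check for it first. This is a sketch: `has_masquerade` is a hypothetical name, and it assumes the default `iptables -nL` columns (target, prot, opt, source, destination).

```shell
# Hypothetical helper: succeed if `iptables -t nat -nL POSTROUTING` output (stdin)
# has a MASQUERADE rule whose source is the given subnet.
has_masquerade() {
    awk -v src="$1" '$1 == "MASQUERADE" && $4 == src { found = 1 } END { exit (found ? 0 : 1) }'
}
```

Usage: `iptables -t nat -nL POSTROUTING | has_masquerade 172.17.0.0/16 || iptables -t nat -I POSTROUTING -s 172.17.0.0/16 -j MASQUERADE`.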

curl 172.25.76.5

The nginx default page is reachable again.

The results show that at least one of the two mechanisms, docker-proxy or the iptables rules, must be in place for the published port to be reachable.

4. Create a macvlan network

Setting up the lab environment:

server5 (the original virtual machine):

cat /etc/yum.repos.d/dvd.repo

[dvd]
name=rhel7.6
baseurl=http://172.25.76.250/rhel7.6
gpgcheck=0
[docker]
name=docker-ce
baseurl=http://172.25.76.250/20
gpgcheck=0

scp /etc/yum.repos.d/dvd.repo root@172.25.76.4:/etc/yum.repos.d/
scp /etc/sysctl.d/docker.conf server4:/etc/sysctl.d/

server4:

yum install docker-ce -y

systemctl enable --now docker

The remaining Docker installation steps are omitted; see the previous blog post.

Add the busyboxplus image:

docker pull radial/busyboxplus

docker tag radial/busyboxplus:latest busyboxplus:latest

docker rmi radial/busyboxplus:latest

4.1. Use the eth1 network card for communication

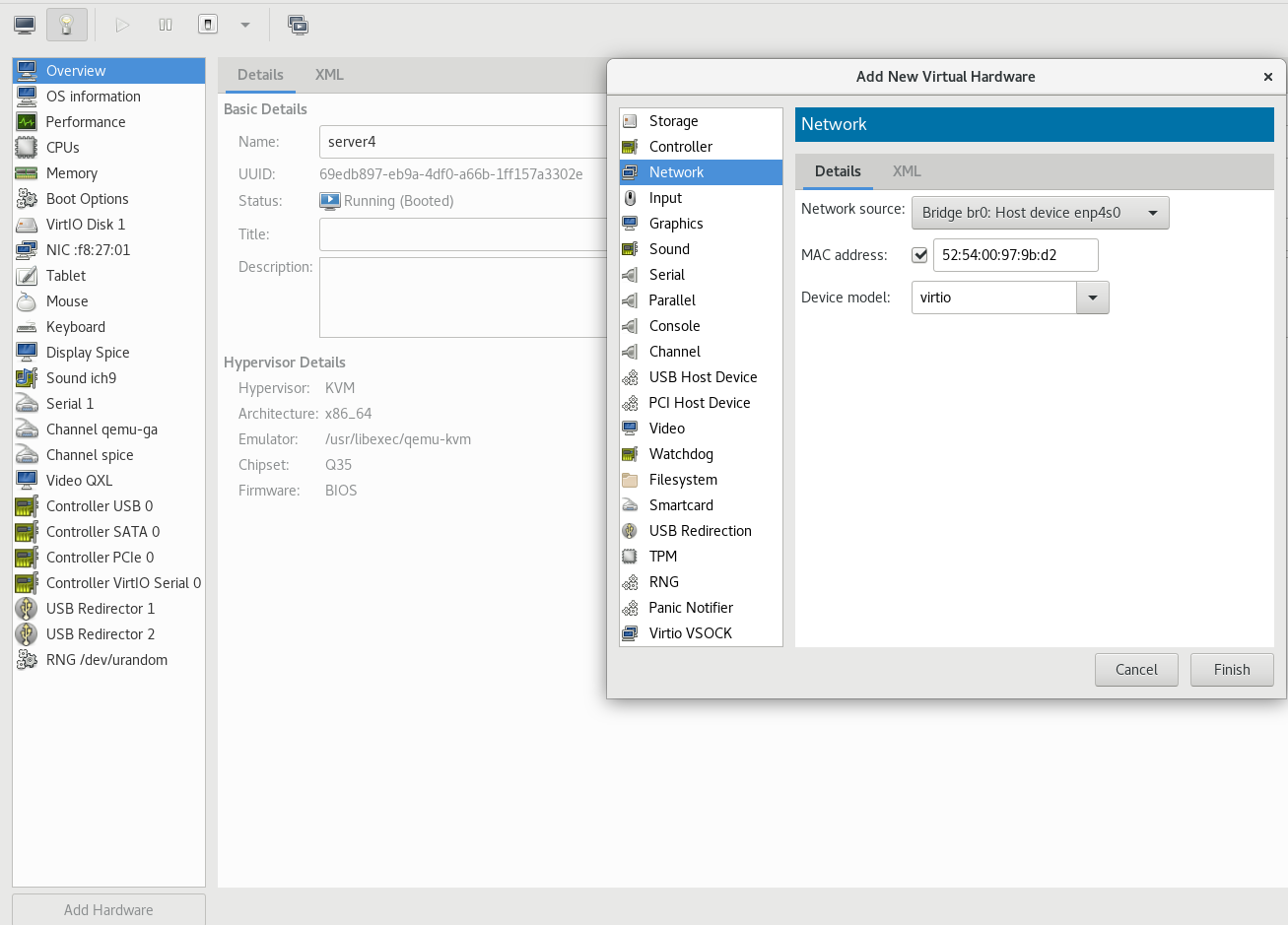

Do the following on both server4 and server5:

Add a second network card (eth1) to the virtual machine:

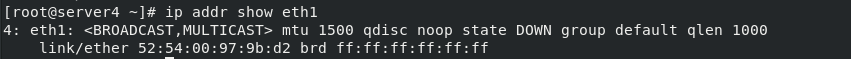

ip addr show eth1 #View ip

cp /etc/sysconfig/network-scripts/ifcfg-eth0 /etc/sysconfig/network-scripts/ifcfg-eth1


vim /etc/sysconfig/network-scripts/ifcfg-eth1

BOOTPROTO=none
ONBOOT=yes
DEVICE=eth1

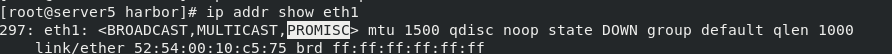

ip link set eth1 promisc on

ifup eth1

ip addr now shows PROMISC among eth1's flags.
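Promiscuous mode matters here because the macvlan driver gives each container its own MAC address on eth1. eth1 must therefore accept frames that are not addressed to its own MAC; in virtual machines the hypervisor may need to allow this too. The sketch below checks the flag. `is_promisc` is a hypothetical name.

```shell
# Hypothetical helper: read `ip link show eth1` (or `ip addr show eth1`) output on stdin
# and succeed if PROMISC appears among the flags inside <...>.
is_promisc() {
    grep -q '<[^>]*PROMISC[^>]*>'
}
```

Usage: `ip link show eth1 | is_promisc || ip link set eth1 promisc on`.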

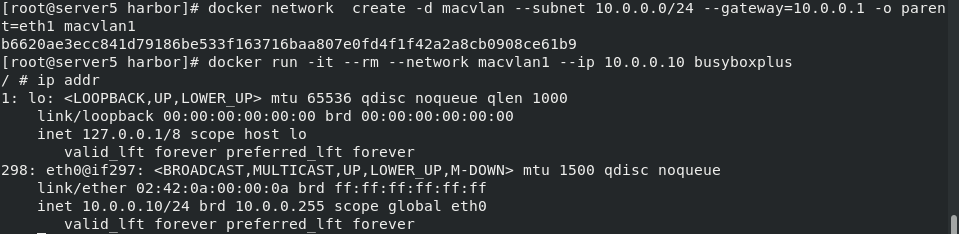

server5:

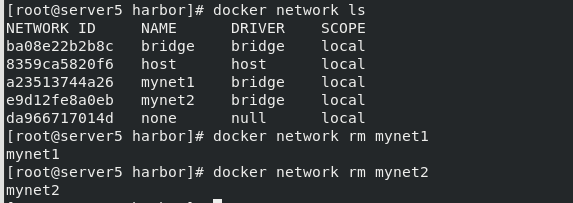

Delete the bridge networks from the previous experiments (such as mynet1), because Docker does not allow a new network whose subnet overlaps an existing one.

Create a macvlan network on server5 and run a container in it:

docker network create -d macvlan --subnet 10.0.0.0/24 --gateway=10.0.0.1 -o parent=eth1 macvlan1
docker run -it --rm --network macvlan1 --ip 10.0.0.10 busyboxplus ip addr

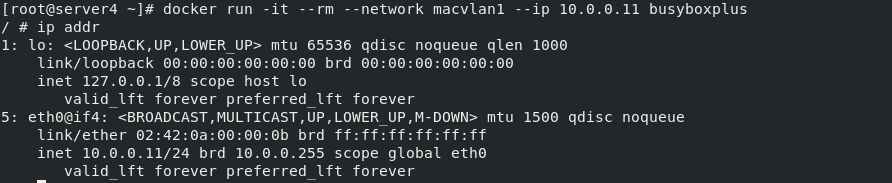

On server4:

Create the same network and a container, just as on server5; only the container IP differs:

docker network create -d macvlan --subnet 10.0.0.0/24 --gateway=10.0.0.1 -o parent=eth1 macvlan1
docker run -it --rm --network macvlan1 --ip 10.0.0.11 busyboxplus ip addr

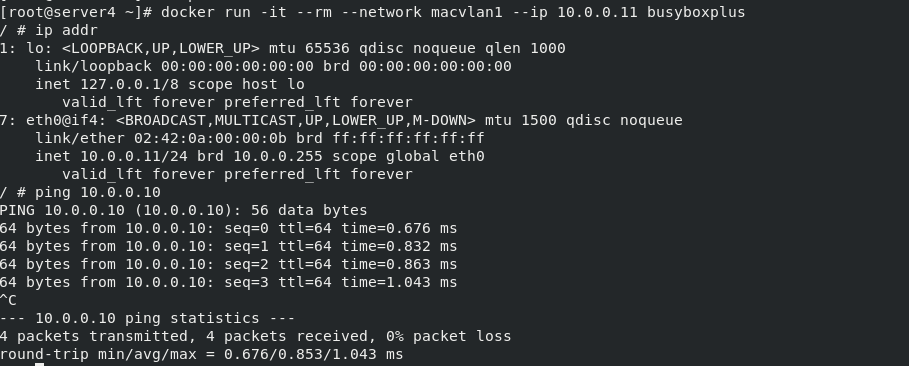

Test:

From a container on server4, ping the container on server5:

server5:

docker run -d --name web1 --network macvlan1 --ip 10.0.0.10 nginx

server4:

docker run -it --rm --network macvlan1 --ip 10.0.0.11 busyboxplus

ping 10.0.0.10

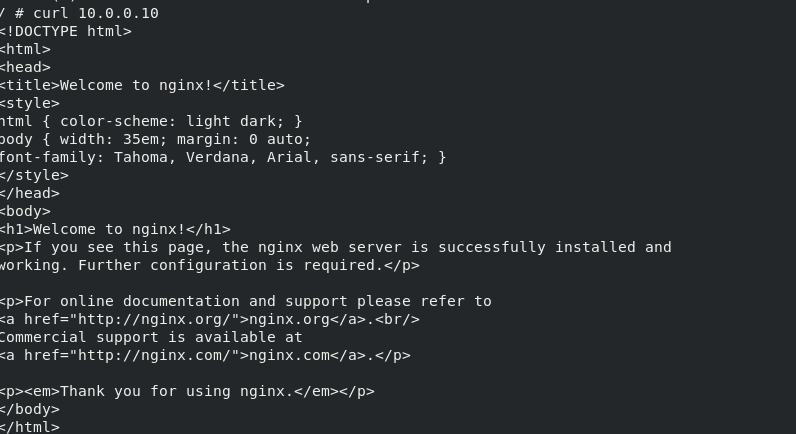

curl 10.0.0.10


success

A VLAN sub-interface can also be used as the macvlan parent:

On server4 and server5:

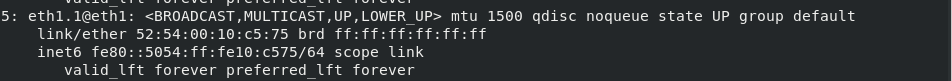

docker network create -d macvlan --subnet 20.0.0.0/24 --gateway 20.0.0.1 -o parent=eth1.1 macvlan2
ip addr    # eth1.1 now exists; Docker creates the sub-interface automatically

Create a container with IP 20.0.0.10 on server5 and one with IP 20.0.0.11 on server4:

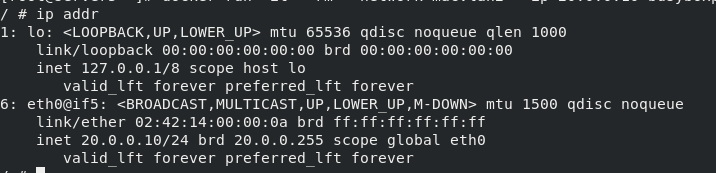

[root@server5 ~]# docker run -it --rm --network macvlan2 --ip 20.0.0.10 busyboxplus
[root@server4 ~]# docker run -it --rm --network macvlan2 --ip 20.0.0.11 busyboxplus

ip addr    # view the IP

server5:

server4:


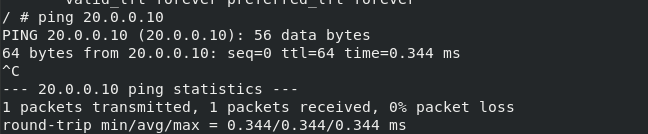

ping 20.0.0.10

success

Conclusion

Containers in different network segments cannot reach each other directly. However, running docker network connect macvlan1 on a container in the 20.0.0.0/24 segment gives it an additional address in the 10.0.0.0/24 segment, after which it can communicate with the containers there.
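Whether two addresses share a segment depends only on the prefix length. The sketch below computes this with POSIX shell arithmetic (IPv4 only). `ip_to_int` and `same_segment` are hypothetical helpers.

```shell
# Hypothetical helper: convert a dotted IPv4 address to an integer.
ip_to_int() {
    old_ifs=$IFS; IFS=.
    set -- $1                    # split the address on dots into $1..$4
    IFS=$old_ifs
    echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# Hypothetical helper: succeed if addresses $1 and $2 are in the same segment
# for prefix length $3 (24 for the /24 networks used above).
same_segment() {
    mask=$(( $3 == 0 ? 0 : (0xFFFFFFFF << (32 - $3)) & 0xFFFFFFFF ))
    [ $(( $(ip_to_int "$1") & mask )) -eq $(( $(ip_to_int "$2") & mask )) ]
}
```

Usage: `same_segment 10.0.0.10 20.0.0.11 24 || echo "needs a shared network (docker network connect)"`.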

About VLAN sub-interfaces:

VLAN sub-interfaces are not physical network devices, but they appear in the system as network interfaces. They have no configuration files of their own. They are created by placing a physical interface into a VLAN, and are named <interface>.<VLAN ID>, for example eth0.1 or eth1.1.

Note: before a VLAN sub-interface is enabled, the associated physical interface must not have any IP address configuration. Any alias sub-interfaces of that physical interface must also be disabled and must not carry IP address configuration.
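The naming convention can be taken apart with shell parameter expansion. The same parts are what iproute2 needs to create a sub-interface by hand. All three helper names are hypothetical, and `make_vlan_iface` needs root. Docker's macvlan driver performs the equivalent step itself when the parent= name contains a dot and the sub-interface does not exist yet.

```shell
# Hypothetical helpers: split a VLAN sub-interface name "<parent>.<VLAN ID>".
vlan_parent() { printf '%s\n' "${1%.*}"; }    # eth1.1 -> eth1
vlan_id()     { printf '%s\n' "${1##*.}"; }   # eth1.1 -> 1

# Hypothetical helper (root only): create and bring up the sub-interface with iproute2.
make_vlan_iface() {
    ip link add link "$(vlan_parent "$1")" name "$1" type vlan id "$(vlan_id "$1")" &&
        ip link set "$1" up
}
```

Usage: `make_vlan_iface eth1.1` is the manual counterpart of `-o parent=eth1.1`.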