Article reference source: javaguide

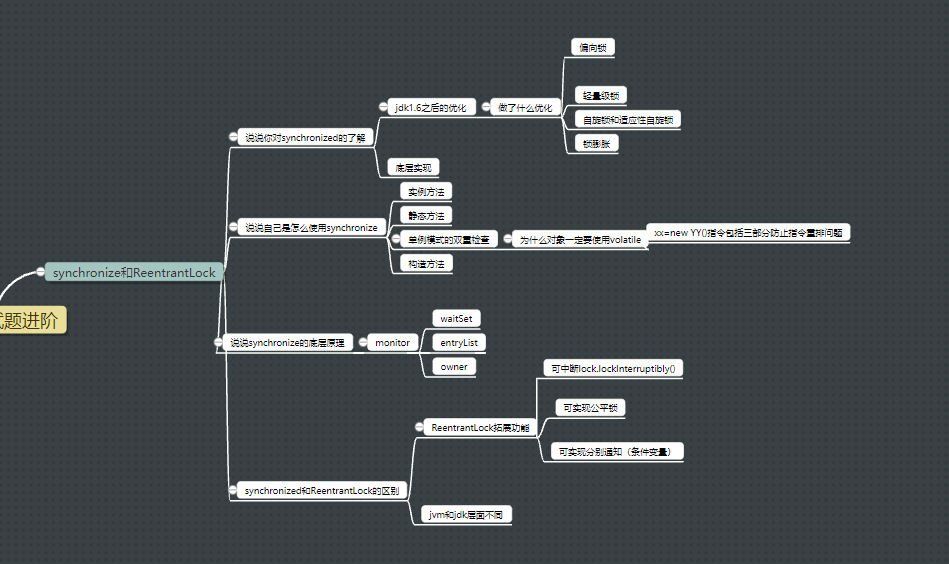

1.synchronized

1.1 what do you know about synchronized?

- Historically a heavyweight lock. Underneath it relies on the object's monitor lock

- Blocking and waking threads is expensive: Java threads are mapped to operating system threads, so each context switch requires a transition from user mode to kernel mode

- Since JDK 1.6 it has been heavily optimized, with biased locking, lightweight locking, spin locks and lock escalation

1.2 how do you use synchronized?

- On an instance method (the lock is the object instance)

- On a static method (the lock is the Class object). Because the two locks are different objects, a synchronized static method and a synchronized instance method are not mutually exclusive

- On a code block (the lock is the object or Class named in the parentheses)

Talk about the double-checked locking singleton?

- Several threads may pass the first null check, but only one at a time can enter the synchronized block. Inside the block the instance is checked a second time before it is created. The first thread creates it; the threads that passed the first check enter the block afterwards, fail the second check, and so never create a second instance.

Why does double-checked locking need volatile on the instance field?

Because xx = new yy() takes three steps:

- Allocate memory

- Initialize the object

- Assign the address to the reference

Without volatile, these steps may be reordered. After memory is allocated, the address may be assigned before initialization runs. If a thread switch happens at that point, another thread sees a non-null reference and obtains an incomplete (uninitialized) object.
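The two checks and the volatile field can be sketched as follows. This is a minimal illustration, not taken from the referenced article; the class name `DclSingleton` is hypothetical.

```java
// Hypothetical class name; a sketch of the double-checked locking pattern.
class DclSingleton {
    // volatile keeps the reference from being published before the constructor has run
    private static volatile DclSingleton instance;

    private DclSingleton() { }

    static DclSingleton getInstance() {
        if (instance == null) {                    // first check, without the lock
            synchronized (DclSingleton.class) {
                if (instance == null) {            // second check, under the lock
                    instance = new DclSingleton();
                }
            }
        }
        return instance;
    }

    public static void main(String[] args) {
        // Both calls return the same object
        System.out.println(getInstance() == getInstance());
    }
}
```

The first check keeps the common path lock-free once the instance exists; the second check is what stops a late thread from creating a second instance.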

1.3 does the construction method need to use synchronized?

No. A constructor cannot even be declared synchronized (it is a compile error), and it does not need to be: each call creates a new object, which is not yet a shared resource.

1.3 talk about the underlying principle of synchronized

- At the jvm level

- The monitorenter instruction acquires the lock on the object whose reference is on the operand stack

- The monitorexit instruction then releases the lock

- Each object can be associated with an ObjectMonitor that keeps a lock counter: 0 means unlocked, and the counter becomes 1 when a thread acquires the monitor. wait/notify operate on this monitor, which is why they cannot be used outside synchronized; doing so throws IllegalMonitorStateException

- A synchronized method has no explicit instructions. It is marked with the ACC_SYNCHRONIZED flag, and the JVM acquires the monitor when the method is invoked

//javac SynchronizedDemo.java compiles the source into SynchronizedDemo.class

//javap -c -s -v -l SynchronizedDemo.class prints the bytecode

public class SynchronizedDemo {

public void method() {

synchronized (this) {

System.out.println("synchronized Code block");

}

}

}

3: monitorenter

4: getstatic #2 // Field java/lang/System.out:Ljava/io/PrintStream;

7: ldc #3 // String synchronized Code block

9: invokevirtual #4 // Method java/io/PrintStream.println:(Ljava/lang/String;)V

12: aload_1

13: monitorexit

Summary: in both forms, synchronized comes down to acquiring the object's monitor
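The link between the monitor and wait/notify can be checked directly. The sketch below (class and method names are hypothetical) calls notify() once without owning the monitor and once inside a synchronized block.

```java
// Hypothetical demo class: notify() requires ownership of the object's monitor.
class MonitorOwnershipDemo {

    // Calls notify() without holding the monitor; returns true if the JVM rejects it.
    static boolean notifyWithoutLockFails() {
        Object lock = new Object();
        try {
            lock.notify();
            return false;
        } catch (IllegalMonitorStateException e) {
            return true;
        }
    }

    // Calls notify() while holding the monitor; returns true if no exception is thrown.
    static boolean notifyWithLockSucceeds() {
        Object lock = new Object();
        synchronized (lock) {
            lock.notify();   // legal: the current thread owns the monitor
        }
        return true;
    }

    public static void main(String[] args) {
        System.out.println("without lock rejected: " + notifyWithoutLockFails());
        System.out.println("with lock accepted: " + notifyWithLockSucceeds());
    }
}
```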

1.4 what optimizations were made after JDK 1.6?

- Biased locking, lightweight locking, spin locks, adaptive spinning, lock escalation, lock elimination and lock coarsening

- Lock states, from lowest to highest: unlocked, biased, lightweight, heavyweight

1.5 talk about the difference between synchronized and ReentrantLock?

- Both are reentrant locks

- synchronized is implemented at the JVM level, while ReentrantLock is implemented at the JDK level (as Java code in java.util.concurrent.locks)

- ReentrantLock adds several features:

- Interruptible waiting, via lock.lockInterruptibly(). A waiting thread can give up. Suppose two threads compete for the same lock and the one that obtains it runs for a long time; another thread can call interrupt() on the waiter, which then stops waiting. With plain lock() this does not work: the waiter still has to wait until it acquires the lock and only then finds out that it was interrupted.

- Fair locking, by passing true to the constructor

- Selective notification, through Condition objects
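Interruptible waiting can be sketched like this. The class and method names are hypothetical; the main thread holds the lock the whole time, so the waiter can only leave lockInterruptibly() through the interrupt.

```java
import java.util.concurrent.atomic.AtomicBoolean;
import java.util.concurrent.locks.ReentrantLock;

// Hypothetical demo class for ReentrantLock.lockInterruptibly().
class InterruptibleLockDemo {

    // Returns true if the waiting thread gave up because it was interrupted.
    static boolean waiterWasInterrupted() throws InterruptedException {
        ReentrantLock lock = new ReentrantLock(true);   // true requests a fair lock
        AtomicBoolean interrupted = new AtomicBoolean(false);

        lock.lock();                                    // the caller holds the lock
        try {
            Thread waiter = new Thread(() -> {
                try {
                    lock.lockInterruptibly();           // blocks: the lock is taken
                    lock.unlock();                      // reached only if it was acquired
                } catch (InterruptedException e) {
                    interrupted.set(true);              // stopped waiting
                }
            });
            waiter.start();
            waiter.interrupt();                         // ask the waiter to give up
            waiter.join();
        } finally {
            lock.unlock();
        }
        return interrupted.get();
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println("waiter interrupted: " + waiterWasInterrupted());
    }
}
```

Had the waiter called lock() instead, join() would never return here, because lock() ignores the interrupt until the lock is acquired.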

2.volatile keyword

2.2 talk about JMM

A thread may copy a variable into its own local (working) memory, such as a register or cache, and operate on that copy, so different threads can see inconsistent values. For example, if two threads read i at the same time and one of them performs i++, the other still works with the stale value of i. Another explanation is the JIT compiler: for a frequently used variable it may keep the value it has already loaded instead of fetching it from memory each time.

2.3 three important features of concurrent programming

- Atomicity

- Visibility

- Ordering

2.4 what is the difference between synchronized and volatile?

- volatile is lighter and performs better, but it cannot guarantee atomicity (for example, i++ on a volatile field is still not atomic)

- volatile can only be applied to variables; synchronized can be applied to methods and code blocks

- volatile solves visibility of a variable between threads; synchronized solves synchronized access to a resource by several threads
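A stop flag is the typical use of volatile for visibility. In this sketch (hypothetical names) the worker spins until another thread clears the flag; if the field were not volatile, the JIT would be allowed to keep reading a cached value and the loop might never end.

```java
// Hypothetical demo class: a volatile flag makes a write visible to a spinning reader.
class VolatileFlagDemo {
    private static volatile boolean running = true;

    // Starts a spinning worker, clears the flag, and reports whether the worker stopped.
    static boolean workerStops() throws InterruptedException {
        running = true;
        Thread worker = new Thread(() -> {
            long spins = 0;
            while (running) {        // re-reads the volatile field on every iteration
                spins++;
            }
        });
        worker.setDaemon(true);      // do not keep the JVM alive if something goes wrong
        worker.start();

        Thread.sleep(50);            // let the worker spin for a moment
        running = false;             // volatile write, visible to the worker
        worker.join(2000);           // wait at most two seconds
        return !worker.isAlive();
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println("worker stopped: " + workerStops());
    }
}
```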

3.ThreadLocal

Talk about the principle of ThreadLocal

After reading many articles, this is how I understand ThreadLocal. Only one ThreadLocal object is created, yet each thread has its own independent value for it. The reason is the threadLocals field of Thread in the source code. The set method of ThreadLocal looks up the current thread's ThreadLocalMap (creating it if necessary) and stores the value there, with the ThreadLocal itself as the key. Even if one ThreadLocal is referenced by many threads, the value each thread gets back is the one it stored in its own ThreadLocalMap. This is the principle of isolation

ThreadLocal.ThreadLocalMap threadLocals = null;//map reference in Thread

//Called by ThreadLocal.set when the thread does not have a map yet

void createMap(Thread t, T firstValue) {

t.threadLocals = new ThreadLocalMap(this, firstValue);

}

//The set method fetches the threadLocals map of thread t (creating it if needed) and stores the value with this ThreadLocal as the key. Different threads use the same key but hold different values

public void set(T value) {

Thread t = Thread.currentThread();

ThreadLocalMap map = getMap(t);

if (map != null)

map.set(this, value);

else

createMap(t, value);

}

import java.text.SimpleDateFormat;

import java.util.Random;

public class ThreadLocalExample implements Runnable{

// As in the JavaGuide example, there is only one ThreadLocal instance, shared by all threads.

private static final ThreadLocal<SimpleDateFormat> formatter = ThreadLocal.withInitial(() -> new SimpleDateFormat("yyyyMMdd HHmm"));

public static void main(String[] args) throws InterruptedException {

ThreadLocalExample obj = new ThreadLocalExample();

for(int i=0 ; i<10; i++){

Thread t = new Thread(obj, ""+i);

Thread.sleep(new Random().nextInt(1000));

t.start();

}

}

@Override

public void run() {

System.out.println("Thread Name= "+Thread.currentThread().getName()+" default Formatter = "+formatter.get().toPattern());

try {

Thread.sleep(new Random().nextInt(1000));

} catch (InterruptedException e) {

e.printStackTrace();

}

//formatter pattern is changed here by thread, but it won't reflect to other threads

formatter.set(new SimpleDateFormat());

System.out.println("Thread Name= "+Thread.currentThread().getName()+" formatter = "+formatter.get().toPattern());

}

}

4. Thread pool

4.1 why use thread pool

- Lower resource consumption, because threads are reused

- Faster response: a task can run immediately, without waiting for a thread to be created

- Better manageability of threads

4.2 difference between Runnable and Callable

- Runnable cannot return a result or throw a checked exception, but Callable can do both
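A small sketch of both Callable abilities, using FutureTask to run the Callable on a plain thread. The class and method names are hypothetical.

```java
import java.io.IOException;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.FutureTask;

// Hypothetical demo class: a Callable returns a value and may throw a checked exception.
class CallableDemo {

    // Runs a Callable on its own thread and returns its result.
    static int callableResult() throws Exception {
        Callable<Integer> sum = () -> 1 + 2;
        FutureTask<Integer> task = new FutureTask<>(sum);
        new Thread(task).start();
        return task.get();                       // blocks until the result is ready
    }

    // Runs a Callable that throws a checked exception and reports the type of the cause.
    static String callableFailure() throws InterruptedException {
        Callable<Integer> failing = () -> {
            throw new IOException("simulated failure");
        };
        FutureTask<Integer> task = new FutureTask<>(failing);
        new Thread(task).start();
        try {
            task.get();
            return "no exception";
        } catch (ExecutionException e) {
            // get() wraps the original exception in an ExecutionException
            return e.getCause().getClass().getSimpleName();
        }
    }

    public static void main(String[] args) throws Exception {
        System.out.println(callableResult());
        System.out.println(callableFailure());
    }
}
```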

4.3 difference between execute and submit

- execute does not return a result, but submit does

- With execute the caller cannot observe the state of the task. submit returns a Future object, through which the caller can query the task's state and obtain its result

public Future<?> submit(Runnable task) {//Create FutureTask and execute

if (task == null) throw new NullPointerException();

RunnableFuture<Void> ftask = newTaskFor(task, null);

execute(ftask);

return ftask;

}

protected <T> RunnableFuture<T> newTaskFor(Runnable runnable, T value) {

return new FutureTask<T>(runnable, value);

}

4.4 how to create a thread pool

Why is creating pools through Executors not recommended?

- FixedThreadPool and SingleThreadExecutor use an unbounded queue, so tasks can pile up without limit and cause an out-of-memory error (OOM)

- CachedThreadPool and ScheduledThreadPool allow up to Integer.MAX_VALUE threads, so they can create a huge number of threads, each with its own stack, and also run out of memory

Method 1

Call the ThreadPoolExecutor constructor directly

Method 2

Use the Executors factory methods, which in fact construct a ThreadPoolExecutor internally
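A minimal sketch of constructing the pool explicitly, so that both the thread count and the queue are bounded. The sizes (2 core threads, 4 maximum, 100 queued tasks) and the class name are illustrative assumptions, not recommendations from the article.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

// Hypothetical factory class: every limit of the pool is stated explicitly.
class BoundedPoolFactory {

    static ThreadPoolExecutor newBoundedPool() {
        return new ThreadPoolExecutor(
                2,                                      // corePoolSize
                4,                                      // maximumPoolSize
                60L, TimeUnit.SECONDS,                  // keep-alive for idle non-core threads
                new ArrayBlockingQueue<>(100),          // bounded work queue
                new ThreadPoolExecutor.AbortPolicy());  // reject when threads and queue are full
    }

    public static void main(String[] args) {
        ThreadPoolExecutor pool = newBoundedPool();
        pool.execute(() -> System.out.println("running in " + Thread.currentThread().getName()));
        pool.shutdown();
    }
}
```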

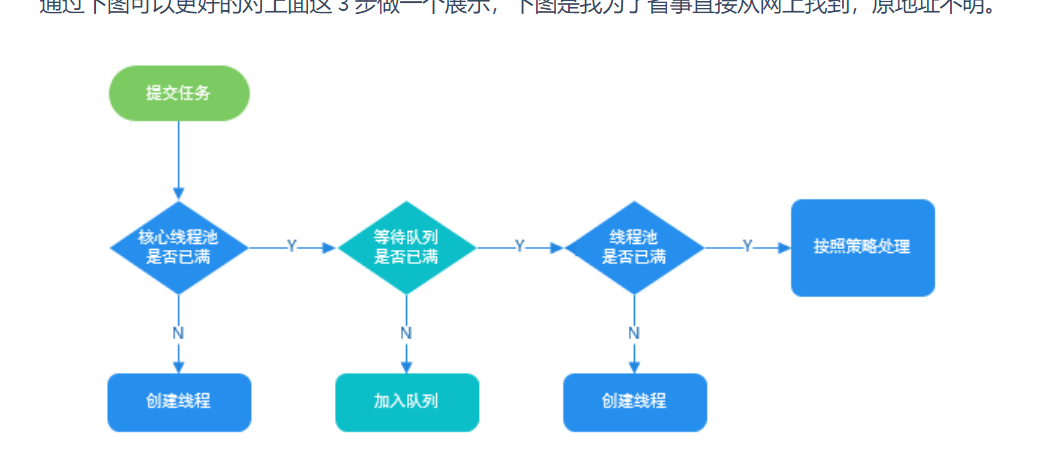

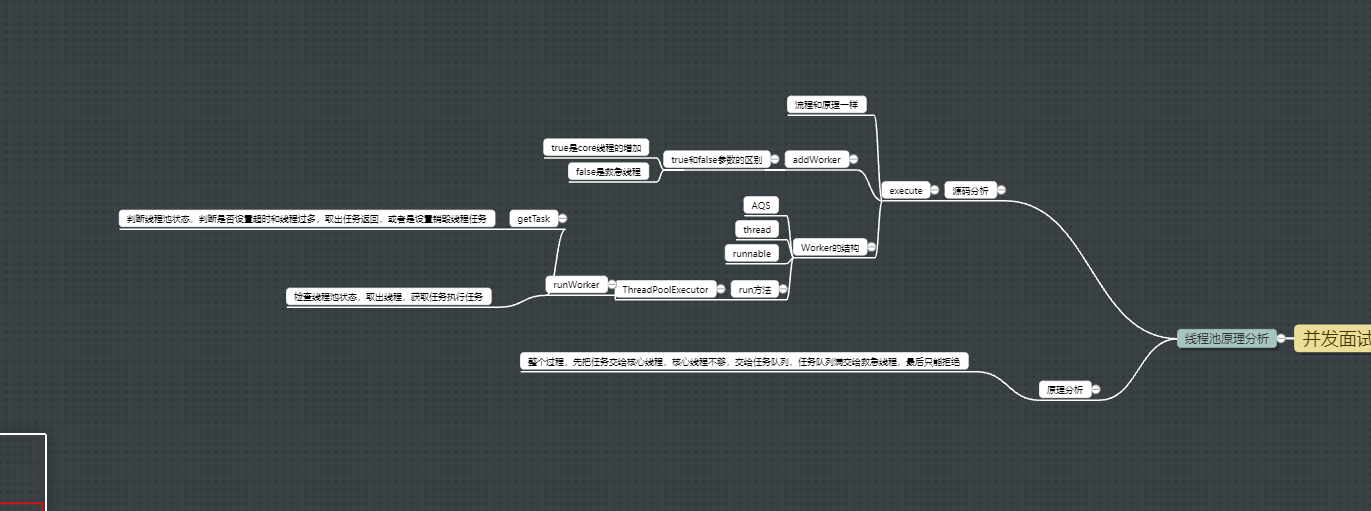

4.7 principle analysis of thread pool

A task is handed to a Worker from the workers set for execution. If no core thread is available, the task is added to the blocking queue. If the blocking queue is full, the pool creates an extra (non-core) thread, up to maximumPoolSize

execute

//ctl is a 32-bit int: the top 3 bits hold the thread pool state and the low 29 bits hold the number of running workers

private final AtomicInteger ctl = new AtomicInteger(ctlOf(RUNNING, 0));

//Blocking queue that stores pending tasks

private final BlockingQueue<Runnable> workQueue;

//The collection of workers, stored in a HashSet

private final HashSet<Worker> workers = new HashSet<Worker>();

//Largest number of workers the pool has ever reached

private int largestPoolSize;

public void execute(Runnable command) {

//A null task is rejected with a NullPointerException

if (command == null)

throw new NullPointerException();

//ctl packs the pool run state and the worker count into one int

int c = ctl.get();

//If fewer than corePoolSize workers are running

if (workerCountOf(c) < corePoolSize) {

//Start a new core worker with this task as its first task

if (addWorker(command, true))

return;

c = ctl.get();

}

//Otherwise, if the pool is still running, try to put the task in the queue

if (isRunning(c) && workQueue.offer(command)) {

int recheck = ctl.get();

//Recheck: if the pool stopped running in the meantime, remove the task and run the rejection policy

if (! isRunning(recheck) && remove(command))

reject(command);

//If no worker is left to serve the queue

else if (workerCountOf(recheck) == 0)

//Start a non-core worker (false means the bound is maximumPoolSize). Its first task is null, so it takes work straight from the queue.

addWorker(null, false);

}

//Queue is full (or the pool is not running): try a non-core worker. If it cannot be created, run the rejection policy

else if (!addWorker(command, false))

reject(command);

}

- Order of checks: core threads first, then the queue, and finally an extra (non-core) thread; if none of these works, the task is rejected
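The order "core thread, queue, extra thread, reject" can be observed with a deliberately tiny pool. In this sketch (hypothetical names) the pool has 1 core thread, at most 2 threads and room for 1 queued task, and every task blocks until released, so the fourth submission has nowhere to go.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.RejectedExecutionException;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

// Hypothetical demo class: records "workers/queued" after each submission.
class PoolFlowDemo {

    static String trace() throws InterruptedException {
        CountDownLatch release = new CountDownLatch(1);
        Runnable blocker = () -> {
            try {
                release.await();                       // keep the worker busy
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        };
        // core = 1, max = 2, queue capacity = 1, default rejection policy (AbortPolicy)
        ThreadPoolExecutor pool = new ThreadPoolExecutor(
                1, 2, 1L, TimeUnit.SECONDS, new ArrayBlockingQueue<>(1));
        StringBuilder trace = new StringBuilder();
        try {
            for (int i = 0; i < 4; i++) {
                try {
                    pool.execute(blocker);
                    trace.append(pool.getPoolSize()).append('/')
                         .append(pool.getQueue().size());
                } catch (RejectedExecutionException e) {
                    trace.append("rejected");
                }
                if (i < 3) trace.append(' ');
            }
        } finally {
            release.countDown();                       // let the blocked tasks finish
            pool.shutdown();
            pool.awaitTermination(5, TimeUnit.SECONDS);
        }
        return trace.toString();
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(trace());   // prints "1/0 1/1 2/1 rejected"
    }
}
```

The first task starts the core worker, the second waits in the queue, the third forces a second worker because the queue is full, and the fourth is rejected.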

addWorker source code

- Check the state of the thread pool. If it is SHUTDOWN or STOP, return false immediately (the one exception: a SHUTDOWN pool with a non-empty queue may still add a worker that has no first task, to drain the queue)

- Otherwise increment the worker count with CAS in a loop. If the CAS fails, re-read the state first. If the run state has changed, go back to the outer for loop and check again; if only the count changed, retry the inner loop.

- Then create the Worker, add it to the workers set under mainLock, and start its thread once it has been added (this is where the thread is started)

- The t in the code is the thread held by the Worker, so tasks are executed by the Worker's own thread.

private boolean addWorker(Runnable firstTask, boolean core) {

retry:

for (;;) {

//Read the packed control value

int c = ctl.get();

//Extract the pool run state

int rs = runStateOf(c);

//A pool that is SHUTDOWN or beyond accepts no new worker, unless it is SHUTDOWN, firstTask is null and the queue is not empty

if (rs >= SHUTDOWN &&

! (rs == SHUTDOWN &&

firstTask == null &&

! workQueue.isEmpty()))

return false;

//CAS in a loop to increment the worker count

for (;;) {

int wc = workerCountOf(c);

if (wc >= CAPACITY ||

wc >= (core ? corePoolSize : maximumPoolSize))

return false;

if (compareAndIncrementWorkerCount(c))//Worker count incremented: leave both loops

break retry;

//Re-read ctl. If the run state has changed, start again from the outer loop

c = ctl.get(); // Re-read ctl

if (runStateOf(c) != rs)

continue retry;

// else CAS failed due to workerCount change; retry inner loop

}

}

boolean workerStarted = false;

boolean workerAdded = false;

Worker w = null;

try {

//Create the worker; its thread comes from the ThreadFactory

w = new Worker(firstTask);

final Thread t = w.thread;

if (t != null) {

final ReentrantLock mainLock = this.mainLock;

mainLock.lock();

try {

// Recheck while holding lock.

// Back out on ThreadFactory failure or if

// shut down before lock acquired.

int rs = runStateOf(ctl.get());

if (rs < SHUTDOWN ||

(rs == SHUTDOWN && firstTask == null)) {

if (t.isAlive()) // precheck that t is startable

throw new IllegalThreadStateException();

workers.add(w);

int s = workers.size();

if (s > largestPoolSize)

largestPoolSize = s;

//Worker registered in the set

workerAdded = true;

}

} finally {

mainLock.unlock();

}

//Once the worker has been added, start its thread

if (workerAdded) {

t.start();

//Thread started successfully

workerStarted = true;

}

}

} finally {

//If the worker did not start, roll back with addWorkerFailed

if (! workerStarted)

addWorkerFailed(w);

}

return workerStarted;

}
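Both execute and addWorker decode ctl through runStateOf and workerCountOf. The packing can be reproduced in isolation; the constants and helpers below mirror the JDK 8 definitions as far as I recall them, and are copied into a hypothetical standalone class because the originals are private to ThreadPoolExecutor.

```java
// Hypothetical standalone copy of the ctl bit layout: 3 state bits + 29 count bits.
class CtlPackingDemo {
    static final int COUNT_BITS = Integer.SIZE - 3;       // 29
    static final int CAPACITY = (1 << COUNT_BITS) - 1;    // low 29 bits set

    // Run states live in the top 3 bits and are ordered numerically
    static final int RUNNING  = -1 << COUNT_BITS;
    static final int SHUTDOWN =  0 << COUNT_BITS;
    static final int STOP     =  1 << COUNT_BITS;

    static int ctlOf(int runState, int workerCount) { return runState | workerCount; }
    static int runStateOf(int c)                    { return c & ~CAPACITY; }
    static int workerCountOf(int c)                 { return c & CAPACITY; }

    public static void main(String[] args) {
        int c = ctlOf(RUNNING, 5);
        System.out.println(workerCountOf(c));             // 5
        System.out.println(runStateOf(c) == RUNNING);     // true
    }
}
```

Because the states are ordered (RUNNING is negative, SHUTDOWN is 0, STOP is positive), a test such as rs >= SHUTDOWN covers every state from SHUTDOWN onward with one comparison.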

Structure of Worker

- thread is the internal thread that runs the tasks. It is started in addWorker, which execute calls

- Worker extends AQS to act as a simple non-reentrant lock. The lock is held while a task runs, so the pool can tell busy workers from idle ones and interrupt only the idle ones

- It also implements Runnable

- The run method calls runWorker and passes the worker itself, which serves as the lock

private final class Worker

extends AbstractQueuedSynchronizer

implements Runnable

{

/**

* This class will never be serialized, but we provide a

* serialVersionUID to suppress a javac warning.

*/

private static final long serialVersionUID = 6138294804551838833L;

/** Thread this worker is running in. Null if factory fails. */

//The thread used to execute the task

final Thread thread;

/** Initial task to run. Possibly null. */

//Stored tasks currently ready for execution

Runnable firstTask;

/** Per-thread task counter */

//Total number of tasks completed

volatile long completedTasks;

Worker(Runnable firstTask) {

setState(-1); // inhibit interrupts until runWorker

this.firstTask = firstTask;

this.thread = getThreadFactory().newThread(this);

}

public void run() {

//Method of ThreadPoolExecutor

runWorker(this);

}

// ... AQS method implementations omitted

}

The runWorker method of ThreadPoolExecutor

task.run() is called directly instead of starting a new thread, because the caller is already the worker's own thread

- It runs after the worker thread starts, allows the thread to be interrupted, and keeps looking for tasks to execute in a loop

final void runWorker(Worker w) {

//Get current thread

Thread wt = Thread.currentThread();

Runnable task = w.firstTask;

w.firstTask = null;

//Allow to be interrupted

w.unlock(); // allow interrupts

boolean completedAbruptly = true;

try {

//Run firstTask if there is one; otherwise take a task from the queue. The loop ends when getTask() returns null

while (task != null || (task = getTask()) != null) {

//Hold the worker lock while the task runs. shutdown() tries to take the same lock before interrupting, so a worker that is executing a task is not interrupted

w.lock();

if ((runStateAtLeast(ctl.get(), STOP) ||

(Thread.interrupted() &&

runStateAtLeast(ctl.get(), STOP))) &&

!wt.isInterrupted())

wt.interrupt();

try {

//Hook that runs before the task

beforeExecute(wt, task);

Throwable thrown = null;

try {

//Direct execution of tasks

task.run();

} catch (RuntimeException x) {

thrown = x; throw x;

} catch (Error x) {

thrown = x; throw x;

} catch (Throwable x) {

thrown = x; throw new Error(x);

} finally {

//Hook that runs after the task

afterExecute(task, thrown);

}

} finally {

task = null;

w.completedTasks++;

w.unlock();

}

}

completedAbruptly = false;

} finally {

processWorkerExit(w, completedAbruptly);

}

}
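beforeExecute and afterExecute are protected hooks that a subclass can override. A minimal sketch, with a hypothetical subclass that records the order in which the hooks and the task run:

```java
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

// Hypothetical subclass: logs the hooks that runWorker calls around each task.
class HookedPool extends ThreadPoolExecutor {
    final List<String> events = new CopyOnWriteArrayList<>();

    HookedPool() {
        super(1, 1, 0L, TimeUnit.SECONDS, new LinkedBlockingQueue<>());
    }

    @Override
    protected void beforeExecute(Thread t, Runnable r) {
        super.beforeExecute(t, r);
        events.add("before");
    }

    @Override
    protected void afterExecute(Runnable r, Throwable thrown) {
        super.afterExecute(r, thrown);
        events.add(thrown == null ? "after" : "after with " + thrown);
    }

    // Runs one task and returns the recorded event order.
    static List<String> demo() throws InterruptedException {
        HookedPool pool = new HookedPool();
        pool.execute(() -> pool.events.add("task"));
        pool.shutdown();
        pool.awaitTermination(5, TimeUnit.SECONDS);
        return pool.events;
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(demo());   // [before, task, after]
    }
}
```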

getTask

- Check the pool state: return null if the pool is STOP, or SHUTDOWN with an empty queue

- Then read the worker count. If core threads are allowed to time out (allowCoreThreadTimeOut), or there are more workers than corePoolSize, the task is fetched with a timed poll(keepAliveTime) instead of take(). When that poll times out, getTask returns null and the worker is destroyed.

private Runnable getTask() {

boolean timedOut = false; // Did the last poll() time out?

for (;;) {

//Get status

int c = ctl.get();

int rs = runStateOf(c);

// Check if queue empty only if necessary.

//Return null if the pool is STOP, or SHUTDOWN with an empty queue

if (rs >= SHUTDOWN && (rs >= STOP || workQueue.isEmpty())) {

decrementWorkerCount();

return null;

}

int wc = workerCountOf(c);

// Are workers subject to culling?

//Timed waiting applies if core threads may time out or the worker count exceeds corePoolSize

boolean timed = allowCoreThreadTimeOut || wc > corePoolSize;

//If there are too many workers, or the previous timed poll expired, give up this worker (as long as another worker remains or the queue is empty)

if ((wc > maximumPoolSize || (timed && timedOut))

&& (wc > 1 || workQueue.isEmpty())) {

//Decrement the worker count with CAS; returning null makes the worker exit

if (compareAndDecrementWorkerCount(c))

return null;

continue;

}

try {

//Timed poll if this worker may be culled, blocking take otherwise. If no task arrives within keepAliveTime, poll returns null and the worker exits on the next iteration

Runnable r = timed ?

workQueue.poll(keepAliveTime, TimeUnit.NANOSECONDS) :

workQueue.take();

if (r != null)

return r;

timedOut = true;

} catch (InterruptedException retry) {

timedOut = false;

}

}

}

- In short, execute has three steps: check the pool state, create a worker, start its thread. The worker then loops, taking tasks from the queue. Once the core threads are all busy, new tasks go to the queue first. If the queue is full, the pool tries to create an extra (non-core) thread. If that is not possible either, the rejection policy runs.

- After finishing a task, the worker goes back to waiting for the next one. If core-thread timeout is enabled, or there are more workers than corePoolSize, it waits on the queue with a keepAliveTime timeout. When the wait times out, the worker is destroyed

- A Worker is both a lock and a Runnable.
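The timeout path in getTask can be observed from outside. This sketch (hypothetical names, with an arbitrary 50 ms keep-alive) enables allowCoreThreadTimeOut, runs one task, and then polls until the idle worker has been destroyed.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

// Hypothetical demo class: an idle worker exits once its timed poll expires.
class IdleTimeoutDemo {

    // Returns the pool size observed after the worker has been idle for a while.
    static int poolSizeAfterIdle() throws InterruptedException {
        ThreadPoolExecutor pool = new ThreadPoolExecutor(
                1, 1, 50L, TimeUnit.MILLISECONDS, new LinkedBlockingQueue<>());
        pool.allowCoreThreadTimeOut(true);        // core threads use the timed poll too

        CountDownLatch done = new CountDownLatch(1);
        pool.execute(done::countDown);
        done.await();                             // the task has run

        // Poll for up to about five seconds until the idle worker is gone
        for (int i = 0; i < 100 && pool.getPoolSize() > 0; i++) {
            Thread.sleep(50);
        }
        int size = pool.getPoolSize();
        pool.shutdown();
        return size;
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println("pool size after idle: " + poolSizeAfterIdle());
    }
}
```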

5. Atomic

Talk about the principle of the atomic classes

- They combine CAS, a volatile field and native methods, so that a read always sees the latest value and an update is a single atomic operation

What is wrong with an ordinary int?

- i++ is actually several instructions (read, add, write back). If a thread switch happens in between, the instructions of two threads interleave and the final result is wrong
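The difference can be seen by hammering both kinds of counter from several threads. A sketch with hypothetical names: the AtomicInteger total is always exact, while the plain int total may come out lower because concurrent increments can overwrite each other.

```java
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical demo class: atomic increments versus plain i++ under contention.
class CounterDemo {

    // Starts `threads` threads that each call `increment` `perThread` times.
    static void hammer(int threads, int perThread, Runnable increment)
            throws InterruptedException {
        Thread[] workers = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            workers[i] = new Thread(() -> {
                for (int j = 0; j < perThread; j++) {
                    increment.run();
                }
            });
            workers[i].start();
        }
        for (Thread t : workers) {
            t.join();
        }
    }

    // CAS-based increments: no update is lost.
    static int atomicCount(int threads, int perThread) throws InterruptedException {
        AtomicInteger counter = new AtomicInteger();
        hammer(threads, perThread, counter::incrementAndGet);
        return counter.get();
    }

    // Plain read-add-write: updates can be lost, so the result is not guaranteed.
    static int plainCount(int threads, int perThread) throws InterruptedException {
        int[] counter = new int[1];
        hammer(threads, perThread, () -> counter[0]++);
        return counter[0];
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println("atomic: " + atomicCount(4, 100_000));
        System.out.println("plain:  " + plainCount(4, 100_000));
    }
}
```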

Classification

Basic types

- AtomicInteger

- AtomicBoolean

Arrays

- AtomicIntegerArray

References (atomic assignment of object references)

- AtomicReference

Field updaters (atomic updates of a field of an object)

- AtomicIntegerFieldUpdater

To use it, call newUpdater with the class of the object and the name of the field. To modify the field, call a method on the updater and pass in the object to be modified. The field must be a volatile int that the caller can access

import java.util.concurrent.atomic.AtomicIntegerFieldUpdater;

class AtomicIntegerTest {

public static void main(String[] args) {

AtomicIntegerFieldUpdater<User> user=AtomicIntegerFieldUpdater.newUpdater(User.class,"age");

User user1 = new User("123", 23);

System.out.println(user.getAndIncrement(user1));

System.out.println(user.get(user1));

}

}

class User {

private String name;

public volatile int age;

public User(String name, int age) {

super();

this.name = name;

this.age = age;

}

public String getName() {

return name;

}

public void setName(String name) {

this.name = name;

}

public int getAge() {

return age;

}

public void setAge(int age) {

this.age = age;

}

}

How is the ABA problem solved?

- An atomic class detects modification by other threads by comparing the current value with the expected old value. The one case it cannot detect is this: thread 1 wants to change A to B, but before it does, thread 2 runs and changes A to B and then B back to A. When thread 1 resumes, its CAS from A to B succeeds, because only values are compared and the intermediate changes leave no trace, so thread 1 wrongly concludes that nothing happened. The usual fix is to pair the value with a version stamp, as AtomicStampedReference does.

Where does this problem matter in practice?

Take a promotion in which every card with a balance below 100 yuan receives a one-off bonus of 20 yuan. The boss credits the accounts with CAS in a loop. If, right after an account is credited, the user happens to spend 20 yuan, the balance is back to its old value and the account is rewarded again. The boss loses a lot.
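A version stamp makes the hidden A to B to A change visible. The sketch below (hypothetical names) replays that sequence on a single thread for clarity, first with AtomicReference and then with AtomicStampedReference.

```java
import java.util.concurrent.atomic.AtomicReference;
import java.util.concurrent.atomic.AtomicStampedReference;

// Hypothetical demo class: plain CAS misses an A -> B -> A change, stamped CAS does not.
class AbaDemo {

    // Returns true: the stale CAS succeeds because only the value is compared.
    static boolean plainCasAfterAba() {
        AtomicReference<String> ref = new AtomicReference<>("A");
        String seen = ref.get();                 // "thread 1" reads A

        ref.compareAndSet("A", "B");             // "thread 2" changes A to B
        ref.compareAndSet("B", "A");             // ... and back to A

        return ref.compareAndSet(seen, "C");     // thread 1 does not notice
    }

    // Returns false: the stamp moved from 0 to 2, so the stale CAS is refused.
    static boolean stampedCasAfterAba() {
        AtomicStampedReference<String> ref = new AtomicStampedReference<>("A", 0);
        int[] stampHolder = new int[1];
        String seen = ref.get(stampHolder);      // "thread 1" reads A with stamp 0
        int seenStamp = stampHolder[0];

        ref.compareAndSet("A", "B", 0, 1);       // "thread 2" changes A to B
        ref.compareAndSet("B", "A", 1, 2);       // ... and back to A, stamp is now 2

        return ref.compareAndSet(seen, "C", seenStamp, seenStamp + 1);
    }

    public static void main(String[] args) {
        System.out.println("plain CAS succeeded:   " + plainCasAfterAba());
        System.out.println("stamped CAS succeeded: " + stampedCasAfterAba());
    }
}
```

Both classes compare references with ==, which is why the sketch uses string literals rather than freshly created objects.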

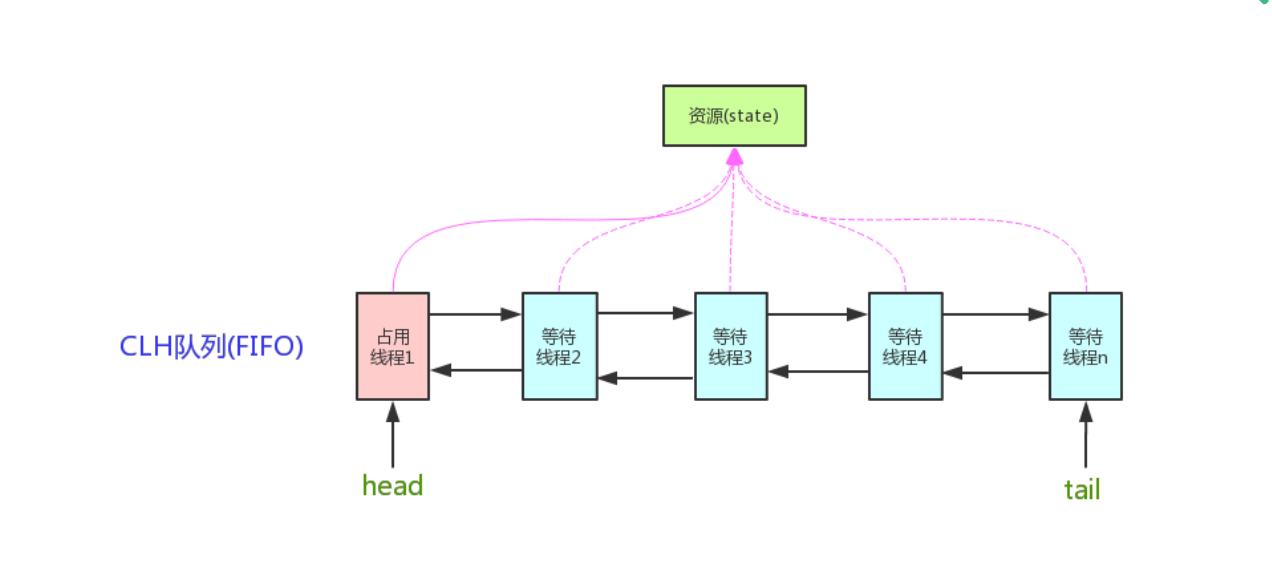

6.AQS

6.1 talk about the principle of AQS

If the requested shared resource is free, the requesting thread is set as the current owner thread and the resource is marked as locked. If the resource is occupied, the other threads are placed in a wait queue and block until it is released

6.2 how does AQS share resources?

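- Exclusive: only one thread can hold the resource at a time, for example ReentrantLock

- Shared: several threads can hold it at the same time, for example CountDownLatch and Semaphore (CyclicBarrier is built on top of ReentrantLock and Condition)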

The underlying implementation of AQS?

It uses the template method pattern. A subclass overrides the following hooks:

- isHeldExclusively(): whether the current thread holds the resource exclusively

- tryAcquire(int): exclusive mode; try to acquire the resource, returning true on success

- tryRelease(int): exclusive mode; try to release the resource

- tryAcquireShared(int): shared mode; try to acquire the resource

- tryReleaseShared(int): shared mode; try to release the resource

The other methods are final and cannot be overridden by subclasses
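A custom synchronizer only has to fill in those hooks. The sketch below is a simple non-reentrant mutex in the spirit of the example in the AQS Javadoc; the class name `SimpleMutex` and the helper `guardedCount` are hypothetical.

```java
import java.util.concurrent.locks.AbstractQueuedSynchronizer;

// Hypothetical non-reentrant mutex: state 0 means free, state 1 means held.
class SimpleMutex {

    private static final class Sync extends AbstractQueuedSynchronizer {
        @Override
        protected boolean isHeldExclusively() {
            return getState() == 1 && getExclusiveOwnerThread() == Thread.currentThread();
        }

        @Override
        protected boolean tryAcquire(int acquires) {
            if (compareAndSetState(0, 1)) {              // CAS from free to held
                setExclusiveOwnerThread(Thread.currentThread());
                return true;
            }
            return false;                                // AQS queues the caller
        }

        @Override
        protected boolean tryRelease(int releases) {
            if (getState() == 0) {
                throw new IllegalMonitorStateException();
            }
            setExclusiveOwnerThread(null);
            setState(0);                                 // AQS wakes the next waiter
            return true;
        }
    }

    private final Sync sync = new Sync();

    void lock()       { sync.acquire(1); }               // template method, calls tryAcquire
    boolean tryLock() { return sync.tryAcquire(1); }
    void unlock()     { sync.release(1); }               // template method, calls tryRelease

    // Increments a plain int from several threads, guarded by the mutex.
    static int guardedCount(int threads, int perThread) throws InterruptedException {
        SimpleMutex mutex = new SimpleMutex();
        int[] counter = new int[1];
        Thread[] workers = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            workers[i] = new Thread(() -> {
                for (int j = 0; j < perThread; j++) {
                    mutex.lock();
                    try {
                        counter[0]++;
                    } finally {
                        mutex.unlock();
                    }
                }
            });
            workers[i].start();
        }
        for (Thread t : workers) {
            t.join();
        }
        return counter[0];
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(guardedCount(4, 10_000));     // 40000
    }
}
```

Queuing, blocking and waking threads are all handled by the final acquire and release methods of AQS; the subclass only decides what "acquired" means.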

6.3 summary of AQS components

- Semaphore: limits how many threads can access a resource at the same time

- CountDownLatch: a countdown latch that makes one or more threads wait until a set of other threads have finished

- CyclicBarrier: a cyclic barrier; similar to CountDownLatch but more powerful, and it can be reused
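Semaphore and CountDownLatch can be combined in a few lines. In this sketch (hypothetical names, with an arbitrary 10 ms of simulated work) the semaphore caps how many threads are inside the guarded section at once, and the latch lets the caller wait for all of them.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.Semaphore;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical demo class: measures the highest concurrency seen under a Semaphore.
class SyncToolsDemo {

    static int maxConcurrent(int threads, int permits) throws InterruptedException {
        Semaphore semaphore = new Semaphore(permits);
        CountDownLatch done = new CountDownLatch(threads);
        AtomicInteger active = new AtomicInteger();
        AtomicInteger maxSeen = new AtomicInteger();

        for (int i = 0; i < threads; i++) {
            new Thread(() -> {
                try {
                    semaphore.acquire();                    // wait for a permit
                    try {
                        int now = active.incrementAndGet();
                        maxSeen.accumulateAndGet(now, Math::max);
                        Thread.sleep(10);                   // simulated work
                    } finally {
                        active.decrementAndGet();
                        semaphore.release();                // hand the permit back
                    }
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                } finally {
                    done.countDown();                       // this thread is finished
                }
            }).start();
        }
        done.await();                                       // wait for every thread
        return maxSeen.get();
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println("max concurrent: " + maxConcurrent(8, 3));
    }
}
```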