12.1 Chapter Overview

All previous chapters described UE's rendering system based on the PC deferred rendering pipeline; in particular, "Analyze the unreal rendering system (04) - delay rendering pipeline" described the flow and steps of the PC deferred rendering pipeline in detail.

This article describes UE's mobile rendering pipeline and, at the end, compares the rendering differences between mobile and PC, along with mobile-specific optimizations. This chapter mainly covers the following aspects of the UE rendering system:

- The main flow and steps of FMobileSceneRenderer.

- The forward and deferred rendering pipelines on mobile.

- Lighting and shadows on mobile.

- Similarities and differences between mobile and PC, and the special optimization techniques involved.

In particular, the UE source code analyzed in this article has been upgraded to 4.27.1. Readers following along in the source code should take note of the update.

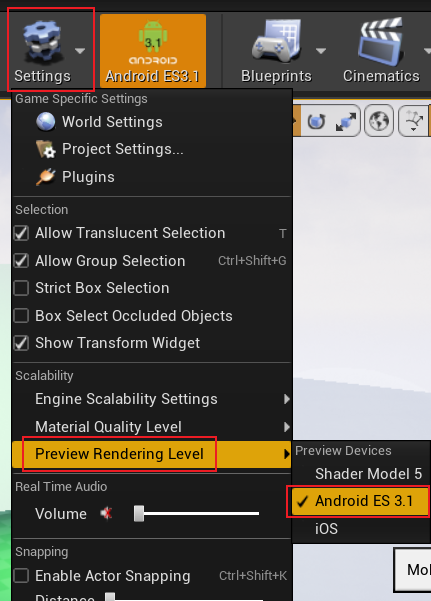

To preview the mobile rendering pipeline in the UE editor on PC, select the following menu:

Once the shaders have finished compiling, the UE editor viewport shows the mobile preview.

12.1.1 Characteristics of Mobile Devices

Compared with desktop PC platforms, mobile devices differ significantly in size, power, hardware performance and many other respects, specifically:

- Smaller size. Portability requires the whole device to be light enough to hold in the palm or carry in a pocket, so it is confined to a very small volume.

- Limited energy and power. Constrained by battery technology, mainstream lithium batteries are generally around 10,000 mAh, while the resolution and image quality of mobile devices keep rising. To provide long enough battery life and stay within heat dissipation limits, the overall power of a mobile device must be strictly controlled, usually within 5 W.

- Limited heat dissipation. PCs can be fitted with cooling fans or even water cooling, whereas mobile devices have no such active cooling and must rely on passive heat conduction. If heat is not dissipated properly, the CPU and GPU actively lower their clock frequencies and run at very limited performance to avoid damaging components through overheating.

-

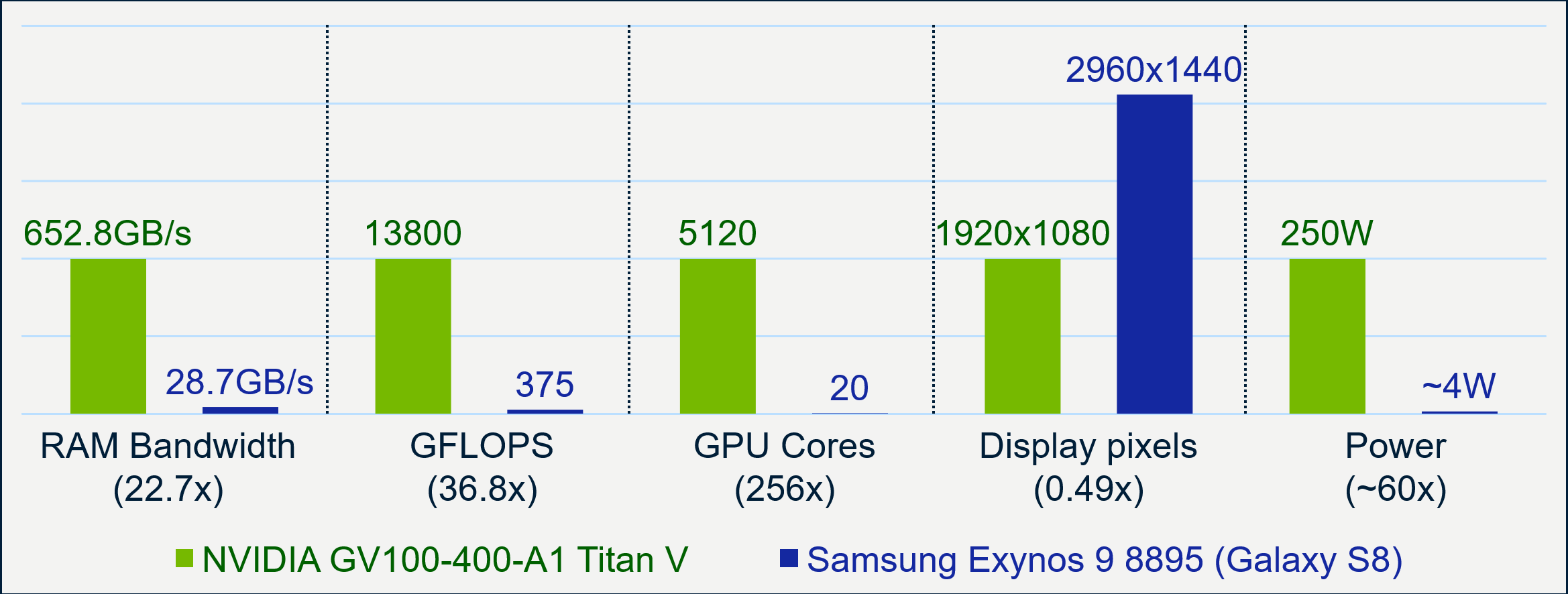

Limited hardware performance. The performance of various components of mobile devices (CPU, bandwidth, memory, GPU, etc.) is only one tenth of that of PC devices.

Performance comparison chart of mainstream PC devices (NV GV100-400-A1 Titan V) and mainstream mobile devices (Samsung Exynos 9 8895) in 2018. Many hardware performance of mobile devices is only one tenth of that of PC devices, but the resolution is close to half of that of PC devices, which highlights the challenges and dilemmas of mobile devices.

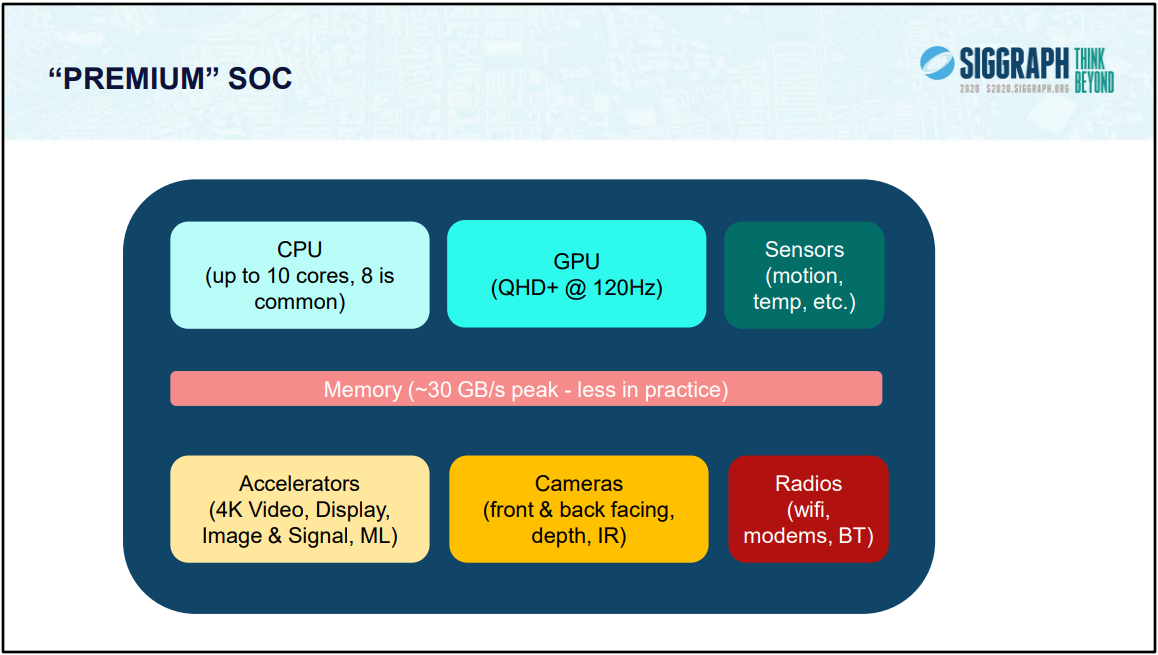

By 2020, the performance of mainstream mobile devices was as follows:

-

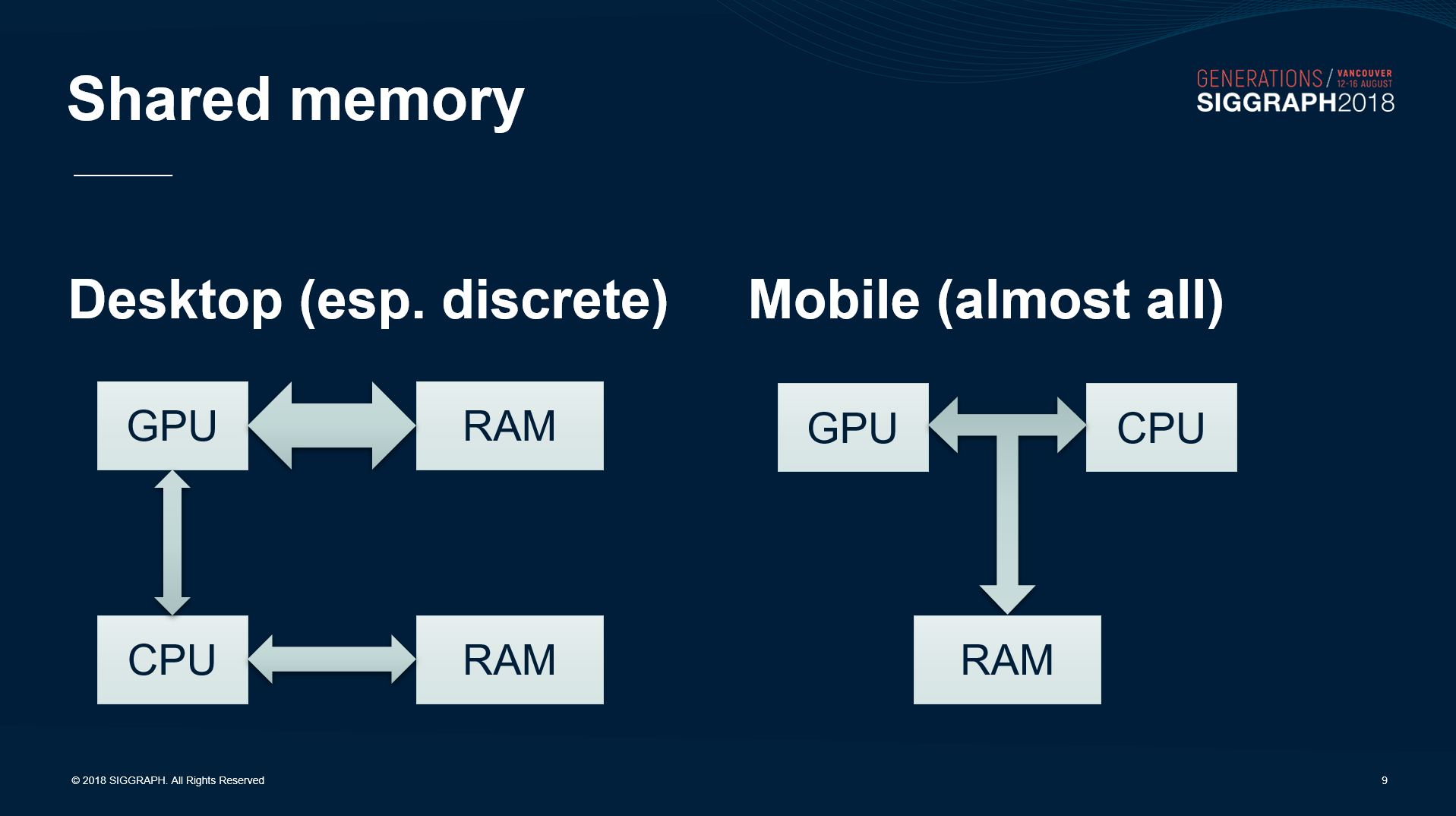

Special hardware architecture. For example, CPU and GPU share memory storage devices, which are called coupled architecture, and GPU's TB(D)R architecture, which are designed to complete as many operations as possible in low power consumption.

Comparison diagram of decoupled hardware architecture of PC device and coupled hardware architecture of mobile device.

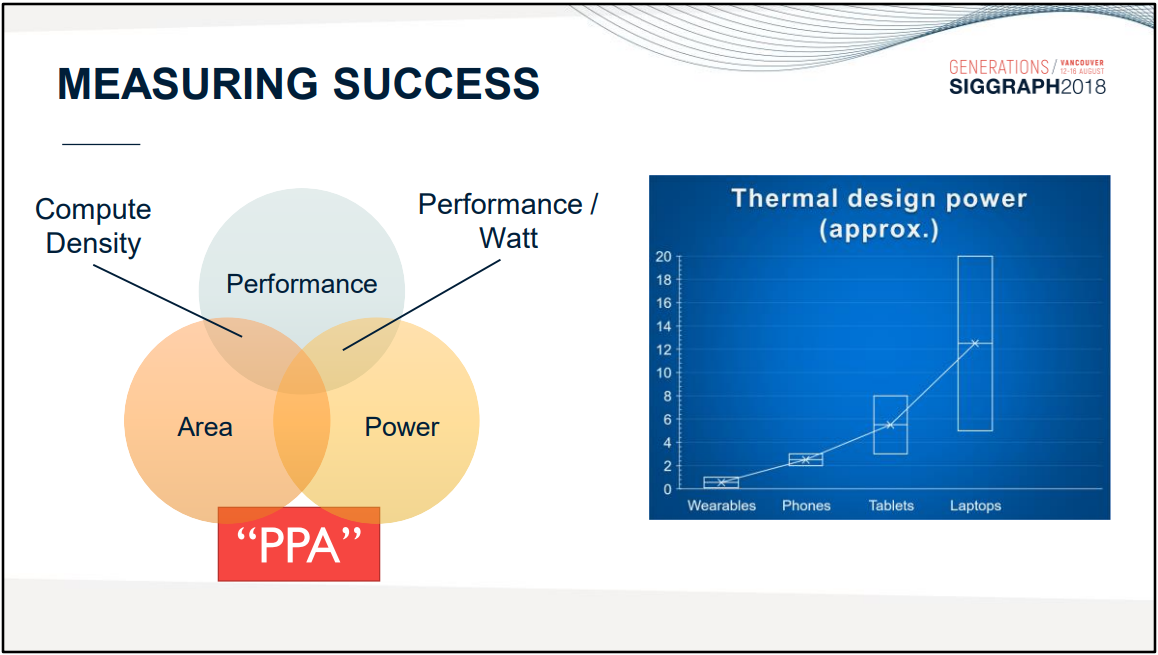

In addition, unlike PC CPUs and GPUs, which purely pursue computing performance, mobile chips are measured by three indicators: Performance, Power and Area, commonly known as PPA (see the figure below).

The three basic metrics for mobile chips: Performance, Area and Power. Compute density relates performance to area, and energy efficiency relates performance to power consumption; for both, higher is better.
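The two derived metrics in the caption are plain ratios. The sketch below expresses them as C++ functions; the struct, its field names and the numbers used in the check are hypothetical, not measurements of any real chip.

```cpp
#include <cassert>

// Hypothetical description of a chip in PPA terms.
struct ChipPPA {
    double PerformanceGflops;  // Performance
    double PowerWatts;         // Power
    double AreaMm2;            // Area
};

// Compute density: performance delivered per unit of die area (higher is better).
double ComputeDensity(const ChipPPA& Chip) {
    assert(Chip.AreaMm2 > 0.0);
    return Chip.PerformanceGflops / Chip.AreaMm2;
}

// Energy efficiency: performance delivered per watt (higher is better).
double EnergyEfficiency(const ChipPPA& Chip) {
    assert(Chip.PowerWatts > 0.0);
    return Chip.PerformanceGflops / Chip.PowerWatts;
}
```

For example, a hypothetical chip with 500 GFLOPS, 5 W and 100 mm² has a compute density of 5 GFLOPS/mm² and an efficiency of 100 GFLOPS/W.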

With the rise of mobile devices, XR devices have become an important branch of mobile devices. XR devices of various sizes, functions and application scenarios currently exist:

Various forms of XR devices.

With the recent Metaverse boom and Facebook's renaming to Meta, and with tech giants such as Apple, Microsoft, NVIDIA and Google stepping up their investment in future-oriented immersive experiences, XR devices, as the carrier and entry point closest to the vision of the Metaverse, have naturally become a brand-new track with great potential to produce future giants.

12.2 Rendering Features of UE on Mobile

This section describes the rendering features of UE 4.27 on mobile.

12.2.1 Feature Level

UE supports the following graphics APIs on mobile:

| Feature Level | Description |
|---|---|
| OpenGL ES 3.1 | The default feature level on Android. Specific material parameters can be configured in the project settings (Project Settings > Platforms > Android Material Quality - ES31). |
| Android Vulkan | A high-end renderer available on certain Android devices, supporting the Vulkan 1.2 API. Thanks to its lightweight design, Vulkan is more efficient than OpenGL in most cases. |
| Metal 2.0 | Feature level dedicated to iOS devices. Material parameters can be configured in Project Settings > Platforms > iOS Material Quality. |

On current mainstream Android devices, Vulkan generally gives better performance, because its lightweight design lets applications such as UE optimize more precisely. The following table compares Vulkan and OpenGL:

| Vulkan | OpenGL |
|---|---|
| Object-based state, with no global state. | A single global state machine. |
| All state is recorded into command buffers. | State is bound to a single context. |
| Supports multi-threaded command recording. | Rendering operations can only be issued sequentially. |
| Explicit, precise control over GPU memory and synchronization. | GPU memory and synchronization details are usually hidden by the driver. |
| The driver performs no runtime error checking, but validation layers are available for developers. | Extensive runtime error checking. |

On Windows, the UE editor can also launch OpenGL, Vulkan and Metal preview modes to check the effect while editing, but the result may differ from what the actual device displays, so this feature cannot be fully relied on.

Before enabling Vulkan, you need to configure some project parameters; see the official document Android Vulkan Mobile Renderer for details.

In addition, OpenGL support on Windows was removed in earlier versions of UE. Although the OpenGL preview option still exists in the UE editor, it is actually rendered with D3D under the hood.

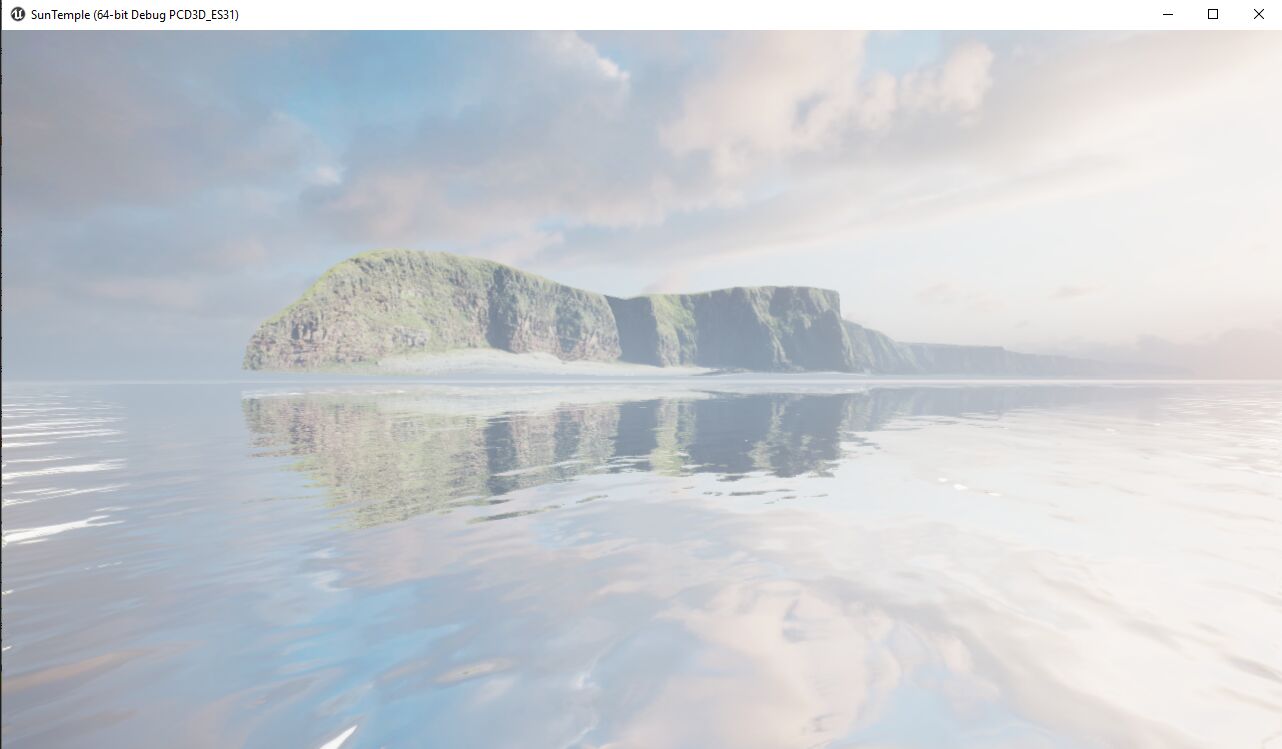

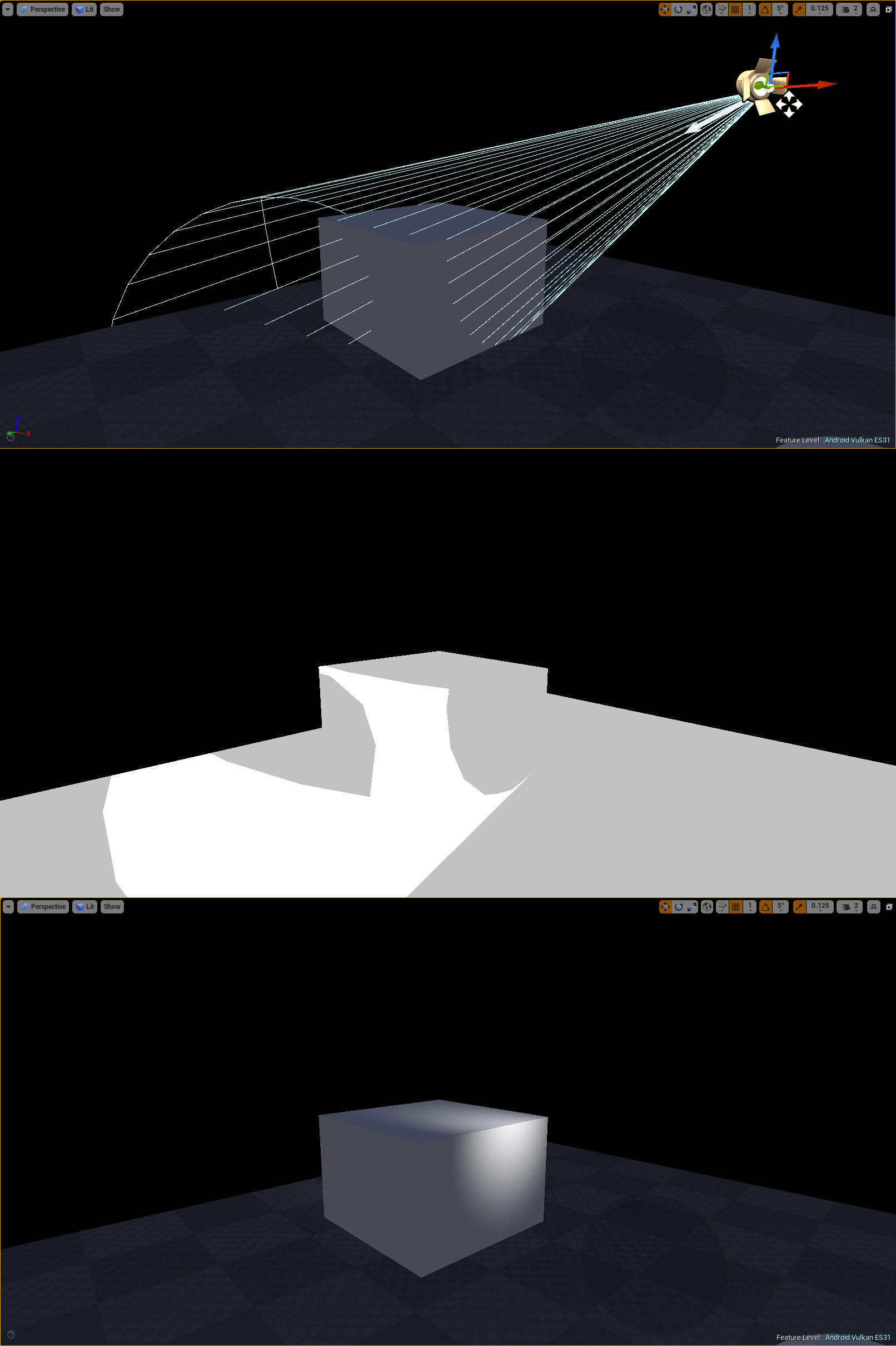

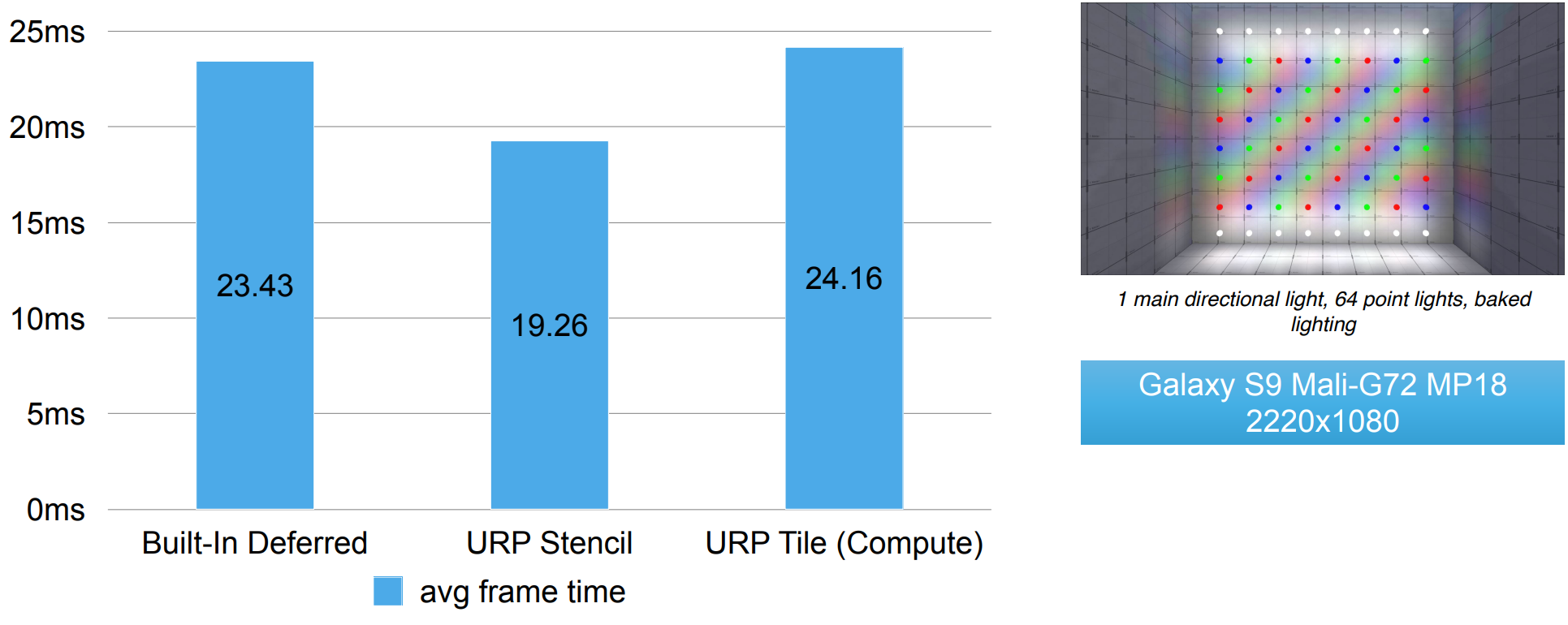

12.2.2 Deferred Shading

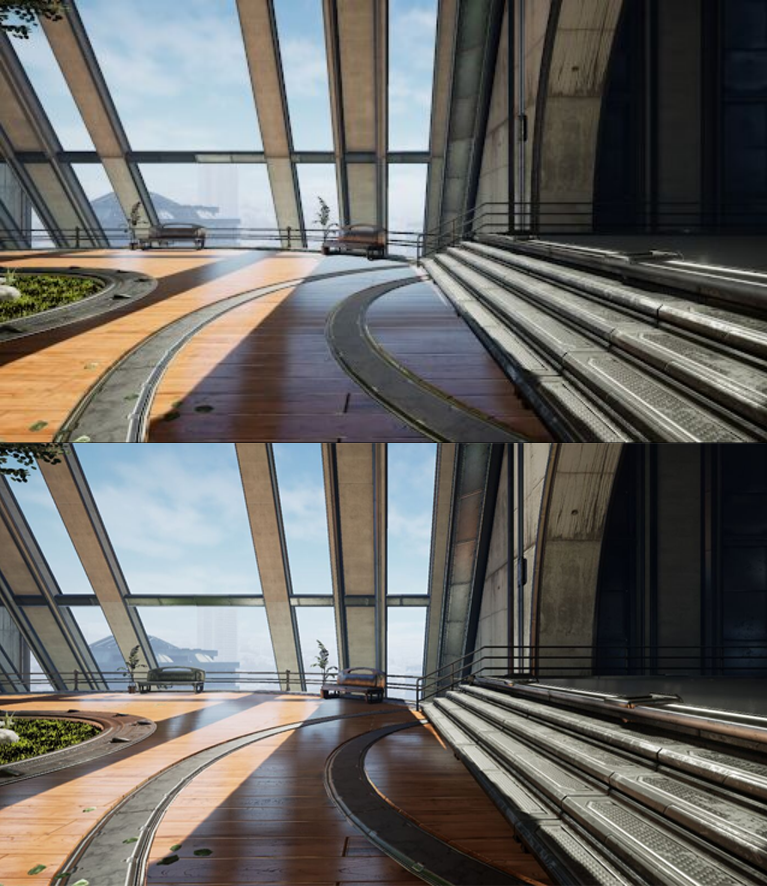

Deferred shading was added to UE's mobile renderer only in 4.26. It lets developers achieve more complex lighting on mobile, such as high-quality reflections, multiple dynamic lights, decals and advanced lighting features.

Top: forward rendering; bottom: deferred rendering.

To enable deferred shading on mobile, add r.Mobile.ShadingPath=1 to DefaultEngine.ini in the project's Config directory, then restart the editor.

12.2.3 Ground Truth Ambient Occlusion

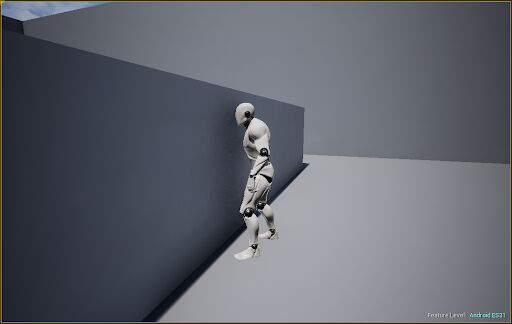

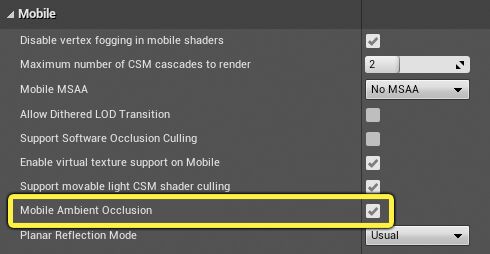

Ground Truth Ambient Occlusion (GTAO) is an ambient occlusion technique that closely approximates real-world occlusion. As a form of shadow compensation, it occludes part of the indirect lighting to produce good soft shadows.

With GTAO enabled, note the gradual soft shadow on the wall as the robot approaches it.

To enable GTAO, you need to check the following options:

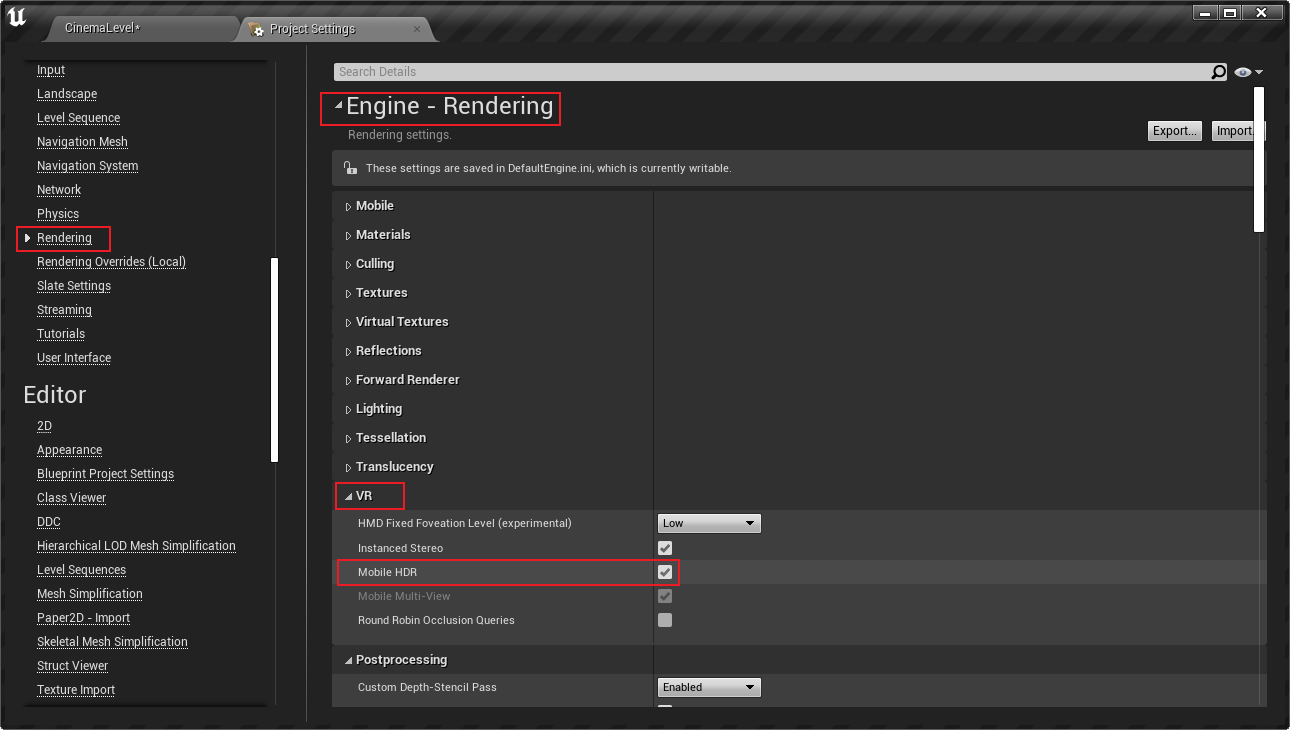

In addition, GTAO depends on the Mobile HDR option. To enable it on a target device, you also need to add r.Mobile.AmbientOcclusionQuality to that platform's [Platform]Scalability.ini with a value greater than 0; otherwise GTAO is disabled.

Note that GTAO has performance problems on Mali devices, because their maximum number of compute shader threads is less than 1024.
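The enablement rules for mobile GTAO reduce to a conjunction of conditions. The following minimal sketch encodes only what the text states; the struct and function names are hypothetical, not UE's API, and reading the 1024-thread limit as a per-thread-group limit is an assumption.

```cpp
#include <cassert>

// Hypothetical snapshot of the settings that gate mobile GTAO (names are illustrative).
struct MobileGTAOSettings {
    bool bProjectAOOptionChecked;  // the project-settings option(s) shown above
    bool bMobileHDR;               // Mobile HDR project setting
    int  AmbientOcclusionQuality;  // r.Mobile.AmbientOcclusionQuality from [Platform]Scalability.ini
};

// GTAO runs only if every prerequisite from the text holds.
bool IsMobileGTAOEnabled(const MobileGTAOSettings& Settings) {
    return Settings.bProjectAOOptionChecked && Settings.bMobileHDR &&
           Settings.AmbientOcclusionQuality > 0;
}

// The text reports performance problems when the device's maximum number of
// compute shader threads is below 1024 (as on Mali GPUs).
bool HasGTAOComputeThreadLimitIssue(int MaxComputeThreads) {
    return MaxComputeThreads < 1024;
}
```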

12.2.4 Dynamic Lighting and Shadow

The lighting features UE implements on mobile include:

- HDR lighting in linear space.

- Directional lightmaps (taking the normal into account).

- The sun (directional light) supports distance field shadows + analytic specular highlights.

- Image-based lighting (IBL): each object samples the nearest reflection capture, without parallax correction.

- Dynamic objects correctly receive lighting and cast shadows.

The types, maximum counts and shadow support of dynamic lights on UE mobile are as follows:

| Light type | Maximum count | Shadows | Description |
|---|---|---|---|
| Directional light | 1 | CSM | CSM uses 2 cascades by default and supports up to 4. |
| Point light | 4 | Not supported | Point light shadows require cube shadow maps, and rendering a cube shadow map in a single pass (OnePassPointLightShadow) requires geometry shaders (SM5). |
| Spotlight | 4 | Supported | Disabled by default; must be enabled in the project settings. |
| Area light | 0 | Not supported | Dynamic area lights are not currently supported. |

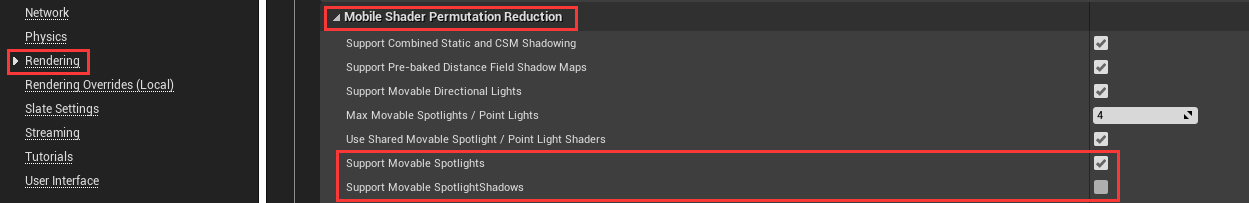

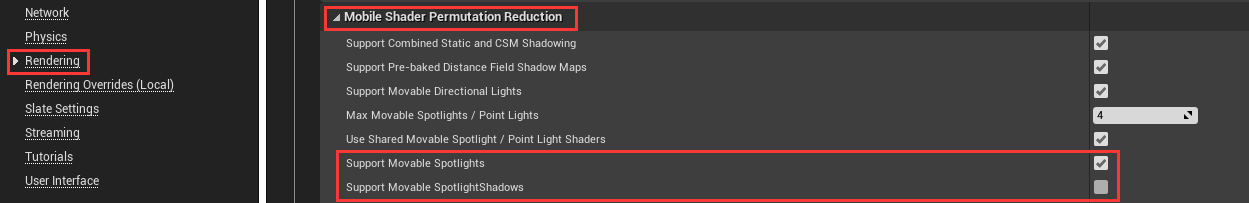

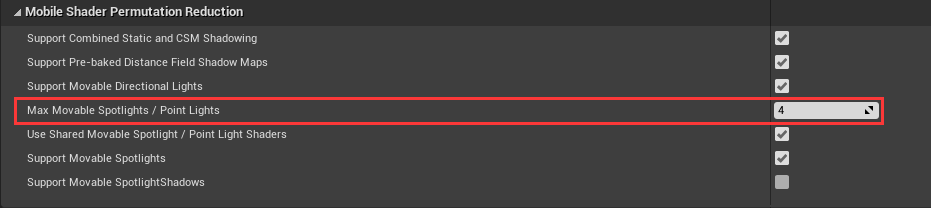

Dynamic spotlights need to be explicitly enabled in the project configuration:

In the mobile BasePass pixel shader, spotlight shadow maps share the same texture sampler as CSM, and spotlight shadows and CSM use the same shadow map atlas. CSM is guaranteed enough space, and spotlights are sorted by shadow resolution.

By default, the maximum number of visible movable spotlight shadows is 8; this cap can be changed via r.Mobile.MaxVisibleMovableSpotLightsShadow. The resolution of a spotlight shadow is determined by the light's screen size and r.Shadow.TexelsPerPixelSpotlight.

In the forward rendering path, the total number of local lights (point lights and spotlights) cannot exceed 4.
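The budgeting rules above can be sketched as a sort plus a cap. This sketch assumes spotlights are ranked by shadow resolution from largest to smallest and that the lowest-ranked ones are dropped once the cap is reached; the text does not say which end is dropped. All types and function names are hypothetical; the default of 8 mirrors r.Mobile.MaxVisibleMovableSpotLightsShadow, and the limit of 4 mirrors the forward-path local light cap.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical per-light record; only the fields needed for this sketch.
struct SpotLightShadowRequest {
    int LightId;
    int ShadowResolution;  // desired shadow map size in texels
};

// Keep at most MaxVisibleShadows spotlight shadows, sorted by shadow resolution
// (largest first, an assumption) before packing them into the atlas after CSM.
std::vector<SpotLightShadowRequest> SelectSpotLightShadows(
    std::vector<SpotLightShadowRequest> Requests, std::size_t MaxVisibleShadows = 8) {
    std::stable_sort(Requests.begin(), Requests.end(),
        [](const SpotLightShadowRequest& A, const SpotLightShadowRequest& B) {
            return A.ShadowResolution > B.ShadowResolution;
        });
    if (Requests.size() > MaxVisibleShadows) {
        Requests.resize(MaxVisibleShadows);  // drop the lowest-ranked requests
    }
    return Requests;
}

// Forward path: point lights and spotlights together may not exceed 4.
constexpr int kMaxForwardLocalLights = 4;

bool FitsForwardLocalLightBudget(int NumPointLights, int NumSpotLights) {
    return NumPointLights + NumSpotLights <= kMaxForwardLocalLights;
}
```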

Mobile also supports a special shadow mode, Modulated Shadows, which can only be used with Stationary directional lights. The following image shows the effect with modulated shadows enabled:

Modulated shadows also support changing the shadow color and blend ratio:

Left: dynamic shadows; right: modulated shadows.
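Conceptually, a modulated shadow darkens the already-lit scene color rather than removing a light's contribution. The sketch below illustrates that idea under an assumed formula (multiplying the scene color by a lerp between white and the shadow color, scaled by a blend amount); it is not UE's actual shader, and all names are hypothetical.

```cpp
#include <cassert>

struct Color3 { float R, G, B; };

static float Lerp(float A, float B, float T) { return A + (B - A) * T; }

// Assumed formula, not UE's shader: Visibility is 1 when fully lit and 0 when
// fully shadowed; ShadowAmount scales how strongly the shadow color applies.
Color3 ApplyModulatedShadow(Color3 Scene, Color3 ShadowColor,
                            float Visibility, float ShadowAmount) {
    const float T = (1.0f - Visibility) * ShadowAmount;  // 0 means no darkening
    return { Scene.R * Lerp(1.0f, ShadowColor.R, T),
             Scene.G * Lerp(1.0f, ShadowColor.G, T),
             Scene.B * Lerp(1.0f, ShadowColor.B, T) };
}
```

Because the shadow is a multiply over the final color, the light itself stays baked or unshadowed, which is why this mode is much cheaper than fully dynamic shadows but less physically accurate.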

Mobile shadows also support settings such as self-shadowing, shadow quality (r.ShadowQuality) and depth bias.

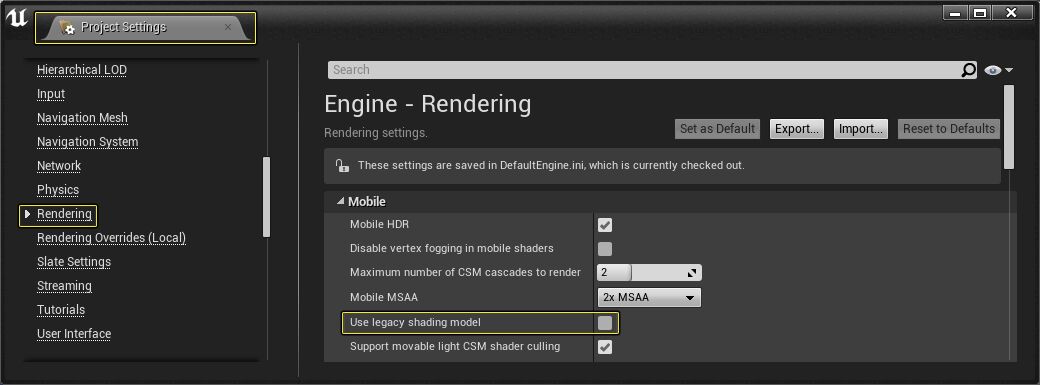

In addition, mobile uses GGX specular by default. To switch back to the legacy specular shading model, change the following setting:

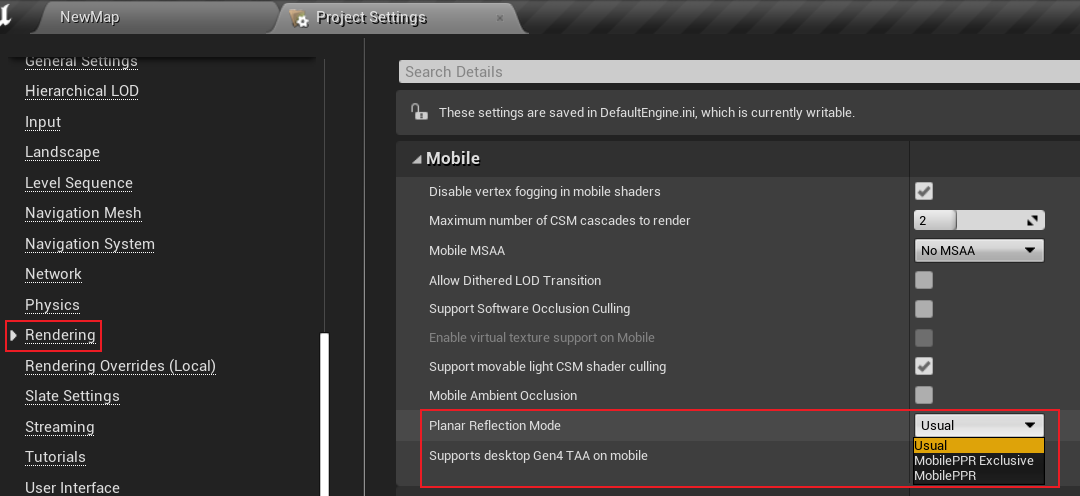
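For reference, the GGX (Trowbridge-Reitz) normal distribution term is D(h) = α² / (π((n·h)²(α²−1)+1)²). The sketch below transcribes that textbook formula into standalone C++ with α = roughness², the remapping commonly used in UE-style shading; it is not copied from UE's mobile shaders, whose exact GGX variant may differ.

```cpp
#include <cassert>
#include <cmath>

// GGX normal distribution term with alpha = roughness^2.
// NoH is the cosine between the surface normal and the half vector.
double D_GGX(double Roughness, double NoH) {
    const double kPi = 3.14159265358979323846;
    const double Alpha = Roughness * Roughness;
    const double Alpha2 = Alpha * Alpha;
    const double Denom = NoH * NoH * (Alpha2 - 1.0) + 1.0;
    return Alpha2 / (kPi * Denom * Denom);
}
```

Lower roughness concentrates the distribution around n·h = 1, producing the tight, bright highlights that distinguish GGX from broader legacy specular lobes.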

12.2.5 Pixel Projected Reflection

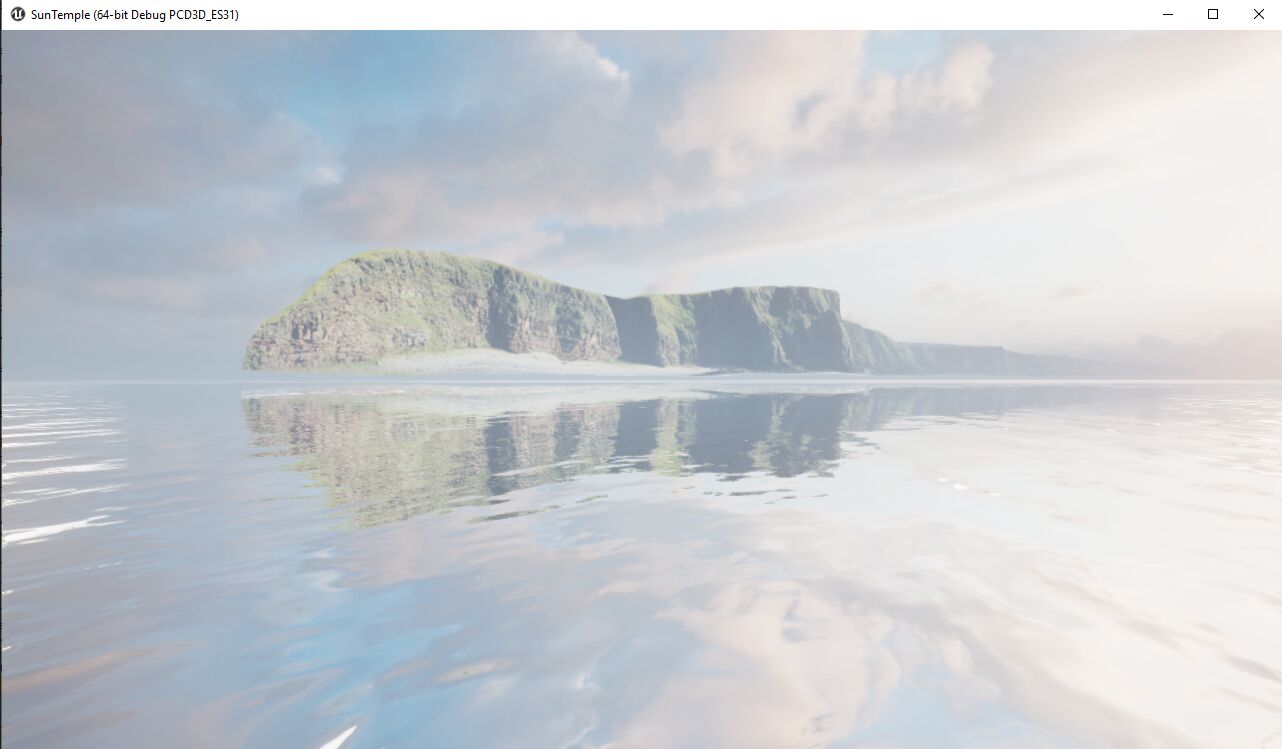

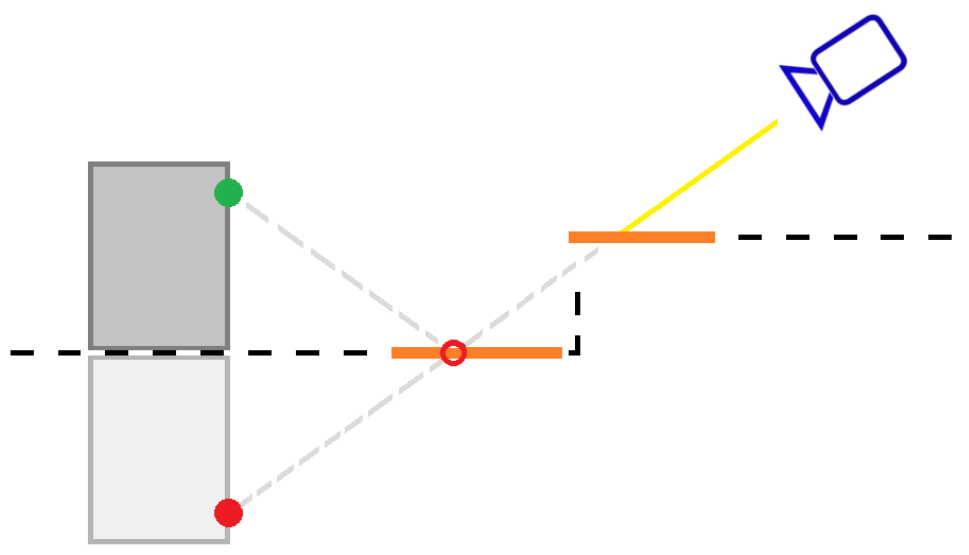

UE implements an optimized variant of SSR for mobile called Pixel Projected Reflection (PPR), whose core idea is likewise to reuse screen-space pixels.

PPR result.

In order to turn on the PPR effect, the following conditions need to be met:

-

Turn on the MobileHDR option.

-

r. The value of mobile.pixelprojectedreflectionquality is greater than 0.

-

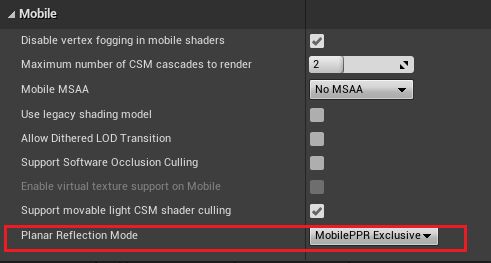

Set Project Settings > mobile and set the planar reflection mode to the correct mode:

The Planar Reflection Mode setting has three options:

- Usual: the Planar Reflection Actor works the same way on all platforms.

- MobilePPR: the Planar Reflection Actor works normally on PC/console platforms, but is rendered with PPR on mobile platforms.

- MobilePPRExclusive: the Planar Reflection Actor is used only for PPR on mobile platforms, leaving room for PC and console projects to use traditional SSR.

By default, only high-end mobile devices enable r.Mobile.PixelProjectedReflectionQuality in [Project]Scalability.ini.
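The enabling conditions and the three Planar Reflection Mode options can be summarized as a small decision function. This sketch assumes the conditions listed above are the complete set; the enum mirrors the option names, but the struct and function names are hypothetical.

```cpp
#include <cassert>

// The three Planar Reflection Mode options listed above.
enum class EPlanarReflectionMode { Usual, MobilePPR, MobilePPRExclusive };

// Hypothetical inputs; field names are illustrative, not UE's.
struct PPRInputs {
    bool bMobileHDR;
    int  PixelProjectedReflectionQuality;  // r.Mobile.PixelProjectedReflectionQuality
    EPlanarReflectionMode Mode;
    bool bIsMobilePlatform;
};

// PPR needs a mobile platform, Mobile HDR, a quality above 0, and a mode other than Usual.
bool UsesPixelProjectedReflection(const PPRInputs& In) {
    return In.bIsMobilePlatform && In.bMobileHDR &&
           In.PixelProjectedReflectionQuality > 0 &&
           In.Mode != EPlanarReflectionMode::Usual;
}

// On PC/console, MobilePPRExclusive reserves the actor for mobile PPR only,
// so the planar reflection actor is not used there.
bool PlanarReflectionActorActiveOnNonMobile(EPlanarReflectionMode Mode) {
    return Mode != EPlanarReflectionMode::MobilePPRExclusive;
}
```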

12.2.6 Mesh Auto-Instancing

The PC mesh drawing pipeline already supports automatic mesh instancing and merged draws, which can greatly improve rendering performance. UE 4.27 brings this feature to mobile.

To enable it, open DefaultEngine.ini in the project's Config directory and add the following fields:

```ini
r.Mobile.SupportGPUScene=1
r.Mobile.UseGPUSceneTexture=1
```

Restart the editor and wait for the shaders to compile to preview the effect.
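The core idea behind automatic instancing can be sketched as grouping draw commands that share the same mesh and material into a single instanced draw. The types and function below are hypothetical simplifications, not UE's mesh draw command merging code. They show why the benefit is mostly on the CPU side: the GPU still processes every instance, but the CPU submits fewer draw calls. Fetching per-primitive data by id is presumably why the feature relies on GPUScene.

```cpp
#include <cassert>
#include <map>
#include <utility>
#include <vector>

// Hypothetical simplified draw command: which mesh and material to draw, and for whom.
struct DrawCommand {
    int MeshId;
    int MaterialId;
    int PrimitiveId;  // index into per-primitive (GPUScene-style) data
};

// One instanced draw covering every primitive that shares a mesh and material.
struct InstancedDraw {
    int MeshId;
    int MaterialId;
    std::vector<int> PrimitiveIds;  // a shader would fetch per-instance data by these ids
};

// Merge draws with identical (mesh, material) keys so the CPU submits one
// draw call per group instead of one per primitive.
std::vector<InstancedDraw> MergeDraws(const std::vector<DrawCommand>& Draws) {
    std::map<std::pair<int, int>, InstancedDraw> Groups;
    for (const DrawCommand& Draw : Draws) {
        auto Key = std::make_pair(Draw.MeshId, Draw.MaterialId);
        auto It = Groups.find(Key);
        if (It == Groups.end()) {
            It = Groups.emplace(Key, InstancedDraw{Draw.MeshId, Draw.MaterialId, {}}).first;
        }
        It->second.PrimitiveIds.push_back(Draw.PrimitiveId);
    }
    std::vector<InstancedDraw> Result;
    for (auto& Entry : Groups) {
        Result.push_back(std::move(Entry.second));
    }
    return Result;
}
```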

GPUScene texture support is needed because the maximum uniform buffer size on Mali devices is only 64 KB, which cannot hold enough GPUScene data. Therefore, Mali devices store GPUScene data in a texture instead of a buffer.
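A quick capacity estimate shows why 64 KB is tight. The per-primitive stride of 35 float4s (560 bytes) used in the check below is an assumption for illustration only, not a value confirmed by this article, and the function name is hypothetical.

```cpp
#include <cassert>
#include <cstddef>

constexpr std::size_t kFloat4Bytes = 16;

// How many primitives' data fit in one uniform buffer, assuming each
// primitive occupies a fixed number of float4 slots.
std::size_t MaxPrimitivesInUniformBuffer(std::size_t UniformBufferBytes,
                                         std::size_t Float4sPerPrimitive) {
    assert(Float4sPerPrimitive > 0);
    return UniformBufferBytes / (Float4sPerPrimitive * kFloat4Bytes);
}
```

Under that assumption, a 64 KB uniform buffer holds data for only 117 primitives, while a texture can hold far more, which is consistent with Mali devices falling back to a texture.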

However, there are some limitations:

- Automatic instancing on mobile mainly benefits CPU-bound projects rather than GPU-bound ones. Although enabling it is unlikely to hurt GPU-bound projects, you are also unlikely to see significant performance gains from it.

- If a game or application needs a lot of memory, it may be better to disable r.Mobile.UseGPUSceneTexture and use buffers instead, although buffers do not work properly on Mali devices.

You can also enable r.Mobile.UseGPUSceneTexture only for Mali devices, while devices from other GPU vendors use buffers as normal.

The effectiveness of automatic instancing depends largely on the project's exact specifications and positioning. It is recommended to create a build with automatic instancing enabled and profile it to determine whether it brings substantial performance gains.

12.2.7 Post Processing

Because mobile devices suffer from slower dependent texture reads, limited hardware features, special hardware architectures, extra render target resolves, limited bandwidth and so on, post-processing on mobile is expensive, and in extreme cases it can stall the rendering pipeline.

Nevertheless, games and applications with high image quality requirements still rely heavily on the expressiveness of post-processing to push quality further, so UE does not prevent developers from using it.

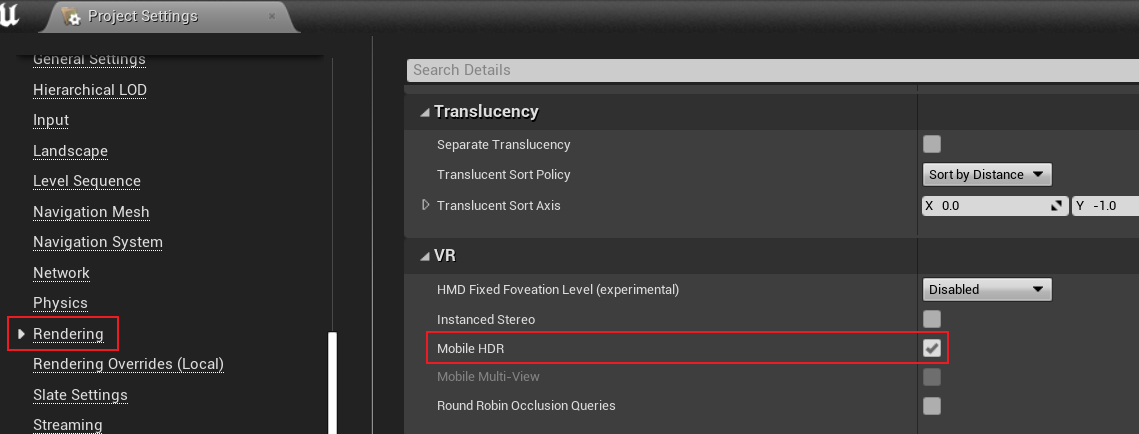

In order to enable post-processing, you must first enable the MobileHDR option:

After enabling post-processing, you can set various post-processing effects in the Post Process Volume.

The post-processing effects supported on mobile include Mobile Tonemapper, Color Grading, Lens, Bloom, Dirt Mask, Auto Exposure, Lens Flares, Depth of Field, etc.

For better performance, the official recommendation is to enable only Bloom and TAA on mobile.

12.2.8 Other Features and Limitations

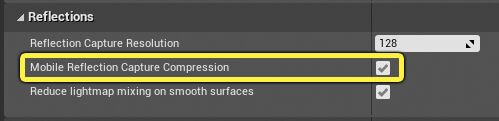

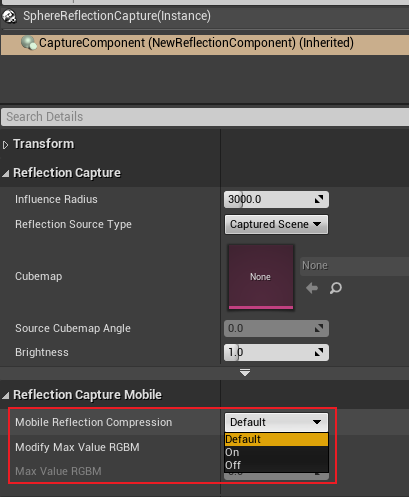

- Reflection Capture Compression

Mobile supports compression of Reflection Capture Components, which reduces the runtime memory and bandwidth of reflection captures and improves rendering efficiency. It must be enabled in the project settings:

When enabled, ETC2 compression is used by default. In addition, it can be adjusted per Reflection Capture Component:

- Material properties

Materials on mobile platforms (feature level OpenGL ES 3.1) use the same node-based authoring process as other platforms, and most nodes are supported on mobile.

The material properties supported on mobile include BaseColor, Roughness, Metallic, Specular, Normal, Emissive and Refraction; Scene Color expressions, Tessellation inputs and the subsurface scattering shading model are not supported.

There are some limitations on the materials supported by mobile platforms:

- Due to hardware limitations, only 16 texture samplers can be used.

- Only the DefaultLit and Unlit shading models are available.

- Use Customized UVs to avoid dependent texture reads (do not do math on texture UVs in the pixel shader).

- Translucent and Masked materials are very expensive, so use opaque materials wherever possible.

- Depth Fade can be used in translucent materials on iOS. It is not supported on platforms whose hardware cannot fetch from the depth buffer, because supporting it there would carry an unacceptable performance cost.
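The hard limits above can be checked mechanically. The following is a hypothetical validator, not a UE API, covering just two of them: the 16-sampler cap and the DefaultLit/Unlit shading model restriction.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical summary of a material, for checking it against the mobile limits above.
struct MaterialDesc {
    int NumTextureSamplers;
    std::string ShadingModel;  // e.g. "DefaultLit", "Unlit", "Subsurface"
};

// Returns human-readable problems; an empty result means the material
// passes these particular mobile checks.
std::vector<std::string> CheckMobileMaterialLimits(const MaterialDesc& Material) {
    std::vector<std::string> Problems;
    if (Material.NumTextureSamplers > 16) {
        Problems.push_back("more than 16 texture samplers");
    }
    if (Material.ShadingModel != "DefaultLit" && Material.ShadingModel != "Unlit") {
        Problems.push_back("shading model not available on mobile: " + Material.ShadingModel);
    }
    return Problems;
}
```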

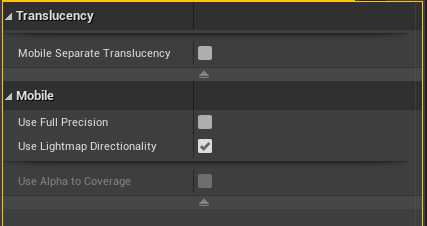

The material properties panel has some mobile-specific options:

These attributes are described as follows:

- Mobile Separate Translucency: whether to render separate translucency into its own render target on mobile.

- Use Full Precision: whether to use full-precision floats. If unchecked, the material uses half precision, which reduces bandwidth and power consumption and improves performance, but distant objects may show artifacts:

Left: full-precision material; right: half-precision material, where the distant sun shows artifacts.

- Use Lightmap Directionality: whether to use lightmap directionality. If checked, the lightmap direction and per-pixel normals are taken into account, at a higher performance cost.

- Use Alpha to Coverage: whether Masked materials use alpha to coverage on mobile; MSAA must also be enabled for it to take effect.

- Fully Rough: whether the material is treated as fully rough. If checked, the material's rendering efficiency improves greatly.
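To see why reduced precision shows up on distant objects, the sketch below emulates rounding a value to IEEE 754 half precision (16-bit float) on the CPU. It assumes the half-precision mode stores values as 16-bit halves, and the helper name is hypothetical. Values near 1 keep fine detail, but around 1000 the spacing between representable halves is already 0.5, and from 2048 upward it is 2, so large (far-away) values get visibly quantized.

```cpp
#include <cassert>
#include <cmath>

// Round a value to the precision of an IEEE 754 half float (11 significant
// bits: 10 stored mantissa bits plus the implicit leading 1), using the
// default round-to-nearest-even mode. Overflow (> 65504) and subnormals are
// ignored for brevity.
float RoundToHalfPrecision(float Value) {
    if (Value == 0.0f) return 0.0f;
    int Exponent = 0;
    const float Mantissa = std::frexp(Value, &Exponent);  // Value = Mantissa * 2^Exponent, 0.5 <= |Mantissa| < 1
    const float Scaled = std::ldexp(Mantissa, 11);        // keep 11 significant bits
    return std::ldexp(std::nearbyint(Scaled), Exponent - 11);
}
```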

In addition, the mesh types supported on mobile include:

- Skeletal Mesh

- Static Mesh

- Landscape

- CPU particle sprites, particle meshes

Mesh types other than those above are not supported. Other restrictions include:

- A single mesh can contain at most 65k vertices, because vertex indices are only 16 bits.

- A single Skeletal Mesh is limited to 75 bones because of hardware performance limitations.
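The 16-bit index limit is simple arithmetic: an unsigned 16-bit index ranges over 0..65535, so one index buffer can address at most 65,536 distinct vertices. The sketch below encodes that bound and, as a hypothetical illustration, the minimum number of sections a larger mesh would need; it ignores primitive-restart values and vertices duplicated across section boundaries.

```cpp
#include <cassert>
#include <cstdint>
#include <limits>

// A 16-bit index can address vertices 0..65535, i.e. at most 65536 vertices.
constexpr std::uint32_t kMaxVerticesWith16BitIndices =
    static_cast<std::uint32_t>(std::numeric_limits<std::uint16_t>::max()) + 1;

bool FitsIn16BitIndices(std::uint32_t NumVertices) {
    return NumVertices <= kMaxVerticesWith16BitIndices;
}

// Minimum number of sections if a mesh were split so each section stays
// addressable with 16-bit indices (hypothetical helper, ceiling division).
std::uint32_t MinSectionsFor16BitIndices(std::uint32_t NumVertices) {
    return (NumVertices + kMaxVerticesWith16BitIndices - 1) / kMaxVerticesWith16BitIndices;
}
```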

12.3 FMobileSceneRenderer

FMobileSceneRenderer inherits from FSceneRenderer and handles the scene rendering process on mobile, whereas on PC this role is played by FDeferredShadingSceneRenderer, which also inherits from FSceneRenderer. Their inheritance relationship is as follows:

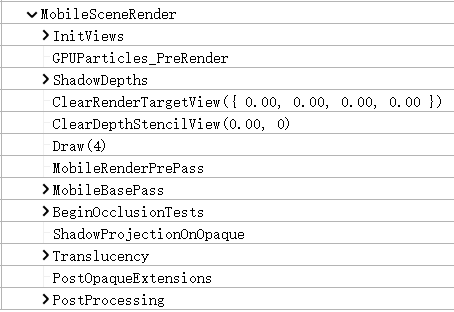
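The inheritance relationship can be sketched with heavily simplified stand-ins. In UE, Render takes an FRHICommandListImmediate& and the classes carry far more state; the string return value and the CreateSceneRenderer helper below are hypothetical, used only to show that both renderers share one abstract base and are selected at runtime.

```cpp
#include <cassert>
#include <memory>
#include <string>

// Simplified stand-ins for the UE classes named above.
class FSceneRenderer {
public:
    virtual ~FSceneRenderer() = default;
    virtual std::string Render() = 0;  // each subclass implements its own pipeline
};

class FDeferredShadingSceneRenderer : public FSceneRenderer {
public:
    std::string Render() override { return "PC deferred shading pipeline"; }
};

class FMobileSceneRenderer : public FSceneRenderer {
public:
    std::string Render() override { return "mobile forward/deferred pipeline"; }
};

// Hypothetical factory: pick the renderer for the current shading path.
std::unique_ptr<FSceneRenderer> CreateSceneRenderer(bool bIsMobileShadingPath) {
    if (bIsMobileShadingPath) {
        return std::make_unique<FMobileSceneRenderer>();
    }
    return std::make_unique<FDeferredShadingSceneRenderer>();
}
```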

FDeferredShadingSceneRenderer was covered in previous articles. Its rendering flow is particularly complex, with elaborate lighting, shadowing and rendering steps. By comparison, the logic and steps of FMobileSceneRenderer are much simpler. Below is a RenderDoc capture:

The capture mainly contains steps such as InitViews, ShadowDepths, PrePass, BasePass, OcclusionTest, ShadowProjectionOnOpaque, Translucency and PostProcessing. These steps also exist on PC, but their implementation may differ; see the analysis in the following sections.

12.3.1 Main Renderer Flow

The main flow of the mobile scene renderer also lives in FMobileSceneRenderer::Render. The code and analysis are as follows:

```cpp
// Engine\Source\Runtime\Renderer\Private\MobileShadingRenderer.cpp

void FMobileSceneRenderer::Render(FRHICommandListImmediate& RHICmdList)
{
    // Update primitive scene info.
    Scene->UpdateAllPrimitiveSceneInfos(RHICmdList);

    // Prepare the view rects for rendering.
    PrepareViewRectsForRendering(RHICmdList);

    // Prepare sky atmosphere data.
    if (ShouldRenderSkyAtmosphere(Scene, ViewFamily.EngineShowFlags))
    {
        for (int32 LightIndex = 0; LightIndex < NUM_ATMOSPHERE_LIGHTS; ++LightIndex)
        {
            if (Scene->AtmosphereLights[LightIndex])
            {
                PrepareSunLightProxy(*Scene->GetSkyAtmosphereSceneInfo(), LightIndex, *Scene->AtmosphereLights[LightIndex]);
            }
        }
    }
    else
    {
        Scene->ResetAtmosphereLightsProperties();
    }

    if(!ViewFamily.EngineShowFlags.Rendering)
    {
        return;
    }

    // Wait for occlusion tests.
    WaitOcclusionTests(RHICmdList);
    FRHICommandListExecutor::GetImmediateCommandList().PollOcclusionQueries();
    RHICmdList.ImmediateFlush(EImmediateFlushType::DispatchToRHIThread);

    // Initialize the views: find visible primitives and prepare render targets and buffers for rendering.
    InitViews(RHICmdList);

    if (GRHINeedsExtraDeletionLatency || !GRHICommandList.Bypass())
    {
        QUICK_SCOPE_CYCLE_COUNTER(STAT_FMobileSceneRenderer_PostInitViewsFlushDel);
        // Occlusion queries may be pending, so let the RHI thread and GPU work while we wait.
        // Also, when an RHI thread is used, this is the only place where pending deletes are processed.
        FRHICommandListExecutor::GetImmediateCommandList().PollOcclusionQueries();
        FRHICommandListExecutor::GetImmediateCommandList().ImmediateFlush(EImmediateFlushType::FlushRHIThreadFlushResources);
    }

    GEngine->GetPreRenderDelegate().Broadcast();

    // Commit the global dynamic buffers before rendering starts.
    DynamicIndexBuffer.Commit();
    DynamicVertexBuffer.Commit();
    DynamicReadBuffer.Commit();
    RHICmdList.ImmediateFlush(EImmediateFlushType::DispatchToRHIThread);

    RHICmdList.SetCurrentStat(GET_STATID(STAT_CLMM_SceneSim));

    if (ViewFamily.bLateLatchingEnabled)
    {
        BeginLateLatching(RHICmdList);
    }

    FSceneRenderTargets& SceneContext = FSceneRenderTargets::Get(RHICmdList);

    // Update virtual texturing.
    if (bUseVirtualTexturing)
    {
        SCOPED_GPU_STAT(RHICmdList, VirtualTextureUpdate);
        FVirtualTextureSystem::Get().Update(RHICmdList, FeatureLevel, Scene);

        // Clear the virtual texture feedback to its default value.
        FUnorderedAccessViewRHIRef FeedbackUAV = SceneContext.GetVirtualTextureFeedbackUAV();
        RHICmdList.Transition(FRHITransitionInfo(FeedbackUAV, ERHIAccess::SRVMask, ERHIAccess::UAVMask));
        RHICmdList.ClearUAVUint(FeedbackUAV, FUintVector4(~0u, ~0u, ~0u, ~0u));
        RHICmdList.Transition(FRHITransitionInfo(FeedbackUAV, ERHIAccess::UAVMask, ERHIAccess::UAVMask));
        RHICmdList.BeginUAVOverlap(FeedbackUAV);
    }

    // Sorted light set.
    FSortedLightSetSceneInfo SortedLightSet;

    // Deferred shading.
    if (bDeferredShading)
    {
        // Gather and sort lights.
        GatherAndSortLights(SortedLightSet);

        int32 NumReflectionCaptures = Views[0].NumBoxReflectionCaptures + Views[0].NumSphereReflectionCaptures;
        bool bCullLightsToGrid = (NumReflectionCaptures > 0 || GMobileUseClusteredDeferredShading != 0);
        FRDGBuilder GraphBuilder(RHICmdList);
        // Compute the light grid.
        ComputeLightGrid(GraphBuilder, bCullLightsToGrid, SortedLightSet);
        GraphBuilder.Execute();
    }

    // Generate the sky / atmosphere LUTs.
    const bool bShouldRenderSkyAtmosphere = ShouldRenderSkyAtmosphere(Scene, ViewFamily.EngineShowFlags);
    if (bShouldRenderSkyAtmosphere)
    {
        FRDGBuilder GraphBuilder(RHICmdList);
        RenderSkyAtmosphereLookUpTables(GraphBuilder);
        GraphBuilder.Execute();
    }

    // Notify the FX system that scene rendering is about to begin.
    if (FXSystem && ViewFamily.EngineShowFlags.Particles)
    {
        FXSystem->PreRender(RHICmdList, NULL, !Views[0].bIsPlanarReflection);
        if (FGPUSortManager* GPUSortManager = FXSystem->GetGPUSortManager())
        {
            GPUSortManager->OnPreRender(RHICmdList);
        }
    }

    // Poll occlusion queries.
    FRHICommandListExecutor::GetImmediateCommandList().PollOcclusionQueries();
    RHICmdList.ImmediateFlush(EImmediateFlushType::DispatchToRHIThread);

    RHICmdList.SetCurrentStat(GET_STATID(STAT_CLMM_Shadows));

    // Render shadow depth maps.
    RenderShadowDepthMaps(RHICmdList);
    FRHICommandListExecutor::GetImmediateCommandList().PollOcclusionQueries();
    RHICmdList.ImmediateFlush(EImmediateFlushType::DispatchToRHIThread);

    // Collect the list of views.
    TArray<const FViewInfo*> ViewList;
    for (int32 ViewIndex = 0; ViewIndex < Views.Num(); ViewIndex++)
    {
        ViewList.Add(&Views[ViewIndex]);
    }

    // Render custom depth.
    if (bShouldRenderCustomDepth)
    {
        FRDGBuilder GraphBuilder(RHICmdList);
        FSceneTextureShaderParameters SceneTextures = CreateSceneTextureShaderParameters(GraphBuilder, Views[0].GetFeatureLevel(), ESceneTextureSetupMode::None);
        RenderCustomDepthPass(GraphBuilder, SceneTextures);
        GraphBuilder.Execute();
    }

    // Render the depth PrePass.
    if (bIsFullPrepassEnabled)
    {
        // SDF shadows and AO require a full depth PrePass.
        FRHIRenderPassInfo DepthPrePassRenderPassInfo(
            SceneContext.GetSceneDepthSurface(),
            EDepthStencilTargetActions::ClearDepthStencil_StoreDepthStencil);
        DepthPrePassRenderPassInfo.NumOcclusionQueries = ComputeNumOcclusionQueriesToBatch();
        DepthPrePassRenderPassInfo.bOcclusionQueries = DepthPrePassRenderPassInfo.NumOcclusionQueries != 0;

        RHICmdList.BeginRenderPass(DepthPrePassRenderPassInfo, TEXT("DepthPrepass"));
        RHICmdList.SetCurrentStat(GET_STATID(STAT_CLM_MobilePrePass));
        // Render the full depth PrePass.
        RenderPrePass(RHICmdList);

        // Issue occlusion queries.
        RHICmdList.SetCurrentStat(GET_STATID(STAT_CLMM_Occlusion));
        RenderOcclusion(RHICmdList);
        RHICmdList.EndRenderPass();

        // SDF shadows.
        if (bRequiresDistanceFieldShadowingPass)
        {
            CSV_SCOPED_TIMING_STAT_EXCLUSIVE(RenderSDFShadowing);
            RenderSDFShadowing(RHICmdList);
        }

        // HZB.
        if (bShouldRenderHZB)
        {
            RenderHZB(RHICmdList, SceneContext.SceneDepthZ);
        }

        // AO.
        if (bRequiresAmbientOcclusionPass)
        {
            RenderAmbientOcclusion(RHICmdList, SceneContext.SceneDepthZ);
        }
    }

    FRHITexture* SceneColor = nullptr;

    // Deferred shading.
    if (bDeferredShading)
    {
        SceneColor = RenderDeferred(RHICmdList, ViewList, SortedLightSet);
    }
    // Forward shading.
    else
    {
        SceneColor = RenderForward(RHICmdList, ViewList);
    }

    // Render the velocity buffer.
    if (bShouldRenderVelocities)
    {
        FRDGBuilder GraphBuilder(RHICmdList);

        FRDGTextureMSAA SceneDepthTexture = RegisterExternalTextureMSAA(GraphBuilder, SceneContext.SceneDepthZ);
        FRDGTextureRef VelocityTexture = TryRegisterExternalTexture(GraphBuilder, SceneContext.SceneVelocity);

        if (VelocityTexture != nullptr)
        {
            AddClearRenderTargetPass(GraphBuilder, VelocityTexture);
        }

        // Velocities of opaque (movable) objects.
        AddSetCurrentStatPass(GraphBuilder, GET_STATID(STAT_CLMM_Velocity));
        RenderVelocities(GraphBuilder, SceneDepthTexture.Resolve, VelocityTexture, FSceneTextureShaderParameters(), EVelocityPass::Opaque, false);
        AddSetCurrentStatPass(GraphBuilder, GET_STATID(STAT_CLMM_AfterVelocity));

        // Velocities of translucent objects.
        AddSetCurrentStatPass(GraphBuilder, GET_STATID(STAT_CLMM_TranslucentVelocity));
        RenderVelocities(GraphBuilder, SceneDepthTexture.Resolve, VelocityTexture, GetSceneTextureShaderParameters(CreateMobileSceneTextureUniformBuffer(GraphBuilder, EMobileSceneTextureSetupMode::SceneColor)), EVelocityPass::Translucent, false);

        GraphBuilder.Execute();
    }

    // Work that runs after opaque scene rendering: renderer extensions and the FX system.
    {
        FRendererModule& RendererModule = static_cast<FRendererModule&>(GetRendererModule());
        FRDGBuilder GraphBuilder(RHICmdList);
        RendererModule.RenderPostOpaqueExtensions(GraphBuilder, Views, SceneContext);

        if (FXSystem && Views.IsValidIndex(0))
        {
            AddUntrackedAccessPass(GraphBuilder, [this](FRHICommandListImmediate& RHICmdList)
            {
                check(RHICmdList.IsOutsideRenderPass());

                FXSystem->PostRenderOpaque(
                    RHICmdList,
                    Views[0].ViewUniformBuffer,
                    nullptr,
                    nullptr,
                    Views[0].AllowGPUParticleUpdate()
                );
                if (FGPUSortManager* GPUSortManager = FXSystem->GetGPUSortManager())
                {
                    GPUSortManager->OnPostRenderOpaque(RHICmdList);
                }
            });
        }
        GraphBuilder.Execute();
    }

    // Flush / submit the command buffer.
    if (bSubmitOffscreenRendering)
    {
        RHICmdList.SubmitCommandsHint();
        RHICmdList.ImmediateFlush(EImmediateFlushType::DispatchToRHIThread);
    }

    // Transition scene color to SRV so that subsequent passes can read it.
    if (!bGammaSpace || bRenderToSceneColor)
    {
        RHICmdList.Transition(FRHITransitionInfo(SceneColor, ERHIAccess::Unknown, ERHIAccess::SRVMask));
    }

    if (bDeferredShading)
    {
        // Release the original reference on the scene render targets.
        SceneContext.AdjustGBufferRefCount(RHICmdList, -1);
    }

    RHICmdList.SetCurrentStat(GET_STATID(STAT_CLMM_Post));

    // Finish virtual texturing: submit the feedback buffer.
    if (bUseVirtualTexturing)
    {
        SCOPED_GPU_STAT(RHICmdList, VirtualTextureUpdate);

        // No pass after this should make VT page requests.
        RHICmdList.EndUAVOverlap(SceneContext.VirtualTextureFeedbackUAV);
        RHICmdList.Transition(FRHITransitionInfo(SceneContext.VirtualTextureFeedbackUAV, ERHIAccess::UAVMask, ERHIAccess::SRVMask));

        TArray<FIntRect, TInlineAllocator<4>> ViewRects;
        ViewRects.AddUninitialized(Views.Num());
        for (int32 ViewIndex = 0; ViewIndex < Views.Num(); ++ViewIndex)
        {
            ViewRects[ViewIndex] = Views[ViewIndex].ViewRect;
        }

        FVirtualTextureFeedbackBufferDesc Desc;
        Desc.Init2D(SceneContext.GetBufferSizeXY(), ViewRects, SceneContext.GetVirtualTextureFeedbackScale());

        SubmitVirtualTextureFeedbackBuffer(RHICmdList, SceneContext.VirtualTextureFeedback, Desc);
    }

    FMemMark Mark(FMemStack::Get());
    FRDGBuilder GraphBuilder(RHICmdList);

    FRDGTextureRef ViewFamilyTexture = TryCreateViewFamilyTexture(GraphBuilder, ViewFamily);

    // Resolve the scene and run post-processing.
    if (ViewFamily.bResolveScene)
    {
        if (!bGammaSpace || bRenderToSceneColor)
        {
            // Finish rendering for each view, or for the full stereo buffer if enabled.
            {
                RDG_EVENT_SCOPE(GraphBuilder, "PostProcessing");
                SCOPE_CYCLE_COUNTER(STAT_FinishRenderViewTargetTime);

                TArray<TRDGUniformBufferRef<FMobileSceneTextureUniformParameters>, TInlineAllocator<1, SceneRenderingAllocator>> MobileSceneTexturesPerView;
                MobileSceneTexturesPerView.SetNumZeroed(Views.Num());

                const auto SetupMobileSceneTexturesPerView = [&]()
                {
                    for (int32 ViewIndex = 0; ViewIndex < Views.Num(); ++ViewIndex)
                    {
                        EMobileSceneTextureSetupMode SetupMode = EMobileSceneTextureSetupMode::SceneColor;
                        if (Views[ViewIndex].bCustomDepthStencilValid)
                        {
                            SetupMode |= EMobileSceneTextureSetupMode::CustomDepth;
                        }

                        if (bShouldRenderVelocities)
                        {
                            SetupMode |= EMobileSceneTextureSetupMode::SceneVelocity;
                        }

                        MobileSceneTexturesPerView[ViewIndex] = CreateMobileSceneTextureUniformBuffer(GraphBuilder, SetupMode);
                    }
                };

                SetupMobileSceneTexturesPerView();

                FMobilePostProcessingInputs PostProcessingInputs;
                PostProcessingInputs.ViewFamilyTexture = ViewFamilyTexture;

                // Add the post-processing passes for each view.
                for (int32 ViewIndex = 0; ViewIndex < Views.Num(); ViewIndex++)
                {
                    RDG_EVENT_SCOPE_CONDITIONAL(GraphBuilder, Views.Num() > 1, "View%d", ViewIndex);
                    PostProcessingInputs.SceneTextures = MobileSceneTexturesPerView[ViewIndex];
                    AddMobilePostProcessingPasses(GraphBuilder, Views[ViewIndex], PostProcessingInputs, NumMSAASamples > 1);
                }
            }
        }
    }

    GEngine->GetPostRenderDelegate().Broadcast();

    RHICmdList.SetCurrentStat(GET_STATID(STAT_CLMM_SceneEnd));

    if (bShouldRenderVelocities)
    {
        SceneContext.SceneVelocity.SafeRelease();
    }

    if (ViewFamily.bLateLatchingEnabled)
    {
        EndLateLatching(RHICmdList, Views[0]);
    }

    RenderFinish(GraphBuilder, ViewFamilyTexture);
    GraphBuilder.Execute();

    // Poll occlusion queries.
    FRHICommandListExecutor::GetImmediateCommandList().PollOcclusionQueries();
    FRHICommandListExecutor::GetImmediateCommandList().ImmediateFlush(EImmediateFlushType::DispatchToRHIThread);
}
```

Yes Analyze the unreal rendering system (04) - delay rendering pipeline Students in this chapter should know that the scene rendering process on the mobile terminal simplifies many steps, which is equivalent to a subset of the scene renderer on the PC terminal. Of course, in order to adapt to the unique GPU hardware architecture of the mobile terminal, the scene rendering of the mobile terminal is also different from that of the PC terminal. It will be analyzed in detail later. The main steps and processes of the mobile end scenario are as follows:

As for the above flow chart, the following points need to be explained:

- The flowchart nodes bDeferredShading and bDeferredShading2 are the same variable. The main purpose of distinguishing them here is to prevent mermaid syntax drawing errors.

- Nodes with * are conditional and non inevitable steps.

UE4.26 adds the delayed rendering pipeline of the mobile terminal, so the above code has the forward rendering branch RenderForward and the delayed rendering branch renderderrred, which return the rendering result SceneColor.

The mobile renderer also supports features such as GPU Scene primitives, distance field (SDF) shadows, AO, sky atmosphere, virtual textures and occlusion culling.

Since UE 4.26, the RDG (Render Dependency Graph) system has been used widely throughout the renderer, and the mobile scene renderer is no exception. The code above declares several FRDGBuilder instances, used to compute the light grid, render the sky atmosphere LUT, render custom depth and the velocity buffer, run post-render events, perform post-processing and so on. Each of these is a relatively independent functional module or rendering stage.
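The central idea behind RDG is that passes are recorded first and executed later, when the builder's Execute() is called. The following is a deliberately tiny sketch of that record-then-execute pattern. `ToyGraphBuilder`, `AddPass` and `Execute` here are hypothetical names invented for illustration and are not UE's FRDGBuilder API. The real RDG also tracks resources, inserts resource transitions and culls passes whose outputs are never used.

```cpp
#include <cassert>
#include <functional>
#include <string>
#include <utility>
#include <vector>

// Hypothetical toy graph builder: passes are recorded first and only run on Execute().
class ToyGraphBuilder {
public:
    // Record a pass; its body is NOT run here.
    void AddPass(std::string name, std::function<void()> body) {
        passes_.push_back({std::move(name), std::move(body)});
    }

    // Run every recorded pass in recording order and return the names in execution order.
    std::vector<std::string> Execute() {
        std::vector<std::string> executed;
        for (auto& pass : passes_) {
            pass.body();
            executed.push_back(pass.name);
        }
        passes_.clear();  // in this sketch a builder is used once per rendering stage
        return executed;
    }

private:
    struct Pass {
        std::string name;
        std::function<void()> body;
    };
    std::vector<Pass> passes_;
};
```

Because several independent builders are created in the mobile renderer, each stage (sky atmosphere LUT, post-processing, etc.) records and executes its own small graph.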

12.3.2 RenderForward

RenderForward implements the forward rendering branch of the mobile scene renderer. Its code and analysis are as follows:

FRHITexture* FMobileSceneRenderer::RenderForward(FRHICommandListImmediate& RHICmdList, const TArrayView<const FViewInfo*> ViewList)

{

const FViewInfo& View = *ViewList[0];

FSceneRenderTargets& SceneContext = FSceneRenderTargets::Get(RHICmdList);

FRHITexture* SceneColor = nullptr;

FRHITexture* SceneColorResolve = nullptr;

FRHITexture* SceneDepth = nullptr;

ERenderTargetActions ColorTargetAction = ERenderTargetActions::Clear_Store;

EDepthStencilTargetActions DepthTargetAction = EDepthStencilTargetActions::ClearDepthStencil_DontStoreDepthStencil;

// Whether mobile MSAA is enabled

bool bMobileMSAA = NumMSAASamples > 1 && SceneContext.GetSceneColorSurface()->GetNumSamples() > 1;

// Whether mobile multi-view mode (VR) is enabled

static const auto CVarMobileMultiView = IConsoleManager::Get().FindTConsoleVariableDataInt(TEXT("vr.MobileMultiView"));

const bool bIsMultiViewApplication = (CVarMobileMultiView && CVarMobileMultiView->GetValueOnAnyThread() != 0);

// Gamma-space branch: render directly to the view family's render target

if (bGammaSpace && !bRenderToSceneColor)

{

// With MSAA: render to the multisampled scene color surface from SceneContext and resolve into the view family's render target

if (bMobileMSAA)

{

SceneColor = SceneContext.GetSceneColorSurface();

SceneColorResolve = ViewFamily.RenderTarget->GetRenderTargetTexture();

ColorTargetAction = ERenderTargetActions::Clear_Resolve;

RHICmdList.Transition(FRHITransitionInfo(SceneColorResolve, ERHIAccess::Unknown, ERHIAccess::RTV | ERHIAccess::ResolveDst));

}

// Without MSAA: render directly into the view family's render target

else

{

SceneColor = ViewFamily.RenderTarget->GetRenderTargetTexture();

RHICmdList.Transition(FRHITransitionInfo(SceneColor, ERHIAccess::Unknown, ERHIAccess::RTV));

}

SceneDepth = SceneContext.GetSceneDepthSurface();

}

// Linear (HDR) space, or rendering to the scene color texture

else

{

SceneColor = SceneContext.GetSceneColorSurface();

if (bMobileMSAA)

{

SceneColorResolve = SceneContext.GetSceneColorTexture();

ColorTargetAction = ERenderTargetActions::Clear_Resolve;

RHICmdList.Transition(FRHITransitionInfo(SceneColorResolve, ERHIAccess::Unknown, ERHIAccess::RTV | ERHIAccess::ResolveDst));

}

else

{

SceneColorResolve = nullptr;

ColorTargetAction = ERenderTargetActions::Clear_Store;

}

SceneDepth = SceneContext.GetSceneDepthSurface();

if (bRequiresMultiPass)

{

// store targets after opaque so translucency render pass can be restarted

ColorTargetAction = ERenderTargetActions::Clear_Store;

DepthTargetAction = EDepthStencilTargetActions::ClearDepthStencil_StoreDepthStencil;

}

if (bKeepDepthContent)

{

// store depth if post-processing/capture needs it

DepthTargetAction = EDepthStencilTargetActions::ClearDepthStencil_StoreDepthStencil;

}

}

// With a full depth prepass, depth/stencil already hold valid data, so load them instead of clearing

if (bIsFullPrepassEnabled)

{

ERenderTargetActions DepthTarget = MakeRenderTargetActions(ERenderTargetLoadAction::ELoad, GetStoreAction(GetDepthActions(DepthTargetAction)));

ERenderTargetActions StencilTarget = MakeRenderTargetActions(ERenderTargetLoadAction::ELoad, GetStoreAction(GetStencilActions(DepthTargetAction)));

DepthTargetAction = MakeDepthStencilTargetActions(DepthTarget, StencilTarget);

}

FRHITexture* ShadingRateTexture = nullptr;

if (!View.bIsSceneCapture && !View.bIsReflectionCapture)

{

TRefCountPtr<IPooledRenderTarget> ShadingRateTarget = GVRSImageManager.GetMobileVariableRateShadingImage(ViewFamily);

if (ShadingRateTarget.IsValid())

{

ShadingRateTexture = ShadingRateTarget->GetRenderTargetItem().ShaderResourceTexture;

}

}

// Render pass info for scene color rendering

FRHIRenderPassInfo SceneColorRenderPassInfo(

SceneColor,

ColorTargetAction,

SceneColorResolve,

SceneDepth,

DepthTargetAction,

nullptr, // we never resolve scene depth on mobile

ShadingRateTexture,

VRSRB_Sum,

FExclusiveDepthStencil::DepthWrite_StencilWrite

);

SceneColorRenderPassInfo.SubpassHint = ESubpassHint::DepthReadSubpass;

if (!bIsFullPrepassEnabled)

{

SceneColorRenderPassInfo.NumOcclusionQueries = ComputeNumOcclusionQueriesToBatch();

SceneColorRenderPassInfo.bOcclusionQueries = SceneColorRenderPassInfo.NumOcclusionQueries != 0;

}

// If the scene color isn't multi-view but the application is, render as a single-view multi-view pass because of the shaders

SceneColorRenderPassInfo.MultiViewCount = View.bIsMobileMultiViewEnabled ? 2 : (bIsMultiViewApplication ? 1 : 0);

// Begin the scene color render pass

RHICmdList.BeginRenderPass(SceneColorRenderPassInfo, TEXT("SceneColorRendering"));

if (GIsEditor && !View.bIsSceneCapture)

{

DrawClearQuad(RHICmdList, Views[0].BackgroundColor);

}

if (!bIsFullPrepassEnabled)

{

RHICmdList.SetCurrentStat(GET_STATID(STAT_CLM_MobilePrePass));

// Render depth pre pass

RenderPrePass(RHICmdList);

}

RHICmdList.SetCurrentStat(GET_STATID(STAT_CLMM_Opaque));

// Render BasePass: opaque and masked objects

RenderMobileBasePass(RHICmdList, ViewList);

RHICmdList.ImmediateFlush(EImmediateFlushType::DispatchToRHIThread);

// Render debug view modes

#if !(UE_BUILD_SHIPPING || UE_BUILD_TEST)

if (ViewFamily.UseDebugViewPS())

{

// Here we use the base pass depth result to get z culling for opaque and masked.

// The color needs to be cleared at this point since shader complexity renders in additive.

DrawClearQuad(RHICmdList, FLinearColor::Black);

RenderMobileDebugView(RHICmdList, ViewList);

RHICmdList.ImmediateFlush(EImmediateFlushType::DispatchToRHIThread);

}

#endif // !(UE_BUILD_SHIPPING || UE_BUILD_TEST)

const bool bAdrenoOcclusionMode = CVarMobileAdrenoOcclusionMode.GetValueOnRenderThread() != 0;

if (!bIsFullPrepassEnabled)

{

// Occlusion Culling

if (!bAdrenoOcclusionMode)

{

// Issue occlusion queries

RHICmdList.SetCurrentStat(GET_STATID(STAT_CLMM_Occlusion));

RenderOcclusion(RHICmdList);

RHICmdList.ImmediateFlush(EImmediateFlushType::DispatchToRHIThread);

}

}

// Post-base-pass callbacks for view extensions (plug-in rendering)

{

CSV_SCOPED_TIMING_STAT_EXCLUSIVE(ViewExtensionPostRenderBasePass);

QUICK_SCOPE_CYCLE_COUNTER(STAT_FMobileSceneRenderer_ViewExtensionPostRenderBasePass);

for (int32 ViewExt = 0; ViewExt < ViewFamily.ViewExtensions.Num(); ++ViewExt)

{

for (int32 ViewIndex = 0; ViewIndex < ViewFamily.Views.Num(); ++ViewIndex)

{

ViewFamily.ViewExtensions[ViewExt]->PostRenderBasePass_RenderThread(RHICmdList, Views[ViewIndex]);

}

}

}

// Translucency in a separate pass or pixel projected reflections require ending the current render pass

if (bRequiresMultiPass || bRequiresPixelProjectedPlanarRelfectionPass)

{

RHICmdList.EndRenderPass();

}

RHICmdList.SetCurrentStat(GET_STATID(STAT_CLMM_Translucency));

// Begin a separate translucency render pass if required

if (bRequiresMultiPass || bRequiresPixelProjectedPlanarRelfectionPass)

{

check(RHICmdList.IsOutsideRenderPass());

// If the hardware cannot read and write the same depth buffer, resolve (copy) the scene depth

ConditionalResolveSceneDepth(RHICmdList, View);

if (bRequiresPixelProjectedPlanarRelfectionPass)

{

const FPlanarReflectionSceneProxy* PlanarReflectionSceneProxy = Scene ? Scene->GetForwardPassGlobalPlanarReflection() : nullptr;

RenderPixelProjectedReflection(RHICmdList, SceneContext, PlanarReflectionSceneProxy);

FRHITransitionInfo TranslucentRenderPassTransitions[] = {

FRHITransitionInfo(SceneColor, ERHIAccess::SRVMask, ERHIAccess::RTV),

FRHITransitionInfo(SceneDepth, ERHIAccess::SRVMask, ERHIAccess::DSVWrite)

};

RHICmdList.Transition(MakeArrayView(TranslucentRenderPassTransitions, UE_ARRAY_COUNT(TranslucentRenderPassTransitions)));

}

DepthTargetAction = EDepthStencilTargetActions::LoadDepthStencil_DontStoreDepthStencil;

FExclusiveDepthStencil::Type ExclusiveDepthStencil = FExclusiveDepthStencil::DepthRead_StencilRead;

if (bModulatedShadowsInUse)

{

ExclusiveDepthStencil = FExclusiveDepthStencil::DepthRead_StencilWrite;

}

// Opaque meshes used for mobile pixel projected reflection must write depth to the depth RT, because the mesh is rendered only once (when the quality level is BestPerformance or lower)

if (IsMobilePixelProjectedReflectionEnabled(View.GetShaderPlatform())

&& GetMobilePixelProjectedReflectionQuality() == EMobilePixelProjectedReflectionQuality::BestPerformance)

{

ExclusiveDepthStencil = FExclusiveDepthStencil::DepthWrite_StencilWrite;

}

if (bKeepDepthContent && !bMobileMSAA)

{

DepthTargetAction = EDepthStencilTargetActions::LoadDepthStencil_StoreDepthStencil;

}

#if PLATFORM_HOLOLENS

if (bShouldRenderDepthToTranslucency)

{

ExclusiveDepthStencil = FExclusiveDepthStencil::DepthWrite_StencilWrite;

}

#endif

// Render pass info for translucent objects

FRHIRenderPassInfo TranslucentRenderPassInfo(

SceneColor,

SceneColorResolve ? ERenderTargetActions::Load_Resolve : ERenderTargetActions::Load_Store,

SceneColorResolve,

SceneDepth,

DepthTargetAction,

nullptr,

ShadingRateTexture,

VRSRB_Sum,

ExclusiveDepthStencil

);

TranslucentRenderPassInfo.NumOcclusionQueries = 0;

TranslucentRenderPassInfo.bOcclusionQueries = false;

TranslucentRenderPassInfo.SubpassHint = ESubpassHint::DepthReadSubpass;

// Begin the translucency render pass

RHICmdList.BeginRenderPass(TranslucentRenderPassInfo, TEXT("SceneColorTranslucencyRendering"));

}

// Next subpass: scene depth is read-only and can be fetched

RHICmdList.NextSubpass();

if (!View.bIsPlanarReflection)

{

// Render decals

if (ViewFamily.EngineShowFlags.Decals)

{

CSV_SCOPED_TIMING_STAT_EXCLUSIVE(RenderDecals);

RenderDecals(RHICmdList);

}

// Render modulated shadow projections

if (ViewFamily.EngineShowFlags.DynamicShadows)

{

CSV_SCOPED_TIMING_STAT_EXCLUSIVE(RenderShadowProjections);

RenderModulatedShadowProjections(RHICmdList);

}

}

// Render translucency

if (ViewFamily.EngineShowFlags.Translucency)

{

CSV_SCOPED_TIMING_STAT_EXCLUSIVE(RenderTranslucency);

SCOPE_CYCLE_COUNTER(STAT_TranslucencyDrawTime);

RenderTranslucency(RHICmdList, ViewList);

FRHICommandListExecutor::GetImmediateCommandList().PollOcclusionQueries();

RHICmdList.ImmediateFlush(EImmediateFlushType::DispatchToRHIThread);

}

if (!bIsFullPrepassEnabled)

{

// Adreno occlusion culling mode

if (bAdrenoOcclusionMode)

{

RHICmdList.SetCurrentStat(GET_STATID(STAT_CLMM_Occlusion));

// flush

RHICmdList.SubmitCommandsHint();

bSubmitOffscreenRendering = false; // submit once

// Issue occlusion queries

RenderOcclusion(RHICmdList);

RHICmdList.ImmediateFlush(EImmediateFlushType::DispatchToRHIThread);

}

}

// Pre-tonemap before the MSAA resolve (iOS only)

if (!bGammaSpace)

{

PreTonemapMSAA(RHICmdList);

}

// End the scene color render pass

RHICmdList.EndRenderPass();

// Return the resolve target when MSAA is enabled, otherwise the scene color itself

return SceneColorResolve ? SceneColorResolve : SceneColor;

}
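The load/store decisions made at the start of RenderForward can be distilled into a small pure function, which makes the interplay of the flags easier to see. This is a simplified sketch: `ForwardPassFlags`, `ForwardPassActions`, `ColorAction` and `ChooseForwardActions` are hypothetical names, and UE's real ERenderTargetActions / EDepthStencilTargetActions have more values than modeled here.

```cpp
#include <cassert>

// Simplified stand-in for the two color actions RenderForward chooses between.
enum class ColorAction { ClearStore, ClearResolve };

struct ForwardPassFlags {
    bool bGammaSpaceToViewTarget;  // corresponds to bGammaSpace && !bRenderToSceneColor
    bool bMobileMSAA;
    bool bRequiresMultiPass;
    bool bKeepDepthContent;
    bool bIsFullPrepassEnabled;
};

struct ForwardPassActions {
    ColorAction color;
    bool bLoadDepth;   // true: load depth/stencil (the full prepass already wrote it); false: clear
    bool bStoreDepth;  // true: store depth/stencil to memory when the pass ends
};

// Mirrors the branch structure of the scene color pass setup in RenderForward (simplified).
ForwardPassActions ChooseForwardActions(const ForwardPassFlags& f) {
    ForwardPassActions a{ColorAction::ClearStore, false, false};
    if (f.bMobileMSAA) {
        a.color = ColorAction::ClearResolve;  // resolve the MSAA samples at the end of the pass
    }
    if (!f.bGammaSpaceToViewTarget) {
        if (f.bRequiresMultiPass) {
            // Translucency is drawn in a second pass, so color and depth must survive.
            a.color = ColorAction::ClearStore;
            a.bStoreDepth = true;
        }
        if (f.bKeepDepthContent) {
            a.bStoreDepth = true;  // e.g. post-processing or a capture needs depth
        }
    }
    a.bLoadDepth = f.bIsFullPrepassEnabled;
    return a;
}
```

Note that in the gamma-space branch neither bRequiresMultiPass nor bKeepDepthContent affects the actions, exactly as in the original code.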

The main steps of mobile forward rendering are similar to those on PC: the PrePass, the BasePass, special passes (decals, AO, occlusion culling, etc.) and translucent objects are rendered in turn. The flowchart is as follows:

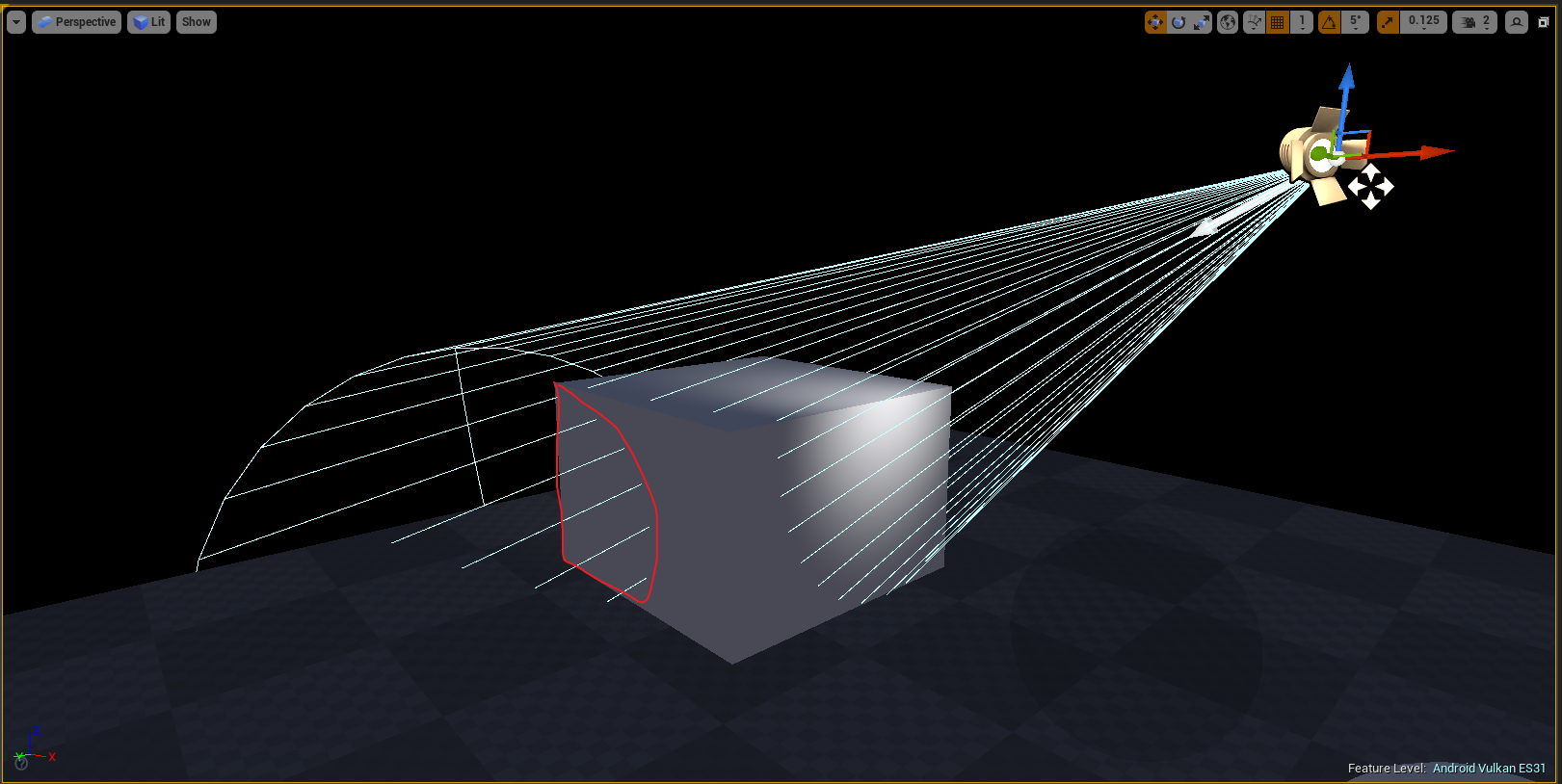

Occlusion culling is GPU-vendor specific. For example, Qualcomm Adreno GPUs require the occlusion queries to be issued between flushing the rendering commands and switching the FBO:

Render Opaque -> Render Translucent -> Flush -> Render Queries -> Switch FBO

UE follows this Adreno-specific requirement and handles occlusion culling specially for it: when bAdrenoOcclusionMode is set, the occlusion queries are issued only after translucency has been rendered and the commands have been flushed.

Adreno GPUs support a hybrid of the TBDR binning mode and the ordinary direct mode, and automatically switch to direct mode for occlusion queries to reduce their cost. If the queries are not submitted between the flush and the FBO switch, the whole rendering pipeline stalls and performance suffers.

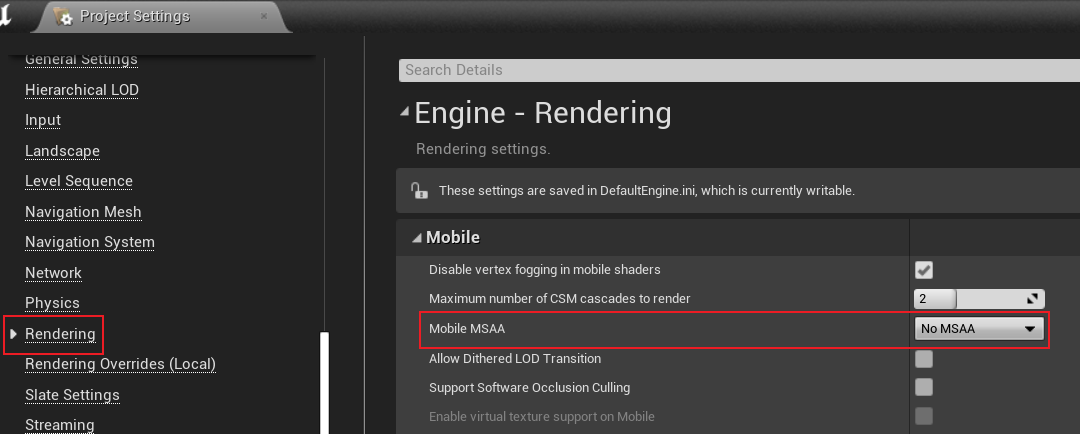
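The effect of bAdrenoOcclusionMode on the order of the main steps in RenderForward can be sketched as follows. This is a simplification assuming a single render pass without a full prepass; decals, modulated shadows and debug views are omitted, and `ForwardStepOrder` is a hypothetical helper, not UE code.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Order of the main RenderForward steps (simplified: one render pass, no full prepass).
std::vector<std::string> ForwardStepOrder(bool bAdrenoOcclusionMode) {
    std::vector<std::string> steps = {"PrePass", "BasePass"};
    if (!bAdrenoOcclusionMode) {
        steps.push_back("OcclusionQueries");  // issued right after the base pass
    }
    steps.push_back("Translucency");
    if (bAdrenoOcclusionMode) {
        steps.push_back("Flush");             // RHICmdList.SubmitCommandsHint() in the original
        steps.push_back("OcclusionQueries");  // after the flush, before the FBO switch
    }
    steps.push_back("EndRenderPass");         // the FBO switch
    return steps;
}
```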

MSAA is the preferred anti-aliasing method for UE's mobile forward renderer, because it is natively supported by the hardware and strikes a good balance between quality and cost. That is why the code above contains so much MSAA-related logic, covering the color and depth textures and their resource states. When MSAA is enabled, the scene color is by default resolved in RHICmdList.EndRenderPass() (at the same time, the on-chip tile data is written back to system memory), which yields the anti-aliased texture. MSAA is not enabled by default on mobile, but it can be turned on in the project settings.
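Conceptually, the resolve performed at the end of the pass turns N samples per pixel into one color. A common resolve is a plain box filter, that is, the average of a pixel's samples. The CPU sketch below shows that idea only; the actual resolve is done by the GPU hardware and its exact filter is implementation specific. `Color` and `ResolveMSAA` are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

struct Color {
    float r, g, b;
};

// Box-filter MSAA resolve: every output pixel is the average of its samples.
// `samples` stores pixelCount * sampleCount colors, with each pixel's samples contiguous.
std::vector<Color> ResolveMSAA(const std::vector<Color>& samples, std::size_t sampleCount) {
    assert(sampleCount > 0 && samples.size() % sampleCount == 0);
    const float weight = 1.0f / static_cast<float>(sampleCount);
    std::vector<Color> resolved(samples.size() / sampleCount, Color{0.0f, 0.0f, 0.0f});
    for (std::size_t i = 0; i < samples.size(); ++i) {
        Color& dst = resolved[i / sampleCount];  // pixel that owns sample i
        dst.r += samples[i].r * weight;
        dst.g += samples[i].g * weight;
        dst.b += samples[i].b * weight;
    }
    return resolved;
}
```

For a 4x MSAA edge pixel where two samples are covered by a white triangle and two by a black background, the resolved value is mid-grey (0.5), which is what smooths the edge.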

Forward rendering supports two color space modes: gamma space and HDR (linear space). In linear space, post-processing steps such as tone mapping are required. HDR is the default, and it can be changed with the Mobile HDR option in the project settings.
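To illustrate why linear (HDR) scene color needs the extra post-processing work, here is a minimal per-channel sketch: an unbounded linear value is first compressed into [0, 1) by a tone mapping curve and then encoded for display. The sketch uses the simple Reinhard operator x / (1 + x) and a pure 2.2 power gamma as stand-ins; UE's mobile tonemapper uses a different (filmic) curve, and the function names are hypothetical.

```cpp
#include <cassert>
#include <cmath>

// Reinhard tone mapping: compresses [0, inf) HDR values into [0, 1).
float ReinhardTonemap(float linearHDR) {
    assert(linearHDR >= 0.0f);
    return linearHDR / (1.0f + linearHDR);
}

// Approximate display encoding with a pure power-law gamma of 2.2.
float LinearToGamma(float linear01) {
    return std::pow(linear01, 1.0f / 2.2f);
}

// Per-channel conversion from linear HDR scene color to a displayable value.
float HDRToDisplay(float linearHDR) {
    return LinearToGamma(ReinhardTonemap(linearHDR));
}
```

In gamma-space (LDR) mode the shaders output display-ready values directly, so these steps are skipped, which is one reason that mode is cheaper.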

bRequiresMultiPass in the code above indicates whether translucent objects must be drawn in a separate render pass. Its value is determined by the following code:

```cpp
// Engine\Source\Runtime\Renderer\Private\MobileShadingRenderer.cpp
bool FMobileSceneRenderer::RequiresMultiPass(FRHICommandListImmediate& RHICmdList, const FViewInfo& View) const
{
	// Vulkan uses subpasses
	if (IsVulkanPlatform(ShaderPlatform))
	{
		return false;
	}

	// All iOS devices support frame_buffer_fetch
	if (IsMetalMobilePlatform(ShaderPlatform))
	{
		return false;
	}

	if (IsMobileDeferredShadingEnabled(ShaderPlatform))
	{
		// TODO: add GL support
		return true;
	}

	// Some Android devices support frame_buffer_fetch
	if (IsAndroidOpenGLESPlatform(ShaderPlatform) && (GSupportsShaderFramebufferFetch || GSupportsShaderDepthStencilFetch))
	{
		return false;
	}

	// Always render reflection capture in single pass
	if (View.bIsPlanarReflection || View.bIsSceneCapture)
	{
		return false;
	}

	// Always render LDR in single pass
	if (!IsMobileHDR())
	{
		return false;
	}

	// MSAA depth can't be sampled or resolved, unless we are on PC (no vulkan)
	if (NumMSAASamples > 1 && !IsSimulatedPlatform(ShaderPlatform))
	{
		return false;
	}

	return true;
}
```

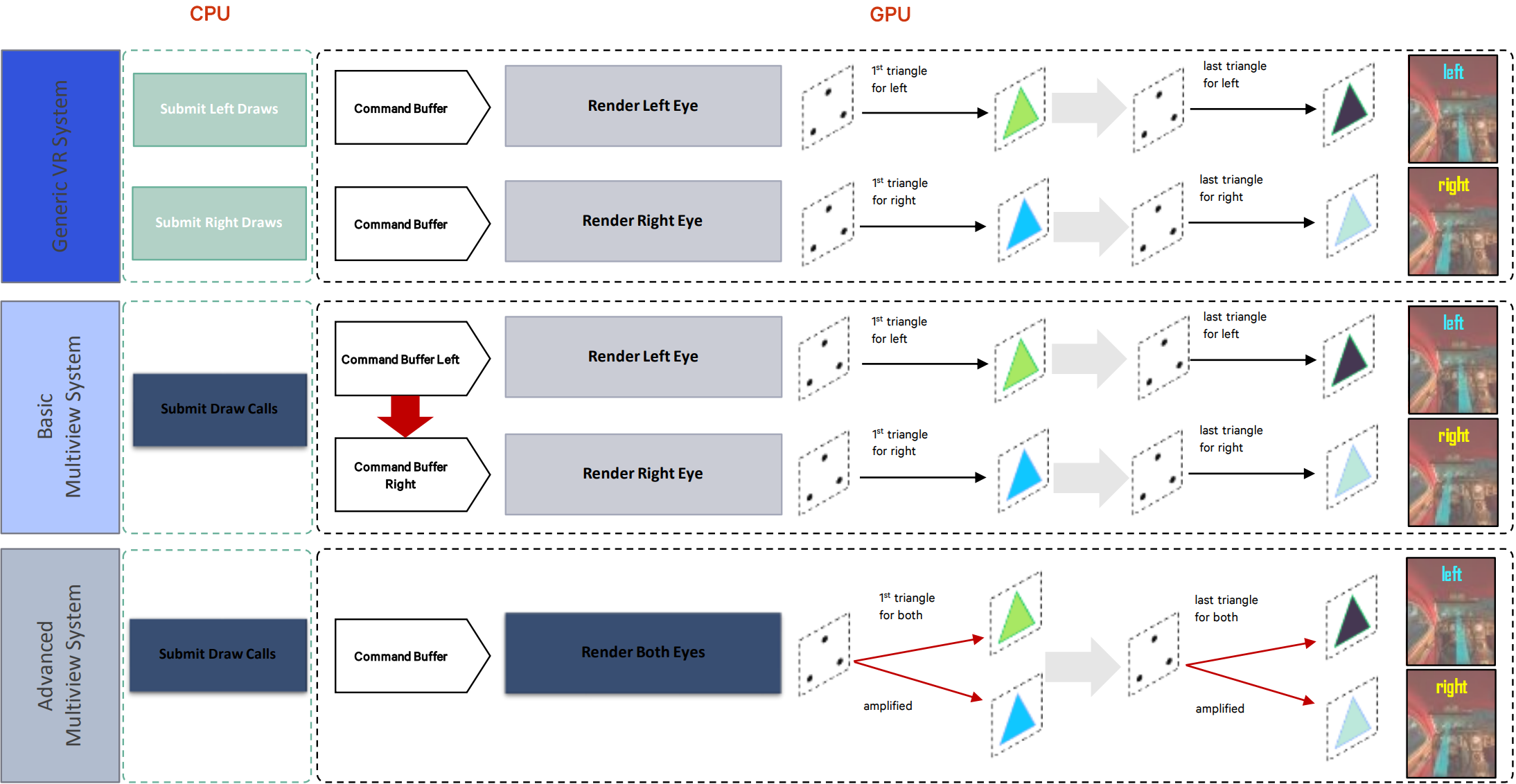

The related but distinct flags bIsMultiViewApplication and bIsMobileMultiViewEnabled indicate whether multi-view rendering is enabled and how many views are rendered. They are only used for VR and are determined by the console variable vr.MobileMultiView, the graphics API and other factors. In XR, MultiView reduces the cost of rendering the scene twice (once per eye). It has two modes, Basic and Advanced:

Comparison of MultiView modes used to optimize VR rendering. Top: without MultiView, each eye submits its own draw calls. Middle: Basic MultiView reuses the submitted commands and duplicates the command list at the GPU level. Bottom: Advanced MultiView reuses draw calls, the command list and geometry.
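The MultiViewCount selection in RenderForward, together with the submission savings the figure describes, can be summarized in a short sketch. `ComputeMultiViewCount` reproduces the ternary expression from the code above; `StereoDrawSubmissions` is a hypothetical, simplified model that counts only CPU-side draw submissions for one stereo frame.

```cpp
#include <cassert>

// Same selection as RenderForward:
// MultiViewCount = View.bIsMobileMultiViewEnabled ? 2 : (bIsMultiViewApplication ? 1 : 0);
int ComputeMultiViewCount(bool bIsMobileMultiViewEnabled, bool bIsMultiViewApplication) {
    if (bIsMobileMultiViewEnabled) {
        return 2;  // both eyes rendered by one multi-view render pass
    }
    if (bIsMultiViewApplication) {
        return 1;  // multi-view application, but this scene color is single-view
    }
    return 0;      // ordinary single-view rendering
}

// Simplified model: without MultiView every mesh is submitted once per eye,
// with MultiView it is submitted once for both eyes.
int StereoDrawSubmissions(int numMeshes, bool bUseMultiView) {
    return bUseMultiView ? numMeshes : 2 * numMeshes;
}
```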

bKeepDepthContent indicates whether the depth content must be preserved (stored) after rendering. It is determined by the following code:

```cpp
bKeepDepthContent =
	bRequiresMultiPass ||
	bForceDepthResolve ||
	bRequiresPixelProjectedPlanarRelfectionPass ||
	bSeparateTranslucencyActive ||
	Views[0].bIsReflectionCapture ||
	(bDeferredShading && bPostProcessUsesSceneDepth) ||
	bShouldRenderVelocities ||
	bIsFullPrepassEnabled;

// Depth is never kept when MSAA is enabled
bKeepDepthContent = (NumMSAASamples > 1 ? false : bKeepDepthContent);
```

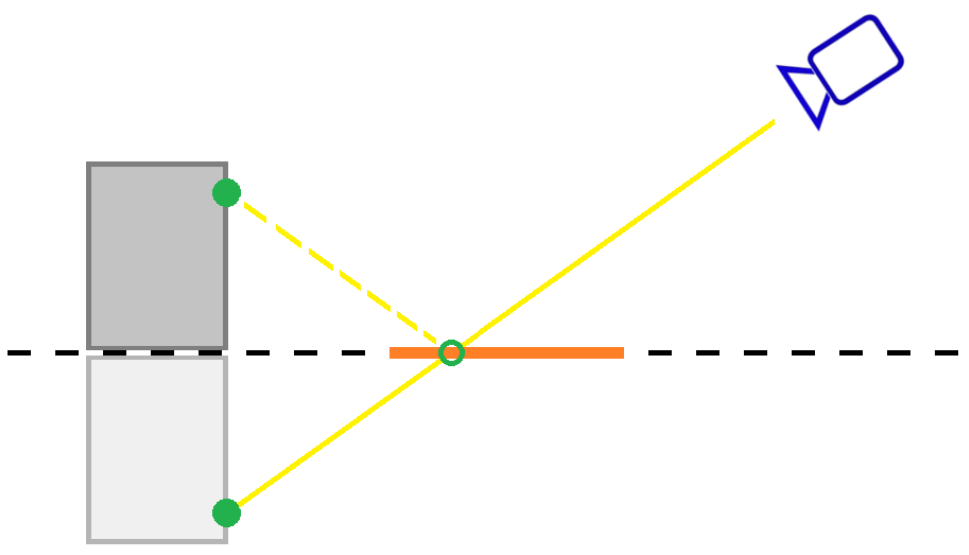

The code above also reveals a special planar reflection technique used on mobile: Pixel Projected Reflection (PPR). Its principle is similar to SSR, but it needs less data: only the scene color, the depth buffer and the reflection region. Its core steps are:

- For every scene color pixel, compute its mirrored position with respect to the reflection plane.

- Test whether the pixel's reflection falls within the reflection region.

- Cast a ray to the mirrored pixel location.

- Test whether the intersection lies within the reflection region.

- If an intersection is found, compute the screen position of the mirrored pixel.

- Write the pixel's color at the mirrored position (the intersection).

- If the intersection within the reflection region is occluded by other objects, discard the reflection at that position.
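The geometric core of the first steps above is mirroring a position across the reflection plane and checking it against the reflection region. A minimal sketch, assuming the plane is given as dot(n, x) + d = 0 with a unit normal n, so the mirror of p is p - 2(dot(n, p) + d)n, and assuming the region is a screen-space rectangle. The names are hypothetical, and the projection to screen space (view-projection matrix) and the occlusion handling are omitted.

```cpp
#include <cassert>

struct Vec3 {
    float x, y, z;
};

float Dot(const Vec3& a, const Vec3& b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

// Mirror point p across the plane dot(n, x) + d = 0 (n must be unit length):
// p' = p - 2 * (dot(n, p) + d) * n
Vec3 MirrorAcrossPlane(const Vec3& p, const Vec3& n, float d) {
    const float dist = Dot(n, p) + d;  // signed distance from p to the plane
    return {p.x - 2.0f * dist * n.x, p.y - 2.0f * dist * n.y, p.z - 2.0f * dist * n.z};
}

// Screen-space rectangle covering the reflective surface (hypothetical representation).
struct ScreenRect {
    float minX, minY, maxX, maxY;
};

// Test whether a projected position lies inside the reflection region.
bool InsideRegion(const ScreenRect& r, float sx, float sy) {
    return sx >= r.minX && sx <= r.maxX && sy >= r.minY && sy <= r.maxY;
}
```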

Screenshot of the PPR effect.

PPR can be configured in the project settings.

12.3.3 RenderDeferred

UE added a deferred rendering branch to the mobile rendering pipeline in 4.26 and improved and optimized it in 4.27. Whether mobile deferred shading is enabled is determined by the following code:

```cpp
// Engine\Source\Runtime\RenderCore\Private\RenderUtils.cpp
bool IsMobileDeferredShadingEnabled(const FStaticShaderPlatform Platform)
{
	// Deferred shading is disabled on OpenGL
	if (IsOpenGLPlatform(Platform))
	{
		// needs MRT framebuffer fetch or PLS
		return false;
	}

	// The console variable "r.Mobile.ShadingPath" must be 1
	static auto* MobileShadingPathCvar = IConsoleManager::Get().FindTConsoleVariableDataInt(TEXT("r.Mobile.ShadingPath"));
	return MobileShadingPathCvar->GetValueOnAnyThread() == 1;
}
```

Simply put, mobile deferred shading requires a graphics API other than OpenGL and the console variable r.Mobile.ShadingPath set to 1.

r.Mobile.ShadingPath cannot be changed dynamically in the editor. It can only be enabled by adding the following lines to Config/DefaultEngine.ini in the project root directory:

```ini
[/Script/Engine.RendererSettings]
r.Mobile.ShadingPath=1
```

After adding these lines, restart the UE editor and wait for the shaders to compile; the editor can then preview mobile deferred shading.

The following is the code and analysis of the deferred rendering branch, FMobileSceneRenderer::RenderDeferred:

FRHITexture* FMobileSceneRenderer::RenderDeferred(FRHICommandListImmediate& RHICmdList, const TArrayView<const FViewInfo*> ViewList, const FSortedLightSetSceneInfo& SortedLightSet)

{

FSceneRenderTargets& SceneContext = FSceneRenderTargets::Get(RHICmdList);

// Prepare GBuffer

FRHITexture* ColorTargets[4] = {

SceneContext.GetSceneColorSurface(),

SceneContext.GetGBufferATexture().GetReference(),

SceneContext.GetGBufferBTexture().GetReference(),

SceneContext.GetGBufferCTexture().GetReference()

};

// Whether the RHI needs to store the GBuffer to GPU memory and shade in a separate render pass

ERenderTargetActions GBufferAction = bRequiresMultiPass ? ERenderTargetActions::Clear_Store : ERenderTargetActions::Clear_DontStore;

EDepthStencilTargetActions DepthAction = bKeepDepthContent ? EDepthStencilTargetActions::ClearDepthStencil_StoreDepthStencil : EDepthStencilTargetActions::ClearDepthStencil_DontStoreDepthStencil;

// Load/store actions of the render targets

ERenderTargetActions ColorTargetsAction[4] = {ERenderTargetActions::Clear_Store, GBufferAction, GBufferAction, GBufferAction};

if (bIsFullPrepassEnabled)

{

ERenderTargetActions DepthTarget = MakeRenderTargetActions(ERenderTargetLoadAction::ELoad, GetStoreAction(GetDepthActions(DepthAction)));

ERenderTargetActions StencilTarget = MakeRenderTargetActions(ERenderTargetLoadAction::ELoad, GetStoreAction(GetStencilActions(DepthAction)));

DepthAction = MakeDepthStencilTargetActions(DepthTarget, StencilTarget);

}

FRHIRenderPassInfo BasePassInfo = FRHIRenderPassInfo();

int32 ColorTargetIndex = 0;

for (; ColorTargetIndex < UE_ARRAY_COUNT(ColorTargets); ++ColorTargetIndex)

{

BasePassInfo.ColorRenderTargets[ColorTargetIndex].RenderTarget = ColorTargets[ColorTargetIndex];

BasePassInfo.ColorRenderTargets[ColorTargetIndex].ResolveTarget = nullptr;

BasePassInfo.ColorRenderTargets[ColorTargetIndex].ArraySlice = -1;

BasePassInfo.ColorRenderTargets[ColorTargetIndex].MipIndex = 0;

BasePassInfo.ColorRenderTargets[ColorTargetIndex].Action = ColorTargetsAction[ColorTargetIndex];

}

if (MobileRequiresSceneDepthAux(ShaderPlatform))

{

BasePassInfo.ColorRenderTargets[ColorTargetIndex].RenderTarget = SceneContext.SceneDepthAux->GetRenderTargetItem().ShaderResourceTexture.GetReference();

BasePassInfo.ColorRenderTargets[ColorTargetIndex].ResolveTarget = nullptr;

BasePassInfo.ColorRenderTargets[ColorTargetIndex].ArraySlice = -1;

BasePassInfo.ColorRenderTargets[ColorTargetIndex].MipIndex = 0;

BasePassInfo.ColorRenderTargets[ColorTargetIndex].Action = GBufferAction;

ColorTargetIndex++;

}

BasePassInfo.DepthStencilRenderTarget.DepthStencilTarget = SceneContext.GetSceneDepthSurface();

BasePassInfo.DepthStencilRenderTarget.ResolveTarget = nullptr;

BasePassInfo.DepthStencilRenderTarget.Action = DepthAction;

BasePassInfo.DepthStencilRenderTarget.ExclusiveDepthStencil = FExclusiveDepthStencil::DepthWrite_StencilWrite;

BasePassInfo.SubpassHint = ESubpassHint::DeferredShadingSubpass;

if (!bIsFullPrepassEnabled)

{

BasePassInfo.NumOcclusionQueries = ComputeNumOcclusionQueriesToBatch();

BasePassInfo.bOcclusionQueries = BasePassInfo.NumOcclusionQueries != 0;

}

BasePassInfo.ShadingRateTexture = nullptr;

BasePassInfo.bIsMSAA = false;

BasePassInfo.MultiViewCount = 0;

RHICmdList.BeginRenderPass(BasePassInfo, TEXT("BasePassRendering"));

if (GIsEditor && !Views[0].bIsSceneCapture)

{

DrawClearQuad(RHICmdList, Views[0].BackgroundColor);

}

// Depth PrePass

if (!bIsFullPrepassEnabled)

{

RHICmdList.SetCurrentStat(GET_STATID(STAT_CLM_MobilePrePass));

// Depth pre-pass

RenderPrePass(RHICmdList);

}

// BasePass: opaque and masked objects

RHICmdList.SetCurrentStat(GET_STATID(STAT_CLMM_Opaque));

RenderMobileBasePass(RHICmdList, ViewList);

RHICmdList.ImmediateFlush(EImmediateFlushType::DispatchToRHIThread);

// Occlusion culling

if (!bIsFullPrepassEnabled)

{

// Issue occlusion queries

RHICmdList.SetCurrentStat(GET_STATID(STAT_CLMM_Occlusion));

RenderOcclusion(RHICmdList);

RHICmdList.ImmediateFlush(EImmediateFlushType::DispatchToRHIThread);

}

// Single-pass mode (subpasses)

if (!bRequiresMultiPass)

{

// Next subpass: SceneColor + GBuffer write, SceneDepth read-only

RHICmdList.NextSubpass();

// Render decals

if (ViewFamily.EngineShowFlags.Decals)

{

CSV_SCOPED_TIMING_STAT_EXCLUSIVE(RenderDecals);

RenderDecals(RHICmdList);

}

// Next subpass: SceneColor write, SceneDepth read-only

RHICmdList.NextSubpass();

// Deferred lighting

MobileDeferredShadingPass(RHICmdList, *Scene, ViewList, SortedLightSet);

// Render translucency

if (ViewFamily.EngineShowFlags.Translucency)

{

CSV_SCOPED_TIMING_STAT_EXCLUSIVE(RenderTranslucency);

SCOPE_CYCLE_COUNTER(STAT_TranslucencyDrawTime);

RenderTranslucency(RHICmdList, ViewList);

FRHICommandListExecutor::GetImmediateCommandList().PollOcclusionQueries();

RHICmdList.ImmediateFlush(EImmediateFlushType::DispatchToRHIThread);

}

// End the render pass

RHICmdList.EndRenderPass();

}

// Multi-pass mode (e.g. mobile emulated on a PC device)

else

{

// Step through the remaining subpasses, then end the render pass

RHICmdList.NextSubpass();

RHICmdList.NextSubpass();

RHICmdList.EndRenderPass();

// SceneColor + GBuffer write, SceneDepth is read only

{

for (int32 Index = 0; Index < UE_ARRAY_COUNT(ColorTargets); ++Index)

{

BasePassInfo.ColorRenderTargets[Index].Action = ERenderTargetActions::Load_Store;

}

BasePassInfo.DepthStencilRenderTarget.Action = EDepthStencilTargetActions::LoadDepthStencil_StoreDepthStencil;

BasePassInfo.DepthStencilRenderTarget.ExclusiveDepthStencil = FExclusiveDepthStencil::DepthRead_StencilRead;

BasePassInfo.SubpassHint = ESubpassHint::None;

BasePassInfo.NumOcclusionQueries = 0;

BasePassInfo.bOcclusionQueries = false;

RHICmdList.BeginRenderPass(BasePassInfo, TEXT("AfterBasePass"));

// Render decals

if (ViewFamily.EngineShowFlags.Decals)

{

CSV_SCOPED_TIMING_STAT_EXCLUSIVE(RenderDecals);

RenderDecals(RHICmdList);

}

RHICmdList.EndRenderPass();

}

// SceneColor write, SceneDepth is read only

{

FRHIRenderPassInfo ShadingPassInfo(

SceneContext.GetSceneColorSurface(),

ERenderTargetActions::Load_Store,

nullptr,

SceneContext.GetSceneDepthSurface(),

EDepthStencilTargetActions::LoadDepthStencil_StoreDepthStencil,

nullptr,

nullptr,

VRSRB_Passthrough,

FExclusiveDepthStencil::DepthRead_StencilWrite

);

ShadingPassInfo.NumOcclusionQueries = 0;

ShadingPassInfo.bOcclusionQueries = false;

RHICmdList.BeginRenderPass(ShadingPassInfo, TEXT("MobileShadingPass"));

// Deferred lighting

MobileDeferredShadingPass(RHICmdList, *Scene, ViewList, SortedLightSet);

// Render translucency

if (ViewFamily.EngineShowFlags.Translucency)

{

CSV_SCOPED_TIMING_STAT_EXCLUSIVE(RenderTranslucency);

SCOPE_CYCLE_COUNTER(STAT_TranslucencyDrawTime);

RenderTranslucency(RHICmdList, ViewList);

FRHICommandListExecutor::GetImmediateCommandList().PollOcclusionQueries();

RHICmdList.ImmediateFlush(EImmediateFlushType::DispatchToRHIThread);

}

RHICmdList.EndRenderPass();

}

}

return ColorTargets[0];

}

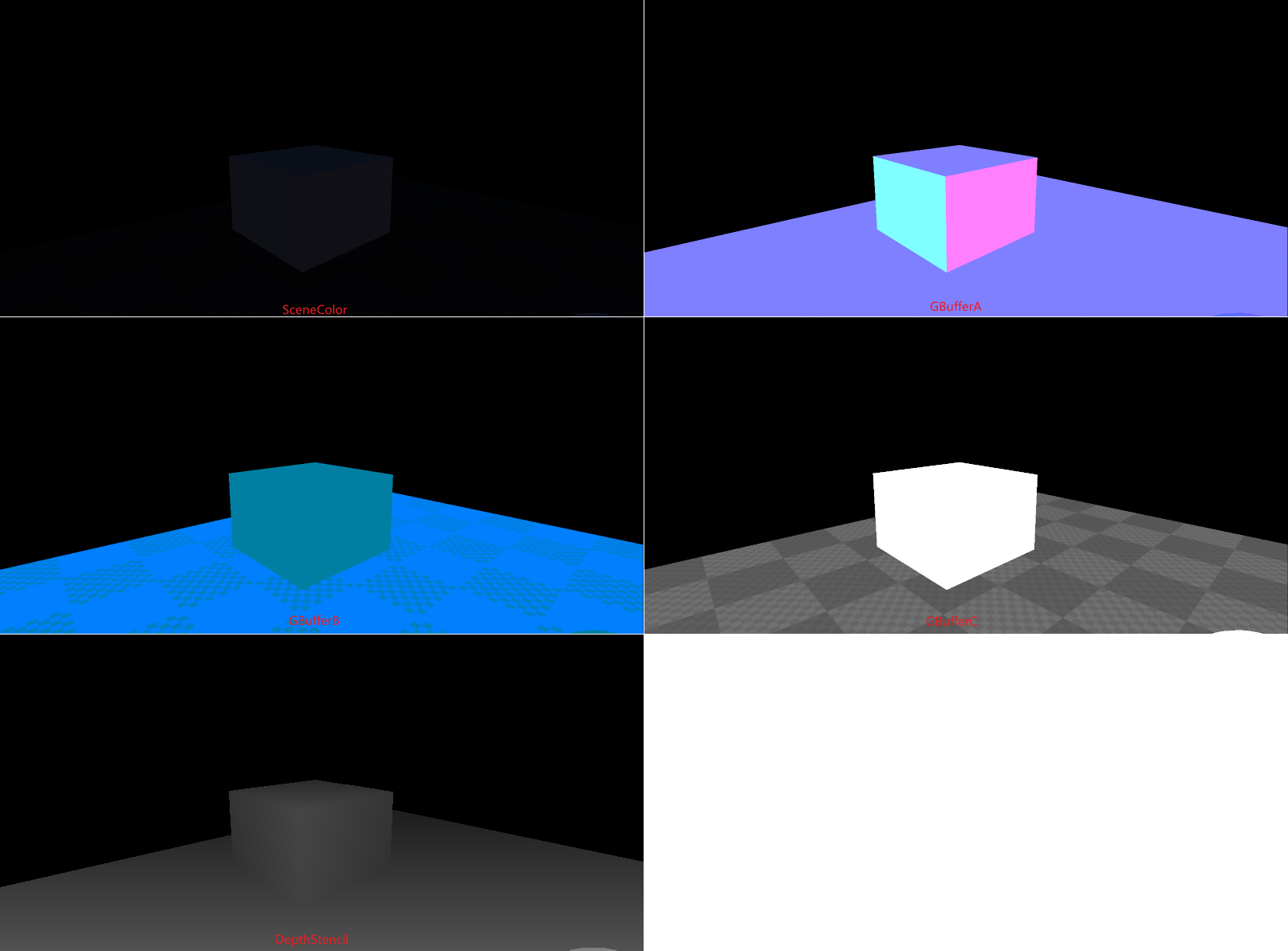

As the code above shows, the mobile deferred rendering pipeline is similar to the PC one: it first renders the BasePass to write geometric information into the GBuffer, and then performs the lighting calculation. The flowchart is as follows:

There are, of course, some differences from PC. The most obvious one is that mobile uses subpass rendering adapted to the TB(D)R architecture: while rendering the depth PrePass, the BasePass and the lighting, the scene color, depth, GBuffer and other data stay in on-chip tile memory, which improves rendering efficiency and reduces power consumption.
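A rough worked estimate shows why keeping the GBuffer on chip matters. In the single-pass path above, GBufferA/B/C use Clear_DontStore, so they never leave tile memory; a multi-pass renderer would instead write them out after the BasePass and read them back for lighting. The sketch assumes three 32-bit (4-byte) GBuffer targets at 1920x1080; actual formats depend on the project configuration, and the function name is hypothetical.

```cpp
#include <cassert>
#include <cstdint>

// External memory traffic if the GBuffer is written out after the base pass and read
// back for lighting: one full store plus one full load per target.
std::uint64_t GBufferRoundTripBytes(std::uint32_t width, std::uint32_t height,
                                    std::uint32_t numTargets, std::uint32_t bytesPerPixel) {
    const std::uint64_t bytesPerTarget = std::uint64_t(width) * height * bytesPerPixel;
    return 2 * bytesPerTarget * numTargets;  // store + load
}
```

Under these assumptions the round trip costs 49,766,400 bytes per frame, or roughly 3 GB per second at 60 fps, traffic that subpass rendering avoids entirely.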

12.3.3.1 MobileDeferredShadingPass

Deferred lighting is performed by MobileDeferredShadingPass:

```cpp
void MobileDeferredShadingPass(
	FRHICommandListImmediate& RHICmdList,
	const FScene& Scene,
	const TArrayView<const FViewInfo*> PassViews,
	const FSortedLightSetSceneInfo &SortedLightSet)
{
	SCOPED_DRAW_EVENT(RHICmdList, MobileDeferredShading);

	const FViewInfo& View0 = *PassViews[0];
	FSceneRenderTargets& SceneContext = FSceneRenderTargets::Get(RHICmdList);

	// Create the scene texture uniform buffer and bind it globally
	FUniformBufferRHIRef PassUniformBuffer = CreateMobileSceneTextureUniformBuffer(RHICmdList);
	FUniformBufferStaticBindings GlobalUniformBuffers(PassUniformBuffer);
	SCOPED_UNIFORM_BUFFER_GLOBAL_BINDINGS(RHICmdList, GlobalUniformBuffers);

	// Set the viewport
	RHICmdList.SetViewport(View0.ViewRect.Min.X, View0.ViewRect.Min.Y, 0.0f, View0.ViewRect.Max.X, View0.ViewRect.Max.Y, 1.0f);

	// Default light function material
	FCachedLightMaterial DefaultMaterial;
	DefaultMaterial.MaterialProxy = UMaterial::GetDefaultMaterial(MD_LightFunction)->GetRenderProxy();
	DefaultMaterial.Material = DefaultMaterial.MaterialProxy->GetMaterialNoFallback(ERHIFeatureLevel::ES3_1);
	check(DefaultMaterial.Material);

	// Render the directional light
	RenderDirectLight(RHICmdList, Scene, View0, DefaultMaterial);

	if (GMobileUseClusteredDeferredShading == 0)
	{
		// Render simple lights (non-clustered path)
		RenderSimpleLights(RHICmdList, Scene, PassViews, SortedLightSet, DefaultMaterial);
	}

	// Non-clustered local lights start after the simple lights,
	// or after the clustered-supported lights when clustered shading is on
	int32 NumLights = SortedLightSet.SortedLights.Num();
	int32 StandardDeferredStart = SortedLightSet.SimpleLightsEnd;
	if (GMobileUseClusteredDeferredShading != 0)
	{
		StandardDeferredStart = SortedLightSet.ClusteredSupportedEnd;
	}

	// Render the remaining local lights one by one
	for (int32 LightIdx = StandardDeferredStart; LightIdx < NumLights; ++LightIdx)
	{
		const FSortedLightSceneInfo& SortedLight = SortedLightSet.SortedLights[LightIdx];
		const FLightSceneInfo& LightSceneInfo = *SortedLight.LightSceneInfo;
		RenderLocalLight(RHICmdList, Scene, View0, LightSceneInfo, DefaultMaterial);
	}
}
```
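The index arithmetic at the end of MobileDeferredShadingPass decides which entries of SortedLights are drawn one by one as local lights. The sketch below isolates it. It assumes, as the use of SimpleLightsEnd and ClusteredSupportedEnd suggests, that SortedLights is ordered as simple lights, then lights supported by clustered shading, then the rest; `LightRange` and `StandardDeferredRange` are hypothetical names.

```cpp
#include <cassert>

// Half-open index range [begin, end) into SortedLights.
struct LightRange {
    int begin;
    int end;
};

// Range of SortedLights rendered individually by RenderLocalLight.
// Assumed ordering: [simple lights | clustered-supported lights | remaining lights].
LightRange StandardDeferredRange(int numLights, int simpleLightsEnd, int clusteredSupportedEnd,
                                 bool bUseClusteredDeferredShading) {
    const int start = bUseClusteredDeferredShading ? clusteredSupportedEnd : simpleLightsEnd;
    return {start, numLights};
}
```

With clustered shading off, everything after the simple lights is drawn individually (the simple lights go through RenderSimpleLights); with it on, only lights that the clustered path cannot handle are drawn in this loop.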

Next, let us look at the functions that render the different types of lights:

// Engine\Source\Runtime\Renderer\Private\MobileDeferredShadingPass.cpp

// Render directional light

static void RenderDirectLight(FRHICommandListImmediate& RHICmdList, const FScene& Scene, const FViewInfo& View, const FCachedLightMaterial& DefaultLightMaterial)

{

FSceneRenderTargets& SceneContext = FSceneRenderTargets::Get(RHICmdList);

// Find the first directional light

FLightSceneInfo* DirectionalLight = nullptr;

for (int32 ChannelIdx = 0; ChannelIdx < UE_ARRAY_COUNT(Scene.MobileDirectionalLights) && !DirectionalLight; ChannelIdx++)

{

DirectionalLight = Scene.MobileDirectionalLights[ChannelIdx];

}

// Render state

FGraphicsPipelineStateInitializer GraphicsPSOInit;

RHICmdList.ApplyCachedRenderTargets(GraphicsPSOInit);

// Additively blend the lighting onto the emissive already stored in SceneColor

GraphicsPSOInit.BlendState = TStaticBlendState<CW_RGB, BO_Add, BF_One, BF_One>::GetRHI();

GraphicsPSOInit.RasterizerState = TStaticRasterizerState<>::GetRHI();

// Only the pixels of the default lighting model (MSM_DefaultLit) are drawn

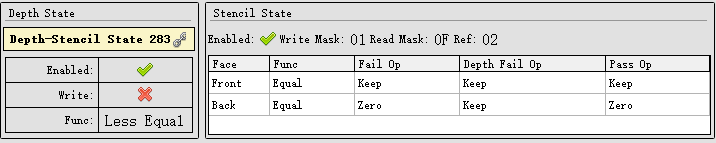

uint8 StencilRef = GET_STENCIL_MOBILE_SM_MASK(MSM_DefaultLit);

GraphicsPSOInit.DepthStencilState = TStaticDepthStencilState<

false, CF_Always,

true, CF_Equal, SO_Keep, SO_Keep, SO_Keep,

false, CF_Always, SO_Keep, SO_Keep, SO_Keep,

GET_STENCIL_MOBILE_SM_MASK(0x7), 0x00>::GetRHI(); // 4 bits for shading models

// Vertex shader

TShaderMapRef<FPostProcessVS> VertexShader(View.ShaderMap);

const FMaterialRenderProxy* LightFunctionMaterialProxy = nullptr;

if (View.Family->EngineShowFlags.LightFunctions && DirectionalLight)

{

LightFunctionMaterialProxy = DirectionalLight->Proxy->GetLightFunctionMaterial();

}

FMobileDirectLightFunctionPS::FPermutationDomain PermutationVector = FMobileDirectLightFunctionPS::BuildPermutationVector(View, DirectionalLight != nullptr);

FCachedLightMaterial LightMaterial;

TShaderRef<FMobileDirectLightFunctionPS> PixelShader;

GetLightMaterial(DefaultLightMaterial, LightFunctionMaterialProxy, PermutationVector.ToDimensionValueId(), LightMaterial, PixelShader);

GraphicsPSOInit.BoundShaderState.VertexDeclarationRHI = GFilterVertexDeclaration.VertexDeclarationRHI;

GraphicsPSOInit.BoundShaderState.VertexShaderRHI = VertexShader.GetVertexShader();

GraphicsPSOInit.BoundShaderState.PixelShaderRHI = PixelShader.GetPixelShader();

GraphicsPSOInit.PrimitiveType = PT_TriangleList;

SetGraphicsPipelineState(RHICmdList, GraphicsPSOInit);

// Pixel shader parameters

FMobileDirectLightFunctionPS::FParameters PassParameters;

PassParameters.Forward = View.ForwardLightingResources->ForwardLightDataUniformBuffer;

PassParameters.MobileDirectionalLight = Scene.UniformBuffers.MobileDirectionalLightUniformBuffers[1];

PassParameters.ReflectionCaptureData = Scene.UniformBuffers.ReflectionCaptureUniformBuffer;

FReflectionUniformParameters ReflectionUniformParameters;

SetupReflectionUniformParameters(View, ReflectionUniformParameters);

PassParameters.ReflectionsParameters = CreateUniformBufferImmediate(ReflectionUniformParameters, UniformBuffer_SingleDraw);

PassParameters.LightFunctionParameters = FVector4(1.0f, 1.0f, 0.0f, 0.0f);

if (DirectionalLight)

{

const bool bUseMovableLight = DirectionalLight && !DirectionalLight->Proxy->HasStaticShadowing();

PassParameters.LightFunctionParameters2 = FVector(DirectionalLight->Proxy->GetLightFunctionFadeDistance(), DirectionalLight->Proxy->GetLightFunctionDisabledBrightness(), bUseMovableLight ? 1.0f : 0.0f);

const FVector Scale = DirectionalLight->Proxy->GetLightFunctionScale();

// Switch x and z so that z of the user specified scale affects the distance along the light direction

const FVector InverseScale = FVector(1.f / Scale.Z, 1.f / Scale.Y, 1.f / Scale.X);

PassParameters.WorldToLight = DirectionalLight->Proxy->GetWorldToLight() * FScaleMatrix(FVector(InverseScale));

}

FMobileDirectLightFunctionPS::SetParameters(RHICmdList, PixelShader, View, LightMaterial.MaterialProxy, *LightMaterial.Material, PassParameters);

RHICmdList.SetStencilRef(StencilRef);

const FIntPoint TargetSize = SceneContext.GetBufferSizeXY();

// Draw with a full screen rectangle

DrawRectangle(

RHICmdList,

0, 0,

View.ViewRect.Width(), View.ViewRect.Height(),

View.ViewRect.Min.X, View.ViewRect.Min.Y,

View.ViewRect.Width(), View.ViewRect.Height(),

FIntPoint(View.ViewRect.Width(), View.ViewRect.Height()),

TargetSize,

VertexShader);

}
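The light-function setup above inverts the user-specified scale, swaps its X and Z components, and then folds the result into `WorldToLight`. The local-light path further below repeats the same step. The following is a minimal standalone C++ model of that arithmetic, not engine code. `Vec3`, `MakeLightFunctionInverseScale` and `ScaleLightSpacePoint` are hypothetical names. The sketch assumes, as the engine comment implies, that light-space X runs along the light direction.

```cpp
#include <cassert>

// Hypothetical stand-in for UE's FVector, used only in this sketch.
struct Vec3 {
    float X;
    float Y;
    float Z;
};

// Same arithmetic as the listing: invert each component of the user scale
// and swap X with Z, so the user's Z lands on light-space X.
Vec3 MakeLightFunctionInverseScale(const Vec3& Scale) {
    assert(Scale.X != 0.0f && Scale.Y != 0.0f && Scale.Z != 0.0f);
    return Vec3{1.0f / Scale.Z, 1.0f / Scale.Y, 1.0f / Scale.X};
}

// UE multiplies row vectors, so WorldToLight * FScaleMatrix(InverseScale)
// transforms to light space first and then scales. For a pure scale matrix
// the second step is a component-wise multiply of the light-space point.
Vec3 ScaleLightSpacePoint(const Vec3& LightSpacePoint, const Vec3& InverseScale) {
    return Vec3{LightSpacePoint.X * InverseScale.X,
                LightSpacePoint.Y * InverseScale.Y,
                LightSpacePoint.Z * InverseScale.Z};
}
```

Take a user scale of (1, 2, 4). The inverse scale becomes (0.25, 0.5, 1), and the light-space point (8, 2, 3) maps to (2, 1, 3). The user's Z value of 4 therefore divides the coordinate along the light direction by 4, which stretches the light function pattern along that axis.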

// Render simple lights in non-clustered mode
static void RenderSimpleLights(
    FRHICommandListImmediate& RHICmdList,
    const FScene& Scene,
    const TArrayView<const FViewInfo*> PassViews,
    const FSortedLightSetSceneInfo &SortedLightSet,
    const FCachedLightMaterial& DefaultMaterial)
{
    const FSimpleLightArray& SimpleLights = SortedLightSet.SimpleLights;
    const int32 NumViews = PassViews.Num();
    const FViewInfo& View0 = *PassViews[0];

    // Get the VS and two PS permutations: [0] = exponent falloff, [1] = inverse-squared falloff
    TShaderMapRef<TDeferredLightVS<true>> VertexShader(View0.ShaderMap);
    TShaderRef<FMobileRadialLightFunctionPS> PixelShaders[2];
    {
        const FMaterialShaderMap* MaterialShaderMap = DefaultMaterial.Material->GetRenderingThreadShaderMap();
        FMobileRadialLightFunctionPS::FPermutationDomain PermutationVector;
        PermutationVector.Set<FMobileRadialLightFunctionPS::FSpotLightDim>(false);
        PermutationVector.Set<FMobileRadialLightFunctionPS::FIESProfileDim>(false);
        PermutationVector.Set<FMobileRadialLightFunctionPS::FInverseSquaredDim>(false);
        PixelShaders[0] = MaterialShaderMap->GetShader<FMobileRadialLightFunctionPS>(PermutationVector);
        PermutationVector.Set<FMobileRadialLightFunctionPS::FInverseSquaredDim>(true);
        PixelShaders[1] = MaterialShaderMap->GetShader<FMobileRadialLightFunctionPS>(PermutationVector);
    }

    // Set up one PSO per pixel shader permutation
    FGraphicsPipelineStateInitializer GraphicsPSOLight[2];
    {
        SetupSimpleLightPSO(RHICmdList, View0, VertexShader, PixelShaders[0], GraphicsPSOLight[0]);
        SetupSimpleLightPSO(RHICmdList, View0, VertexShader, PixelShaders[1], GraphicsPSOLight[1]);
    }

    // Set up the stencil mask PSO (no color writes, no pixel shader)
    FGraphicsPipelineStateInitializer GraphicsPSOLightMask;
    {
        RHICmdList.ApplyCachedRenderTargets(GraphicsPSOLightMask);
        GraphicsPSOLightMask.PrimitiveType = PT_TriangleList;
        GraphicsPSOLightMask.BlendState = TStaticBlendStateWriteMask<CW_NONE, CW_NONE, CW_NONE, CW_NONE, CW_NONE, CW_NONE, CW_NONE, CW_NONE>::GetRHI();
        GraphicsPSOLightMask.RasterizerState = View0.bReverseCulling ? TStaticRasterizerState<FM_Solid, CM_CCW>::GetRHI() : TStaticRasterizerState<FM_Solid, CM_CW>::GetRHI();
        // set stencil to 1 where depth test fails
        GraphicsPSOLightMask.DepthStencilState = TStaticDepthStencilState<
            false, CF_DepthNearOrEqual,                     // no depth write; depth test
            true, CF_Always, SO_Keep, SO_Replace, SO_Keep,  // front face: stencil fail / depth fail / pass ops
            false, CF_Always, SO_Keep, SO_Keep, SO_Keep,    // back-face stencil disabled
            0x00, STENCIL_SANDBOX_MASK>::GetRHI();          // read mask, write mask
        GraphicsPSOLightMask.BoundShaderState.VertexDeclarationRHI = GetVertexDeclarationFVector4();
        GraphicsPSOLightMask.BoundShaderState.VertexShaderRHI = VertexShader.GetVertexShader();
        GraphicsPSOLightMask.BoundShaderState.PixelShaderRHI = nullptr;
    }

    // Iterate over all simple lights and shade each one in every view
    for (int32 LightIndex = 0; LightIndex < SimpleLights.InstanceData.Num(); LightIndex++)
    {
        const FSimpleLightEntry& SimpleLight = SimpleLights.InstanceData[LightIndex];
        for (int32 ViewIndex = 0; ViewIndex < NumViews; ViewIndex++)
        {
            const FViewInfo& View = *PassViews[ViewIndex];
            const FSimpleLightPerViewEntry& SimpleLightPerViewData = SimpleLights.GetViewDependentData(LightIndex, ViewIndex, NumViews);
            const FSphere LightBounds(SimpleLightPerViewData.Position, SimpleLight.Radius);
            if (NumViews > 1)
            {
                // set viewports only if we have more than one
                // otherwise it is set at the start of the pass
                RHICmdList.SetViewport(View.ViewRect.Min.X, View.ViewRect.Min.Y, 0.0f, View.ViewRect.Max.X, View.ViewRect.Max.Y, 1.0f);
            }

            // Render the light's stencil mask
            SetGraphicsPipelineState(RHICmdList, GraphicsPSOLightMask);
            VertexShader->SetSimpleLightParameters(RHICmdList, View, LightBounds);
            RHICmdList.SetStencilRef(1);
            StencilingGeometry::DrawSphere(RHICmdList);

            // Render the light
            FMobileRadialLightFunctionPS::FParameters PassParameters;
            FDeferredLightUniformStruct DeferredLightUniformsValue;
            SetupSimpleDeferredLightParameters(SimpleLight, SimpleLightPerViewData, DeferredLightUniformsValue);
            PassParameters.DeferredLightUniforms = TUniformBufferRef<FDeferredLightUniformStruct>::CreateUniformBufferImmediate(DeferredLightUniformsValue, EUniformBufferUsage::UniformBuffer_SingleFrame);
            PassParameters.IESTexture = GWhiteTexture->TextureRHI;
            PassParameters.IESTextureSampler = GWhiteTexture->SamplerStateRHI;
            // An exponent of 0 selects the inverse-squared falloff permutation
            if (SimpleLight.Exponent == 0)
            {
                SetGraphicsPipelineState(RHICmdList, GraphicsPSOLight[1]);
                FMobileRadialLightFunctionPS::SetParameters(RHICmdList, PixelShaders[1], View, DefaultMaterial.MaterialProxy, *DefaultMaterial.Material, PassParameters);
            }
            else
            {
                SetGraphicsPipelineState(RHICmdList, GraphicsPSOLight[0]);
                FMobileRadialLightFunctionPS::SetParameters(RHICmdList, PixelShaders[0], View, DefaultMaterial.MaterialProxy, *DefaultMaterial.Material, PassParameters);
            }
            VertexShader->SetSimpleLightParameters(RHICmdList, View, LightBounds);

            // Only draw pixels that use the default lit shading model (MSM_DefaultLit)
            uint8 StencilRef = GET_STENCIL_MOBILE_SM_MASK(MSM_DefaultLit);
            RHICmdList.SetStencilRef(StencilRef);
            // Draw the light's bounding sphere so that pixels outside its influence are rejected early
            StencilingGeometry::DrawSphere(RHICmdList);
        }
    }
}
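The mask pass in `RenderSimpleLights` writes stencil only where the sphere fails the depth test (`SO_Replace` as the depth-fail op). The lighting pass then skips those pixels. The following is a simplified CPU model of that two-pass idea, not the engine's code, and it rests on three assumptions:

- Depth is non-reversed (smaller is nearer), whereas UE actually uses reversed Z.
- The mask pass rasterizes the sphere's front faces.
- The lighting pass's stencil comparison reduces to "shade only where the mask is 0".

`Pixel`, `RenderLightMask` and `PixelsToShade` are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// One pixel of a toy frame. Depth is non-reversed here (smaller = nearer);
// UE itself uses reversed Z, which CF_DepthNearOrEqual abstracts away.
struct Pixel {
    float SceneDepth;        // depth already in the depth buffer
    bool CoveredBySphere;    // whether the light sphere rasterizes onto this pixel
    float SphereFrontDepth;  // depth of the sphere's front face at this pixel
};

// Mask pass: no color or depth writes. Where the sphere fails the
// "near or equal" depth test, the visible surface lies in front of the
// light volume and cannot be lit, so write stencil = 1 (depth fail -> replace).
void RenderLightMask(const std::vector<Pixel>& Pixels, std::vector<std::uint8_t>& Stencil) {
    assert(Stencil.size() == Pixels.size());
    for (std::size_t i = 0; i < Pixels.size(); ++i) {
        const Pixel& P = Pixels[i];
        if (!P.CoveredBySphere) {
            continue;  // nothing rasterized here, stencil untouched
        }
        const bool bDepthPass = P.SphereFrontDepth <= P.SceneDepth;
        if (!bDepthPass) {
            Stencil[i] = 1;
        }
    }
}

// Lighting pass (simplified): run the light's pixel shader only on covered
// pixels whose mask is still 0. Returns the indices of those pixels.
std::vector<std::size_t> PixelsToShade(const std::vector<Pixel>& Pixels,
                                       const std::vector<std::uint8_t>& Stencil) {
    std::vector<std::size_t> Shaded;
    for (std::size_t i = 0; i < Pixels.size(); ++i) {
        if (Pixels[i].CoveredBySphere && Stencil[i] == 0) {
            Shaded.push_back(i);
        }
    }
    return Shaded;
}
```

Consider a pixel with scene depth 3 under a sphere front face at depth 5. It gets stencil 1 and is skipped, because the visible surface sits in front of the light volume. A pixel with scene depth 10 is shaded. In this one-sided model, surfaces behind the far side of the volume are not rejected, so the pixel shader's distance attenuation has to handle them. The caller is also expected to clear the mask before the next light.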

// Render local lights

static void RenderLocalLight(

FRHICommandListImmediate& RHICmdList,

const FScene& Scene,

const FViewInfo& View,

const FLightSceneInfo& LightSceneInfo,

const FCachedLightMaterial& DefaultLightMaterial)

{

if (!LightSceneInfo.ShouldRenderLight(View))

{

return;

}

// Ignore nonlocal lights (lights other than lights and spotlights)

const uint8 LightType = LightSceneInfo.Proxy->GetLightType();

const bool bIsSpotLight = LightType == LightType_Spot;

const bool bIsPointLight = LightType == LightType_Point;

if (!bIsSpotLight && !bIsPointLight)

{

return;

}

// Draw a lighting template

if (GMobileUseLightStencilCulling != 0)

{

RenderLocalLight_StencilMask(RHICmdList, Scene, View, LightSceneInfo);

}

// Handle IES illumination

bool bUseIESTexture = false;

FTexture* IESTextureResource = GWhiteTexture;

if (View.Family->EngineShowFlags.TexturedLightProfiles && LightSceneInfo.Proxy->GetIESTextureResource())

{

IESTextureResource = LightSceneInfo.Proxy->GetIESTextureResource();

bUseIESTexture = true;

}

FGraphicsPipelineStateInitializer GraphicsPSOInit;

RHICmdList.ApplyCachedRenderTargets(GraphicsPSOInit);

GraphicsPSOInit.BlendState = TStaticBlendState<CW_RGBA, BO_Add, BF_One, BF_One, BO_Add, BF_One, BF_One>::GetRHI();

GraphicsPSOInit.PrimitiveType = PT_TriangleList;

const FSphere LightBounds = LightSceneInfo.Proxy->GetBoundingSphere();

// Sets the light rasterization and depth state

if (GMobileUseLightStencilCulling != 0)

{

SetLocalLightRasterizerAndDepthState_StencilMask(GraphicsPSOInit, View);

}

else

{

SetLocalLightRasterizerAndDepthState(GraphicsPSOInit, View, LightBounds);

}

// Set VS

TShaderMapRef<TDeferredLightVS<true>> VertexShader(View.ShaderMap);

const FMaterialRenderProxy* LightFunctionMaterialProxy = nullptr;

if (View.Family->EngineShowFlags.LightFunctions)

{

LightFunctionMaterialProxy = LightSceneInfo.Proxy->GetLightFunctionMaterial();

}

FMobileRadialLightFunctionPS::FPermutationDomain PermutationVector;

PermutationVector.Set<FMobileRadialLightFunctionPS::FSpotLightDim>(bIsSpotLight);

PermutationVector.Set<FMobileRadialLightFunctionPS::FInverseSquaredDim>(LightSceneInfo.Proxy->IsInverseSquared());

PermutationVector.Set<FMobileRadialLightFunctionPS::FIESProfileDim>(bUseIESTexture);

FCachedLightMaterial LightMaterial;

TShaderRef<FMobileRadialLightFunctionPS> PixelShader;

GetLightMaterial(DefaultLightMaterial, LightFunctionMaterialProxy, PermutationVector.ToDimensionValueId(), LightMaterial, PixelShader);

GraphicsPSOInit.BoundShaderState.VertexDeclarationRHI = GetVertexDeclarationFVector4();

GraphicsPSOInit.BoundShaderState.VertexShaderRHI = VertexShader.GetVertexShader();

GraphicsPSOInit.BoundShaderState.PixelShaderRHI = PixelShader.GetPixelShader();

SetGraphicsPipelineState(RHICmdList, GraphicsPSOInit);

VertexShader->SetParameters(RHICmdList, View, &LightSceneInfo);

// Set PS

FMobileRadialLightFunctionPS::FParameters PassParameters;

PassParameters.DeferredLightUniforms = TUniformBufferRef<FDeferredLightUniformStruct>::CreateUniformBufferImmediate(GetDeferredLightParameters(View, LightSceneInfo), EUniformBufferUsage::UniformBuffer_SingleFrame);

PassParameters.IESTexture = IESTextureResource->TextureRHI;

PassParameters.IESTextureSampler = IESTextureResource->SamplerStateRHI;

const float TanOuterAngle = bIsSpotLight ? FMath::Tan(LightSceneInfo.Proxy->GetOuterConeAngle()) : 1.0f;

PassParameters.LightFunctionParameters = FVector4(TanOuterAngle, 1.0f /*ShadowFadeFraction*/, bIsSpotLight ? 1.0f : 0.0f, bIsPointLight ? 1.0f : 0.0f);

PassParameters.LightFunctionParameters2 = FVector(LightSceneInfo.Proxy->GetLightFunctionFadeDistance(), LightSceneInfo.Proxy->GetLightFunctionDisabledBrightness(), 0.0f);

const FVector Scale = LightSceneInfo.Proxy->GetLightFunctionScale();

// Switch x and z so that z of the user specified scale affects the distance along the light direction

const FVector InverseScale = FVector(1.f / Scale.Z, 1.f / Scale.Y, 1.f / Scale.X);

PassParameters.WorldToLight = LightSceneInfo.Proxy->GetWorldToLight() * FScaleMatrix(FVector(InverseScale));

FMobileRadialLightFunctionPS::SetParameters(RHICmdList, PixelShader, View, LightMaterial.MaterialProxy, *LightMaterial.Material, PassParameters);

// Only the pixels of the default lighting model (MSM_DefaultLit) are drawn

uint8 StencilRef = GET_STENCIL_MOBILE_SM_MASK(MSM_DefaultLit);

RHICmdList.SetStencilRef(StencilRef);

// Point lights are drawn with spheres

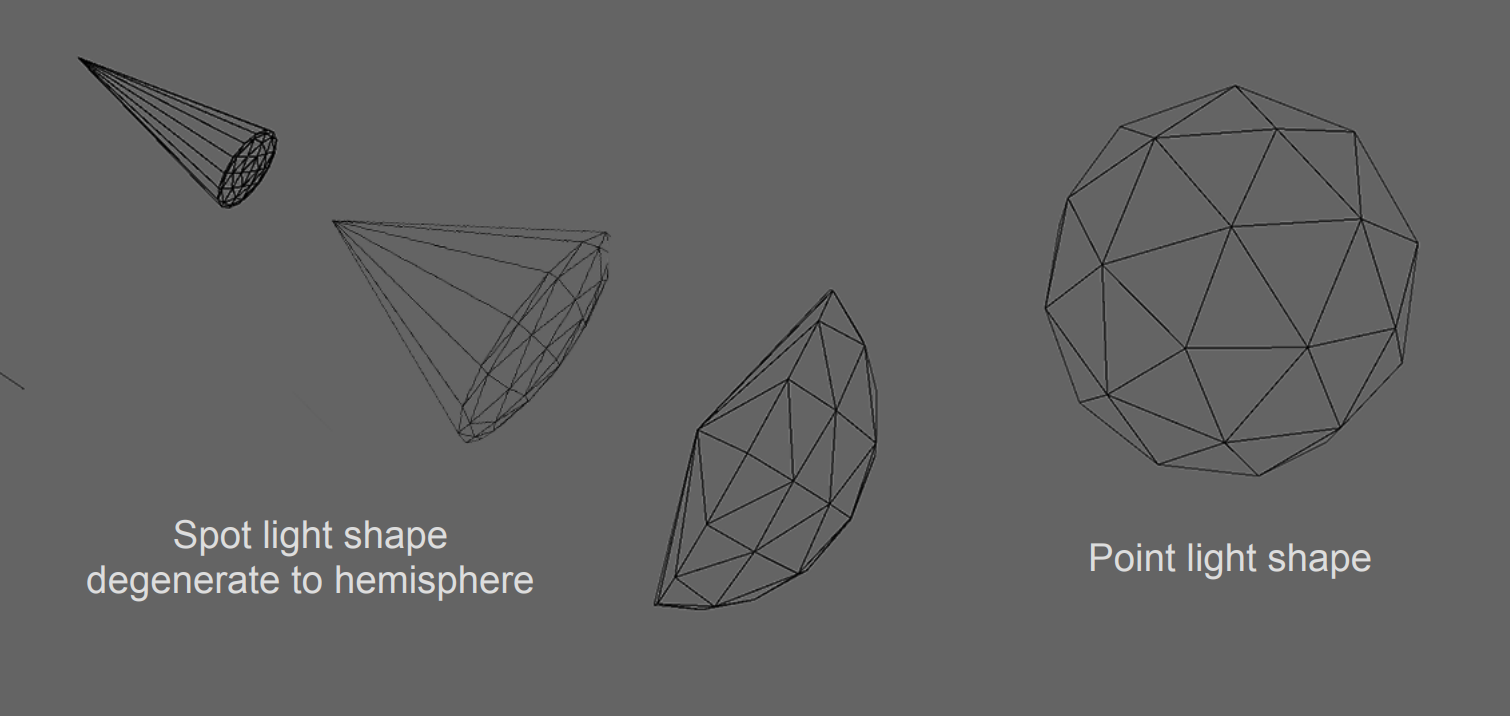

if (LightType == LightType_Point)

{