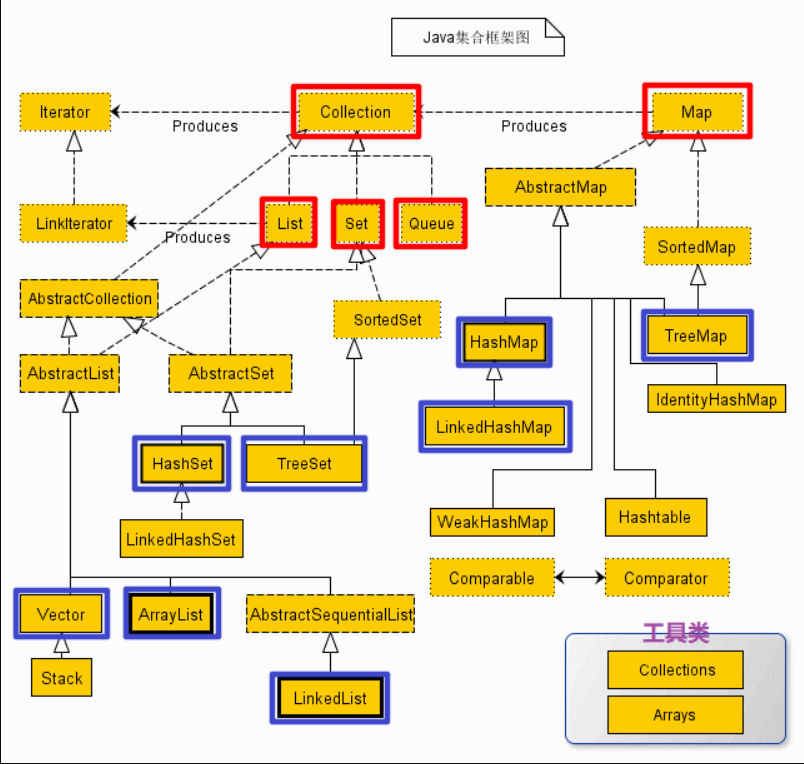

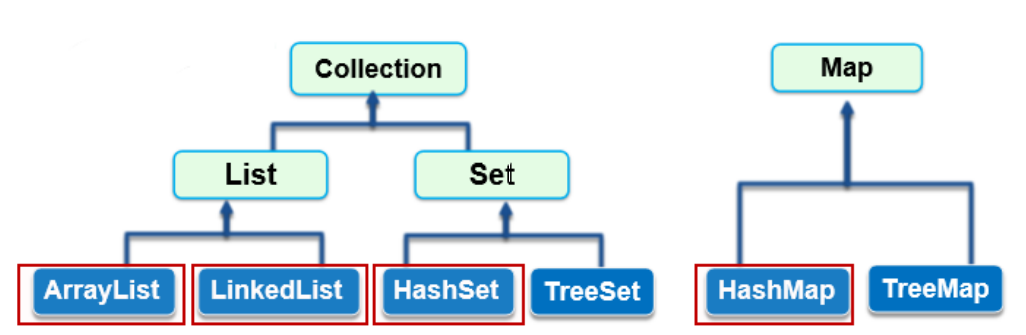

Overview of the collection framework

A collection can be regarded as a container for storing objects. The Java collection framework encapsulates a number of well-tested data structures and algorithms.

- The Collection interface stores a group of objects that may contain duplicates and has no defined order.

- The List interface stores an ordered group of objects that may contain duplicates.

- The Set interface stores an unordered group of unique objects.

- The Map interface stores key-value pairs and provides a mapping from each key to its value.
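The difference between the three main interfaces can be seen in a small experiment. This is only a sketch: the class name InterfaceDemo and its helper methods are made up for illustration, and ArrayList, HashSet and HashMap are used as representative implementations.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

public class InterfaceDemo {
    // A List keeps duplicates and preserves insertion order.
    static List<String> asList() {
        List<String> list = new ArrayList<>();
        list.add("a");
        list.add("b");
        list.add("a"); // the duplicate is stored as a third element
        return list;
    }

    // A Set stores each element at most once.
    static Set<String> asSet() {
        Set<String> set = new HashSet<>();
        set.add("a");
        set.add("b");
        set.add("a"); // rejected, add returns false
        return set;
    }

    // A Map associates each key with exactly one value.
    static Map<String, Integer> asMap() {
        Map<String, Integer> map = new HashMap<>();
        map.put("a", 1);
        map.put("a", 2); // same key: the old value 1 is replaced by 2
        return map;
    }

    public static void main(String[] args) {
        System.out.println(asList().size());  // 3
        System.out.println(asSet().size());   // 2
        System.out.println(asMap().get("a")); // 2
    }
}
```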

Collection

public interface Collection<E> extends Iterable<E> {

//Returns the number of elements in the collection. If the collection contains more than Integer.MAX_VALUE elements, returns Integer.MAX_VALUE

int size();

//Returns whether the collection is empty

boolean isEmpty();

//Returns whether the collection contains the element o

boolean contains(Object o);

//Returns an iterator over the elements in the collection. There is no guarantee about the order in which the elements are returned (unless the implementing class provides one)

Iterator<E> iterator();

//Returns an array containing all the elements in the collection. The collection keeps no reference to the returned array (a new array is allocated even if the collection is backed by an array), so the caller is free to modify it

Object[] toArray();

//Returns an array whose runtime type is that of the array a. If the collection fits in a, the elements are stored in a and a is returned. Otherwise, a new array of the same runtime type and of the size of this collection is allocated

<T> T[] toArray(T[] a);

//Adds the element e to the collection. Returns true if the collection changed as a result

boolean add(E e);

//Removes a single instance of the object o from the collection, if it is present

boolean remove(Object o);

//Returns true if this collection contains all elements in the specified collection

boolean containsAll(Collection<?> c);

//Adds all elements in the specified collection to the current collection

boolean addAll(Collection<? extends E> c);

//Remove all elements in this collection that are also contained in the c collection

boolean removeAll(Collection<?> c);

//Retains only the elements that are also contained in c (removes all others)

boolean retainAll(Collection<?> c);

//Delete all elements in this collection

void clear();

//Compares whether this collection is equal to the specified object

boolean equals(Object o);

//Returns the hash value of this collection

int hashCode();

}
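The bulk operations are easiest to read as set operations: removeAll computes a difference and retainAll an intersection. A minimal sketch, assuming a made-up class BulkOpsDemo and working on copies so that the input collections stay untouched:

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collection;
import java.util.List;

public class BulkOpsDemo {
    // Elements of a that are not contained in b.
    static List<Integer> difference(Collection<Integer> a, Collection<Integer> b) {
        List<Integer> copy = new ArrayList<>(a);
        copy.removeAll(b);
        return copy;
    }

    // Elements of a that are also contained in b.
    static List<Integer> intersection(Collection<Integer> a, Collection<Integer> b) {
        List<Integer> copy = new ArrayList<>(a);
        copy.retainAll(b);
        return copy;
    }

    public static void main(String[] args) {
        List<Integer> a = Arrays.asList(1, 2, 3, 4);
        List<Integer> b = Arrays.asList(3, 4, 5);
        System.out.println(difference(a, b));   // [1, 2]
        System.out.println(intersection(a, b)); // [3, 4]
        System.out.println(a.containsAll(b));   // false, 5 is missing

        // toArray(T[]) allocates a new Integer[] because the argument is too short.
        Integer[] arr = a.toArray(new Integer[0]);
        System.out.println(arr.length);         // 4
    }
}
```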

Array

// Array definition with an initializer

String[] strs = new String[]{"111","222","333"};

// Array definition with an explicit length, filled element by element

String[] strs2 = new String[3];

strs2[0] = "111";

strs2[1] = "222";

strs2[2] = "333";

- The length of a Java array cannot be changed. Once the array is created, its length is fixed, and accessing an index outside the range 0 to length - 1 throws an ArrayIndexOutOfBoundsException.

- Elements cannot be appended dynamically.
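Both limitations can be reproduced in a few lines. The sketch below uses a hypothetical class ArrayGrowDemo; the only way to "append" to an array is to copy it into a longer one, which is essentially what ArrayList automates.

```java
import java.util.Arrays;

public class ArrayGrowDemo {
    // Writing one slot past the end always fails with an exception.
    static boolean writePastEnd(String[] arr) {
        try {
            arr[arr.length] = "x";
            return false;
        } catch (ArrayIndexOutOfBoundsException e) {
            return true;
        }
    }

    // "Appending" means allocating a longer array and copying the old contents.
    static String[] append(String[] src, String value) {
        String[] bigger = Arrays.copyOf(src, src.length + 1);
        bigger[src.length] = value;
        return bigger;
    }

    public static void main(String[] args) {
        String[] strs = {"111", "222", "333"};
        System.out.println(writePastEnd(strs));                  // true
        System.out.println(Arrays.toString(append(strs, "444"))); // [111, 222, 333, 444]
        System.out.println(strs.length);                         // 3, the original is unchanged
    }
}
```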

List

- ArrayList

(1) ArrayList is a dynamic array: it solves the problem that a plain Java array cannot grow. The backing array has element type Object, so it can store objects of any type.

Object[] elementData;

(2) What is the maximum array length of an ArrayList?

// Maximum array size to allocate

// Some VMs reserve header words in an array, so the limit is set slightly below Integer.MAX_VALUE

// Attempts to allocate a larger array may result in an OutOfMemoryError

private static final int MAX_ARRAY_SIZE = Integer.MAX_VALUE - 8;

// Chooses the capacity when the requested size exceeds MAX_ARRAY_SIZE

private static int hugeCapacity(int minCapacity) {

if (minCapacity < 0) // overflow

throw new OutOfMemoryError();

return (minCapacity > MAX_ARRAY_SIZE) ?

Integer.MAX_VALUE :

MAX_ARRAY_SIZE;

}

(3) What is the initial capacity of an ArrayList?

/**

* Default initial capacity.

*/

private static final int DEFAULT_CAPACITY = 10;

(3) What does the no-argument constructor of ArrayList do?

In JDK 1.8 and later, new ArrayList<>() and new HashMap<>() initialize lazily: the constructor does not allocate the backing array.

private static final Object[] DEFAULTCAPACITY_EMPTY_ELEMENTDATA = {};

public ArrayList() {

this.elementData = DEFAULTCAPACITY_EMPTY_ELEMENTDATA;

}

Before JDK 1.8, the constructor allocated an array of length 10 right away:

this.elementData = new Object[initialCapacity];

Lazy initialization saves space: an array of length 10 that is allocated but never used is wasted. So when does JDK 1.8 allocate the array?

On the first call to add().

(4) How does ArrayList grow dynamically, and when does the expansion happen?

public boolean add(E e) {

ensureCapacityInternal(size + 1); // Increments modCount!!

elementData[size++] = e;

return true;

}

private void ensureCapacityInternal(int minCapacity) {

if (elementData == DEFAULTCAPACITY_EMPTY_ELEMENTDATA) {

minCapacity = Math.max(DEFAULT_CAPACITY, minCapacity);

}

ensureExplicitCapacity(minCapacity);

}

private void ensureExplicitCapacity(int minCapacity) {

modCount++;

// overflow-conscious code

if (minCapacity - elementData.length > 0)

grow(minCapacity);

}

private void grow(int minCapacity) {

// overflow-conscious code

int oldCapacity = elementData.length;

// Grow to about 1.5 times the old capacity; oldCapacity >> 1 is oldCapacity / 2 computed with a right shift

int newCapacity = oldCapacity + (oldCapacity >> 1);

if (newCapacity - minCapacity < 0)

newCapacity = minCapacity;

if (newCapacity - MAX_ARRAY_SIZE > 0)

newCapacity = hugeCapacity(minCapacity);

// minCapacity is usually close to size, so this is a win:

elementData = Arrays.copyOf(elementData, newCapacity);

}
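The growth rule can be replayed outside the JDK to see the capacities an ArrayList passes through. The sketch below is a simplified model, not the JDK code: the class GrowthDemo is made up, and the MAX_ARRAY_SIZE clamp from grow is deliberately left out.

```java
import java.util.Arrays;

public class GrowthDemo {
    // Same arithmetic as grow(): 1.5x the old capacity, but at least minCapacity.
    static int nextCapacity(int oldCapacity, int minCapacity) {
        int newCapacity = oldCapacity + (oldCapacity >> 1);
        if (newCapacity - minCapacity < 0)
            newCapacity = minCapacity;
        return newCapacity;
    }

    // Capacities reached when elements are added one by one, starting at DEFAULT_CAPACITY (10).
    static int[] firstCapacities(int steps) {
        int[] caps = new int[steps];
        int cap = 10;
        for (int i = 0; i < steps; i++) {
            caps[i] = cap;
            cap = nextCapacity(cap, cap + 1); // the array is full, one more slot is needed
        }
        return caps;
    }

    public static void main(String[] args) {
        System.out.println(Arrays.toString(firstCapacities(5))); // [10, 15, 22, 33, 49]
        // The first add() on an empty backing array asks for max(10, 1) = 10 slots.
        System.out.println(nextCapacity(0, 10)); // 10
    }
}
```

Expansion therefore happens only when add() finds the backing array full, and each expansion copies every element into the new array.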

(5) How does ArrayList remove an element?

public E remove(int index) {

rangeCheck(index);

modCount++;

E oldValue = elementData(index); // Read the element directly by index

int numMoved = size - index - 1; // Number of elements that must be shifted left

if (numMoved > 0)

System.arraycopy(elementData, index+1, elementData, index,

numMoved);

//Set the slot at --size to null so that the removed reference can be garbage collected

elementData[--size] = null; // clear to let GC do its work

return oldValue;

}
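The shifting step of remove can be tried on a plain array. This is a sketch under the assumption of a hypothetical helper class ShiftRemoveDemo that receives the array and the logical size explicitly, instead of keeping them as fields as ArrayList does.

```java
import java.util.Arrays;

public class ShiftRemoveDemo {
    // Removes the element at index from the first `size` slots of data
    // and returns the new logical size.
    static int removeAt(Object[] data, int size, int index) {
        int numMoved = size - index - 1;
        if (numMoved > 0)
            System.arraycopy(data, index + 1, data, index, numMoved);
        data[size - 1] = null; // clear the now-unused last slot
        return size - 1;
    }

    public static void main(String[] args) {
        Object[] data = {"a", "b", "c", "d", null};
        int size = removeAt(data, 4, 1); // remove "b"
        System.out.println(size);                  // 3
        System.out.println(Arrays.toString(data)); // [a, c, d, null, null]
    }
}
```

Removing near the front moves almost the whole array, which is why removal by index costs time proportional to the number of elements after it.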

- LinkedList

1) It is implemented as a doubly linked list.

2) When data is inserted or deleted frequently at the ends of the list or through an iterator, LinkedList usually performs better than ArrayList, because no elements have to be shifted.

Structure of LinkedList class:

public class LinkedList<E>

extends AbstractSequentialList<E>

implements List<E>, Deque<E>, Cloneable, java.io.Serializable

{

transient int size = 0;// Actual number of elements

transient Node<E> first;// Head node

transient Node<E> last;// Tail node

}

LinkedList has only three fields: a head node, a tail node, and a counter for the actual number of elements in the list. All three are declared transient, which means they are not written by default serialization.

Node inner class

private static class Node<E> {

E item; // Data field (value of the current node)

Node<E> next; // Successor (the node after the current one)

Node<E> prev; // Predecessor (the node before the current one)

Node(Node<E> prev, E element, Node<E> next) {

this.item = element;

this.next = next;

this.prev = prev;

}

}

Implementation of the core method add:

public boolean add(E e) {

linkLast(e);

return true;

}

The add method appends an element to the end of the LinkedList. The actual linking is done by linkLast.

void linkLast(E e) {

final Node<E> l = last;

final Node<E> newNode = new Node<>(l, e, null); // Wrap e in a new node whose prev points to the current last node

last = newNode; // newNode becomes the last node

if (l == null) // The list was empty: newNode is also the first node, so first and last both point to it

first = newNode;

else // Otherwise link the old last node to newNode; the old last node becomes the second-to-last

l.next = newNode;

size++; // One more element in the list

modCount++;

}
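The linking logic is small enough to rebuild as a stand-alone class. The following is a minimal sketch, not the JDK implementation: TinyLinkedList and its methods addLast, toList and toReversedList are made-up names, and modCount is left out.

```java
import java.util.ArrayList;
import java.util.List;

public class TinyLinkedList<E> {
    private static class Node<E> {
        E item;
        Node<E> next;
        Node<E> prev;

        Node(Node<E> prev, E item, Node<E> next) {
            this.item = item;
            this.next = next;
            this.prev = prev;
        }
    }

    private Node<E> first;
    private Node<E> last;
    private int size;

    // Same steps as linkLast: create the node, move last, then fix first or l.next.
    public void addLast(E e) {
        Node<E> l = last;
        Node<E> newNode = new Node<>(l, e, null);
        last = newNode;
        if (l == null)
            first = newNode;
        else
            l.next = newNode;
        size++;
    }

    public int size() {
        return size;
    }

    // Walks the next pointers from the head.
    public List<E> toList() {
        List<E> out = new ArrayList<>();
        for (Node<E> n = first; n != null; n = n.next)
            out.add(n.item);
        return out;
    }

    // Walks the prev pointers from the tail, which checks the backward links.
    public List<E> toReversedList() {
        List<E> out = new ArrayList<>();
        for (Node<E> n = last; n != null; n = n.prev)
            out.add(n.item);
        return out;
    }

    public static void main(String[] args) {
        TinyLinkedList<Integer> list = new TinyLinkedList<>();
        list.addLast(1);
        list.addLast(2);
        list.addLast(3);
        System.out.println(list.toList());         // [1, 2, 3]
        System.out.println(list.toReversedList()); // [3, 2, 1]
    }
}
```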

Set

public class HashSet<E>

extends AbstractSet<E>

implements Set<E>, Cloneable, java.io.Serializable

{

static final long serialVersionUID = -5024744406713321676L;

private transient HashMap<E,Object> map;

private static final Object PRESENT = new Object();

// The constructor creates the backing HashMap

public HashSet() {

map = new HashMap<>();

}

The core method, add:

public boolean add(E e) {

return map.put(e, PRESENT)==null;

}

HashSet is backed by a HashMap: each element is stored as a key, with the shared PRESENT object as its value.
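Why add returns a boolean follows directly from Map.put, which returns the previous value for the key or null if there was none. A minimal sketch of the same idea, using a made-up class MapBackedSetDemo restricted to String elements:

```java
import java.util.HashMap;
import java.util.Map;

public class MapBackedSetDemo {
    // Dummy value shared by every key, like PRESENT in HashSet.
    private static final Object PRESENT = new Object();

    private final Map<String, Object> map = new HashMap<>();

    // put returns null only when the key was not in the map before.
    boolean add(String e) {
        return map.put(e, PRESENT) == null;
    }

    boolean contains(String e) {
        return map.containsKey(e);
    }

    int size() {
        return map.size();
    }

    public static void main(String[] args) {
        MapBackedSetDemo set = new MapBackedSetDemo();
        System.out.println(set.add("x")); // true, newly added
        System.out.println(set.add("x")); // false, already present
        System.out.println(set.size());   // 1
    }
}
```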

TreeSet is backed by a TreeMap. A Comparator that defines the ordering can be passed to the constructor:

TreeSet<Student> objects = new TreeSet<>(new Comparator<Student>() {

@Override

public int compare(Student o1, Student o2) {

return Integer.compare(o1.getAge(), o2.getAge()); // avoids the overflow that subtraction can cause

}

});
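One consequence of ordering by a comparator is easy to overlook: TreeSet treats two elements as duplicates when the comparator returns 0, regardless of equals. The sketch below assumes a hypothetical Student class with only a name and an age; the original does not show its definition.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;
import java.util.TreeSet;

public class TreeSetDemo {
    // Hypothetical Student type, reduced to what the example needs.
    static final class Student {
        private final String name;
        private final int age;

        Student(String name, int age) {
            this.name = name;
            this.age = age;
        }

        String getName() { return name; }
        int getAge() { return age; }
    }

    static List<String> namesByAge() {
        Comparator<Student> byAge = Comparator.comparingInt(Student::getAge);
        TreeSet<Student> set = new TreeSet<>(byAge);
        set.add(new Student("Ann", 30));
        set.add(new Student("Bob", 20));
        set.add(new Student("Cid", 20)); // compares equal to Bob, so it is not added

        List<String> names = new ArrayList<>();
        for (Student s : set)
            names.add(s.getName()); // iteration follows the comparator order
        return names;
    }

    public static void main(String[] args) {
        System.out.println(namesByAge()); // [Bob, Ann]
    }
}
```

If students of the same age must all be kept, the comparator needs a tie-breaker, for example byAge.thenComparing(Student::getName).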

Map

The Map interface is dedicated to storing key-value mappings; a value is looked up and modified through its key.

The most commonly used implementation class is HashMap.

1. HashMap overview and principle

HashMap is a hash-table-based implementation of the Map interface. It stores key-value mappings <key, value> and makes no guarantee about their order. Assuming the hash function distributes the elements evenly among the buckets, the basic operations (get and put) run in constant time.

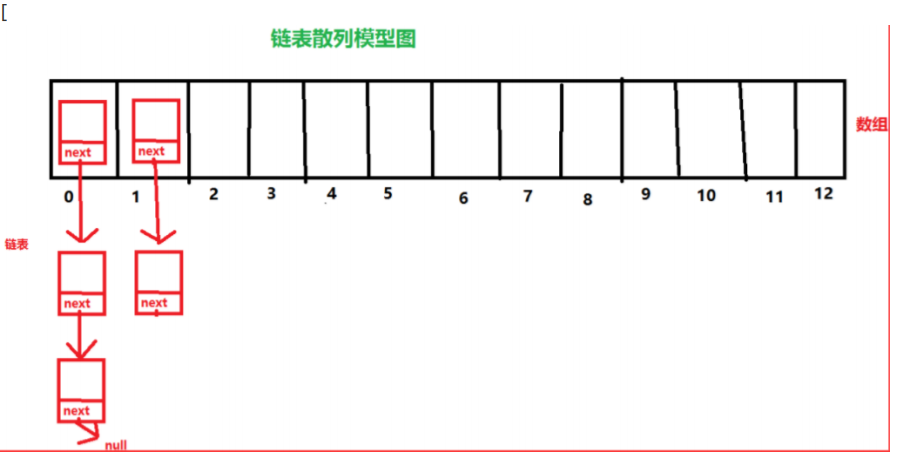

- HashMap data structure and storage principle before JDK1.8

Before JDK 1.8, HashMap uses an array of linked lists (a chained hash table).

1. By default, HashMap has an array of Entry objects with a length of 16. Each slot stores the head node of a linked list.

2. The slot in which an element is stored is determined by a hash computation of the form hash(key.hashCode()) % len.

How does the underlying HashMap use this data structure for data access?

Step 1: HashMap has an inner class Entry with four fields. Storing a mapping requires a key and a value; when they are put into the map, they are first wrapped in an object of the Entry class.

static class Entry<K,V> implements Map.Entry<K,V> {

final K key; //key of map

V value; //value

Entry<K,V> next; // Points to the next Entry in the same bucket

int hash; // Hash value computed from the key

}

Step 2: construct the Entry object and put it into the array.

The key and value are wrapped in an Entry object, and the hash of the entry is computed from the key. The position of the entry in the array is then computed from that hash and the length of the array.

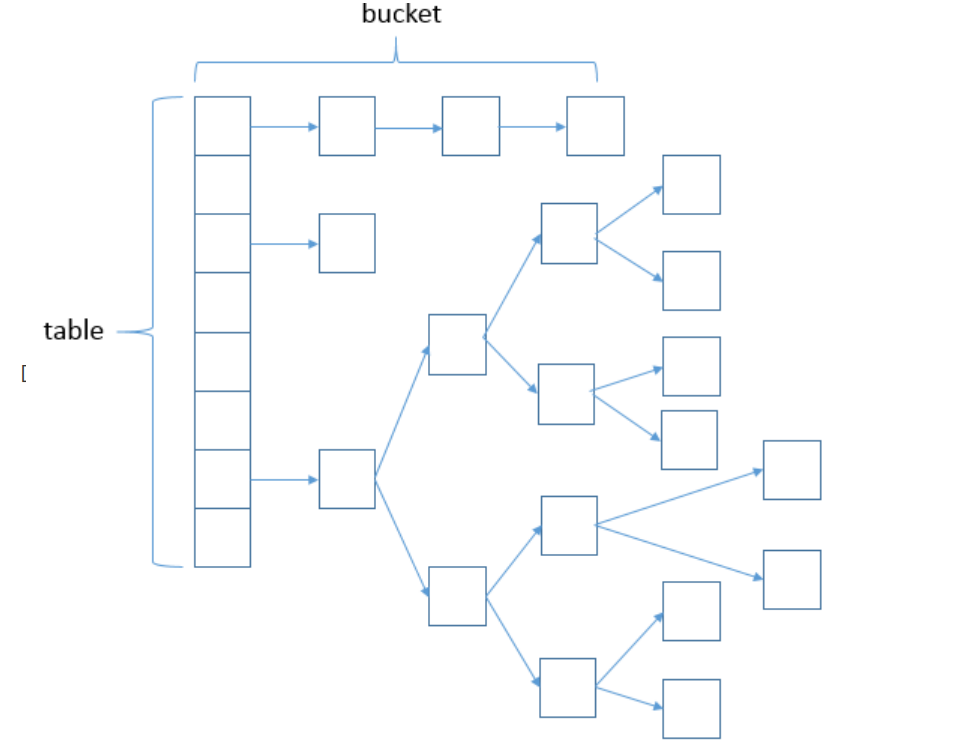

- Data structure of HashMap after JDK1.8

The structure is array + linked list + red-black tree. A bucket may hold either a linked list or a red-black tree; the red-black tree was introduced to speed up lookups in buckets with many collisions.

By default, HashMap has an array of Node objects with a length of 16 (the class was called Entry before JDK 1.8 and was renamed in 1.8).

static class Node<K,V> implements Map.Entry<K,V> {

final int hash;

final K key;

V value;

Node<K,V> next;

}

2. Constructors of HashMap

HashMap has four constructors. None of them allocates the array; the array is created on the first put. A constructor does at most two things: it sets the load factor, and it records the requested initial array size in threshold.

The no-argument constructor therefore initializes the array lazily. The default length is 16.

public HashMap() {

this.loadFactor = DEFAULT_LOAD_FACTOR;

}

The array is allocated in the put method of HashMap.

public V put(K key, V value) {

return putVal(hash(key), key, value, false, true);

}

final V putVal(int hash, K key, V value, boolean onlyIfAbsent,

boolean evict) {

Node<K,V>[] tab; Node<K,V> p; int n, i;

// The table is uninitialized or has length 0, so it is created by resize()

if ((tab = table) == null || (n = tab.length) == 0)

n = (tab = resize()).length;

// (n - 1) & hash selects the bucket. If the bucket is empty, the new node is stored directly in the array

if ((p = tab[i = (n - 1) & hash]) == null)

tab[i] = newNode(hash, key, value, null);

// The bucket already contains at least one node

else {

Node<K,V> e; K k;

// The first node in the bucket (the node stored in the array) has the same hash and an equal key

if (p.hash == hash &&

((k = p.key) == key || (key != null && key.equals(k))))

// Remember the first node in e

e = p;

// The first node does not match, and the bucket holds a red-black tree

else if (p instanceof TreeNode)

// Put it in the tree

e = ((TreeNode<K,V>)p).putTreeVal(this, tab, hash, key, value);

// Is a linked list node

else {

// Insert a node at the end of the linked list

for (int binCount = 0; ; ++binCount) {

if ((e = p.next) == null) {// Reach the end of the linked list

// Insert a new node at the end

p.next = newNode(hash, key, value, null);

// When the list length reaches TREEIFY_THRESHOLD, treeifyBin converts the bucket to a red-black tree (or resizes the table if it is still smaller than MIN_TREEIFY_CAPACITY)

if (binCount >= TREEIFY_THRESHOLD - 1) // -1 for 1st

treeifyBin(tab, hash);

// Jump out of loop

break;

}

// Check whether this node in the list has the same hash and an equal key

if (e.hash == hash &&

((k = e.key) == key || (key != null && key.equals(k))))

// Found a match, leave the loop

break;

// Advance to the next node; together with e = p.next above, this walks the list

p = e;

}

}

// A node with the same hash and an equal key was found in the bucket

if (e != null) { // existing mapping for key

// Remember the old value

V oldValue = e.value;

// Overwrite when onlyIfAbsent is false or the old value is null

if (!onlyIfAbsent || oldValue == null)

// Replace the old value with the new value

e.value = value;

// Post access callback

afterNodeAccess(e);

return oldValue;

}

}

++modCount;

// If the number of mappings now exceeds the threshold, resize the table

if (++size > threshold)

resize();

// Post insert callback

afterNodeInsertion(evict);

return null;

}
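The bucket selection used in putVal, (n - 1) & hash, can be tried in isolation. The sketch below uses a made-up class BucketIndexDemo. Its spread method reproduces the JDK 8 hash(key) helper that put calls; that helper is not shown in the excerpt above, so treat it as background taken from the JDK source rather than from this article.

```java
public class BucketIndexDemo {
    // JDK 8 HashMap.hash: XOR the high 16 bits of hashCode into the low 16 bits.
    static int spread(Object key) {
        int h;
        return (key == null) ? 0 : (h = key.hashCode()) ^ (h >>> 16);
    }

    // Bucket index for a table whose length is a power of two.
    static int indexFor(int hash, int tableLength) {
        return (tableLength - 1) & hash;
    }

    public static void main(String[] args) {
        // Integer.hashCode() is the int value itself.
        System.out.println(indexFor(spread(1), 16));  // 1
        System.out.println(indexFor(spread(17), 16)); // 1, collides with key 1
        System.out.println(indexFor(spread(17), 32)); // 17, separated in a larger table

        // Without spreading, a hash that differs only in the high bits lands in bucket 0.
        System.out.println(indexFor(65536, 16));         // 0
        System.out.println(indexFor(spread(65536), 16)); // 1

        // For a power-of-two length the mask equals a non-negative modulo.
        System.out.println(indexFor(-1, 16) == Math.floorMod(-1, 16)); // true
    }
}
```

The mask only works because the table length is always a power of two, which is what tableSizeFor and the doubling in resize() guarantee.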

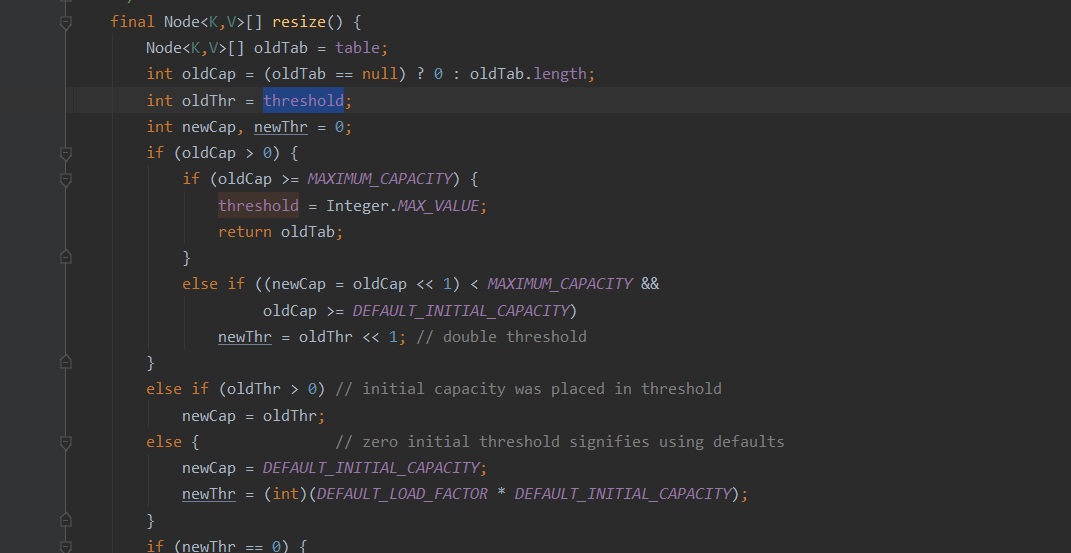

The resize() method:

final Node<K,V>[] resize() {

Node<K,V>[] oldTab = table;

int oldCap = (oldTab == null) ? 0 : oldTab.length;

int oldThr = threshold;

int newCap, newThr = 0;

if (oldCap > 0) {

if (oldCap >= MAXIMUM_CAPACITY) {

threshold = Integer.MAX_VALUE;

return oldTab;

}

else if ((newCap = oldCap << 1) < MAXIMUM_CAPACITY &&

oldCap >= DEFAULT_INITIAL_CAPACITY)

newThr = oldThr << 1; // double threshold

}

else if (oldThr > 0)

newCap = oldThr;

else {

// Table created by the no-argument constructor: use the default capacity and threshold

newCap = DEFAULT_INITIAL_CAPACITY;

newThr = (int)(DEFAULT_LOAD_FACTOR * DEFAULT_INITIAL_CAPACITY);

}

if (newThr == 0) {

float ft = (float)newCap * loadFactor;

newThr = (newCap < MAXIMUM_CAPACITY && ft < (float)MAXIMUM_CAPACITY ?

(int)ft : Integer.MAX_VALUE);

}

threshold = newThr;

@SuppressWarnings({"rawtypes","unchecked"})

Node<K,V>[] newTab = (Node<K,V>[])new Node[newCap];

table = newTab;

if (oldTab != null) {

for (int j = 0; j < oldCap; ++j) {

Node<K,V> e;

if ((e = oldTab[j]) != null) {

oldTab[j] = null;

if (e.next == null)

newTab[e.hash & (newCap - 1)] = e;

else if (e instanceof TreeNode)

((TreeNode<K,V>)e).split(this, newTab, j, oldCap);

else { // preserve order

Node<K,V> loHead = null, loTail = null;

Node<K,V> hiHead = null, hiTail = null;

Node<K,V> next;

do {

next = e.next;

if ((e.hash & oldCap) == 0) {

if (loTail == null)

loHead = e;

else

loTail.next = e;

loTail = e;

}

else {

if (hiTail == null)

hiHead = e;

else

hiTail.next = e;

hiTail = e;

}

} while ((e = next) != null);

if (loTail != null) {

loTail.next = null;

newTab[j] = loHead;

}

if (hiTail != null) {

hiTail.next = null;

newTab[j + oldCap] = hiHead;

}

}

}

}

}

return newTab;

}
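The lo/hi split in the loop above relies on one observation: after doubling, a node either stays at index j or moves to j + oldCap, and the single bit hash & oldCap decides which. A small sketch that checks this against the direct computation; the class ResizeSplitDemo and its methods are hypothetical.

```java
public class ResizeSplitDemo {
    // Index after doubling, derived the way resize() does it.
    static int newIndex(int hash, int oldCap) {
        int oldIndex = hash & (oldCap - 1);
        return ((hash & oldCap) == 0) ? oldIndex : oldIndex + oldCap;
    }

    // Compares the split rule with recomputing the index in the doubled table.
    static boolean matchesDirectIndex(int oldCap, int maxHash) {
        int newCap = oldCap << 1;
        for (int hash = 0; hash <= maxHash; hash++) {
            if (newIndex(hash, oldCap) != (hash & (newCap - 1)))
                return false;
        }
        return true;
    }

    public static void main(String[] args) {
        System.out.println(newIndex(5, 16));  // 5, bit 16 is not set, stays in the "lo" list
        System.out.println(newIndex(21, 16)); // 21 = 5 + 16, bit 16 is set, moves to the "hi" list
        System.out.println(matchesDirectIndex(16, 100000)); // true
    }
}
```

Because of this, resize() never has to recompute a hash, and the relative order of the nodes inside each of the two lists is preserved.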

2. What is the default capacity of the underlying array of HashMap? What is the maximum?

The default capacity is 16 and the maximum is 2 to the 30th power.

static final int MAXIMUM_CAPACITY = 1 << 30;

public HashMap(int initialCapacity, float loadFactor) {

if (initialCapacity < 0)

throw new IllegalArgumentException("Illegal initial capacity: " +

initialCapacity);

// The initial capacity cannot exceed the maximum; larger values are clamped to MAXIMUM_CAPACITY

if (initialCapacity > MAXIMUM_CAPACITY)

initialCapacity = MAXIMUM_CAPACITY;

// The load factor must be greater than 0 and must not be NaN

if (loadFactor <= 0 || Float.isNaN(loadFactor))

throw new IllegalArgumentException("Illegal load factor: " +

loadFactor);

// Initialize the load factor

this.loadFactor = loadFactor;

// Store the rounded-up initial capacity in threshold until the table is allocated

this.threshold = tableSizeFor(initialCapacity);

}

tableSizeFor(initialCapacity): this method ensures that the number of hash buckets is a power of 2. It rounds initialCapacity up to the smallest power of 2 that is greater than or equal to it.

static final int tableSizeFor(int cap) {

int n = cap - 1;

n |= n >>> 1;

n |= n >>> 2;

n |= n >>> 4;

n |= n >>> 8;

n |= n >>> 16;

return (n < 0) ? 1 : (n >= MAXIMUM_CAPACITY) ? MAXIMUM_CAPACITY : n + 1;

}

When n = 1 << 30:

01 00000 00000 00000 00000 00000 00000 (n)

01 10000 00000 00000 00000 00000 00000 (n |= n >>> 1)

01 11100 00000 00000 00000 00000 00000 (n |= n >>> 2)

01 11111 11000 00000 00000 00000 00000 (n |= n >>> 4)

01 11111 11111 11111 00000 00000 00000 (n |= n >>> 8)

01 11111 11111 11111 11111 11111 11111 (n |= n >>> 16)
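The rounding behaviour is easiest to confirm by calling the method with a few values. The sketch below copies tableSizeFor as shown above into a hypothetical wrapper class TableSizeDemo, since the original is package-private inside HashMap.

```java
public class TableSizeDemo {
    static final int MAXIMUM_CAPACITY = 1 << 30;

    // Copy of the JDK 8 method quoted above.
    static int tableSizeFor(int cap) {
        int n = cap - 1;
        n |= n >>> 1;
        n |= n >>> 2;
        n |= n >>> 4;
        n |= n >>> 8;
        n |= n >>> 16;
        return (n < 0) ? 1 : (n >= MAXIMUM_CAPACITY) ? MAXIMUM_CAPACITY : n + 1;
    }

    public static void main(String[] args) {
        System.out.println(tableSizeFor(0));  // 1
        System.out.println(tableSizeFor(10)); // 16
        System.out.println(tableSizeFor(16)); // 16, already a power of two
        System.out.println(tableSizeFor(17)); // 32
        // cap - 1 == 1 << 30 is the case traced above: all 31 low bits end up set,
        // so the result is clamped to MAXIMUM_CAPACITY.
        System.out.println(tableSizeFor((1 << 30) + 1) == MAXIMUM_CAPACITY); // true
    }
}
```

Subtracting 1 first is what keeps an exact power of two such as 16 from being rounded up to 32.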

3. What is the role of threshold?

- capacity is the length of the underlying array, that is, the number of buckets in the HashMap. The default value is 16.

- loadFactor is the load factor, which measures how full the HashMap may become before it is resized. The default value is 0.75f.

- threshold = capacity * loadFactor. When size exceeds threshold, the array is resized; threshold is the criterion for deciding whether the array needs to grow, chosen as a trade-off between performance and space.

- For the default no-argument constructor, threshold is 16 * 0.75 = 12. The check for expansion is whether the number of mappings (size) is greater than threshold, and each expansion doubles the length of the array.

// Double the capacity of the array

else if ((newCap = oldCap << 1) < MAXIMUM_CAPACITY &&

oldCap >= DEFAULT_INITIAL_CAPACITY)

newThr = oldThr << 1; // The threshold doubles as well
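The interplay of size, threshold and capacity can be simulated without touching HashMap internals. This is a simplified model under stated assumptions: the class ThresholdDemo is made up, every inserted key is distinct, the map starts with the default capacity of 16, and the MAXIMUM_CAPACITY limit is ignored.

```java
public class ThresholdDemo {
    static int threshold(int capacity, float loadFactor) {
        return (int) (capacity * loadFactor);
    }

    // Table length after inserting `entries` distinct keys into a default HashMap.
    static int capacityAfter(int entries) {
        int cap = 16;
        int thr = threshold(cap, 0.75f); // 12
        for (int size = 1; size <= entries; size++) {
            if (size > thr) { // same check as "++size > threshold" in putVal
                cap <<= 1;    // the capacity doubles
                thr <<= 1;    // and so does the threshold
            }
        }
        return cap;
    }

    public static void main(String[] args) {
        System.out.println(threshold(16, 0.75f)); // 12
        System.out.println(capacityAfter(12));    // 16, still within the threshold
        System.out.println(capacityAfter(13));    // 32, the 13th insertion triggers a resize
        System.out.println(capacityAfter(25));    // 64
    }
}
```

When the number of entries is known in advance, passing a suitable initial capacity to the constructor avoids these intermediate resizes.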

3. Through the hash calculation, where is a Node<key, value> stored?