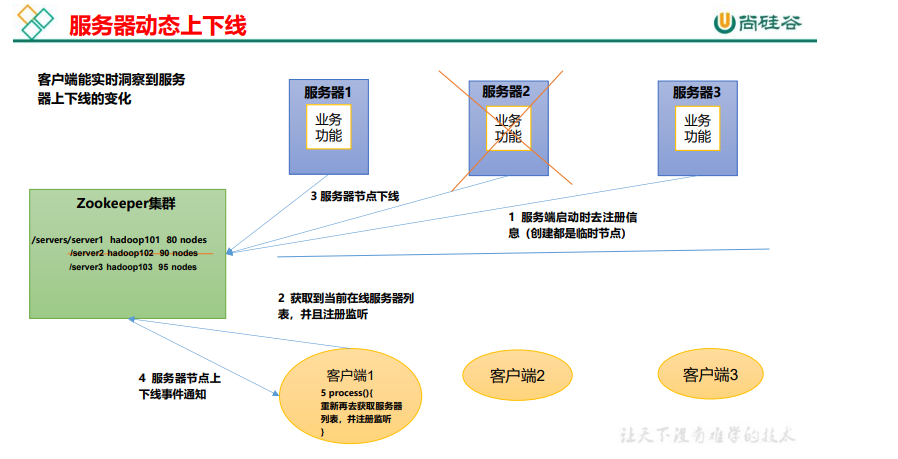

Case: monitoring servers as they dynamically go online and offline

A distributed system can have multiple master nodes that go online and offline dynamically. Any client should be able to detect, in real time, when a master node server comes online or goes offline.

As shown in the figure above, we want the client to monitor changes to the server nodes in real time.

Implementation

- (1) First create the /servers node on the cluster

[zk: localhost:2181(CONNECTED) 10] create /servers "servers"

Created /servers

- (2) Create the package com.atguigu.zkcase1 in IDEA

- (3) Server-side code that registers the server with ZooKeeper

```java
package com.atguigu.zkcase1;

import java.io.IOException;

import org.apache.zookeeper.CreateMode;
import org.apache.zookeeper.WatchedEvent;
import org.apache.zookeeper.Watcher;
import org.apache.zookeeper.ZooKeeper;
import org.apache.zookeeper.ZooDefs.Ids;

public class DistributeServer {

    private static String connectString = "hadoop102:2181,hadoop103:2181,hadoop104:2181";
    private static int sessionTimeout = 2000;
    private ZooKeeper zk = null;
    private String parentNode = "/servers";

    // Create a client connection to zk
    public void getConnect() throws IOException {
        zk = new ZooKeeper(connectString, sessionTimeout, new Watcher() {
            @Override
            public void process(WatchedEvent event) {
            }
        });
    }

    // Register the server as an ephemeral sequential node under /servers
    public void registServer(String hostname) throws Exception {
        String create = zk.create(parentNode + "/server", hostname.getBytes(),
                Ids.OPEN_ACL_UNSAFE, CreateMode.EPHEMERAL_SEQUENTIAL);
        System.out.println(hostname + " is online " + create);
    }

    // Business logic
    public void business(String hostname) throws Exception {
        System.out.println(hostname + " is working ...");
        Thread.sleep(Long.MAX_VALUE);
    }

    public static void main(String[] args) throws Exception {
        // 1 Get the zk connection
        DistributeServer server = new DistributeServer();
        server.getConnect();

        // 2 Use the zk connection to register the server information
        server.registServer(args[0]);

        // 3 Start the business logic
        server.business(args[0]);
    }
}
```

- (4) Client code that watches for changes to the ZooKeeper nodes

```java
package com.atguigu.zkcase1;

import java.io.IOException;
import java.util.ArrayList;
import java.util.List;

import org.apache.zookeeper.WatchedEvent;
import org.apache.zookeeper.Watcher;
import org.apache.zookeeper.ZooKeeper;

public class DistributeClient {

    private static String connectString = "hadoop102:2181,hadoop103:2181,hadoop104:2181";
    private static int sessionTimeout = 2000;
    private ZooKeeper zk = null;
    private String parentNode = "/servers";

    // Create a client connection to zk
    public void getConnect() throws IOException {
        zk = new ZooKeeper(connectString, sessionTimeout, new Watcher() {
            @Override
            public void process(WatchedEvent event) {
                // Fetch the list again, which also re-registers the watch
                try {
                    getServerList();
                } catch (Exception e) {
                    e.printStackTrace();
                }
            }
        });
    }

    // Get the server list
    public void getServerList() throws Exception {
        // 1 Get the children of the parent node and watch the parent node
        List<String> children = zk.getChildren(parentNode, true);

        // 2 List that holds the server information
        ArrayList<String> servers = new ArrayList<>();

        // 3 Iterate over all children and read the hostname stored in each node
        for (String child : children) {
            byte[] data = zk.getData(parentNode + "/" + child, false, null);
            servers.add(new String(data));
        }

        // 4 Print the server list
        System.out.println(servers);
    }

    // Business logic
    public void business() throws Exception {
        System.out.println("client is working ...");
        Thread.sleep(Long.MAX_VALUE);
    }

    public static void main(String[] args) throws Exception {
        // 1 Get the zk connection
        DistributeClient client = new DistributeClient();
        client.getConnect();

        // 2 Read the children of /servers and build the server list from them
        client.getServerList();

        // 3 Start the business logic
        client.business();
    }
}
```
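The client above prints the whole server list on every change. If you also want to know which hosts came online or went offline, one option is to compare two successive lists. The following is a minimal sketch under that assumption, with no ZooKeeper connection involved; `ServerListDiff`, `wentOnline`, and `wentOffline` are hypothetical names that are not part of the tutorial code, and the host lists in `main` are made-up sample data.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Set;
import java.util.TreeSet;

// Hypothetical helper (not part of the tutorial code): compares two snapshots
// of the server list and reports which hosts appeared or disappeared.
final class ServerListDiff {

    // Hosts present in `current` but not in `previous`.
    static Set<String> wentOnline(List<String> previous, List<String> current) {
        Set<String> added = new TreeSet<>(current);
        added.removeAll(previous);
        return added;
    }

    // Hosts present in `previous` but not in `current`.
    static Set<String> wentOffline(List<String> previous, List<String> current) {
        Set<String> removed = new TreeSet<>(previous);
        removed.removeAll(current);
        return removed;
    }

    public static void main(String[] args) {
        // Sample data only: two lists as getServerList() might have built them
        List<String> before = Arrays.asList("hadoop102", "hadoop103");
        List<String> after = Arrays.asList("hadoop103", "hadoop104");
        System.out.println("online:  " + wentOnline(before, after));
        System.out.println("offline: " + wentOffline(before, after));
    }
}
```

In the client, the previous list could be kept in a field and compared inside getServerList() each time the watcher fires.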

Test

1) Add and remove servers from the Linux command line

- (1) Start the DistributeClient client

- (2) On hadoop102, use the zk client to create ephemeral sequential nodes under /servers

[zk: localhost:2181(CONNECTED) 1] create -e -s /servers/hadoop102 "hadoop102"

[zk: localhost:2181(CONNECTED) 2] create -e -s /servers/hadoop103 "hadoop103"

- (3) Observe the IDEA console

The client detects the node changes and prints:

[hadoop102, hadoop103]

- (4) Delete a node

[zk: localhost:2181(CONNECTED) 8] delete /servers/hadoop1020000000000

- (5) Observe the IDEA console

[hadoop103]
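The name deleted in step (4), hadoop1020000000000, is the requested name followed by the suffix that ZooKeeper appends to sequential nodes: a 10-digit, zero-padded counter maintained by the parent node. The sketch below only reproduces that naming rule locally, without a ZooKeeper connection. `SequentialNames` and its methods are hypothetical helper names, and the counter values assume that /servers had no earlier sequential children, as in the session above.

```java
import java.util.Locale;

// Hypothetical helper: mimics how a sequential node name is formed.
final class SequentialNames {

    // Appends a 10-digit, zero-padded counter to the requested path.
    static String withSequence(String requestedPath, int counter) {
        return String.format(Locale.ROOT, "%s%010d", requestedPath, counter);
    }

    // Recovers the counter from the last 10 characters of a node name.
    static int sequenceOf(String nodeName) {
        return Integer.parseInt(nodeName.substring(nodeName.length() - 10));
    }

    public static void main(String[] args) {
        // Counter values are assumptions chosen to match the CLI session
        System.out.println(withSequence("/servers/hadoop102", 0)); // /servers/hadoop1020000000000
        System.out.println(withSequence("/servers/hadoop103", 1)); // /servers/hadoop1030000000001
        System.out.println(sequenceOf("/servers/hadoop1030000000001")); // 1
    }
}
```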

2) Add and remove servers from IDEA

- (1) Start the DistributeClient client (if it has been started, it does not need to be restarted)

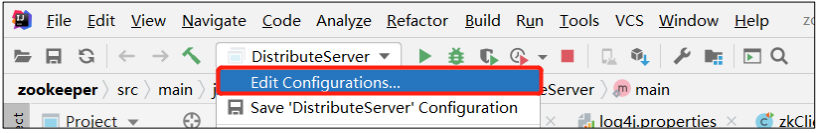

- (2) Start the DistributeServer service

- ① Click Edit Configurations

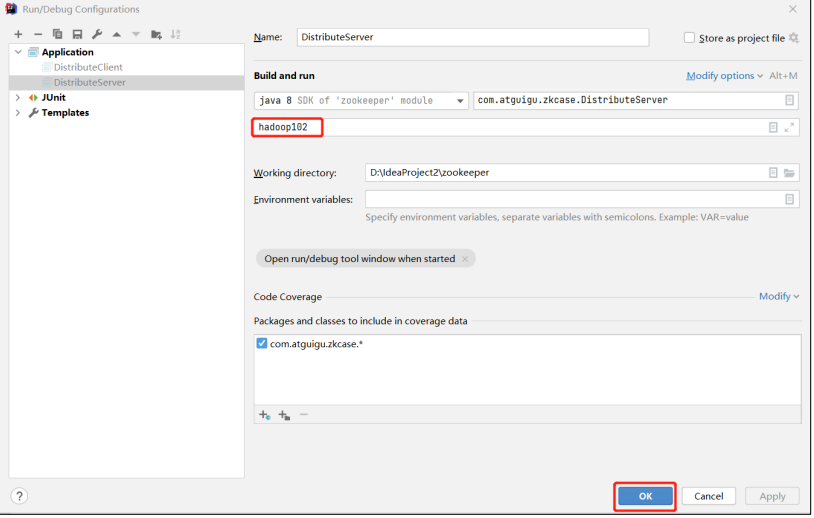

- ② In the Program arguments field of the pop-up window, enter the hostname of the server to start, for example hadoop102

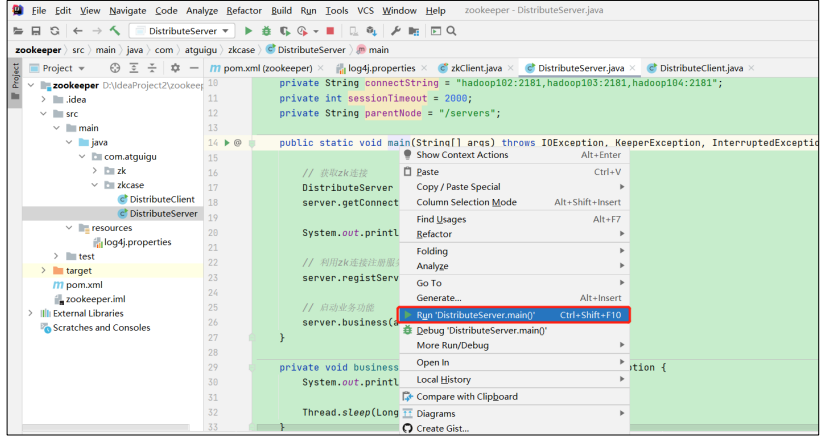

- ③ Go back to the main method of DistributeServer, right-click, and choose Run "DistributeServer.main()" in the pop-up menu

- ④ Observe the DistributeServer console, which prints "hadoop102 is working ..."

- ⑤ Observe the DistributeClient console: the printed server list now includes hadoop102, showing that it is online

ZooKeeper distributed lock case

Acquiring the lock

- Create a persistent node in ZooKeeper. When the first client, Client1, wants to acquire the lock, it creates an ephemeral sequential node, Lock1, under this node.

- Client1 lists all ephemeral sequential nodes under the persistent node, sorts them, and checks whether its own node has the smallest sequence number. If it does, Client1 has acquired the lock.

- If another client, Client2, then tries to acquire the lock, it creates another ephemeral sequential node, Lock2, under the persistent node.

- Client2 lists all ephemeral sequential nodes under the persistent node, sorts them, and checks whether its own node, Lock2, has the smallest sequence number. It finds that Lock2 is not the smallest, so Client2 registers a Watcher on Lock1, the node immediately ahead of it, to monitor whether Lock1 still exists. Client2 has failed to acquire the lock and enters the waiting state.

- If a third client, Client3, tries to acquire the lock, it creates an ephemeral sequential node, Lock3, under the persistent node.

- Client3 lists all ephemeral sequential nodes under the persistent node, sorts them, and checks whether its own node, Lock3, has the smallest sequence number. It also finds that Lock3 is not the smallest, so Client3 registers a Watcher on Lock2, the node immediately ahead of it, to monitor whether Lock2 still exists. Client3 has also failed to acquire the lock and enters the waiting state.
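The decision each client makes in the steps above (sort the children, find its own position, then either take the lock or watch the node just ahead of it) can be sketched as a pure function. This is an illustration only: `LockOrder` and `nodeToWatch` are hypothetical names, and the child names follow the "seq-" prefix plus 10-digit suffix as an assumption about what the children look like.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.List;
import java.util.Optional;

// Hypothetical helper: decides whether a client holds the lock or must wait.
final class LockOrder {

    // Returns the child that `self` should watch, or Optional.empty() when
    // `self` is the smallest child and therefore holds the lock.
    static Optional<String> nodeToWatch(List<String> children, String self) {
        List<String> sorted = new ArrayList<>(children);
        Collections.sort(sorted);
        int index = sorted.indexOf(self);
        if (index < 0) {
            throw new IllegalArgumentException(self + " is not among the children");
        }
        return index == 0 ? Optional.empty() : Optional.of(sorted.get(index - 1));
    }

    public static void main(String[] args) {
        // Sample children, deliberately unsorted
        List<String> children = Arrays.asList(
                "seq-0000000002", "seq-0000000000", "seq-0000000001");
        System.out.println(nodeToWatch(children, "seq-0000000000")); // Optional.empty
        System.out.println(nodeToWatch(children, "seq-0000000001")); // Optional[seq-0000000000]
        System.out.println(nodeToWatch(children, "seq-0000000002")); // Optional[seq-0000000001]
    }
}
```

Watching only the immediate predecessor, rather than the parent node, means a deletion wakes up a single waiting client instead of all of them.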

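Releasing the lock

Releasing the lock is simpler. Because the nodes created above are ephemeral sequential nodes, the lock is released in either of the following two cases:

- The task completes and the client releases the lock explicitly by deleting its node.

- The client crashes before the task completes. ZooKeeper removes the client's ephemeral node when its session ends, so the lock is released automatically.

In both cases the node is deleted, the Watcher registered on it fires, and the next waiting client acquires the lock.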
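To make the two release cases concrete, here is a small in-memory model of the lock queue. It does not talk to ZooKeeper; it only mimics the ordering behavior described above. `LockQueueModel` and all of its methods are hypothetical names introduced for illustration.

```java
import java.util.Locale;
import java.util.TreeMap;

// In-memory model only: mimics ephemeral sequential nodes under one parent.
final class LockQueueModel {

    // Node name -> owning client, kept sorted by node name
    private final TreeMap<String, String> nodes = new TreeMap<>();
    private int counter = 0;

    // Models creating an ephemeral sequential node; returns the node name.
    String enqueue(String client) {
        String node = String.format(Locale.ROOT, "seq-%010d", counter++);
        nodes.put(node, client);
        return node;
    }

    // The owner of the smallest node holds the lock; null if nobody is queued.
    String holder() {
        return nodes.isEmpty() ? null : nodes.firstEntry().getValue();
    }

    // Case 1: the client finishes its task and deletes its own node.
    void unlock(String node) {
        nodes.remove(node);
    }

    // Case 2: the client's session ends, so all of its ephemeral nodes vanish.
    void sessionExpired(String client) {
        nodes.values().removeIf(client::equals);
    }

    public static void main(String[] args) {
        LockQueueModel queue = new LockQueueModel();
        String lock1 = queue.enqueue("Client1");
        queue.enqueue("Client2");
        queue.enqueue("Client3");
        System.out.println(queue.holder()); // Client1
        queue.unlock(lock1);                // normal release
        System.out.println(queue.holder()); // Client2
        queue.sessionExpired("Client2");    // simulated crash
        System.out.println(queue.holder()); // Client3
    }
}
```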

Code

- Distributed lock implementation

```java
package com.atguigu.lock2;

import org.apache.zookeeper.*;
import org.apache.zookeeper.data.Stat;

import java.io.IOException;
import java.util.Collections;
import java.util.List;
import java.util.concurrent.CountDownLatch;

public class DistributedLock {

    // ZooKeeper server list
    private String connectString = "hadoop102:2181,hadoop103:2181,hadoop104:2181";
    // Session timeout
    private int sessionTimeout = 2000;

    private ZooKeeper zk;

    private String rootNode = "locks";
    private String subNode = "seq-";
    // The child node that the current client is waiting on
    private String waitPath;

    // Latch for waiting on the ZooKeeper connection
    private CountDownLatch connectLatch = new CountDownLatch(1);
    // Latch for waiting on the preceding node
    private CountDownLatch waitLatch = new CountDownLatch(1);

    // The child node created by the current client
    private String currentNode;

    // Connect to the zk service and create the root node if needed
    public DistributedLock() throws IOException, InterruptedException, KeeperException {
        zk = new ZooKeeper(connectString, sessionTimeout, new Watcher() {
            @Override
            public void process(WatchedEvent event) {
                // Once the connection is established, open the latch to wake up the waiting thread
                if (event.getState() == Event.KeeperState.SyncConnected) {
                    connectLatch.countDown();
                }
                // waitPath has been deleted
                if (event.getType() == Event.EventType.NodeDeleted && event.getPath().equals(waitPath)) {
                    waitLatch.countDown();
                }
            }
        });

        // Wait for the connection to be established
        connectLatch.await();

        // Get the root node status
        Stat stat = zk.exists("/" + rootNode, false);

        // If the root node does not exist, create it as a persistent node
        if (stat == null) {
            System.out.println("The root node does not exist");
            zk.create("/" + rootNode, new byte[0], ZooDefs.Ids.OPEN_ACL_UNSAFE, CreateMode.PERSISTENT);
        }
    }

    // Lock method
    public void zkLock() {
        try {
            // Create an ephemeral sequential node under the root node; the return value is the created node's path
            currentNode = zk.create("/" + rootNode + "/" + subNode, null,
                    ZooDefs.Ids.OPEN_ACL_UNSAFE, CreateMode.EPHEMERAL_SEQUENTIAL);

            // Pause briefly (10 ms) to make the result easier to follow
            Thread.sleep(10);

            // Note: there is no need to watch for changes to the children of "/locks"
            List<String> childrenNodes = zk.getChildren("/" + rootNode, false);

            // If the list has only one child, it must be currentNode, so this client holds the lock
            if (childrenNodes.size() == 1) {
                return;
            } else {
                // Sort all ephemeral sequential nodes under the root node in ascending order
                Collections.sort(childrenNodes);

                // Name of the current node
                String thisNode = currentNode.substring(("/" + rootNode + "/").length());
                // Position of the current node in the sorted list
                int index = childrenNodes.indexOf(thisNode);

                if (index == -1) {
                    System.out.println("Data exception");
                } else if (index == 0) {
                    // index == 0 means thisNode is the smallest in the list, so this client holds the lock
                    return;
                } else {
                    // Get the node immediately ahead of currentNode
                    this.waitPath = "/" + rootNode + "/" + childrenNodes.get(index - 1);
                    // Register a watch on waitPath. When waitPath is deleted,
                    // ZooKeeper calls back the watcher's process method
                    zk.getData(waitPath, true, new Stat());
                    // Wait for the lock
                    waitLatch.await();
                    return;
                }
            }
        } catch (KeeperException e) {
            e.printStackTrace();
        } catch (InterruptedException e) {
            e.printStackTrace();
        }
    }

    // Unlock method
    public void zkUnlock() {
        try {
            zk.delete(this.currentNode, -1);
        } catch (InterruptedException | KeeperException e) {
            e.printStackTrace();
        }
    }
}
```

- Testing the ZooKeeper distributed lock

```java
package com.atguigu.lock2;

import org.apache.zookeeper.KeeperException;

import java.io.IOException;

public class DistributedLockTest {

    public static void main(String[] args) throws InterruptedException, IOException, KeeperException {
        // Create distributed lock 1
        final DistributedLock lock1 = new DistributedLock();
        // Create distributed lock 2
        final DistributedLock lock2 = new DistributedLock();

        new Thread(new Runnable() {
            @Override
            public void run() {
                // Acquire the lock
                try {
                    lock1.zkLock();
                    System.out.println("Thread 1 acquire lock");
                    Thread.sleep(5 * 1000);
                    lock1.zkUnlock();
                    System.out.println("Thread 1 release lock");
                } catch (Exception e) {
                    e.printStackTrace();
                }
            }
        }).start();

        new Thread(new Runnable() {
            @Override
            public void run() {
                // Acquire the lock
                try {
                    lock2.zkLock();
                    System.out.println("Thread 2 acquire lock");
                    Thread.sleep(5 * 1000);
                    lock2.zkUnlock();
                    System.out.println("Thread 2 releases the lock");
                } catch (Exception e) {
                    e.printStackTrace();
                }
            }
        }).start();
    }
}
```

- Observe the console output:

Thread 1 acquire lock

Thread 1 release lock

Thread 2 acquire lock

Thread 2 releases the lock
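The DistributedLock constructor blocks on connectLatch until the Watcher reports SyncConnected. The same wait-for-callback pattern can be tried without a ZooKeeper cluster. In this sketch a background thread stands in for the asynchronous connection callback; `ConnectLatchDemo`, `awaitConnected`, and the delay values are assumptions made for illustration, and a timeout is added so that the wait cannot hang forever.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.TimeUnit;

// Stand-alone illustration of waiting for an asynchronous "connected" signal.
final class ConnectLatchDemo {

    // Returns true if the simulated callback fired within timeoutMs.
    static boolean awaitConnected(long callbackDelayMs, long timeoutMs) {
        CountDownLatch connectLatch = new CountDownLatch(1);

        // Stands in for the Watcher callback that runs on another thread
        Thread callback = new Thread(() -> {
            try {
                Thread.sleep(callbackDelayMs); // simulated connection delay
                connectLatch.countDown();      // what the Watcher does on SyncConnected
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        callback.setDaemon(true);
        callback.start();

        try {
            // Block the caller until the latch opens or the timeout elapses
            return connectLatch.await(timeoutMs, TimeUnit.MILLISECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        }
    }

    public static void main(String[] args) {
        System.out.println(awaitConnected(50, 2000)); // callback fires well before the timeout
        System.out.println(awaitConnected(2000, 50)); // timeout elapses first
    }
}
```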

Case: implementing a distributed lock with the Curator framework

1) Problems with developing against the native Java API

- (1) The session connection is established asynchronously, so you have to handle the wait yourself, for example with `CountDownLatch`.

- (2) A Watch must be registered again each time it fires, otherwise it stops taking effect.

- (3) Development complexity is still relatively high.

- (4) Creating and deleting multi-level nodes is not supported; you have to recurse yourself.
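Point (2) can be illustrated with a small in-memory model of a one-shot watch. It is not ZooKeeper code: `OneShotWatchModel`, its methods, and the child names are hypothetical, and the model only mimics the behavior that a watch set while reading fires once and is then gone.

```java
import java.util.ArrayList;
import java.util.List;

// In-memory model of a one-shot watch on a node's children.
final class OneShotWatchModel {

    private final List<String> children = new ArrayList<>();
    private Runnable pendingWatch; // at most one pending watch in this model

    // Reads the children and leaves a single watch behind.
    List<String> getChildren(Runnable watch) {
        pendingWatch = watch;
        return new ArrayList<>(children);
    }

    // Changes the children; fires the pending watch once and clears it.
    void addChild(String name) {
        children.add(name);
        Runnable watch = pendingWatch;
        pendingWatch = null;
        if (watch != null) {
            watch.run();
        }
    }

    // Counts how many of two changes are noticed, with or without re-registration.
    static int countNotifications(boolean reRegister) {
        OneShotWatchModel model = new OneShotWatchModel();
        int[] fired = {0};
        Runnable[] watch = new Runnable[1];
        watch[0] = () -> {
            fired[0]++;
            if (reRegister) {
                model.getChildren(watch[0]); // read again, which sets a new watch
            }
        };
        model.getChildren(watch[0]);
        model.addChild("server0000000000");
        model.addChild("server0000000001");
        return fired[0];
    }

    public static void main(String[] args) {
        System.out.println(countNotifications(false)); // 1: the second change is missed
        System.out.println(countNotifications(true));  // 2: both changes are seen
    }
}
```

This is why the DistributeClient watcher calls getServerList() again inside process: the call both refreshes the list and sets the next watch.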

2) Curator is a framework that specializes in solving the distributed lock problem

- Curator solves the problems encountered when developing distributed applications with the native Java API

- For details, please check the official documents: https://curator.apache.org/index.html

3) Curator in practice

- (1) Add the dependencies

```xml
<dependency>
    <groupId>org.apache.curator</groupId>
    <artifactId>curator-framework</artifactId>
    <version>4.3.0</version>
</dependency>
<dependency>
    <groupId>org.apache.curator</groupId>
    <artifactId>curator-recipes</artifactId>
    <version>4.3.0</version>
</dependency>
<dependency>
    <groupId>org.apache.curator</groupId>
    <artifactId>curator-client</artifactId>
    <version>4.3.0</version>
</dependency>
```

- (2) Code

```java
package com.atguigu.lock;

import org.apache.curator.RetryPolicy;
import org.apache.curator.framework.CuratorFramework;
import org.apache.curator.framework.CuratorFrameworkFactory;
import org.apache.curator.framework.recipes.locks.InterProcessLock;
import org.apache.curator.framework.recipes.locks.InterProcessMutex;
import org.apache.curator.retry.ExponentialBackoffRetry;

public class CuratorLockTest {

    private String rootNode = "/locks";
    // ZooKeeper server list
    private String connectString = "hadoop102:2181,hadoop103:2181,hadoop104:2181";
    // Connection timeout
    private int connectionTimeout = 2000;
    // Session timeout
    private int sessionTimeout = 2000;

    public static void main(String[] args) {
        new CuratorLockTest().test();
    }

    // Test
    private void test() {
        // Create distributed lock 1
        final InterProcessLock lock1 = new InterProcessMutex(getCuratorFramework(), rootNode);
        // Create distributed lock 2
        final InterProcessLock lock2 = new InterProcessMutex(getCuratorFramework(), rootNode);

        new Thread(new Runnable() {
            @Override
            public void run() {
                // Acquire the lock
                try {
                    lock1.acquire();
                    System.out.println("Thread 1 acquire lock");
                    // Test lock reentry
                    lock1.acquire();
                    System.out.println("Thread 1 acquires the lock again");
                    Thread.sleep(5 * 1000);
                    lock1.release();
                    System.out.println("Thread 1 release lock");
                    lock1.release();
                    System.out.println("Thread 1 releases the lock again");
                } catch (Exception e) {
                    e.printStackTrace();
                }
            }
        }).start();

        new Thread(new Runnable() {
            @Override
            public void run() {
                // Acquire the lock
                try {
                    lock2.acquire();
                    System.out.println("Thread 2 acquire lock");
                    // Test lock reentry
                    lock2.acquire();
                    System.out.println("Thread 2 acquires the lock again");
                    Thread.sleep(5 * 1000);
                    lock2.release();
                    System.out.println("Thread 2 releases the lock");
                    lock2.release();
                    System.out.println("Thread 2 releases the lock again");
                } catch (Exception e) {
                    e.printStackTrace();
                }
            }
        }).start();
    }

    // Initialize the Curator client used by the distributed lock
    public CuratorFramework getCuratorFramework() {
        // Retry policy: base sleep time of 3 seconds, at most 3 retries
        RetryPolicy policy = new ExponentialBackoffRetry(3000, 3);

        // Create the Curator client through the factory
        CuratorFramework client = CuratorFrameworkFactory.builder()
                .connectString(connectString)
                .connectionTimeoutMs(connectionTimeout)
                .sessionTimeoutMs(sessionTimeout)
                .retryPolicy(policy).build();

        // Open the connection
        client.start();
        System.out.println("zookeeper Initialization complete...");
        return client;
    }
}
```

- (3) Observe the console output:

Thread 1 acquire lock

Thread 1 acquires the lock again

Thread 1 release lock

Thread 1 releases the lock again

Thread 2 acquire lock

Thread 2 acquires the lock again

Thread 2 releases the lock

Thread 2 releases the lock again
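The output shows each thread acquiring the lock twice and releasing it twice. Curator documents InterProcessMutex as re-entrant, with every acquire balanced by a release. As a local analogy only (this is `java.util.concurrent.locks.ReentrantLock`, not Curator, and it works within a single JVM), the sketch below shows why one release is not enough after two acquires. `ReentrancyDemo` and `stillHeldAfter` are hypothetical names.

```java
import java.util.concurrent.locks.ReentrantLock;

// Local analogy for reentrant locking; no ZooKeeper or Curator involved.
final class ReentrancyDemo {

    // Locks `acquires` times, unlocks `releases` times, and reports whether
    // the current thread still holds the lock afterwards.
    // Requires releases <= acquires; unlocking a lock that is not held throws.
    static boolean stillHeldAfter(int acquires, int releases) {
        ReentrantLock lock = new ReentrantLock();
        for (int i = 0; i < acquires; i++) {
            lock.lock();
        }
        for (int i = 0; i < releases; i++) {
            lock.unlock();
        }
        return lock.isHeldByCurrentThread();
    }

    public static void main(String[] args) {
        System.out.println(stillHeldAfter(2, 1)); // true: one release is still outstanding
        System.out.println(stillHeldAfter(2, 2)); // false: fully released
    }
}
```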