1. Keyed windows and non-keyed windows (windowAll)

- Keyed window

```scala
// Template only: [ ... ] marks optional calls, so this is not compilable as written
val input: DataStream[T1] = ...

val result: DataStream[T2] = input
  .keyBy(...)                 // Group by key
  .window(...)                // WindowAssigner: the rule that splits each key's elements into windows
  [.trigger(...)]             // Optional, otherwise the assigner's default trigger is used. Decides when the window function runs and can purge the window's elements before the window itself is deleted
  [.evictor(...)]             // Optional, no evictor by default. Removes elements from the window after the trigger fires, before and/or after the window function runs
  [.allowedLateness(...)]     // Optional, otherwise the allowed lateness is 0
  [.sideOutputLateData(...)]  // Optional, otherwise late data is dropped instead of being output
  .reduce/aggregate/apply()   // The window function that processes one key's window

val lateStream: DataStream[T1] = result.getSideOutput(...) // Optional, reads the OutputTag passed to sideOutputLateData
```

- Non-keyed window

A non-keyed window differs from a keyed window in three ways:

1. keyBy is not used for grouping.
2. windowAll, instead of window, specifies one window assignment rule for all keys together.
3. The reduce / aggregate / apply function processes the data of one window across all keys.

As a result, the window computation runs with a parallelism of 1.

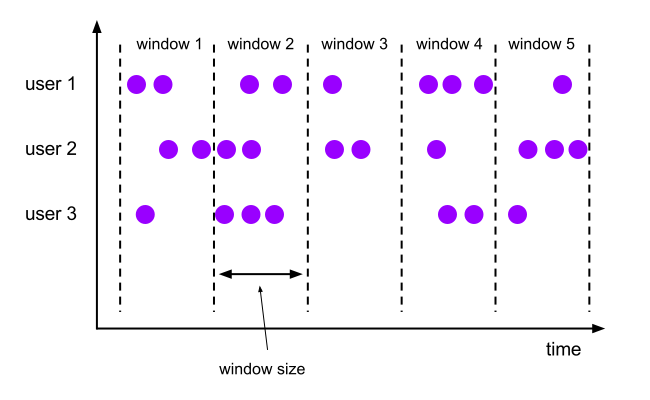
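The difference can be illustrated without a cluster. The following plain-Scala sketch has no Flink dependency; `Event`, `KeyedVsNonKeyed`, `keyedSums` and `allKeySums` are hypothetical names. It groups the same events into one-hour tumbling windows in two ways:

- once per key, as keyBy(...).window(...) would;
- once across all keys, as windowAll(...) would.

```scala
// Plain-Scala sketch of keyed vs. non-keyed tumbling windows (no Flink involved).
// Event and all helper names are hypothetical.
final case class Event(key: String, value: Int, ts: Long)

object KeyedVsNonKeyed {
  // Start of the tumbling window [start, start + size) that contains ts
  def windowStart(ts: Long, size: Long): Long = ts - Math.floorMod(ts, size)

  // Like keyBy(...).window(...): one result per (key, window)
  def keyedSums(events: Seq[Event], size: Long): Map[(String, Long), Int] =
    events.groupBy(e => (e.key, windowStart(e.ts, size)))
      .map { case (k, es) => k -> es.map(_.value).sum }

  // Like windowAll(...): one result per window, covering every key
  def allKeySums(events: Seq[Event], size: Long): Map[Long, Int] =
    events.groupBy(e => windowStart(e.ts, size))
      .map { case (w, es) => w -> es.map(_.value).sum }
}
```

In Flink, the non-keyed variant cannot be split by key across subtasks. That is why its window computation runs with parallelism 1.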

2. WindowAssigner (time-based)

Flink provides four built-in kinds of WindowAssigner: tumbling, sliding, session and global windows. You can also implement a custom WindowAssigner. A time window covers the range [start, end): it includes its start time but not its end time.

2.1 Why the offset Time.hours(-8L) must be specified

Let's first look at the case where offset is not specified

```scala
package datastreamApi

import org.apache.commons.lang3.time.FastDateFormat
import org.apache.flink.api.common.eventtime._
import org.apache.flink.streaming.api.scala.{DataStream, StreamExecutionEnvironment, createTypeInformation}
import org.apache.flink.streaming.api.windowing.assigners.TumblingEventTimeWindows
import org.apache.flink.streaming.api.windowing.time.Time

// Event time = third tuple field, parsed in the JVM's default time zone
class RecordTimestampAssigner extends TimestampAssigner[(String, Int, String)] {
  val fdf = FastDateFormat.getInstance("yyyy-MM-dd HH:mm:ss")

  override def extractTimestamp(element: (String, Int, String), recordTimestamp: Long): Long = {
    fdf.parse(element._3).getTime
  }
}

// Periodic watermark = largest timestamp seen so far - allowed out-of-orderness - 1 ms
class PeriodWatermarkGenerator extends WatermarkGenerator[(String, Int, String)] {
  val fdf = FastDateFormat.getInstance("yyyy-MM-dd HH:mm:ss")
  var maxTimestamp: Long = _
  val maxOutofOrderness = 0

  override def onEvent(event: (String, Int, String), eventTimestamp: Long, output: WatermarkOutput): Unit = {
    maxTimestamp = math.max(fdf.parse(event._3).getTime, maxTimestamp)
  }

  override def onPeriodicEmit(output: WatermarkOutput): Unit = {
    output.emitWatermark(new Watermark(maxTimestamp - maxOutofOrderness - 1))
  }
}

class MyWatermarkStrategy extends WatermarkStrategy[(String, Int, String)] {
  override def createTimestampAssigner(context: TimestampAssignerSupplier.Context): TimestampAssigner[(String, Int, String)] = {
    new RecordTimestampAssigner()
  }

  override def createWatermarkGenerator(context: WatermarkGeneratorSupplier.Context): WatermarkGenerator[(String, Int, String)] = {
    new PeriodWatermarkGenerator()
  }
}

object WindowTest {
  def main(args: Array[String]): Unit = {
    val senv = StreamExecutionEnvironment.getExecutionEnvironment

    val input = senv.fromElements(
      ("A", 10, "2021-09-08 22:00:00"),
      ("A", 20, "2021-09-08 23:00:00"),
      ("A", 30, "2021-09-09 06:00:00"),
      ("B", 100, "2021-09-08 22:00:00"),
      ("B", 200, "2021-09-08 23:00:00"),
      ("B", 300, "2021-09-09 06:00:00")
    ).assignTimestampsAndWatermarks(new MyWatermarkStrategy())

    // One-day tumbling windows WITHOUT an offset
    val result: DataStream[(String, Int, String)] = input.keyBy(_._1)
      .window(TumblingEventTimeWindows.of(Time.days(1L)))
      .sum(1)

    result.print("result")
    senv.execute("WindowTest")
  }
}
```

The execution results are:

```text
result:7> (A,60,2021-09-08 22:00:00)
result:2> (B,600,2021-09-08 22:00:00)
```

Each window runs from 08:00 on one day to 08:00 on the next day. The window we actually want runs from 00:00 to 00:00.

The cause is alignment. Time windows are aligned to the epoch (1970-01-01 00:00:00 UTC), and the timestamps here are parsed in UTC+8 local time. A one-day window therefore starts at 08:00 local time.

To fix this, replace window(TumblingEventTimeWindows.of(Time.days(1L))) in the code above with window(TumblingEventTimeWindows.of(Time.days(1L), Time.hours(-8L))). Running the program again gives:

```text
result:2> (B,300,2021-09-08 22:00:00)
result:2> (B,300,2021-09-09 06:00:00)
result:7> (A,30,2021-09-08 22:00:00)
result:7> (A,30,2021-09-09 06:00:00)
```
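The alignment can be checked with a little arithmetic. Below is a plain-Scala sketch (no Flink dependency) of the tumbling-window start formula, modeled on Flink's `TimeWindow.getWindowStartWithOffset`. The object name `WindowOffset` and the fixed `Asia/Shanghai` (UTC+8) zone are assumptions, chosen to match the output above.

```scala
import java.time.{LocalDateTime, ZoneId}
import java.time.format.DateTimeFormatter

// Plain-Scala sketch of tumbling-window alignment with an offset (no Flink involved).
// Assumption: timestamps are local times in Asia/Shanghai (UTC+8).
object WindowOffset {
  private val fmt = DateTimeFormatter.ofPattern("yyyy-MM-dd HH:mm:ss")
  private val zone = ZoneId.of("Asia/Shanghai")

  // Parse a local date-time string and return epoch milliseconds
  def toMillis(s: String): Long =
    LocalDateTime.parse(s, fmt).atZone(zone).toInstant.toEpochMilli

  // Start of the window of length `size` containing ts, shifted by `offset` (all in ms)
  def windowStart(ts: Long, offset: Long, size: Long): Long = {
    val remainder = (ts - offset) % size
    if (remainder < 0) ts - (remainder + size) else ts - remainder
  }
}
```

With offset 0, the one-day window containing 2021-09-08 22:00:00 starts at 08:00 local time. With an offset of -8 hours it starts at local midnight, 1631030400000 ms, which is the window start printed in section 5.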

2.2 Tumbling Windows

```scala
// Arguments: window size, offset
window(TumblingEventTimeWindows.of(Time.days(1L), Time.hours(-8L)))
window(TumblingProcessingTimeWindows.of(Time.days(1L), Time.hours(-8L)))
```

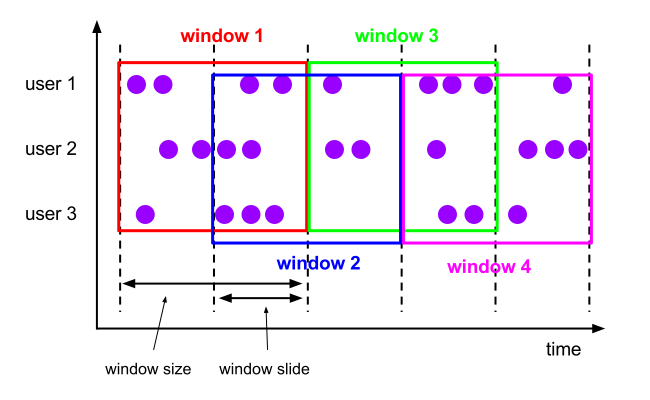

2.3 Sliding Windows

```scala
// Arguments: window size, slide, offset
window(SlidingEventTimeWindows.of(Time.minutes(10L), Time.minutes(5L), Time.hours(-8L)))
window(SlidingProcessingTimeWindows.of(Time.minutes(10L), Time.minutes(5L), Time.hours(-8L)))
```

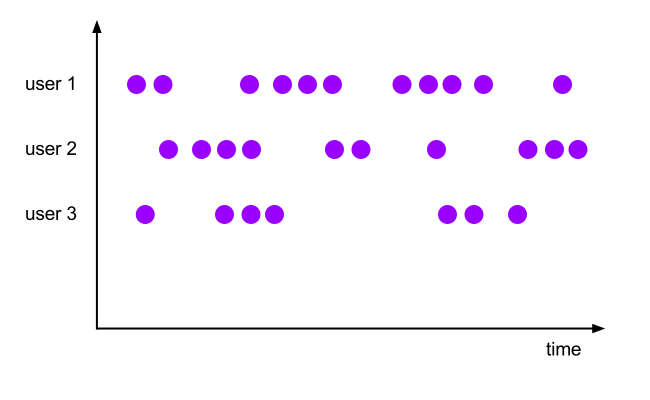
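How many windows an element lands in follows from how a sliding assigner enumerates windows. Below is a plain-Scala sketch (no Flink dependency) modeled on the loop in Flink's `SlidingEventTimeWindows.assignWindows`. `SlidingAssign` and its methods are hypothetical names.

```scala
// Plain-Scala sketch: every sliding window [start, start + size) that contains ts.
// Names are hypothetical; all values are milliseconds.
object SlidingAssign {
  def windowStart(ts: Long, offset: Long, size: Long): Long = {
    val remainder = (ts - offset) % size
    if (remainder < 0) ts - (remainder + size) else ts - remainder
  }

  // Newest window first; walk back one slide at a time while the window still covers ts
  def assign(ts: Long, size: Long, slide: Long, offset: Long): List[(Long, Long)] = {
    val lastStart = windowStart(ts, offset, slide)
    Iterator.iterate(lastStart)(_ - slide)
      .takeWhile(start => start > ts - size)
      .map(start => (start, start + size))
      .toList
  }
}
```

With a 10-minute size and a 5-minute slide, every element belongs to size / slide = 2 windows.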

2.4 Session Windows

For each key, every arriving element first gets a window of its own. If the interval between two elements is within the session gap, their windows are merged.

To support merging, a session window operator needs a merging trigger and a window function that can be applied to merged windows, such as ReduceFunction, AggregateFunction or ProcessWindowFunction.

```scala
// Fixed session gap
window(EventTimeSessionWindows.withGap(Time.minutes(5L)))
window(ProcessingTimeSessionWindows.withGap(Time.minutes(5L)))

// Dynamic session gap: extract() returns the gap in milliseconds (5 ms / 10 ms here, purely illustrative)
window(EventTimeSessionWindows.withDynamicGap(new SessionWindowTimeGapExtractor[(String, Int, String)] {
  override def extract(element: (String, Int, String)): Long = {
    if (element._3 >= "2021-01-01 00:00:00") 5L else 10L
  }
}))

window(ProcessingTimeSessionWindows.withDynamicGap(new SessionWindowTimeGapExtractor[(String, Int, String)] {
  override def extract(element: (String, Int, String)): Long = {
    if (element._3 >= "2021-01-01 00:00:00") 5L else 10L
  }
}))
```
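The merging rule can be sketched in plain Scala (no Flink dependency). Each timestamp first gets its own window [ts, ts + gap), and overlapping or touching windows are then combined. This mirrors how Flink's `TimeWindow.mergeWindows` combines intersecting windows. `SessionMerge` is a hypothetical name, and `gapOf` stands in for either a fixed gap or a `SessionWindowTimeGapExtractor`.

```scala
// Plain-Scala sketch of session-window merging for one key (no Flink involved).
// gapOf returns the gap in milliseconds for an element's timestamp (hypothetical parameter).
object SessionMerge {
  def sessions(timestamps: Seq[Long], gapOf: Long => Long): List[(Long, Long)] = {
    // One window per element, sorted by start
    val windows = timestamps.map(ts => (ts, ts + gapOf(ts))).sortBy(_._1)
    // acc holds merged sessions, newest first; extend the newest one while windows intersect
    windows.foldLeft(List.empty[(Long, Long)]) {
      case ((start, end) :: rest, (s, e)) if s <= end => (start, math.max(end, e)) :: rest
      case (acc, w) => w :: acc
    }.reverse
  }
}
```

With a fixed 5-minute gap, elements at 0, 2 and 10 minutes form two sessions: [0, 7 min) and [10 min, 15 min).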

2.5 Global Windows

This WindowAssigner does not use timestamps at all. You must specify a trigger: its default trigger never fires, so without one no result is produced.

```scala
window(GlobalWindows.create())
```

3. WindowAssigner (count-based)

- Count windows do not need timestamps or watermarks assigned through DataStream.assignTimestampsAndWatermarks.

```scala
// ============== Keyed window ==============
// Number of elements per window
countWindow(10L)
// Number of elements per window, number of elements to slide by
countWindow(10L, 5L)

// ============== Non-keyed window ==============
countWindowAll(10L)
countWindowAll(10L, 5L)
```
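Below is a plain-Scala sketch (no Flink dependency) of when count windows fire for a single key. It assumes the composition that `KeyedStream.countWindow` uses internally:

- `countWindow(size)`: `GlobalWindows` with a purging `CountTrigger`.
- `countWindow(size, slide)`: `GlobalWindows` with `CountTrigger.of(slide)` plus `CountEvictor.of(size)`.

`CountWindows` and its methods are hypothetical names.

```scala
// Plain-Scala sketch: the element lists a count window hands to the window function, per key.
object CountWindows {
  // countWindow(size): fire and purge every `size` elements; an incomplete tail never fires
  def tumbling[T](elems: Seq[T], size: Int): Seq[Seq[T]] =
    elems.grouped(size).filter(_.size == size).toList

  // countWindow(size, slide): fire every `slide` elements, keeping at most the last `size`
  def sliding[T](elems: Seq[T], size: Int, slide: Int): Seq[Seq[T]] =
    (slide to elems.size by slide).map(end => elems.slice(math.max(0, end - size), end))
}
```

For elements 1 to 15, countWindow(10) fires once with 1 to 10. countWindow(10, 5) fires with 1 to 5, then 1 to 10, then 6 to 15.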

4. Understanding the window life cycle

- Window creation: a window is created when the first element belonging to it arrives

- Window deletion:

  - Time windows: a window is deleted once the watermark passes (window end time + allowedLateness), that is, once it reaches (endTimestamp - 1 + allowedLateness)

  - Global windows and count windows: the window is never deleted
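Below is a plain-Scala sketch (no Flink dependency) of the deletion condition for time windows, modeled on the cleanup time Flink's `WindowOperator` uses. `WindowLifecycle` and its `TimeWindow` are hypothetical stand-ins, not Flink's classes.

```scala
// Plain-Scala sketch of when a time window [start, end) is deleted (no Flink involved).
object WindowLifecycle {
  final case class TimeWindow(start: Long, end: Long) {
    def maxTimestamp: Long = end - 1 // last millisecond that still belongs to the window
  }

  // Watermark value at which the window's state is cleaned up
  def cleanupTime(w: TimeWindow, allowedLateness: Long): Long =
    w.maxTimestamp + allowedLateness

  def isDeleted(w: TimeWindow, allowedLateness: Long, watermark: Long): Boolean =
    watermark >= cleanupTime(w, allowedLateness)
}
```

For a window [0 s, 10 s) with 3 seconds of allowed lateness, the state survives a watermark of 12998 ms. It is removed at 12999 ms.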

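5. Window functions

5.1 ReduceFunction and ProcessWindowFunction

- ReduceFunction aggregates incrementally: each arriving element is combined with the current result

- ProcessWindowFunction receives all elements of one key's window at once. In exchange, it can access the window metadata through its Context, as well as the RuntimeContext

- When the two are combined, ReduceFunction passes its aggregation result to ProcessWindowFunction, whose Iterable then contains only that single pre-aggregated element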

```scala
package datastreamApi

import org.apache.commons.lang3.time.FastDateFormat
import org.apache.flink.api.common.eventtime._
import org.apache.flink.streaming.api.scala.function.ProcessWindowFunction
import org.apache.flink.streaming.api.scala.{DataStream, StreamExecutionEnvironment, createTypeInformation}
import org.apache.flink.streaming.api.windowing.assigners.TumblingEventTimeWindows
import org.apache.flink.streaming.api.windowing.time.Time
import org.apache.flink.streaming.api.windowing.windows.TimeWindow
import org.apache.flink.util.Collector

class RecordTimestampAssigner extends TimestampAssigner[(String, Int, String)] {
  val fdf = FastDateFormat.getInstance("yyyy-MM-dd HH:mm:ss")

  override def extractTimestamp(element: (String, Int, String), recordTimestamp: Long): Long = {
    fdf.parse(element._3).getTime
  }
}

class PeriodWatermarkGenerator extends WatermarkGenerator[(String, Int, String)] {
  val fdf = FastDateFormat.getInstance("yyyy-MM-dd HH:mm:ss")
  var maxTimestamp: Long = _
  val maxOutofOrderness = 0

  override def onEvent(event: (String, Int, String), eventTimestamp: Long, output: WatermarkOutput): Unit = {
    maxTimestamp = math.max(fdf.parse(event._3).getTime, maxTimestamp)
  }

  override def onPeriodicEmit(output: WatermarkOutput): Unit = {
    output.emitWatermark(new Watermark(maxTimestamp - maxOutofOrderness - 1))
  }
}

class MyWatermarkStrategy extends WatermarkStrategy[(String, Int, String)] {
  override def createTimestampAssigner(context: TimestampAssignerSupplier.Context): TimestampAssigner[(String, Int, String)] = {
    new RecordTimestampAssigner()
  }

  override def createWatermarkGenerator(context: WatermarkGeneratorSupplier.Context): WatermarkGenerator[(String, Int, String)] = {
    new PeriodWatermarkGenerator()
  }
}

object WindowTest {
  def main(args: Array[String]): Unit = {
    val senv = StreamExecutionEnvironment.getExecutionEnvironment

    val input = senv.fromElements(
      ("A", 10, "2021-09-08 01:00:00"),
      ("A", 20, "2021-09-08 02:00:00"),
      ("A", 30, "2021-09-08 03:00:00"),
      ("B", 100, "2021-09-08 01:00:00"),
      ("B", 200, "2021-09-08 02:00:00"),
      ("B", 300, "2021-09-08 03:00:00")
    ).assignTimestampsAndWatermarks(new MyWatermarkStrategy())

    val result: DataStream[String] = input.keyBy(_._1)
      .window(TumblingEventTimeWindows.of(Time.days(1L), Time.hours(-8L)))
      .reduce(
        // Incremental aggregation: sum the values and keep the latest datetime
        (x1: (String, Int, String), x2: (String, Int, String)) => {
          val datetime1 = x1._3
          val datetime2 = x2._3
          val datetime = if (datetime1 > datetime2) datetime1 else datetime2
          (x1._1, x1._2 + x2._2, datetime)
        },
        // Receives the single pre-aggregated element together with the window metadata
        new ProcessWindowFunction[(String, Int, String), String, String, TimeWindow] {
          override def process(key: String, context: Context, elements: Iterable[(String, Int, String)], out: Collector[String]): Unit = {
            val element_str = elements.mkString(", ")
            out.collect(s"=========Window information: ${context.window}========${element_str}=====")
          }
        }
      )

    result.print("result")
    senv.execute("WindowTest")
  }
}
```

Execution results:

```text
result:2> =========Window information: TimeWindow{start=1631030400000, end=1631116800000}========(B,600,2021-09-08 03:00:00)=====
result:7> =========Window information: TimeWindow{start=1631030400000, end=1631116800000}========(A,60,2021-09-08 03:00:00)=====
```
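The program above keeps only one running tuple per key and window, because the ReduceFunction folds each element in as it arrives. The plain-Scala sketch below (no Flink dependency; class names are hypothetical) contrasts that with buffering every element, which is what a ProcessWindowFunction used on its own has to do.

```scala
import scala.collection.mutable.ArrayBuffer

// Plain-Scala sketch of window state under the two styles (no Flink involved).
object WindowState {
  // Incremental (ReduceFunction / AggregateFunction style): one value per window
  final class IncrementalSum {
    private var acc = 0L
    def add(v: Long): Unit = acc += v
    def storedValues: Int = 1
    def result: Long = acc
  }

  // Full (ProcessWindowFunction alone): every element is kept until the window fires
  final class BufferedSum {
    private val buffer = ArrayBuffer.empty[Long]
    def add(v: Long): Unit = buffer += v
    def storedValues: Int = buffer.size
    def result: Long = buffer.sum
  }
}
```

Both produce the same sum. After 1000 elements, however, the incremental version still stores one value while the buffered one stores 1000.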

5.2 AggregateFunction and ProcessWindowFunction

- AggregateFunction aggregates incrementally

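- ProcessWindowFunction receives all elements of one key's window at once. In exchange, it can access the window metadata through its Context, as well as the RuntimeContext

- AggregateFunction passes its aggregation result to ProcessWindowFunction, whose Iterable then contains only that single element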

```scala
package datastreamApi

import org.apache.commons.lang3.time.FastDateFormat
import org.apache.flink.api.common.eventtime._
import org.apache.flink.api.common.functions.AggregateFunction
import org.apache.flink.streaming.api.scala.function.ProcessWindowFunction
import org.apache.flink.streaming.api.scala.{DataStream, StreamExecutionEnvironment, createTypeInformation}
import org.apache.flink.streaming.api.windowing.assigners.TumblingEventTimeWindows
import org.apache.flink.streaming.api.windowing.time.Time
import org.apache.flink.streaming.api.windowing.windows.TimeWindow
import org.apache.flink.util.Collector

class RecordTimestampAssigner extends TimestampAssigner[(String, Int, String)] {
  val fdf = FastDateFormat.getInstance("yyyy-MM-dd HH:mm:ss")

  override def extractTimestamp(element: (String, Int, String), recordTimestamp: Long): Long = {
    fdf.parse(element._3).getTime
  }
}

class PeriodWatermarkGenerator extends WatermarkGenerator[(String, Int, String)] {
  val fdf = FastDateFormat.getInstance("yyyy-MM-dd HH:mm:ss")
  var maxTimestamp: Long = _
  val maxOutofOrderness = 0

  override def onEvent(event: (String, Int, String), eventTimestamp: Long, output: WatermarkOutput): Unit = {
    maxTimestamp = math.max(fdf.parse(event._3).getTime, maxTimestamp)
  }

  override def onPeriodicEmit(output: WatermarkOutput): Unit = {
    output.emitWatermark(new Watermark(maxTimestamp - maxOutofOrderness - 1))
  }
}

class MyWatermarkStrategy extends WatermarkStrategy[(String, Int, String)] {
  override def createTimestampAssigner(context: TimestampAssignerSupplier.Context): TimestampAssigner[(String, Int, String)] = {
    new RecordTimestampAssigner()
  }

  override def createWatermarkGenerator(context: WatermarkGeneratorSupplier.Context): WatermarkGenerator[(String, Int, String)] = {
    new PeriodWatermarkGenerator()
  }
}

// Input, accumulator and output are all (key, sum of values, latest datetime)
class MyAggregateFunction extends AggregateFunction[(String, Int, String), (String, Int, String), (String, Int, String)] {
  def maxDatetime(datetime1: String, datetime2: String) = {
    if (datetime1 > datetime2) datetime1 else datetime2
  }

  override def createAccumulator(): (String, Int, String) = {
    ("", 0, "0000-00-00 00:00:00")
  }

  override def add(in: (String, Int, String), acc: (String, Int, String)): (String, Int, String) = {
    (in._1, in._2 + acc._2, maxDatetime(in._3, acc._3))
  }

  override def merge(acc1: (String, Int, String), acc2: (String, Int, String)): (String, Int, String) = {
    (acc1._1, acc1._2 + acc2._2, maxDatetime(acc1._3, acc2._3))
  }

  override def getResult(acc: (String, Int, String)): (String, Int, String) = {
    acc
  }
}

object WindowTest {
  def main(args: Array[String]): Unit = {
    val senv = StreamExecutionEnvironment.getExecutionEnvironment

    val input = senv.fromElements(
      ("A", 10, "2021-09-08 01:00:00"),
      ("A", 20, "2021-09-08 02:00:00"),
      ("A", 30, "2021-09-08 03:00:00"),
      ("B", 100, "2021-09-08 01:00:00"),
      ("B", 200, "2021-09-08 02:00:00"),
      ("B", 300, "2021-09-08 03:00:00")
    ).assignTimestampsAndWatermarks(new MyWatermarkStrategy())

    val result: DataStream[String] = input.keyBy(_._1)
      .window(TumblingEventTimeWindows.of(Time.days(1L), Time.hours(-8L)))
      .aggregate(new MyAggregateFunction(),
        // Receives the single aggregated element together with the window metadata
        new ProcessWindowFunction[(String, Int, String), String, String, TimeWindow] {
          override def process(key: String, context: Context, elements: Iterable[(String, Int, String)], out: Collector[String]): Unit = {
            val element_str = elements.mkString(", ")
            out.collect(s"=========Window information: ${context.window}========${element_str}=====")
          }
        }
      )

    result.print("result")
    senv.execute("WindowTest")
  }
}
```

Execution results:

```text
result:2> =========Window information: TimeWindow{start=1631030400000, end=1631116800000}========(B,600,2021-09-08 03:00:00)=====
result:7> =========Window information: TimeWindow{start=1631030400000, end=1631116800000}========(A,60,2021-09-08 03:00:00)=====
```

6. Processing late data

The data sent through ncat is as follows:

```shell
[root@bigdata005 ~]# nc -lk 9998
A,10,2021-09-08 01:00:05
A,20,2021-09-08 01:00:06
A,30,2021-09-08 01:00:12
A,40,2021-09-08 01:00:07
A,30,2021-09-08 01:00:13
A,40,2021-09-08 01:00:08
```

The sample program is as follows:

```scala
package datastreamApi

import org.apache.commons.lang3.time.FastDateFormat
import org.apache.flink.api.common.eventtime._
import org.apache.flink.streaming.api.scala.{DataStream, OutputTag, StreamExecutionEnvironment, createTypeInformation}
import org.apache.flink.streaming.api.windowing.assigners.TumblingEventTimeWindows
import org.apache.flink.streaming.api.windowing.time.Time

class RecordTimestampAssigner extends TimestampAssigner[(String, Int, String)] {
  val fdf = FastDateFormat.getInstance("yyyy-MM-dd HH:mm:ss")

  override def extractTimestamp(element: (String, Int, String), recordTimestamp: Long): Long = {
    fdf.parse(element._3).getTime
  }
}

class PeriodWatermarkGenerator extends WatermarkGenerator[(String, Int, String)] {
  val fdf = FastDateFormat.getInstance("yyyy-MM-dd HH:mm:ss")
  var maxTimestamp: Long = _
  val maxOutofOrderness = 0

  override def onEvent(event: (String, Int, String), eventTimestamp: Long, output: WatermarkOutput): Unit = {
    maxTimestamp = math.max(fdf.parse(event._3).getTime, maxTimestamp)
  }

  override def onPeriodicEmit(output: WatermarkOutput): Unit = {
    output.emitWatermark(new Watermark(maxTimestamp - maxOutofOrderness - 1))
  }
}

class MyWatermarkStrategy extends WatermarkStrategy[(String, Int, String)] {
  override def createTimestampAssigner(context: TimestampAssignerSupplier.Context): TimestampAssigner[(String, Int, String)] = {
    new RecordTimestampAssigner()
  }

  override def createWatermarkGenerator(context: WatermarkGeneratorSupplier.Context): WatermarkGenerator[(String, Int, String)] = {
    new PeriodWatermarkGenerator()
  }
}

object WindowTest {
  def main(args: Array[String]): Unit = {
    val senv = StreamExecutionEnvironment.getExecutionEnvironment

    // Each line looks like "A,10,2021-09-08 01:00:05"
    val input = senv.socketTextStream("192.168.23.51", 9998)
      .map(line => {
        val words = line.split(",")
        (words(0), words(1).toInt, words(2))
      })
      .assignTimestampsAndWatermarks(new MyWatermarkStrategy())
      .setParallelism(1) // a single watermark generator, see the explanation below

    val myOutputTag = new OutputTag[(String, Int, String)]("my_output_tag")

    val result = input.keyBy(_._1)
      .window(TumblingEventTimeWindows.of(Time.seconds(10L)))
      .allowedLateness(Time.seconds(3L))  // keep each window for 3 more seconds of event time
      .sideOutputLateData(myOutputTag)    // elements arriving after that go to the side output
      .sum(1)

    result.print("result")

    val output_result: DataStream[(String, Int, String)] = result.getSideOutput(myOutputTag)
    output_result.print("output_result")

    senv.execute("WindowTest")
  }
}
```

The results are as follows:

```text
result:7> (A,30,2021-09-08 01:00:05)
result:7> (A,70,2021-09-08 01:00:05)
output_result:7> (A,40,2021-09-08 01:00:08)
```

The program works as follows:

- The parallelism of assignTimestampsAndWatermarks is set to 1 because the default parallelism of senv is 8 here. With parallelism 8, subtasks that never receive an element keep emitting their initial watermark (-1 with this generator, because maxTimestamp starts at 0). A downstream operator uses the minimum watermark across all its inputs, so its watermark never advances and no window is ever triggered.

- When the watermark is greater than or equal to (endTimestamp - 1) of window A, the window is triggered for calculation.

- While the watermark is still less than (endTimestamp + allowedLateness - 1) of window A, a new element that falls into window A is added to it, and the window is triggered again. The same window therefore emits an updated result, here (A,30,...) followed by (A,70,...). Downstream consumers must deduplicate these results or treat them as updates.

- Once the watermark is greater than or equal to (endTimestamp + allowedLateness - 1) of window A, window A is deleted. Any new element that falls into window A is then emitted through sideOutputLateData.
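The rules above can be replayed in plain Scala (no Flink dependency) for the single key A. The sketch makes these assumptions:

- 10-second tumbling windows and 3 seconds of allowed lateness.
- watermark = largest timestamp seen - 1.
- The watermark advances right after each element. Flink emits it periodically, which gives the same result when lines are typed in slowly.
- Timestamps are milliseconds relative to 01:00:00, so 5000 stands for 01:00:05.

`LateDataSim` and its case classes are hypothetical names.

```scala
// Plain-Scala replay of the late-data rules for one key (no Flink involved).
object LateDataSim {
  sealed trait Out
  final case class Fired(windowStart: Long, sum: Int) extends Out // window result (re)emitted
  final case class Late(value: Int, ts: Long) extends Out         // sent to the side output

  def run(events: Seq[(Int, Long)], size: Long, lateness: Long): List[Out] = {
    var watermark = Long.MinValue
    var windows = Map.empty[Long, Int] // window start -> running sum
    val out = List.newBuilder[Out]
    for ((value, ts) <- events) {
      val start = ts - Math.floorMod(ts, size)
      val maxTimestamp = start + size - 1
      if (maxTimestamp + lateness <= watermark) out += Late(value, ts) // window already deleted
      else {
        val sum = windows.getOrElse(start, 0) + value
        windows = windows.updated(start, sum)
        // The watermark already passed this window's end: fire again immediately
        if (maxTimestamp <= watermark) out += Fired(start, sum)
      }
      val newWatermark = math.max(watermark, ts - 1)
      // Event-time firing: windows whose maxTimestamp the watermark has just reached
      for ((s, sum) <- windows.toSeq.sortBy(_._1)) {
        val mt = s + size - 1
        if (mt > watermark && mt <= newWatermark) out += Fired(s, sum)
      }
      watermark = newWatermark
      // Cleanup: drop windows once the watermark reaches maxTimestamp + lateness
      windows = windows.filter { case (s, _) => s + size - 1 + lateness > watermark }
    }
    out.result()
  }
}
```

Fed with the six ncat lines, the replay yields the same three outputs as the program: sums 30 and 70 for the first window, then 40 (at 01:00:08) in the side output.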

Notes on late data accepted through allowedLateness:

- For session windows, a late element can fill the gap between two existing sessions. The sessions are then merged and the merged window is recomputed.

7. Processing window results

- The result returned by a window function is again a DataStream. The timestamp of each of its elements is set to the window's maxTimestamp, that is, (endTimestamp - 1)

- Therefore, when windows are chained, the size of the downstream window should preferably be the same as, or a multiple of, the size of the upstream window. For example, use window(TumblingEventTimeWindows.of(Time.seconds(10L))) downstream after window(TumblingEventTimeWindows.of(Time.seconds(10L))) upstream

- When estimating how much data a window holds, consider the following:

  - For sliding windows, an element that belongs to 3 windows is copied 3 times, once per window

  - The size of each window's state

  - Whether the window function aggregates incrementally (ReduceFunction, AggregateFunction) or calculates over all buffered elements (ProcessWindowFunction on its own)
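Why the downstream window size matters can be shown with the timestamp rule alone. Below is a plain-Scala sketch (no Flink dependency; `ChainedWindows` and `downstreamOf` are hypothetical names). Each upstream tumbling-window result is stamped end - 1, and the sketch computes which downstream tumbling window that stamp lands in.

```scala
// Plain-Scala sketch: routing of upstream window results (timestamp end - 1) to downstream windows.
object ChainedWindows {
  def windowStart(ts: Long, size: Long): Long = ts - Math.floorMod(ts, size)

  // Upstream window start -> start of the downstream window its result falls into
  def downstreamOf(upStarts: Seq[Long], upSize: Long, downSize: Long): Map[Long, Long] =
    upStarts.map(s => s -> windowStart(s + upSize - 1, downSize)).toMap
}
```

With 10-second upstream windows and a 20-second downstream window, each downstream window collects exactly two upstream results. With a 15-second downstream window, [0 s, 15 s) receives only the result of [0 s, 10 s), while [15 s, 30 s) receives the results for [10 s, 30 s). The downstream windows then no longer cover the time ranges their bounds suggest.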