Part I: [embedded AI Development & Maxim Part IV] maxim78000 Evaluation Kit AI actual combat development II Describes the use of Maxim78000 Evaluation Kit is the whole process of development and actual combat.

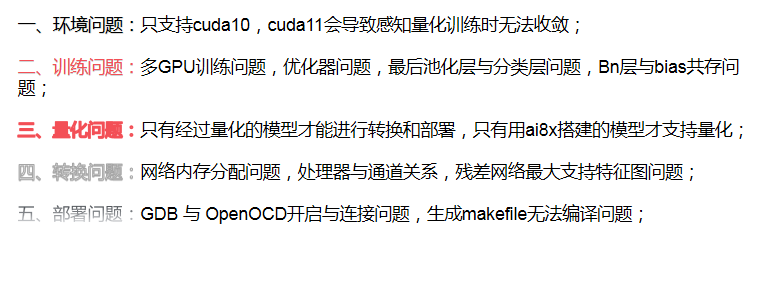

Based on the problems encountered in the development practice and the key and difficult points, this paper makes a brief summary, and some specific solutions are also introduced above. It mainly includes five aspects:

1. Environmental aspects

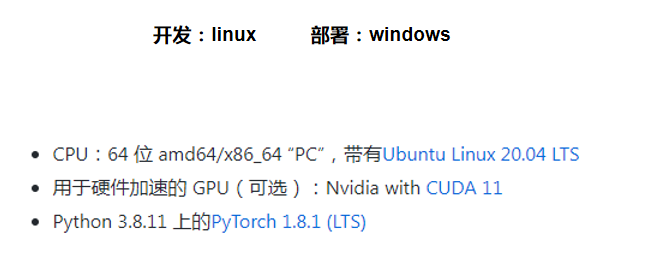

Recommended environment configuration:

However, Meixin's model quantization tool does not seem to support cuda11. When the training reaches the start of quantization_ During epoch, the training accuracy decreases rapidly. Therefore, cuda10 is recommended for GPUA acceleration.

2. Training issues

2.1 model problems

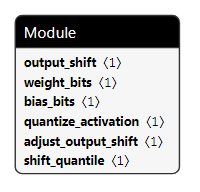

Although Meixin also carries out model development and deployment based on PyTorch, the CNN template library used has been rewritten and the design supporting quantification and MAX78000 deployment has been added. Therefore, to develop your own AI model, you first need to rewrite the AI model according to the PyTorch class customized by Meixin. Any design for the MAX78000 All models running on should use these classes. There are three main changes between ai8x.py and the default class torch.nn.Module (as follows):

-

Additional "fusion" operations for pooling and activating layers in the model;

-

Rounding and clipping matched with hardware;

-

Quantization operations are supported (when using the - 8 command line parameter).

Because of these special designs, it seems that the model does not support global pooling before the full connection layer, and the characteristic graph of the convolution of the last layer cannot be too small. It will cause the problem of non convergence.

2.2 optimizer problems

Due to the existence of perceptual quantization training, it is more difficult to train the model. Therefore, for the more complex tasks, it is recommended to use Adam optimizer and train with a smaller learning rate. (SGD parameter adjustment is not good and cannot converge)

2.3 training issues

In addition, there will be bug s in the model during multi GPU training, resulting in the problem of low test accuracy during evaluation. Please refer to the specific solutions [embedded AI Development & Meixin problem part I] Maxim78000 AI actual combat development - there is a big gap between training and test evaluation accuracy , the first one is recommended.

three Quantitative problem

There are two main methods of quantization - quantization perception training (recommended, enabled by default) and quantization after training.

Quantitative perception training is a better method. It is enabled by default. QAT learns other parameters that help to quantify during training. You need to enter checkpoint of quantify. Py., or qat_best.pth.tar, the best checkpoint in QAT period, or qat_checkpoint.pth.tar, the checkpoint in the final QAT period.

At present, only the models built in ai8x.py library support quantification, and only after quantification can they be correctly evaluated and deployed. nn.module library is not supported because there are bug s in the tool, which can be fixed by itself or quantified by external tools

https://github.com/pytorch/glow/blob/master/docs/Quantization.md,

https://github.com/ARM-software/ML-examples/tree/master/cmsisnn-cifar10,

https://github.com/ARM-software/ML-KWS-for-MCU/blob/master/Deployment/Quant_guide.md or

Distiller's method (Meixin is actually adjusting distiller's package)

4. Conversion issues

The most critical and error prone step in converting the model into c code is actually the memory and processor configuration. yaml file of the network.

Refer to the tutorial:

https://github.com/MaximIntegratedAI/MaximAI_Documentation/blob/master/Guides/YAML%20Quickstart.md

I also introduced it in detail in the previous article: [embedded AI Development & Maxim Part IV] maxim78000 Evaluation Kit AI actual combat development II

# Model: # def forward(self, x): # x = self.conv1(x) # x_res = self.conv2(x) # x = self.conv3(x_res) # x = self.add1(x, x_res) # x = self.conv4(x) # ... # Layer 0: self.conv1 = ai8x.FusedConv2dReLU(num_channels, 16, 3, stride=1, padding=1, bias=bias, **kwargs) - out_offset: 0x2000 processors: 0x7000000000000000 operation: conv2d kernel_size: 3x3 pad: 1 activate: ReLU data_format: HWC # Layer 1: self.conv2 = ai8x.FusedConv2dReLU(16, 20, 3, stride=1, padding=1, bias=bias, **kwargs) - out_offset: 0x0000 processors: 0x0ffff00000000000 operation: conv2d kernel_size: 3x3 pad: 1 activate: ReLU # Layer 2 - re-form layer 1 data with gap - out_offset: 0x2000 processors: 0x00000000000fffff output_processors: 0x00000000000fffff operation: passthrough write_gap: 1 # output is interleaved with 1 word gaps, i.e. 0x2000, 0x2008, ... # Layer 3: self.conv3 = ai8x.FusedConv2dReLU(20, 20, 3, stride=1, padding=1, bias=bias, **kwargs) - in_offset: 0x0000 # output of conv2, layer 1 out_offset: 0x2004 # start output from 0x2004 processors: 0x00000000000fffff operation: conv2d kernel_size: 3x3 pad: 1 activate: ReLU write_gap: 1 # output is interleaved with 1 word gap, i.e. 0x2004, 0x200C, ... # Layer 4: self.add1 = ai8x.Add() # self.conv4 = ai8x.FusedConv2dReLU(20, 20, 3, stride=1, padding=1, bias=bias, **kwargs) - in_sequences: [2, 3] # get input from layer 2 and 3 in_offset: 0x2000 # Layer 2 and 3 outputs are interleaved starting from 0x2000 out_offset: 0x0000 processors: 0x00000000000fffff eltwise: add # element-wise add from output of layer 2 and 3 executed in the same layer as conv4 operation: conv2d kernel_size: 3x3 pad: 1 activate: ReLU

five Deployment issues

GDB and OpenOCD are two tools mainly used for deployment. GDB is a server and OpenOCD is a code burning and debugging tool. GDB is used to guide OpenOCD for deployment.

Note that the makefile files generated under linux can only be made under linux. Therefore, if you want to make on windows and then deploy, you need to replace the makefile files of other examples. Because several files related to CNN are processed, others are the same.

6. More highlights

Embedded AI Development Series tutorial recommendation:

stm32:

Flathead:

Meixin:

[embedded AI Development & Maxim Part II] maxim78000 Evaluation Kit AI development environment

[embedded AI Development & Maxim Part IV] maxim78000 Evaluation Kit AI actual combat development II