Docker container network configuration

Creating network namespaces in the Linux kernel

ip netns command

The ip netns command handles most operations on network namespaces. It is part of the iproute package, which most distributions install by default; if it is missing, install it with the system package manager.

Note: root privileges (for example via sudo) are required for ip netns subcommands that change the network configuration.

To list the available subcommands, run ip netns help:

[root@localhost ~]# ip netns help

Usage: ip netns list

ip netns add NAME

ip netns attach NAME PID

ip netns set NAME NETNSID

ip [-all] netns delete [NAME]

ip netns identify [PID]

ip netns pids NAME

ip [-all] netns exec [NAME] cmd ...

ip netns monitor

ip netns list-id [target-nsid POSITIVE-INT] [nsid POSITIVE-INT]

On a fresh system there are no named network namespaces, so ip netns list prints nothing.

Create a Network Namespace

Create a namespace named lq0:

[root@localhost ~]# ip netns list

[root@localhost ~]# ip netns add lq0

[root@localhost ~]# ip netns list

lq0

Each newly created network namespace appears as a file in the /var/run/netns/ directory. If a namespace with the same name already exists, the command fails with Cannot create namespace file "/var/run/netns/lq0": File exists.

[root@localhost ~]# ls /var/run/netns/

lq0

[root@localhost ~]# ip netns add lq0

Cannot create namespace file "/var/run/netns/lq0": File exists
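Since ip netns add fails when the name is already taken, a setup script that may run more than once can check for the file under /var/run/netns/ first. The following is a minimal sketch, assuming bash and root privileges; ensure_netns, run and the DRY_RUN switch are hypothetical helpers, not part of iproute2.

```bash
#!/usr/bin/env bash
# Hypothetical helpers for this sketch (not part of iproute2).
# Real runs need root; DRY_RUN=1 only prints the commands.
run() { if [ "${DRY_RUN:-0}" = "1" ]; then printf '%s\n' "$*"; else "$@"; fi; }

# ensure_netns NAME: create network namespace NAME unless it already exists.
ensure_netns() {
  local name="$1"
  # "ip netns add" creates /var/run/netns/NAME, so if that file exists the
  # name is taken and a second add would fail with "File exists".
  if [ -e "/var/run/netns/$name" ]; then
    echo "namespace $name already exists, skipping" >&2
    return 0
  fi
  run ip netns add "$name"
}
```

Calling ensure_netns lq0 repeatedly is then harmless: only the first call creates the namespace.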

Each network namespace has its own network interfaces, routing table, ARP table, iptables rules, and other network resources.

Operating on a Network Namespace

The ip netns exec subcommand runs an arbitrary command inside the specified network namespace.

View the interfaces of the newly created namespace:

[root@localhost ~]# ip netns exec lq0 ip addr

1: lo: <LOOPBACK> mtu 65536 qdisc noop state DOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

A new namespace contains only the lo loopback interface, and it starts in the DOWN state. Pinging the loopback address at this point fails with Network unreachable:

[root@localhost ~]# ip netns exec lq0 ping 127.0.0.1

connect: Network unreachable

Bring the lo interface up and try again:

[root@localhost ~]# ip netns exec lq0 ip link set lo up

[root@localhost ~]# ip netns exec lq0 ping 127.0.0.1

PING 127.0.0.1 (127.0.0.1) 56(84) bytes of data.

64 bytes from 127.0.0.1: icmp_seq=1 ttl=64 time=0.032 ms

64 bytes from 127.0.0.1: icmp_seq=2 ttl=64 time=0.050 ms

64 bytes from 127.0.0.1: icmp_seq=3 ttl=64 time=0.028 ms

^C

--- 127.0.0.1 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 2056ms

rtt min/avg/max/mdev = 0.028/0.036/0.050/0.011 ms

[root@localhost ~]# ip netns exec lq0 ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

Moving devices between namespaces

Devices such as veth interfaces can be moved between network namespaces. Because a device belongs to exactly one network namespace at a time, it is no longer visible in the original namespace after the move.

veth devices can be moved this way, but many other device types (such as lo, vxlan, ppp, and bridge) cannot.
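A short sketch of moving a device into a namespace and back, assuming root privileges, an existing namespace lq0, and a veth device named veth0 in the host (root) namespace, created as in the next section. move_to_netns, move_back_to_host, run and DRY_RUN are hypothetical names. Inside ip netns exec, the argument netns 1 refers to the network namespace of PID 1, which is the usual way to hand a device back to the root namespace.

```bash
#!/usr/bin/env bash
# Hypothetical helpers for this sketch. Real runs need root;
# DRY_RUN=1 only prints the commands.
run() { if [ "${DRY_RUN:-0}" = "1" ]; then printf '%s\n' "$*"; else "$@"; fi; }

# move_to_netns DEV NS: move DEV from the current namespace into NS.
move_to_netns() {
  local dev="$1" ns="$2"
  run ip link set "$dev" netns "$ns"
}

# move_back_to_host DEV NS: move DEV from NS back to the root namespace.
move_back_to_host() {
  local dev="$1" ns="$2"
  # "netns 1" means "the network namespace of PID 1". ip netns exec only
  # switches the network namespace, so PID 1 is still the host's init.
  run ip netns exec "$ns" ip link set "$dev" netns 1
}
```

After either move, the device arrives in the target namespace DOWN and without its previous IP addresses, so it has to be configured again there.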

veth pair

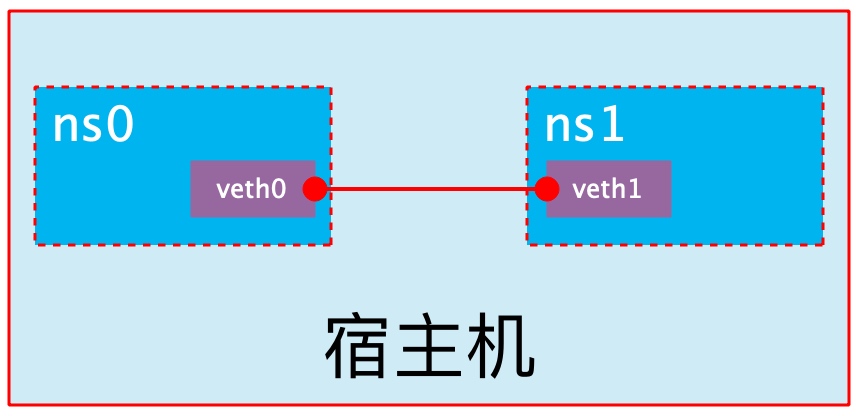

veth pair stands for Virtual Ethernet pair: two virtual network interfaces connected back to back. Every packet sent into one end comes out of the other end, and vice versa.

Because the two ends can live in different namespaces, a veth pair is the usual way to connect two network namespaces directly.

Create a veth pair

[root@localhost ~]# ip link add type veth

[root@localhost ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 00:0c:29:cc:68:c5 brd ff:ff:ff:ff:ff:ff

inet 192.168.240.40/24 brd 192.168.240.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet6 fe80::b0aa:5d3b:1352:b7cb/64 scope link noprefixroute

valid_lft forever preferred_lft forever

3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default

link/ether 02:42:51:41:82:fc brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

valid_lft forever preferred_lft forever

4: veth0@veth1: <BROADCAST,MULTICAST,M-DOWN> mtu 1500 qdisc noop state DOWN group default qlen 1000

link/ether aa:07:34:bd:2f:36 brd ff:ff:ff:ff:ff:ff

5: veth1@veth0: <BROADCAST,MULTICAST,M-DOWN> mtu 1500 qdisc noop state DOWN group default qlen 1000

link/ether f6:96:64:4e:59:d2 brd ff:ff:ff:ff:ff:ff

Since no names were given, the kernel created a veth pair named veth0 and veth1; the suffixes veth0@veth1 and veth1@veth0 show each end's peer. Both ends are still DOWN.

Enable communication between network namespaces

Next, use the veth pair to let two network namespaces communicate. lq0 already exists, so create a second namespace named lq1:

[root@localhost ~]# ip netns add lq1

[root@localhost ~]# ip netns list

lq1

lq0

Move veth0 into lq0 and veth1 into lq1:

[root@localhost ~]# ip link set veth0 netns lq0

[root@localhost ~]# ip link set veth1 netns lq1

Then assign an IP address to each end and bring the interfaces up (lo in lq0 is already up from the earlier step):

[root@localhost ~]# ip netns exec lq0 ip link set veth0 up

[root@localhost ~]# ip netns exec lq0 ip addr add 192.168.240.10/24 dev veth0

[root@localhost ~]# ip netns exec lq1 ip link set lo up

[root@localhost ~]# ip netns exec lq1 ip link set veth1 up

[root@localhost ~]# ip netns exec lq1 ip addr add 192.168.240.20/24 dev veth1
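The manual steps so far can be collected into one script. This is a sketch under stated assumptions: root privileges, and a host where neither namespace nor any veth0/veth1 interface exists yet (in the walkthrough lq0 already exists, so pick fresh names when trying it). connect_netns, run and DRY_RUN are hypothetical. Unlike the text, it names both ends explicitly with ip link add veth0 type veth peer name veth1 instead of relying on automatic names.

```bash
#!/usr/bin/env bash
# Hypothetical sketch: connect two new network namespaces with a veth pair.
# Real runs need root; DRY_RUN=1 only prints the commands.
run() { if [ "${DRY_RUN:-0}" = "1" ]; then printf '%s\n' "$*"; else "$@"; fi; }

# connect_netns NS_A NS_B ADDR_A ADDR_B  (addresses in CIDR form, e.g. 10.0.0.1/24)
connect_netns() {
  local ns_a="$1" ns_b="$2" addr_a="$3" addr_b="$4"
  local if_a=veth0 if_b=veth1   # same interface names as in the text

  run ip netns add "$ns_a"
  run ip netns add "$ns_b"
  # Create the pair with explicit names, then move one end into each namespace.
  run ip link add "$if_a" type veth peer name "$if_b"
  run ip link set "$if_a" netns "$ns_a"
  run ip link set "$if_b" netns "$ns_b"

  # Inside each namespace: loopback up, address on the veth end, veth end up.
  run ip netns exec "$ns_a" ip link set lo up
  run ip netns exec "$ns_a" ip addr add "$addr_a" dev "$if_a"
  run ip netns exec "$ns_a" ip link set "$if_a" up
  run ip netns exec "$ns_b" ip link set lo up
  run ip netns exec "$ns_b" ip addr add "$addr_b" dev "$if_b"
  run ip netns exec "$ns_b" ip link set "$if_b" up
}
```

For example, connect_netns lq0 lq1 192.168.240.10/24 192.168.240.20/24 reproduces the setup above on a clean host, after which ip netns exec lq1 ping -c 3 192.168.240.10 should succeed. Because the namespaces are isolated from the host, reusing the host's 192.168.240.0/24 subnet works, although a separate private subnet avoids confusion.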

Check the state of both ends:

lq0

[root@localhost ~]# ip netns exec lq0 ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

4: veth0@if5: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether aa:07:34:bd:2f:36 brd ff:ff:ff:ff:ff:ff link-netns lq1

inet 192.168.240.10/24 scope global veth0

valid_lft forever preferred_lft forever

inet6 fe80::a807:34ff:febd:2f36/64 scope link

valid_lft forever preferred_lft forever

lq1

[root@localhost ~]# ip netns exec lq1 ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

5: veth1@if4: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether f6:96:64:4e:59:d2 brd ff:ff:ff:ff:ff:ff link-netns lq0

inet 192.168.240.20/24 scope global veth1

valid_lft forever preferred_lft forever

inet6 fe80::f496:64ff:fe4e:59d2/64 scope link

valid_lft forever preferred_lft forever

Both ends are now UP and have their addresses. In the output, veth0@if5 and veth1@if4 give the interface index of each end's peer, and link-netns names the namespace the peer lives in. Now ping lq0's address from lq1:

[root@localhost ~]# ip netns exec lq1 ping 192.168.240.10

PING 192.168.240.10 (192.168.240.10) 56(84) bytes of data.

64 bytes from 192.168.240.10: icmp_seq=1 ttl=64 time=0.040 ms

64 bytes from 192.168.240.10: icmp_seq=2 ttl=64 time=0.032 ms

^C

--- 192.168.240.10 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1048ms

rtt min/avg/max/mdev = 0.032/0.036/0.040/0.004 ms

The veth pair successfully connects the two network namespaces.
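When the experiment is over, the namespaces can be removed again. A minimal sketch, assuming root privileges; teardown_netns, run and DRY_RUN are hypothetical names. Deleting a namespace destroys the veth end inside it once no process is still running in that namespace, and removing one end of a veth pair removes its peer as well.

```bash
#!/usr/bin/env bash
# Hypothetical sketch: remove network namespaces created for testing.
# Real runs need root; DRY_RUN=1 only prints the commands.
run() { if [ "${DRY_RUN:-0}" = "1" ]; then printf '%s\n' "$*"; else "$@"; fi; }

# teardown_netns NS...: delete each named namespace.
teardown_netns() {
  local ns
  for ns in "$@"; do
    # Once no process uses the namespace any more, its veth end is destroyed,
    # and the peer end in the other namespace disappears with it.
    run ip netns delete "$ns"
  done
}
```

teardown_netns lq0 lq1 cleans up this walkthrough; the ip -all netns delete form listed in the help output removes every named namespace at once.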

Renaming a veth device

[root@localhost ~]# ip netns exec lq0 ip link set veth0 down

[root@localhost ~]# ip netns exec lq0 ip link set dev veth0 name eth0

## ifconfig is provided by the net-tools package, which may need to be installed first

[root@localhost ~]# yum -y install net-tools

[root@localhost ~]# ip netns exec lq0 ifconfig -a

eth0: flags=4098<BROADCAST,MULTICAST> mtu 1500

inet 192.168.240.10 netmask 255.255.255.0 broadcast 0.0.0.0

ether aa:07:34:bd:2f:36 txqueuelen 1000 (Ethernet)

RX packets 19 bytes 1426 (1.3 KiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 19 bytes 1426 (1.3 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536

inet 127.0.0.1 netmask 255.0.0.0

inet6 ::1 prefixlen 128 scopeid 0x10<host>

loop txqueuelen 1000 (Local Loopback)

RX packets 6 bytes 504 (504.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 6 bytes 504 (504.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0
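The ifconfig output shows eth0 without the UP flag, because it was taken down for the rename. A sketch of the full sequence, assuming root privileges; rename_in_netns, run and DRY_RUN are hypothetical names. It brings the interface back up and uses ip addr, so net-tools is not required.

```bash
#!/usr/bin/env bash
# Hypothetical sketch: rename an interface that lives inside a namespace.
# Real runs need root; DRY_RUN=1 only prints the commands.
run() { if [ "${DRY_RUN:-0}" = "1" ]; then printf '%s\n' "$*"; else "$@"; fi; }

# rename_in_netns NS OLD NEW
rename_in_netns() {
  local ns="$1" old="$2" new="$3"
  # The kernel normally refuses to rename an interface that is UP,
  # hence the down -> rename -> up sequence.
  run ip netns exec "$ns" ip link set dev "$old" down
  run ip netns exec "$ns" ip link set dev "$old" name "$new"
  run ip netns exec "$ns" ip link set dev "$new" up
  # iproute2 shows the result too, so net-tools (ifconfig) is optional.
  run ip netns exec "$ns" ip addr show dev "$new"
}
```

The IP address assigned earlier survives the rename, as the inet line in the ifconfig output above shows.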

Configuring the four network modes

bridge mode configuration

[root@localhost ~]# docker run -it --name test1 --rm centos

[root@81876c60c189 /]# ifconfig

eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 172.17.0.2 netmask 255.255.0.0 broadcast 172.17.255.255

ether 02:42:ac:11:00:02 txqueuelen 0 (Ethernet)

RX packets 1905 bytes 12696429 (12.1 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 1420 bytes 81744 (79.8 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536

inet 127.0.0.1 netmask 255.0.0.0

loop txqueuelen 1000 (Local Loopback)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

## Passing --network bridge when creating a container has the same effect as omitting the --network option

[root@localhost ~]# docker run -it --name test1 --network bridge --rm centos

[root@a791be8ff1ed /]# ifconfig

eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 172.17.0.2 netmask 255.255.0.0 broadcast 172.17.255.255

ether 02:42:ac:11:00:02 txqueuelen 0 (Ethernet)

RX packets 2083 bytes 14602033 (13.9 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 1659 bytes 96478 (94.2 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536

inet 127.0.0.1 netmask 255.0.0.0

loop txqueuelen 1000 (Local Loopback)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0
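To confirm which containers sit on the default bridge network and which addresses they received, docker network inspect can be filtered with a Go template. A sketch, assuming Docker is installed and usable by the caller; list_network_containers, run and DRY_RUN are hypothetical helpers.

```bash
#!/usr/bin/env bash
# Hypothetical sketch; needs access to the Docker daemon.
# DRY_RUN=1 only prints the command.
run() { if [ "${DRY_RUN:-0}" = "1" ]; then printf '%s\n' "$*"; else "$@"; fi; }

# list_network_containers [NETWORK]: one "name address" line per container.
list_network_containers() {
  local net="${1:-bridge}"   # "bridge" is the default network (docker0)
  # .Containers maps container IDs to endpoint data such as Name and IPv4Address.
  run docker network inspect \
    --format '{{range .Containers}}{{.Name}} {{.IPv4Address}}{{"\n"}}{{end}}' "$net"
}
```

With test1 from above running, list_network_containers should print one line with the container name and its address including the prefix length, such as 172.17.0.2/16.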

none mode configuration

[root@localhost ~]# docker run -it --name t1 --network none --rm busybox

/ # ifconfig -a

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

UP LOOPBACK RUNNING MTU:65536 Metric:1

RX packets:0 errors:0 dropped:0 overruns:0 frame:0

TX packets:0 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:0 (0.0 B) TX bytes:0 (0.0 B)

container mode configuration

Start the first container

[root@localhost ~]# docker run -it --name test1 --rm centos

[root@461f4cb58c9c /]# ifconfig

eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 172.17.0.2 netmask 255.255.0.0 broadcast 172.17.255.255

ether 02:42:ac:11:00:02 txqueuelen 0 (Ethernet)

RX packets 1991 bytes 12700989 (12.1 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 1571 bytes 89898 (87.7 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536

inet 127.0.0.1 netmask 255.0.0.0

loop txqueuelen 1000 (Local Loopback)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

Start the second container

[root@localhost ~]# docker run -it --name test2 --rm centos

[root@8d4e2eb289d2 /]# ifconfig

eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 172.17.0.3 netmask 255.255.0.0 broadcast 172.17.255.255

ether 02:42:ac:11:00:03 txqueuelen 0 (Ethernet)

RX packets 1567 bytes 12678005 (12.0 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 1340 bytes 77424 (75.6 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536

inet 127.0.0.1 netmask 255.0.0.0

loop txqueuelen 1000 (Local Loopback)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

The container named test2 received the IP address 172.17.0.3, which differs from test1's address, so the two containers do not share a network. If test2 is started in container mode instead, it uses the same IP address as test1: the network is shared, but the file system is not.

[root@localhost ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

461f4cb58c9c centos "/bin/bash" 4 minutes ago Up 4 minutes test1

[root@localhost ~]# docker run -it --name test2 --network container:test1 --rm centos

[root@461f4cb58c9c /]# ifconfig

eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 172.17.0.2 netmask 255.255.0.0 broadcast 172.17.255.255

ether 02:42:ac:11:00:02 txqueuelen 0 (Ethernet)

RX packets 5044 bytes 30513598 (29.1 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 4106 bytes 238432 (232.8 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536

inet 127.0.0.1 netmask 255.0.0.0

loop txqueuelen 1000 (Local Loopback)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

Now create a directory under /opt in the test1 container:

[root@461f4cb58c9c opt]# mkdir test

[root@461f4cb58c9c opt]# ls

test

Then list /opt in the test2 container:

[root@461f4cb58c9c opt]# ls

[root@461f4cb58c9c opt]#

The test directory is missing in test2, because the file systems stay isolated and only the network is shared.

Deploy a web site in the test2 container and access it from test1:

## Deploy a web site in test2

[root@461f4cb58c9c ~]# echo "me httpd" > www/index.html

[root@461f4cb58c9c ~]# httpd -h www/

[root@461f4cb58c9c ~]# netstat -antl

Active Internet connections (servers and established)

Proto Recv-Q Send-Q Local Address Foreign Address State

tcp 0 0 :::80 :::* LISTEN

## Access it from test1

[root@461f4cb58c9c ~]# curl 172.17.0.2

me httpd

[root@461f4cb58c9c ~]#

In container mode, the two containers relate to each other much like two processes on the same host.

In this mode, the new container shares the network namespace of an existing container instead of the host's. It does not get its own network interface or IP address; it shares the IP address, port range, and so on with the specified container. Everything other than the network, such as the file system and the process list, stays isolated between the two containers. Processes in the two containers can communicate through the shared lo interface.
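The container mode walkthrough can also be reproduced non-interactively with the small busybox image used for none mode. A sketch, assuming Docker can pull busybox and no container named test1 is running yet; demo_container_mode, run and DRY_RUN are hypothetical, and sleep 3600 only keeps the first container alive.

```bash
#!/usr/bin/env bash
# Hypothetical sketch; needs access to the Docker daemon.
# DRY_RUN=1 only prints the commands.
run() { if [ "${DRY_RUN:-0}" = "1" ]; then printf '%s\n' "$*"; else "$@"; fi; }

demo_container_mode() {
  # test1 owns the network namespace; sleep just keeps it running.
  run docker run -d --name test1 --rm busybox sleep 3600
  # A second container joins test1's namespace and prints the shared eth0.
  run docker run --rm --network container:test1 busybox ifconfig eth0
  # The same interface as seen from inside test1, for comparison.
  run docker exec test1 ifconfig eth0
}
```

Both ifconfig eth0 outputs should report the same IP and MAC address, since there is only one eth0 in the shared namespace. Because of --rm, docker stop test1 also removes the first container.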

host mode configuration

Specify host mode with --network host when starting the container:

[root@localhost ~]# docker run -it --name t1 --network host --rm centos

[root@localhost /]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 00:0c:29:cc:68:c5 brd ff:ff:ff:ff:ff:ff

inet 192.168.240.40/24 brd 192.168.240.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet6 fe80::b0aa:5d3b:1352:b7cb/64 scope link noprefixroute

valid_lft forever preferred_lft forever

3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default

link/ether 02:42:51:41:82:fc brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

valid_lft forever preferred_lft forever

inet6 fe80::42:51ff:fe41:82fc/64 scope link

valid_lft forever preferred_lft forever

If a web server is started in this container, it can be reached directly at the host's IP address (for example from a browser), without any port mapping.
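A sketch of host mode with the nginx image used later in this article, assuming its default command starts nginx on port 80, that port 80 on the host is free, and that the host IP is passed in (192.168.240.40 in this setup). demo_host_mode, run and DRY_RUN are hypothetical. No -p option is needed; in host mode Docker discards published ports and prints a warning if they are given.

```bash
#!/usr/bin/env bash
# Hypothetical sketch; needs access to the Docker daemon and a free port 80.
# DRY_RUN=1 only prints the commands.
run() { if [ "${DRY_RUN:-0}" = "1" ]; then printf '%s\n' "$*"; else "$@"; fi; }

# demo_host_mode HOST_IP
demo_host_mode() {
  local host_ip="$1"
  # No -p: nginx listens on port 80 directly in the host's network namespace.
  run docker run -d --name web --network host --rm best2001/nginx:v0.3
  # Give nginx a moment to start before sending a request.
  run sleep 2
  # Reached through the host address, like any other service on the host.
  run curl -s "http://$host_ip/"
}
```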

Common container network operations

View the host name of the container

[root@localhost ~]# docker run -it --name test1 --rm centos

[root@abb020e4587f /]# hostname

abb020e4587f

Set the hostname when the container starts

[root@localhost ~]# docker run -it --name test1 --hostname lql --rm centos

[root@lql /]# hostname

lql

[root@lql /]# cat /etc/hosts

127.0.0.1 localhost

::1 localhost ip6-localhost ip6-loopback

fe00::0 ip6-localnet

ff00::0 ip6-mcastprefix

ff02::1 ip6-allnodes

ff02::2 ip6-allrouters

172.17.0.2 lql

## A hostname-to-IP entry for the container is added automatically when --hostname is set

[root@lql /]# cat /etc/resolv.conf

# Generated by NetworkManager

nameserver 114.114.114.114

nameserver 8.8.8.8

## By default, the container uses the same DNS servers as the host

[root@lql /]# ping baidu.com

PING baidu.com (220.181.38.251) 56(84) bytes of data.

64 bytes from 220.181.38.251 (220.181.38.251): icmp_seq=1 ttl=127 time=111 ms

64 bytes from 220.181.38.251 (220.181.38.251): icmp_seq=2 ttl=127 time=144 ms

Manually specify the DNS to be used by the container

[root@localhost ~]# docker run -it --name test1 --hostname lql --dns 114.114.114.114 --rm centos

[root@lql /]# cat /etc/resolv.conf

nameserver 114.114.114.114

[root@lql /]# nslookup -type=a baidu.com

Server: 114.114.114.114

Address: 114.114.114.114#53

Non-authoritative answer:

Name: baidu.com

Address: 220.181.38.251

Name: baidu.com

Address: 220.181.38.148

Add a hostname-to-IP mapping to the container's /etc/hosts file

[root@localhost ~]# docker run -it --name test1 --hostname lql --add-host www.liu.com:110.110.110.110 --rm centos

[root@lql /]# cat /etc/hosts

127.0.0.1 localhost

::1 localhost ip6-localhost ip6-loopback

fe00::0 ip6-localnet

ff00::0 ip6-mcastprefix

ff02::1 ip6-allnodes

ff02::2 ip6-allrouters

110.110.110.110 www.liu.com

172.17.0.2 lql
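The three options can be combined in a single throwaway container that just prints the generated files and exits. A sketch, assuming Docker and the centos image used above; show_name_config, run and DRY_RUN are hypothetical names. Both --dns and --add-host may be repeated to add several entries.

```bash
#!/usr/bin/env bash
# Hypothetical sketch; needs access to the Docker daemon.
# DRY_RUN=1 only prints the command.
run() { if [ "${DRY_RUN:-0}" = "1" ]; then printf '%s\n' "$*"; else "$@"; fi; }

# show_name_config HOSTNAME DNS_SERVER NAME:IP
show_name_config() {
  local hostname="$1" dns="$2" extra_host="$3"
  # One-off container: apply all three options, print the generated files, exit.
  run docker run --rm --hostname "$hostname" --dns "$dns" \
    --add-host "$extra_host" centos \
    cat /etc/hostname /etc/hosts /etc/resolv.conf
}
```

For the values used in this section: show_name_config lql 114.114.114.114 www.liu.com:110.110.110.110.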

Publishing container ports

docker run provides the -p option to map ports of applications in the container to ports on the host, so that external hosts can reach those applications through a host port.

The -p option can be given multiple times. The container port it publishes should be a port that the application in the container actually listens on.

The -p option accepts these formats:

- -p <containerPort>: maps the container port to a dynamic port on all host addresses

- -p <hostPort>:<containerPort>: maps the container port to the specified host port

- -p <ip>::<containerPort>: maps the container port to a dynamic port on the specified host IP

- -p <ip>:<hostPort>:<containerPort>: maps the container port to the specified port on the specified host IP

A dynamic port is a random port chosen by Docker; use the docker port command to see the actual mapping.

[root@localhost ~]# docker run -d --name test --rm -p 80 best2001/nginx:v0.3

681e2161bdad77d2ef7b1937faf22f2f1082b4bf0874a1188c5a3b4f3e73a1b7

Because of -d, the container runs in the background and the command returns right after printing the container ID. Check which host port container port 80 was mapped to:

[root@localhost ~]# docker port test

80/tcp -> 0.0.0.0:49154

80/tcp -> :::49154

Container port 80 is published on host port 49154. Access this port on the host to check that the site in the container is reachable:

[root@localhost ~]# curl 192.168.240.40:49154

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

body {

width: 35em;

margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif;

}

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

Docker creates the corresponding iptables rules automatically when the container is created and removes them when the container is deleted.

Map the container port to a random port on a specific host IP

[root@localhost ~]# docker run -d --name test --rm -p 192.168.240.40::80 best2001/nginx:v0.3

defa708d33058efde55145fc85cd2c64a3c2f3c6ce3b9e83a6566953dfab61a2

[root@localhost ~]# docker port test

80/tcp -> 192.168.240.40:49153

Map the container port to the specified port of the host

[root@localhost ~]# docker run -d --name test --rm -p 80:80 best2001/nginx:v0.3

fdcd5347cc2f3ed31ba8d6ba0f46661acbb4a7dc2aa397a2758abc87360d1559

[root@localhost ~]# docker port test

80/tcp -> 0.0.0.0:80

80/tcp -> :::80
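Because dynamic ports change from run to run, scripts usually read them from docker port instead of hard-coding them. A minimal sketch, assuming awk is available; first_host_port is a hypothetical helper that reads docker port output on standard input and prints the host port of the first mapping.

```bash
#!/usr/bin/env bash
# first_host_port: hypothetical helper that reads "docker port" output on
# stdin and prints the host port of the first mapping it finds.
first_host_port() {
  # The host port is whatever follows the last ":" on a line, which also
  # works for IPv6 lines such as "80/tcp -> :::49154".
  awk -F: 'NF > 1 { print $NF; exit }'
}
```

For example, curl "192.168.240.40:$(docker port test 80/tcp | first_host_port)" requests the site through whatever dynamic port Docker assigned; with the mapping 80/tcp -> 0.0.0.0:49154 from the first example, the helper prints 49154.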

Customizing the network settings of the docker0 bridge

The related configuration options are described in the official Docker documentation.

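To customize the docker0 bridge, edit the /etc/docker/daemon.json configuration file. The file must be valid JSON and cannot contain comments; in the example below, the bip entry changes the IP address of the host's docker0 interface: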

[root@localhost ~]# vim /etc/docker/daemon.json

[root@localhost ~]# cat /etc/docker/daemon.json

{

"registry-mirrors": ["https://wn5c7d7w.mirror.aliyuncs.com/"],

"bip": "192.168.2.1/24"

}

[root@localhost ~]# systemctl restart docker

[root@localhost ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 00:0c:29:cc:68:c5 brd ff:ff:ff:ff:ff:ff

inet 192.168.240.40/24 brd 192.168.240.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet6 fe80::b0aa:5d3b:1352:b7cb/64 scope link noprefixroute

valid_lft forever preferred_lft forever

3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default

link/ether 02:42:51:41:82:fc brd ff:ff:ff:ff:ff:ff

inet 192.168.2.1/24 brd 192.168.2.255 scope global docker0

valid_lft forever preferred_lft forever

inet6 fe80::42:51ff:fe41:82fc/64 scope link

valid_lft forever preferred_lft forever

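The key option is bip (bridge IP), which sets the IP address and prefix length of the docker0 bridge itself. The other settings of the default bridge follow from it: containers on the default bridge get addresses from this subnet and use this address as their default gateway.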
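Since dockerd refuses to start with an invalid daemon.json, it helps to check the syntax before restarting. A sketch, assuming bash and optionally python3 for validation; write_daemon_json is a hypothetical helper, and it overwrites the target file, so back up an existing daemon.json first.

```bash
#!/usr/bin/env bash
# Hypothetical sketch: write a daemon.json that sets the docker0 address.
# Overwrites PATH; back up an existing file first.
# write_daemon_json PATH BIP MIRROR_URL
write_daemon_json() {
  local path="$1" bip="$2" mirror="$3"
  # daemon.json must be plain JSON: no comments are allowed.
  cat > "$path" <<EOF
{
  "registry-mirrors": ["$mirror"],
  "bip": "$bip"
}
EOF
  # Check the syntax before restarting Docker, if python3 is available,
  # because dockerd will not start with an invalid daemon.json.
  if command -v python3 >/dev/null 2>&1; then
    python3 -m json.tool "$path" >/dev/null || return 1
  fi
}
```

For this setup: write_daemon_json /etc/docker/daemon.json 192.168.2.1/24 https://wn5c7d7w.mirror.aliyuncs.com/ && systemctl restart docker. dockerd also accepts related keys such as fixed-cidr (a narrower range inside the bip subnet for container addresses); check the dockerd reference before adding them.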

Creating a custom bridge with docker network create

Create an additional custom bridge network, separate from docker0:

[root@localhost ~]# docker network create -d bridge --subnet "172.17.2.0/24" --gateway "172.17.2.1" br0

6c81564402952e69ffe6fd1735fa94790d58c3d58f6a426ffb1d47b53b8d3e4b

[root@localhost ~]# docker network ls

NETWORK ID NAME DRIVER SCOPE

6c8156440295 br0 bridge local

0c4dfc56dda3 bridge bridge local

775ddefd7576 host host local

9e8ab03a1af5 none null local
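The subnet and gateway of the new network can be read back from docker network inspect. A sketch, assuming Docker is usable by the caller; show_ipam, run and DRY_RUN are hypothetical names. Docker normally rejects a subnet that overlaps an existing network; 172.17.2.0/24 is accepted here because docker0 was moved to 192.168.2.0/24, whereas its original 172.17.0.0/16 would have covered it.

```bash
#!/usr/bin/env bash
# Hypothetical sketch; needs access to the Docker daemon.
# DRY_RUN=1 only prints the command.
run() { if [ "${DRY_RUN:-0}" = "1" ]; then printf '%s\n' "$*"; else "$@"; fi; }

# show_ipam NETWORK: print the subnet and gateway configured for NETWORK.
show_ipam() {
  local net="$1"
  # .IPAM.Config lists the Subnet/Gateway pairs set with --subnet/--gateway.
  run docker network inspect \
    --format '{{range .IPAM.Config}}{{.Subnet}} {{.Gateway}}{{"\n"}}{{end}}' "$net"
}
```

show_ipam br0 should print the values passed to docker network create: 172.17.2.0/24 and 172.17.2.1.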

Create a container using the newly created custom bridge:

[root@localhost ~]# docker run -it --name test --rm --network br0 centos

[root@8bcfbcefbc48 /]# ifconfig

eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 172.17.2.2 netmask 255.255.255.0 broadcast 172.17.2.255

ether 02:42:ac:11:02:02 txqueuelen 0 (Ethernet)

RX packets 1868 bytes 12694425 (12.1 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 1470 bytes 84444 (82.4 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536

inet 127.0.0.1 netmask 255.0.0.0

loop txqueuelen 1000 (Local Loopback)

RX packets 64 bytes 5516 (5.3 KiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 64 bytes 5516 (5.3 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

Create another container and use the default bridge

[root@localhost ~]# docker run -it --name test2 --rm centos

[root@0ac299077b3c /]# ifconfig

eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 192.168.2.2 netmask 255.255.255.0 broadcast 192.168.2.255

ether 02:42:c0:a8:02:02 txqueuelen 0 (Ethernet)

RX packets 1970 bytes 12699829 (12.1 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 1532 bytes 87812 (85.7 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536

inet 127.0.0.1 netmask 255.0.0.0

loop txqueuelen 1000 (Local Loopback)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

Communication between test and test2

test

[root@localhost ~]# docker run -it --name test --rm --network br0 best2001/nginx:v0.3 /bin/bash

[root@00172f85f3ea /]#

[root@00172f85f3ea /]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

41: eth0@if42: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:ac:11:02:02 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 172.17.2.2/24 brd 172.17.2.255 scope global eth0

valid_lft forever preferred_lft forever

test2

[root@localhost ~]# docker run -it --name test2 --rm best2001/nginx:v0.3 /bin/bash

[root@02ca5fa59566 /]#

[root@02ca5fa59566 /]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

45: eth0@if46: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:c0:a8:02:02 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 192.168.2.2/24 brd 192.168.2.255 scope global eth0

valid_lft forever preferred_lft forever

Connect test2 to br0, the network that test is on, so that test2 is attached to two bridges:

[root@localhost ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

02ca5fa59566 best2001/nginx:v0.3 "/bin/bash" 2 minutes ago Up 2 minutes test2

00172f85f3ea best2001/nginx:v0.3 "/bin/bash" 3 minutes ago Up 3 minutes test

[root@localhost ~]# docker network connect br0 02ca5fa59566

In test2, view the interfaces and ping test:

[root@02ca5fa59566 /]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

45: eth0@if46: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:c0:a8:02:02 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 192.168.2.2/24 brd 192.168.2.255 scope global eth0

valid_lft forever preferred_lft forever

47: eth1@if48: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:ac:11:02:03 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 172.17.2.3/24 brd 172.17.2.255 scope global eth1

valid_lft forever preferred_lft forever

[root@02ca5fa59566 /]# ping 127.17.2.2

PING 127.17.2.2 (127.17.2.2) 56(84) bytes of data.

64 bytes from 127.17.2.2: icmp_seq=1 ttl=64 time=0.037 ms

64 bytes from 127.17.2.2: icmp_seq=2 ttl=64 time=0.026 ms

64 bytes from 127.17.2.2: icmp_seq=3 ttl=64 time=0.027 ms

^C

--- 127.17.2.2 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 2046ms

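rtt min/avg/max/mdev = 0.026/0.030/0.037/0.005 ms

Note that the address pinged above is 127.17.2.2, which lies in the loopback range 127.0.0.0/8, so those replies come from test2's own lo interface and do not prove connectivity. To test the link to test over br0, ping test's br0 address, 172.17.2.2, instead.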
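The connectivity check can also be driven from the host with docker exec. A sketch, assuming the test and test2 containers from above are running, that the image provides ping, and that test's address on br0 is 172.17.2.2; check_network_link, run and DRY_RUN are hypothetical. On user-defined bridge networks such as br0, Docker's embedded DNS server also resolves container names, so the last command pings test by name.

```bash
#!/usr/bin/env bash
# Hypothetical sketch; needs access to the Docker daemon.
# DRY_RUN=1 only prints the commands.
run() { if [ "${DRY_RUN:-0}" = "1" ]; then printf '%s\n' "$*"; else "$@"; fi; }

# check_network_link NETWORK PEER TARGET TARGET_IP
check_network_link() {
  local net="$1" peer="$2" target="$3" target_ip="$4"
  # Attach PEER to the network TARGET is on; PEER gets an extra interface.
  run docker network connect "$net" "$peer"
  # Ping TARGET's address on that network (not a 127.x.x.x address, which
  # would be answered by PEER's own loopback interface).
  run docker exec "$peer" ping -c 3 "$target_ip"
  # Name resolution through Docker's embedded DNS on user-defined networks.
  run docker exec "$peer" ping -c 3 "$target"
}
```

Usage for this setup: check_network_link br0 test2 test 172.17.2.2. Afterwards, docker network disconnect br0 test2 removes the extra eth1 interface again.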