Before using the Hadoop API, configure the Hadoop dependencies for Windows.

Windows-hadoop-3.1.0

Alicloud download link: Windows-hadoop-3.1.0

CSDN download link: Windows-hadoop-3.1.0

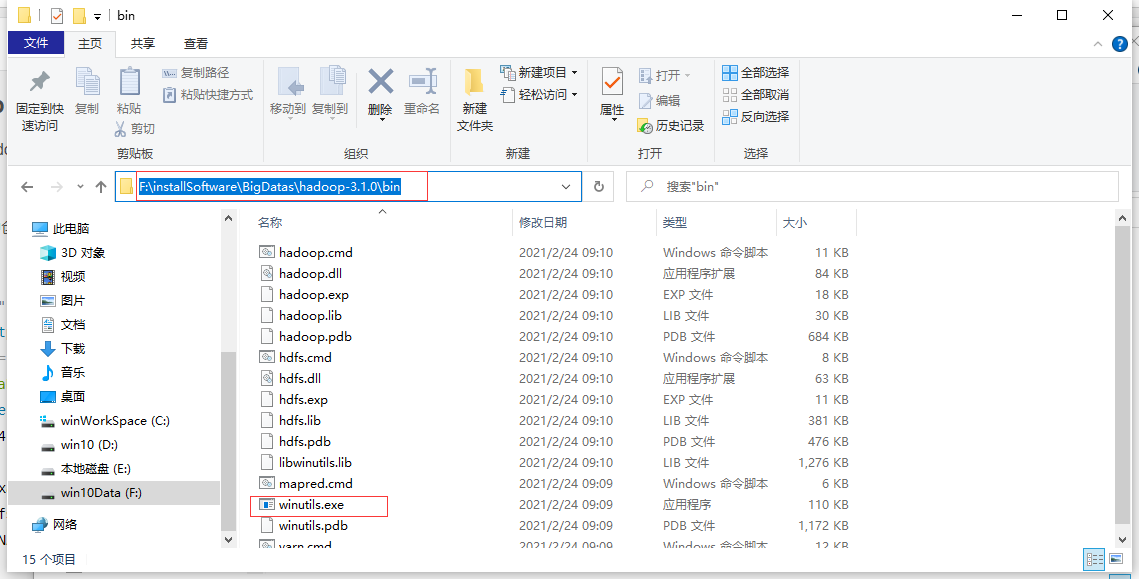

1. Download Windows-hadoop-3.1.0 and extract it to your installation directory.

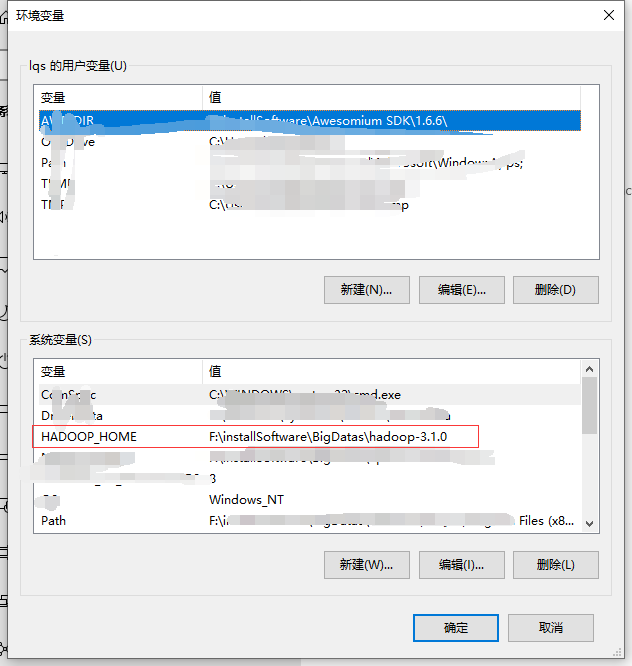

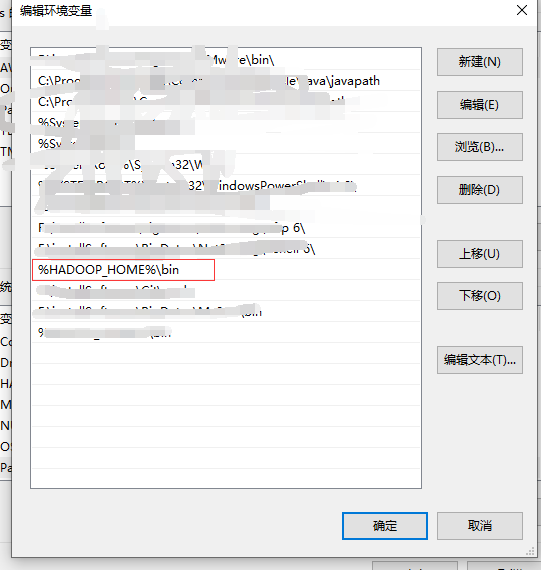

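2. Configure the environment variables as shown in the screenshots above: set HADOOP_HOME to the extraction directory and add %HADOOP_HOME%\bin to Path.

3. Finally, double-click the .exe file highlighted in the first screenshot. If no error appears, the configuration succeeded.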
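To confirm that Java programs can see the variable before moving on, a small check like the following can help. It is a sketch that assumes the package keeps winutils.exe under %HADOOP_HOME%\bin (the usual layout for Windows Hadoop builds); the class name HadoopHomeCheck is hypothetical. Run it from a new terminal, or restart IDEA after editing the variables, because programs that are already running do not see the change.

```java
import java.io.File;
import java.util.ArrayList;
import java.util.List;

// Sketch: sanity-check a Windows Hadoop installation before running the HDFS client.
// Assumption: the extracted package has a bin directory containing winutils.exe;
// adjust the file name if your package differs.
final class HadoopHomeCheck {

    // Returns a list of problems; an empty list means every check passed.
    static List<String> check(String hadoopHome) {
        List<String> problems = new ArrayList<>();
        if (hadoopHome == null || hadoopHome.isEmpty()) {
            problems.add("HADOOP_HOME is not set");
            return problems;
        }
        File bin = new File(hadoopHome, "bin");
        if (!bin.isDirectory()) {
            problems.add("missing directory: " + bin);
        } else if (!new File(bin, "winutils.exe").isFile()) {
            problems.add("missing file: " + new File(bin, "winutils.exe"));
        }
        return problems;
    }

    public static void main(String[] args) {
        List<String> problems = check(System.getenv("HADOOP_HOME"));
        System.out.println(problems.isEmpty() ? "HADOOP_HOME looks OK" : "Problems: " + problems);
    }
}
```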

IDEA_Maven_Hadoop_API

pom.xml file configuration

1. First, create a Maven project named HdfsClientDemo in IntelliJ IDEA, then configure its pom.xml as follows:

```xml
<?xml version="1.0" encoding="UTF-8"?>
<project xmlns="http://maven.apache.org/POM/4.0.0"
         xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
    <modelVersion>4.0.0</modelVersion>

    <groupId>org.example</groupId>
    <artifactId>HdfsClientDemo</artifactId>
    <version>1.0-SNAPSHOT</version>

    <properties>
        <maven.compiler.source>8</maven.compiler.source>
        <maven.compiler.target>8</maven.compiler.target>
    </properties>

    <dependencies>
        <!-- Hadoop client API (FileSystem, Configuration, Path, ...) -->
        <dependency>
            <groupId>org.apache.hadoop</groupId>
            <artifactId>hadoop-client</artifactId>
            <version>3.1.3</version>
        </dependency>
        <!-- JUnit 4, used by the @Test methods below -->
        <dependency>
            <groupId>junit</groupId>
            <artifactId>junit</artifactId>
            <version>4.12</version>
        </dependency>
        <!-- SLF4J binding for log4j 1.x, configured by log4j.properties -->
        <dependency>
            <groupId>org.slf4j</groupId>
            <artifactId>slf4j-log4j12</artifactId>
            <version>1.7.30</version>
        </dependency>
    </dependencies>
</project>
```
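Once Maven has downloaded the dependencies, you can check that the Hadoop and JUnit classes are actually on the classpath. The sketch below uses plain reflection; the class name ClasspathCheck is hypothetical, and the class names it looks up are the ones imported by the examples in this article.

```java
// Sketch: report whether key classes from the pom.xml dependencies can be loaded.
final class ClasspathCheck {

    // True if the named class can be loaded (without initializing it).
    static boolean isOnClasspath(String className) {
        try {
            Class.forName(className, false, ClasspathCheck.class.getClassLoader());
            return true;
        } catch (ClassNotFoundException | LinkageError e) {
            // LinkageError covers classes whose own dependencies are missing
            return false;
        }
    }

    public static void main(String[] args) {
        String[] required = {
            "org.apache.hadoop.conf.Configuration",
            "org.apache.hadoop.fs.FileSystem",
            "org.junit.Test"
        };
        for (String name : required) {
            System.out.println(name + " -> " + (isOnClasspath(name) ? "found" : "MISSING"));
        }
    }
}
```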

Utility class com.lqs.util.HadoopUtil

Note: the code below closes its connection with:

```java
// Close resource
HadoopUtil.close(fs);
```

HadoopUtil is a utility class I wrote myself (src\main\java\com\lqs\util\HadoopUtil.java). Its content is as follows:

```java
package com.lqs.util;

import java.io.IOException;

import org.apache.hadoop.fs.FileSystem;

public class HadoopUtil {

    private HadoopUtil() {
    }

    /** Closes the HDFS FileSystem (if non-null) and reports the result on the console. */
    public static void close(FileSystem fileSystem) {
        try {
            if (fileSystem != null) {
                fileSystem.close();
                System.out.println("Closed the remote HDFS connection successfully...");
            }
        } catch (IOException e) {
            System.out.println("Failed to close the remote HDFS connection...\nReason: " + e);
        }
    }
}
```
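Because Hadoop's FileSystem implements java.io.Closeable, the same idea can be written once for any closeable resource. The sketch below is an alternative, not the author's class; the name CloseUtil is hypothetical, and unlike HadoopUtil it reports failures on standard error and returns a success flag instead of printing a message.

```java
import java.io.Closeable;
import java.io.IOException;

// Sketch: "close and report" for any Closeable, including a Hadoop FileSystem.
final class CloseUtil {
    private CloseUtil() {
    }

    // Closes the resource if non-null; returns true if no exception was thrown.
    static boolean closeQuietly(Closeable resource) {
        if (resource == null) {
            return true;
        }
        try {
            resource.close();
            return true;
        } catch (IOException e) {
            System.err.println("Failed to close resource: " + e);
            return false;
        }
    }

    public static void main(String[] args) {
        // Closeable has a single abstract method, so lambdas can stand in for real resources
        Closeable ok = () -> System.out.println("closed");
        Closeable broken = () -> { throw new IOException("simulated failure"); };
        System.out.println(closeQuietly(ok));      // prints "closed", then "true"
        System.out.println(closeQuietly(broken));  // error goes to stderr, then prints "false"
    }
}
```

One caveat, whichever helper you use: FileSystem.get returns a cached instance shared within the JVM (keyed by URI and user), so closing it also closes it for any other code holding the same instance. That is harmless in these single-method tests but matters in larger programs.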

Log configuration file

2. Next, create the configuration file log4j.properties under HdfsClientDemo\src\main\resources and add the following content:

```properties
log4j.rootLogger=INFO, stdout
log4j.appender.stdout=org.apache.log4j.ConsoleAppender
log4j.appender.stdout.layout=org.apache.log4j.PatternLayout
log4j.appender.stdout.layout.ConversionPattern=%d %p [%c] - %m%n
log4j.appender.logfile=org.apache.log4j.FileAppender
log4j.appender.logfile.File=target/spring.log
log4j.appender.logfile.layout=org.apache.log4j.PatternLayout
log4j.appender.logfile.layout.ConversionPattern=%d %p [%c] - %m%n
```
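Note that rootLogger only references stdout, so the logfile appender (target/spring.log) is defined but never created: log4j 1.x only instantiates appenders that some logger refers to. To write the log file as well, change the first line to log4j.rootLogger=INFO, stdout, logfile. The hypothetical checker below parses the properties with java.util.Properties and lists appenders that rootLogger does not use; it ignores non-root loggers, so treat it as a rough sketch.

```java
import java.io.IOException;
import java.io.StringReader;
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.Properties;

// Sketch: find appenders defined in a log4j 1.x properties file but not attached to
// log4j.rootLogger. Loggers other than the root logger are not considered.
final class Log4jAppenderCheck {

    static List<String> unusedAppenders(Properties props) {
        // rootLogger value has the form "LEVEL, appender1, appender2, ..."
        String[] parts = props.getProperty("log4j.rootLogger", "").split(",");
        List<String> referenced = new ArrayList<>();
        for (int i = 1; i < parts.length; i++) {
            referenced.add(parts[i].trim());
        }
        String prefix = "log4j.appender.";
        List<String> unused = new ArrayList<>();
        for (String key : props.stringPropertyNames()) {
            // "log4j.appender.<name>" (no further dots) declares the appender class
            if (key.startsWith(prefix) && key.indexOf('.', prefix.length()) < 0) {
                String name = key.substring(prefix.length());
                if (!referenced.contains(name)) {
                    unused.add(name);
                }
            }
        }
        Collections.sort(unused);
        return unused;
    }

    public static void main(String[] args) throws IOException {
        String config = String.join("\n",
                "log4j.rootLogger=INFO, stdout",
                "log4j.appender.stdout=org.apache.log4j.ConsoleAppender",
                "log4j.appender.logfile=org.apache.log4j.FileAppender",
                "log4j.appender.logfile.File=target/spring.log");
        Properties props = new Properties();
        props.load(new StringReader(config));
        System.out.println(unusedAppenders(props)); // prints [logfile]
    }
}
```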

Creating directories

3. Create the class file HdfsClient.java in package com.lqs.hdfs (under src\main\java) and enter the following:

```java
package com.lqs.hdfs;

import com.lqs.util.HadoopUtil;
import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.junit.Test;

import java.io.IOException;
import java.net.URI;
import java.net.URISyntaxException;

/**
 * @author qingSong liu
 * @version 1.0
 * @time 2021/12/7 10:51
 *
 * Tests the connection by creating a directory on HDFS.
 */
public class HdfsClient {

    @Test
    public void testMkdirs() throws IOException, URISyntaxException, InterruptedException {
        // 1. Get the file system
        Configuration configuration = new Configuration();
        // Without the third argument, the client connects as the current OS user
        // (unless HADOOP_USER_NAME is set):
        // FileSystem fileSystem = FileSystem.get(new URI("hdfs://bdc112:8020"), configuration);
        FileSystem fs = FileSystem.get(new URI("hdfs://bdc112:8020"), configuration, "lqs");

        // 2. Create the directory, including any missing parents (like mkdir -p).
        //    mkdirs always creates directories, so pass a directory path: the later
        //    examples upload the file lqs.txt into /test/test1.
        fs.mkdirs(new Path("/test/test1"));

        // 3. Close resource
        HadoopUtil.close(fs);
    }
}
```
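Every example in this article repeats the NameNode address hdfs://bdc112:8020 and the user lqs. A small holder class such as the hypothetical HdfsConnection below keeps them in one place; the host, port and user are simply the values used here, not Hadoop defaults, so replace them with your cluster's.

```java
import java.net.URI;
import java.net.URISyntaxException;

// Sketch: one place for the connection settings used throughout this article.
final class HdfsConnection {
    static final String HOST = "bdc112";
    static final int PORT = 8020;
    static final String USER = "lqs";

    private HdfsConnection() {
    }

    // Builds the NameNode URI passed to FileSystem.get(uri, conf, user).
    static URI nameNodeUri(String host, int port) throws URISyntaxException {
        // URI(scheme, userInfo, host, port, path, query, fragment)
        return new URI("hdfs", null, host, port, null, null, null);
    }

    public static void main(String[] args) throws URISyntaxException {
        URI uri = nameNodeUri(HOST, PORT);
        System.out.println(uri);           // prints hdfs://bdc112:8020
        System.out.println(uri.getHost()); // prints bdc112
    }
}
```

In a test you would then call FileSystem.get(HdfsConnection.nameNodeUri(HdfsConnection.HOST, HdfsConnection.PORT), configuration, HdfsConnection.USER).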

File upload

4. Create the class file HdfsClientUpload.java in package com.lqs.hdfs (under src\main\java) and enter the following:

```java
package com.lqs.hdfs;

import com.lqs.util.HadoopUtil;
import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.junit.Test;

import java.io.IOException;
import java.net.URI;
import java.net.URISyntaxException;

/**
 * @author qingSong liu
 * @version 1.0
 * @time 2021/12/7 16:24
 */
public class HdfsClientUpload {

    @Test
    public void testCopyFromLocalFile() throws URISyntaxException, IOException, InterruptedException {
        // 1. Get the file system
        Configuration configuration = new Configuration();
        // Replication factor for files written by this client; a value set in code
        // overrides dfs.replication from hdfs-default.xml / hdfs-site.xml
        configuration.set("dfs.replication", "2");
        FileSystem fs = FileSystem.get(new URI("hdfs://bdc112:8020"), configuration, "lqs");

        // 2. Upload the local file F:\test\lqs.txt into the HDFS directory /test/test1
        fs.copyFromLocalFile(new Path("F:\\test\\lqs.txt"), new Path("/test/test1"));

        // 3. Close resource
        HadoopUtil.close(fs);
    }
}
```
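The configuration.set("dfs.replication", "2") call works because a value set in code takes precedence over values loaded from configuration files, and later files override earlier ones (hdfs-site.xml overrides hdfs-default.xml, which ships a default of 3). The sketch below models that lookup order with plain maps; it is a simplified illustration (no final properties, no variable expansion), not Hadoop's Configuration implementation, and LayeredConfig is a hypothetical name.

```java
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Sketch: layered configuration lookup, lowest priority first, highest priority last.
final class LayeredConfig {

    // Returns the value from the last layer that defines the key, or null if none does.
    static String resolve(String key, List<Map<String, String>> layersLowToHigh) {
        String value = null;
        for (Map<String, String> layer : layersLowToHigh) {
            if (layer.containsKey(key)) {
                value = layer.get(key);
            }
        }
        return value;
    }

    public static void main(String[] args) {
        Map<String, String> hdfsDefault = new HashMap<>();
        hdfsDefault.put("dfs.replication", "3");          // default from hdfs-default.xml
        Map<String, String> hdfsSite = new HashMap<>();   // e.g. an hdfs-site.xml without the key
        Map<String, String> inCode = new HashMap<>();
        inCode.put("dfs.replication", "2");               // configuration.set("dfs.replication", "2")

        System.out.println(resolve("dfs.replication", Arrays.asList(hdfsDefault, hdfsSite)));         // prints 3
        System.out.println(resolve("dfs.replication", Arrays.asList(hdfsDefault, hdfsSite, inCode))); // prints 2
    }
}
```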

File download

5. Create the class file HdfsClientDownload.java in package com.lqs.hdfs (under src\main\java) and enter the following:

```java
package com.lqs.hdfs;

import com.lqs.util.HadoopUtil;
import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.junit.Test;

import java.io.IOException;
import java.net.URI;
import java.net.URISyntaxException;

/**
 * @author qingSong liu
 * @version 1.0
 * @time 2021/12/7 16:10
 */
public class HdfsClientDownload {

    @Test
    public void testCopyToLocalFile() throws URISyntaxException, IOException, InterruptedException {
        // 1. Get the file system
        Configuration configuration = new Configuration();
        FileSystem fs = FileSystem.get(new URI("hdfs://bdc112:8020"), configuration, "lqs");

        // 2. Download the file
        // copyToLocalFile(delSrc, src, dst, useRawLocalFileSystem):
        //   delSrc                - whether to delete the source file on HDFS after copying
        //   src                   - HDFS path of the file to download
        //   dst                   - local path to download to
        //   useRawLocalFileSystem - true writes through the raw local file system, so no
        //                           hidden .crc checksum file is created next to the download
        fs.copyToLocalFile(false, new Path("/test/test1/lqs.txt"), new Path("f:/lqs.txt"), true);

        // 3. Close resource
        HadoopUtil.close(fs);
    }
}
```
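With useRawLocalFileSystem set to false, the local checksum file system writes a hidden checksum file named .<file name>.crc beside the download (for example .lqs.txt.crc). The hypothetical helper below only computes that name, which is handy if you want to check for or delete the file afterwards; it uses java.io.File, not Hadoop's Path.

```java
import java.io.File;

// Sketch: name of the checksum file written next to a download when checksums are on.
final class CrcFileName {

    static File checksumFileFor(File downloaded) {
        // Same directory, name ".<original name>.crc"
        return new File(downloaded.getParentFile(), "." + downloaded.getName() + ".crc");
    }

    public static void main(String[] args) {
        File target = new File("f:/lqs.txt");
        System.out.println(checksumFileFor(target).getName()); // prints .lqs.txt.crc
    }
}
```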

Deleting files and directories

6. Create the class file HdfsClientDelete.java in package com.lqs.hdfs (under src\main\java) and enter the following:

```java
package com.lqs.hdfs;

import com.lqs.util.HadoopUtil;
import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.junit.Test;

import java.io.IOException;
import java.net.URI;
import java.net.URISyntaxException;

/**
 * @author qingSong liu
 * @version 1.0
 * @time 2021/12/7 18:36
 */
public class HdfsClientDelete {

    @Test
    public void testDelete() throws URISyntaxException, IOException, InterruptedException {
        // 1. Get the file system
        Configuration configuration = new Configuration();
        FileSystem fs = FileSystem.get(new URI("hdfs://bdc112:8020"), configuration, "lqs");

        // 2. Delete /test and everything under it. recursive must be true to delete a
        //    non-empty directory; for a single file the flag makes no difference.
        //    Note: this also removes the files used by the other examples.
        fs.delete(new Path("/test"), true);

        // 3. Close resource
        HadoopUtil.close(fs);
    }
}
```
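To see what the recursive flag means, here is the same operation on the local disk using java.nio (Path here is java.nio.file.Path, not Hadoop's). A non-empty directory can only be removed after everything inside it, so entries are deleted deepest first; without recursion, HDFS refuses to delete a non-empty directory, just as Files.delete fails locally with DirectoryNotEmptyException. This is an illustration only, and RecursiveDelete is a hypothetical name.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.Comparator;
import java.util.stream.Stream;

// Sketch: local equivalent of delete(path, true).
final class RecursiveDelete {

    // Deletes root and, if it is a directory, everything below it.
    // Returns the number of entries removed (0 if root does not exist).
    static long deleteRecursively(Path root) throws IOException {
        if (!Files.exists(root)) {
            return 0;
        }
        try (Stream<Path> walk = Files.walk(root)) {
            // Reverse order puts children before their parent directories
            Path[] entries = walk.sorted(Comparator.reverseOrder()).toArray(Path[]::new);
            for (Path p : entries) {
                Files.delete(p);
            }
            return entries.length;
        }
    }

    public static void main(String[] args) throws IOException {
        Path root = Files.createTempDirectory("delete-demo");
        Files.createDirectories(root.resolve("test1"));
        Files.write(root.resolve("test1").resolve("lqs.txt"), "hello".getBytes());
        System.out.println(deleteRecursively(root)); // prints 3 (lqs.txt, test1, root)
        System.out.println(Files.exists(root));      // prints false
    }
}
```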

Renaming and moving files

7. Create the class file HdfsClientRename.java in package com.lqs.hdfs (under src\main\java) and enter the following:

```java
package com.lqs.hdfs;

import com.lqs.util.HadoopUtil;
import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.junit.Test;

import java.io.IOException;
import java.net.URI;
import java.net.URISyntaxException;

/**
 * @author qingSong liu
 * @version 1.0
 * @time 2021/12/7 17:32
 */
public class HdfsClientRename {

    @Test
    public void testRename() throws URISyntaxException, IOException, InterruptedException {
        // 1. Get the file system
        Configuration configuration = new Configuration();
        FileSystem fs = FileSystem.get(new URI("hdfs://bdc112:8020"), configuration, "lqs");

        // 2. Rename a file in place
        boolean renamed = fs.rename(new Path("/test/test1/lqs.txt"), new Path("/test/test1/lqstest.txt"));
        System.out.println(renamed ? "File renamed successfully" : "Failed to rename file");

        // Move the file into another directory, keeping its name: when the destination is
        // an existing directory, the file is placed inside it.
        // Note: the destination directory must exist, otherwise rename returns false
        boolean result = fs.rename(new Path("/test/test1/lqstest.txt"), new Path("/lqs/test"));
        if (result) {
            System.out.println("File moved successfully");
        } else {
            System.out.println("Failed to move file");
        }

        // Move the file and change its name in one call.
        // Note: the destination's parent directory must exist, otherwise rename returns false
        boolean result1 = fs.rename(new Path("/lqs/test/lqstest.txt"), new Path("/test/test1/test.txt"));
        if (result1) {
            System.out.println("File moved and renamed successfully");
        } else {
            System.out.println("Failed to move and rename the file");
        }

        // 3. Close resource
        HadoopUtil.close(fs);
    }
}
```
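HDFS's rename(src, dst) interprets dst in two ways: if dst is an existing directory, src is moved into it under its own name; otherwise dst is taken as the new full path. The hypothetical helper below spells that rule out on plain strings. Whether dst is an existing directory is passed in as a flag, since on a cluster you would have to ask the file system (for example with fs.getFileStatus(dst).isDirectory()).

```java
// Sketch: the final path a file ends up at after rename(src, dst).
final class RenameTarget {

    static String finalPath(String src, String dst, boolean dstIsExistingDirectory) {
        if (!dstIsExistingDirectory) {
            return dst; // dst is the new full path (move and/or rename)
        }
        // Move into the directory, keeping the last path component of src
        String name = src.substring(src.lastIndexOf('/') + 1);
        return dst.endsWith("/") ? dst + name : dst + "/" + name;
    }

    public static void main(String[] args) {
        // Move into an existing directory, keeping the name
        System.out.println(finalPath("/test/test1/lqstest.txt", "/lqs/test", true));           // prints /lqs/test/lqstest.txt
        // Move and rename in one call
        System.out.println(finalPath("/lqs/test/lqstest.txt", "/test/test1/test.txt", false)); // prints /test/test1/test.txt
    }
}
```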

Checking whether a path is a file or a directory

8. Create the class file HdfsClientListStatus.java in package com.lqs.hdfs (under src\main\java) and enter the following:

```java
package com.lqs.hdfs;

import com.lqs.util.HadoopUtil;
import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.BlockLocation;
import org.apache.hadoop.fs.FileStatus;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.junit.Test;

import java.io.IOException;
import java.net.URI;
import java.net.URISyntaxException;
import java.util.Arrays;

/**
 * @author qingSong liu
 * @version 1.0
 * @time 2021/12/7 23:24
 */
public class HdfsClientListStatus {

    @Test
    public void testListStatus() throws URISyntaxException, IOException, InterruptedException {
        // 1. Get the file system
        Configuration configuration = new Configuration();
        FileSystem fs = FileSystem.get(new URI("hdfs://bdc112:8020"), configuration, "lqs");

        // 2. List the direct children of a directory
        //    (listing a file path would return only that file)
        FileStatus[] fileStatuses = fs.listStatus(new Path("/test/test1"));

        for (FileStatus fileStatus : fileStatuses) {
            // Prefix files with "-" and directories with "d"
            if (fileStatus.isFile()) {
                System.out.println("-:" + fileStatus.getPath());
            } else {
                System.out.println("d:" + fileStatus.getPath());
            }

            System.out.println("++++++++++++++" + fileStatus.getPath() + "++++++++++++++");
            System.out.println(fileStatus.getPermission());
            System.out.println(fileStatus.getOwner());
            System.out.println(fileStatus.getGroup());
            System.out.println(fileStatus.getLen());
            System.out.println(fileStatus.getModificationTime());
            System.out.println(fileStatus.getReplication());
            System.out.println(fileStatus.getBlockSize());
            System.out.println(fileStatus.getPath().getName());

            // Get block information: a plain FileStatus carries no block locations,
            // so ask the file system for them (only files have blocks)
            if (fileStatus.isFile()) {
                BlockLocation[] blockLocations = fs.getFileBlockLocations(fileStatus, 0, fileStatus.getLen());
                System.out.println(Arrays.toString(blockLocations));
            }
        }

        // 3. Close resource
        HadoopUtil.close(fs);
    }
}
```
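getModificationTime() returns milliseconds since the Unix epoch, which is hard to read when printed raw. The sketch below formats it with java.time and assembles an ls-style line from values you would read from a FileStatus; the class name StatusLine and the exact layout are assumptions, and the sample values in main are made up.

```java
import java.time.Instant;
import java.time.ZoneId;
import java.time.format.DateTimeFormatter;

// Sketch: human-readable listing line built from FileStatus-like values.
final class StatusLine {
    private static final DateTimeFormatter FMT = DateTimeFormatter.ofPattern("yyyy-MM-dd HH:mm");

    // Formats epoch milliseconds in the given time zone.
    static String formatTime(long epochMillis, ZoneId zone) {
        return FMT.format(Instant.ofEpochMilli(epochMillis).atZone(zone));
    }

    // '-' for files, 'd' for directories, followed by the permission string.
    static String line(boolean isFile, String permission, String owner, String group,
                       long len, long mtimeMillis, String path, ZoneId zone) {
        return String.format("%s%s %s %s %d %s %s",
                isFile ? "-" : "d", permission, owner, group, len, formatTime(mtimeMillis, zone), path);
    }

    public static void main(String[] args) {
        ZoneId utc = ZoneId.of("UTC");
        System.out.println(formatTime(0L, utc)); // prints 1970-01-01 00:00
        System.out.println(line(true, "rw-r--r--", "lqs", "supergroup", 5, 0L, "/test/test1/lqs.txt", utc));
        // prints -rw-r--r-- lqs supergroup 5 1970-01-01 00:00 /test/test1/lqs.txt
    }
}
```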

File details

9. Create the class file HdfsClientListFiles.java in package com.lqs.hdfs (under src\main\java) and enter the following:

```java
package com.lqs.hdfs;

import com.lqs.util.HadoopUtil;
import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.*;
import org.junit.Test;

import java.io.IOException;
import java.net.URI;
import java.net.URISyntaxException;
import java.util.Arrays;

/**
 * @author qingSong liu
 * @version 1.0
 * @time 2021/12/7 19:07
 */
public class HdfsClientListFiles {

    @Test
    public void testListFiles() throws URISyntaxException, IOException, InterruptedException {
        // 1. Get the file system. new Configuration() loads the Hadoop configuration files
        //    found on the classpath (core-default.xml and core-site.xml, plus
        //    hdfs-default.xml and hdfs-site.xml once the HDFS client classes are loaded)
        Configuration configuration = new Configuration();
        FileSystem fs = FileSystem.get(new URI("hdfs://bdc112:8020"), configuration, "lqs");

        // 2. Get file details. Note: listFiles returns files only, not directories.
        //    The second argument (recursive) controls whether subdirectories are searched
        RemoteIterator<LocatedFileStatus> listFiles = fs.listFiles(new Path("/"), true);

        // Prints true if at least one file was found
        System.out.println(listFiles.hasNext());

        while (listFiles.hasNext()) {
            LocatedFileStatus fileStatus = listFiles.next();

            System.out.println("++++++++++++++" + fileStatus.getPath() + "++++++++++++++");
            System.out.println(fileStatus.getPermission());
            System.out.println(fileStatus.getOwner());
            System.out.println(fileStatus.getGroup());
            System.out.println(fileStatus.getLen());
            System.out.println(fileStatus.getModificationTime());
            System.out.println(fileStatus.getReplication());
            System.out.println(fileStatus.getBlockSize());
            System.out.println(fileStatus.getPath().getName());

            // Get block information (LocatedFileStatus already carries the block locations)
            BlockLocation[] blockLocations = fileStatus.getBlockLocations();
            System.out.println(Arrays.toString(blockLocations));
        }

        // 3. Close resource
        HadoopUtil.close(fs);
    }
}
```
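Each BlockLocation printed by the loop describes one block of the file. The number of blocks follows from the file length and the block size (dfs.blocksize, 128 MB by default in Hadoop 3): every block is full except possibly the last, and an empty file has no blocks. The hypothetical helper below does that arithmetic, which is a quick way to sanity-check the block list you get back.

```java
// Sketch: block arithmetic for a file of a given length and block size.
final class BlockMath {

    // Number of blocks = ceil(fileLen / blockSize), using integer arithmetic.
    static long blockCount(long fileLen, long blockSize) {
        if (fileLen < 0 || blockSize <= 0) {
            throw new IllegalArgumentException("fileLen must be >= 0 and blockSize > 0");
        }
        return (fileLen + blockSize - 1) / blockSize;
    }

    // Length of the last block (0 for an empty file).
    static long lastBlockLen(long fileLen, long blockSize) {
        if (fileLen == 0) {
            return 0;
        }
        long rem = fileLen % blockSize;
        return rem == 0 ? blockSize : rem;
    }

    public static void main(String[] args) {
        long mb = 1024L * 1024L;
        long blockSize = 128 * mb;
        System.out.println(blockCount(300 * mb, blockSize));        // prints 3
        System.out.println(lastBlockLen(300 * mb, blockSize) / mb); // prints 44
        System.out.println(blockCount(0, blockSize));               // prints 0
    }
}
```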