HDFS transparent encryption: Keystore, Hadoop KMS, and encryption zones

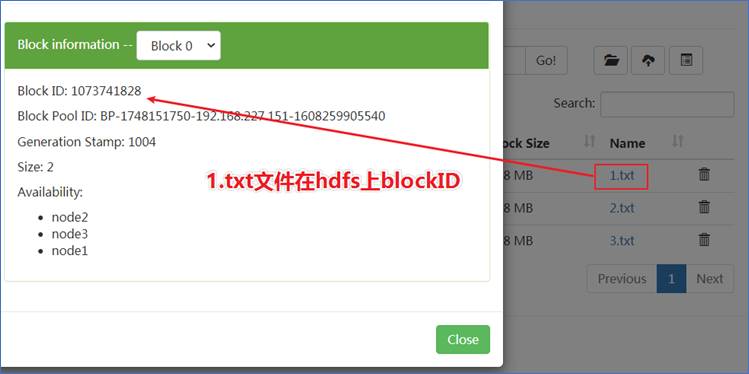

HDFS stores data as blocks on the local disks of each DataNode, and by default these blocks are plain text. Anyone who can reach the block directory at the operating system level can read the contents directly with the Linux cat command.

For example, go to the directory where the DataNode stores its blocks and view the content of a block directly:

/export/data/hadoop-3.1.4/dfs/data/current/BP-1748151750-192.168.227.1511608259905540/current/finalized/subdir0/subdir0/
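As a minimal sketch of that inspection, the helper below prints the first bytes of each block file in a directory. The function name `peek_blocks` is hypothetical (it is not a Hadoop tool), and it assumes the usual DataNode layout in which block data lives in `blk_<id>` files next to `blk_<id>_<genstamp>.meta` checksum files.

```shell
# peek_blocks DIR [BYTES]: print the first BYTES bytes (default 64) of every
# block file under DIR. Hypothetical helper for illustration only.
peek_blocks() {
  local dir="$1" bytes="${2:-64}" f
  for f in "$dir"/blk_*; do
    # Skip an unmatched glob and the checksum files (*.meta).
    [ -f "$f" ] || continue
    case "$f" in *.meta) continue ;; esac
    printf '%s: ' "$(basename "$f")"
    head -c "$bytes" "$f"
    printf '\n'
  done
}
```

Calling `peek_blocks` with the block directory shown above would list each unencrypted block together with its readable content.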

1.2 Background and application

1.2.1 Common encryption levels

- Application layer encryption

  This is the most secure and most flexible approach. The application ultimately controls what is encrypted, so the encryption can reflect user requirements precisely. However, writing applications that implement encryption is generally difficult.

- Database layer encryption

  Similar to application layer encryption. Most database vendors provide some form of encryption, but there may be performance problems. For example, indexes cannot be encrypted.

- File system layer encryption

  This approach has little impact on performance, is transparent to applications, and is generally easier to implement. However, it may not fully satisfy an application's fine-grained policy requirements.

- Disk layer encryption

  Easy to deploy and high-performance, but quite inflexible: it only protects against data theft at the physical level.

HDFS transparent encryption sits between database layer and file system layer encryption. It performs well and is transparent to existing applications. It also protects against attacks at or below the file system level, also known as OS-level attacks: the operating system and the disks only ever handle encrypted data, because the data is already encrypted when HDFS writes it.

1.2.2 Application scenarios

Many government, financial, and regulatory bodies around the world mandate data encryption to meet privacy and other security requirements. For example, the payment card industry has adopted the Payment Card Industry Data Security Standard (PCI DSS) to improve information security. Other examples include the U.S. Federal Information Security Management Act (FISMA) and the Health Insurance Portability and Accountability Act (HIPAA). Encrypting data stored in HDFS can help your organization comply with such regulations.

1.3 Introduction to transparent encryption

HDFS transparent encryption is end-to-end. Once it is enabled, files in designated HDFS directories are encrypted and decrypted transparently, with no changes to the user's application code. End-to-end means that data is encrypted and decrypted only by the client. For files in an encryption zone, HDFS stores only ciphertext, and the key used to encrypt each file is itself stored encrypted. Even if an unauthorized user copies the files at the operating system level, they get only ciphertext and cannot read the contents.

HDFS transparent encryption has the following features:

- Only HDFS clients can encrypt or decrypt data.

- Key management is external to HDFS. HDFS cannot access unencrypted data or encryption keys. HDFS administration and key administration are separate duties assigned to different user roles (HDFS administrator, key administrator), which ensures that no single user has unrestricted access to both data and keys.

- The operating system and HDFS interact only with encrypted HDFS data, which reduces threats at the operating system and file system levels.

- HDFS uses the Advanced Encryption Standard in counter mode (AES-CTR). AES-CTR supports 128-bit encryption keys (the default), or 256-bit keys when the Java Cryptography Extension (JCE) unlimited strength policy files are installed.

1.4 Key concepts and architecture of transparent encryption

1.4.1 Encryption zones and keys

HDFS transparent encryption introduces a new concept, the encryption zone. An encryption zone is a special directory whose contents are transparently encrypted on write and transparently decrypted on read.

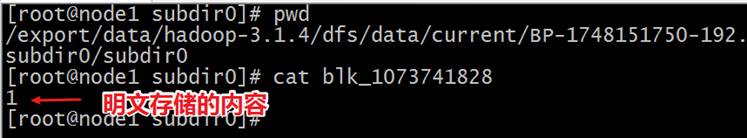

When an encryption zone is created, it is associated with an encryption zone key (EZ key), which is stored in a backing keystore outside HDFS. Each file in the encryption zone has its own encryption key, called the data encryption key (DEK). The DEK is encrypted with the EZ key of its zone to form an encrypted data encryption key (EDEK). HDFS never handles DEKs directly; it only handles EDEKs. The client decrypts the EDEK and then uses the resulting DEK to read and write data.

The relationship among EZ key, DEK and EDEK is as follows:
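One way to make the relationship concrete is a small envelope-encryption sketch with the `openssl` command-line tool. This is an illustration only, not how HDFS or the KMS is implemented: it assumes `openssl` is installed, uses AES-128-CTR for both layers, and every variable name is made up for the example.

```shell
set -euo pipefail

# Illustrative keys and IVs, all random 128-bit values in hex.
ez_key=$(openssl rand -hex 16)   # EZ key: stays inside the keystore / KMS
dek=$(openssl rand -hex 16)      # DEK: one per file
edek_iv=$(openssl rand -hex 16)  # IV used when wrapping the DEK
file_iv=$(openssl rand -hex 16)  # IV used for the file content

# KMS side: encrypt the DEK with the EZ key to form the EDEK (base64 text).
edek=$(printf '%s' "$dek" | openssl enc -aes-128-ctr -K "$ez_key" -iv "$edek_iv" -a)

# Client side: encrypt the file content with the DEK.
ciphertext=$(printf '%s' 'helloitcast' | openssl enc -aes-128-ctr -K "$dek" -iv "$file_iv" -a)

# Read path: the KMS turns the EDEK back into the DEK, then the client decrypts.
recovered_dek=$(printf '%s\n' "$edek" | openssl enc -d -aes-128-ctr -K "$ez_key" -iv "$edek_iv" -a)
plaintext=$(printf '%s\n' "$ciphertext" | openssl enc -d -aes-128-ctr -K "$recovered_dek" -iv "$file_iv" -a)

echo "EDEK (what HDFS stores): $edek"
echo "Recovered plaintext:     $plaintext"
```

Only the EDEK and the ciphertext would ever be visible to HDFS in this model; without the EZ key, neither can be turned back into the DEK or the file content.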

1.4.2 Keystore and Hadoop KMS

The backing key store is called a keystore. Integrating HDFS with an external, enterprise-grade keystore is the first step in deploying transparent encryption, because the separation of duties between the key administrator and the HDFS administrator is central to this feature. However, most keystores are not designed for the rate of encryption and decryption requests that Hadoop workloads generate.

Hadoop therefore provides a dedicated service, the Hadoop Key Management Server (KMS), which acts as a proxy between HDFS clients and the keystore. Both the keystore and the Hadoop KMS must use Hadoop's KeyProvider API to interact with each other and with HDFS clients.

KMS mainly has the following responsibilities:

1. Provide access to the stored encryption zone keys (EZ keys).
2. Generate EDEKs, which are stored on the NameNode.
3. Decrypt EDEKs for HDFS clients.

1.4.3 Accessing files in an encryption zone

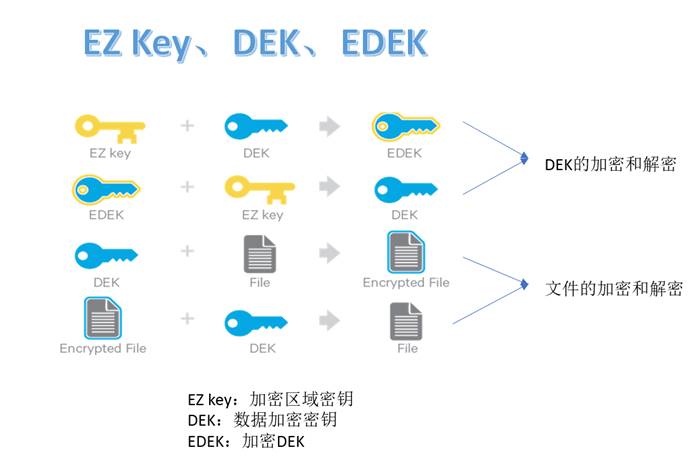

1.4.3.1 Writing an encrypted file

Precondition: an HDFS encryption zone (a directory) has been created, and its EZ key exists in the KMS and is associated with the zone.

1. The client asks the NameNode (NN) to create a new file in an HDFS encryption zone.
2. The NN asks the KMS for an EDEK for this file. The KMS generates a new EDEK using the corresponding EZ key and sends it to the NN.
3. The NN writes the EDEK into the file's metadata.
4. The NN sends the EDEK to the client.
5. The client sends the EDEK to the KMS for decryption. The KMS decrypts it with the corresponding EZ key and returns the DEK to the client.
6. The client encrypts the file content with the DEK and sends it to the DataNodes for storage.

The DEK encrypts and decrypts a single file, while the EZ key stored in the KMS encrypts and decrypts the DEKs of all files in the zone. The EZ key is therefore the more sensitive secret: it is used only inside the KMS (DEK encryption and decryption happen only in KMS memory) and is never sent outside. The HDFS servers only ever see EDEKs, so they cannot decrypt files in the encryption zone.

1.4.3.2 Reading and decrypting a file

The read process differs from the write process in that the NN reads the EDEK directly from the encrypted file's metadata and returns it to the client. The client sends the EDEK to the KMS to obtain the DEK, then reads and decrypts the encrypted content.

EDEKs are encrypted and decrypted entirely within the KMS. More importantly, clients that request the creation or decryption of an EDEK never handle EZ keys; only the KMS uses an EZ key to create and decrypt EDEKs on request.

1.5 KMS configuration

1.5.1 Shut down the HDFS cluster

Run stop-dfs.sh on node1.

1.5.2 Key generation

[root@node1 ~]# keytool -genkey -alias 'yida'

Enter keystore password:

Re-enter new password:

What is your first and last name?

[Unknown]:

What is the name of your organizational unit?

[Unknown]:

What is the name of your organization?

[Unknown]:

What is the name of your City or Locality?

[Unknown]:

What is the name of your State or Province?

[Unknown]:

What is the two-letter country code for this unit?

[Unknown]:

Is CN=Unknown, OU=Unknown, O=Unknown, L=Unknown, ST=Unknown, C=Unknown correct?

[no]: yes

Enter key password for <yida>

(RETURN if same as keystore password):

Re-enter new password:

1.5.3 Configure kms-site.xml

Configuration file path: /export/server/hadoop-3.1.4/etc/hadoop/kms-site.xml

<configuration>

<property>

<name>hadoop.kms.key.provider.uri</name>

<value>jceks://file@/${user.home}/kms.jks</value>

</property>

<property>

<name>hadoop.security.keystore.java-keystore-provider.password-file</name>

<value>kms.keystore.password</value>

</property>

<property>

<name>dfs.encryption.key.provider.uri</name>

<value>kms://http@node1:16000/kms</value>

</property>

<property>

<name>hadoop.kms.authentication.type</name>

<value>simple</value>

</property>

</configuration>

The password file is looked up on the classpath, so place it in Hadoop's configuration directory.
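A minimal sketch of creating that file follows. It assumes the file simply holds the keystore password as its content; the function name `write_kms_password_file` and the placeholder password in the usage note are hypothetical.

```shell
# write_kms_password_file CONF_DIR PASSWORD: store PASSWORD in
# CONF_DIR/kms.keystore.password, readable by the owner only.
# Hypothetical helper for illustration only.
write_kms_password_file() {
  local conf_dir="$1" password="$2"
  local target="$conf_dir/kms.keystore.password"
  # Create the file with a restrictive umask so it is never world-readable.
  ( umask 077; printf '%s' "$password" > "$target" )
  chmod 600 "$target"
}
```

For the layout used in this article, the call would be `write_kms_password_file /export/server/hadoop-3.1.4/etc/hadoop 'your-keystore-password'`, run as the user that starts the KMS.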

1.5.4 kms-env.sh

export KMS_HOME=/export/server/hadoop-3.1.4

export KMS_LOG=${KMS_HOME}/logs/kms

export KMS_HTTP_PORT=16000

export KMS_ADMIN_PORT=16001

1.5.5 Modify core-site.xml and hdfs-site.xml

core-site.xml

<property>

<name>hadoop.security.key.provider.path</name>

<value>kms://http@node1:16000/kms</value>

</property>

hdfs-site.xml

<property>

<name>dfs.encryption.key.provider.uri</name>

<value>kms://http@node1:16000/kms</value>

</property>
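Both files must point at the same KMS address. As a quick sanity check, the sketch below extracts a property value from a Hadoop XML file so the two can be compared. `get_hadoop_property` is a hypothetical helper and a line-oriented shortcut rather than a real XML parser: it assumes each `<name>` and `<value>` element sits on a single line, as in the snippets above.

```shell
# get_hadoop_property FILE NAME: print the <value> that follows
# <name>NAME</name> in FILE. Hypothetical helper, not a real XML parser.
get_hadoop_property() {
  local file="$1" name="$2"
  awk -v name="$name" '
    index($0, "<name>" name "</name>") { found = 1 }
    found && match($0, /<value>[^<]*<\/value>/) {
      # Strip the 7-character <value> and 8-character </value> tags.
      print substr($0, RSTART + 7, RLENGTH - 15)
      exit
    }
  ' "$file"
}
```

Comparing `get_hadoop_property core-site.xml hadoop.security.key.provider.path` with `get_hadoop_property hdfs-site.xml dfs.encryption.key.provider.uri` should yield the same `kms://` URI. On a running cluster, `hdfs getconf -confKey dfs.encryption.key.provider.uri` is another way to see the value HDFS actually resolved.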

Synchronize the configuration files to the other nodes.

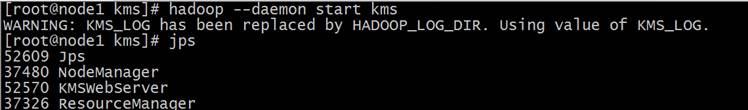

1.5.5.1 KMS service startup

hadoop --daemon start kms

1.5.5.2 HDFS cluster startup

start-dfs.sh

1.6 Using transparent encryption

1.6.1 Create a key

Switch to the ordinary user allenwoon, then create the key and list the key metadata:

su allenwoon

hadoop key create yida

hadoop key list -metadata

1.6.2 Create an encryption zone

Run the following as the root superuser.

Create a new empty directory and make it an encryption zone:

hadoop fs -mkdir /zone

hdfs crypto -createZone -keyName yida -path /zone

Then change the owner of the directory to the ordinary user:

hadoop fs -chown allenwoon:allenwoon /zone
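To confirm that the zone exists and which key it uses, the superuser can run `hdfs crypto -listZones`. The sketch below wraps that command in a hypothetical helper, `zone_key_name`; it assumes the command prints one zone per line with the path and the key name as whitespace-separated columns.

```shell
# zone_key_name PATH: print the key name of the encryption zone at PATH,
# or nothing if PATH is not listed as a zone. Hypothetical helper; assumes
# "hdfs crypto -listZones" prints "<path> <key name>" per line.
zone_key_name() {
  local path="$1"
  hdfs crypto -listZones | awk -v p="$path" '$1 == p { print $2 }'
}
```

Running `zone_key_name /zone` as the superuser should print the name of the key that was passed to `-createZone`.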

1.6.3 Test the encryption

Run the following as the ordinary user.

Upload a file and read it back:

echo helloitcast >> helloWorld

hadoop fs -put helloWorld /zone

hadoop fs -cat /zone/helloWorld

Then get the encryption information of the file:

hdfs crypto -getFileEncryptionInfo -path /zone/helloWorld


If you download the file's block directly from the DataNode, the data cannot be read: the block contains only ciphertext.
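This can be checked mechanically once the block file has been located on a DataNode, for example with `hdfs fsck /zone/helloWorld -files -blocks -locations` to find the block ID. The helper below is a hypothetical sketch: it succeeds when a known plaintext string does not appear in the raw block file.

```shell
# block_is_ciphertext FILE PLAINTEXT: succeed (exit 0) when PLAINTEXT does
# not occur anywhere in FILE. Hypothetical helper for illustration only.
block_is_ciphertext() {
  local block_file="$1" plaintext="$2"
  # -a treats binary data as text, -F matches the string literally.
  ! grep -a -q -F -- "$plaintext" "$block_file"
}
```

For the file written above, `block_is_ciphertext <block file> helloitcast` should succeed for a block inside /zone and fail for a block of the same content stored outside an encryption zone.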