Authors: kahing, willyi

Introduction: Cloud Log Service (CLS) is Tencent Cloud's one-stop log data platform. It covers log collection, log storage, log retrieval, chart analysis, monitoring and alarms, log delivery, and more, helping users use logs to handle business operation and maintenance, service monitoring, log auditing, and similar scenarios.

Brief introduction

CLS supports collecting logs from self-built K8s clusters. Before collecting logs, you need to define the log collection configuration (LogConfig) on the self-built K8s cluster through a CRD, and deploy Log-Provisioner, Log-Agent, and LogListener. If you use Tencent Kubernetes Engine (TKE), see the TKE start log collection document to quickly access and use the log service through the console.

Tencent Kubernetes Engine (TKE) is a highly scalable, high-performance, container-centric management service built on native Kubernetes. It is fully compatible with the native Kubernetes API and extends Kubernetes with Tencent Cloud plug-ins such as cloud disk and load balancing, providing efficient deployment and resource scheduling for containerized applications.

Prerequisites

- A Kubernetes cluster, version 1.10 or later;

- Enable the log service, create a log set and a log topic, and obtain the log topic ID (topicId). For detailed configuration, see the Create a log topic document;

- Obtain the domain name (CLS_HOST) of the region where the log topic is located. For the detailed list of CLS domain names, see the Available regions document;

- Obtain the API key ID (TmpSecretId) and API key (TmpSecretKey) required for CLS authentication from the API key management page.

K8s log collection principle

Deploying log collection on a K8s cluster mainly involves three components, Log-Provisioner, Log-Agent, and LogListener, plus one LogConfig collection configuration.

- LogConfig: the log collection configuration. It defines where logs are collected, how they are parsed after collection, and which CLS log topic they are delivered to after parsing.

- Log-Provisioner: synchronizes the log collection configuration defined in LogConfig to the CLS side.

- Log-Agent: watches LogConfig and container changes on the node, and dynamically computes the actual location on the node host of the log files inside each container.

- LogListener: collects the contents of the corresponding log files on the node host, parses them, and uploads them to the CLS side.

Deployment steps

- Define the LogConfig resource type

- Define the LogConfig object

- Create the LogConfig object

- Configure the CLS authentication ConfigMap

- Deploy Log-Provisioner

- Deploy Log-Agent and LogListener

1. Define LogConfig resource type

Use the Custom Resource Definition (CRD) in K8s to define the LogConfig resource type.

Taking the path /usr/local/ on the master node as an example, download the CRD.yaml declaration file with wget, then use kubectl to define the LogConfig resource type. The commands are as follows:

# wget https://mirrors.tencent.com/install/cls/k8s/CRD.yaml

# kubectl create -f /usr/local/CRD.yaml

2. Define LogConfig object

Create a LogConfig object to define the log collection configuration: where logs are collected, how they are parsed after collection, and which CLS log topic they are delivered to after parsing.

Taking the path /usr/local/ on the master node as an example, download the LogConfig.yaml declaration file with wget:

# wget https://mirrors.tencent.com/install/cls/k8s/LogConfig.yaml

The LogConfig.yaml declaration file has two main parts:

- clsDetail: defines the log parsing format and the target log topic ID (topicId)

- inputDetail: defines the log source, that is, where logs are collected

Note: set the topicId item in clsDetail to the ID of the log topic you created.

The following describes the log parsing format and log source configuration:

Log parsing format

CLS supports the following log parsing formats:

- Single line full text format

- Multi line full text format

- Fully regular format

- JSON format

- Separator format

1. Single line full text format

In single line full text format, each line is one complete log. When collecting logs, the log service uses the newline character \n as the end marker of a log. For unified structured management, each log gets a default key, __CONTENT__, but the log data itself is not structured and no log fields are extracted. The time attribute of the log is the time at which it was collected.

Assume that the original data of a log is:

Tue Jan 22 12:08:15 CST 2019 Installed: libjpeg-turbo-static-1.2.90-6.el7.x86_64

A reference LogConfig configuration is as follows:

    apiVersion: cls.cloud.tencent.com/v1
    kind: LogConfig
    spec:
      clsDetail:
        topicId: xxxxxx-xx-xx-xx-xxxxxxxx
        # Single line log
        logType: minimalist_log

The data collected by the log service is:

__CONTENT__:Tue Jan 22 12:08:15 CST 2019 Installed: libjpeg-turbo-static-1.2.90-6.el7.x86_64
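
The behavior described above can be illustrated with a minimal Python sketch. It is not LogListener's implementation; the function name `parse_single_line` is hypothetical, and skipping empty lines is an assumption made for the example.

```python
def parse_single_line(raw):
    """Treat every non-empty line as one complete log stored under __CONTENT__."""
    # Assumption for illustration: empty lines produce no log record.
    return [{"__CONTENT__": line} for line in raw.split("\n") if line]


raw = "Tue Jan 22 12:08:15 CST 2019 Installed: libjpeg-turbo-static-1.2.90-6.el7.x86_64\n"
records = parse_single_line(raw)
print(records[0]["__CONTENT__"])
```

No fields are extracted here: the whole line is kept as a single value, which matches the unstructured nature of this format.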

2. Multi line full text format

In multi line full text format, one complete log may span several lines (for example, a Java stack trace). In this case the newline character \n cannot serve as the end marker of a log. To let the log system tell logs apart, the first line of each log is matched with a regular expression: a line that matches the preset regular expression is treated as the beginning of a log, and the log ends where the next matching line begins.

Multi line full text logs also get the default key __CONTENT__, but the log data itself is not structured and no log fields are extracted. The time attribute of the log is the time at which it was collected.

Assume that the original data of a multi line log is:

    2019-12-15 17:13:06,043 [main] ERROR com.test.logging.FooFactory:
    java.lang.NullPointerException
        at com.test.logging.FooFactory.createFoo(FooFactory.java:15)
        at com.test.logging.FooFactoryTest.test(FooFactoryTest.java:11)

A reference LogConfig configuration is as follows:

    apiVersion: cls.cloud.tencent.com/v1
    kind: LogConfig
    spec:
      clsDetail:
        topicId: xxxxxx-xx-xx-xx-xxxxxxxx
        # Multi line log
        logType: multiline_log
        extractRule:
          # Only a line beginning with a date and time is treated as the start of a new log; any other line is appended to the end of the current log after a newline \n
          beginningRegex: \d{4}-\d{2}-\d{2}\s\d{2}:\d{2}:\d{2},\d{3}\s.+

The data collected by the log service is:

__CONTENT__:2019-12-15 17:13:06,043 [main] ERROR com.test.logging.FooFactory:\njava.lang.NullPointerException\n at com.test.logging.FooFactory.createFoo(FooFactory.java:15)\n at com.test.logging.FooFactoryTest.test(FooFactoryTest.java:11)
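
The first-line matching rule can be sketched in Python as follows. This is an illustration under assumptions, not the collector's actual code: the function name `group_multiline` is hypothetical, the regular expression is the beginningRegex from the configuration above, and the second log entry in the sample is made up to show where one log ends.

```python
import re

# beginningRegex copied from the LogConfig example above.
BEGINNING_REGEX = re.compile(r"\d{4}-\d{2}-\d{2}\s\d{2}:\d{2}:\d{2},\d{3}\s.+")


def group_multiline(lines):
    """Start a new log on each line matching BEGINNING_REGEX; append other lines."""
    records = []
    for line in lines:
        if BEGINNING_REGEX.match(line) or not records:
            records.append(line)
        else:
            records[-1] += "\n" + line
    return [{"__CONTENT__": text} for text in records]


lines = [
    "2019-12-15 17:13:06,043 [main] ERROR com.test.logging.FooFactory:",
    "java.lang.NullPointerException",
    "    at com.test.logging.FooFactory.createFoo(FooFactory.java:15)",
    "    at com.test.logging.FooFactoryTest.test(FooFactoryTest.java:11)",
    # Hypothetical next log, added only to show the boundary.
    "2019-12-15 17:13:07,001 [main] INFO com.test.logging.FooFactory: done",
]
records = group_multiline(lines)
print(len(records))
print(records[0]["__CONTENT__"])
```

The stack trace lines do not start with a timestamp, so they are joined to the preceding log with \n rather than becoming logs of their own.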

3. Single line - fully regular format

Single line fully regular format is usually used for structured logs. It is a parsing mode that extracts multiple key-value pairs from one complete log with a regular expression.

Assume that the original data of a log is:

10.135.46.111 - - [22/Jan/2019:19:19:30 +0800] "GET /my/course/1 HTTP/1.1" 127.0.0.1 200 782 9703 "http://127.0.0.1/course/explore?filter%5Btype%5D=all&filter%5Bprice%5D=all&filter%5BcurrentLevelId%5D=all&orderBy=studentNum" "Mozilla/5.0 (Windows NT 10.0; WOW64; rv:64.0) Gecko/20100101 Firefox/64.0" 0.354 0.354

A reference LogConfig configuration is as follows:

    apiVersion: cls.cloud.tencent.com/v1
    kind: LogConfig
    spec:
      clsDetail:
        topicId: xxxxxx-xx-xx-xx-xxxxxxxx
        # Fully regular format
        logType: fullregex_log
        extractRule:
          # Regular expression; values are extracted according to the () capture groups
          logRegex: (\S+)[^\[]+(\[[^:]+:\d+:\d+:\d+\s\S+)\s"(\w+)\s(\S+)\s([^"]+)"\s(\S+)\s(\d+)\s(\d+)\s(\d+)\s"([^"]+)"\s"([^"]+)"\s+(\S+)\s(\S+).*
          beginningRegex: (\S+)[^\[]+(\[[^:]+:\d+:\d+:\d+\s\S+)\s"(\w+)\s(\S+)\s([^"]+)"\s(\S+)\s(\d+)\s(\d+)\s(\d+)\s"([^"]+)"\s"([^"]+)"\s+(\S+)\s(\S+).*
          # The extracted keys correspond one to one with the extracted values
          keys: ['remote_addr','time_local','request_method','request_url','http_protocol','http_host','status','request_length','body_bytes_sent','http_referer','http_user_agent','request_time','upstream_response_time']

The data collected by the log service is:

    body_bytes_sent: 9703
    http_host: 127.0.0.1
    http_protocol: HTTP/1.1
    http_referer: http://127.0.0.1/course/explore?filter%5Btype%5D=all&filter%5Bprice%5D=all&filter%5BcurrentLevelId%5D=all&orderBy=studentNum
    http_user_agent: Mozilla/5.0 (Windows NT 10.0; WOW64; rv:64.0) Gecko/20100101 Firefox/64.0
    remote_addr: 10.135.46.111
    request_length: 782
    request_method: GET
    request_time: 0.354
    request_url: /my/course/1
    status: 200
    time_local: [22/Jan/2019:19:19:30 +0800]
    upstream_response_time: 0.354
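
To see how the capture groups line up with the keys, the extraction can be reproduced with Python's `re` module. This is only a sketch: the function name `extract_fields` is hypothetical, and it assumes Python's regular expression engine treats this pattern the same way the log service does.

```python
import re

# logRegex from the LogConfig example above, split across lines for readability.
LOG_REGEX = re.compile(
    r'(\S+)[^\[]+(\[[^:]+:\d+:\d+:\d+\s\S+)\s'
    r'"(\w+)\s(\S+)\s([^"]+)"\s(\S+)\s(\d+)\s(\d+)\s(\d+)\s'
    r'"([^"]+)"\s"([^"]+)"\s+(\S+)\s(\S+).*'
)
KEYS = [
    "remote_addr", "time_local", "request_method", "request_url",
    "http_protocol", "http_host", "status", "request_length",
    "body_bytes_sent", "http_referer", "http_user_agent",
    "request_time", "upstream_response_time",
]


def extract_fields(line):
    """Pair each capture group with its key; return None if the line does not match."""
    match = LOG_REGEX.match(line)
    if match is None:
        return None
    return dict(zip(KEYS, match.groups()))


line = (
    '10.135.46.111 - - [22/Jan/2019:19:19:30 +0800] "GET /my/course/1 HTTP/1.1" '
    '127.0.0.1 200 782 9703 '
    '"http://127.0.0.1/course/explore?filter%5Btype%5D=all&filter%5Bprice%5D=all'
    '&filter%5BcurrentLevelId%5D=all&orderBy=studentNum" '
    '"Mozilla/5.0 (Windows NT 10.0; WOW64; rv:64.0) Gecko/20100101 Firefox/64.0" '
    '0.354 0.354'
)
fields = extract_fields(line)
for key in sorted(fields):
    print(f"{key}: {fields[key]}")
```

The pattern has 13 capture groups and the key list has 13 entries, so each group maps to exactly one field name.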

4. Multiline fully regular format

Multi line fully regular format applies when one complete log spans several lines of the log text (such as a Java program log) and multiple key-value pairs need to be extracted from it with a regular expression. If you do not need to extract key-value pairs, configure the multi line full text format instead. Assume that the original data of a log is:

    [2018-10-01T10:30:01,000] [INFO] java.lang.Exception: exception happened
        at TestPrintStackTrace.f(TestPrintStackTrace.java:3)
        at TestPrintStackTrace.g(TestPrintStackTrace.java:7)
        at TestPrintStackTrace.main(TestPrintStackTrace.java:16)

A reference LogConfig configuration is as follows:

    apiVersion: cls.cloud.tencent.com/v1
    kind: LogConfig
    spec:
      clsDetail:
        topicId: xxxxxx-xx-xx-xx-xxxxxxxx
        # Multi line fully regular format
        logType: multiline_fullregex_log
        extractRule:
          # First-line regular expression. Only a line beginning with a date and time is treated as the start of a new log; any other line is appended to the end of the current log after a newline \n
          beginningRegex: \[\d+-\d+-\w+:\d+:\d+,\d+\]\s\[\w+\]\s.*
          # Regular expression; values are extracted according to the () capture groups
          logRegex: \[(\d+-\d+-\w+:\d+:\d+,\d+)\]\s\[(\w+)\]\s(.*)
          # The extracted keys correspond one to one with the extracted values
          keys:
          - time
          - level
          - msg

According to the extracted keys, the data collected by the log service is:

    time: 2018-10-01T10:30:01,000
    level: INFO
    msg: java.lang.Exception: exception happened at TestPrintStackTrace.f(TestPrintStackTrace.java:3) at TestPrintStackTrace.g(TestPrintStackTrace.java:7) at TestPrintStackTrace.main(TestPrintStackTrace.java:16)
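
A small Python sketch of the extraction step follows. It assumes the lines of one log have already been joined with \n by the first-line rule, and it uses `re.DOTALL` so that the last group can span lines; whether the log service applies an equivalent option internally is an assumption made for this example.

```python
import re

# logRegex from the LogConfig example above. re.DOTALL lets (.*) cross newlines,
# which is an assumption made so the whole stack trace lands in msg.
LOG_REGEX = re.compile(r"\[(\d+-\d+-\w+:\d+:\d+,\d+)\]\s\[(\w+)\]\s(.*)", re.DOTALL)
KEYS = ["time", "level", "msg"]

# One complete log, already assembled from its four lines.
record = "\n".join([
    "[2018-10-01T10:30:01,000] [INFO] java.lang.Exception: exception happened",
    "    at TestPrintStackTrace.f(TestPrintStackTrace.java:3)",
    "    at TestPrintStackTrace.g(TestPrintStackTrace.java:7)",
    "    at TestPrintStackTrace.main(TestPrintStackTrace.java:16)",
])

match = LOG_REGEX.match(record)
fields = dict(zip(KEYS, match.groups()))
print(fields["time"])
print(fields["level"])
print(fields["msg"])
```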

5. JSON format

For JSON format logs, the first-level keys are automatically extracted as field names and the first-level values as the corresponding field values, so the whole log is structured. Each complete log ends with a newline \n.

Assume that the original data of a JSON log is:

{"remote_ip":"10.135.46.111","time_local":"22/Jan/2019:19:19:34 +0800","body_sent":23,"responsetime":0.232,"upstreamtime":"0.232","upstreamhost":"unix:/tmp/php-cgi.sock","http_host":"127.0.0.1","method":"POST","url":"/event/dispatch","request":"POST /event/dispatch HTTP/1.1","xff":"-","referer":"http://127.0.0.1/my/course/4","agent":"Mozilla/5.0 (Windows NT 10.0; WOW64; rv:64.0) Gecko/20100101 Firefox/64.0","response_code":"200"}

A reference LogConfig configuration is as follows:

    apiVersion: cls.cloud.tencent.com/v1
    kind: LogConfig
    spec:
      clsDetail:
        topicId: xxxxxx-xx-xx-xx-xxxxxxxx
        # JSON format log
        logType: json_log

The data collected by the log service is:

    agent: Mozilla/5.0 (Windows NT 10.0; WOW64; rv:64.0) Gecko/20100101 Firefox/64.0
    body_sent: 23
    http_host: 127.0.0.1
    method: POST
    referer: http://127.0.0.1/my/course/4
    remote_ip: 10.135.46.111
    request: POST /event/dispatch HTTP/1.1
    response_code: 200
    responsetime: 0.232
    time_local: 22/Jan/2019:19:19:34 +0800
    upstreamhost: unix:/tmp/php-cgi.sock
    upstreamtime: 0.232
    url: /event/dispatch
    xff: -
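
First-level extraction can be approximated in Python with the standard `json` module. The function name `parse_json_log` is hypothetical, the sample is a shortened version of the log above, and how the log service represents nested values is not covered by this sketch.

```python
import json


def parse_json_log(line):
    """Parse one JSON log line and return its first-level keys and values."""
    obj = json.loads(line)
    if not isinstance(obj, dict):
        raise ValueError("expected a JSON object at the top level")
    return obj


# Shortened version of the sample log above.
line = (
    '{"remote_ip":"10.135.46.111","time_local":"22/Jan/2019:19:19:34 +0800",'
    '"body_sent":23,"responsetime":0.232,"method":"POST",'
    '"url":"/event/dispatch","response_code":"200"}'
)
fields = parse_json_log(line)
for key in sorted(fields):
    print(f"{key}: {fields[key]}")
```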

6. Separator format

A separator log is a log whose whole content can be structured by splitting it on a specified separator. Each complete log ends with a newline \n. When the log service processes logs in separator format, you need to define a unique key for each separated field.

Suppose that the original data of one of your logs is:

10.20.20.10 ::: [Tue Jan 22 14:49:45 CST 2019 +0800] ::: GET /online/sample HTTP/1.1 ::: 127.0.0.1 ::: 200 ::: 647 ::: 35 ::: http://127.0.0.1/

A reference LogConfig configuration is as follows:

    apiVersion: cls.cloud.tencent.com/v1
    kind: LogConfig
    spec:
      clsDetail:
        topicId: xxxxxx-xx-xx-xx-xxxxxxxx
        # Separator log
        logType: delimiter_log
        extractRule:
          # Separator
          delimiter: ':::'
          # The extracted keys correspond one to one with the split fields
          keys: ['IP','time','request','host','status','length','bytes','referer']

The data collected by the log service is:

    IP: 10.20.20.10
    bytes: 35
    host: 127.0.0.1
    length: 647
    referer: http://127.0.0.1/
    request: GET /online/sample HTTP/1.1
    status: 200
    time: [Tue Jan 22 14:49:45 CST 2019 +0800]
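
Splitting on the separator and pairing the pieces with the keys can be sketched in Python like this. The function name `parse_delimited` is hypothetical, and trimming the whitespace around each field is an assumption based on the collected data shown above.

```python
def parse_delimited(line, delimiter, keys):
    """Split a log line on the delimiter and pair each piece with its key."""
    # Assumption for illustration: surrounding whitespace is trimmed from each field.
    values = [value.strip() for value in line.split(delimiter)]
    return dict(zip(keys, values))


KEYS = ["IP", "time", "request", "host", "status", "length", "bytes", "referer"]
line = (
    "10.20.20.10 ::: [Tue Jan 22 14:49:45 CST 2019 +0800] ::: "
    "GET /online/sample HTTP/1.1 ::: 127.0.0.1 ::: 200 ::: 647 ::: 35 ::: "
    "http://127.0.0.1/"
)
fields = parse_delimited(line, ":::", KEYS)
for key in sorted(fields):
    print(f"{key}: {fields[key]}")
```

The separator ::: never occurs inside a field in this sample (the URL only contains ://), which is why a plain split is enough here.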

Log source

CLS supports the following cluster log sources:

- Container standard output

- Container file

- Host file

1. Container standard output

Example 1: collect the standard output of all containers in the default namespace

    apiVersion: cls.cloud.tencent.com/v1
    kind: LogConfig
    spec:
      inputDetail:
        type: container_stdout
        containerStdout:
          namespace: default
          allContainers: true
    ...

Example 2: collect the standard output of the containers in the pods belonging to the ingress-gateway deployment in the production namespace

    apiVersion: cls.cloud.tencent.com/v1
    kind: LogConfig
    spec:
      inputDetail:
        type: container_stdout
        containerStdout:
          allContainers: false
          workloads:
          - namespace: production
            name: ingress-gateway
            kind: deployment
    ...

Example 3: collect the standard output of the containers in the pods in the production namespace whose labels include "k8s-app=nginx"

    apiVersion: cls.cloud.tencent.com/v1
    kind: LogConfig
    spec:
      inputDetail:
        type: container_stdout
        containerStdout:
          namespace: production
          allContainers: false
          includeLabels:
            k8s-app: nginx
    ...

2. Container file

Example 1: collect the file named access.log under the /data/nginx/log/ path of the nginx container in the pods belonging to the ingress-gateway deployment in the production namespace

    apiVersion: cls.cloud.tencent.com/v1
    kind: LogConfig
    spec:
      topicId: xxxxxx-xx-xx-xx-xxxxxxxx
      inputDetail:
        type: container_file
        containerFile:
          namespace: production
          workload:
            name: ingress-gateway
            type: deployment
          container: nginx
          logPath: /data/nginx/log
          filePattern: access.log
    ...

Example 2: collect the file named access.log under the /data/nginx/log/ path of the nginx container in the pods in the production namespace whose labels include "k8s-app=ingress-gateway"

    apiVersion: cls.cloud.tencent.com/v1
    kind: LogConfig
    spec:
      inputDetail:
        type: container_file
        containerFile:
          namespace: production
          includeLabels:
            k8s-app: ingress-gateway
          container: nginx
          logPath: /data/nginx/log
          filePattern: access.log
    ...

3. Host file

Example: collect all files matching *.log under the host path /data/

    apiVersion: cls.cloud.tencent.com/v1
    kind: LogConfig
    spec:
      inputDetail:
        type: host_file
        hostFile:
          logPath: /data
          # Quoted because an unquoted leading * is read as a YAML alias
          filePattern: "*.log"
    ...

3. Create LogConfig object

Based on your requirements, write the LogConfig.yaml declaration file following the configuration instructions in step 2, then create the LogConfig object with kubectl. The command is as follows:

# kubectl create -f /usr/local/LogConfig.yaml

4. Configure CLS authentication ConfigMap

Uploading logs from a self-built K8s cluster to CLS requires authentication. Create a ConfigMap to store the API key ID and API key.

Taking the path /usr/local/ on the master node as an example, download the ConfigMap.yaml declaration file with wget:

# wget https://mirrors.tencent.com/install/cls/k8s/ConfigMap.yaml

Note: set the values of TmpSecretId and TmpSecretKey in ConfigMap.yaml to your API key ID and API key.

Create the ConfigMap object with kubectl. The command is as follows:

# kubectl create -f /usr/local/ConfigMap.yaml

5. Deploy Log-Provisioner

Log-Provisioner discovers and watches the log topic ID, log collection rules, and log file paths in LogConfig resources, and synchronizes them to the CLS side.

Taking the path /usr/local/ on the master node as an example, download the Log-Provisioner.yaml declaration file with wget:

# wget https://mirrors.tencent.com/install/cls/k8s/Log-Provisioner.yaml

Note: in Log-Provisioner.yaml, set the CLS_HOST field under the environment variables (env) to the domain name of the region where the target log topic is located. For the domain names of each region, see the Available regions document.

Use kubectl to deploy Log-Provisioner as a Deployment. The command is as follows:

# kubectl create -f /usr/local/Log-Provisioner.yaml

6. Deploy log agent and Loglistener

The log collection of the cluster is mainly divided into two parts: log agent and Loglistener:

- The log agent is responsible for pulling the log source information in LogConfig in the cluster and calculating the absolute path mapped by the container log on the host.

- Loglistener is responsible for collecting and parsing the log files under the log file path of the host computer and uploading them to the CLS side

Take the Master node path / usr/local / as an example: wget downloads the declaration files of log agent and Loglistener

# wget https://mirrors.tencent.com/install/cls/k8s/Log—Agent.yaml

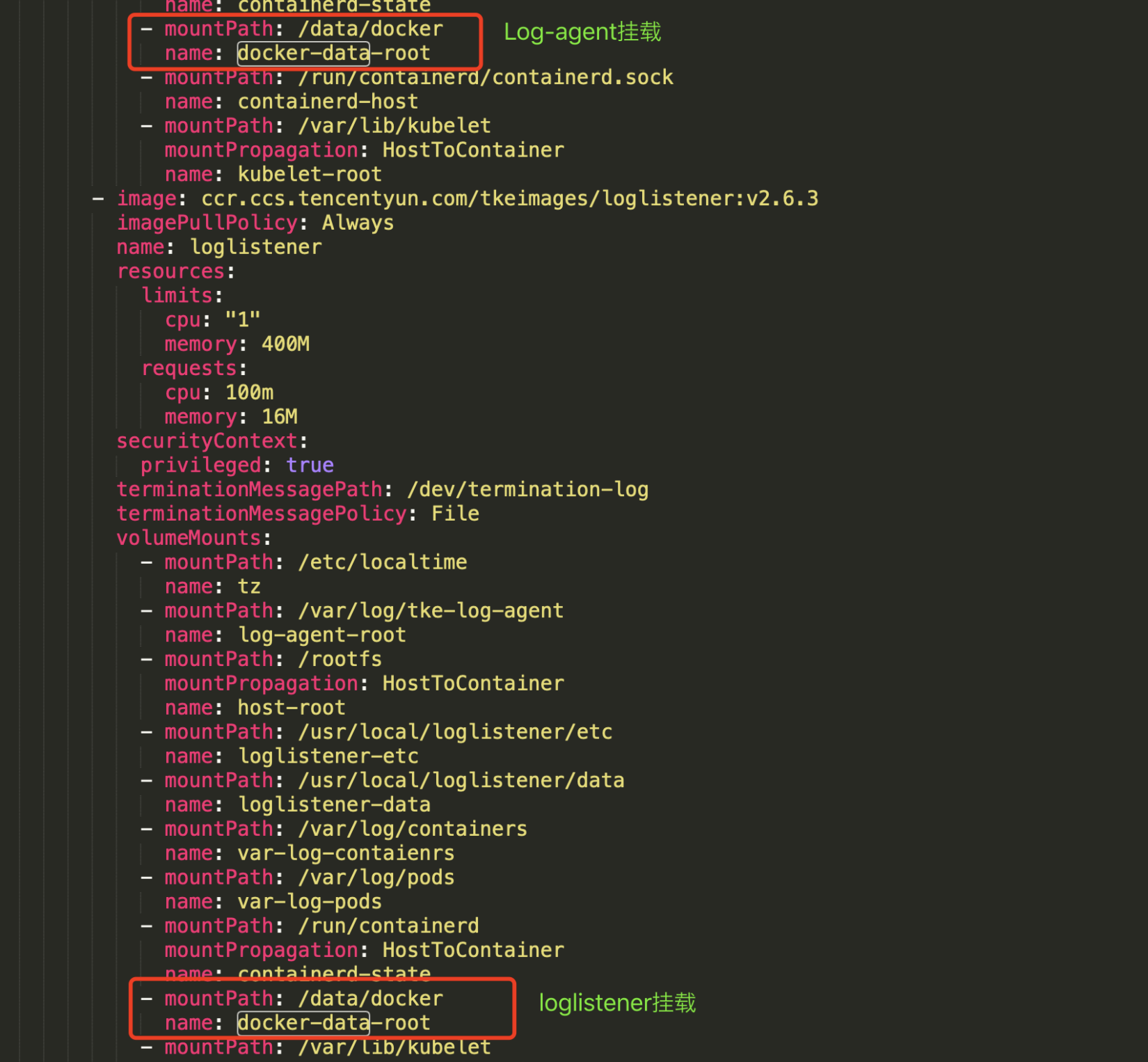

Note: if the docker root directory of the host is not under / var / lib / docker (this is the root directory of the host), you need to Log - agent In the yaml declaration file, map the root directory of docker to the container. As shown in the figure below, mount / data/docker to the container.

Use kubect to deploy log agent and Loglistener in the form of daemon set. The operation commands are as follows:

# kubectl create -f /usr/local/Log—Agent.yaml

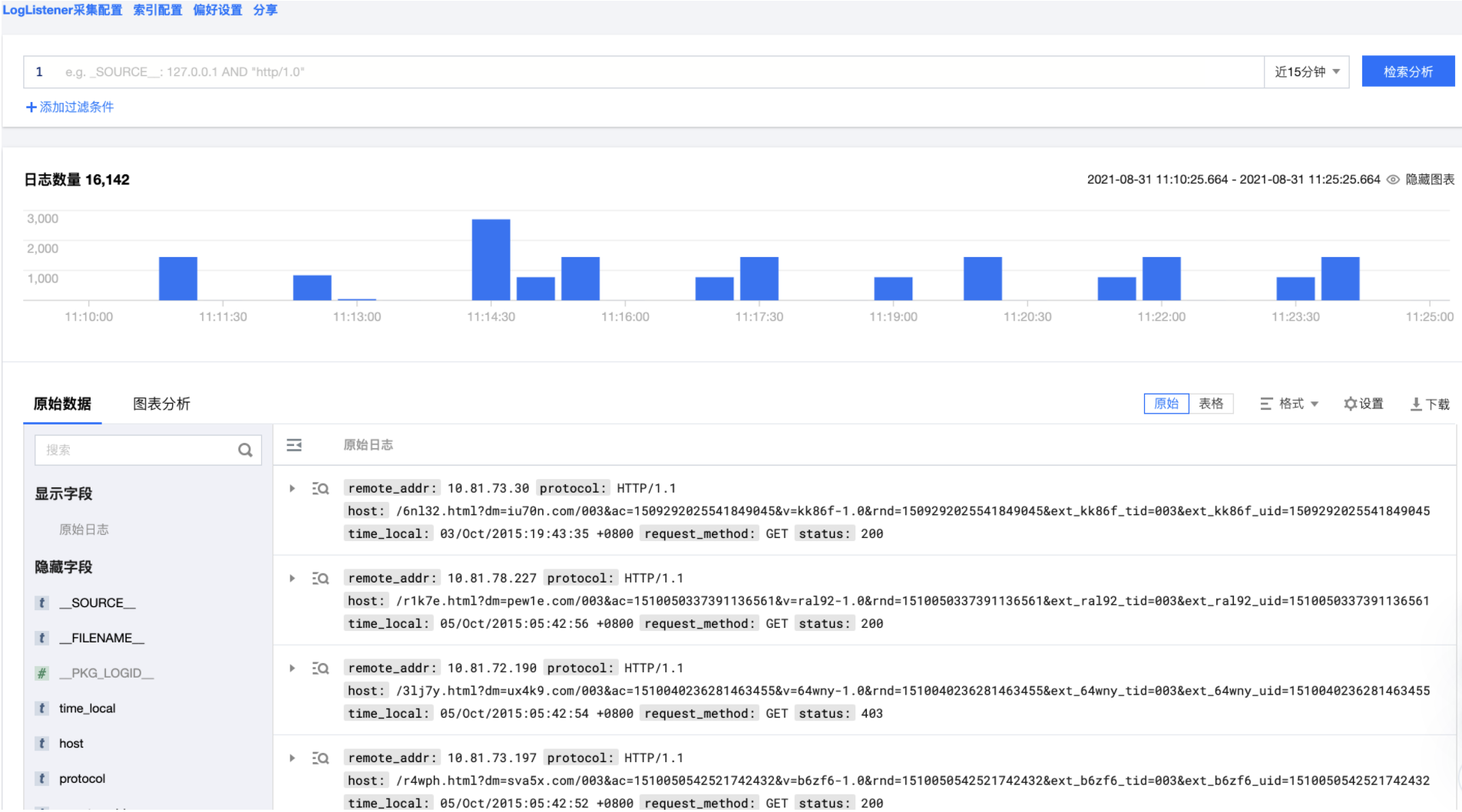

Viewing logs in the CLS console

You have now completed the full deployment of cluster log collection. You can go to the CLS log service retrieval page to view the collected logs.

Other information

[1] TKE start log collection: https://cloud.tencent.com/document/product/457/56751

[2] Create a log topic: https://cloud.tencent.com/document/product/614/41035

[3] Available regions: https://cloud.tencent.com/document/product/614/18940

[4] API key management: https://console.cloud.tencent.com/cam/capi

[5] CLS log service retrieval page: https://console.cloud.tencent.com/cls/search

[6] CLS log service free quota: https://cloud.tencent.com/document/product/614/47116

That concludes this installment on deploying CLS log collection on a self-built K8s cluster. If you have more interesting log practices, you are welcome to contribute.
