The original text is reproduced from "Liu Yue's technology blog" https://v3u.cn/a_id_201

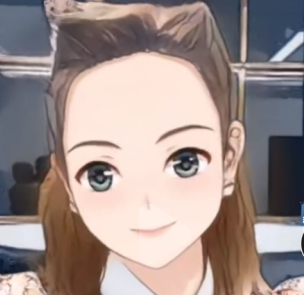

Some time ago, AnimeGAN, the well-known anime style-transfer filter library, released its latest v2 version and for a while was unrivaled in popularity. When it comes to anime (the "2D" or "second dimension" world), the app with the largest user base in China is undoubtedly Douyin (the Chinese version of TikTok). Its built-in anime conversion filter, "Transform into Comics", lets users turn their real-world appearance into an anime-style drawing during live streams. Among anime fans, this pastime of "breaking the dimensional wall and becoming a paper person" has been tried again and again.

However, once you have seen enough of these, aesthetic fatigue inevitably sets in. The cookie-cutter pointed "awl" faces and the ever-present oversized "Carslan"-style eyes start to feel as bland as chewing wax: too exaggerated and unrealistic.

By contrast, the AnimeGAN style filter, which builds on CartoonGAN, preserves the features of the original picture, combining the stylish look of anime with the realism of the real world. It balances strength and subtlety, and it makes a difficult task look effortless.

Moreover, the AnimeGAN project team has published an online demo where you can try the model directly: https://huggingface.co/spaces... However, because of bandwidth and compute bottlenecks, the online conversion queue is often backed up. Uploading original photos may also leak personal privacy.

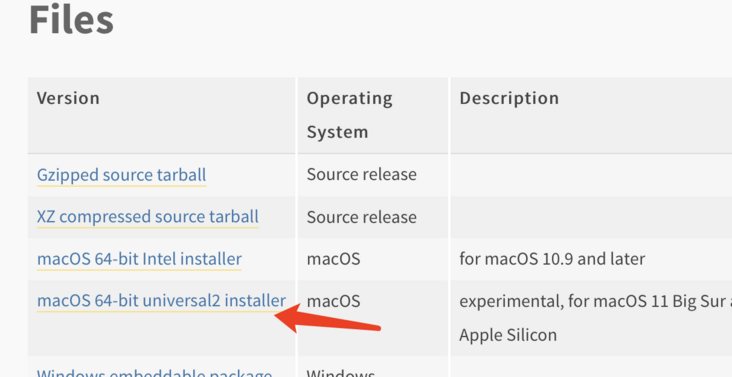

Therefore, this time we will set up AnimeGANv2 conversion for both still images and videos locally on an M1 Mac running macOS Monterey, using the PyTorch deep learning framework.

As we know, the CPU build of PyTorch currently supports Python 3.8 on M1 Macs. In a previous article, "Golden and jade are easy to match, but wood and stone are rare: M1 Mac OS (Apple Silicon) is a natural pair for a Python 3 development environment (integrating the deep learning frameworks TensorFlow/PyTorch)", we built a PyTorch development environment with conda-forge. This time we use the native installer instead. First, go to the official Python website and download the stable Python 3.8.10 universal2 build: https://www.python.org/downlo...

Double-click the package to install it, then open a terminal and install PyTorch:

```shell
pip3.8 install torch torchvision torchaudio
```

This installs the latest stable release, 1.10, by default. Then start the Python 3.8 interpreter and import the torch library:

```
(base) ➜ video git:(main) ✗ python3.8
Python 3.8.10 (v3.8.10:3d8993a744, May 3 2021, 09:09:08)
[Clang 12.0.5 (clang-1205.0.22.9)] on darwin
Type "help", "copyright", "credits" or "license" for more information.
>>> import torch
>>>
```
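Beyond a successful `import torch`, it can help to confirm that the interpreter is the native arm64 build rather than an x86_64 one running under Rosetta 2. The following is a minimal, stdlib-only sketch; the function name `describe_env` is hypothetical, and it only checks whether torch is installed instead of importing it:

```python
import importlib.util
import platform
import sys


def describe_env() -> dict:
    """Summarize the interpreter: version, CPU architecture, and torch availability."""
    return {
        # e.g. "3.8.10"
        "python": ".".join(str(part) for part in sys.version_info[:3]),
        # "arm64" for a native Apple Silicon build, "x86_64" under Rosetta 2
        "machine": platform.machine(),
        # find_spec returns None when the package is not installed
        "torch_installed": importlib.util.find_spec("torch") is not None,
    }


if __name__ == "__main__":
    for key, value in describe_env().items():
        print(f"{key}: {value}")
```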

After confirming that PyTorch works, clone the official project:

```shell
git clone https://github.com/bryandlee/animegan2-pytorch.git
```

AnimeGAN is also based on a generative adversarial network (GAN). The idea is that we start with a collection of original photos, which we can call "3D" (real-world) pictures. The features of real pictures follow some distribution, which may be a normal distribution, a uniform distribution, or something far more complex. The goal of a GAN is to use a generator to produce a batch of data whose distribution is close to the real one. In our case, the generated images take on 2D (anime) traits, such as larger eyes and a face closer to the filter model's drawing style, while still retaining some characteristics of the 3D original. In practice the generator is usually a neural network, because a neural network can represent more complex data distributions.
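The "adversarial" part of the setup can be made concrete. A discriminator tries to tell real images from generated ones while the generator tries to fool it, and the standard loss functions from the original GAN formulation express this directly. The sketch below is generic, not AnimeGAN's actual training objective. It works on the discriminator's output probabilities rather than on images, and the function names are hypothetical:

```python
import math
from typing import Sequence

EPS = 1e-12  # keeps log() away from zero


def discriminator_loss(d_real: Sequence[float], d_fake: Sequence[float]) -> float:
    """Binary cross-entropy: D should output 1 for real images and 0 for generated ones."""
    real_term = sum(-math.log(p + EPS) for p in d_real) / len(d_real)
    fake_term = sum(-math.log(1.0 - p + EPS) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss(d_fake: Sequence[float]) -> float:
    """Non-saturating generator loss: G is rewarded when D rates its outputs as real."""
    return sum(-math.log(p + EPS) for p in d_fake) / len(d_fake)


# A discriminator that separates real from fake well has a low loss,
# which leaves the generator with a high loss (and vice versa).
print(discriminator_loss([0.9, 0.8], [0.1, 0.2]))
print(generator_loss([0.1, 0.2]))
```

Training alternates between lowering the first loss (updating the discriminator) and lowering the second (updating the generator).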

Once the clone finishes, the weights folder contains four different sets of model weights: `celeba_distill.pt` and `paprika.pt` are used to transform landscape images, while `face_paint_512_v1.pt` and `face_paint_512_v2.pt` focus on portraits.

First install the image processing library Pillow:

```shell
pip3.8 install Pillow
```

Then create a new `test_img.py` file:

```python
import ssl

import torch
from PIL import Image

# Skip SSL certificate verification so torch.hub can download the repo and weights
ssl._create_default_https_context = ssl._create_unverified_context

# Pick one set of pretrained weights (the first run downloads them)
model = torch.hub.load("bryandlee/animegan2-pytorch:main", "generator", pretrained="celeba_distill")
# model = torch.hub.load("bryandlee/animegan2-pytorch:main", "generator", pretrained="face_paint_512_v1")
# model = torch.hub.load("bryandlee/animegan2-pytorch:main", "generator", pretrained="face_paint_512_v2")
# model = torch.hub.load("bryandlee/animegan2-pytorch:main", "generator", pretrained="paprika")

# Helper that prepares the image, runs the generator and returns a PIL image
face2paint = torch.hub.load("bryandlee/animegan2-pytorch:main", "face2paint", size=512)

img = Image.open("Arc.jpg").convert("RGB")
out = face2paint(model, img)
out.show()
```

Here we take a photo of the Arc de Triomphe as an example and compare the results of the celeba_distill and paprika filters. Note that SSL certificate verification has to be turned off for these requests from the local machine (that is what the `ssl._create_unverified_context` line does), and the model weights are downloaded from the internet on the first run.
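`face2paint` hides the tensor conversion around the generator. As we read the repository's `hubconf.py` (an assumption worth checking against the current code), it scales pixel values from [0, 1] to [-1, 1] before calling the model and maps the output back with `x * 0.5 + 0.5`, clipped to [0, 1]. The same arithmetic applied to a single 8-bit channel value, in plain Python with hypothetical helper names:

```python
def to_model_range(pixel: int) -> float:
    """Map an 8-bit value 0..255 to the generator's input range [-1, 1]."""
    return (pixel / 255.0) * 2.0 - 1.0


def to_pixel(value: float) -> int:
    """Map a generator output back to 0..255, clipping anything outside [-1, 1]."""
    unit = min(max(value * 0.5 + 0.5, 0.0), 1.0)  # [-1, 1] -> [0, 1], then clip
    return round(unit * 255)


for p in (0, 128, 255):
    print(p, "->", round(to_model_range(p), 4), "->", to_pixel(to_model_range(p)))
```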

Here, the size parameter sets the width and height, in pixels, of the square image that face2paint feeds to the generator. Next, let's convert a portrait into anime style. Switch the generator to one of the portrait weights and change the input image to a portrait photo:

```python
import ssl

import torch
from PIL import Image

ssl._create_default_https_context = ssl._create_unverified_context

# model = torch.hub.load("bryandlee/animegan2-pytorch:main", "generator", pretrained="celeba_distill")
# model = torch.hub.load("bryandlee/animegan2-pytorch:main", "generator", pretrained="face_paint_512_v1")
# Portrait weights
model = torch.hub.load("bryandlee/animegan2-pytorch:main", "generator", pretrained="face_paint_512_v2")
# model = torch.hub.load("bryandlee/animegan2-pytorch:main", "generator", pretrained="paprika")

face2paint = torch.hub.load("bryandlee/animegan2-pytorch:main", "face2paint", size=512)

img = Image.open("11.png").convert("RGB")
out = face2paint(model, img)
out.show()
```
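Both scripts pass `size=512` to face2paint. Assuming it works the way the repository's `hubconf.py` reads at the time of writing (center-crop the input to the largest square, then resize it to `size` x `size`), the crop box can be computed as below. The helper name `center_crop_box` is hypothetical; the box follows PIL's (left, upper, right, lower) convention, so it could be passed to `Image.crop`:

```python
from typing import Tuple


def center_crop_box(width: int, height: int) -> Tuple[int, int, int, int]:
    """Return the (left, upper, right, lower) box of the largest centered square."""
    side = min(width, height)
    left = (width - side) // 2
    upper = (height - side) // 2
    return (left, upper, left + side, upper + side)


# A 1280x720 video frame keeps its central 720x720 region,
# which would then be resized to 512x512 when size=512.
print(center_crop_box(1280, 720))  # (280, 0, 1000, 720)
```

One practical consequence, under that assumption: 1280x720 video frames converted this way come out as 512x512 squares, so a video rebuilt from them is square as well.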

As the results show, the v1 weights are more heavily stylized, while v2 applies the style on top of the original features: rooted in the real world but not bound by it, with nothing stiff about the result. It is far better than the Douyin filter.

Next, let's apply the anime filter to video. Broadly speaking, a video is a sequence of pictures shot and played back in succession, and how many pictures are shown each second depends on the frame rate. Frame rate, abbreviated FPS (frames per second), is the number of frames displayed per second; it can also be understood as how many times per second the graphics processor refreshes the picture. The higher the frame rate, the smoother and more realistic the motion appears.
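As a worked example of the frame-rate arithmetic: the number of frames in a clip is roughly its duration in seconds multiplied by the FPS. With the 15 fps rate and the 00:00:20 to 00:00:22 window used in the extraction command later in this article, that gives 2 × 15 = 30 frames, matching the 30 files it produces. A small sketch with hypothetical helper names (ffmpeg's exact count can differ by a frame depending on timestamps):

```python
def parse_timestamp(ts: str) -> float:
    """Convert an HH:MM:SS string (seconds may be fractional) to seconds."""
    hours, minutes, seconds = ts.split(":")
    return int(hours) * 3600 + int(minutes) * 60 + float(seconds)


def expected_frames(start: str, end: str, fps: float) -> int:
    """Approximate number of frames in [start, end) at the given frame rate."""
    duration = parse_timestamp(end) - parse_timestamp(start)
    return round(duration * fps)


print(expected_frames("00:00:20", "00:00:22", 15))  # 30
```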

So a continuous video can be split into individual pictures at a given FPS with a third-party tool. On an M1 Mac, the well-known video processing tool FFmpeg is recommended.

Install it with the ARM-native Homebrew:

```shell
brew install ffmpeg
```

Once it is installed, type `ffmpeg` in the terminal to check the version:

```
(base) ➜ animegan2-pytorch git:(main) ✗ ffmpeg
ffmpeg version 4.4.1 Copyright (c) 2000-2021 the FFmpeg developers
built with Apple clang version 13.0.0 (clang-1300.0.29.3)
configuration: --prefix=/opt/homebrew/Cellar/ffmpeg/4.4.1_3 --enable-shared --enable-pthreads --enable-version3 --cc=clang --host-cflags= --host-ldflags= --enable-ffplay --enable-gnutls --enable-gpl --enable-libaom --enable-libbluray --enable-libdav1d --enable-libmp3lame --enable-libopus --enable-librav1e --enable-librist --enable-librubberband --enable-libsnappy --enable-libsrt --enable-libtesseract --enable-libtheora --enable-libvidstab --enable-libvmaf --enable-libvorbis --enable-libvpx --enable-libwebp --enable-libx264 --enable-libx265 --enable-libxml2 --enable-libxvid --enable-lzma --enable-libfontconfig --enable-libfreetype --enable-frei0r --enable-libass --enable-libopencore-amrnb --enable-libopencore-amrwb --enable-libopenjpeg --enable-libspeex --enable-libsoxr --enable-libzmq --enable-libzimg --disable-libjack --disable-indev=jack --enable-avresample --enable-videotoolbox
```

The installation works. Next, prepare a video file and create a new `video_img.py`:

```python
import os

# Video to pictures; ffmpeg will not create the output directory, so make sure it exists
os.makedirs("./myvideo", exist_ok=True)
os.system("ffmpeg -i ./video.mp4 -r 15 -s 1280x720 -ss 00:00:20 -to 00:00:22 ./myvideo/%03d.png")
```

Here we use Python's built-in os module to run the ffmpeg command directly. It extracts frames from video.mp4 in the current directory at 15 frames per second (`-r 15`). The `-s` option sets the output resolution, `-ss` and `-to` set the start and end positions of the clip, and the last argument is the output file pattern in the myvideo directory.
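`os.system` hands the whole string to a shell, so a file name containing spaces breaks the command and a failing ffmpeg run goes unnoticed. A sketch of the same extraction built as an argument list for `subprocess.run` follows. The function names `extract_frames_cmd` and `run` are hypothetical, and the ffmpeg options are the ones from the command above, with the size written in ffmpeg's documented WIDTHxHEIGHT form:

```python
import os
import subprocess
from typing import List


def extract_frames_cmd(src: str, out_dir: str, fps: int = 15, size: str = "1280x720",
                       start: str = "00:00:20", end: str = "00:00:22") -> List[str]:
    """Build the ffmpeg argument list that dumps [start, end] of src as numbered PNGs."""
    return [
        "ffmpeg", "-i", src,
        "-r", str(fps),                      # output frame rate
        "-s", size,                          # output resolution
        "-ss", start, "-to", end,            # clip window
        os.path.join(out_dir, "%03d.png"),   # 001.png, 002.png, ...
    ]


def run(cmd: List[str]) -> None:
    """Run a command without a shell; raise CalledProcessError if it fails."""
    subprocess.run(cmd, check=True)


# Usage:
# os.makedirs("./myvideo", exist_ok=True)
# run(extract_frames_cmd("./video.mp4", "./myvideo"))
```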

After running the script, enter the myvideo directory:

```
(base) ➜ animegan2-pytorch git:(main) ✗ cd myvideo
(base) ➜ myvideo git:(main) ✗ ls
001.png 004.png 007.png 010.png 013.png 016.png 019.png 022.png 025.png 028.png
002.png 005.png 008.png 011.png 014.png 017.png 020.png 023.png 026.png 029.png
003.png 006.png 009.png 012.png 015.png 018.png 021.png 024.png 027.png 030.png
(base) ➜ myvideo git:(main) ✗
```

As you can see, the video has been split into pictures, each named by its frame number.

Next, batch-convert the pictures with the AnimeGAN filter:

```python
import os
import ssl

import torch
from PIL import Image

ssl._create_default_https_context = ssl._create_unverified_context

# Load the generator and the face2paint helper once, outside the loop
# model = torch.hub.load("bryandlee/animegan2-pytorch:main", "generator", pretrained="celeba_distill")
# model = torch.hub.load("bryandlee/animegan2-pytorch:main", "generator", pretrained="face_paint_512_v1")
model = torch.hub.load("bryandlee/animegan2-pytorch:main", "generator", pretrained="face_paint_512_v2")
# model = torch.hub.load("bryandlee/animegan2-pytorch:main", "generator", pretrained="paprika")
face2paint = torch.hub.load("bryandlee/animegan2-pytorch:main", "face2paint", size=512)

# Converted frames go to ./myimg, which must exist before saving
os.makedirs("./myimg", exist_ok=True)

# Process frames in name order (001.png, 002.png, ...)
for x in sorted(os.listdir("./myvideo/")):
    if os.path.splitext(x)[-1] == ".png":
        print(x)
        img = Image.open("./myvideo/" + x).convert("RGB")
        out = face2paint(model, img)
        # out.show()  # opens a viewer window for every frame; enable only for debugging
        out.save("./myimg/" + x)
```

Each conversion keeps the original frame and saves the filtered frame under the same file name in the relative directory myimg. Then create a new `img_video.py` to turn the frames back into a video:
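`os.listdir` returns names in arbitrary order, so it is worth sorting the frames and deciding each output path up front. Here is a minimal sketch with pathlib. The helper name `plan_frames` is hypothetical, and it assumes frames are the only `.png` files in the source directory; each (source, destination) pair could then feed the face2paint loop above:

```python
from pathlib import Path
from typing import List, Tuple


def plan_frames(src_dir: str, dst_dir: str) -> List[Tuple[Path, Path]]:
    """Pair each PNG frame in src_dir (sorted by name) with its output path in dst_dir."""
    dst = Path(dst_dir)
    dst.mkdir(parents=True, exist_ok=True)  # create the output directory if it is missing
    frames = sorted(p for p in Path(src_dir).iterdir() if p.suffix == ".png")
    return [(frame, dst / frame.name) for frame in frames]


# Usage with the objects from the script above:
# for src, dst in plan_frames("./myvideo", "./myimg"):
#     face2paint(model, Image.open(src).convert("RGB")).save(dst)
```

Filtering on the `.png` suffix also skips the `.mp4` and `.aac` files that later steps write into myvideo.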

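```python
import os

# Pictures to video: read ./myimg/001.png, 002.png, ... at 15 fps and encode them with libx264
os.system("ffmpeg -y -r 15 -i ./myimg/%03d.png -vcodec libx264 ./myvideo/test.mp4")
```

The frame rate is again 15 frames per second, the same rate the frames were extracted at, so the clip keeps its original duration.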

If the original video has an audio track, extract it first:

```python
import os

# Extract audio: -vn drops the video stream, -acodec copy keeps the audio without re-encoding
os.system("ffmpeg -y -i ./lisa.mp4 -ss 00:00:20 -to 00:00:22 -vn -acodec copy ./myvideo/3.aac")
```

After the anime filter conversion, merge the converted video with the audio track of the original video:

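```python
import os

# Merge audio and video: video stream from test.mp4, audio from 3.aac, both copied without re-encoding
os.system("ffmpeg -y -i ./myvideo/test.mp4 -i ./myvideo/3.aac -vcodec copy -acodec copy ./myvideo/output.mp4")
```

Test clip of the original video: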

Effect after conversion:
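To tie the video workflow together, the three remaining ffmpeg calls (frames to H.264 video, audio extraction, and the final merge) can be built as argument lists and run in order. This is a sketch rather than the article's script. The function name `video_pipeline` is hypothetical, and it uses the image2 demuxer's `-framerate` input option where the article uses `-r`. It also adds `-pix_fmt yuv420p`, which ffmpeg itself recommends for compatibility when encoding H.264 from RGB images, since some players (QuickTime, for example) cannot play the default it otherwise picks. File names follow the article:

```python
from typing import List


def video_pipeline(fps: int = 15, audio_src: str = "./lisa.mp4",
                   start: str = "00:00:20", end: str = "00:00:22") -> List[List[str]]:
    """Return the ffmpeg commands for: frames -> video, extract audio, merge both."""
    encode = ["ffmpeg", "-y", "-framerate", str(fps), "-i", "./myimg/%03d.png",
              "-vcodec", "libx264", "-pix_fmt", "yuv420p", "./myvideo/test.mp4"]
    extract_audio = ["ffmpeg", "-y", "-i", audio_src, "-ss", start, "-to", end,
                     "-vn", "-acodec", "copy", "./myvideo/3.aac"]
    merge = ["ffmpeg", "-y", "-i", "./myvideo/test.mp4", "-i", "./myvideo/3.aac",
             "-vcodec", "copy", "-acodec", "copy", "./myvideo/output.mp4"]
    return [encode, extract_audio, merge]


# Usage:
# import subprocess
# for cmd in video_pipeline():
#     subprocess.run(cmd, check=True)  # stops at the first failing step
```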

Thanks to the M1 chip, the CPU build of PyTorch still performs reasonably well. Unfortunately, GPU support for the M1 will take a while longer. Last month, soumith, a member of the PyTorch project team, gave this update:

> So, here's an update.
>
> We plan to get the M1 GPU supported. @albanD, @ezyang and a few core-devs have been looking into it. I can't confirm/deny the involvement of any other folks right now.
>
> So, what we have so far is that we had a prototype that was just about okay. We took the wrong approach (more graph-matching-ish), and the user-experience wasn't great -- some operations were really fast, some were really slow, there wasn't a smooth experience overall. One had to guess-work which of their workflows would be fast.
>
> So, we're completely re-writing it using a new approach, which I think is a lot closer to your good ole PyTorch, but it is going to take some time. I don't think we're going to hit a public alpha in the next ~4 months.
>
> We will open up development of this backend as soon as we can.

So the team is completely rewriting PyTorch's low-level M1 support, and a public alpha will not arrive any time soon. It may be released in the second half of next year, which is still well worth looking forward to.

Conclusion: both Tsinghua University's CartoonGAN and AnimeGANv2, which builds on it, are undoubtedly industry leaders, the best of the best. Even placed alongside projects such as PyTorch GAN on the world stage of artificial intelligence, AnimeGANv2 announces to the world that the era when the Chinese could only make pills and supplements is gone forever.
