MyBatis inserts thousands of data in batches. Please use Foreach with caution

Recently, a long-time Job in the project has the problem of high CPU consumption. After investigation, it is found that the main time is spent inserting data into MyBatis in batches. The mapper configuration is done with a foreach loop, almost like this. (due to the confidentiality of the project, the following codes are handwritten demo codes)

<insert id="batchInsert" parameterType="java.util.List">

insert into USER (id, name) values

<foreach collection="list" item="model" index="index" separator=",">

(#{model.id}, #{model.name})

</foreach>

</insert>

The principle of this method to improve the batch insertion speed is to combine the traditional:

INSERT INTO `table1` (`field1`, `field2`) VALUES ("data1", "data2");

INSERT INTO `table1` (`field1`, `field2`) VALUES ("data1", "data2");

INSERT INTO `table1` (`field1`, `field2`) VALUES ("data1", "data2");

INSERT INTO `table1` (`field1`, `field2`) VALUES ("data1", "data2");

INSERT INTO `table1` (`field1`, `field2`) VALUES ("data1", "data2");

Convert to:

INSERT INTO `table1` (`field1`, `field2`)

VALUES ("data1", "data2"),

("data1", "data2"),

("data1", "data2"),

("data1", "data2"),

("data1", "data2");

This trick is also mentioned in MySql Docs. If you want to optimize the insertion speed, you can combine many small operations into one large operation. Ideally, this would send many new rows of data at once in a single connection and delay all index updates and consistency checks until the end.

At first glance, there seems to be no problem with this foreach, but through project practice, it is found that when the number of columns in the table is large (20 +) and the number of rows inserted at one time is large (5000 +), the whole insertion takes a long time, up to 14 minutes, which is unbearable. A sentence is also mentioned in the data:

Of course don't combine ALL of them, if the amount is HUGE. Say you have 1000 rows you need to insert, then don't do it one at a time. You shouldn't equally try to have all 1000 rows in a single query. Instead break it into smaller sizes.

It emphasizes that when the number of inserts is large, it can not be placed in one sentence at one time. But why not put it in the same sentence? Why does this statement take so long? I checked the data and found that:

Insert inside Mybatis foreach is not batch, this is a single (could become giant) SQL statement and that brings drawbacks:

some database such as Oracle here does not support.

in relevant cases: there will be a large number of records to insert and the database configured limit (by default around 2000 parameters per statement) will be hit, and eventually possibly DB stack error if the statement itself become too large.

Iteration over the collection must not be done in the mybatis XML. Just execute a simple Insertstatement in a Java Foreach loop. The most important thing is the session Executor type.

SqlSession session = sessionFactory.openSession(ExecutorType.BATCH);

for (Model model : list) {

session.insert("insertStatement", model);

}

session.flushStatements();

Unlike default ExecutorType.SIMPLE, the statement will be prepared once and executed for each record to insert.

It can be seen from the data that the default executor type is Simple, and a new preprocessing statement will be created for each statement, that is, a PreparedStatement object will be created. In our project, we will keep using the batch insert method. Because MyBatis cannot cache the contained statements, the sql statements will be re parsed every time the method is called.

Internally, it still generates the same single insert statement with many placeholders as the JDBC code above.

MyBatis has an ability to cache PreparedStatement, but this statement cannot be cached because it contains element and the statement varies depending on the parameters. As a result, MyBatis has to 1) evaluate the foreach part and 2) parse the statement string to build parameter mapping [1] on every execution of this statement.

And these steps are relatively costly process when the statement string is big and contains many placeholders.

[1] simply put, it is a mapping between placeholders and the parameters.

It can be seen from the above data that the time is wasted. Since there are 5000 + values after foreach, the PreparedStatement is very long and contains many placeholders. The mapping of placeholders and parameters is particularly time-consuming. Moreover, referring to relevant data, it can be seen that the growth of values and the required analysis time increase exponentially.

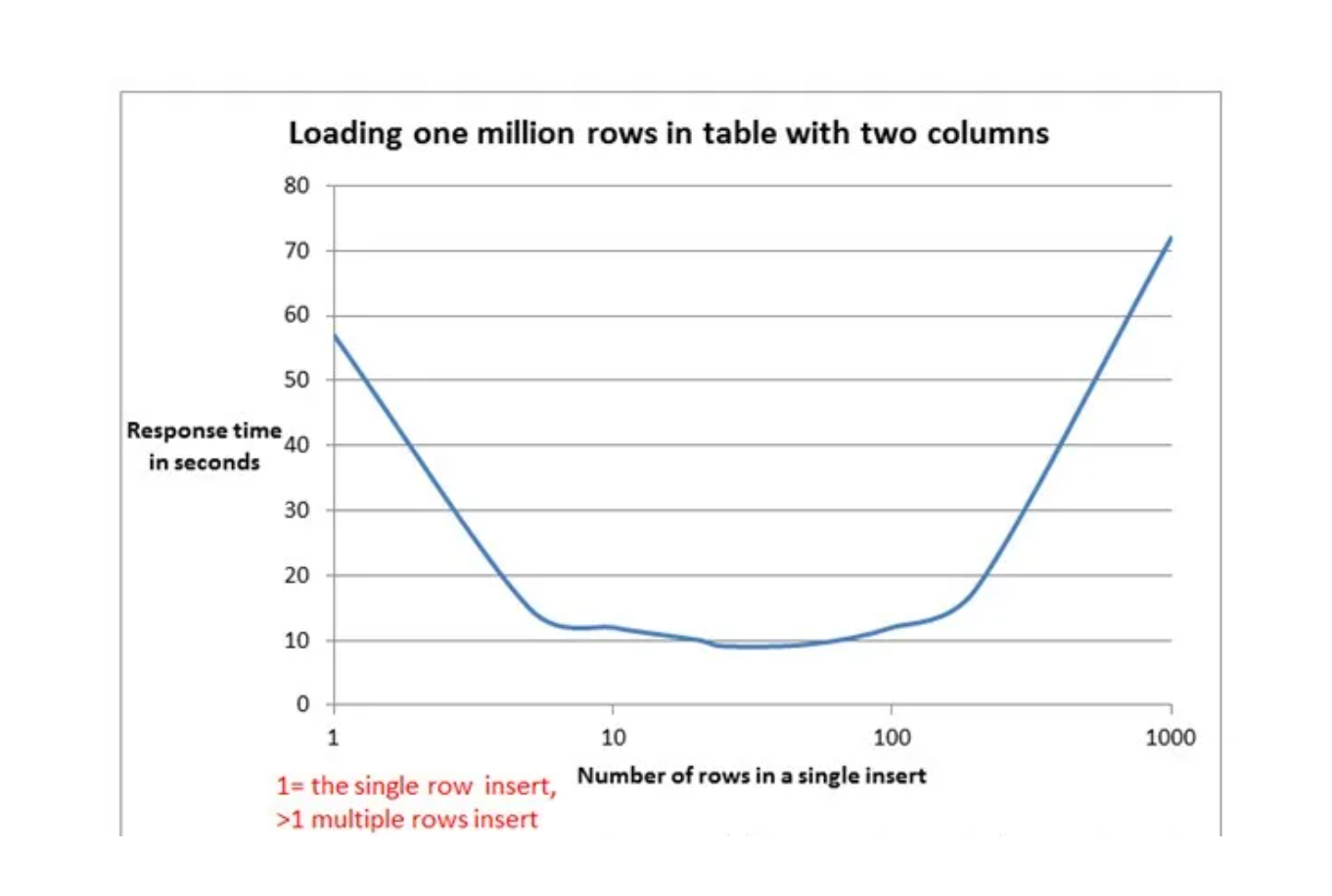

Therefore, if you have to use foreach for batch insertion, you can consider reducing the number of values in an insert statement. It is best to reach the bottom value of the above curve to make the speed the fastest. Generally speaking, according to experience, it is appropriate to insert 20 ~ 50 rows at one time, and the time consumption is acceptable.

Here's the point. As mentioned above, if you have to insert in a way, you can improve the performance. In fact, another method is recommended when batch insertion is written in MyBatis documents. (you can see http://www.mybatis.org/mybatis-dynamic-sql/docs/insert.html Contents in Batch Insert Support header in)

SqlSession session = sqlSessionFactory.openSession(ExecutorType.BATCH);

try {

SimpleTableMapper mapper = session.getMapper(SimpleTableMapper.class);

List<SimpleTableRecord> records = getRecordsToInsert(); // not shown

BatchInsert<SimpleTableRecord> batchInsert = insert(records)

.into(simpleTable)

.map(id).toProperty("id")

.map(firstName).toProperty("firstName")

.map(lastName).toProperty("lastName")

.map(birthDate).toProperty("birthDate")

.map(employed).toProperty("employed")

.map(occupation).toProperty("occupation")

.build()

.render(RenderingStrategy.MYBATIS3);

batchInsert.insertStatements().stream().forEach(mapper::insert);

session.commit();

} finally {

session.close();

}

That is, the basic idea is to set the executor type of MyBatis session to Batch, and then execute the insert statement multiple times. It is similar to the following statement of JDBC.

Connection connection = DriverManager.getConnection("jdbc:mysql://127.0.0.1:3306/mydb?useUnicode=true&characterEncoding=UTF-8&useServerPrepStmts=false&rewriteBatchedStatements=true","root","root");

connection.setAutoCommit(false);

PreparedStatement ps = connection.prepareStatement(

"insert into tb_user (name) values(?)");

for (int i = 0; i < stuNum; i++) {

ps.setString(1,name);

ps.addBatch();

}

ps.executeBatch();

connection.commit();

connection.close();

After testing, executortype The performance of batch insertion method is significantly improved, and all insertion can be completed in less than 2s.

To sum up, if MyBatis needs batch insertion, it is recommended

ExecutorType. For the insertion method of batch, if you do not want to use the insertion, you need to control the record of each insertion to about 20 ~ 50.