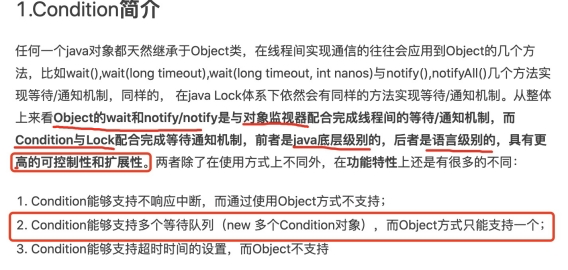

1, Condition

package com.ykq.juc;

import java.util.concurrent.locks.Condition;

import java.util.concurrent.locks.Lock;

import java.util.concurrent.locks.ReentrantLock;

public class App {

public static void main(String[] args) {

Tick tick = new Tick();

new Thread(() -> {

for (int i = 0; i < 10; i++) {

tick.printA();

}

}, "A").start();

new Thread(() -> {

for (int i = 0; i < 10; i++) {

tick.printB();

}

}, "B").start();

new Thread(() -> {

for (int i = 0; i < 10; i++) {

tick.printC();

}

}, "C").start();

}

}

class Tick {

private Lock lock = new ReentrantLock();

private Condition condition1 = lock.newCondition();

private Condition condition2 = lock.newCondition();

private Condition condition3 = lock.newCondition();

private int number = 1;

public void printA() {

lock.lock();

try {

//Business code, judgment - Execution - Notification

while(number!=1) {

condition1.await();

}

System.out.println(Thread.currentThread().getName()+"->AA");

number = 2;

condition2.signal();

} catch (Exception e) {

e.printStackTrace();

} finally {

lock.unlock();

}

}

public void printB(){

lock.lock();

try {

//Business code, judgment - Execution - Notification

while(number!=2) {

condition2.await();

}

System.out.println(Thread.currentThread().getName()+"->BB");

number = 3;

condition3.signal();

} catch (Exception e) {

e.printStackTrace();

} finally {

lock.unlock();

}

}

public void printC() {

lock.lock();

try {

//Business code, judgment - Execution - Notification

while(number!=3) {

condition3.await();

}

System.out.println(Thread.currentThread().getName()+"->CC");

number = 1;

condition1.signal();

} catch (Exception e) {

e.printStackTrace();

} finally {

lock.unlock();

}

}

}

Solutions for ArrayList being unsafe under concurrency

public static void main( String[] args ) {

/**

* ArrayList is not thread-safe under concurrent modification

* Solution:

* 1,List<String> list = new Vector<String>();

* 2,List<String> list = Collections.synchronizedList(new ArrayList<>());

* 3,List<String> list = new CopyOnWriteArrayList<>();

*/

List<String> list = new CopyOnWriteArrayList<>();

for (int i = 0; i < 10; i++) {

new Thread(()->{

list.add("a");

System.out.println(list);

},String.valueOf(i)).start();

}

}

2, CountDownLatch

countDownLatch.countDown(); // Quantity minus 1

countDownLatch.await(); // Wait for the counter to return to zero before executing downward

Each time a thread calls countDownLatch.countDown(), the count decreases by 1. When the count reaches 0, threads blocked in countDownLatch.await() are woken up and continue.

CountDownLatch countDownLatch = new CountDownLatch(6);

for (int i = 0; i < 6; i++) {

new Thread(()->{

System.out.println(Thread.currentThread().getName()+"Go out");

countDownLatch.countDown(); //Quantity minus 1

},String.valueOf(i)).start();

}

countDownLatch.await(); //Wait for the counter to return to zero before executing downward

System.out.println("Close Door");

3, CyclicBarrier (addition counter)

public static void main(String[] args){

CyclicBarrier cyclicBarrier = new CyclicBarrier(7, ()->{

System.out.println("hello");

});

for (int i = 1; i <= 7; i++) {

int temp = i;

new Thread(()->{

System.out.println(Thread.currentThread().getName()+temp);

try {

cyclicBarrier.await();

} catch (InterruptedException | BrokenBarrierException e) {

// TODO Auto-generated catch block

e.printStackTrace();

}

}).start();

}

}

4, Semaphore (concurrency limiting)

semaphore.acquire(); Acquires a permit. If none is available, the thread waits until one is released.

semaphore.release(); Releases a permit, which increases the number of available permits by 1 and wakes up a waiting thread.

Purpose: mutually exclusive use of a limited set of shared resources, and concurrency limiting, that is, controlling the maximum number of threads running at once.

public static void main(String[] args) {

//Thread limit operation

Semaphore semaphore = new Semaphore(2);

for (int i = 1; i <=6 ; i++) {

new Thread(()->{

try {

semaphore.acquire(); //obtain

System.out.println(Thread.currentThread().getName()+"implement");

TimeUnit.SECONDS.sleep(2); //Simulation execution time

System.out.println(Thread.currentThread().getName()+"end");

} catch (InterruptedException e) {

e.printStackTrace();

} finally {

semaphore.release(); //release

}

},String.valueOf(i)).start();

}

}

5, ReadWriteLock

/**

* An exclusive lock (write lock) can only be held by one thread at a time

* Shared lock (read lock) multiple threads can occupy at the same time

*/

public class ReadWriteLock {

public static void main(String[] args) {

MyLock myLock = new MyLock();

//write in

for (int i = 1; i <=5; i++) {

final int temp = i;

new Thread(()->{

myLock.put(temp+"", temp+"");

},String.valueOf(i)).start();

}

//read

for (int i = 1; i <=5; i++) {

final int temp = i;

new Thread(()->{

myLock.get(temp+"");

},String.valueOf(i)).start();

}

}

}

class MyLock{

//volatile only guarantees that each thread sees the latest map reference; the contents of the map are protected by the read/write lock below.

private volatile Map<String,Object> map = new HashMap<>();

//Read / write lock, more fine-grained control

private ReentrantReadWriteLock readWriteLock = new ReentrantReadWriteLock();

//When saving and writing, you only want one thread to write at the same time

public void put(String key,Object value) {

readWriteLock.writeLock().lock();

try {

System.out.println(Thread.currentThread().getName()+"write in"+key);

map.put(key, value);

System.out.println(Thread.currentThread().getName()+"write in ok");

} catch(Exception e) {

e.printStackTrace();

} finally {

readWriteLock.writeLock().unlock();

}

}

//When taking and reading, everyone can read, so the shared read lock is used

public void get(String key) {

readWriteLock.readLock().lock();

try {

System.out.println(Thread.currentThread().getName()+"read"+key);

Object object = map.get(key);

System.out.println(Thread.currentThread().getName()+"read ok");

} catch(Exception e) {

e.printStackTrace();

} finally {

readWriteLock.readLock().unlock();

}

}

}

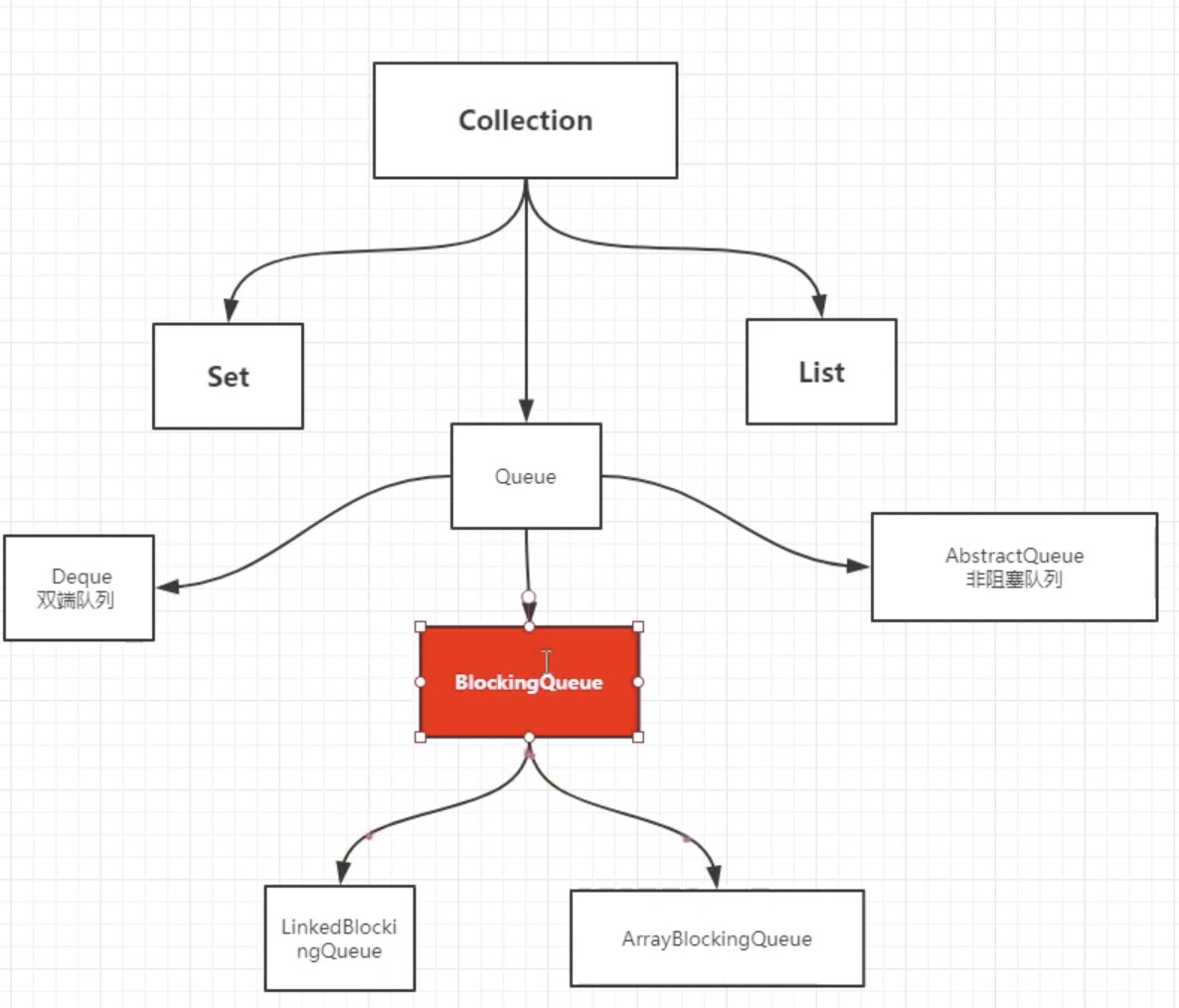

6, BlockingQueue: four sets of APIs

| Operation | Throws exception | Returns a special value (no exception) | Blocks | Waits with timeout |
|---|---|---|---|---|
| Insert | add() | offer() | put() | offer(e, timeout, unit) |
| Remove | remove() | poll() | take() | poll(timeout, unit) |
| Examine head element | element() | peek() | - | - |

public static void test1() throws InterruptedException {

ArrayBlockingQueue<Object> blockingQueue = new ArrayBlockingQueue<>(3);

blockingQueue.offer("a");

blockingQueue.offer("b");

blockingQueue.offer("c");

//blockingQueue.offer("d",3,TimeUnit.SECONDS); // Wait 3 seconds

System.out.println(blockingQueue.poll());

System.out.println(blockingQueue.poll());

System.out.println(blockingQueue.poll());

System.out.println(blockingQueue.poll(3, TimeUnit.SECONDS));

}
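The table above can be checked with a small sketch. It assumes a queue of capacity 1 so that "full" and "empty" are easy to reach; the class name `BlockingQueueApiSketch` and the log format are made up for illustration. The blocking pair put()/take() is left out because it would wait forever in a single thread.

```java
import java.util.NoSuchElementException;
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.TimeUnit;

public class BlockingQueueApiSketch {

    // Records what each API group does on a full queue and on an empty queue.
    public static String demo() throws InterruptedException {
        BlockingQueue<String> queue = new ArrayBlockingQueue<>(1);
        StringBuilder log = new StringBuilder();

        queue.add("a"); // the queue is now full (capacity 1)

        // Group 1: add() throws IllegalStateException when the queue is full
        try {
            queue.add("b");
        } catch (IllegalStateException e) {
            log.append("add:exception ");
        }

        // Group 2: offer() returns false instead of throwing
        log.append("offer:").append(queue.offer("b")).append(' ');

        // Group 4: timed offer() waits up to the timeout, then returns false
        log.append("timedOffer:")
           .append(queue.offer("b", 100, TimeUnit.MILLISECONDS)).append(' ');

        queue.remove(); // the queue is now empty

        // Group 1: remove() throws NoSuchElementException when the queue is empty
        try {
            queue.remove();
        } catch (NoSuchElementException e) {
            log.append("remove:exception ");
        }

        // Group 2: poll() returns null on an empty queue
        log.append("poll:").append(queue.poll());
        return log.toString();
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(demo());
    }
}
```

Which group to use depends on whether a full or empty queue is an error (group 1), an expected condition to check (group 2), or a reason to wait (groups 3 and 4).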

7, SynchronousQueue

/**

* Synchronous queue

* Unlike other BlockingQueue implementations, a SynchronousQueue does not store elements

* After put() hands over an element, it must be taken out before the next value can be put in.

*/

public class SynchronousQueueDemo {

public static void main(String[] args) {

BlockingQueue<String> blockingQueue = new SynchronousQueue<>();

new Thread(()-> {

try {

System.out.println(Thread.currentThread().getName()+"->1");

blockingQueue.put("1");

System.out.println(Thread.currentThread().getName()+"->2");

blockingQueue.put("2");

System.out.println(Thread.currentThread().getName()+"->3");

blockingQueue.put("3");

} catch (InterruptedException e) {

// TODO Auto-generated catch block

e.printStackTrace();

}

},"Save").start();

new Thread(()-> {

try {

TimeUnit.SECONDS.sleep(3);

System.out.println(Thread.currentThread().getName()+"->"+blockingQueue.take());

TimeUnit.SECONDS.sleep(3);

System.out.println(Thread.currentThread().getName()+"->"+blockingQueue.take());

TimeUnit.SECONDS.sleep(3);

System.out.println(Thread.currentThread().getName()+"->"+blockingQueue.take());

} catch (InterruptedException e) {

// TODO Auto-generated catch block

e.printStackTrace();

}

},"take").start();

}

}

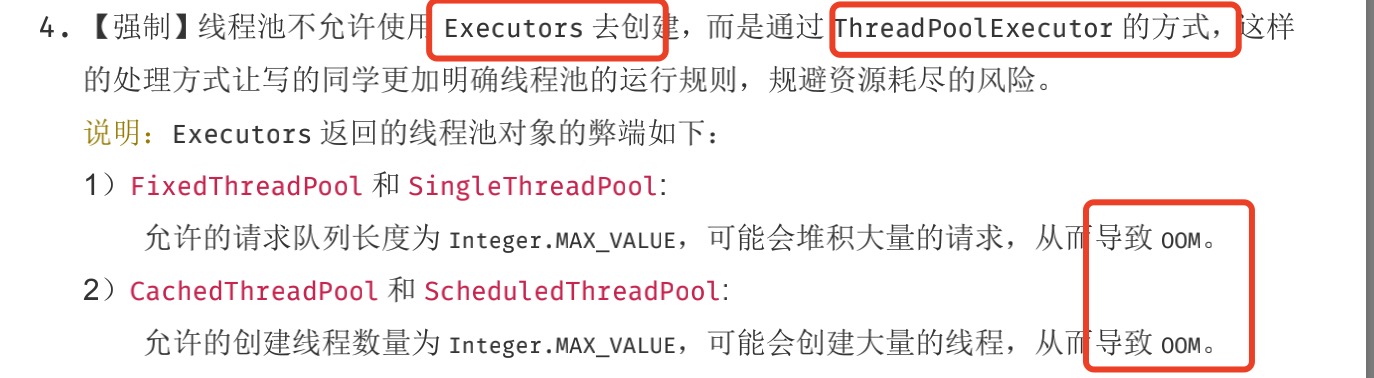

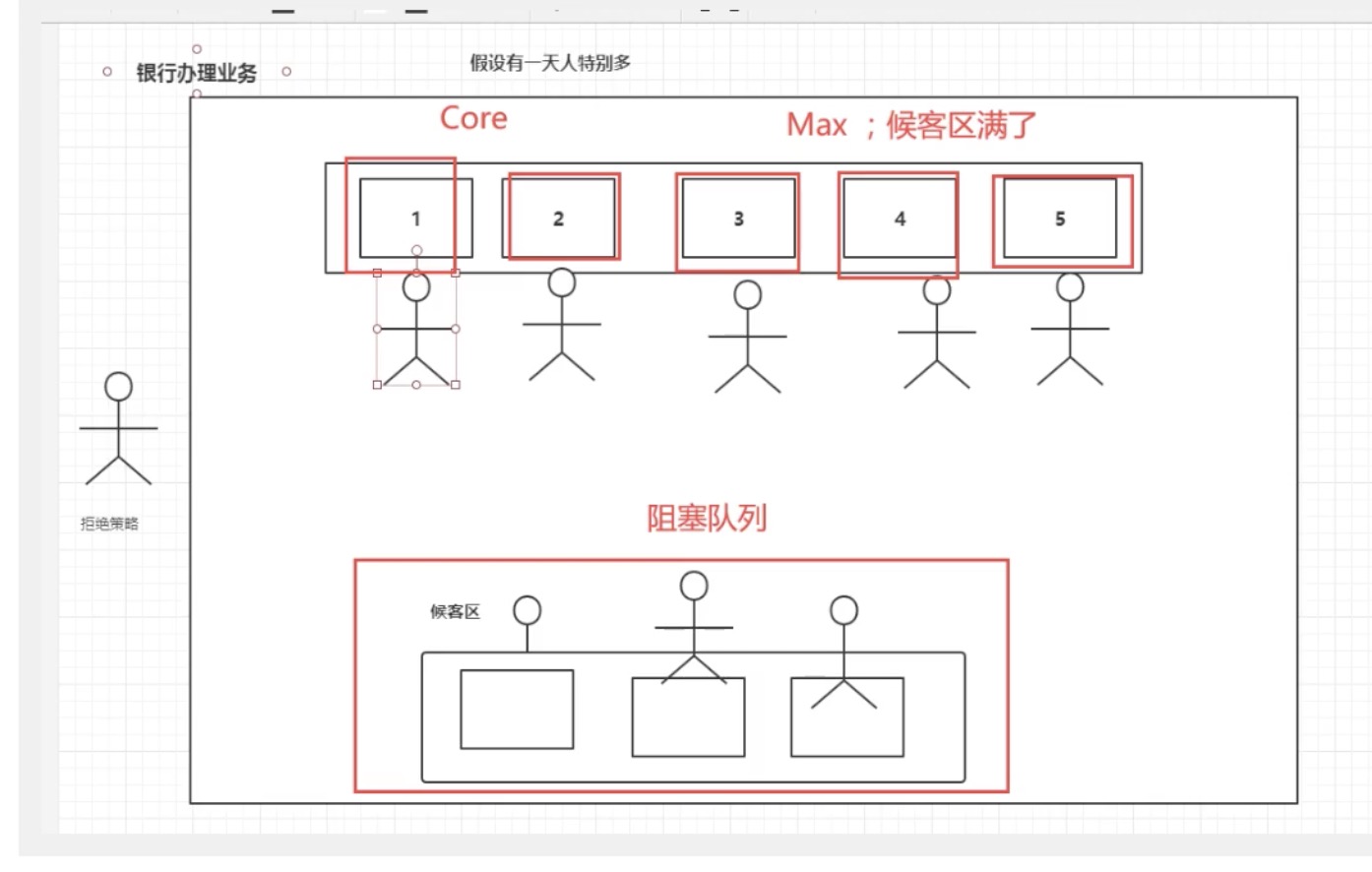

8, Thread pool (4 methods, 7 parameters and 4 rejection policies)

8.1 Four methods (unsafe; create the thread pool manually instead)

- newCachedThreadPool

Creates a cacheable thread pool. If the pool has more threads than it needs to process its tasks, idle threads (those that have not run a task for 60 seconds) are reclaimed. When the number of tasks increases, the pool adds new threads to process them. This pool does not limit its own size; the size depends entirely on the maximum number of threads the operating system (or JVM) can create.

- newFixedThreadPool

Creates a fixed-size thread pool. Each time a task is submitted, a thread is created until the pool reaches its maximum size. Once the pool reaches the maximum, its size stays unchanged. If a thread ends because of an exception during execution, the pool adds a new thread to replace it.

- newScheduledThreadPool

Creates a thread pool whose maximum size is unbounded. This pool supports running tasks after a delay and periodically.

- newSingleThreadExecutor

Creates a single-threaded thread pool. Only one thread works in this pool, which is equivalent to a single thread executing all tasks serially. If the only thread ends abnormally, a new thread replaces it. This pool guarantees that all tasks are executed in the order they are submitted.

//Executors tool class: 3 methods

public class Demo1 {

public static void main(String[] args) {

//Single thread

// ExecutorService threadPool = Executors.newSingleThreadExecutor();

//Create a fixed thread pool size

// ExecutorService threadPool = Executors.newFixedThreadPool(5);

//Dynamic size

ExecutorService threadPool = Executors.newCachedThreadPool();

try {

for (int i = 0; i < 10; i++) {

//Create threads through thread pool

threadPool.execute(()->{

System.out.println(Thread.currentThread().getName()+"ok");

});

}

} catch (Exception e) {

e.printStackTrace();

} finally {

//When the thread pool runs out, the program ends. Close the thread pool

threadPool.shutdown();

}

}

}

The difference between submit and execute

| Method name | Return value | Task interface | Exception propagation to the caller |
|---|---|---|---|
| execute | void | Runnable | The exception is not propagated to the caller |
| submit | Future | Callable and Runnable | An exception thrown by the task can be caught by calling Future.get() |

public static void main(String[] args) throws InterruptedException, ExecutionException {

List<Future<String>> results = new ArrayList<Future<String>>();

ExecutorService eService = Executors.newCachedThreadPool();

for (int i = 0; i < 100; i++) {

// eService.execute(new Runnable() {

// @Override

// public void run() {

// // TODO Auto-generated method stub

// }

// });

results.add(eService.submit(new TaskCallable()));

}

for (Future<String> future : results) {

System.out.println(future.get());

}

}

public static class TaskCallable implements Callable<String> {

@Override

public String call() throws Exception {

String tid = String.valueOf(Thread.currentThread().getId());

System.out.printf("Thread#%s : in call\n", tid);

return tid;

}

}
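The last column of the table is easier to see with a failing task. The following is a minimal sketch, not part of the original demo; the class name `SubmitExceptionSketch` and the message "boom" are invented for illustration. It shows that an exception thrown inside a submitted task reaches the caller wrapped in an ExecutionException when Future.get() is called.

```java
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

public class SubmitExceptionSketch {

    // Submits a task that always fails and returns the message the caller sees.
    public static String failingSubmit() throws InterruptedException {
        ExecutorService pool = Executors.newSingleThreadExecutor();
        try {
            Callable<String> task = () -> {
                throw new IllegalStateException("boom");
            };
            Future<String> future = pool.submit(task);
            try {
                future.get();
                return "no exception";
            } catch (ExecutionException e) {
                // The task's own exception is available through getCause()
                return e.getCause().getMessage();
            }
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(failingSubmit());
    }
}
```

With execute, the same exception would end up in the worker thread's uncaught exception handler, and the submitting code would have no Future to inspect.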

The Alibaba Java Development Manual explains that using Executors can easily lead to OOM. It recommends creating thread pools manually with ThreadPoolExecutor.

8.2 seven parameters: ThreadPoolExecutor

public static void main(String[] args) {

//Custom thread pool

ExecutorService threadPool = new ThreadPoolExecutor(

2, //Core thread pool size

5, //Maximum pool size

3, //Keep-alive time: idle threads above the core size are released after this long

TimeUnit.SECONDS, //Timeout unit

new LinkedBlockingDeque<>(3), //Blocking queue

Executors.defaultThreadFactory(), //Thread factory, creating threads, generally do not need to move

new ThreadPoolExecutor.AbortPolicy()); //Reject policy

/**

* 1,new ThreadPoolExecutor.AbortPolicy() Rejects the task and throws RejectedExecutionException

* 2,new ThreadPoolExecutor.CallerRunsPolicy() The task runs in the thread that submitted it

* 3,new ThreadPoolExecutor.DiscardPolicy() Drops the task silently; no exception is thrown

* 4,new ThreadPoolExecutor.DiscardOldestPolicy() When the queue is full, drops the oldest queued task and retries the new one; no exception is thrown

*/

try {

//Maximum load = queue capacity new LinkedBlockingDeque<>(3) + maximum pool size 5 = 8; a 9th task can be rejected

for (int i = 1; i <= 9; i++) {

//Create threads through thread pool

threadPool.execute(()->{

System.out.println(Thread.currentThread().getName()+" ok");

});

}

} catch (Exception e) {

e.printStackTrace();

} finally {

//When the thread pool runs out, the program ends. Close the thread pool

threadPool.shutdown();

}

}

Create thread pool manually

- **@Configuration:** when the Spring container starts, it loads classes annotated with @Configuration and processes their methods annotated with @Bean.

- **@Bean:** a method-level annotation, used mainly in classes annotated with @Configuration or @Component. The id of the registered bean is the method name.

- **@PropertySource:** loads the specified configuration file. value is the configuration file to load. ignoreResourceNotFound controls whether a missing file is ignored; the default is false. If true, the program does not report an error when the configuration file does not exist. In real project development, it is best to set it to false. If a property in application.properties duplicates a property in the custom configuration file, the value from the custom configuration file is overridden by the value in application.properties.

- **@Slf4j:** Lombok's logging annotation. After adding it, you can call log directly to output logs at any level.

- **@Value:** reads a property from the configuration file and assigns its value to the annotated field.

ExecutorConfig

@Configuration

@EnableAsync

public class ExecutorConfig {

@Bean(name = "asyncTaskExecutor")

public Executor taskExecutor() {

ThreadPoolTaskExecutor executor = new ThreadPoolTaskExecutor();

executor.setCorePoolSize(10);

executor.setMaxPoolSize(20);

executor.setQueueCapacity(200);

executor.setKeepAliveSeconds(60);

executor.setThreadNamePrefix("taskExecutor-");

executor.setRejectedExecutionHandler(new ThreadPoolExecutor.CallerRunsPolicy());

return executor;

}

}

ThreadPoolTaskExecutor is usually used together with @Async. Adding the @Async annotation to a method means that the method is called asynchronously. Putting the method name or bean name of the thread pool in @Async, for example @Async("asyncTaskExecutor"), makes the asynchronous call use the configuration of that thread pool. Be sure to add the @EnableAsync annotation on the startup class so that @Async takes effect, or add **@EnableAsync** on ExecutorConfig.

@Slf4j

@Component

public class ThreadTest {

/**

* Loops 10 times in one thread, sleeping 10 seconds after each iteration.

*/

@Async("asyncTaskExecutor")

public void ceshi3() {

for (int i = 0; i < 10; i++) {

log.info("ceshi3: " + i);

try {

Thread.sleep(2000 * 5);

} catch (InterruptedException e) {

e.printStackTrace();

}

}

}

}

Alternatively, create a custom thread pool by implementing AsyncConfigurer

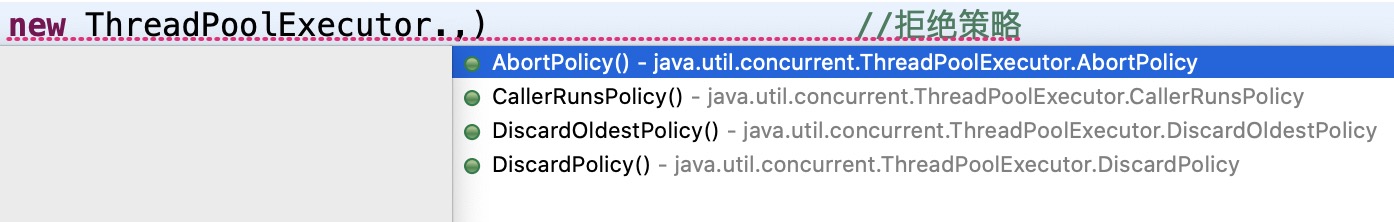

Four rejection strategies

- ThreadPoolExecutor.AbortPolicy discards the task and throws a RejectedExecutionException (default).

- ThreadPoolExecutor.DiscardPolicy discards the task without throwing an exception.

- ThreadPoolExecutor.DiscardOldestPolicy discards the task at the head of the queue and then tries to submit the new task again.

- ThreadPoolExecutor.CallerRunsPolicy runs the task in the calling thread.
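The four strategies can be observed with a deliberately saturated pool. The sketch below is an illustration only: the class `RejectionPolicySketch`, the helper `submitWhenSaturated`, and the pool of one thread plus one queue slot are assumptions chosen so that the third submission is always the one that gets rejected.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.Executors;
import java.util.concurrent.RejectedExecutionException;
import java.util.concurrent.RejectedExecutionHandler;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

public class RejectionPolicySketch {

    // Fills a pool of 1 thread + 1 queue slot, then submits one extra task.
    // Returns the thread name that ran the extra task, "not run", or "rejected".
    public static String submitWhenSaturated(RejectedExecutionHandler handler) {
        ThreadPoolExecutor pool = new ThreadPoolExecutor(
                1, 1, 0L, TimeUnit.SECONDS,
                new ArrayBlockingQueue<>(1),
                Executors.defaultThreadFactory(),
                handler);
        CountDownLatch release = new CountDownLatch(1);
        Runnable blocker = () -> {
            try {
                release.await(); // keeps the worker busy until released
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        };
        String[] ranOn = {"not run"};
        try {
            pool.execute(blocker); // occupies the only worker thread
            pool.execute(blocker); // occupies the only queue slot
            pool.execute(() -> ranOn[0] = Thread.currentThread().getName());
            return ranOn[0];
        } catch (RejectedExecutionException e) {
            return "rejected";
        } finally {
            release.countDown();
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        System.out.println(submitWhenSaturated(new ThreadPoolExecutor.AbortPolicy()));
        System.out.println(submitWhenSaturated(new ThreadPoolExecutor.CallerRunsPolicy()));
        System.out.println(submitWhenSaturated(new ThreadPoolExecutor.DiscardPolicy()));
    }
}
```

CallerRunsPolicy is the only one of the four that slows the submitter down instead of losing work, which is why it is a common choice when tasks must not be dropped.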

How to choose the maximum number of threads (tuning):

- CPU-intensive: use as many threads as there are CPU cores, which keeps CPU efficiency highest

System.out.println(Runtime.getRuntime().availableProcessors()); //View CPU cores

- IO-intensive: count the tasks in the program that consume a lot of IO, and set the maximum to about twice that number
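The two rules of thumb above can be written down as a tiny helper. This is only a sketch of the heuristic as stated here; the class `PoolSizeSketch` and its method names are hypothetical, and real workloads should be measured before settling on a number.

```java
public class PoolSizeSketch {

    // CPU-intensive work: one thread per core.
    public static int cpuBound(int cores) {
        return cores;
    }

    // IO-intensive work: about twice the number of IO-heavy tasks.
    public static int ioBound(int ioHeavyTasks) {
        return ioHeavyTasks * 2;
    }

    public static void main(String[] args) {
        int cores = Runtime.getRuntime().availableProcessors();
        System.out.println("CPU-bound max threads: " + cpuBound(cores));
        System.out.println("IO-bound max threads for 15 IO-heavy tasks: " + ioBound(15));
    }
}
```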

8.3 ThreadPoolExecutor and ForkJoinPool

- ForkJoinPool is suited to tasks that are numerous, dependent on each other, generated by other tasks, short, and almost never blocking (i.e., compute-intensive)

- ThreadPoolExecutor is suited to tasks that are few, independent, externally generated, long-running, and sometimes blocking

- Consider using ForkJoinPool when you need to handle recursive divide and conquer algorithms.

- Carefully set the threshold below which a task is no longer split; it has an impact on performance.

- Some features in Java 8 use the common pool in ForkJoinPool. In some cases, you need to adjust the default number of threads in that pool.

ThreadPool (TP) and ForkJoinPool (FJ) target different use cases. The main difference is the number of queues each executor uses, which determines which type of problem suits which executor.

An FJ executor has n (the parallelism level) separate concurrent queues (double-ended queues), while a TP executor has only one concurrent queue (these queues / double-ended queues may be custom implementations that do not follow the JDK Collections API). As a result, FJ executors perform better when you generate a large number of tasks (usually with relatively short running time), because independent queues minimize contention and the occasional steal helps load balancing. In TP, because there is only one queue, there is contention every time work is taken off the queue, which becomes a relative bottleneck and limits performance.

Conversely, if there are relatively few long-running tasks, the single queue in TP is no longer a performance bottleneck. However, the n independent queues and relatively frequent steal attempts now become the bottleneck of FJ, because there may be many futile steal attempts, which add overhead.

Four functional interfaces

Function (function interface)

public static void main(String[] args) {

// Function interface: one input parameter and one output

Function<String, String> function = new Function<String, String>() {

@Override

public String apply(String t) {

// TODO Auto-generated method stub

return t;

}

};

System.out.println(function.apply("123"));

}

As long as it is a functional interface, it can be simplified with lambda expressions

public static void main(String[] args) {

Function<String, String> function = (str)->{return str;};

System.out.println(function.apply("123"));

}

Predicate (assertion interface)

public static void main(String[] args) {

// Assertive interface: there is an input parameter, and the return value can only be Boolean!

//Judge whether the string is empty

Predicate<String> predicate = new Predicate<String>() {

@Override

public boolean test(String str) {

return str.isEmpty();

}

};

System.out.println(predicate.test("123"));

}

Simplifying with lambda expression

public static void main(String[] args) {

// Assertive interface: there is an input parameter, and the return value can only be Boolean!

//Judge whether the string is empty

Predicate<String> predicate = (str)->{return str.isEmpty();};

System.out.println(predicate.test("123"));

}

Consumer (consumer interface)

public static void main(String[] args) {

//The Consumer consumer interface has only input and no return value

Consumer<String> consumer = new Consumer<String>() {

@Override

public void accept(String t) {

System.out.println(t);

}

};

consumer.accept("123");

}

Simplifying with lambda expression

public static void main(String[] args) {

Consumer<String> consumer = (str)->{System.out.println(str);};

consumer.accept("123");

}

Supplier (supply interface)

public static void main(String[] args) {

//There are no parameters, only return values

Supplier<String> supplier = new Supplier<String>() {

@Override

public String get() {

System.out.println("123");

return "abc";

}

};

System.out.println(supplier.get());

}

Simplifying with lambda expression

public static void main(String[] args) {

//There are no parameters, only return values

Supplier<String> supplier = ()->{return "abc";};

System.out.println(supplier.get());

}

9, lambda expression, chain programming, functional interface, stream flow calculation

@Data

@NoArgsConstructor

@AllArgsConstructor

public class User {

private int id;

private String name;

private int age;

}

/**

* 1,ID Must be even

* 2,Age must be greater than 23

* 3,User name to uppercase

* 4,User names are sorted alphabetically backwards

* 5,Output only one user

*/

public class Test {

public static void main(String[] args) {

User u1 = new User(1, "a", 21);

User u2 = new User(2, "b", 22);

User u3 = new User(3, "c", 23);

User u4 = new User(4, "d", 24);

User u5 = new User(6, "e", 25);

List<User> list = Arrays.asList(u1,u2,u3,u4,u5);

// lambda expression, chain programming, functional interface, stream flow calculation

list.stream()

.filter((u)->{return u.getId()%2==0;})

.filter((u)->{return u.getAge()>23;})

.map((u)->{return u.getName().toUpperCase();})

.sorted((uu1,uu2)->{return uu2.compareTo(uu1);})

.limit(1)

.forEach(System.out::println);

}

}

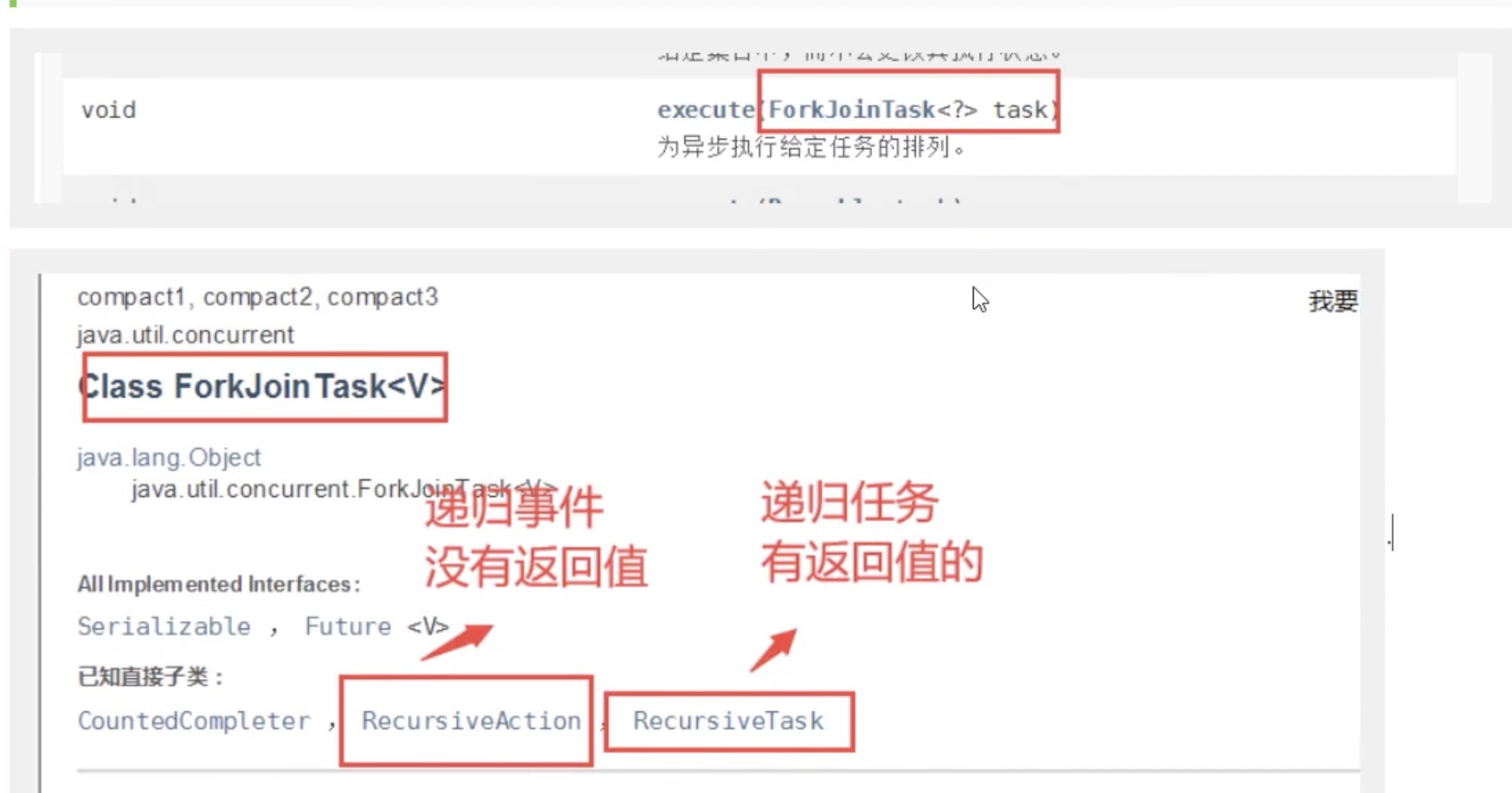

10, ForkJoin

Use in case of large amount of data

/**

* Summation task

* How to use ForkJoin

* 1,Execute through a ForkJoinPool

* 2,Submit the task with forkJoinPool.execute(ForkJoinTask<?> task)

* 3,The computation class extends RecursiveTask

*/

public class ForkJoinDemo extends RecursiveTask<Long>{

private Long start; // start

private Long end; //end

//critical value

private Long temp = 10000L;

public ForkJoinDemo(Long start, Long end) {

this.start = start;

this.end = end;

}

//computing method

@Override

protected Long compute() {

if((end-start)<temp) { // small enough range: sum directly instead of splitting

Long sum = 0L;

for (Long i = start; i <= end; i++) {

sum = sum + i;

}

return sum;

}else { // forkjoin

long middle = (start+end)/2; //Intermediate value

ForkJoinDemo task1 = new ForkJoinDemo(start, middle);

task1.fork();

ForkJoinDemo task2 = new ForkJoinDemo(middle+1, end);

task2.fork();

return task1.join()+task2.join();

}

}

}

public class Test {

public static void main(String[] args) throws InterruptedException, ExecutionException {

test3();

}

// sum500000000500000000 time 3055

public static void test1() {

long sum = 0L;

long start = System.currentTimeMillis();

for (Long i = 1L; i <= 10_0000_0000 ; i++) {

sum = sum + i;

}

long end = System.currentTimeMillis();

System.out.println("sum"+sum+"time"+(end-start));

}

// Forkjoin sum500000000500000000 time 5874

public static void test2() throws InterruptedException, ExecutionException {

long start = System.currentTimeMillis();

ForkJoinPool forkJoinPool = new ForkJoinPool();

ForkJoinTask<Long> task = new ForkJoinDemo(0L, 10_0000_0000L);

ForkJoinTask<Long> submit = forkJoinPool.submit(task);

Long sum = submit.get();

long end = System.currentTimeMillis();

System.out.println("sum"+sum+"time"+(end-start));

}

// sum500000000500000000 time 192

public static void test3() {

//Stream parallel stream

long start = System.currentTimeMillis();

Long sum = LongStream.rangeClosed(0L, 10_0000_0000L).parallel().reduce(0, Long::sum);

long end = System.currentTimeMillis();

System.out.println("sum"+sum+"time"+(end-start));

}

}

11, Future

Usage scenario

- Compute intensive scene

- Handling large amounts of data

- Remote method call, etc

5 methods

The Future interface has the methods shown in the following table, which can obtain the information related to the currently executing task.

| Method | Description |
|---|---|
| boolean cancel(boolean mayInterruptIfRunning) | Attempts to cancel execution of the task |
| boolean isCancelled() | Returns true if the task was cancelled before it completed normally |
| boolean isDone() | Returns true if the task has completed, whether by normal termination, an exception, or cancellation |
| V get() | Waits for the task to end, then returns the result of type V |
| V get(long timeout, TimeUnit unit) | Gets the result, waiting at most the given timeout |
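The five methods in the table can be exercised with plain JDK classes, without Spring. The sketch below is illustrative: the class `FutureMethodsSketch` and the two tasks (one that finishes at once, one that never finishes on its own) are assumptions made to trigger each method's behavior.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.TimeoutException;

public class FutureMethodsSketch {

    public static String demo() throws Exception {
        ExecutorService pool = Executors.newFixedThreadPool(2);
        try {
            // A quick task: get() blocks until the result is ready
            Future<Integer> quick = pool.submit(() -> 1 + 1);
            int value = quick.get();
            boolean done = quick.isDone();

            // A task that waits on a latch nobody counts down
            CountDownLatch never = new CountDownLatch(1);
            Future<String> slow = pool.submit(() -> {
                never.await();
                return "late";
            });

            // get(timeout, unit) gives up with TimeoutException
            boolean timedOut = false;
            try {
                slow.get(100, TimeUnit.MILLISECONDS);
            } catch (TimeoutException e) {
                timedOut = true;
            }

            // cancel(true) interrupts the running task
            boolean cancelled = slow.cancel(true);

            return value + " " + done + " " + timedOut + " " + cancelled
                    + " " + slow.isCancelled() + " " + slow.isDone();
        } finally {
            pool.shutdownNow();
        }
    }

    public static void main(String[] args) throws Exception {
        System.out.println(demo());
    }
}
```

Note that isDone() also returns true for a cancelled task, which matches the table: completion covers normal termination, exceptions, and cancellation.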

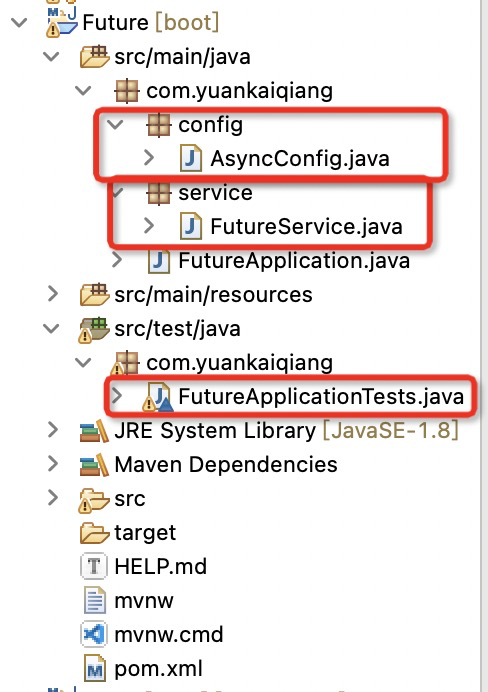

Test Future parallel processing

Test the difference between Future and normal processing. The following is the test directory structure

AsyncConfig

@Configuration

@EnableAsync

public class AsyncConfig implements AsyncConfigurer {

// Thread pool name prefix

private static final String threadNamePrefix = "Async-Service-";

@Override

@Bean(name = "taskExecutor")

public Executor getAsyncExecutor() {

ThreadPoolTaskExecutor taskExecutor = new ThreadPoolTaskExecutor();

// Number of core threads

taskExecutor.setCorePoolSize(8);

// Maximum number of threads

taskExecutor.setMaxPoolSize(16);

// Queue size

taskExecutor.setQueueCapacity(100);

// Thread pool name prefix

taskExecutor.setThreadNamePrefix(threadNamePrefix);

// Idle time of the thread (in seconds)

taskExecutor.setKeepAliveSeconds(3);

// On shutdown, wait for submitted tasks to complete before closing. The default value is false

taskExecutor.setWaitForTasksToCompleteOnShutdown(true);

// The core thread timed out and exited. The default value is false

taskExecutor.setAllowCoreThreadTimeOut(true);

// Rejection policy of the thread pool, used when no thread is available for a task

// CallerRunsPolicy: the calling thread (the thread submitting the task) handles the task

// AbortPolicy is the default; CallerRunsPolicy is set explicitly here

taskExecutor.setRejectedExecutionHandler(new ThreadPoolExecutor.CallerRunsPolicy());

taskExecutor.initialize();

return taskExecutor;

}

// exception handling

@Override

public AsyncUncaughtExceptionHandler getAsyncUncaughtExceptionHandler() {

return new MyAsyncUncaughtExceptionHandler();

}

class MyAsyncUncaughtExceptionHandler implements AsyncUncaughtExceptionHandler {

@Override

public void handleUncaughtException(Throwable ex, Method method, Object... params) {

System.out.println("class#method: " + method.getDeclaringClass().getName() + "#" + method.getName());

System.out.println("type : " + ex.getClass().getName());

System.out.println("exception : " + ex.getMessage());

}

}

}

FutureService

@Service

public class FutureService {

/**

* @Title: futureTest

* @Description: Asynchronous processing

* @Author yuankaiqiang

* @DateTime 2021-07-19 08:42:07

* @return

* @throws InterruptedException

*/

@Async("taskExecutor")

public Future<String> futureTest() throws InterruptedException {

System.out.println("Task execution start,Required: 1000 ms");

for (int i = 0; i < 10; i++) {

Thread.sleep(100);

System.out.println("do:" + i);

}

System.out.println("Complete the task");

return new AsyncResult<>(Thread.currentThread().getName());

}

/**

* @Title: ordinaryTest

* @Description: General treatment

* @Author yuankaiqiang

* @DateTime 2021-07-19 08:41:53

* @throws InterruptedException

*/

public void ordinaryTest(CountDownLatch latch) throws InterruptedException {

System.out.println("Task execution start,Required: 1000 ms");

for (int i = 0; i < 10; i++) {

Thread.sleep(100);

System.out.println("do:" + i);

}

System.out.println("Complete the task");

latch.countDown();

}

}

@SpringBootTest

@Resource

private FutureService futureService;

@Test

void asyncTest() throws InterruptedException, ExecutionException {

long start = System.currentTimeMillis();

System.out.println("start");

// Time consuming task

Future<String> future = futureService.futureTest();

// Another time-consuming task

Thread.sleep(500);

System.out.println("Another time-consuming task requires 500 ms");

String s = future.get();

System.out.println("Calculation result output:" + s);

System.out.println("Total time:" + (System.currentTimeMillis() - start));

}

@Test

void ordinaryTest() throws InterruptedException, ExecutionException {

// To wait for other threads to execute before ending the main thread

final CountDownLatch latch = new CountDownLatch(1);

long start = System.currentTimeMillis();

System.out.println("start");

new Thread(()-> {

try {

futureService.ordinaryTest(latch);

} catch (InterruptedException e) {

e.printStackTrace();

}

}).start();

latch.await();

// Another time-consuming task

Thread.sleep(500);

System.out.println("Another time-consuming task requires 500 ms");

System.out.println("Calculation result output:" + Thread.currentThread().getName());

System.out.println("Total time:" + (System.currentTimeMillis() - start));

}

Run test

// Total time 1039

start

Task execution start,Required: 1000 ms

do:0

do:1

do:2

do:3

Another time-consuming task requires 500 ms

do:4

do:5

do:6

do:7

do:8

do:9

Complete the task

Calculation result output:Async-Service-1

Total time: 1039

// The following are the results of the general test, with a total time of 1532

start

Task execution start,Required: 1000 ms

do:0

do:1

do:2

do:3

do:4

do:5

do:6

do:7

do:8

do:9

Complete the task

Another time-consuming task requires 500 ms

Calculation result output:main

Total time: 1532

It can be seen that the Future version takes less than 1500 ms, while the ordinary version takes more than 1500 ms. With Future, time-consuming task 1 and time-consuming task 2 are executed in parallel. This is the advantage of the Future pattern: doing other work during the waiting time makes full use of the time.

Notes

- Asynchronous methods must not be declared static

- The asynchronous class must use the @Component annotation (or another stereotype annotation), otherwise Spring cannot scan it

- The caller and the asynchronous method it calls cannot be in the same class

- The class must be injected with annotations such as @Autowired or @Resource. You cannot create the object manually with new

- If the SpringBoot framework is used, the @EnableAsync annotation must be added to the startup class

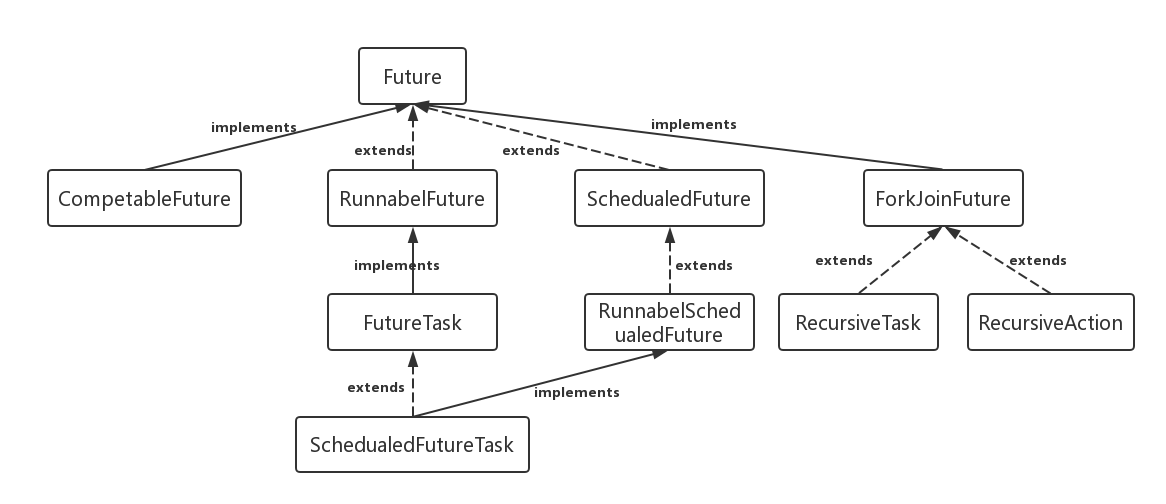

Class diagram structure of Future

The Future interface defines five main methods. RunnableFuture and ScheduledFuture extend this interface, and CompletableFuture and ForkJoinTask implement it.

RunnableFuture

This interface extends both the Future interface and the Runnable interface. After the run() method executes successfully, the result can be accessed through Future. The implementation class of this interface is FutureTask, a cancellable asynchronous computation. This class provides a basic implementation of Future, and the demo later also uses it. It lets you start and cancel a computation, query whether the computation has completed, and retrieve the result. The result can only be retrieved after the computation has completed; if it has not, the get method blocks. Once the computation has completed, it cannot be restarted or cancelled, unless it is run with the runAndReset method.

FutureTask can be used to wrap a Callable or Runnable object. Because it implements the Runnable interface, it can be passed to an Executor for execution. Besides serving as a standalone class, it provides protected functionality that is useful when creating customized task classes.

ScheduledFuture

This interface represents a delayed action that can be cancelled. Usually, a ScheduledFuture is the result of scheduling a task with ScheduledExecutorService.
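As a minimal sketch of the description above, the following wraps a Callable in a FutureTask and runs it on a plain thread. The class name `FutureTaskSketch` and the computed value are invented for illustration.

```java
import java.util.concurrent.FutureTask;

public class FutureTaskSketch {

    public static int demo() throws Exception {
        // FutureTask wraps a Callable and is itself a Runnable
        FutureTask<Integer> task = new FutureTask<Integer>(() -> 6 * 7);

        // Because it is a Runnable, it can be handed to a Thread (or an Executor)
        Thread worker = new Thread(task, "worker");
        worker.start();

        // get() blocks until the computation has completed
        int result = task.get();

        // Running a completed task again has no effect; the result is unchanged
        task.run();
        return result + (task.isDone() ? 0 : 1);
    }

    public static void main(String[] args) throws Exception {
        System.out.println(demo());
    }
}
```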

@Component

public class SchedulerService {

@Bean

public ThreadPoolTaskScheduler threadPoolTaskScheduler() {

return new ThreadPoolTaskScheduler();

}

}

@RestController

@RequestMapping("schedual")

public class SchedualFutureRest {

@Autowired

private ThreadPoolTaskScheduler threadPoolTaskScheduler;

/**

* There is a cancel in ScheduledFuture to stop scheduled tasks.

*/

private ScheduledFuture<?> future;

/**

* @Title: startCron

* @Description: Start scheduled task

* @Author yuankaiqiang

* @DateTime 2021-07-18 17:19:08

*/

@RequestMapping("startCron")

public void startCron() {

future = threadPoolTaskScheduler.schedule(new Runnable() {

@Override

public void run() {

System.out.println("hello world!!!");

}

// The cron expression below runs the task once every second

}, new CronTrigger("0/1 * * * * *"));

}

/**

* @Title: getCronStatus

* @Description: Get scheduled task

* @Author yuankaiqiang

* @DateTime 2021-07-18 17:27:17

*/

@RequestMapping("getCronStatus")

public boolean getCronStatus() {

// future is null until startCron has been called

return future != null && future.isDone();

}

/**

* @Title: stopCron

* @Description: Stop scheduled tasks

* @Author yuankaiqiang

* @DateTime 2021-07-18 17:27:40

*/

@RequestMapping("stopCron")

public boolean stopCron() {

// future is null until startCron has been called

return future != null && future.cancel(true);

}

}
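The same start / check / cancel cycle can be tried without Spring. The following is a minimal sketch that assumes only the JDK's ScheduledExecutorService. The class name `ScheduledFutureSketch`, the 50 ms period, and the 220 ms wait are arbitrary choices for illustration.

```java
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.ScheduledFuture;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

class ScheduledFutureSketch {

    // Schedules a periodic task, lets it run briefly, then cancels it.
    // Returns how many times the task ran before it was cancelled.
    static int runThenCancel() {
        ScheduledExecutorService scheduler = Executors.newSingleThreadScheduledExecutor();
        AtomicInteger ticks = new AtomicInteger();
        Runnable tick = () -> ticks.incrementAndGet();
        try {
            ScheduledFuture<?> future =
                    scheduler.scheduleAtFixedRate(tick, 0, 50, TimeUnit.MILLISECONDS);

            TimeUnit.MILLISECONDS.sleep(220);

            // A periodic task is never "done" on its own; it becomes done once cancelled.
            future.cancel(false); // false: do not interrupt a run that is in progress
            if (!future.isCancelled() || !future.isDone()) {
                throw new IllegalStateException("task should be cancelled and done");
            }
            return ticks.get();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return ticks.get();
        } finally {
            scheduler.shutdownNow();
        }
    }

    public static void main(String[] args) {
        System.out.println("runs before cancel: " + runThenCancel());
    }
}
```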

CompletableFuture

A Future that can be completed explicitly (by setting its value and status) and can be used as a CompletionStage, supporting dependent functions and actions that are triggered when it completes. When two or more threads try to complete or cancel the same CompletableFuture, only one of them succeeds.

- In Java 8, CompletableFuture provides a very powerful extension of Future. It simplifies asynchronous programming, supports a functional programming style, processes results through callbacks, and provides methods for transforming and combining CompletableFuture instances.

- It may represent an explicitly completed Future or a completion stage. It supports triggering some functions or executing some actions after the calculation is completed.

- It implements the Future and CompletionStage interfaces
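The points above can be sketched as follows. This is an illustrative example only: the class name `CompletableFutureSketch` and the values are made up, while `supplyAsync`, `thenCombine`, `thenApply`, `complete`, and `join` are standard CompletableFuture methods.

```java
import java.util.concurrent.CompletableFuture;

class CompletableFutureSketch {

    // Combines two asynchronous results and transforms the combined value via callbacks.
    static String pipeline() {
        CompletableFuture<Integer> price = CompletableFuture.supplyAsync(() -> 40);
        CompletableFuture<Integer> tax = CompletableFuture.supplyAsync(() -> 2);

        return price
                .thenCombine(tax, (p, t) -> p + t)    // runs when both futures are complete
                .thenApply(total -> "total=" + total) // transforms the combined result
                .join();                              // waits for the final value
    }

    // Explicit completion: only the first complete() call takes effect.
    static boolean onlyOneCompletionWins() {
        CompletableFuture<String> future = new CompletableFuture<>();
        boolean first = future.complete("first");
        boolean second = future.complete("second");
        return first && !second && "first".equals(future.join());
    }

    public static void main(String[] args) {
        System.out.println(pipeline());
        System.out.println(onlyOneCompletionWins());
    }
}
```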

ForkJoinTask

ForkJoinTask is the abstract base class for tasks that run within a ForkJoinPool. A ForkJoinTask is a thread-like entity, but it is much lighter than a normal thread. A large number of tasks and subtasks can be hosted by a small number of real threads in the ForkJoinPool, at the price of some usage restrictions.
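As a rough illustration of how many small tasks are hosted by a few pool threads, the sketch below sums a range of numbers with RecursiveTask, a standard ForkJoinTask subclass. The class name `ForkJoinSumSketch` and the threshold of 10,000 are assumptions chosen for the example.

```java
import java.util.concurrent.ForkJoinPool;
import java.util.concurrent.RecursiveTask;

class ForkJoinSumSketch extends RecursiveTask<Long> {

    private static final long THRESHOLD = 10_000; // below this size, sum sequentially

    private final long from; // inclusive
    private final long to;   // inclusive

    ForkJoinSumSketch(long from, long to) {
        this.from = from;
        this.to = to;
    }

    @Override
    protected Long compute() {
        if (to - from <= THRESHOLD) {
            long sum = 0;
            for (long i = from; i <= to; i++) {
                sum += i;
            }
            return sum;
        }
        // Split the range in two: fork the left half, compute the right half in this thread.
        long mid = from + (to - from) / 2;
        ForkJoinSumSketch left = new ForkJoinSumSketch(from, mid);
        ForkJoinSumSketch right = new ForkJoinSumSketch(mid + 1, to);
        left.fork();
        long rightSum = right.compute();
        return left.join() + rightSum;
    }

    // Sums 1..n using the common ForkJoinPool.
    static long sum(long n) {
        return ForkJoinPool.commonPool().invoke(new ForkJoinSumSketch(1, n));
    }

    public static void main(String[] args) {
        System.out.println(sum(1_000_000)); // 500000500000
    }
}
```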

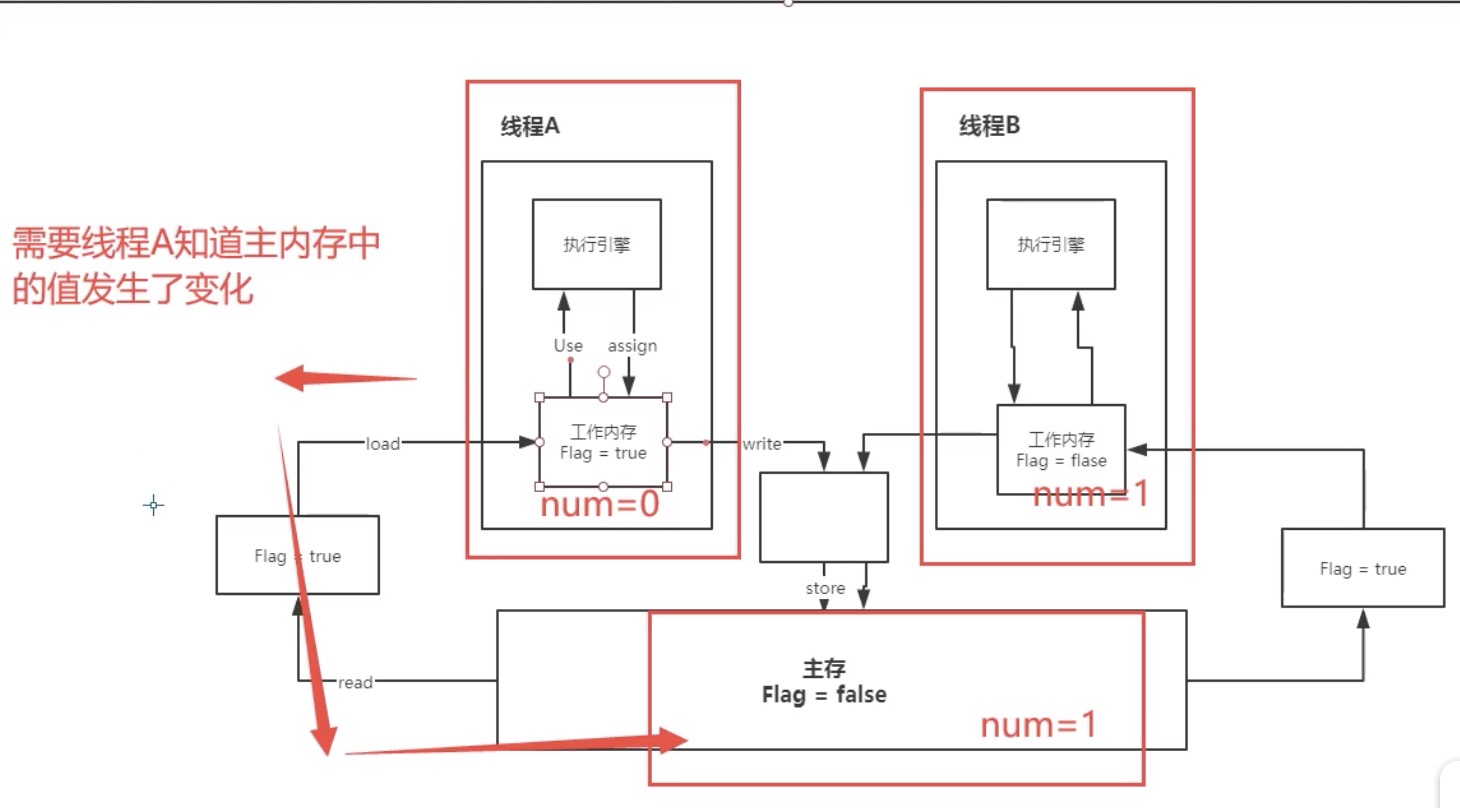

12, JMM

There are eight memory interaction operations. The virtual machine implementation must ensure that each operation is atomic and indivisible (for variables of type double and long, some platforms are allowed exceptions for the load, store, read and write operations).

- lock: acts on a variable in main memory. It marks the variable as exclusively owned by one thread

- unlock: acts on a variable in main memory. It releases a locked variable so that the variable can be locked by other threads

- read: acts on a variable in main memory. It transfers the value of the variable from main memory to the thread's working memory for the subsequent load operation

- load: acts on a variable in working memory. It puts the value obtained by the read operation into the variable copy in working memory

- use: acts on a variable in working memory. It passes the value of the variable in working memory to the execution engine. The virtual machine performs this operation whenever it encounters an instruction that needs the value of the variable

- assign: acts on a variable in working memory. It puts a value received from the execution engine into the variable copy in working memory

- store: acts on a variable in working memory. It transfers the value of the variable from working memory to main memory for the subsequent write operation

- write: acts on a variable in main memory. It puts the value that the store operation obtained from working memory into the variable in main memory

JMM formulates the following rules for the use of these eight operations:

- read and load, and store and write, are not allowed to appear alone. That is, a read must be followed by a load, and a store must be followed by a write

- A thread is not allowed to discard its latest assign operation. That is, after a variable has changed in working memory, the change must be synchronized back to main memory

- A thread is not allowed to synchronize data from working memory back to main memory if no assign has occurred

- A new variable can only be born in main memory. Working memory is not allowed to use an uninitialized variable directly. This means that an assign or load operation must be performed on a variable before a use or store operation is performed on it

- Only one thread can lock a variable at a time. The same thread may lock it several times, and it must then perform the same number of unlock operations to release it

- When a variable is locked, its value in working memory is cleared. Before the execution engine uses the variable, a load or assign operation must be performed again to initialize its value

- A variable that is not locked cannot be unlocked, and a thread cannot unlock a variable that is locked by another thread

- Before a variable is unlocked, it must be synchronized back to main memory

With these eight operations and their rules, plus some special rules for volatile, it is possible to determine which operations are thread safe and which are not. However, the rules are so complex that they are hard to apply directly in practice. Therefore the analysis is usually not done with the rules above. More often, Java's happens-before rules are used instead.

import java.util.concurrent.TimeUnit;

public class JMMDemo {

// With volatile, thread A sees the update to num and its loop ends

private volatile static int num = 0;

// Without volatile, thread A may never see the update and may spin forever

// private static int num = 0;

public static void main(String[] args) {

// Thread A spins until it observes that num has been modified

new Thread(()-> {

while(num==0) {

}

},"A").start();

try {

TimeUnit.SECONDS.sleep(1);

} catch (InterruptedException e) {

// TODO Auto-generated catch block

e.printStackTrace();

}

num = 1;

System.out.println(num);

}

}

Problem: without volatile, thread A does not know that the value in main memory has been modified, so its loop may never end

13, volatile

volatile is a lightweight synchronization mechanism provided by the Java virtual machine

- Ensures visibility

// Adding volatile ensures visibility

private volatile static int num = 0;

- Atomicity is not guaranteed

public class JMMDemo {

// volatile does not guarantee atomicity: num++ is a read, an add, and a write

private volatile static int num = 0;

// Declaring add() as synchronized would guarantee atomicity

public static void add() {

num++;

}

public static void main(String[] args) {

// Theoretically, the result of num should be 20000

for (int i = 1; i <= 20; i++) {

new Thread(()->{

for (int j = 0; j < 1000; j++) {

add();

}

}).start();

}

while(Thread.activeCount()>2) { // wait until only main and one background thread remain (environment-dependent)

Thread.yield();

}

System.out.println(Thread.currentThread().getName()+" "+num);

}

}

Solution:

- Use Lock or synchronized to ensure atomicity

- Use the atomic classes under java.util.concurrent.atomic. Internally these classes rely on the Unsafe class, a very special class that modifies values directly in memory through low-level CAS operations.

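import java.util.concurrent.atomic.AtomicInteger;

public class JMMDemo {

// AtomicInteger makes the increment atomic without a lock

private volatile static AtomicInteger num = new AtomicInteger();

// synchronized is not needed here: getAndIncrement() is atomic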

public static void add() {

num.getAndIncrement();

}

public static void main(String[] args) {

// Theoretically, the result of num should be 20000

for (int i = 1; i <= 20; i++) {

new Thread(()->{

for (int j = 0; j < 1000; j++) {

add();

}

}).start();

}

while(Thread.activeCount()>2) { // wait until only main and one background thread remain (environment-dependent)

Thread.yield();

}

System.out.println(Thread.currentThread().getName()+" "+num);

}

}
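For comparison, the first solution (synchronized) can be sketched as below. This is an illustrative variant, not the original demo: the class name `SynchronizedCounterSketch` is made up, and it waits for the workers with `join()` instead of polling `Thread.activeCount()`, an assumption made so that the result does not depend on how many background threads the environment starts.

```java
class SynchronizedCounterSketch {

    private static int num = 0;

    // synchronized makes the read-add-write of num++ a single atomic step.
    private static synchronized void add() {
        num++;
    }

    // Starts 20 threads that each call add() 1000 times and returns the final count.
    static int run() {
        num = 0;
        Thread[] workers = new Thread[20];
        for (int i = 0; i < workers.length; i++) {
            workers[i] = new Thread(() -> {
                for (int j = 0; j < 1000; j++) {
                    add();
                }
            });
            workers[i].start();
        }
        for (Thread worker : workers) {
            try {
                worker.join(); // join() also makes the worker's writes visible to this thread
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }
        return num;
    }

    public static void main(String[] args) {
        System.out.println(run()); // 20000
    }
}
```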

- Prohibits instruction reordering

Reads and writes of a volatile variable insert memory barriers, which prevent instructions from being reordered across them.
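A common place where this matters is the double-checked locking singleton. The sketch below is an illustration that is not in the original notes, and the class name `LazySingletonSketch` is made up. Without volatile, the write to `instance` could be reordered before the constructor finishes, so another thread might see a partially constructed object.

```java
class LazySingletonSketch {

    // volatile forbids reordering "publish the reference" before "finish the constructor".
    private static volatile LazySingletonSketch instance;

    private LazySingletonSketch() {
    }

    static LazySingletonSketch getInstance() {
        LazySingletonSketch local = instance; // first check, no lock
        if (local == null) {
            synchronized (LazySingletonSketch.class) {
                local = instance;             // second check, under the lock
                if (local == null) {
                    local = new LazySingletonSketch();
                    instance = local;
                }
            }
        }
        return local;
    }

    public static void main(String[] args) {
        System.out.println(getInstance() == getInstance()); // true
    }
}
```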

14, Lock

synchronized

//synchronized

public class Demo1 {

public static void main(String[] args) {

Phone phone = new Phone();

new Thread(()->{

phone.sms();

},"A").start();

new Thread(()->{

phone.sms();

},"B").start();

}

}

class Phone {

public synchronized void sms() {

System.out.println(Thread.currentThread().getName()+"sms");

call();

}

public synchronized void call() {

System.out.println(Thread.currentThread().getName()+"call");

}

}

Lock

import java.util.concurrent.locks.Lock;

import java.util.concurrent.locks.ReentrantLock;

public class Demo2 {

public static void main(String[] args) {

Phone2 phone = new Phone2();

new Thread(()->{

phone.sms();

},"A").start();

new Thread(()->{

phone.sms();

},"B").start();

}

}

class Phone2 {

Lock lock = new ReentrantLock();

public void sms() {

// Every lock() must be paired with an unlock(), otherwise the lock is never released and other threads block forever

lock.lock();

try {

System.out.println(Thread.currentThread().getName()+"sms");

call();

} catch(Exception e) {

e.printStackTrace();

} finally {

lock.unlock();

}

}

public void call() {

lock.lock();

try {

System.out.println(Thread.currentThread().getName()+"call");

} catch(Exception e) {

e.printStackTrace();

} finally {

lock.unlock();

}

}

}
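Both demos work because synchronized and ReentrantLock are reentrant: a thread that already holds the lock can acquire it again. The sketch below makes this visible through `ReentrantLock.getHoldCount()`. The class name `ReentrancySketch` and its methods are made up for illustration.

```java
import java.util.concurrent.locks.ReentrantLock;

class ReentrancySketch {

    private final ReentrantLock lock = new ReentrantLock();

    // Acquires the lock, then calls inner(), which acquires the same lock again.
    int outer() {
        lock.lock();
        try {
            return inner();
        } finally {
            lock.unlock();
        }
    }

    // Returns how many times the current thread holds the lock at this point.
    int inner() {
        lock.lock();
        try {
            return lock.getHoldCount();
        } finally {
            lock.unlock();
        }
    }

    boolean isFree() {
        return !lock.isLocked();
    }

    public static void main(String[] args) {
        ReentrancySketch sketch = new ReentrancySketch();
        System.out.println(sketch.outer());  // 2
        System.out.println(sketch.isFree()); // true
    }
}
```

The lock is only free again after the same number of unlock() calls as lock() calls, which is why each lock() sits directly before a try/finally.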

15, Deadlock

What is deadlock?

A deadlock occurs when multiple processes (or threads) each hold resources that the others need while requesting resources held by the others, and none of them releases what it holds until its own request is satisfied. As a result, none of them can make progress.

Causes of deadlock

A thread locks itself. To ensure synchronization and mutual exclusion between threads, we often need locks. Sometimes a thread acquires a lock and, before releasing it, requests the same lock again. With a non-reentrant lock the thread then suspends and waits for the lock to be released, but the lock is held by the thread itself, so it waits forever and a deadlock results.

Competition for resources. When the number of resources shared by multiple processes in the system, such as printers and shared queues, is not enough to meet the needs of the processes, the processes compete for them, which can lead to deadlock.

Improper order of process advancement. While the processes are running, requesting and releasing resources in an improper order can also lead to deadlock.

Deadlock condition

A deadlock can occur only if all four of the following conditions hold at the same time:

- Mutual exclusion: a resource cannot be shared and can be used by only one process at a time.

- Hold and wait: a process that already holds resources can request new resources without releasing the ones it holds.

- No preemption: resources that have been allocated cannot be forcibly taken away from the process that holds them.

- Circular wait: several processes in the system form a cycle in which each process waits for a resource held by the next one.

Deadlock prevention

- Use a static resource allocation strategy, in which a process requests all the resources it needs up front, to break the "hold and wait" condition;

- Allow a process to take over resources held by other processes, breaking the "no preemption" condition;

- Allocate resources in a fixed order to break the "circular wait" condition.
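The ordered allocation strategy can be sketched as follows. This is a hypothetical example: the `Account` class, its `id` field, and the amounts are made up, and the sketch assumes every account has a distinct id. Both threads always lock the account with the smaller id first, so a cycle of waiting threads cannot form.

```java
class OrderedLockingSketch {

    static class Account {
        final int id;
        long balance;

        Account(int id, long balance) {
            this.id = id;
            this.balance = balance;
        }
    }

    // Always locks the account with the smaller id first, whatever the transfer direction.
    static void transfer(Account from, Account to, long amount) {
        Account first = from.id < to.id ? from : to;
        Account second = first == from ? to : from;
        synchronized (first) {
            synchronized (second) {
                from.balance -= amount;
                to.balance += amount;
            }
        }
    }

    // Runs opposite transfers concurrently and returns the final balances.
    static long[] demo() {
        Account a = new Account(1, 1000);
        Account b = new Account(2, 1000);
        Thread t1 = new Thread(() -> {
            for (int i = 0; i < 10_000; i++) {
                transfer(a, b, 1);
            }
        });
        Thread t2 = new Thread(() -> {
            for (int i = 0; i < 10_000; i++) {
                transfer(b, a, 1);
            }
        });
        t1.start();
        t2.start();
        try {
            t1.join();
            t2.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return new long[] {a.balance, b.balance};
    }

    public static void main(String[] args) {
        long[] balances = demo();
        System.out.println(balances[0] + " " + balances[1]); // 1000 1000
    }
}
```

If each thread instead locked `from` first and `to` second, the two threads could each hold one account and wait for the other.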

View deadlock

Step 1: use jps -l to find the process number

Step 2: run jstack followed by the process number to find the deadlock

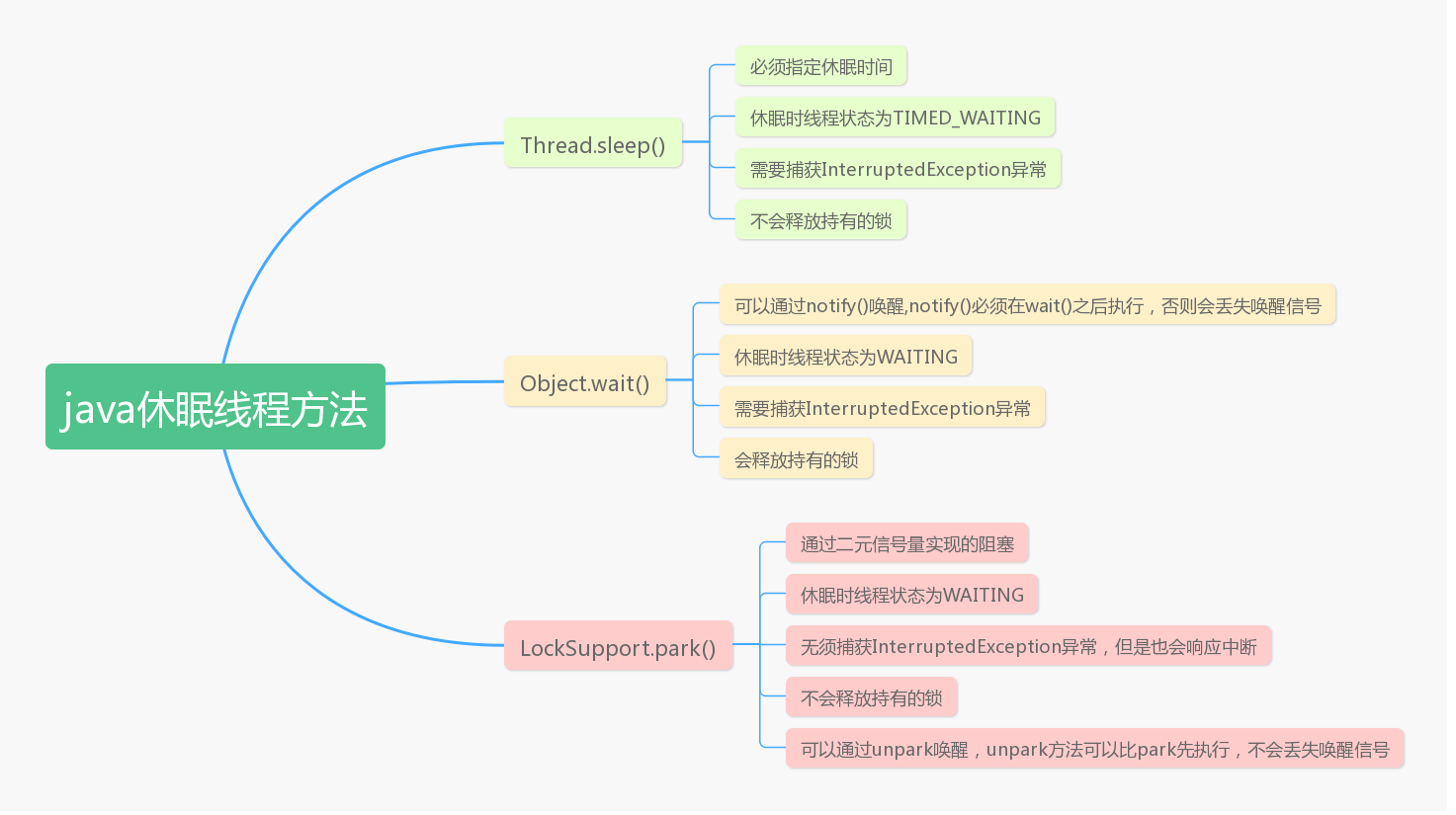

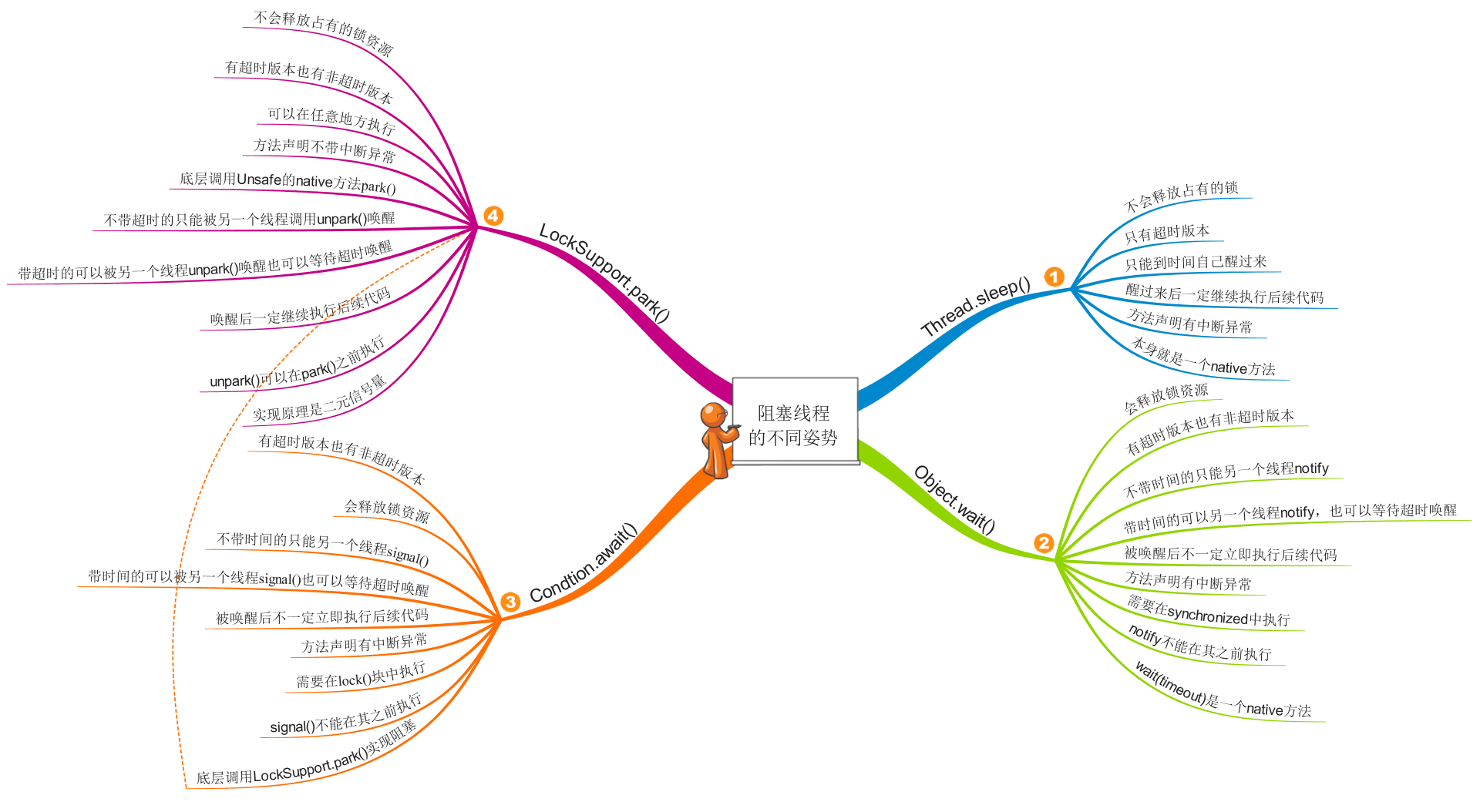
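Deadlocks can also be detected from inside the JVM. The sketch below is an addition to the jps / jstack steps, not a replacement for them. It deliberately deadlocks two daemon threads and then asks the standard `ThreadMXBean` for deadlocked threads. The class name `DeadlockDetectionSketch`, the thread names, and the polling interval are assumptions for the example.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.ThreadMXBean;
import java.util.concurrent.CountDownLatch;

class DeadlockDetectionSketch {

    // Creates a deadlock between two daemon threads and returns how many
    // deadlocked threads the JVM reports (0 if none were found in time).
    static int detect() {
        Object lockA = new Object();
        Object lockB = new Object();
        CountDownLatch bothHoldOne = new CountDownLatch(2);

        Thread t1 = new Thread(() -> grab(lockA, lockB, bothHoldOne), "T1");
        Thread t2 = new Thread(() -> grab(lockB, lockA, bothHoldOne), "T2");
        t1.setDaemon(true); // daemon threads do not keep the JVM alive
        t2.setDaemon(true);
        t1.start();
        t2.start();

        ThreadMXBean threads = ManagementFactory.getThreadMXBean();
        for (int i = 0; i < 100; i++) {
            long[] ids = threads.findDeadlockedThreads(); // null when there is no deadlock
            if (ids != null) {
                return ids.length;
            }
            try {
                Thread.sleep(50);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                return 0;
            }
        }
        return 0;
    }

    // Takes the first lock, waits until both threads hold one lock, then requests the second.
    private static void grab(Object first, Object second, CountDownLatch latch) {
        synchronized (first) {
            latch.countDown();
            try {
                latch.await();
            } catch (InterruptedException e) {
                return;
            }
            synchronized (second) {
                // never reached: the other thread holds this lock
            }
        }
    }

    public static void main(String[] args) {
        System.out.println("deadlocked threads: " + detect());
    }
}
```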

16, Three common blocking methods

https://blog.csdn.net/kangkanglou/article/details/82221301

https://www.cnblogs.com/suixing123/p/13861657.html

https://blog.csdn.net/u013332124/article/details/84647915

https://blog.csdn.net/asdasdasd123123123/article/details/107814280

Three thread blocking methods

LockSupport

LockSupport can block the current thread and wake up a specific thread

- void park(): blocks the current thread. The thread returns from park() when unpark is called for it or when it is interrupted

- void park(Object blocker): same as park(), with an extra Object parameter that records the object the thread is blocked on, which helps with troubleshooting;

- void parkNanos(long nanos): blocks the current thread for at most nanos nanoseconds, adding a timeout to park();

- void parkNanos(Object blocker, long nanos): same as parkNanos(long nanos), with an extra Object parameter that records the object the thread is blocked on, which helps with troubleshooting;

- void parkUntil(long deadline): blocks the current thread until the given deadline;

- void parkUntil(Object blocker, long deadline): same as parkUntil(long deadline), with an extra Object parameter that records the object the thread is blocked on, which helps with troubleshooting;

- void unpark(Thread thread): wakes up the specified thread if it is blocked

| | Object.wait() | Thread.sleep() | LockSupport.park() |
|---|---|---|---|
| Synchronization | Can only be called in a synchronized context, otherwise IllegalMonitorStateException is thrown. | Does not need to be called in a synchronized method or block. | Does not need to be called in a synchronized method or block. |
| Target | Defined in the Object class and acts on the object itself | Defined in java.lang.Thread and acts on the current thread | Acts on the current thread |
| Releases the lock | Yes | No | No |
| Wake-up condition | Another thread calls notify() or notifyAll() on the object | The timeout expires or interrupt() is called | unpark() is called or the thread is interrupted |
| Method kind | Instance method | Static method | Static method |

public static void main(String[] args) throws InterruptedException {

Object lock = new Object();

synchronized (lock) {

try {

System.out.println("Thread running");

lock.wait(5000);

System.out.println("The thread continues to run");

} catch (Exception ex) {

ex.printStackTrace();

}

}

Thread thread1 = new Thread(new Runnable() {

@Override

public void run() {

System.out.println("Thread 1 running");

// Block until another thread calls unpark for this thread

LockSupport.park();

// Reached after main calls unpark(thread1)

System.out.println("Thread 1 continues running");

}

});

Thread thread2 = new Thread(new Runnable() {

@Override

public void run() {

System.out.println("Thread 2 running");

// Block for at most 5 seconds, or until unpark is called

LockSupport.parkNanos(5_000_000_000L);

// Reached after the 5-second timeout expires

System.out.println("Thread 2 continues running");

}

});

thread1.start();

thread2.start();

Thread.sleep(2000);

LockSupport.unpark(thread1);

}
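One difference from wait/notify that the table does not show: a notify() issued before wait() is lost, whereas unpark() issued before park() is remembered as a single permit. The sketch below illustrates this. The class name `ParkPermitSketch` and the 2-second timeout are assumptions for the example.

```java
import java.util.concurrent.locks.LockSupport;

class ParkPermitSketch {

    // Calls unpark() first and park() afterwards; returns how long park blocked, in ms.
    static long parkAfterUnpark() {
        Thread self = Thread.currentThread();

        // Hand out the permit in advance. Repeated calls do not accumulate permits.
        LockSupport.unpark(self);
        LockSupport.unpark(self);

        long start = System.nanoTime();
        // The permit is already available, so this returns without waiting for the timeout.
        LockSupport.parkNanos(2_000_000_000L);
        return (System.nanoTime() - start) / 1_000_000;
    }

    public static void main(String[] args) {
        System.out.println("blocked for about " + parkAfterUnpark() + " ms");
    }
}
```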

17, Three characteristics of high concurrency

Atomicity: one or more operations are either all executed without being interrupted, or not executed at all.

Visibility: when one thread changes the value of a shared variable, other threads can see the change right away. When another thread reads the variable, it reads the latest value from main memory rather than a stale copy from its cache.

Ordering: when the virtual machine compiles and executes code, it may reorder instructions whose order does not affect the final result, so the code is not necessarily executed in the order in which it was written.

18, Five thread states

New

A thread is in the new state when it has been created with the new operator but the program has not yet started running the code in the thread.

Ready

A newly created thread does not start running automatically. To execute the thread, you must call the thread's start() method. When the thread object calls the start() method, the thread is started. The start() method creates the system resources for the thread to run, and schedules the thread to run the run() method. When the start() method returns, the thread is ready.

Threads in the ready state do not necessarily run the run() method immediately. They must compete with other threads for CPU time, and a thread can run only after obtaining CPU time. On a single-CPU computer it is impossible to run multiple threads at the same time, so only one thread is running at any moment and several threads may be in the ready state at once. Threads in the ready state are scheduled by the thread scheduler of the Java runtime system.

Running

When the thread obtains CPU time, it enters the running state and really starts to execute the run() method.

Blocked

When a thread is running, it may enter a blocking state for various reasons:

① The thread enters the sleep state by calling the sleep method;

② A thread invokes an operation that is blocked on I/O, that is, the operation will not return to its caller until the input / output operation is completed;

③ A thread attempts to get a lock that is being held by another thread;

④ The thread is waiting for a trigger condition;

The so-called blocking state is that the running thread does not finish running and temporarily gives up the CPU. At this time, other threads in the ready state can obtain CPU time and enter the running state.

The blocked state is the most interesting of these states and deserves further discussion. A thread may be blocked for the following five reasons:

(1) sleep (number of milliseconds) was called to put the thread to sleep. The thread will not run within the specified time.

(2) The thread was suspended with suspend(). It will not return to the runnable state until it receives a resume() message (both methods are deprecated).

(3) The thread was paused with wait(). It will not become runnable until it receives a notify() or notifyAll() message (this looks very similar to reason 2, but wait() releases the object's lock while suspend() does not).

(4) The thread is waiting for some I/O (input / output) operation to complete.

(5) The thread tried to call a synchronized method of another object, but that object is locked and temporarily unavailable.

Dead

There are two reasons for thread death:

① The run method exited normally and died naturally;

② An uncaught exception terminates the run method and causes the thread to die suddenly;

To determine whether a thread is alive (that is, either runnable or blocked), use the isAlive method. It returns true if the thread is runnable or blocked, and false if the thread is still new and not yet runnable, or if the thread has died.

19, Others
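The states can be observed with `Thread.getState()` and `isAlive()`. Note that the JDK's `Thread.State` enum names them differently from the five-state model above: it has NEW, RUNNABLE (covering both ready and running), BLOCKED, WAITING, TIMED_WAITING, and TERMINATED. The sketch below is illustrative, and the class name `ThreadStateSketch` and the sleep durations are arbitrary.

```java
class ThreadStateSketch {

    // Records a thread's state and isAlive() before start, while sleeping, and after it ends.
    static String observe() {
        Thread worker = new Thread(() -> {
            try {
                Thread.sleep(300);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });

        StringBuilder seen = new StringBuilder();
        // Before start(): new, not alive.
        seen.append(worker.getState()).append(' ').append(worker.isAlive());

        worker.start();
        try {
            Thread.sleep(100);
            // While the worker sleeps it is typically TIMED_WAITING, and alive.
            seen.append(" -> ").append(worker.getState()).append(' ').append(worker.isAlive());
            worker.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }

        // After run() has returned: terminated, not alive.
        seen.append(" -> ").append(worker.getState()).append(' ').append(worker.isAlive());
        return seen.toString();
    }

    public static void main(String[] args) {
        System.out.println(observe());
    }
}
```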

1. What is thread safety

When multiple threads access the same shared variable (the same resource) at the same time and at least one of them writes to it, the code is thread safe if the result is always what we expect. Otherwise it is not thread safe. If all threads only read, thread safety is not an issue.

2. In actual development, use TimeUnit instead of calling Thread.sleep directly when a thread needs to pause, for example TimeUnit.SECONDS.sleep(1). It makes the time unit explicit and, like Thread.sleep, pauses only the current thread, not the whole program

3. There are four ways to create threads [6]

- By inheriting the Thread class, multiple threads cannot share the instance variables of the Thread class.

- The Runnable interface is implemented, which is more suitable for resource sharing than inheriting the Thread class, avoiding the limitations of inheritance.

- Create a thread using Callable and Future. The method can have a return value and throw an exception.

- Create a thread pool implementation. The thread pool provides a thread queue in which all threads in waiting state are saved to avoid additional overhead of creation and destruction and improve the response speed.
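The four approaches can be put side by side in one small sketch. The class name `ThreadCreationSketch`, the helper `CountingThread`, and the shared counter are made up for illustration. Each approach increments the counter once.

```java
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.FutureTask;
import java.util.concurrent.atomic.AtomicInteger;

class ThreadCreationSketch {

    // Way 1: extend Thread and override run().
    static class CountingThread extends Thread {
        private final AtomicInteger counter;

        CountingThread(AtomicInteger counter) {
            this.counter = counter;
        }

        @Override
        public void run() {
            counter.incrementAndGet();
        }
    }

    // Runs one task with each of the four approaches and returns how many tasks ran.
    static int runAllFour() {
        AtomicInteger counter = new AtomicInteger();
        try {
            Thread t1 = new CountingThread(counter);
            t1.start();

            // Way 2: implement Runnable and hand it to a Thread.
            Runnable runnable = () -> counter.incrementAndGet();
            Thread t2 = new Thread(runnable);
            t2.start();

            // Way 3: Callable wrapped in a FutureTask, which can return a value.
            Callable<Integer> callable = () -> counter.incrementAndGet();
            FutureTask<Integer> task = new FutureTask<>(callable);
            new Thread(task).start();

            // Way 4: submit the work to a thread pool instead of creating a thread.
            ExecutorService pool = Executors.newFixedThreadPool(2);
            try {
                pool.submit(runnable).get();
            } finally {
                pool.shutdown();
            }

            t1.join();
            t2.join();
            task.get(); // waits for way 3 to finish
        } catch (Exception e) {
            throw new IllegalStateException(e);
        }
        return counter.get();
    }

    public static void main(String[] args) {
        System.out.println(runAllFour()); // 4
    }
}
```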

4. Difference between process and thread

| Difference | Process | Thread |
|---|---|---|
| Fundamental difference | The smallest unit of resource allocation | The smallest unit of CPU scheduling and execution |
| Overhead | Each process has its own code and data space (process context), so switching between processes is expensive. | Threads can be regarded as lightweight processes. Threads of the same process share code and data space, and each thread has its own stack and program counter (PC), so switching between threads is cheap. |
| Environment | Multiple tasks (programs) can run at the same time in the operating system | Multiple sequential flows execute at the same time within one application |
| Memory allocation | At run time the system allocates a separate memory area to each process | Apart from CPU time, the system does not allocate memory to threads (a thread uses the resources of the process it belongs to), and the threads of a process can only share those resources |
| Inclusion relation | A process without extra threads can be regarded as single-threaded. If a process has multiple threads, execution does not follow one line but several lines (threads) working together. | Threads are part of a process, which is why they are sometimes called lightweight processes. |

5. What is optimistic lock and what is pessimistic lock?

| Difference | Optimistic lock | Pessimistic lock |
|---|---|---|
| Concept | An optimistic lock is optimistic when operating on data: it assumes that nobody else will modify the data at the same time, so it does not lock. It only checks at update time whether someone else has modified the data: if so, it gives up the operation, otherwise it performs it. | A pessimistic lock is pessimistic when operating on data: it assumes that others will modify the data at the same time, so it locks the data before operating on it and releases the lock only when the operation is complete. Nobody else can modify the data while it is locked. |
| Implementation | CAS mechanism and version number mechanism | Locking, applied either to code blocks (such as the synchronized keyword in Java) or to data (such as the exclusive lock in MySQL). |
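Both optimistic mechanisms from the table can be sketched with JDK atomics. This is an illustrative example: the class name `OptimisticLockSketch` and the values are made up, and using `AtomicStampedReference` with its stamp as the version number is one possible way to model the version mechanism, not the only one.

```java
import java.util.concurrent.atomic.AtomicInteger;
import java.util.concurrent.atomic.AtomicStampedReference;

class OptimisticLockSketch {

    // CAS mechanism: read, compute, and write only if nobody changed the value meanwhile.
    static int casIncrement(AtomicInteger value) {
        while (true) {
            int current = value.get();
            int next = current + 1;
            if (value.compareAndSet(current, next)) {
                return next; // our update won
            }
            // Another thread updated the value first: retry with the fresh value.
        }
    }

    // Version number mechanism: an update based on an outdated version is rejected.
    static boolean staleVersionIsRejected() {
        AtomicStampedReference<String> data = new AtomicStampedReference<>("v1", 0);

        int versionWeRead = data.getStamp(); // we read the data at version 0

        // Meanwhile another writer updates the data and bumps the version to 1.
        data.compareAndSet("v1", "v2", versionWeRead, versionWeRead + 1);

        // Our update still expects version 0, so it fails and leaves "v2" in place.
        boolean updated = data.compareAndSet("v2", "v3", versionWeRead, versionWeRead + 1);
        return !updated && "v2".equals(data.getReference());
    }

    public static void main(String[] args) {
        System.out.println(casIncrement(new AtomicInteger(41))); // 42
        System.out.println(staleVersionIsRejected());            // true
    }
}
```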

References

1. https://www.cnblogs.com/null-qige/p/9481900.html

2. https://blog.csdn.net/kangkanglou/article/details/82221301

3. https://www.cnblogs.com/suixing123/p/13861657.html

4. https://blog.csdn.net/u013332124/article/details/84647915

5. https://blog.csdn.net/asdasdasd123123123/article/details/107814280

6. https://www.cnblogs.com/wxw7blog/p/7727510.html