Foreword

Share a case of using some crawler technology to simply crawl articles from media web pages as needed and save them to the local designated folder for reference only. In the learning process, do not visit the website frequently and cause web page paralysis. Do what you can!!!

Crawling demand

Crawl address: Construction Archives - construction industry wide industry chain content co construction platform

Crawling involves technologies: Requests, Xpath, and panda

Climbing purpose: only used to practice and consolidate reptile technology

Climb target:

- Save the title, author, publishing time and article link of each article in the home page of the web page to the excel file in the specified location. The excel name is data

- Save the text of each article to a document in the specified location and name it with the article title

- Save the picture of each article to a folder in the specified location, and name it with the article title + picture serial number

Crawling idea

Initiate a homepage page request to obtain the response content

Analyze the homepage data to obtain the title, author, release time and article link information of the article

Summarize the home page information of the website and save it to excel

Initiate an article web page request to obtain the response content

Analyze the article web page data and obtain the text, picture and link information of the article

Save the article content to the specified document

Initiate a picture web page request to obtain the response content

Save pictures to the specified folder

Code explanation

The following explains the implementation method and precautions of each step in the order of crawler ideas

Step 1: import related libraries

import requests # Used to initiate a web page request and return the response content from lxml import etree # Parse data for response content import pandas as pd # Generate two-dimensional data and save it to excel import os # file save import time # Slow down the reptile

Install without related libraries

Press win + r to call up the CMD window and enter the following code

pip install Missing library name

Step 2: set anti reptile measures

"""

Record crawler time

Set basic information for accessing web pages

"""

start_time = time.time()

start_url = "https://www.jzda001.com"

header = {'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/96.0.4664.45 Safari/537.36'}

hearder: header. Copy the header of the browser by yourself. If you don't find it, you can directly copy it for me

Step 3: visit the home page and parse the response content

# Main page access + parsing information

response = requests.get(start_url, headers=header) # Initiate request

html_str = response.content # Transcoding response content

tree = etree.HTML(html_str) # Parse response content

# Create a dictionary for loading information

dict = {}

# Get article link

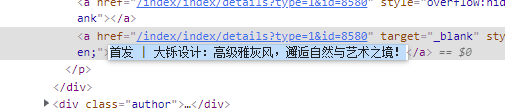

dict['href_list'] = tree.xpath("//ul[@class='art-list']//a[1]/@href")[1:]

# Get article title

dict['title_list'] = tree.xpath("//div[contains(@class,'title')]/p[contains(@class,'line')]//text()")[:-1]

# Get article author

dict['author_list'] = tree.xpath("//div[@class='author']/p[@class='name']//text()[1]")[:-2]

# Get article publishing time

dict['time_list'] = tree.xpath("//div[@class='author']/p[@class='name']//text()[2]")[:-1]

# Pause for 3 seconds

time.sleep(3)Adjust the time according to the network conditions Sleep() is set to prevent climbing too fast

During the parsing process, it is found that there is unnecessary information in the header or tail of the obtained content, so the list slicing method is used to eliminate the elements

For xpath parsing method, refer to another article: [Xpath] crawler parsing summary based on lxml Library

Step 4: data cleaning

# Main page data cleaning

author_clear = []

for i in dict['author_list']:

if i != '.':

author_clear.append(i.replace('\n', '').replace(' ', ''))

dict['author_list'] = author_clear

time_clear = []

for j in dict['time_list']:

time_clear.append(j.replace('\n', '').replace(' ', '').replace(':', ''))

dict['time_list'] = time_clear

title_clear = []

for n in dict['title_list']:

title_clear.append(n.replace(': ', ' ').replace('!', ' ').replace('|', ' ').replace('/', ' ').replace(' ', ' '))

dict['title_list'] = title_clear

time.sleep(3)Viewing the web page structure, you can see that there are some system sensitive characters in the title, author and publishing time (affecting the subsequent inability to create folders)

Therefore, the parsed data can only be used after data cleaning

Step 5: create article links

# Generate sub page address

url_list = []

for j in dict['href_list']:

url_list.append(start_url + j)

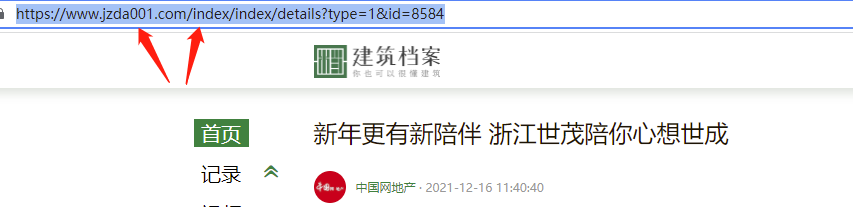

dict['url_list'] = url_listBy comparing the @ href attribute link of the web page structure with the real link of the article, it can be seen that the parsed url needs to be spliced into the home page of the web address

Step 6: visit the article link and parse the response content

# Sub page parsing

dict_url = {} # Parsing information for loading articles

for n in range(len(url_list)): # Loop through a single sub page

response_url = requests.get(url_list[n], headers=header, timeout=5)

response_url.encoding = 'utf-8'

tree_url = etree.HTML(response_url.text)

# Get article text information

text_n = tree_url.xpath("//div[@class='content']/p/text()")

# Splicing text content

dict_url['text'] = [txt + txt for txt in text_n]

# Get links to all pictures in the article

dict_url['img_url'] = tree_url.xpath("//div[@class='pgc-img']/img/@src")

# Gets the title of the article

title_n = dict['title_list'][n]

# Get the author information of this article

author_n = dict['author_list'][n]

# Get the publishing time of the article

time_n = dict['time_list'][n]

Splicing text content is because the obtained text information is separated paragraph by paragraph and needs to be spliced together, which is the complete article text information

# Gets the title of the article title_n = dict['title_list'][n] # Get the author information of this article author_n = dict['author_list'][n] # Get the publishing time of the article time_n = dict['time_list'][n]

Because the information on the home page is consistent with the access order of the article, the number of cycles = the position of the information of the article in the dictionary

Step 7: create a multi-layer folder

# New multi tier folder

file_name = "Building archives crawler folder" # Folder name

path = r'd:/' + file_name

url_path_file = path + '/' + title_n + '/' # Splice folder address

if not os.path.exists(url_path_file):

os.makedirs(url_path_file)Because the created folder is: ". / crawler folder name / article title name /", you need to use OS Make dirs to create multi-layer folders

Note that the file name ends with "/"

Step 8: save sub page pictures

When the folder is set up, start accessing the picture links of each article and save them

# Save sub page picture

for index, item in enumerate(dict_url['img_url']):

img_rep = requests.get(item, headers=header, timeout=5) # Set timeout

index += 1 # Display quantity + 1 for each picture saved

img_name = title_n + ' picture' + '{}'.format(index) # Picture name

img_path = url_path_file + img_name + '.png' # Picture address

with open(img_path, 'wb') as f:

f.write(img_rep.content) # Write in binary

f.close()

print('The first{}Pictures saved successfully at:'.format(index), img_path)

time.sleep(2)Step 9: save sub page text

You can save the text first and then the picture. You need to pay attention to the writing methods of the two

# Save page text

txt_name = str(title_n + ' ' + author_n + ' ' + time_n) # Text name

txt_path = url_path_file + '/' + txt_name + '.txt' # Text address

with open(txt_path, 'w', encoding='utf-8') as f:

f.write(str(dict_url['text']))

f.close()

print("The text of this page was saved successfully,The text address is:", txt_path)

print("Crawling succeeded, crawling{}Pages!!!".format(n + 2))

print('\n')

time.sleep(10)Step 10: summary of article information

Save all article titles, authors, publishing time and article links on the front page of the website to an excel folder

# Master page information saving

data = pd.DataFrame({'title': dict['title_list'],

'author': dict['author_list'],

'time': dict['time_list'],

'url': dict['url_list']})

data.to_excel('{}./data.xls'.format(path),index=False)

print('Saved successfully,excel File address:', '{}/data.xls'.format(path))Complete code (Process Oriented Version)

# !/usr/bin/python3.9

# -*- coding:utf-8 -*-

# @author:inganxu

# CSDN:inganxu.blog.csdn.net

# @Date: December 18, 2021

import requests # Used to initiate a web page request and return the response content

from lxml import etree # Parse data for response content

import pandas as pd # Generate two-dimensional data and save it to excel

import os # file save

import time # Slow down the reptile

"""

Record crawler time

Set basic information for accessing web pages

"""

start_time = time.time()

start_url = "https://www.jzda001.com"

header = {'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/96.0.4664.45 Safari/537.36'}

# Main page access + parsing information

response = requests.get(start_url, headers=header) # Initiate request

html_str = response.content # Transcoding response content

tree = etree.HTML(html_str) # Parse response content

# Create a dictionary for loading information

dict = {}

# Get article link

dict['href_list'] = tree.xpath("//ul[@class='art-list']//a[1]/@href")[1:]

# Get article title

dict['title_list'] = tree.xpath("//div[contains(@class,'title')]/p[contains(@class,'line')]//text()")[:-1]

# Get article author

dict['author_list'] = tree.xpath("//div[@class='author']/p[@class='name']//text()[1]")[:-2]

# Get article publishing time

dict['time_list'] = tree.xpath("//div[@class='author']/p[@class='name']//text()[2]")[:-1]

# Pause for 3 seconds

time.sleep(3)

# Main page data cleaning

author_clear = []

for i in dict['author_list']:

if i != '.':

author_clear.append(i.replace('\n', '').replace(' ', ''))

dict['author_list'] = author_clear

time_clear = []

for j in dict['time_list']:

time_clear.append(j.replace('\n', '').replace(' ', '').replace(':', ''))

dict['time_list'] = time_clear

title_clear = []

for n in dict['title_list']:

title_clear.append(n.replace(': ', ' ').replace('!', ' ').replace('|', ' ').replace('/', ' ').replace(' ', ' '))

dict['title_list'] = title_clear

time.sleep(3)

# Generate sub page address

url_list = []

for j in dict['href_list']:

url_list.append(start_url + j)

dict['url_list'] = url_list

# Sub page parsing

dict_url = {} # Parsing information for loading articles

for n in range(len(url_list)): # Loop through a single sub page

response_url = requests.get(url_list[n], headers=header, timeout=5)

response_url.encoding = 'utf-8'

tree_url = etree.HTML(response_url.text)

# Get article text information

text_n = tree_url.xpath("//div[@class='content']/p/text()")

# Splicing text content

dict_url['text'] = [txt + txt for txt in text_n]

# Get links to all pictures in the article

dict_url['img_url'] = tree_url.xpath("//div[@class='pgc-img']/img/@src")

# Gets the title of the article

title_n = dict['title_list'][n]

# Get the author information of this article

author_n = dict['author_list'][n]

# Get the publishing time of the article

time_n = dict['time_list'][n]

# New multi tier folder

file_name = "Building archives crawler folder" # Folder name

path = r'd:/' + file_name

url_path_file = path + '/' + title_n + '/' # Splice folder address

if not os.path.exists(url_path_file):

os.makedirs(url_path_file)

# Save sub page picture

for index, item in enumerate(dict_url['img_url']):

img_rep = requests.get(item, headers=header, timeout=5) # Set timeout

index += 1 # Display quantity + 1 for each picture saved

img_name = title_n + ' picture' + '{}'.format(index) # Picture name

img_path = url_path_file + img_name + '.png' # Picture address

with open(img_path, 'wb') as f:

f.write(img_rep.content) # Write in binary

f.close()

print('The first{}Pictures saved successfully at:'.format(index), img_path)

time.sleep(2)

# Save page text

txt_name = str(title_n + ' ' + author_n + ' ' + time_n) # Text name

txt_path = url_path_file + '/' + txt_name + '.txt' # Text address

with open(txt_path, 'w', encoding='utf-8') as f:

f.write(str(dict_url['text']))

f.close()

print("The text of this page was saved successfully,The text address is:", txt_path)

print("Crawling succeeded, crawling{}Pages!!!".format(n + 2))

print('\n')

time.sleep(10)

# Master page information saving

data = pd.DataFrame({'title': dict['title_list'],

'author': dict['author_list'],

'time': dict['time_list'],

'url': dict['url_list']})

data.to_excel('{}./data.xls'.format(path),index=False)

print('Saved successfully,excel File address:', '{}/data.xls'.format(path))

print('Crawling completed!!!!')

end_time = time.time()

print('Time for crawling:', end_time - start_time)

Complete code (Object Oriented Version)

# !/usr/bin/python3.9

# -*- coding:utf-8 -*-

# @author:inganxu

# CSDN:inganxu.blog.csdn.net

# @Date: December 18, 2021

import requests # Used to initiate a web page request and return the response content

from lxml import etree # Parse data for response content

import pandas as pd # Generate two-dimensional data and save it to excel

import os # file save

import time # Slow down the reptile

class JZDA:

def __init__(self):

self.start_url = "https://www.jzda001.com"

self.header = {'user-agent':

'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/96.0.4664.45 Safari/537.36'}

# Send request and return response

def parse_url(self, url):

response = requests.get(url, headers=self.header)

return response.content

# Parsing master page data

def get_url_content(self, html_str):

dict = {}

tree = etree.HTML(html_str)

dict['href_list'] = tree.xpath("//ul[@class='art-list']//a[1]/@href")[1:]

dict['title_list'] = tree.xpath("//div[contains(@class,'title')]/p[contains(@class,'line')]//text()")[:-1]

dict['author_list'] = tree.xpath("//div[@class='author']/p[@class='name']//text()[1]")[:-2]

dict['time_list'] = tree.xpath("//div[@class='author']/p[@class='name']//text()[2]")[:-1]

dict['url_list'], dict['title_list'], dict['author_list'], dict['time_list'] = self.data_clear(dict)

return dict

# Data cleaning

def data_clear(self, dict):

# Generate sub pages

url_list = []

for href in dict['href_list']:

url_list.append(self.start_url + href)

# Data cleaning

author_clear = []

for i in dict['author_list']:

if i != '.':

author_clear.append(i.replace('\n', '').replace(' ', ''))

author_list = author_clear

time_clear = []

for j in dict['time_list']:

time_clear.append(j.replace('\n', '').replace(' ', '').replace(':', ''))

time_list = time_clear

title_clear = []

for n in dict['title_list']:

title_clear.append(

n.replace(': ', ' ').replace('!', ' ').replace('|', ' ').replace('/', ' ').replace(' ', ' '))

title_list = title_clear

return url_list, title_list, author_list, time_list

# Get the title, author and time of the sub page

def parse_content_dict(self, dict, index):

dict_index = {}

dict_index['title'] = dict['title_list'][index]

dict_index['author'] = dict['author_list'][index]

dict_index['time'] = dict['time_list'][index]

return dict_index

# Get text and picture links for sub pages

def get_html_url(self, url):

dict_url = {}

# Send request

response_url = requests.get(url, headers=self.header, timeout=3)

response_url.encoding = 'utf-8'

# Parse sub page

tree_url = etree.HTML(response_url.text)

# Get text

text = tree_url.xpath("//div[@class='content']/p/text()")

# Get picture

dict_url['img_url'] = tree_url.xpath("//div[@class='pgc-img']/img/@src")

dict_url['text'] = [txt + txt for txt in text]

return dict_url

# Save sub page text

def save_url_text(self, path, dict_index, dict_url, index):

txt_name = str(dict_index['title'] + ' ' + dict_index['author'] + ' ' + dict_index['time'])

txt_path = path + '/' + txt_name + '.txt'

with open(txt_path, 'w', encoding='utf-8') as f:

f.write(str(dict_url['text']))

f.close()

print("The text of this page was saved successfully,The text address is:", txt_path)

print("Crawling succeeded, crawling{}Pages!!!".format(index + 2))

print('\n')

time.sleep(10)

# Save sub page picture

def save_url_img(self, img_index, img_url, dict_index, path):

# Request access

img_rep = requests.get(img_url, headers=self.header, timeout=5) # Convert pictures to binary

img_index += 1

img_name = dict_index['title'] + ' picture' + '{}'.format(img_index)

img_path = path + img_name + '.png'

with open(img_path, 'wb') as f:

f.write(img_rep.content)

f.close()

print('The first{}Pictures saved successfully at:'.format(img_index), img_path)

time.sleep(2)

# Save master page information

def save_content_list(self, dict, file_name):

data = pd.DataFrame({'title': dict['title_list'],

'author': dict['author_list'],

'time': dict['time_list'],

'url': dict['url_list']})

# Specify where to save text

data.to_excel('{}./data.xls'.format(r'd:/' + file_name), index=False)

print('Saved successfully,excel File address:', '{}/data.xls'.format(r'd:/' + file_name))

def run(self):

start_time = time.time()

next_url = self.start_url

# Send request and get response

html_str = self.parse_url(next_url)

# Analyze the main page data and obtain the title, author, time and sub page links

dict = self.get_url_content(html_str)

# Create crawler information save folder

file_name = "Building archives crawler folder"

# Traverse sub page data

for index, url in enumerate(dict['url_list']):

# Get the text and picture links of the sub page and generate a dictionary

dict_url = self.get_html_url(url)

# Get the title, author and time of the sub page, and generate a dictionary

dict_index = self.parse_content_dict(dict, index)

# Create sub page information storage address, naming rule: folder + title

path = r'd:/' + file_name + '/' + dict_index['title'] + '/'

if not os.path.exists(path):

os.makedirs(path)

# Name the sub page text in the folder with title author time

self.save_url_text(path, dict_index, dict_url, index)

# Traverse the picture links of sub pages

for img_index, img_url in enumerate(dict_url['img_url']):

# Name the sub page picture in the folder with the title picture number

self.save_url_img(img_index, img_url, dict_index, path)

# Save title, author, time, sub page link to csv

self.save_content_list(dict, file_name)

print("Crawling completed")

end_time = time.time()

print('Time for crawling:', end_time - start_time)

if __name__ == '__main__':

jzda = JZDA()

jzda.run()

epilogue

In the future, anti creep measures (ip pool, header pool, response access) and data processing (data analysis, data visualization) will be extended for the case

Reference articles

[requests] web interface access module library _inganxucsdn blog