What is multi cluster?

If you have not created a cluster yet, install k8s and build one first. One of the posts in my blog's "kubernetes" category covers k8s installation details [create a cluster, join a cluster, kick out a cluster, reset a cluster...]. Have a look if you want to know more.

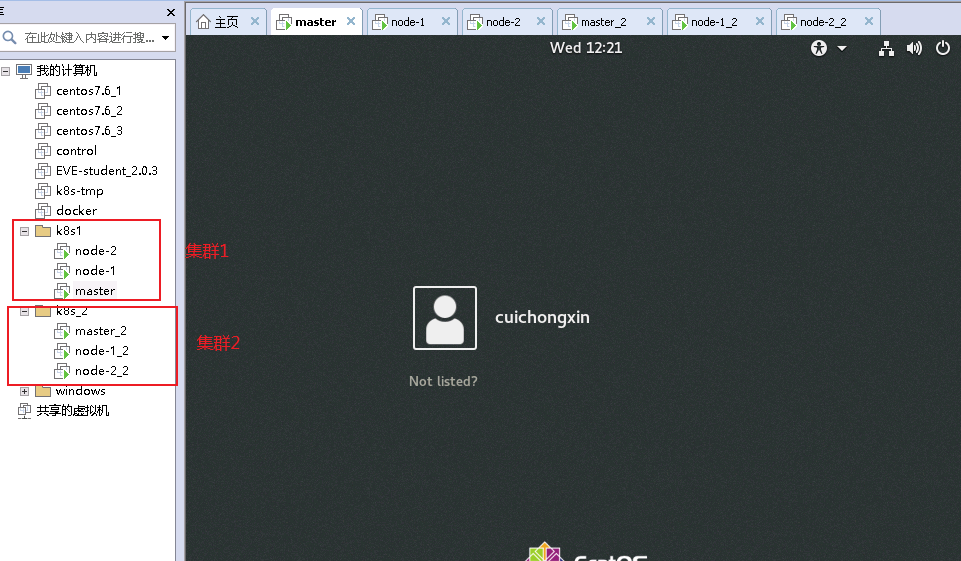

As shown in the figure, a cluster consists of a master node and worker nodes.

I created two clusters and started both. They have nothing to do with each other.

Each cluster provides services independently, and the two are unrelated. Normally, we manage multiple clusters by logging in to each cluster's master node over ssh.

With a multi-cluster configuration, you can manage the other cluster from one master node without logging in over ssh.

The two clusters are configured identically, apart from their IP addresses. The two master nodes are described below.

Their host names and IP addresses are as follows

#Cluster 1

[root@master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master Ready control-plane,master 5d2h v1.21.0

node1 Ready <none> 5d2h v1.21.0

node2 Ready <none> 5d1h v1.21.0

[root@master ~]#

[root@master ~]# ip a | grep 59

inet 192.168.59.142/24 brd 192.168.59.255 scope global noprefixroute dynamic ens33

[root@master ~]#

[root@master ~]# hostname

master

[root@master ~]#

#Cluster 2

[root@master2 ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

master Ready control-plane,master 5d2h v1.21.0

node1 Ready <none> 5d2h v1.21.0

node2 Ready <none> 5d1h v1.21.0

[root@master2 ~]#

[root@master2 ~]# ip a | grep 59

inet 192.168.59.151/24 brd 192.168.59.255 scope global noprefixroute ens33

[root@master2 ~]#

[root@master2 ~]# hostname

master2

[root@master2 ~]#

kubeconfig file content preparation [master node operation]

- The default kubeconfig file is ~/.kube/config

- Command to view the kubeconfig file: kubectl config view

[root@master .kube]# kubectl config view

apiVersion: v1
clusters:
- cluster:
    certificate-authority-data: DATA+OMITTED
    server: https://192.168.59.142:6443
  name: kubernetes
contexts:
- context:
    cluster: kubernetes
    namespace: default
    user: kubernetes-admin
  name: kubernetes-admin@kubernetes
current-context: kubernetes-admin@kubernetes
kind: Config
preferences: {}
users:
- name: kubernetes-admin
  user:
    client-certificate-data: REDACTED
    client-key-data: REDACTED

[root@master .kube]#

Single cluster configuration file modification

- Use this when you only want to modify the information of the current cluster.

- The configuration file is ~/.kube/config. See Method 1 below for an explanation of its contents. The procedure is the same as in Method 1, except that you keep only one cluster's information.

Method 1: manual editing

File backup

- This is the most basic method: edit the configuration file directly. Be sure to back up the file first [this is the key point].

[root@master ~]# cd .kube/

[root@master .kube]# cp config config.bak

[root@master .kube]#
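A backup with a timestamp in its name is a little safer than a fixed config.bak, because a second backup does not overwrite the first. The following is a minimal sketch of that idea. The function name backup_kubeconfig and the .bak.<timestamp> suffix are my own choices for illustration, not something kubectl provides.

```shell
# Sketch: timestamped kubeconfig backup (helper name and suffix are illustrative).
backup_kubeconfig() {
    local src dest
    src="${1:-$HOME/.kube/config}"
    dest="${src}.bak.$(date +%Y%m%d%H%M%S)"
    # -p keeps the file mode and timestamps of the original on the copy
    cp -p "$src" "$dest" || return 1
    # Print the backup path so the caller can find it again
    printf '%s\n' "$dest"
}
```

Called with no argument, backup_kubeconfig copies ~/.kube/config and prints the path of the copy.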

Code explanation of config file [detailed explanation of context]

- After the backup, edit the ~/.kube/config file directly.

To make editing easier, I recommend first deleting the values of the three keys (certificate-authority-data, client-certificate-data and client-key-data), which leaves the much cleaner file shown below [if they are not deleted, the long key strings get in the way].

To make it easier to understand, I have added a comment after each line of the file below. Read through them carefully and the purpose of each line becomes clear [this is the original file content, unmodified apart from the comments; the leading numbers are line numbers used in the explanation and are not part of the file]

 1 apiVersion: v1
 2 clusters: # The clusters you can connect to [lines 3-6 form one group]. To add a cluster, copy lines 3-6, paste them after line 6, and modify the relevant information
 3 - cluster: # Start of a cluster entry [lines 3-6 form one group]
 4     certificate-authority-data: # The cluster's CA certificate (base64). How to view it is described later
 5     server: https://192.168.59.142:6443 # IP address and port of this cluster's master node
 6   name: kubernetes # Cluster name, can be customized
 7 contexts: # A context binds a cluster from [clusters] above to a user from [users] below [they are independent until bound]
 8 - context: # Start of a context entry [lines 8-12 form one group]. Add one context per cluster, placing new ones after line 12
 9     cluster: kubernetes # Name of a cluster defined above
10     namespace: default # Namespace [can be modified]
11     user: kubernetes-admin # Name of a user defined below
12   name: kubernetes-admin@kubernetes # Context name, can be customized
13 current-context: kubernetes-admin@kubernetes # Name of the context used by default [with multiple clusters, this decides which cluster kubectl talks to]
14 kind: Config
15 preferences: {}
16 users: # User information [lines 17-20 form one group]. Add one user per cluster [paste the copy after line 20]
17 - name: kubernetes-admin # User name, can be customized
18   user:
19     client-certificate-data: # The user's client certificate (base64). How to view it is described later
20     client-key-data: # The user's client private key (base64). How to view it is described later
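Since a context refers to its cluster and user only by name, a typo in any of these names leaves a context that points at nothing. The sketch below checks for that. check_contexts is a hypothetical helper, and it assumes the file keeps the layout that kubectl itself writes, with clusters:, contexts: and users: starting in the first column.

```shell
# Sketch: verify that every context names a cluster and a user defined in the
# same kubeconfig. Plain-text parsing only; this is not a real YAML parser.
check_contexts() {
    awk '
        /^[A-Za-z-]+:/ { section = $1 }   # top-level key, e.g. "clusters:"
        section == "clusters:" && $1 == "name:"              { clusters[$2] = 1 }
        section == "clusters:" && $1 == "-" && $2 == "name:" { clusters[$3] = 1 }
        section == "users:"    && $1 == "name:"              { users[$2] = 1 }
        section == "users:"    && $1 == "-" && $2 == "name:" { users[$3] = 1 }
        section == "contexts:" && $1 == "cluster:"           { need_cluster[$2] = 1 }
        section == "contexts:" && $1 == "user:"              { need_user[$2] = 1 }
        END {
            for (c in need_cluster) if (!(c in clusters)) { print "undefined cluster: " c; bad = 1 }
            for (u in need_user)    if (!(u in users))    { print "undefined user: " u; bad = 1 }
            exit (bad ? 1 : 0)
        }
    ' "${1:-$HOME/.kube/config}"
}
```

The function prints nothing and returns 0 when every reference resolves. Otherwise it names each missing cluster or user and returns 1.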

config code editing

According to the above explanation, make relevant edits. Here is the code I configured

[root@master .kube]# vim config

apiVersion: v1
clusters:
- cluster:
    certificate-authority-data:
    server: https://192.168.59.142:6443
  name: master
- cluster:
    certificate-authority-data:
    server: https://192.168.59.151:6443
  name: master1
contexts:
- context:
    cluster: master
    namespace: default
    user: ccx
  name: context
- context:
    cluster: master1
    namespace: default
    user: ccx1
  name: context1
current-context: context
kind: Config
preferences: {}
users:
- name: ccx
  user:
    client-certificate-data:
    client-key-data:
- name: ccx1
  user:
    client-certificate-data:
    client-key-data:

View certificate [key]

Because the current cluster's keys were deleted above, you need to copy them back, and also copy in the keys of the other cluster.

Each cluster has its own keys. Do not mix them up when copying.

Key file: ~/.kube/config [yes, the same file edited above]

- For the cluster you are editing on, take the keys from the config file backed up at the beginning [open it in a new window]. For the other cluster, take them from that cluster's own config file.

- The copying itself needs little explanation. With multiple clusters, check which cluster and user each context names, and make sure each key goes into the matching entry.

- Note: there is exactly one space before the key value and no space after it. Do not add or drop spaces.
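Spacing mistakes like these are hard to spot by eye in long base64 strings. As a rough sketch, the following helper (find_bad_spacing is a name I made up) prints every *-data line that has trailing whitespace, or that has no space or more than one space after the colon.

```shell
# Sketch: flag spacing problems on the three *-data lines of a kubeconfig.
find_bad_spacing() {
    # First pattern: whitespace at the end of the line.
    # Second pattern: a value glued to the colon, or two or more spaces after it.
    grep -nE -e '-data:.*[[:space:]]+$' -e '-data:([^ ]| {2,})' \
        "${1:-$HOME/.kube/config}"
}
```

It prints the offending lines with their line numbers, and prints nothing when the spacing is fine.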

After everything is filled in, the file looks like this:

[root@master .kube]# cat config

apiVersion: v1

clusters:

- cluster:

certificate-authority-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUM1ekNDQWMrZ0F3SUJBZ0lCQURBTkJna3Foa2lHOXcwQkFRc0ZBREFWTVJNd0VRWURWUVFERXdwcmRXSmwKY201bGRHVnpNQjRYRFRJeE1EY3dNakF4TXpVeU9Gb1hEVE14TURZek1EQXhNelV5T0Zvd0ZURVRNQkVHQTFVRQpBeE1LYTNWaVpYSnVaWFJsY3pDQ0FTSXdEUVlKS29aSWh2Y05BUUVCQlFBRGdnRVBBRENDQVFvQ2dnRUJBUGFBCnQwaG5LOEJTYWQ1VmhOY1Q0c2tDWUs5NVhWUndPQ3RJd29qVXNnU2lzTzJSazl5aG1hMnl2OE5EaTlmYmpzQ0sKaGd4VDJkZDI2Z2FyampXcTNXaWNmclNjVm5MV0ZXY1BZOHFyQ3hIYzFhbDh5N2t6YnMvaklhYkVsTm5QMXVFYwprQmpFYWtMMnIzN0cxOXpyM3BPcUd1S2p1OURUUGxpbmcrRjlPQTRHaURWRS9vNjVXM1ZQY3hFZmw4NVJ6REo4CmlaRGgvbjNiS2YrOEZSdTdCZHdpWDBidFVsUHIzMlVxNXROVzNsS3lJNjhsSkNCc2UvZ2ZnYkpkbFBXZjQ1SUUKRW43UUVqNlMyVm1JMHNISVA3MUNYNlpkMG83RlNPRWpmbGpGZ24xdWFxdnltdFFPN1lYcW9uWjR2bGlDeDA5TQpwT3VGaTZlZ2F1QkNYZWlTbUtFQ0F3RUFBYU5DTUVBd0RnWURWUjBQQVFIL0JBUURBZ0trTUE4R0ExVWRFd0VCCi93UUZNQU1CQWY4d0hRWURWUjBPQkJZRUZNN0Nmc2FudWRjVEZIdG5vZXk4aC9aUXFFWnJNQTBHQ1NxR1NJYjMKRFFFQkN3VUFBNElCQVFCZ1BFNmR5VVh5dDEySWdyVTRKTEFwQmZjUW5zODFPeFVWVkluTFhFL2hHQlZVY0Ywagp3d3F4cG9FUVRZcDFpTytQczlZN0NBazVSdzJvMnJkNlhScDVhdFllZVo4V1Z5YXZXcGhsLzkxd2d1d1Yrdm9oCmMwMFNmWExnVEpkbGZKY250TVNzRUxaQkU5dlprZFVJa2dCTXlOelUxVk0wdnpySDV4WEEvTHJmNW9LUkVTdWUKNk5iRGcyMmJzQlk5MnpINUxnNmEraWxKRTVyKzgvS1JFbVRUL2VlUmZFdVRSMnMwSHN4ZEl0cENMell2RndicgorL2pEK084RHlkcFFLMUxWaDREbyt2ZFQvVlBYb2hNU05oekJTVzlmdXg0OWV1M3dsazkrL25mUnRoeWg3TjZHCjRzTVA0OGVacUJsTm5JRzRzdU1PQW9UejdMeTlKZ2JSWXd5WQotLS0tLUVORCBDRVJUSUZJQ0FURS0tLS0tCg==

server: https://192.168.59.142:6443

name: master

- cluster:

certificate-authority-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUM1ekNDQWMrZ0F3SUJBZ0lCQURBTkJna3Foa2lHOXcwQkFRc0ZBREFWTVJNd0VRWURWUVFERXdwcmRXSmwKY201bGRHVnpNQjRYRFRJeE1EY3dNakF4TXpVeU9Gb1hEVE14TURZek1EQXhNelV5T0Zvd0ZURVRNQkVHQTFVRQpBeE1LYTNWaVpYSnVaWFJsY3pDQ0FTSXdEUVlKS29aSWh2Y05BUUVCQlFBRGdnRVBBRENDQVFvQ2dnRUJBUGFBCnQwaG5LOEJTYWQ1VmhOY1Q0c2tDWUs5NVhWUndPQ3RJd29qVXNnU2lzTzJSazl5aG1hMnl2OE5EaTlmYmpzQ0sKaGd4VDJkZDI2Z2FyampXcTNXaWNmclNjVm5MV0ZXY1BZOHFyQ3hIYzFhbDh5N2t6YnMvaklhYkVsTm5QMXVFYwprQmpFYWtMMnIzN0cxOXpyM3BPcUd1S2p1OURUUGxpbmcrRjlPQTRHaURWRS9vNjVXM1ZQY3hFZmw4NVJ6REo4CmlaRGgvbjNiS2YrOEZSdTdCZHdpWDBidFVsUHIzMlVxNXROVzNsS3lJNjhsSkNCc2UvZ2ZnYkpkbFBXZjQ1SUUKRW43UUVqNlMyVm1JMHNISVA3MUNYNlpkMG83RlNPRWpmbGpGZ24xdWFxdnltdFFPN1lYcW9uWjR2bGlDeDA5TQpwT3VGaTZlZ2F1QkNYZWlTbUtFQ0F3RUFBYU5DTUVBd0RnWURWUjBQQVFIL0JBUURBZ0trTUE4R0ExVWRFd0VCCi93UUZNQU1CQWY4d0hRWURWUjBPQkJZRUZNN0Nmc2FudWRjVEZIdG5vZXk4aC9aUXFFWnJNQTBHQ1NxR1NJYjMKRFFFQkN3VUFBNElCQVFCZ1BFNmR5VVh5dDEySWdyVTRKTEFwQmZjUW5zODFPeFVWVkluTFhFL2hHQlZVY0Ywagp3d3F4cG9FUVRZcDFpTytQczlZN0NBazVSdzJvMnJkNlhScDVhdFllZVo4V1Z5YXZXcGhsLzkxd2d1d1Yrdm9oCmMwMFNmWExnVEpkbGZKY250TVNzRUxaQkU5dlprZFVJa2dCTXlOelUxVk0wdnpySDV4WEEvTHJmNW9LUkVTdWUKNk5iRGcyMmJzQlk5MnpINUxnNmEraWxKRTVyKzgvS1JFbVRUL2VlUmZFdVRSMnMwSHN4ZEl0cENMell2RndicgorL2pEK084RHlkcFFLMUxWaDREbyt2ZFQvVlBYb2hNU05oekJTVzlmdXg0OWV1M3dsazkrL25mUnRoeWg3TjZHCjRzTVA0OGVacUJsTm5JRzRzdU1PQW9UejdMeTlKZ2JSWXd5WQotLS0tLUVORCBDRVJUSUZJQ0FURS0tLS0tCg==

server: https://192.168.59.151:6443

name: master1

contexts:

- context:

cluster: master

namespace: default

user: ccx

name: context

- context:

cluster: master1

namespace: default

user: ccx1

name: context1

current-context: context

kind: Config

preferences: {}

users:

- name: ccx

user:

client-certificate-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSURJVENDQWdtZ0F3SUJBZ0lJWEZvemlkeHc5dGt3RFFZSktvWklodmNOQVFFTEJRQXdGVEVUTUJFR0ExVUUKQXhNS2EzVmlaWEp1WlhSbGN6QWVGdzB5TVRBM01ESXdNVE0xTWpoYUZ3MHlNakEzTURJd01UTTFNekphTURReApGekFWQmdOVkJBb1REbk41YzNSbGJUcHRZWE4wWlhKek1Sa3dGd1lEVlFRREV4QnJkV0psY201bGRHVnpMV0ZrCmJXbHVNSUlCSWpBTkJna3Foa2lHOXcwQkFRRUZBQU9DQVE4QU1JSUJDZ0tDQVFFQXkyRVN4VGsxY2pCQWRpUVUKNnVNd2Q1dm4rNy91TjVjakxpUE9SNTd3d1hvRFo0SUpXMEFtYWIxNzBNUGN2eGRIdW9uS21aQUxJSlFtMFNkWgpLYjFmNDZMd09MTUU0bjBoVjNFZ1ZidlZNUktBM09vUU05dm1rY2w5VlB0SXQvY0YyLzJlMWhrT3RkSEhZZ1NFCnVEd0lGL3NhQ1JjK1ZMWjNhbDRsTkU5TVJKZThaVUxUU3ovUGloRlNiaEkyMzVHaWNMS0lsNzJiYUw3UzFuVUcKVHVOU0RpM3A4ckl1NWZldkNyelBNN0tYdnEremdJK0o3N3B6d29YeFlBbzEvVkNiSEZmalk1SEt3TGZIejZEZQpHdXFGY2FUVkZObmhaek1NcXVvQmFmNFZiYWlBZDJHb04yNWlTTmtxYWFWTS8xRTc2cVdUTjVIQnkwcE5kQmVnCkNGZEtnd0lEQVFBQm8xWXdWREFPQmdOVkhROEJBZjhFQkFNQ0JhQXdFd1lEVlIwbEJBd3dDZ1lJS3dZQkJRVUgKQXdJd0RBWURWUjBUQVFIL0JBSXdBREFmQmdOVkhTTUVHREFXZ0JUT3duN0dwN25YRXhSN1o2SHN2SWYyVUtoRwphekFOQmdrcWhraUc5dzBCQVFzRkFBT0NBUUVBWG5FU0VDYTZTMDIxQXdiMXd5SlFBbUxHRVpMRFNyREpYaVZRCkhGc25mRGN1WTNMendRdVhmRUJhNkRYbjZQUVRmbUJ1cFBOdlNJbWdYNGQzQ3MzZVhjQWFmalhrOVlnMk94TjUKRTh1a3FaSmhIbDhxVmVGNzBhUTBTME14R3NkVVVTTDJscUNFWGEzMVB4SWxVKzBVOG9zblFIM05vdGZVMVJIMgoxaGtrMi8zeWtvUHJxRXpsbEp3enFTRHAvVDBmcS91MHI2SlkzdWZkKzRXaHVTSGRQU2hvN1JOT0FJVitUcllVCklXOTY1c3lyeGp6TTUwcFNIS3IralJQNnZNUmNaRm42UjhhZ1dvVXBPMnMzcFVVVUtNWjdxa2tOWWNiRmQ0M3IKR2xoWDB5M1U3MW1VUHpaci9FYjQvUDBGcytSUlV6eGFCRDFJVUl2NjdKa0trc2UzRFE9PQotLS0tLUVORCBDRVJUSUZJQ0FURS0tLS0tCg==

client-key-data: LS0tLS1CRUdJTiBSU0EgUFJJVkFURSBLRVktLS0tLQpNSUlFb3dJQkFBS0NBUUVBeTJFU3hUazFjakJBZGlRVTZ1TXdkNXZuKzcvdU41Y2pMaVBPUjU3d3dYb0RaNElKClcwQW1hYjE3ME1QY3Z4ZEh1b25LbVpBTElKUW0wU2RaS2IxZjQ2THdPTE1FNG4waFYzRWdWYnZWTVJLQTNPb1EKTTl2bWtjbDlWUHRJdC9jRjIvMmUxaGtPdGRISFlnU0V1RHdJRi9zYUNSYytWTFozYWw0bE5FOU1SSmU4WlVMVApTei9QaWhGU2JoSTIzNUdpY0xLSWw3MmJhTDdTMW5VR1R1TlNEaTNwOHJJdTVmZXZDcnpQTTdLWHZxK3pnSStKCjc3cHp3b1h4WUFvMS9WQ2JIRmZqWTVIS3dMZkh6NkRlR3VxRmNhVFZGTm5oWnpNTXF1b0JhZjRWYmFpQWQyR28KTjI1aVNOa3FhYVZNLzFFNzZxV1RONUhCeTBwTmRCZWdDRmRLZ3dJREFRQUJBb0lCQUVtQ3BNNDBoMlRtbStZWAoxSmV4MW1ybEoweVBhd01jMWRKdmpyZkVjekQ3Y1ErUXFPRWFwc2ZCZldkUDVCSU4wQmRVaHE1S3FqcjBVYk4zCmpYclF3RC8vUE9UQmtCcHRNQWZ6RThUcFIzMmRPb2FlODR4TEIyUGFlRHFuT1BtRmg5Q2tNeTBma1htV2dZS2sKTDNTSC9rVHN0ZFJqV2x3ME42VnlzZS9lV2FyUXFEZXJzNFFTU2xaVGxlcUVGdVFadk1rNXp4QVZmYjEzUFBJcApORTZySW9pajZIOVdvWmdoMmYzNDdyME52VmJpekdYNUE5RTNjOG51NkVWQlpqS0FqeWRaTkpyU2VRTEtMZDF3CmtGL0J1ZTM2RkltRWtLdlByNTAxSnJ4VEJEUnFsMEdkUnpxY3RxTDdkaHdKa0t4TUt1akpCeGFucTNRSGQzSmcKR0VnMFBNRUNnWUVBMWlhOHZMM1crbnRjNEZOcDZjbzJuaHVIcTNKdGVrQlVILzlrUnFUYlFnaUpBVW9ld3BGdwprRjROMUhsR21WWngwWkVTTVhkTGozYXdzY2lWUFRqZVRJK216VDA0MUVyRzdNVnhuM05KZy96VlhUY0FITmJqCmZhR3o1UlZwaERieUZtRTRvcUlpWHFuY050Y2ZmRE9XQ3NNU2ZCODlPMW1LTFpEYjJ4WjZsQ2NDZ1lFQTh4OXgKZWhFeHNKRFljOXFTSnlCcVQyNlozek5GRmlWV1JNUE9NWEYrbFNBZDc4eWpVanZEaDg3NXRzdG4wV1pBY05kUApQcGg1Tm1wbzVNUTVSMmE5Z2J1NGVxZjArZ2o0WHM0RytySW5iYlE2Q3ZwZW9RYVVtZUJ3UVBqcXJFZnVPZUpvCjU1VnRYNGRHc0EzOHV0dllycmsyYU16ejdmcnRON3JjNVo5VlJFVUNnWUVBeFp0MUtVeWI1UUtVajBNcFJsd2IKemdWbFNXVUxkSFdMcXdNRlN0S3dwOXdzWUE0L0dCY1FvWWJJaURsb1ZmSVlrT0ttd1JKdG5QSk8xWjViWitUago3QTNhUXlTdEhlZnFhMjArRFg1YVpmcVYvNi9TNE1uQm5abnE0QWJFR1FhQ21QZ1pSS2tMd2dKSGZDdEJtR0FaCm9kQ2phL2wvalJad2xOOUlvSCs3bUowQ2dZQUVEUTRTL3A1WlZ0Q0VmYXZad3d5Q2JsRmFDcnluOWM5T0xnVU4KaGRxYUdZTG1MLzY0ckE1Q0FRemdJdHVEL2JRdExTbEEzY0dIU3BhYzJUZ3JIR2NqOWtESXFtdkdqc2UwckxJcApFemJjK1JmT2Z3VjhvV053ZlBEaDVFUGt3djRSTU5pV28wTERTTG5BelRyYzBqVDJGRmYzdnhLQmNLRHJRTTNWCmRhWXlFUUtCZ0ROMUVRNUhYdGlEbi9nMll
UMzVwQWNNZjNrbVZFYTVPWHZONDFXSHJOZHhieUJJZ2Fkc2pUZjIKZTJiZnBKNzBJcHFqV2JITG1ENzZjUzNTZmFXSDFCVDdiRnZ4cWg4dUtXUXFoeWhzdTZWZ1VWNWNyQnp5cVVtNgpRcXFoeEx2STZsaWgrRGUwU3F3eDh5b1J5QUNXU0NYRDM2K3JmbGZoZnExd2pqdFF1VHBsCi0tLS0tRU5EIFJTQSBQUklWQVRFIEtFWS0tLS0tCg==

- name: ccx1

user:

client-certificate-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSURJVENDQWdtZ0F3SUJBZ0lJWEZvemlkeHc5dGt3RFFZSktvWklodmNOQVFFTEJRQXdGVEVUTUJFR0ExVUUKQXhNS2EzVmlaWEp1WlhSbGN6QWVGdzB5TVRBM01ESXdNVE0xTWpoYUZ3MHlNakEzTURJd01UTTFNekphTURReApGekFWQmdOVkJBb1REbk41YzNSbGJUcHRZWE4wWlhKek1Sa3dGd1lEVlFRREV4QnJkV0psY201bGRHVnpMV0ZrCmJXbHVNSUlCSWpBTkJna3Foa2lHOXcwQkFRRUZBQU9DQVE4QU1JSUJDZ0tDQVFFQXkyRVN4VGsxY2pCQWRpUVUKNnVNd2Q1dm4rNy91TjVjakxpUE9SNTd3d1hvRFo0SUpXMEFtYWIxNzBNUGN2eGRIdW9uS21aQUxJSlFtMFNkWgpLYjFmNDZMd09MTUU0bjBoVjNFZ1ZidlZNUktBM09vUU05dm1rY2w5VlB0SXQvY0YyLzJlMWhrT3RkSEhZZ1NFCnVEd0lGL3NhQ1JjK1ZMWjNhbDRsTkU5TVJKZThaVUxUU3ovUGloRlNiaEkyMzVHaWNMS0lsNzJiYUw3UzFuVUcKVHVOU0RpM3A4ckl1NWZldkNyelBNN0tYdnEremdJK0o3N3B6d29YeFlBbzEvVkNiSEZmalk1SEt3TGZIejZEZQpHdXFGY2FUVkZObmhaek1NcXVvQmFmNFZiYWlBZDJHb04yNWlTTmtxYWFWTS8xRTc2cVdUTjVIQnkwcE5kQmVnCkNGZEtnd0lEQVFBQm8xWXdWREFPQmdOVkhROEJBZjhFQkFNQ0JhQXdFd1lEVlIwbEJBd3dDZ1lJS3dZQkJRVUgKQXdJd0RBWURWUjBUQVFIL0JBSXdBREFmQmdOVkhTTUVHREFXZ0JUT3duN0dwN25YRXhSN1o2SHN2SWYyVUtoRwphekFOQmdrcWhraUc5dzBCQVFzRkFBT0NBUUVBWG5FU0VDYTZTMDIxQXdiMXd5SlFBbUxHRVpMRFNyREpYaVZRCkhGc25mRGN1WTNMendRdVhmRUJhNkRYbjZQUVRmbUJ1cFBOdlNJbWdYNGQzQ3MzZVhjQWFmalhrOVlnMk94TjUKRTh1a3FaSmhIbDhxVmVGNzBhUTBTME14R3NkVVVTTDJscUNFWGEzMVB4SWxVKzBVOG9zblFIM05vdGZVMVJIMgoxaGtrMi8zeWtvUHJxRXpsbEp3enFTRHAvVDBmcS91MHI2SlkzdWZkKzRXaHVTSGRQU2hvN1JOT0FJVitUcllVCklXOTY1c3lyeGp6TTUwcFNIS3IralJQNnZNUmNaRm42UjhhZ1dvVXBPMnMzcFVVVUtNWjdxa2tOWWNiRmQ0M3IKR2xoWDB5M1U3MW1VUHpaci9FYjQvUDBGcytSUlV6eGFCRDFJVUl2NjdKa0trc2UzRFE9PQotLS0tLUVORCBDRVJUSUZJQ0FURS0tLS0tCg==

client-key-data: LS0tLS1CRUdJTiBSU0EgUFJJVkFURSBLRVktLS0tLQpNSUlFb3dJQkFBS0NBUUVBeTJFU3hUazFjakJBZGlRVTZ1TXdkNXZuKzcvdU41Y2pMaVBPUjU3d3dYb0RaNElKClcwQW1hYjE3ME1QY3Z4ZEh1b25LbVpBTElKUW0wU2RaS2IxZjQ2THdPTE1FNG4waFYzRWdWYnZWTVJLQTNPb1EKTTl2bWtjbDlWUHRJdC9jRjIvMmUxaGtPdGRISFlnU0V1RHdJRi9zYUNSYytWTFozYWw0bE5FOU1SSmU4WlVMVApTei9QaWhGU2JoSTIzNUdpY0xLSWw3MmJhTDdTMW5VR1R1TlNEaTNwOHJJdTVmZXZDcnpQTTdLWHZxK3pnSStKCjc3cHp3b1h4WUFvMS9WQ2JIRmZqWTVIS3dMZkh6NkRlR3VxRmNhVFZGTm5oWnpNTXF1b0JhZjRWYmFpQWQyR28KTjI1aVNOa3FhYVZNLzFFNzZxV1RONUhCeTBwTmRCZWdDRmRLZ3dJREFRQUJBb0lCQUVtQ3BNNDBoMlRtbStZWAoxSmV4MW1ybEoweVBhd01jMWRKdmpyZkVjekQ3Y1ErUXFPRWFwc2ZCZldkUDVCSU4wQmRVaHE1S3FqcjBVYk4zCmpYclF3RC8vUE9UQmtCcHRNQWZ6RThUcFIzMmRPb2FlODR4TEIyUGFlRHFuT1BtRmg5Q2tNeTBma1htV2dZS2sKTDNTSC9rVHN0ZFJqV2x3ME42VnlzZS9lV2FyUXFEZXJzNFFTU2xaVGxlcUVGdVFadk1rNXp4QVZmYjEzUFBJcApORTZySW9pajZIOVdvWmdoMmYzNDdyME52VmJpekdYNUE5RTNjOG51NkVWQlpqS0FqeWRaTkpyU2VRTEtMZDF3CmtGL0J1ZTM2RkltRWtLdlByNTAxSnJ4VEJEUnFsMEdkUnpxY3RxTDdkaHdKa0t4TUt1akpCeGFucTNRSGQzSmcKR0VnMFBNRUNnWUVBMWlhOHZMM1crbnRjNEZOcDZjbzJuaHVIcTNKdGVrQlVILzlrUnFUYlFnaUpBVW9ld3BGdwprRjROMUhsR21WWngwWkVTTVhkTGozYXdzY2lWUFRqZVRJK216VDA0MUVyRzdNVnhuM05KZy96VlhUY0FITmJqCmZhR3o1UlZwaERieUZtRTRvcUlpWHFuY050Y2ZmRE9XQ3NNU2ZCODlPMW1LTFpEYjJ4WjZsQ2NDZ1lFQTh4OXgKZWhFeHNKRFljOXFTSnlCcVQyNlozek5GRmlWV1JNUE9NWEYrbFNBZDc4eWpVanZEaDg3NXRzdG4wV1pBY05kUApQcGg1Tm1wbzVNUTVSMmE5Z2J1NGVxZjArZ2o0WHM0RytySW5iYlE2Q3ZwZW9RYVVtZUJ3UVBqcXJFZnVPZUpvCjU1VnRYNGRHc0EzOHV0dllycmsyYU16ejdmcnRON3JjNVo5VlJFVUNnWUVBeFp0MUtVeWI1UUtVajBNcFJsd2IKemdWbFNXVUxkSFdMcXdNRlN0S3dwOXdzWUE0L0dCY1FvWWJJaURsb1ZmSVlrT0ttd1JKdG5QSk8xWjViWitUago3QTNhUXlTdEhlZnFhMjArRFg1YVpmcVYvNi9TNE1uQm5abnE0QWJFR1FhQ21QZ1pSS2tMd2dKSGZDdEJtR0FaCm9kQ2phL2wvalJad2xOOUlvSCs3bUowQ2dZQUVEUTRTL3A1WlZ0Q0VmYXZad3d5Q2JsRmFDcnluOWM5T0xnVU4KaGRxYUdZTG1MLzY0ckE1Q0FRemdJdHVEL2JRdExTbEEzY0dIU3BhYzJUZ3JIR2NqOWtESXFtdkdqc2UwckxJcApFemJjK1JmT2Z3VjhvV053ZlBEaDVFUGt3djRSTU5pV28wTERTTG5BelRyYzBqVDJGRmYzdnhLQmNLRHJRTTNWCmRhWXlFUUtCZ0ROMUVRNUhYdGlEbi9nMll
UMzVwQWNNZjNrbVZFYTVPWHZONDFXSHJOZHhieUJJZ2Fkc2pUZjIKZTJiZnBKNzBJcHFqV2JITG1ENzZjUzNTZmFXSDFCVDdiRnZ4cWg4dUtXUXFoeWhzdTZWZ1VWNWNyQnp5cVVtNgpRcXFoeEx2STZsaWgrRGUwU3F3eDh5b1J5QUNXU0NYRDM2K3JmbGZoZnExd2pqdFF1VHBsCi0tLS0tRU5EIFJTQSBQUklWQVRFIEtFWS0tLS0tCg==

[root@master .kube]#

At this point, the multi cluster configuration is complete.

Handling the connection refused error on a cluster's port 6443

- The error message says that the IP address and port of the target cluster cannot be reached

The connection to the server 192.168.59.151:6443 was refused - did you specify the right host or port?

- Troubleshooting steps:

- 1. Check whether the docker service is active: systemctl is-active docker

- 2. Check whether the kubelet service is active: systemctl is-active kubelet

- 3. Check whether the configuration file ~/.kube/config is correct

- 4. Check whether any images are missing: docker images [if an image is missing, add it back, then restart the two services above in order. Do not try to start the containers by hand; once the services restart, the containers are brought up automatically in order]

- 5. Check whether the port is listening: netstat -ntlp | grep 6443

- 6. Check whether the containers are running normally: docker ps [there should be about 17 lines]

- 7. Check the system log: journalctl -xeu kubelet

[root@master ~]# systemctl is-active docker

active

[root@master ~]# systemctl is-active kubelet

active

[root@master ~]#

[root@master ~]# netstat -ntlp | grep 6443

tcp6 0 0 :::6443 :::* LISTEN 19672/kube-apiserve

[root@master ~]#

[root@master ~]# docker ps | wc -l

17

[root@master ~]#
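The checks above run on the master itself. From the machine where kubectl runs, it also helps to confirm that each server address in the kubeconfig accepts TCP connections at all. Here is a minimal sketch, assuming bash and the coreutils timeout command are available. list_servers, port_open and probe_servers are hypothetical helper names, and the sed pattern only handles host:port addresses like the ones in this article.

```shell
# Sketch: list the API server endpoints in a kubeconfig and probe each one.
list_servers() {
    # Prints "host port" for every "server: https://host:port" line.
    sed -nE 's|^[[:space:]]*server:[[:space:]]*https?://([^:/]+):([0-9]+).*$|\1 \2|p' \
        "${1:-$HOME/.kube/config}"
}

port_open() {
    # Uses bash's /dev/tcp pseudo-device; succeeds only if the TCP connect succeeds.
    timeout 3 bash -c "exec 3<>/dev/tcp/$1/$2" 2>/dev/null
}

probe_servers() {
    list_servers "$1" | while read -r host port; do
        if port_open "$host" "$port"; then
            echo "$host:$port reachable"
        else
            echo "$host:$port NOT reachable"
        fi
    done
}
```

Running probe_servers with no argument checks every server entry in ~/.kube/config.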

- My case turned out to be a special one. All services on the problem cluster were normal, and the cluster worked when used on its own, yet none of the troubleshooting above turned anything up. Finally I remembered that my second cluster was cloned from the first, and the configuration file confirmed that the keys of the two clusters are identical. In effect the two clusters are the same cluster, and simply changing the IP address does not make the clone usable.

The error currently looks like this

[root@master ~]# kubectl config use-context context1

Switched to context "context1".

[root@master ~]# kubectl config get-contexts

CURRENT NAME CLUSTER AUTHINFO NAMESPACE

context master ccx default

* context1 master1 ccx1 default

[root@master ~]#

[root@master ~]# kubectl get nodes

The connection to the server 192.168.59.151:6443 was refused - did you specify the right host or port?

[root@master ~]#

- To verify this idea, I changed the IP of the second cluster in the configuration file to that of the first cluster and modified nothing else [the keys are still those of the second cluster], then tried again. This time the access succeeded

[root@master ~]# cat .kube/config | grep server

server: https://192.168.59.142:6443

server: https://192.168.59.142:6443

[root@master ~]#

[root@master ~]# kubectl config get-contexts

CURRENT NAME CLUSTER AUTHINFO NAMESPACE

context master ccx default

* context1 master1 ccx1 default

[root@master ~]#

[root@master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master Ready control-plane,master 6d v1.21.0

node1 Ready <none> 6d v1.21.0

node2 Ready <none> 5d22h v1.21.0

[root@master ~]#

[root@master ~]# kubectl get pods

No resources found in default namespace.

[root@master ~]# kubectl get pods -n ccx

NAME READY STATUS RESTARTS AGE

centos-7846bf67c6-s9tmg 0/1 ImagePullBackOff 0 23h

nginx-test-795d659f45-j9m9b 0/1 ImagePullBackOff 0 24h

nginx-test-795d659f45-txf8l 0/1 ImagePullBackOff 0 24h

[root@master ~]#

- The conclusion: a master node (cluster) cannot simply be cloned! This pitfall brought me close to tears and cost me most of a day.

Cloning is not completely ruled out, though. My blog post "[kubernetes] k8s installation details [create cluster, join cluster, kick cluster, reset cluster...]" describes how to reset a cluster. Reset the cloned cluster as described there, repeat the steps above, and everything works normally
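Before merging the configuration of two clusters, it may be worth checking whether they share the same CA data, which is what gave the clone away in my case. A small sketch follows. ca_data and duplicate_ca are hypothetical helper names; they read the certificate-authority-data lines as plain text and do not validate the certificates.

```shell
# Sketch: find CA values that appear under more than one cluster entry.
ca_data() {
    # Prints each certificate-authority-data value, one per line.
    awk '$1 == "certificate-authority-data:" { print $2 }' "${1:-$HOME/.kube/config}"
}

duplicate_ca() {
    # uniq -d keeps only values that occur more than once in the sorted list.
    ca_data "$1" | sort | uniq -d
}
```

duplicate_ca prints nothing when every cluster entry has its own CA. Any value it prints is shared by at least two cluster entries.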

[root@master ~]# cat .kube/config| grep server

server: https://192.168.59.142:6443

server: https://192.168.59.151:6443

[root@master ~]#

[root@master ~]# kubectl config get-contexts

CURRENT NAME CLUSTER AUTHINFO NAMESPACE

* context master ccx default

context1-new master1 ccx1 default

[root@master ~]# kubecon^C

[root@master ~]#

[root@master ~]# kubectl config use-context context1-new

Switched to context "context1-new".

[root@master ~]# kubectl get pods

No resources found in default namespace.

[root@master ~]# kubectl get pods -n ccx

No resources found in ccx namespace.

[root@master ~]# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-78d6f96c7b-q25lf 1/1 Running 0 7m36s

calico-node-g2wfj 1/1 Running 0 7m36s

calico-node-gtfbc 1/1 Running 0 7m36s

calico-node-nd29t 1/1 Running 0 6m49s

coredns-545d6fc579-j4ckc 1/1 Running 0 12m

coredns-545d6fc579-qn4kz 1/1 Running 0 12m

etcd-master2 1/1 Running 0 12m

kube-apiserver-master2 1/1 Running 1 12m

kube-controller-manager-master2 1/1 Running 0 12m

kube-proxy-c4x8j 1/1 Running 0 12m

kube-proxy-khh2z 1/1 Running 0 10m

kube-proxy-pckf7 1/1 Running 0 6m49s

kube-scheduler-master2 1/1 Running 0 12m

[root@master ~]#

[root@master ~]#

kubectl get nodes does not show a node

- Other services are normal [see the checks above], but a node is missing from the node list on the master, as shown below

[root@master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master Ready <none> 16m v1.21.0

node1 Ready <none> 2m19s v1.21.0

[root@master ~]#

- Solution

Log in to the missing node, restart the docker and kubelet services, then return to the master node and check the node information again

[root@master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master Ready <none> 16m v1.21.0

node1 Ready <none> 2m19s v1.21.0

[root@master ~]#

[root@master ~]# ssh node2

root@node2's password:

Last login: Fri Jul 2 12:19:44 2021 from 192.168.59.1

[root@node2 ~]# systemctl restart docker

[root@node2 ~]# systemctl restart kubelet

[root@node2 ~]#

[root@node2 ~]# exit

logout

Connection to node2 closed.

[root@master ~]#

[root@master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master Ready <none> 20m v1.21.0

node1 Ready <none> 7m5s v1.21.0

node2 NotReady <none> 2s v1.21.0

[root@master ~]#

Method 2: command generation

config multi cluster management (contexts)

View all cluster information

Command: kubectl config get-contexts

[root@master ~]# kubectl config get-contexts

CURRENT NAME CLUSTER AUTHINFO NAMESPACE

context master ccx default

* context1 master1 ccx1 default

[root@master ~]#

- Explanation of the columns

- CURRENT: * marks the context (cluster) currently in use

- NAME: context name

- CLUSTER: cluster name

- AUTHINFO: user name

- NAMESPACE: namespace

Context switching [cluster switching]

- Command: kubectl config use-context <context name> [the context name is the NAME column of kubectl config get-contexts]

- For example, I switch to the context named context

The * marks the current context.

[root@master ~]# kubectl config get-contexts

CURRENT NAME CLUSTER AUTHINFO NAMESPACE

context master ccx default

* context1 master1 ccx1 default

[root@master ~]#

[root@master ~]# kubectl config use-context context

Switched to context "context".

[root@master ~]#

[root@master ~]# kubectl config get-contexts

CURRENT NAME CLUSTER AUTHINFO NAMESPACE

* context master ccx default

context1 master1 ccx1 default

[root@master ~]#
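Switching contexts changes current-context in the file. For a quick look across all clusters, you can leave current-context alone and pass kubectl's --context flag on each command instead. A sketch, with each_context as a hypothetical helper name:

```shell
# Sketch: run one kubectl command against every context in the kubeconfig.
each_context() {
    kubectl config get-contexts -o name | while read -r ctx; do
        echo "== $ctx =="
        # --context applies to this call only; current-context is not modified.
        # stdin is redirected so the command cannot consume the list of contexts.
        kubectl --context "$ctx" "$@" </dev/null
    done
}
```

For example, each_context get nodes lists the nodes of every cluster in turn.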

Rename context

- Command: kubectl config rename-context <current name> <new name>

- For example, I rename context1 to context1-new

[root@master ~]# kubectl config get-contexts

CURRENT NAME CLUSTER AUTHINFO NAMESPACE

* context master ccx default

context1 master1 ccx1 default

[root@master ~]#

[root@master ~]# kubectl config rename-context context1 context1-new

Context "context1" renamed to "context1-new".

[root@master ~]#

[root@master ~]# kubectl config get-contexts

CURRENT NAME CLUSTER AUTHINFO NAMESPACE

* context master ccx default

context1-new master1 ccx1 default

[root@master ~]#

Create a context [not recommended]

- Command: kubectl config set-context <custom context name>

- Creating a context on its own like this is meaningless, because it has no cluster and no user assigned. [It has to be combined with the "Modify information" step below]

For example, I create a context named my-context, and its other columns are empty

[root@master ~]# kubectl config set-context my-context

Context "my-context" created.

[root@master ~]#

[root@master ~]# kubectl config get-contexts

CURRENT NAME CLUSTER AUTHINFO NAMESPACE

context master ccx default

* context1 master1 ccx1 default

my-context

[root@master ~]#

- As shown above, creating the context adds an entry to ~/.kube/config, but without any of the key information. You have to specify the cluster, namespace and user by modifying the context afterwards

- context:
    cluster: ""
    user: ""
  name: my-context

Modify information

- This serves two purposes

- 1. Modify a context that already exists

- 2. Fill in a context [add the remaining information after creating it by command]

- Syntax:

kubectl config set-context my-context --namespace=ccx --cluster=my-context --user=my-user

- my-context: the name of an existing context

- --namespace: must be an existing namespace

- --cluster and --user: names of your choosing [they only change what the context refers to; they do not create the cluster or the user]

- Commands for looking up the non-custom values

- Context view: kubectl config get-contexts

- Namespace view: kubectl get ns

A note on the error caused by adding new information

- As mentioned earlier, I do not recommend using the modify command to add a new context, because:

A cluster has only one set of keys, so in principle it has only one context. If you create a context by command and fill in custom values, only the entry below is written to ~/.kube/config; neither the cluster nor the user it names exists. Using this context therefore fails with an error about port 8080: the context resolves to no server and no user, so kubectl cannot find the cluster and tries localhost:8080 instead [in this case, you can point cluster and user at an existing cluster and user]

- context:
    cluster: my-context
    namespace: ccx
    user: my-user
  name: my-context

- For example, I added information to the my-context created by the command above

After switching to this context, I am told that the connection to port 8080 was refused [this is the error described above. It is a deliberately wrong example: do not create a context with the command alone; define it directly in the configuration file instead]

[root@master ~]# kubectl config set-context my-context --namespace=ccx --cluster=my-context --user=my-user

Context "my-context" modified.

[root@master ~]#

[root@master ~]# kubectl config get-contexts

CURRENT NAME CLUSTER AUTHINFO NAMESPACE

* context master ccx default

context1 master1 ccx1 default

my-context my-context my-user ccx

[root@master ~]#

[root@master ~]# kubectl config use-context my-context

Switched to context "my-context".

[root@master ~]#

[root@master ~]# kubectl config get-contexts

CURRENT NAME CLUSTER AUTHINFO NAMESPACE

context master ccx default

context1 master1 ccx1 default

* my-context my-context my-user ccx

[root@master ~]#

[root@master ~]# kubectl get pods

The connection to the server localhost:8080 was refused - did you specify the right host or port?

[root@master ~]# kubectl get nodes

The connection to the server localhost:8080 was refused - did you specify the right host or port?

[root@master ~]#

[root@master ~]# netstat -ntlp | grep 8080

[root@master ~]#
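The error goes away once the names in the context refer to entries that exist. kubectl config can create those entries too, with set-cluster and set-credentials. The following sketch strings the three commands together. add_cluster_context is a hypothetical helper, the -admin user suffix is my own naming, and the certificate file paths passed in are placeholders for files copied from the other cluster.

```shell
# Sketch: create the cluster and user entries first, then the context that
# binds them, so the context never points at names that do not exist.
add_cluster_context() {
    local name server ca cert key
    name="$1"; server="$2"; ca="$3"; cert="$4"; key="$5"
    # --embed-certs=true stores the file contents in the kubeconfig as base64
    kubectl config set-cluster "$name" --server="$server" \
        --certificate-authority="$ca" --embed-certs=true &&
    kubectl config set-credentials "$name-admin" \
        --client-certificate="$cert" --client-key="$key" --embed-certs=true &&
    kubectl config set-context "$name" --cluster="$name" \
        --user="$name-admin" --namespace=default
}
```

A call might look like add_cluster_context master1 https://192.168.59.151:6443 ./ca.crt ./admin.crt ./admin.key, where the three file names are placeholders.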

Delete context

- Command: kubectl config delete-context <context name>

If you are not sure what you are doing, do not delete anything. [Usually a cluster has only one context, and the cluster cannot be used once that context is deleted. The command is generally used to remove the extra entries of a multi-cluster setup]

After a context is deleted with the command, the related content in the configuration file ~/.kube/config is deleted as well

- For example, I delete the context named my-context [this is the unusable context I created by command above, so it can simply be removed]

[root@master ~]#

[root@master ~]# kubectl config get-contexts

CURRENT NAME CLUSTER AUTHINFO NAMESPACE

* context master ccx default

context1 master1 ccx1 default

my-context my-context my-user ccx

[root@master ~]# kubectl config delete-context my-context

deleted context my-context from /root/.kube/config

[root@master ~]#

[root@master ~]# kubectl config get-contexts

CURRENT NAME CLUSTER AUTHINFO NAMESPACE

* context master ccx default

context1 master1 ccx1 default

[root@master ~]#

[root@master ~]# cat ~/.kube/config | grep my-con

[root@master ~]#