1, Foreword

The previous article, Research on distributed transaction principle of Seata AT mode, covered how Seata's AT mode works. In this article, we use Spring Cloud and Spring Cloud Alibaba to integrate Seata for distributed transaction control, and use Nacos as the registration center so that Seata registers itself with Nacos.

2, Environmental preparation

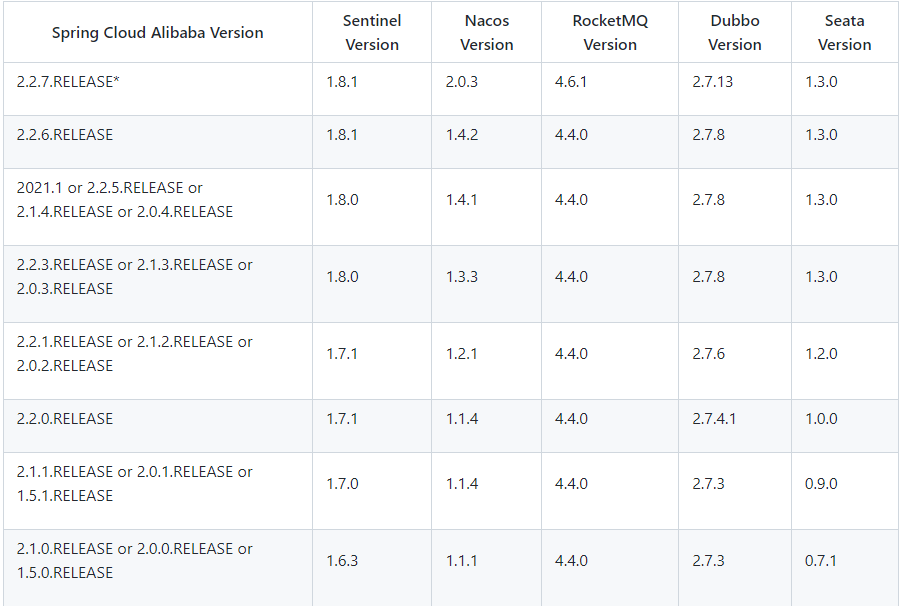

This article mainly needs a Nacos environment and a Seata environment. First, look at the component version combinations officially recommended by Alibaba. For the details of each version, refer to the wiki: Version Description

The following table shows the Spring Cloud Alibaba released in chronological order and the corresponding version relationship between Spring Cloud and Spring Boot (due to the adjustment of Spring Cloud version name, the corresponding Spring Cloud Alibaba version number has also been changed accordingly)

The Nacos environment was prepared in an earlier article, so here we mainly cover the preparation of the Seata environment; describing Nacos in detail would take too much space. Nacos is a powerful open source component from Alibaba, hosted on GitHub. Readers can download it from the Nacos official website and install it.

Before installing Nacos, you need to ensure that there are Java 8 and Maven environments locally.

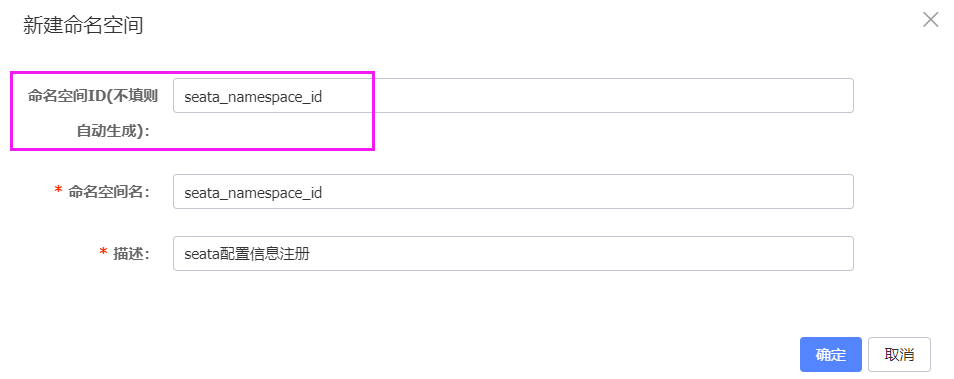

This time, Nacos serves as both the registration center and the configuration center, so a namespace with the ID seata_namespace_id is created in Nacos for later use. Note that on my first attempt I used that value as the namespace name rather than the namespace ID; the configuration was then persisted to the Nacos database but could not be displayed on the Nacos console.

2.2 Installation of Seata server

This is mainly to match the Spring Cloud version I use, so I downloaded version 0.9.0. Installing Seata Server is very simple: download the Seata server package from the Seata official website and unzip it. The Windows package is in zip format and the Linux package is in tar.gz format.

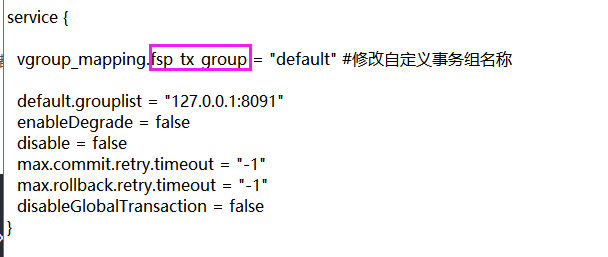

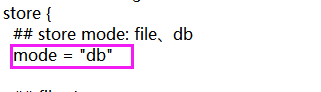

Version note: after version 1.0, the configuration files and usage of Seata changed somewhat. After downloading, unzip seata-server-0.9.0.zip to a directory of your choice and modify the file.conf configuration file in the conf directory (generally seata-server-0.9.0\seata\conf, next to the registry.conf configuration file). The changes mainly cover the custom transaction group name, the transaction log storage mode (db) and the database connection information.

file.conf in version 0.9.0

1. Modify the custom transaction group name in the service module:

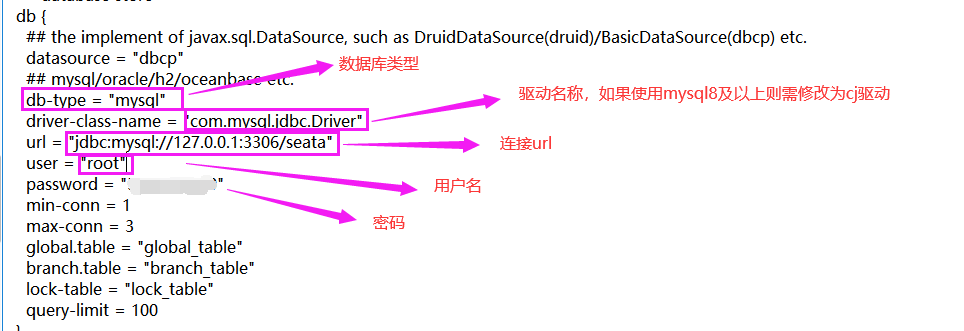

2. In the store module, modify the persistence mode to database db mode

3. Then modify the database connection information in the db module

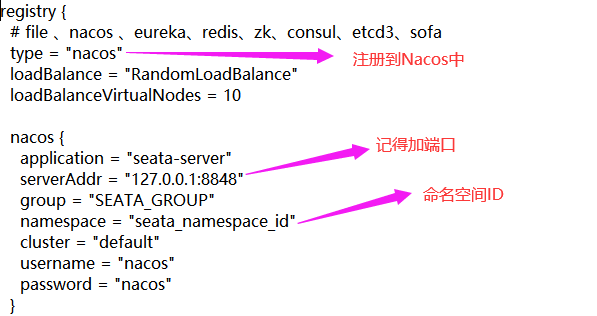

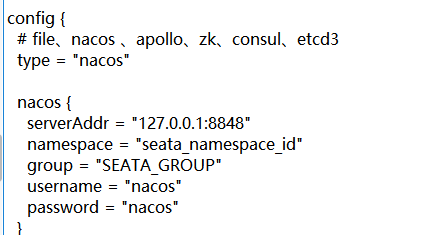

Modify the registry.conf configuration file under the seata-server-0.9.0\seata\conf directory. registry.conf mainly involves changes to two modules: one is registry, where the registration type is changed to nacos; the other is config, where we also use nacos as the configuration center. You can refer directly to the registry.conf of version 1.4.1 here; in 0.9.0, configuring a subset of its properties is sufficient.

Version 1.4.1

In later versions, the configuration in registry.conf is more complete. file.conf is the same as the configuration above, and the more complete registry.conf is as follows:

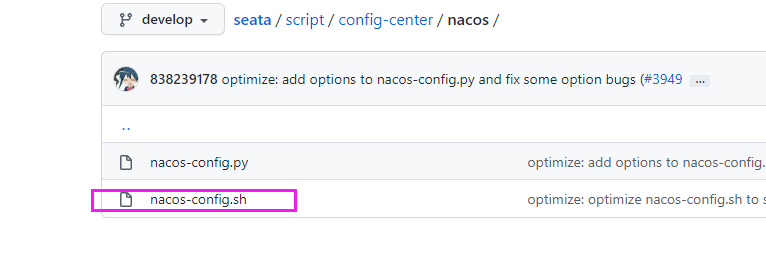

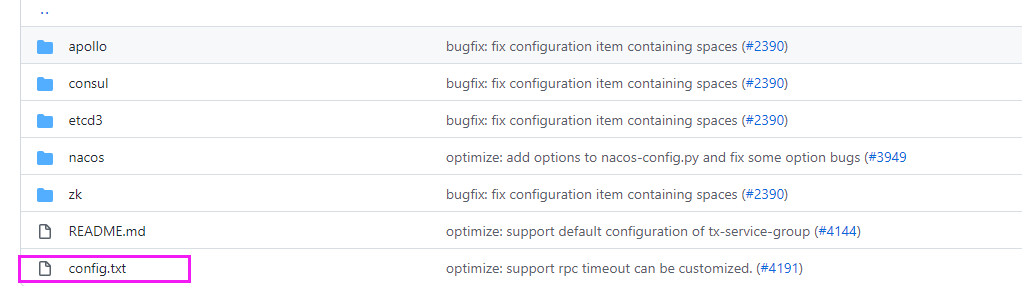

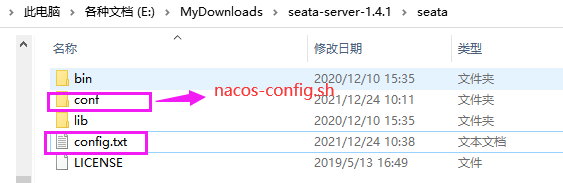

In fact, the configuration files of the two versions differ little. Finally, to use Nacos as the configuration center of Seata, we also need to push the local configuration of the Seata server to Nacos. The push involves two files, which in version 0.9.0 are nacos-config.sh and nacos-config.txt: one is the push script and the other holds the configuration to push. These two files are not included in version 1.4.1, so download them directly from the official website and modify them.

Note: the nacos-config.sh script is placed in the conf directory, while config.txt is placed at the same level as the conf folder.

Next, modify the config.txt file:

service.vgroupMapping.fxp_tx_group=default

store.mode=db

# MySQL Connector 8.0 uses com.mysql.cj.jdbc.Driver

store.db.driverClassName=com.mysql.jdbc.Driver

store.db.url=jdbc:mysql://127.0.0.1:3306/seata?useUnicode=true&rewriteBatchedStatements=true

store.db.user=root

store.db.password=123456

Then open Git Bash in the conf directory and execute the push command to push the configuration in config.txt to Nacos:

sh nacos-config.sh -h 192.168.135.33 -p 8848 -g SEATA_GROUP -t seata_namespace_id -u nacos -w nacos

-t seata_namespace_id specifies the Nacos configuration namespace ID

-g SEATA_GROUP specifies the name of the Nacos configuration group

-u nacos specifies the user name of nacos

-w nacos specifies the nacos password

The parameters on the official website are as follows:

-h: host, the default value is localhost.

-p: port, the default value is 8848.

-g: Configure grouping, the default value is 'SEATA_GROUP'.

-t: Tenant information, corresponding to the namespace ID field of Nacos, the default value is ''.

-u: username, nacos 1.2.0+ on permission control, the default value is ''.

-w: password, nacos 1.2.0+ on permission control, the default value is ''.
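To make the push step more concrete, here is a minimal Java sketch of what such a script does with config.txt. It assumes that each key=value line becomes one Nacos configuration entry published through the Nacos Open API endpoint /nacos/v1/cs/configs, with the key as dataId and the value as content. The class and method names (NacosPushSketch, parse, publishUrl) are hypothetical, the sketch only builds the request URLs without sending them, and it leaves out the username and password parameters.

```java
import java.io.UnsupportedEncodingException;
import java.net.URLEncoder;
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical illustration only; this is not the nacos-config.sh script itself.
final class NacosPushSketch {

    // Parses "key=value" lines, skipping blank lines and '#' comments.
    // Only the first '=' splits the line, so values such as JDBC URLs stay intact.
    static Map<String, String> parse(List<String> lines) {
        Map<String, String> result = new LinkedHashMap<>();
        for (String raw : lines) {
            String line = raw.trim();
            if (line.isEmpty() || line.startsWith("#")) {
                continue;
            }
            int eq = line.indexOf('=');
            if (eq <= 0) {
                continue; // no key present, ignore the line
            }
            result.put(line.substring(0, eq).trim(), line.substring(eq + 1).trim());
        }
        return result;
    }

    // URL-encodes one query parameter value (Java 8 compatible form).
    static String enc(String s) {
        try {
            return URLEncoder.encode(s, "UTF-8");
        } catch (UnsupportedEncodingException e) {
            throw new IllegalStateException(e); // UTF-8 is always supported
        }
    }

    // Builds the publish URL for one entry. An empty tenant means the default namespace.
    static String publishUrl(String host, int port, String group, String tenant,
                             String key, String value) {
        return "http://" + host + ":" + port + "/nacos/v1/cs/configs"
                + "?dataId=" + enc(key)
                + "&group=" + enc(group)
                + "&content=" + enc(value)
                + "&tenant=" + enc(tenant);
    }

    public static void main(String[] args) {
        List<String> lines = Arrays.asList(
                "service.vgroupMapping.fxp_tx_group=default",
                "store.mode=db",
                "# comments are skipped",
                "store.db.user=root");
        // Script defaults from the parameter list: localhost, 8848, SEATA_GROUP, empty tenant.
        for (Map.Entry<String, String> e : parse(lines).entrySet()) {
            System.out.println(publishUrl("localhost", 8848, "SEATA_GROUP", "",
                    e.getKey(), e.getValue()));
        }
    }
}
```

Each printed URL corresponds to one entry that would appear in the Nacos configuration list under the chosen group and namespace.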

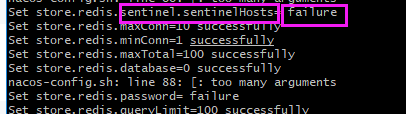

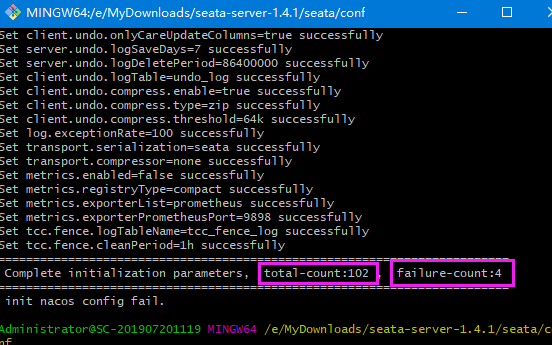

If the script reports init nacos config fail, check the values you modified. If a specific property is reported as failed, correct it in config.txt. If some of these properties are not needed for now, back up config.txt first and then delete the unneeded properties from the copy you push. In my experience, a failed push is fine as long as you do not need the failed entries; if you do need them, correct them properly.

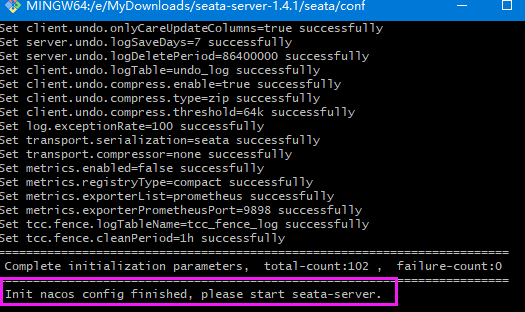

After the final complete success, it is as follows:

Then you can see the following on the Nacos console:

After the configuration information is pushed to Nacos, you can start seata server directly (Nacos must have been started before, because Nacos is the registration center and configuration center of seata, so it should be started first):

Seata library preparation

Create a seata database and create the tables in it. The SQL script for creating the tables, db_store.sql, is in the \seata-server-0.9.0\seata\conf directory. (Note: this SQL script no longer ships with Seata server after version 1.0. That is not a problem; I have included it at the end of the article.)

To save space, the SQL for the tables and the two modified configuration files are placed at the end of the article. After execution, the seata library contains three tables:

Then you can start Seata server. In seata-server-1.4.1/seata/bin, find seata-server.bat and double-click it to start.

With the steps above, the basic environment (Seata and Nacos) is set up, and we can start building the clients. Before building, clarify the scenario used to verify distributed transactions. This verification takes a user placing an order as an example: place an order -> deduct inventory -> deduct account balance.

It includes three micro services: order service, inventory service and account service.

The specific process is as follows: when the user places an order, an order will be created in the order service, and then the inventory of the ordered goods will be deducted by remotely calling the inventory service, and then the balance in the user's account will be deducted by remotely calling the account service. Finally, the order status will be modified to completed in the order service.

This operation spans three databases and has two remote calls. Obviously, there will be distributed transaction problems.
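The problem can be illustrated with a toy in-memory simulation. This is a sketch under simplifying assumptions, not the project's code: each field stands in for one service's own database, the initial values mirror the seed rows used later (inventory 100, balance 1000), and the class name OrderFlowSketch is hypothetical. It runs the four steps without any global transaction and lets the account step fail.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical illustration: three "databases" updated one after another
// with no global transaction coordinating them.
final class OrderFlowSketch {
    final Map<Long, Integer> orderStatus = new HashMap<>(); // orderId -> 0 (creating) or 1 (finished)
    int storageResidue = 100;   // remaining inventory
    int accountResidue = 1000;  // remaining balance

    void placeOrder(long orderId, int count, int money) {
        orderStatus.put(orderId, 0);          // 1. order service commits locally
        storageResidue -= count;              // 2. inventory service commits locally
        if (money > accountResidue) {         // 3. account service rejects the deduction
            throw new IllegalStateException("insufficient balance");
        }
        accountResidue -= money;
        orderStatus.put(orderId, 1);          // 4. order service marks the order finished
    }

    public static void main(String[] args) {
        OrderFlowSketch flow = new OrderFlowSketch();
        try {
            flow.placeOrder(1L, 10, 5000);    // costs more than the balance
        } catch (IllegalStateException e) {
            System.out.println("account step failed: " + e.getMessage());
        }
        // The order row and the inventory deduction survive the failure.
        System.out.println("order status   = " + flow.orderStatus.get(1L));
        System.out.println("storageResidue = " + flow.storageResidue);
        System.out.println("accountResidue = " + flow.accountResidue);
    }
}
```

After the failed call, the inventory has been deducted and the order is stuck in the creating state while the balance is untouched. This is the kind of inconsistency that a global transaction is meant to roll back.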

1. Create business database and data table

create database seata_order; -- Order database

create database seata_storage; -- Inventory database

create database seata_account; -- Account information database

CREATE TABLE t_order (

`id` BIGINT(11) NOT NULL AUTO_INCREMENT PRIMARY KEY,

`user_id` BIGINT(11) DEFAULT NULL COMMENT 'user id',

`product_id` BIGINT(11) DEFAULT NULL COMMENT 'product id',

`count` INT(11) DEFAULT NULL COMMENT 'quantity',

`money` DECIMAL(11,0) DEFAULT NULL COMMENT 'amount of money',

`status` INT(1) DEFAULT NULL COMMENT 'Order status: 0:Creating; 1:Closed'

) ENGINE=INNODB AUTO_INCREMENT=1 DEFAULT CHARSET=utf8;

CREATE TABLE t_storage (

`id` BIGINT(11) NOT NULL AUTO_INCREMENT PRIMARY KEY,

`product_id` BIGINT(11) DEFAULT NULL COMMENT 'product id',

`total` INT(11) DEFAULT NULL COMMENT 'Total inventory',

`used` INT(11) DEFAULT NULL COMMENT 'Used inventory',

`residue` INT(11) DEFAULT NULL COMMENT 'Remaining inventory'

) ENGINE=INNODB AUTO_INCREMENT=1 DEFAULT CHARSET=utf8;

INSERT INTO seata_storage.t_storage(`id`, `product_id`, `total`, `used`, `residue`)

VALUES ('1', '1', '100', '0','100');

CREATE TABLE t_account(

`id` BIGINT(11) NOT NULL AUTO_INCREMENT PRIMARY KEY COMMENT 'id',

`user_id` BIGINT(11) DEFAULT NULL COMMENT 'user id',

`total` DECIMAL(10,0) DEFAULT NULL COMMENT 'Total amount',

`used` DECIMAL(10,0) DEFAULT NULL COMMENT 'Balance used',

`residue` DECIMAL(10,0) DEFAULT '0' COMMENT 'Remaining available limit'

) ENGINE=INNODB AUTO_INCREMENT=1 DEFAULT CHARSET=utf8;

INSERT INTO seata_account.t_account(`id`, `user_id`, `total`, `used`, `residue`)

VALUES ('1', '1', '1000', '0', '1000');

Because we use Seata to manage distributed transactions this time, and based on the principles covered in the previous article, each of the branch libraries (order, inventory, account) also needs its own rollback log table for local rollback. In version 0.9.0, the table creation statement is db_undo_log.sql in the \seata-server-0.9.0\seata\conf directory; for versions after 1.0, copy the statement below and execute it in each of the three libraries.

CREATE TABLE `undo_log` (

`id` bigint(20) NOT NULL AUTO_INCREMENT,

`branch_id` bigint(20) NOT NULL,

`xid` varchar(100) NOT NULL,

`context` varchar(128) NOT NULL,

`rollback_info` longblob NOT NULL,

`log_status` int(11) NOT NULL,

`log_created` datetime NOT NULL,

`log_modified` datetime NOT NULL,

`ext` varchar(100) DEFAULT NULL,

PRIMARY KEY (`id`),

UNIQUE KEY `ux_undo_log` (`xid`,`branch_id`)

) ENGINE=InnoDB AUTO_INCREMENT=1 DEFAULT CHARSET=utf8;
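The role of this table can be illustrated with a toy sketch. It is not Seata's implementation; it only models the idea that a branch records the data as it was before its change, keyed by the global transaction ID and the branch ID (the same pair as the unique key above), and restores that data on rollback. All class and method names here are hypothetical.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical illustration of "save a before image, restore it on rollback".
final class UndoLogSketch {
    // Stand-in for a business table: productId -> remaining inventory.
    final Map<Long, Integer> residueByProduct = new HashMap<>();
    // Stand-in for undo_log: "xid:branchId" -> before image of the touched rows.
    final Map<String, Map<Long, Integer>> undoLog = new HashMap<>();

    static String key(String xid, long branchId) {
        return xid + ":" + branchId;
    }

    // Records the before image, then applies the business update.
    void deduct(String xid, long branchId, long productId, int count) {
        int before = residueByProduct.get(productId);
        Map<Long, Integer> beforeImage = new HashMap<>();
        beforeImage.put(productId, before);
        undoLog.put(key(xid, branchId), beforeImage);
        residueByProduct.put(productId, before - count);
    }

    // Global rollback: restore the before image and drop the log entry.
    void rollback(String xid, long branchId) {
        Map<Long, Integer> beforeImage = undoLog.remove(key(xid, branchId));
        if (beforeImage != null) {
            residueByProduct.putAll(beforeImage);
        }
    }

    // Global commit: the log entry is no longer needed.
    void commit(String xid, long branchId) {
        undoLog.remove(key(xid, branchId));
    }

    public static void main(String[] args) {
        UndoLogSketch db = new UndoLogSketch();
        db.residueByProduct.put(1L, 100);
        db.deduct("xid-1", 1L, 1L, 10);
        System.out.println("after deduct:   " + db.residueByProduct.get(1L));
        db.rollback("xid-1", 1L);
        System.out.println("after rollback: " + db.residueByProduct.get(1L));
    }
}
```

Because the log entry lives in the same library as the business data, each service can undo its own change locally, which is why the table has to exist in all three libraries.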

The final business library structure is as follows:

Next we build the client environment. With microservices, we usually manage versions and modules uniformly in a parent pom, so we create a parent project named SpringCloud. Its pom is as follows:

<?xml version="1.0" encoding="UTF-8"?>

<project xmlns="http://maven.apache.org/POM/4.0.0"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">

<modelVersion>4.0.0</modelVersion>

<groupId>com.dl</groupId>

<artifactId>SpringCloud</artifactId>

<version>1.0-SNAPSHOT</version>

<modules>

<module>cloud-provider-payment8001</module>

<module>cloud-consumer-order80</module>

<module>cloud-api-commons</module>

<module>cloud-eureka-server7001</module>

<module>cloud-eureka-server7002</module>

<module>cloud-provider-payment8002</module>

<module>cloud-provider-payment8004</module>

<module>cloud-provider-hystrix-payment8001</module>

<module>cloud-consumer-feign-hystrix-order80</module>

<module>cloud-consumer-feign-order80</module>

<module>cloud-consumer-hystrix-dashboard9001</module>

<module>cloud-gateway-gateway9527</module>

<module>cloud-config-center-3344</module>

<module>cloud-config-client-3355</module>

<module>cloud-config-client-3366</module>

<module>cloud-stream-rabbitmq-provider8801</module>

<module>cloud-stream-rabbitmq-consumer8802</module>

<module>cloud-stream-rabbitmq-consumer8803</module>

<module>cloudalibaba-provider-payment9001</module>

<module>cloudalibaba-provider-payment9002</module>

<module>cloudalibaba-consumer-nacos-order83</module>

<module>cloudalibaba-config-nacos-client3377</module>

<module>cloud-snowflake</module>

<module>cloudalibaba-seata-order-service2001</module>

<module>cloudalibaba-seata-storage-service2002</module>

<module>cloudalibaba-seata-account-service2003</module>

</modules>

<packaging>pom</packaging>

<properties>

<project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>

<maven.compiler.source>1.8</maven.compiler.source>

<maven.compiler.target>1.8</maven.compiler.target>

<junit.version>4.12</junit.version>

<lombok.version>1.18.16</lombok.version>

<mysql.version>8.0.18</mysql.version>

<druid.version>1.1.20</druid.version>

<!-- <logback.version>1.2.3</logback.version>-->

<!-- <slf4j.version>1.7.25</slf4j.version>-->

<log4j.version>1.2.17</log4j.version>

<mybatis.spring.boot.version>2.1.2</mybatis.spring.boot.version>

</properties>

<!-- Inherited by the sub modules: locks the versions, so sub modules do not need to declare groupId and version -->

<dependencyManagement>

<dependencies>

<!-- Note: when using Seata version 1.0, use spring cloud alibaba 2.1.0.RELEASE -->

<dependency>

<groupId>com.alibaba.cloud</groupId>

<artifactId>spring-cloud-alibaba-dependencies</artifactId>

<version>2.2.2.RELEASE</version>

<type>pom</type>

<scope>import</scope>

</dependency>

<!--spring boot 2.2.2-->

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-dependencies</artifactId>

<version>2.2.2.RELEASE</version>

<type>pom</type>

<scope>import</scope>

</dependency>

<!--spring cloud Hoxton.SR1-->

<dependency>

<groupId>org.springframework.cloud</groupId>

<artifactId>spring-cloud-dependencies</artifactId>

<version>Hoxton.SR1</version>

<type>pom</type>

<scope>import</scope>

</dependency>

<!--mysql connect-->

<dependency>

<groupId>mysql</groupId>

<artifactId>mysql-connector-java</artifactId>

<version>${mysql.version}</version>

</dependency>

<!--druid data source-->

<dependency>

<groupId>com.alibaba</groupId>

<artifactId>druid</artifactId>

<version>${druid.version}</version>

</dependency>

<!--springboot integration mybatis-->

<dependency>

<groupId>org.mybatis.spring.boot</groupId>

<artifactId>mybatis-spring-boot-starter</artifactId>

<version>${mybatis.spring.boot.version}</version>

</dependency>

<dependency>

<groupId>junit</groupId>

<artifactId>junit</artifactId>

<version>${junit.version}</version>

</dependency>

<dependency>

<groupId>log4j</groupId>

<artifactId>log4j</artifactId>

<version>${log4j.version}</version>

</dependency>

<!--lombok-->

<dependency>

<groupId>org.projectlombok</groupId>

<artifactId>lombok</artifactId>

<version>${lombok.version}</version>

<optional>true</optional>

<scope>provided</scope>

</dependency>

</dependencies>

</dependencyManagement>

<!--Package plug-ins-->

<build>

<plugins>

<plugin>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-maven-plugin</artifactId>

<configuration>

<fork>true</fork>

<addResources>true</addResources>

</configuration>

</plugin>

</plugins>

</build>

</project>

The sub modules mainly include cloudalibaba-seata-order-service2001, cloudalibaba-seata-storage-service2002 and cloudalibaba-seata-account-service2003. The dependencies required by the three poms are the same. I post the whole pom of the order service here; take only the dependencies you need.

<?xml version="1.0" encoding="UTF-8"?>

<project xmlns="http://maven.apache.org/POM/4.0.0"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">

<parent>

<artifactId>SpringCloud</artifactId>

<groupId>com.dl</groupId>

<version>1.0-SNAPSHOT</version>

</parent>

<modelVersion>4.0.0</modelVersion>

<artifactId>cloudalibaba-seata-order-service2001</artifactId>

<dependencies>

<!--nacos-->

<dependency>

<groupId>com.alibaba.cloud</groupId>

<artifactId>spring-cloud-starter-alibaba-nacos-discovery</artifactId>

</dependency>

<!--seata 0.9-->

<!-- <dependency>-->

<!-- <groupId>com.alibaba.cloud</groupId>-->

<!-- <artifactId>spring-cloud-starter-alibaba-seata</artifactId>-->

<!-- <exclusions>-->

<!-- <exclusion>-->

<!-- <artifactId>seata-all</artifactId>-->

<!-- <groupId>io.seata</groupId>-->

<!-- </exclusion>-->

<!-- </exclusions>-->

<!-- </dependency>-->

<!-- <dependency>-->

<!-- <groupId>io.seata</groupId>-->

<!-- <artifactId>seata-all</artifactId>-->

<!-- <version>0.9.0</version>-->

<!-- </dependency>-->

<!--seata 1.4.1-->

<dependency>

<groupId>com.alibaba.cloud</groupId>

<artifactId>spring-cloud-starter-alibaba-seata</artifactId>

<exclusions>

<!-- Exclude the bundled Seata dependencies so that the versions declared below match the server side -->

<exclusion>

<groupId>io.seata</groupId>

<artifactId>seata-all</artifactId>

</exclusion>

<exclusion>

<groupId>io.seata</groupId>

<artifactId>seata-spring-boot-starter</artifactId>

</exclusion>

</exclusions>

</dependency>

<dependency>

<groupId>io.seata</groupId>

<artifactId>seata-all</artifactId>

<version>1.4.1</version>

</dependency>

<dependency>

<groupId>io.seata</groupId>

<artifactId>seata-spring-boot-starter</artifactId>

<version>1.4.1</version>

</dependency>

<!--feign-->

<dependency>

<groupId>org.springframework.cloud</groupId>

<artifactId>spring-cloud-starter-openfeign</artifactId>

</dependency>

<!--web-actuator-->

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-web</artifactId>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-actuator</artifactId>

</dependency>

<!--mysql-druid-->

<dependency>

<groupId>mysql</groupId>

<artifactId>mysql-connector-java</artifactId>

<version>5.1.37</version>

</dependency>

<dependency>

<groupId>com.alibaba</groupId>

<artifactId>druid-spring-boot-starter</artifactId>

<version>1.1.10</version>

</dependency>

<dependency>

<groupId>org.mybatis.spring.boot</groupId>

<artifactId>mybatis-spring-boot-starter</artifactId>

<version>2.0.0</version>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-test</artifactId>

<scope>test</scope>

</dependency>

<dependency>

<groupId>org.projectlombok</groupId>

<artifactId>lombok</artifactId>

<optional>true</optional>

</dependency>

</dependencies>

</project>

end of document

db_store.sql table creation statement:

-- the table to store GlobalSession data

drop table if exists `global_table`;

create table `global_table` (

`xid` varchar(128) not null,

`transaction_id` bigint,

`status` tinyint not null,

`application_id` varchar(32),

`transaction_service_group` varchar(32),

`transaction_name` varchar(128),

`timeout` int,

`begin_time` bigint,

`application_data` varchar(2000),

`gmt_create` datetime,

`gmt_modified` datetime,

primary key (`xid`),

key `idx_gmt_modified_status` (`gmt_modified`, `status`),

key `idx_transaction_id` (`transaction_id`)

);

-- the table to store BranchSession data

drop table if exists `branch_table`;

create table `branch_table` (

`branch_id` bigint not null,

`xid` varchar(128) not null,

`transaction_id` bigint,

`resource_group_id` varchar(32),

`resource_id` varchar(256),

`lock_key` varchar(128),

`branch_type` varchar(8),

`status` tinyint,

`client_id` varchar(64),

`application_data` varchar(2000),

`gmt_create` datetime,

`gmt_modified` datetime,

primary key (`branch_id`),

key `idx_xid` (`xid`)

);

-- the table to store lock data

drop table if exists `lock_table`;

create table `lock_table` (

`row_key` varchar(128) not null,

`xid` varchar(96),

`transaction_id` long,

`branch_id` long,

`resource_id` varchar(256),

`table_name` varchar(32),

`pk` varchar(36),

`gmt_create` datetime,

`gmt_modified` datetime,

primary key(`row_key`)

);

The modified file.conf configuration is as follows:

transport {

# tcp udt unix-domain-socket

type = "TCP"

#NIO NATIVE

server = "NIO"

#enable heartbeat

heartbeat = true

#thread factory for netty

thread-factory {

boss-thread-prefix = "NettyBoss"

worker-thread-prefix = "NettyServerNIOWorker"

server-executor-thread-prefix = "NettyServerBizHandler"

share-boss-worker = false

client-selector-thread-prefix = "NettyClientSelector"

client-selector-thread-size = 1

client-worker-thread-prefix = "NettyClientWorkerThread"

# netty boss thread size,will not be used for UDT

boss-thread-size = 1

#auto default pin or 8

worker-thread-size = 8

}

shutdown {

# when destroy server, wait seconds

wait = 3

}

serialization = "seata"

compressor = "none"

}

service {

vgroup_mapping.fsp_tx_group = "default" #Modify custom transaction group name

default.grouplist = "127.0.0.1:8091"

enableDegrade = false

disable = false

max.commit.retry.timeout = "-1"

max.rollback.retry.timeout = "-1"

disableGlobalTransaction = false

}

client {

async.commit.buffer.limit = 10000

lock {

retry.internal = 10

retry.times = 30

}

report.retry.count = 5

tm.commit.retry.count = 1

tm.rollback.retry.count = 1

}

## transaction log store

store {

## store mode: file,db

mode = "db"

## file store

file {

dir = "sessionStore"

# branch session size , if exceeded first try compress lockkey, still exceeded throws exceptions

max-branch-session-size = 16384

# globe session size , if exceeded throws exceptions

max-global-session-size = 512

# file buffer size , if exceeded allocate new buffer

file-write-buffer-cache-size = 16384

# when recover batch read size

session.reload.read_size = 100

# async, sync

flush-disk-mode = async

}

## database store

db {

## the implement of javax.sql.DataSource, such as DruidDataSource(druid)/BasicDataSource(dbcp) etc.

datasource = "dbcp"

## mysql/oracle/h2/oceanbase etc.

db-type = "mysql"

driver-class-name = "com.mysql.jdbc.Driver"

url = "jdbc:mysql://127.0.0.1:3306/seata"

user = "root"

password = "5120154230"

min-conn = 1

max-conn = 3

global.table = "global_table"

branch.table = "branch_table"

lock-table = "lock_table"

query-limit = 100

}

}

lock {

## the lock store mode: local,remote

mode = "remote"

local {

## store locks in user's database

}

remote {

## store locks in the seata's server

}

}

recovery {

#schedule committing retry period in milliseconds

committing-retry-period = 1000

#schedule asyn committing retry period in milliseconds

asyn-committing-retry-period = 1000

#schedule rollbacking retry period in milliseconds

rollbacking-retry-period = 1000

#schedule timeout retry period in milliseconds

timeout-retry-period = 1000

}

transaction {

undo.data.validation = true

undo.log.serialization = "jackson"

undo.log.save.days = 7

#schedule delete expired undo_log in milliseconds

undo.log.delete.period = 86400000

undo.log.table = "undo_log"

}

## metrics settings

metrics {

enabled = false

registry-type = "compact"

# multi exporters use comma divided

exporter-list = "prometheus"

exporter-prometheus-port = 9898

}

support {

## spring

spring {

# auto proxy the DataSource bean

datasource.autoproxy = false

}

}

The modified registry.conf is as follows:

registry {

# file ,nacos ,eureka,redis,zk,consul,etcd3,sofa

type = "nacos"

loadBalance = "RandomLoadBalance"

loadBalanceVirtualNodes = 10

nacos {

application = "seata-server"

serverAddr = "127.0.0.1:8848"

group = "SEATA_GROUP"

namespace = "seata_namespace_id"

cluster = "default"

username = "nacos"

password = "nacos"

}

eureka {

serviceUrl = "http://localhost:8761/eureka"

application = "default"

weight = "1"

}

redis {

serverAddr = "localhost:6379"

db = 0

password = ""

cluster = "default"

timeout = 0

}

zk {

cluster = "default"

serverAddr = "127.0.0.1:2181"

sessionTimeout = 6000

connectTimeout = 2000

username = ""

password = ""

}

consul {

cluster = "default"

serverAddr = "127.0.0.1:8500"

}

etcd3 {

cluster = "default"

serverAddr = "http://localhost:2379"

}

sofa {

serverAddr = "127.0.0.1:9603"

application = "default"

region = "DEFAULT_ZONE"

datacenter = "DefaultDataCenter"

cluster = "default"

group = "SEATA_GROUP"

addressWaitTime = "3000"

}

file {

name = "file.conf"

}

}

config {

# file,nacos ,apollo,zk,consul,etcd3

type = "nacos"

nacos {

application = "seata-server"

serverAddr = "127.0.0.1:8848"

group = "SEATA_GROUP"

namespace = "seata_namespace_id"

cluster = "default"

username = "nacos"

password = "nacos"

}

consul {

serverAddr = "127.0.0.1:8500"

}

apollo {

appId = "seata-server"

apolloMeta = "http://192.168.1.204:8801"

namespace = "application"

apolloAccesskeySecret = ""

}

zk {

serverAddr = "127.0.0.1:2181"

sessionTimeout = 6000

connectTimeout = 2000

username = ""

password = ""

}

etcd3 {

serverAddr = "http://localhost:2379"

}

file {

name = "file.conf"

}

}