
Overview

The previous article, "Java Review - Concurrent Programming: Principle of LinkedBlockingQueue & Source Code Analysis", introduced LinkedBlockingQueue, which is implemented with a bounded linked list. Here, we continue with the principle of ArrayBlockingQueue, which is implemented with a bounded array.

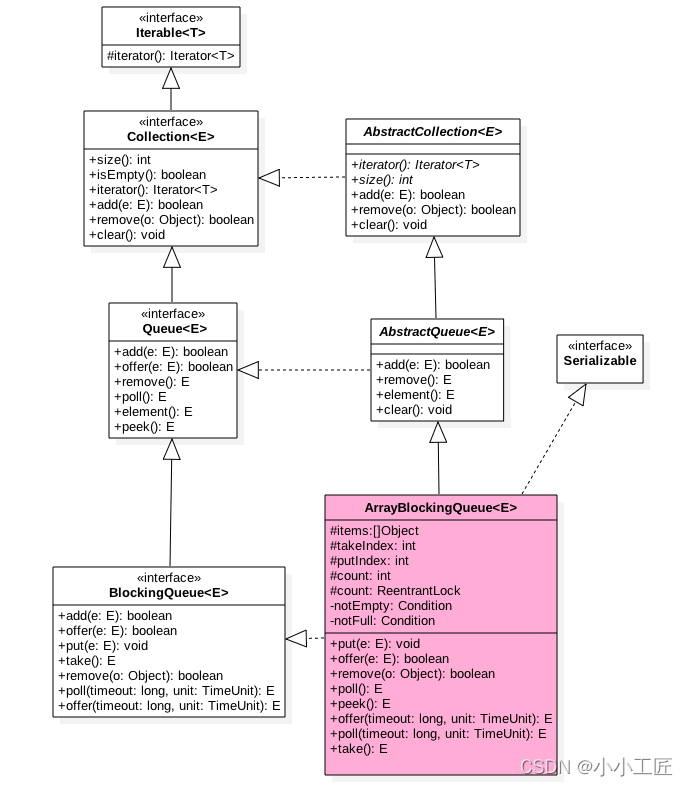

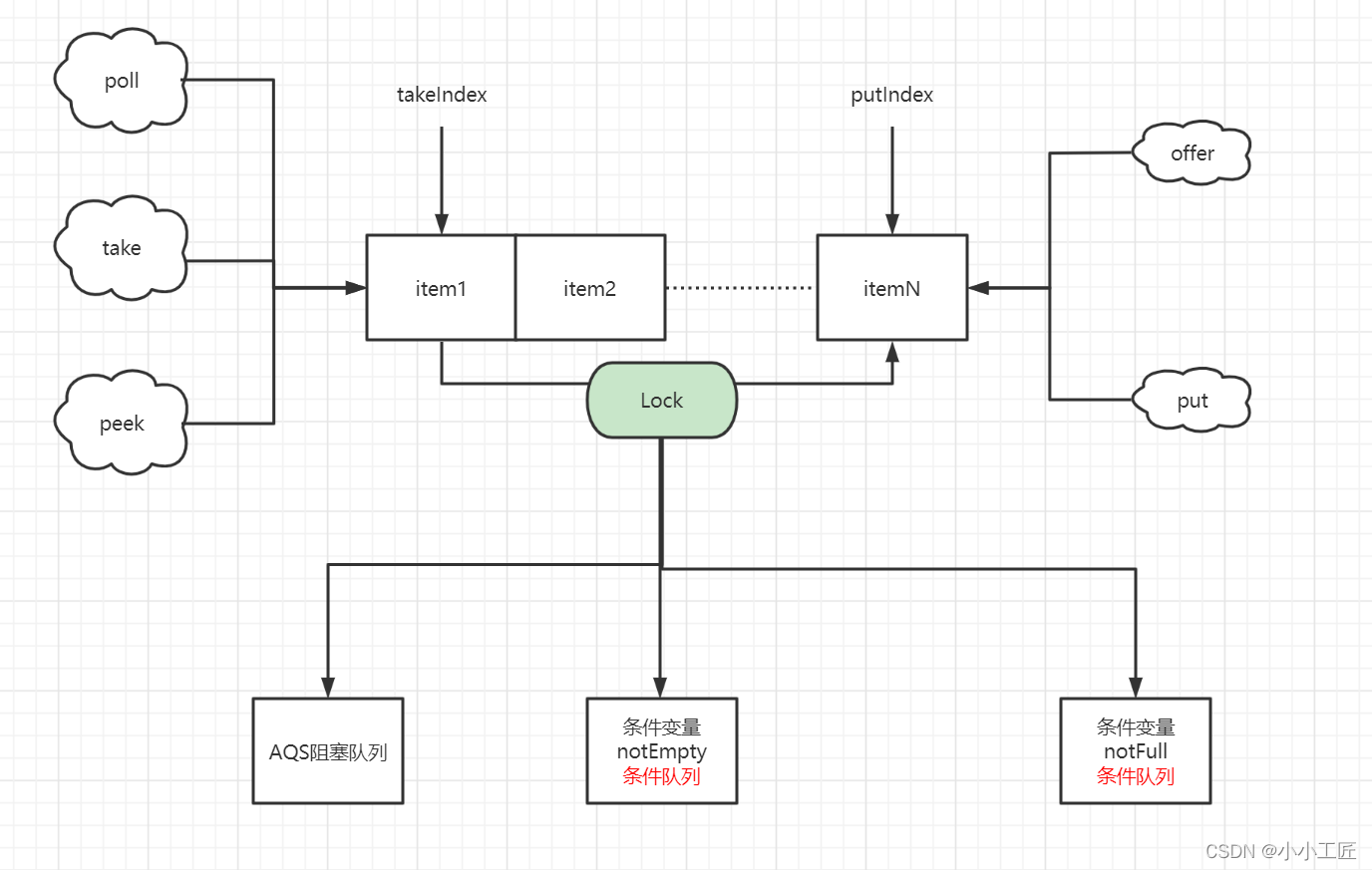

Class diagram structure

As can be seen from the figure, ArrayBlockingQueue has the following fields:

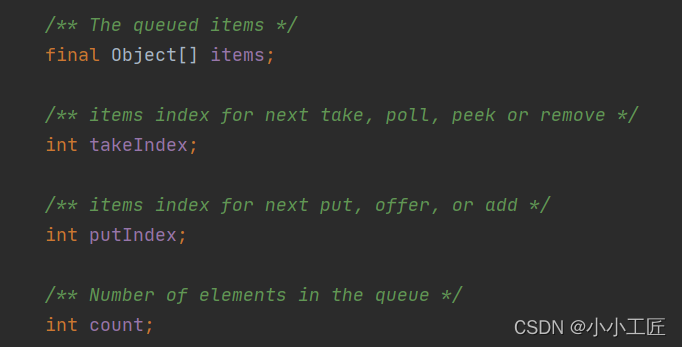

- items is an internal array that stores the queue elements

- putIndex is the index at which the next element will be enqueued

- takeIndex is the index of the next element to be dequeued

- count is the number of elements currently in the queue

As the definitions show, these variables are not declared volatile. They are only accessed inside lock-protected blocks, and acquiring the lock already guarantees the memory visibility of the variables accessed inside those blocks.

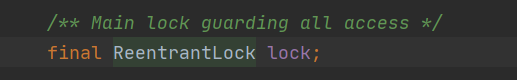

In addition, an exclusive lock (a ReentrantLock) makes enqueue and dequeue operations atomic, which ensures that only one thread at a time can perform either an enqueue or a dequeue.

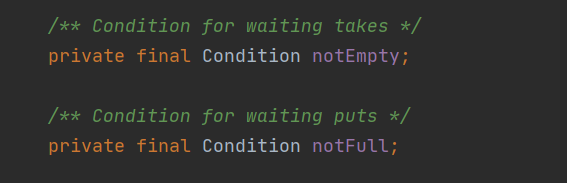

Finally, the notEmpty and notFull condition variables are used to coordinate dequeue and enqueue operations.
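To make the index bookkeeping concrete, here is a minimal single-threaded sketch of how items, putIndex, takeIndex, and count behave as a circular buffer. The class RingBufferSketch and its accessor methods are hypothetical, and the sketch deliberately leaves out the lock and the condition variables, so it is not thread-safe; it only illustrates the wrap-around arithmetic.

```java
// Hypothetical, NOT thread-safe sketch of the circular-array bookkeeping.
// Field names mirror ArrayBlockingQueue; there is no lock and no blocking.
class RingBufferSketch<E> {
    private final Object[] items;
    private int putIndex;   // slot for the next enqueue
    private int takeIndex;  // slot for the next dequeue
    private int count;      // number of stored elements

    RingBufferSketch(int capacity) {
        if (capacity <= 0) throw new IllegalArgumentException();
        items = new Object[capacity];
    }

    boolean offer(E e) {
        if (count == items.length) return false;      // full: reject
        items[putIndex] = e;
        if (++putIndex == items.length) putIndex = 0;  // wrap around
        count++;
        return true;
    }

    @SuppressWarnings("unchecked")
    E poll() {
        if (count == 0) return null;                   // empty
        E x = (E) items[takeIndex];
        items[takeIndex] = null;                       // drop the reference for GC
        if (++takeIndex == items.length) takeIndex = 0; // wrap around
        count--;
        return x;
    }

    int putIndex() { return putIndex; }
    int takeIndex() { return takeIndex; }
    int size() { return count; }
}
```

With capacity 3, offering a, b, and c moves putIndex back to 0. A fourth offer fails until a poll advances takeIndex to 1, after which the next element is stored in slot 0.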

Constructor

ArrayBlockingQueue is a bounded queue, so the constructor must be given the queue capacity.

The constructor code is as follows.

```java
public ArrayBlockingQueue(int capacity) {
    this(capacity, false); // non-fair lock by default
}

public ArrayBlockingQueue(int capacity, boolean fair) {
    if (capacity <= 0)
        throw new IllegalArgumentException();
    this.items = new Object[capacity];
    lock = new ReentrantLock(fair);
    notEmpty = lock.newCondition();
    notFull = lock.newCondition();
}

public ArrayBlockingQueue(int capacity, boolean fair,
                          Collection<? extends E> c) {
    this(capacity, fair);

    final ReentrantLock lock = this.lock;
    lock.lock(); // Lock only for visibility, not mutual exclusion
    try {
        int i = 0;
        try {
            for (E e : c) {
                checkNotNull(e);
                items[i++] = e;
            }
        } catch (ArrayIndexOutOfBoundsException ex) {
            // the collection has more elements than the capacity
            throw new IllegalArgumentException();
        }
        count = i;
        // a completely full array wraps the next put position back to 0
        putIndex = (i == capacity) ? 0 : i;
    } finally {
        lock.unlock();
    }
}
```

As can be seen from the above code, by default the non-fair exclusive lock provided by ReentrantLock is used to synchronize enqueue and dequeue operations; passing fair = true requests a fair lock instead.
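These constructor rules can be checked against the real java.util.concurrent.ArrayBlockingQueue. The helper class ConstructorChecks below is hypothetical; it only calls the public constructors plus size(), remainingCapacity(), and peek(). The expectations follow the source shown above, and the class documents IllegalArgumentException for a capacity below 1 or smaller than the collection's size.

```java
import java.util.Arrays;
import java.util.List;
import java.util.concurrent.ArrayBlockingQueue;

// Hypothetical helper exercising the constructor rules of the real JDK class.
class ConstructorChecks {

    // capacity <= 0 is rejected with IllegalArgumentException
    static boolean rejectsZeroCapacity() {
        try {
            new ArrayBlockingQueue<Integer>(0);
            return false;
        } catch (IllegalArgumentException expected) {
            return true;
        }
    }

    // three elements do not fit into capacity 2: IllegalArgumentException
    static boolean rejectsOversizedCollection() {
        List<Integer> three = Arrays.asList(1, 2, 3);
        try {
            new ArrayBlockingQueue<Integer>(2, false, three);
            return false;
        } catch (IllegalArgumentException expected) {
            return true;
        }
    }

    // elements are copied in iteration order; fair = true requests a fair lock
    static ArrayBlockingQueue<Integer> prefilled() {
        return new ArrayBlockingQueue<>(3, true, Arrays.asList(10, 20));
    }
}
```

A queue built by prefilled() holds two elements, has one free slot, and reports 10 as its head.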

Source code analysis of the main methods

After studying the implementation of LinkedBlockingQueue, you will find the implementation of ArrayBlockingQueue much simpler.

offer operation

Inserts an element at the tail of the queue. If the queue has free space, it returns true after the insertion succeeds; if the queue is full, it discards the element and returns false.

If e is null, a NullPointerException is thrown.

In addition, the method is non-blocking.

```java
public boolean offer(E e) {
    // 1 reject null elements
    checkNotNull(e);
    // 2 acquire the exclusive lock
    final ReentrantLock lock = this.lock;
    lock.lock();
    try {
        // 3 queue is full: give up immediately
        if (count == items.length)
            return false;
        else {
            // 4 store the element and signal notEmpty
            enqueue(e);
            return true;
        }
    } finally {
        // 5 release the lock
        lock.unlock();
    }
}
```

- Code (1) throws a NullPointerException if e is null.

- Code (2) acquires the exclusive lock. While the current thread holds it, other threads trying to enqueue or dequeue are blocked, suspended, and placed in the AQS wait queue of lock.

- Code (3) returns false immediately if the queue is full; otherwise code (4) calls the enqueue method and returns true. The enqueue code is as follows:

```java
/**
 * Inserts element at current put position, advances, and signals.
 * Call only when holding lock.
 */
private void enqueue(E x) {
    // assert lock.getHoldCount() == 1;
    // assert items[putIndex] == null;
    // 6 store the element at putIndex
    final Object[] items = this.items;
    items[putIndex] = x;
    // 7 advance putIndex, wrapping around to 0 at the end of the array
    if (++putIndex == items.length)
        putIndex = 0;
    count++;
    // 8 wake up one thread waiting on notEmpty (for example, one blocked in take)
    notEmpty.signal();
}
```

The above code first stores the current element in the items array, then computes the index where the next element should be stored (wrapping around to 0 at the end of the array), increments the element counter, and finally wakes up one thread in the notEmpty condition queue, such as a thread blocked in take.

Since count is only accessed after the lock has been acquired, there is no memory visibility problem: shared variables read after acquiring the lock are read from main memory, not from a stale CPU cache or register.

Code (5) releases the lock, which flushes the modified shared variables (such as count) back to main memory, so that other threads that later read them under the same lock see the latest values. In Java Memory Model terms, an unlock happens-before every subsequent lock of the same lock.
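A short check of offer's non-blocking behavior with the real ArrayBlockingQueue. The helper class OfferChecks is hypothetical; it only uses the public offer method.

```java
import java.util.concurrent.ArrayBlockingQueue;

// Hypothetical helper showing that offer never blocks.
class OfferChecks {

    // capacity 2: the first two offers succeed, the third returns false at once
    static boolean[] fillAndOverflow() {
        ArrayBlockingQueue<String> q = new ArrayBlockingQueue<>(2);
        return new boolean[] { q.offer("a"), q.offer("b"), q.offer("c") };
    }

    // a null element is rejected with NullPointerException
    static boolean rejectsNull() {
        ArrayBlockingQueue<String> q = new ArrayBlockingQueue<>(2);
        try {
            q.offer(null);
            return false;
        } catch (NullPointerException expected) {
            return true;
        }
    }
}
```

The third offer returns false immediately instead of waiting, which is exactly the difference from put.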

put operation

Inserts an element at the tail of the queue. If the queue has free space, the element is inserted immediately and the method returns. If the queue is full, the current thread blocks until space becomes available and the insertion succeeds. If another thread interrupts the current thread while it is blocked, the blocked thread throws an InterruptedException and returns.

In addition, if e is null, a NullPointerException is thrown.

```java
public void put(E e) throws InterruptedException {
    // 1 reject null elements
    checkNotNull(e);
    final ReentrantLock lock = this.lock;
    // 2 acquire the lock interruptibly
    lock.lockInterruptibly();
    try {
        // 3 while the queue is full, wait in the notFull condition queue
        while (count == items.length)
            notFull.await();
        // 4 enqueue the element
        enqueue(e);
    } finally {
        // 5 release the lock
        lock.unlock();
    }
}
```

- In code (2), if the current thread is interrupted by another thread while acquiring the lock, it throws an InterruptedException and exits.

- In code (3), if the queue is full, the current thread is suspended and placed in the notFull condition queue. Note that a while loop is used instead of an if statement: a waiting thread can wake up spuriously, and another thread may fill the queue again between the signal and the moment the woken thread reacquires the lock, so the condition must be rechecked after every wakeup.

- In code (4), once the queue is no longer full, the element is inserted by enqueue, which was analyzed above and is not repeated here.
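The blocking behavior of put can be observed with the real ArrayBlockingQueue. In the hypothetical helper PutChecks below, a producer thread calls put on a full queue of capacity 1 and cannot finish until the main thread frees a slot with take. The 100 ms join only gives the producer time to block; the result does not depend on that timing, because a put on a full queue cannot complete before the take. The second method shows that interrupting a blocked put raises InterruptedException.

```java
import java.util.concurrent.ArrayBlockingQueue;

// Hypothetical helper demonstrating the blocking and interrupt behavior of put.
class PutChecks {

    // Returns "stillBlocked,taken,head" to summarize what happened.
    static String blockedPutCompletesAfterTake() throws InterruptedException {
        ArrayBlockingQueue<String> q = new ArrayBlockingQueue<>(1);
        q.put("first");                              // queue is now full
        Thread producer = new Thread(() -> {
            try {
                q.put("second");                     // waits in notFull.await()
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        producer.start();
        producer.join(100);                          // give it time to block
        boolean stillBlocked = producer.isAlive();   // cannot finish while full
        String taken = q.take();                     // frees a slot, signals notFull
        producer.join();                             // now the put can complete
        return stillBlocked + "," + taken + "," + q.peek();
    }

    // Whether the interrupt arrives before or during the wait,
    // lockInterruptibly()/await() make put throw InterruptedException.
    static boolean interruptedPutThrows() throws InterruptedException {
        ArrayBlockingQueue<String> q = new ArrayBlockingQueue<>(1);
        q.put("only");
        boolean[] threw = new boolean[1];
        Thread producer = new Thread(() -> {
            try {
                q.put("blocked");
            } catch (InterruptedException e) {
                threw[0] = true;
            }
        });
        producer.start();
        producer.interrupt();
        producer.join();                             // join makes threw[0] visible
        return threw[0];
    }
}
```

blockedPutCompletesAfterTake() returns "true,first,second": the producer was still blocked, take removed "first", and "second" was inserted afterwards.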

poll operation

Retrieves and removes the element at the head of the queue. If the queue is empty, it returns null. This method is non-blocking.

```java
public E poll() {
    // 1 acquire the exclusive lock
    final ReentrantLock lock = this.lock;
    lock.lock();
    try {
        // 2 empty queue: return null; otherwise remove the head element
        return (count == 0) ? null : dequeue();
    } finally {
        // 3 release the lock
        lock.unlock();
    }
}
```

- Code (1) acquires the exclusive lock.

- Code (2) returns null if the queue is empty; otherwise it calls the dequeue() method, whose code is as follows:

```java
private E dequeue() {
    // assert lock.getHoldCount() == 1;
    // assert items[takeIndex] != null;
    final Object[] items = this.items;
    // 4 read the head element
    @SuppressWarnings("unchecked")
    E x = (E) items[takeIndex];
    // 5 clear the slot so the element can be garbage-collected
    items[takeIndex] = null;
    // 6 advance takeIndex (wrapping around to 0) and decrement the element count
    if (++takeIndex == items.length)
        takeIndex = 0;
    count--;
    // notify any active iterators that an element was removed
    if (itrs != null)
        itrs.elementDequeued();
    // 7 wake up one thread waiting in the notFull condition queue
    notFull.signal();
    return x;
}
```

As can be seen from the above code, dequeue first saves the current head element in a local variable, then sets that array slot to null, advances the head index (wrapping around to 0), decrements the element counter, and finally signals notFull to wake up one thread blocked in put.
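The following check uses the real ArrayBlockingQueue to show that poll never blocks and that elements stay in FIFO order even after the indexes wrap around. The helper class PollChecks is hypothetical, and the index comments trace the source shown above for a queue of capacity 2.

```java
import java.util.concurrent.ArrayBlockingQueue;

// Hypothetical helper: poll is non-blocking and preserves FIFO order.
class PollChecks {

    static String drainAfterWrap() {
        ArrayBlockingQueue<Integer> q = new ArrayBlockingQueue<>(2);
        StringBuilder out = new StringBuilder();
        q.offer(1);                // slot 0
        q.offer(2);                // slot 1, putIndex wraps to 0
        out.append(q.poll());      // 1, takeIndex moves to 1
        q.offer(3);                // stored in slot 0
        out.append(q.poll());      // 2, takeIndex wraps to 0
        out.append(q.poll());      // 3
        out.append(q.poll());      // empty queue: null, returned immediately
        return out.toString();
    }
}
```

drainAfterWrap() returns "123null".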

take operation

Retrieves and removes the element at the head of the queue. If the queue is empty, the current thread blocks until the queue is non-empty and then returns the element. If another thread interrupts the current thread while it is blocked, the blocked thread throws an InterruptedException and returns.

```java
public E take() throws InterruptedException {
    // 1 acquire the lock interruptibly
    final ReentrantLock lock = this.lock;
    lock.lockInterruptibly();
    try {
        // 2 while the queue is empty, wait until an element arrives
        while (count == 0)
            notEmpty.await();
        // 3 remove and return the head element
        return dequeue();
    } finally {
        // 4 release the lock
        lock.unlock();
    }
}
```

The take operation is also relatively simple. Apart from acquiring the lock interruptibly in code (1), it differs from poll only in code (2).

Here, if the queue is empty, the current thread is suspended and placed in the notEmpty condition queue, where it waits until another thread calls notEmpty.signal().

Note that a while loop, rather than an if statement, is again used to check the condition and wait, so the emptiness check is repeated after every wakeup.
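The waiting side of take can be observed the same way. In the hypothetical helper TakeChecks, a consumer calls take on an empty queue of the real ArrayBlockingQueue and stays blocked until the main thread enqueues an element, which signals notEmpty. As with the put example, the 100 ms join only gives the consumer time to block; it cannot finish earlier because there is nothing to take.

```java
import java.util.concurrent.ArrayBlockingQueue;

// Hypothetical helper: a blocked take returns once a producer enqueues.
class TakeChecks {

    // Returns "stillWaiting,received".
    static String takeWaitsForProducer() throws InterruptedException {
        ArrayBlockingQueue<String> q = new ArrayBlockingQueue<>(1);
        String[] received = new String[1];
        Thread consumer = new Thread(() -> {
            try {
                received[0] = q.take();          // waits in notEmpty.await()
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        consumer.start();
        consumer.join(100);
        boolean stillWaiting = consumer.isAlive(); // nothing to take yet
        q.put("hello");                            // enqueue signals notEmpty
        consumer.join();                           // join makes received[0] visible
        return stillWaiting + "," + received[0];
    }
}
```

takeWaitsForProducer() returns "true,hello".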

peek operation

Retrieves the head element without removing it from the queue. If the queue is empty, it returns null. This method is non-blocking.

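```java
public E peek() {
    // 1 acquire the exclusive lock
    final ReentrantLock lock = this.lock;
    lock.lock();
    try {
        // 2 read the head slot without removing it
        return itemAt(takeIndex); // null when queue is empty
    } finally {
        // 3 release the lock
        lock.unlock();
    }
}

@SuppressWarnings("unchecked")
final E itemAt(int i) {
    return (E) items[i];
}
```

The implementation of peek is even simpler: it acquires the exclusive lock, reads the element at the current head index takeIndex from the items array, and releases the lock before returning. Because dequeue sets each vacated slot to null, the head slot of an empty queue holds null, so peek returns null in that case.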
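A quick check with the real ArrayBlockingQueue that peek leaves the queue unchanged. The helper class PeekChecks is hypothetical.

```java
import java.util.concurrent.ArrayBlockingQueue;

// Hypothetical helper: peek reads the head without removing it.
class PeekChecks {

    // Returns "peekOnEmpty,firstPeek,secondPeek,size".
    static String peekDoesNotRemove() {
        ArrayBlockingQueue<String> q = new ArrayBlockingQueue<>(2);
        String empty = String.valueOf(q.peek()); // empty queue: null
        q.offer("x");
        String first = q.peek();                 // head is x
        String second = q.peek();                // still x: nothing was removed
        return empty + "," + first + "," + second + "," + q.size();
    }
}
```

peekDoesNotRemove() returns "null,x,x,1".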

size

Calculate the number of current queue elements.

```java
public int size() {
    final ReentrantLock lock = this.lock;
    lock.lock();
    try {
        return count;
    } finally {
        lock.unlock();
    }
}
```

The size operation is relatively simple: after obtaining the lock, it directly returns count, and it releases the lock before returning.

You may ask: size does not modify count but only reads it, so why lock?

In fact, if count were declared volatile, no lock would be needed here, because volatile variables guarantee memory visibility. However, count in ArrayBlockingQueue is not declared volatile, because all other accesses to it already happen while holding the lock. Reading it under the same lock is what makes the value visible.

One of the semantics of acquiring a lock is that variables accessed after acquiring it are read from main memory, which guarantees their memory visibility: the reader sees every write made by a thread before it last released the same lock.
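The same pattern can be isolated in a few lines. The hypothetical LockedCounter below keeps a plain int that is only read and written while holding a ReentrantLock, just as ArrayBlockingQueue does with count; no volatile is needed because every access goes through the lock.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.locks.ReentrantLock;

// Hypothetical class using the same pattern as ArrayBlockingQueue's count:
// a non-volatile field that is only accessed while holding the lock.
class LockedCounter {
    private final ReentrantLock lock = new ReentrantLock();
    private int count; // guarded by lock

    void increment() {
        lock.lock();
        try {
            count++;           // read-modify-write is atomic under the lock
        } finally {
            lock.unlock();     // unlock happens-before the next lock()
        }
    }

    int get() {
        lock.lock();           // locking gives the reader visibility, like size()
        try {
            return count;
        } finally {
            lock.unlock();
        }
    }

    // Starts `threads` threads that each call increment `perThread` times.
    static int runConcurrently(int threads, int perThread) throws InterruptedException {
        LockedCounter c = new LockedCounter();
        List<Thread> workers = new ArrayList<>();
        for (int t = 0; t < threads; t++) {
            Thread w = new Thread(() -> {
                for (int i = 0; i < perThread; i++) c.increment();
            });
            workers.add(w);
            w.start();
        }
        for (Thread w : workers) w.join();
        return c.get();
    }
}
```

runConcurrently(4, 10000) returns 40000: no increments are lost and the final read sees all of them.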

Summary

- ArrayBlockingQueue uses a single global exclusive lock so that only one thread can enqueue or dequeue at a time. This lock is coarse-grained, somewhat like adding synchronized to each method.

- The offer and poll operations simply acquire the lock and then enqueue or dequeue.

- The put and take operations are implemented with condition variables: put waits while the queue is full and take waits while it is empty, and after a successful enqueue or dequeue a signal wakes a thread waiting in the opposite operation, which keeps them synchronized.

- In addition, compared with LinkedBlockingQueue, the result of ArrayBlockingQueue's size operation is accurate, because the global lock is acquired before count is read.
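To pull the pieces together, here is a compact re-creation of the design summarized above: one ReentrantLock guarding every field, plus notEmpty and notFull conditions. The class MiniArrayBlockingQueue is hypothetical and is not the JDK class; it omits iterators (itrs), timed waits, fairness options, and the bulk operations, and keeps only offer, put, poll, take, and size.

```java
import java.util.concurrent.locks.Condition;
import java.util.concurrent.locks.ReentrantLock;

// Hypothetical, simplified bounded blocking queue following the same design:
// a single lock guards all fields; notEmpty/notFull coordinate blocking.
class MiniArrayBlockingQueue<E> {
    private final Object[] items;
    private int takeIndex, putIndex, count;
    private final ReentrantLock lock;
    private final Condition notEmpty;
    private final Condition notFull;

    MiniArrayBlockingQueue(int capacity) {
        if (capacity <= 0) throw new IllegalArgumentException();
        items = new Object[capacity];
        lock = new ReentrantLock();            // non-fair, like the default
        notEmpty = lock.newCondition();
        notFull = lock.newCondition();
    }

    private void enqueue(E x) {                // caller holds the lock
        items[putIndex] = x;
        if (++putIndex == items.length) putIndex = 0;
        count++;
        notEmpty.signal();                     // wake one waiting taker
    }

    @SuppressWarnings("unchecked")
    private E dequeue() {                      // caller holds the lock
        E x = (E) items[takeIndex];
        items[takeIndex] = null;
        if (++takeIndex == items.length) takeIndex = 0;
        count--;
        notFull.signal();                      // wake one waiting putter
        return x;
    }

    boolean offer(E e) {                       // non-blocking insert
        if (e == null) throw new NullPointerException();
        lock.lock();
        try {
            if (count == items.length) return false;
            enqueue(e);
            return true;
        } finally {
            lock.unlock();
        }
    }

    void put(E e) throws InterruptedException { // blocks while full
        if (e == null) throw new NullPointerException();
        lock.lockInterruptibly();
        try {
            while (count == items.length) notFull.await(); // recheck after wakeup
            enqueue(e);
        } finally {
            lock.unlock();
        }
    }

    E poll() {                                 // non-blocking remove
        lock.lock();
        try {
            return (count == 0) ? null : dequeue();
        } finally {
            lock.unlock();
        }
    }

    E take() throws InterruptedException {     // blocks while empty
        lock.lockInterruptibly();
        try {
            while (count == 0) notEmpty.await(); // recheck after wakeup
            return dequeue();
        } finally {
            lock.unlock();
        }
    }

    int size() {                               // exact, read under the lock
        lock.lock();
        try {
            return count;
        } finally {
            lock.unlock();
        }
    }
}
```

With a capacity of 4, a producer calling put 1000 times and a consumer calling take 1000 times repeatedly block on notFull and notEmpty, yet the consumer still receives 1 through 1000 in order. The single lock keeps the code short at the cost of never letting an enqueue and a dequeue run in parallel.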