💖 About the author: Hello, I'm Cheshen, "Cheshen at No. 18 Fuxue Road" 🥇


📝 Personal homepage: "A blog that should have no place to live" by Cheshen, 18 Fuxue Road (CSDN blog)


I always assumed nobody had built a library for the extreme learning machine. Then I found the hpelm library, and it's really good!!!

The full Python source code is linked at the end of the article, help yourself!!!

Introduction to ELM

The Extreme Learning Machine (ELM), proposed in 2004 by Professor Huang Guang-Bin of Nanyang Technological University, addresses the slow, iterative training of conventional feedforward networks. The original ELM is a learning algorithm for single-hidden-layer feedforward neural networks (SLFNs): it assigns the input weights at random and determines the network's output weights analytically. In theory, the algorithm tends to provide good generalization performance at an extremely fast learning speed.
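To make this description concrete, here is a minimal NumPy sketch of a basic ELM for regression. It is not the hpelm implementation: the sigmoid activation, the uniform [-1, 1] range for the random weights, the helper names `elm_fit`/`elm_predict`, and the toy sin(x) data are all assumptions chosen for illustration.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def elm_fit(X, T, n_hidden, seed=0):
    """Fit a single-hidden-layer ELM: random input weights, analytic output weights."""
    rng = np.random.default_rng(seed)
    # Step 1: input weights W and biases b are drawn at random and never trained
    W = rng.uniform(-1.0, 1.0, size=(X.shape[1], n_hidden))
    b = rng.uniform(-1.0, 1.0, size=n_hidden)
    # Step 2: hidden-layer output matrix H, one row per sample
    H = sigmoid(X @ W + b)
    # Step 3: output weights solved in one step with the Moore-Penrose pseudoinverse
    beta = np.linalg.pinv(H) @ T
    return W, b, beta


def elm_predict(X, W, b, beta):
    # Same random hidden layer, then the learned linear output layer
    return sigmoid(X @ W + b) @ beta


# Toy 1-D regression problem: learn y = sin(x) on [-3, 3]
X = np.linspace(-3, 3, 200).reshape(-1, 1)
T = np.sin(X)
W, b, beta = elm_fit(X, T, n_hidden=30)
rmse = np.sqrt(np.mean((elm_predict(X, W, b, beta) - T) ** 2))
print(f"training RMSE: {rmse:.6f}")
```

Only `beta` is learned; `W` and `b` stay exactly as they were drawn.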

ELM advantages

I think the biggest difference between the ELM algorithm and a conventional neural network is that ELM needs no iteration: it computes the weights of the last layer of neurons from the labels in a single step, whereas a neural network updates its weights repeatedly by gradient descent on the loss.

As a result, ELM is not well suited to building deeper network structures, but it greatly reduces the amount of computation and hardware overhead. Compared with ELM, DELM adds a regularization term to prevent overfitting.
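The contrast between one-shot and iterative training can be seen by fixing one random hidden layer and fitting its output weights both ways. This is a sketch on made-up data: the sizes, the target function, the step size and the 500-step budget are all assumptions. The ELM solution is computed once; gradient descent has to iterate towards the same least-squares objective.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical toy data: 200 samples, 3 features, smooth nonlinear target
X = rng.normal(size=(200, 3))
T = np.sin(X).sum(axis=1, keepdims=True)

# One fixed random hidden layer with 20 sigmoid neurons, shared by both methods
W = rng.normal(size=(3, 20))
b = rng.normal(size=20)
H = 1.0 / (1.0 + np.exp(-(X @ W + b)))


def mse(beta):
    return float(np.mean((H @ beta - T) ** 2))


# ELM: output weights computed once, no iteration
beta_elm = np.linalg.pinv(H) @ T

# Gradient descent on half the mean squared error, starting from zero weights
beta_gd = np.zeros((20, 1))
lr = len(X) / np.linalg.norm(H, 2) ** 2  # 1 / largest eigenvalue of H^T H / N keeps GD stable
for step in range(500):
    grad = H.T @ (H @ beta_gd - T) / len(X)
    beta_gd -= lr * grad

print("one-shot ELM MSE:", mse(beta_elm))
print("gradient descent MSE after 500 steps:", mse(beta_gd))
```

Because the pseudoinverse gives the least-squares optimum directly, gradient descent can at best match the one-shot result, and only after many passes over the data.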
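The regularization idea above can be sketched by adding an L2 (ridge) penalty when solving for the output weights. The text does not say which regular term DELM uses, so the ridge form, the helper name `ridge_output_weights`, the penalty values and the noisy toy data below are assumptions for illustration only.

```python
import numpy as np

rng = np.random.default_rng(1)

# Hypothetical noisy regression data
X = rng.uniform(-3, 3, size=(100, 1))
T = np.sin(X) + 0.1 * rng.normal(size=(100, 1))

# Random hidden layer with many neurons (80) relative to the 100 samples
W = rng.uniform(-1, 1, size=(1, 80))
b = rng.uniform(-1, 1, size=80)
H = 1.0 / (1.0 + np.exp(-(X @ W + b)))


def ridge_output_weights(H, T, lam):
    """Output weights minimizing ||H beta - T||^2 + lam * ||beta||^2."""
    n_hidden = H.shape[1]
    return np.linalg.solve(H.T @ H + lam * np.eye(n_hidden), H.T @ T)


beta_plain = np.linalg.pinv(H) @ T             # basic ELM, no penalty
beta_weak = ridge_output_weights(H, T, 1e-3)   # light regularization
beta_strong = ridge_output_weights(H, T, 1.0)  # heavy regularization

for name, beta in [("pinv", beta_plain), ("lam=1e-3", beta_weak), ("lam=1", beta_strong)]:
    print(name, "||beta|| =", np.linalg.norm(beta))
```

A larger penalty shrinks the output weights, which limits how closely the model can chase noise in the training targets.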

ELM principle

ELM is a fast learning algorithm for single-hidden-layer neural networks: it randomly initializes the input weights and biases, computes the corresponding hidden-node outputs, and then solves for the output weights in one step.
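In the usual ELM notation (symbols chosen here for illustration, with L matching the number of hidden nodes in the script), let X (N × d) hold the N training samples, W (d × L) and b (length L) be the randomly drawn input weights and biases, g an element-wise activation such as the sigmoid, and T (N × m) the targets. The hidden-layer output matrix and the output weights are then:

```latex
\begin{aligned}
\mathbf{H} &= g\left(\mathbf{X}\mathbf{W} + \mathbf{1}\mathbf{b}^{\top}\right) \in \mathbb{R}^{N \times L}, \\
\mathbf{H}\boldsymbol{\beta} &= \mathbf{T}, \\
\hat{\boldsymbol{\beta}} &= \mathbf{H}^{\dagger}\mathbf{T},
\end{aligned}
```

Here H† is the Moore-Penrose pseudoinverse of H, so the estimated output weights are the minimum-norm least-squares solution of Hβ = T.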

Install the library

Run this directly in the PyCharm terminal:

pip install hpelm

ELM regression

Taking regression on the UCI white wine quality dataset as an example, the Python code is as follows:

#!/usr/bin/env python
# -*- coding: utf-8 -*-
# @Time : 2021/12/27 11:02
# @Author: cheshen, 18 Fuxue Road
# @Email : yurz_control@163.com
# @File : elm_regression.py

# Import libraries
import time

import hpelm
import matplotlib.pyplot as plt
import numpy as np
from sklearn.metrics import mean_squared_error
from sklearn.model_selection import train_test_split

# Lists to store results, one entry per hidden-layer size
Train_T = []  # training time in seconds
Test_E = []   # testing RMSE

# Load the UCI wine quality (white) data; skip_header drops the column-name row
data = np.genfromtxt('winequality-white.csv', dtype=float, delimiter=';', skip_header=1)
x = data[:, :11]                # 11 input features
y = data[:, -1].reshape(-1, 1)  # quality score, as a column vector

# Train/test split
x_train, x_test, y_train, y_test = train_test_split(x, y, test_size=0.33, random_state=42)

#===============================================================
def calculateE(y, t):
    # Root mean squared error (RMSE) between targets y and predictions t
    return np.sqrt(mean_squared_error(y, t))

#===============================================================
# Initialization
Lmax = 40             # largest number of hidden neurons to try
L = 0                 # current number of hidden neurons
E = 0                 # current testing RMSE
ExpectedAccuracy = 0  # stop early once RMSE falls below this (0 = try every size)

while L < Lmax and E >= ExpectedAccuracy:
    # Add one hidden node
    L = L + 1
    # Build the model; hpelm draws the random input weights and biases in add_neurons
    model = hpelm.ELM(11, 1)
    model.add_neurons(L, 'sigm')
    start_time = time.time()
    # Train ('r' = regression): the output weights are solved in closed form
    model.train(x_train, y_train, 'r')
    Train_T.append(time.time() - start_time)
    # Predict on the test set and compute the new RMSE
    BL_HL = model.predict(x_test)
    E = calculateE(y_test, BL_HL)
    Test_E.append(E)
    print("Hidden Node", L, "RMSE :", E)

#===================================================================
# Find the hidden-layer size with the best (lowest) testing RMSE
L = Test_E.index(min(Test_E)) + 1
print()

# Retrain a model of that size (new random weights, so results can differ from the sweep)
model = hpelm.ELM(11, 1)
model.add_neurons(L, 'sigm')
start_time = time.time()
model.train(x_train, y_train, 'r')
print('Training Time :', time.time() - start_time)

start_time = time.time()
BL_HL = model.predict(x_test)
print('Testing Time :', time.time() - start_time)

# Training and testing RMSE of the retrained model
print('Training RMSE :', calculateE(y_train, model.predict(x_train)))
print('Testing RMSE :', calculateE(y_test, BL_HL))
#===================================================================

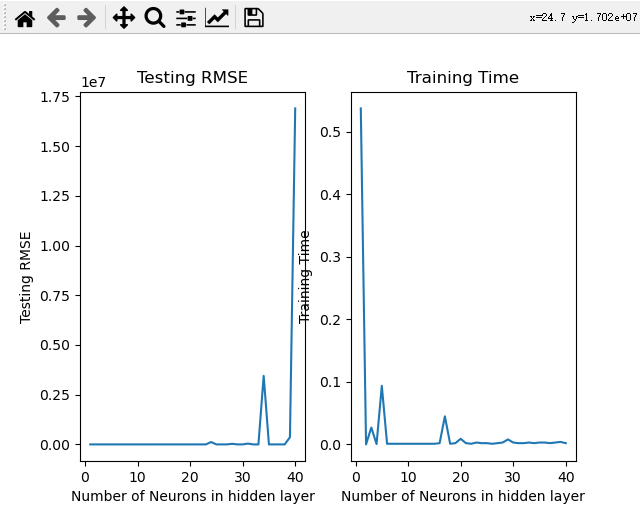

# Plot data
plt.subplot(1, 2, 1)  # left panel: testing RMSE vs. hidden-layer size
plt.plot(range(1, len(Test_E) + 1), Test_E)
plt.title('Testing RMSE')
plt.xlabel('Number of Neurons in hidden layer')
plt.ylabel('Testing RMSE')

plt.subplot(1, 2, 2)  # right panel: training time vs. hidden-layer size
plt.plot(range(1, len(Train_T) + 1), Train_T)
plt.title('Training Time')
plt.xlabel('Number of Neurons in hidden layer')
plt.ylabel('Training Time (s)')
plt.tight_layout()
plt.show()
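In the script, the hidden-layer size L is picked by the lowest testing RMSE, so the reported testing RMSE is somewhat optimistic. A common alternative is to choose L on a separate validation split and touch the test split only once. The sketch below shows that idea in plain NumPy rather than hpelm; the synthetic data, the 360/120/120 split and the helper names `elm_fit`/`rmse` are assumptions for illustration.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def elm_fit(X, T, n_hidden, rng):
    """Basic ELM: random sigmoid hidden layer, pseudoinverse output weights."""
    W = rng.uniform(-1, 1, size=(X.shape[1], n_hidden))
    b = rng.uniform(-1, 1, size=n_hidden)
    beta = np.linalg.pinv(sigmoid(X @ W + b)) @ T
    return W, b, beta


def rmse(model, X, T):
    W, b, beta = model
    pred = sigmoid(X @ W + b) @ beta
    return float(np.sqrt(np.mean((pred - T) ** 2)))


rng = np.random.default_rng(42)
# Hypothetical synthetic data standing in for the wine-quality features
X = rng.normal(size=(600, 5))
T = X[:, :1] ** 2 - X[:, 1:2] + 0.1 * rng.normal(size=(600, 1))

# Three disjoint splits: train / validation (to pick L) / test (reported once)
X_tr, T_tr = X[:360], T[:360]
X_va, T_va = X[360:480], T[360:480]
X_te, T_te = X[480:], T[480:]

Lmax = 40
models = [elm_fit(X_tr, T_tr, L, rng) for L in range(1, Lmax + 1)]
val_rmse = [rmse(m, X_va, T_va) for m in models]
best_L = int(np.argmin(val_rmse)) + 1

# Evaluate the selected model itself, so no retraining with new random weights
test_rmse = rmse(models[best_L - 1], X_te, T_te)
print("best L on validation:", best_L)
print("test RMSE of that model:", test_rmse)
```

Keeping the selected model, instead of retraining a fresh one of the same size, also avoids the run-to-run variation that comes from drawing new random input weights.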

Source code

The source code and files are here: Implementation of linear regression with Python extreme learning machine ELM

Link: https://pan.baidu.com/s/1b3yTQp5El-aNwvOT7vad-A

Extraction code: yyds
