Preface

In ZooKeeper you will find a node `/log_dir_event_notification`, whose children are persistent sequential nodes. Kafka uses it as follows: when a LogDir on a Broker fails (for example, the disk is damaged or a file can no longer be read or written), the Broker adds a child node `/log_dir_event_notification/log_dir_event_<sequence number>` to ZooKeeper. The Controller watches this node. When it sees a change, it sends LeaderAndIsrRequest requests to the Brokers and then performs the follow-up handling for the replicas that went offline.
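To make the naming concrete, here is a minimal sketch of how the child paths look. It assumes only standard ZooKeeper behavior (a 10-digit, zero-padded counter is appended to every sequential node); `LogDirEventPaths`, `childPath` and `sequenceNumber` are hypothetical helpers, not Kafka's own ZkData classes.

```scala
// Hypothetical helpers (not Kafka's ZkData classes) that mimic how the
// notification znodes are named. ZooKeeper appends a 10-digit, zero-padded
// counter to the name of every sequential node it creates.
object LogDirEventPaths {
  val Parent = "/log_dir_event_notification"
  val ChildPrefix = "log_dir_event_"

  // Path ZooKeeper would produce for a given counter value
  def childPath(sequence: Long): String = f"$Parent/$ChildPrefix$sequence%010d"

  // Sequence-number part of a child name or of a full child path
  def sequenceNumber(childNameOrPath: String): String =
    childNameOrPath.split('/').last.stripPrefix(ChildPrefix)
}

object LogDirEventPathsDemo {
  def main(args: Array[String]): Unit = {
    println(LogDirEventPaths.childPath(3)) // /log_dir_event_notification/log_dir_event_0000000003
    println(LogDirEventPaths.sequenceNumber("log_dir_event_0000000003")) // 0000000003
  }
}
```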

Source code analysis

The logDir here is a directory configured through `log.dir` / `log.dirs` in server.properties.
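Since `log.dirs` may list several directories separated by commas, each one can fail independently. A minimal sketch of splitting such a value into absolute paths follows; `LogDirsConfig.parse` is a hypothetical helper written for illustration, not Kafka's `KafkaConfig` code.

```scala
import java.io.File

// Hypothetical helper, not Kafka's KafkaConfig: turns a comma-separated
// log.dirs value into a list of absolute directory paths.
object LogDirsConfig {
  def parse(value: String): Seq[String] =
    value.split(",").toSeq
      .map(_.trim)        // tolerate spaces after commas
      .filter(_.nonEmpty) // ignore empty entries such as a trailing comma
      .map(dir => new File(dir).getAbsolutePath)
}

object LogDirsConfigDemo {
  def main(args: Array[String]): Unit =
    LogDirsConfig.parse("/data1/kafka-logs, /data2/kafka-logs,").foreach(println)
}
```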

Replica exception handling

First, let's find the code that uses this node. The handling is set up when Kafka starts, in:

ReplicaManager.startup()

```scala
def startup(): Unit = {
  // Omitted

  // When the inter-broker protocol (IBP) version is < 1.0, any logDir failure halts the whole Broker
  val haltBrokerOnFailure = config.interBrokerProtocolVersion < KAFKA_1_0_IV0
  logDirFailureHandler = new LogDirFailureHandler("LogDirFailureHandler", haltBrokerOnFailure)
  logDirFailureHandler.start()
}

private class LogDirFailureHandler(name: String, haltBrokerOnDirFailure: Boolean) extends ShutdownableThread(name) {
  override def doWork(): Unit = {
    // Take the next failed dir from offlineLogDirQueue (blocks until one is available)
    val newOfflineLogDir = logDirFailureChannel.takeNextOfflineLogDir()
    if (haltBrokerOnDirFailure) {
      fatal(s"Halting broker because dir $newOfflineLogDir is offline")
      Exit.halt(1)
    }
    handleLogDirFailure(newOfflineLogDir)
  }
}

// logDir should be an absolute path
// sendZkNotification is needed for unit test
def handleLogDirFailure(dir: String, sendZkNotification: Boolean = true): Unit = {
  // Omitted
  logManager.handleLogDirFailure(dir)
  if (sendZkNotification)
    zkClient.propagateLogDirEvent(localBrokerId)
  warn(s"Stopped serving replicas in dir $dir")
}
```

The code is fairly long (parts are omitted above), so here is a brief overview. This path runs whenever an exception occurs while reading or operating on a LogDir, typically an IOException because the disk went offline or a file suddenly lost its read/write permission. The Broker then handles it as follows:

- If the inter-broker protocol version is lower than 1.0 (`KAFKA_1_0_IV0`), the Broker halts immediately; versions before that did not support multiple logDirs on a single Broker.
- Stop the replica fetchers for the partitions in the failed dir.
- Mark those partitions offline.
- Remove some related monitoring metrics.
- If all logDirs are now offline (or failed), halt the Broker directly.
- If other logDirs are still online, continue with the remaining cleanup.
- Create a persistent sequential node `/log_dir_event_notification/log_dir_event_<sequence number>` whose data contains the broker ID, for example: `{"version":1,"broker":20003,"event":1}`

PS: `log.dirs` can configure multiple paths for one Broker, separated by commas.

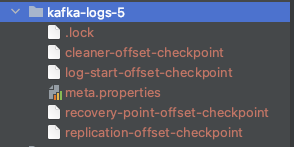
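The final branch of the steps listed above (halt when no logDir is left, otherwise publish a notification) can be modeled in a few lines. This is a simplified, hypothetical sketch: `BrokerLogDirs`, `FailureOutcome`, `HaltBroker` and `CreateNotification` are illustrative names, not Kafka APIs, and the fetcher, partition and metrics steps are deliberately left out.

```scala
import scala.collection.mutable

// A simplified, hypothetical model of the decision at the end of
// handleLogDirFailure; stopping fetchers, marking partitions offline and
// removing metrics are left out. None of these names are Kafka APIs.
sealed trait FailureOutcome
case object HaltBroker extends FailureOutcome
final case class CreateNotification(payload: String) extends FailureOutcome

final class BrokerLogDirs(brokerId: Int, dirs: Seq[String]) {
  private val liveDirs = mutable.LinkedHashSet.empty[String] ++= dirs

  def online: Seq[String] = liveDirs.toList

  def handleLogDirFailure(dir: String): FailureOutcome = {
    liveDirs -= dir
    if (liveDirs.isEmpty) HaltBroker // every logDir has failed
    else CreateNotification(s"""{"version":1,"broker":$brokerId,"event":1}""")
  }
}

object BrokerLogDirsDemo {
  def main(args: Array[String]): Unit = {
    val broker = new BrokerLogDirs(20003, Seq("/data1/kafka-logs", "/data2/kafka-logs"))
    println(broker.handleLogDirFailure("/data1/kafka-logs"))
    println(broker.handleLogDirFailure("/data2/kafka-logs"))
  }
}
```

The payload string matches the example node data shown above; the real Broker builds it with a JSON encoder rather than string interpolation.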

LogDir exception

For example, when the disk is damaged, locking a directory in lockLogDirs throws an IOException, which is caught and reported to logDirFailureChannel:

```scala
/**
 * Lock all the given directories
 */
private def lockLogDirs(dirs: Seq[File]): Seq[FileLock] = {
  dirs.flatMap { dir =>
    try {
      val lock = new FileLock(new File(dir, LockFile))
      if (!lock.tryLock())
        throw new KafkaException("Failed to acquire lock on file .lock in " + lock.file.getParent +
          ". A Kafka instance in another process or thread is using this directory.")
      Some(lock)
    } catch {
      case e: IOException =>
        // Report the dir as offline; no lock is returned for it
        logDirFailureChannel.maybeAddOfflineLogDir(dir.getAbsolutePath, s"Disk error while locking directory $dir", e)
        None
    }
  }
}

def maybeAddOfflineLogDir(logDir: String, msg: => String, e: IOException): Unit = {
  error(msg, e)
  // Only the first report of a given dir is enqueued
  if (offlineLogDirs.putIfAbsent(logDir, logDir) == null)
    offlineLogDirQueue.add(logDir)
}
```

Once the failed dir has been added to offlineLogDirQueue, the LogDirFailureHandler thread from the replica exception handling code above picks it up through the queue's take() and handles it as described there.
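The hand-off between the code that detects the error and the handler thread is a classic producer/consumer queue with de-duplication. Below is a minimal sketch of that pattern using `java.util.concurrent`; `SimpleLogDirFailureChannel` is a hypothetical stand-in whose method names only mirror the excerpt, and it omits the logging of the real `maybeAddOfflineLogDir`.

```scala
import java.util.concurrent.{ArrayBlockingQueue, ConcurrentHashMap, CopyOnWriteArrayList}

// Simplified, hypothetical stand-in for LogDirFailureChannel: no logging, and
// the names only mirror the excerpt above.
final class SimpleLogDirFailureChannel(capacity: Int) {
  private val offlineLogDirs = new ConcurrentHashMap[String, String]()
  private val offlineLogDirQueue = new ArrayBlockingQueue[String](capacity)

  // putIfAbsent returns null only the first time a dir is reported,
  // so repeated errors from the same dir are queued once
  def maybeAddOfflineLogDir(logDir: String): Unit =
    if (offlineLogDirs.putIfAbsent(logDir, logDir) == null)
      offlineLogDirQueue.add(logDir)

  // Blocks until a failed dir is available, like the handler thread's take()
  def takeNextOfflineLogDir(): String = offlineLogDirQueue.take()

  def pending: Int = offlineLogDirQueue.size()
}

object FailureChannelDemo {
  def main(args: Array[String]): Unit = {
    val channel = new SimpleLogDirFailureChannel(capacity = 2)
    val handled = new CopyOnWriteArrayList[String]()
    // Plays the role of LogDirFailureHandler.doWork(), but stops after two dirs
    val handler = new Thread(() => for (_ <- 1 to 2) handled.add(channel.takeNextOfflineLogDir()))
    handler.start()
    channel.maybeAddOfflineLogDir("/data1/kafka-logs")
    channel.maybeAddOfflineLogDir("/data1/kafka-logs") // duplicate report, not queued again
    channel.maybeAddOfflineLogDir("/data2/kafka-logs")
    handler.join()
    println(handled) // [/data1/kafka-logs, /data2/kafka-logs]
  }
}
```

The map acts as the "already reported" set, so a dir that keeps throwing IOExceptions does not flood the queue; the blocking take() lets the handler thread sleep until there is work.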

The Controller monitors zk node changes

KafkaController.processLogDirEventNotification

```scala
private def processLogDirEventNotification(): Unit = {
  if (!isActive) return
  val sequenceNumbers = zkClient.getAllLogDirEventNotifications
  try {
    val brokerIds = zkClient.getBrokerIdsFromLogDirEvents(sequenceNumbers)
    // Try to move all replicas on these Brokers to OnlineReplica
    onBrokerLogDirFailure(brokerIds)
  } finally {
    // delete processed children
    zkClient.deleteLogDirEventNotifications(sequenceNumbers, controllerContext.epochZkVersion)
  }
}
```

In short, the Controller reads the broker IDs stored in the `/log_dir_event_notification/log_dir_event_<sequence number>` nodes and moves every replica on those Brokers through the replica state machine to OnlineReplica. For how the state machine transitions work, see [kafka source code] state machine in Controller.

- A LeaderAndIsrRequest is sent to the Brokers hosting those replicas to query the status of their replicas. If a replica's logDir is offline, the Broker responds with a KAFKA_STORAGE_ERROR.

- Finally, the processed notification nodes are deleted (in the finally block).
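The read, handle, delete-in-finally flow of processLogDirEventNotification can be exercised without ZooKeeper. The sketch below assumes an in-memory map in place of the znodes; `FakeNotificationStore`, `ControllerSketch` and `brokerIdOf` are hypothetical names, and the regex-based broker-ID extraction is a shortcut, not how KafkaZkClient decodes the JSON.

```scala
import scala.collection.mutable

// Hypothetical in-memory stand-in for the children of /log_dir_event_notification
// (sequence number -> node data). Not a ZooKeeper or KafkaZkClient API.
final class FakeNotificationStore {
  val children: mutable.LinkedHashMap[String, String] = mutable.LinkedHashMap.empty
}

object ControllerSketch {
  private val BrokerField = "\"broker\":(\\d+)".r

  // Pulls the broker id out of data such as {"version":1,"broker":20003,"event":1}
  def brokerIdOf(data: String): Option[Int] =
    BrokerField.findFirstMatchIn(data).map(_.group(1).toInt)

  // Same shape as processLogDirEventNotification: read every notification,
  // hand the broker ids to the failure handler, and delete the processed nodes
  // in a finally block so they are removed even if handling throws.
  def processLogDirEventNotification(store: FakeNotificationStore, isActive: Boolean)(
      onBrokerLogDirFailure: Seq[Int] => Unit): Unit = {
    if (!isActive) return
    val sequenceNumbers = store.children.keys.toList
    try {
      val brokerIds = sequenceNumbers.flatMap(seq => store.children.get(seq).flatMap(brokerIdOf)).distinct
      onBrokerLogDirFailure(brokerIds)
    } finally {
      sequenceNumbers.foreach(store.children.remove)
    }
  }
}

object ControllerSketchDemo {
  def main(args: Array[String]): Unit = {
    val store = new FakeNotificationStore
    store.children("0000000000") = """{"version":1,"broker":20003,"event":1}"""
    store.children("0000000001") = """{"version":1,"broker":20003,"event":1}"""
    ControllerSketch.processLogDirEventNotification(store, isActive = true) { ids =>
      println(s"moving replicas on brokers $ids to OnlineReplica")
    }
    println(store.children.isEmpty) // true
  }
}
```

Deleting in `finally` mirrors the excerpt's design: a notification is never processed twice, even when handling fails, at the cost of relying on the state machine to converge later.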