Today, I received an SQL from my operation classmate, which was a little complicated. In particular, the SQL explain could not be executed for a long time, so our background team helped solve the SQL problem, but we just found a hidden online problem.

select a.share_code, a.generated_time, a.share_user_id, b.user_count, b.order_count, a.share_order_id, b.rewarded_amount from t_risk_share_code a, (select count(distinct r.user_id) user_count, count(distinct r.order_id) order_count, s.rewarded_amount, r.share_code from t_order s,t_order_rel r where r.order_id = s.id and r.type = 1 and r.share_code = 'Order code I just shared' group by r.share_code) b where a.share_code = b.share_code and a.type = 1

First of all, we found that the direct EXPLAIN SQL is also very slow, that is, some sub queries may be actually executed. Therefore, in the first step, we disassemble the sub queries and analyze them step by step, that is:

select count(distinct r.user_id) user_count, count(distinct r.order_id) order_count, max(s.rewarded_amount), r.share_code from t_order s,t_order_rel r where r.order_id = s.id and r.type = 1 and r.share_code = 'Order code I just shared' group by r.share_code

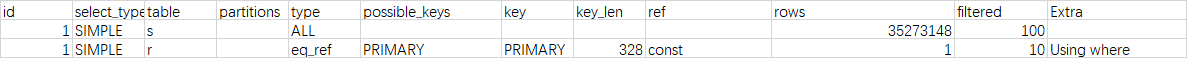

EXPLAIN SQL executes very fast. We find that:

It's strange. Why t_ The scan of the order table becomes a full scan? The index of this table is normal. The primary key is the id.

according to Official documents , you can see the following reasons

- The table is too small to be indexed. But the two tables here are very large, with tens of millions of levels of data.

- For WHERE or ON conditions, there is no appropriate index, which is not the case here. Both tables have appropriate indexes for WHERE and ON conditions (although the query conditions are put in WHERE, we will know that the SQL will be changed to JOIN ON + WHERE for later analysis).

- Using the index column to compare with the constant value, MYSQL analyzes the values that cover most of the values in the table through the index. In fact, it analyzes the hit rows. Finally, when pulling data back to the table, most pages in the table file must be loaded into memory for reading. In this way, instead of loading the index into memory to obtain the hit column, it is better to directly scan the whole table, Anyway, in the end, most of the pages in the table file are loaded into memory. In this case, it is obvious that it will be faster without indexing. In our SQL, t_order_ In fact, rel table will only return dozens of data according to the where condition, t_order and t_order_rel is a 1-to-many relationship. There will not be too many data hits here.

- The Cardinality of this column is too low. The discreteness is the number of different values divided by the number of rows, with a maximum of 1. However, this value is not calculated in real time for the innoDB engine and may be inaccurate (especially when the value of this column is updated, resulting in a change in the position of the row in the page). However, the distinct or primary key column does not need to be calculated, which is 1. If the dispersion is too low, it is similar to the third case, and too many rows will be hit. The SQL to be optimized here uses the primary key, so it does not belong to this case.

Although the above is not what we want to discuss here, here are some optimizations we want to avoid full scan:

- In order to make the SQL execution plan analyzer more accurate, for the fourth case, we may need to regularly execute ANALYZE TABLE for some tables when business is idle to ensure the accuracy of the analyzer's statistics.

- Considering that databases and tables are divided, and sometimes the database SQL execution plan is always imperfect, or there will be wrong indexes, we generally try to add force index to the OLTP query business to force some indexes. This is a problem we often encounter in the process of using middleware based sub database and sub table (such as sharding JDBC) or native distributed database (such as TiDB).

- For MySQL, we set -- Max searches for key = 10000 (the default value is very large), which actually limits the number of rows that may be scanned by the index analyzed by the SQL execution plan analyzer each time. The principle is very simple. Refer to the source code:

double find_cost_for_ref(const THD *thd, TABLE *table, unsigned keyno,

double num_rows, double worst_seeks) {

//Compare the number of rows that will be scanned with max_seeks_for_key for comparison, take the small one

//That is, the SQL analyzer concludes that the number of rows scanned by the index will not exceed max_seeks_for_key

num_rows = std::min(num_rows, double(thd->variables.max_seeks_for_key));

if (table->covering_keys.is_set(keyno)) {

// We can use only index tree

const Cost_estimate index_read_cost =

table->file->index_scan_cost(keyno, 1, num_rows);

return index_read_cost.total_cost();

} else if (keyno == table->s->primary_key &&

table->file->primary_key_is_clustered()) {

const Cost_estimate table_read_cost =

table->file->read_cost(keyno, 1, num_rows);

return table_read_cost.total_cost();

} else

return min(table->cost_model()->page_read_cost(num_rows), worst_seeks);

}This cannot be set too small. Otherwise, the index that can walk multiple indexes but has the largest number of actual scan rows will appear.

Now there's no way. EXPLAIN is not enough. We can only further turn to optimizer for help_ Trace. Do not use optimizer directly_ The reason for trace is that optimizer_trace must execute SQL completely before it can get all useful information.

## Open optimizer_trace set session optimizer_trace="enabled=on"; ## Execute SQL select ..... ## Query trace results SELECT trace FROM information_schema.OPTIMIZER_TRACE;

Through the trace results, we found that the SQL actually executed is:

SELECT Various fields FROM `t_order_rel` `r` JOIN `t_order` `s` WHERE ( ( `r`.`order_id` = CONVERT ( `s`.`id` USING utf8mb4 ) ) AND ( `r`.`type` = 1 ) AND ( `r`.`share_code` = 'B2MTB6C' ) )

I'll go. The field codes of the two tables are different! When the join is on, a layer of code conversion (s.idusing utf8mb4)) is set We know that the condition matching of a field with a layer function cannot go to the index. For example, date (create_time) < "2021-8-1" cannot go to the index, but create_ Time < "2021-8-1" is OK. The comparison of columns between different types can not get to the index, because MySQL will automatically set the type conversion function. This is also the frequent misuse of MySQL syntax sugar.

This t_ order_ The default code of rel is different from other tables. Because some fields use emoji expressions, the default code of the whole table is utf8mb4 when creating a table. Moreover, this table only records usage. There is no OLTP business, and only some OLAP scenarios used by operation students. So I haven't found this problem.

After modifying the field code, the SQL is finally not fully scanned. At the same time, pay attention to:

- The database specifies the default code, and the table no longer specifies the default code. At the same time, for the field that needs to use special code, specify the code for the field

- When join ing, where, pay attention to whether the types on both sides of compare are consistent, and whether it will lead to no index