Requirements for the modified version:

1. Start two servers; each automatically registers its IP address and port with ZooKeeper

2. When the client starts, it fetches the node information of all service providers from ZooKeeper and establishes a connection to each server

3. When a server goes offline, its node is automatically removed from the ZooKeeper registration list, and the client disconnects from that server

4. When the server comes back online, the client detects it and re-establishes the connection to it
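Requirements 3 and 4 rest on ZooKeeper's ephemeral nodes: each provider registers its `ip:port` node as EPHEMERAL, and ZooKeeper deletes that node when the provider's session ends. In real ZooKeeper the deletion happens when the session is closed or expires, so a crashed provider can stay listed until its session timeout elapses. The toy model below is a minimal sketch of that lifecycle only; `EphemeralRegistry` and its methods are hypothetical, it does not use any ZooKeeper or Curator API, and the port 8898 for the second server is an assumption.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical in-memory model of ZooKeeper ephemeral nodes; NOT a ZooKeeper or Curator API.
class EphemeralRegistry {
    // node path -> id of the session that created it
    private final Map<String, Long> nodes = new TreeMap<>();
    private long nextSessionId = 1;

    // Each provider that connects gets its own session.
    long openSession() {
        return nextSessionId++;
    }

    // Register an ephemeral node owned by the given session.
    void createEphemeral(long sessionId, String path) {
        nodes.put(path, sessionId);
    }

    // Ending a session (clean shutdown, or expiry after a crash) deletes its ephemeral nodes.
    void closeSession(long sessionId) {
        nodes.values().removeIf(owner -> owner == sessionId);
    }

    // What a consumer sees when it lists the children of the parent node (sorted).
    List<String> children() {
        return new ArrayList<>(nodes.keySet());
    }

    public static void main(String[] args) {
        EphemeralRegistry zk = new EphemeralRegistry();
        long server1 = zk.openSession();
        long server2 = zk.openSession();
        zk.createEphemeral(server1, "/lg-rpc/127.0.0.1:8898");
        zk.createEphemeral(server2, "/lg-rpc/127.0.0.1:8899");
        System.out.println(zk.children()); // [/lg-rpc/127.0.0.1:8898, /lg-rpc/127.0.0.1:8899]

        zk.closeSession(server1);          // server 1 goes offline
        System.out.println(zk.children()); // [/lg-rpc/127.0.0.1:8899]

        long server1Again = zk.openSession(); // server 1 restarts with a new session
        zk.createEphemeral(server1Again, "/lg-rpc/127.0.0.1:8898");
        System.out.println(zk.children()); // both nodes again
    }
}
```

The consumer never has to be told that a server died; it only observes the child list of the parent node change.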

---------------------------------------------------------------------------------------------------------------------------------

Note: in real-world development, the Curator framework is used as the ZooKeeper client.

1. First, add the Curator dependencies

<!--use curator client-->

<dependency>

<groupId>org.apache.curator</groupId>

<artifactId>curator-framework</artifactId>

<version>2.12.0</version>

</dependency>

<!--introduce curator Listening dependency-->

<dependency>

<groupId>org.apache.curator</groupId>

<artifactId>curator-recipes</artifactId>

<version>2.12.0</version>

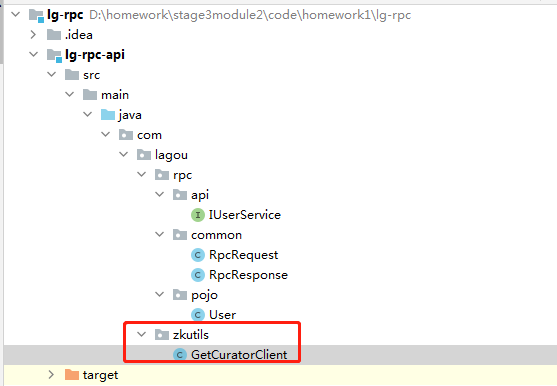

</dependency>2. Because both providers and consumers need to hold the zookeeper client, the method of establishing a session is put into the public module.

3. Write the method that establishes the session

package com.lagou.zkutils;

import org.apache.curator.RetryPolicy;

import org.apache.curator.framework.CuratorFramework;

import org.apache.curator.framework.CuratorFrameworkFactory;

import org.apache.curator.retry.ExponentialBackoffRetry;

public class GetCuratorClient {

/**

* Both providers and consumers hold zookeeper client objects

*/

// Parent node under which each server registers itself, e.g. /lg-rpc/ip:port

public static String path = "/lg-rpc";

public static CuratorFramework getZkClient(){

RetryPolicy exponentialBackoffRetry = new ExponentialBackoffRetry(1000, 3); // Base sleep time 1000 ms, at most 3 retries; declared with the RetryPolicy interface type

// Using fluent programming style

CuratorFramework client = CuratorFrameworkFactory.builder()

.connectString("127.0.0.1:2181")

.sessionTimeoutMs(50000)

.connectionTimeoutMs(30000)

.retryPolicy(exponentialBackoffRetry)

.namespace("base") // Isolated namespace: every node path this client uses is relative to /base, which keeps different applications apart on the same ZooKeeper

.build();

client.start();

System.out.println("Curator client started (fluent builder style)");

return client;

}

}
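`ExponentialBackoffRetry(1000, 3)` is documented by Curator as a policy that retries a set number of times with increasing sleep time between retries; here the base sleep is 1000 ms and there are at most 3 retries. The sketch below approximates that behavior in plain Java, assuming the sleep before retry `n` is the base time multiplied by a random factor between 1 and 2^(n+1) - 1, optionally capped. `BackoffSketch` is a hypothetical class, not Curator's, and the exact formula may differ between Curator versions.

```java
import java.util.Random;

// Hypothetical sketch of exponential backoff with random jitter, modeled on the
// behavior described for Curator's ExponentialBackoffRetry. Not the Curator class.
class BackoffSketch {
    private final long baseSleepMs;
    private final int maxRetries;
    private final long maxSleepMs;
    private final Random random;

    BackoffSketch(long baseSleepMs, int maxRetries, long maxSleepMs, Random random) {
        this.baseSleepMs = baseSleepMs;
        this.maxRetries = maxRetries;
        this.maxSleepMs = maxSleepMs;
        this.random = random;
    }

    // Whether another attempt is allowed after retryCount retries (0-based).
    boolean allowRetry(int retryCount) {
        return retryCount < maxRetries;
    }

    // Sleep before retry number retryCount: base * random factor in [1, 2^(retryCount+1) - 1], capped.
    long sleepMs(int retryCount) {
        long factor = Math.max(1, random.nextInt(1 << (retryCount + 1)));
        return Math.min(baseSleepMs * factor, maxSleepMs);
    }

    public static void main(String[] args) {
        // Same numbers as ExponentialBackoffRetry(1000, 3); the cap is effectively disabled.
        BackoffSketch policy = new BackoffSketch(1000, 3, Long.MAX_VALUE, new Random());
        for (int retry = 0; policy.allowRetry(retry); retry++) {
            System.out.println("retry " + retry + " -> sleep " + policy.sleepMs(retry) + " ms");
        }
    }
}
```

The random factor spreads out reconnect attempts, so many clients that lose ZooKeeper at the same moment do not all retry in lockstep.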

4. Changes to the provider module

4.1 Main startup class

package com.lagou.rpc;

import com.lagou.rpc.provider.server.RpcServer;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.boot.CommandLineRunner;

import org.springframework.boot.SpringApplication;

import org.springframework.boot.autoconfigure.SpringBootApplication;

@SpringBootApplication

public class ServerBootstrapApplication implements CommandLineRunner {

@Autowired

private RpcServer rpcServer;

public static void main(String[] args) {

SpringApplication.run(ServerBootstrapApplication.class,args);

}

// Start the Netty server once the Spring container has started

@Override

public void run(String... args) throws Exception {

// Run the Netty server on its own thread: startServer() blocks until the server channel is closed

new Thread(new Runnable() {

@Override

public void run() {

rpcServer.startServer("127.0.0.1",8899);

}

}).start();

}

}

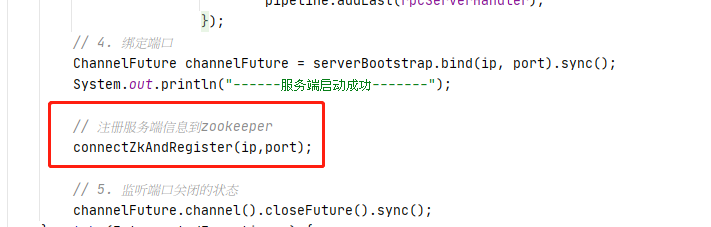
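`startServer` blocks on `closeFuture().sync()` until the server channel closes, so calling it directly inside `run()` would keep `run()` (and therefore `SpringApplication.run`) from returning. The JDK-only sketch below shows the same pattern, with a `CountDownLatch` standing in for Netty's close future; `BlockingStartDemo` and its methods are hypothetical names for illustration.

```java
import java.util.concurrent.CountDownLatch;

// Hypothetical stand-in for the provider startup: the latch plays the role of
// Netty's closeFuture(), so startServer() blocks until close() is called.
class BlockingStartDemo {
    final CountDownLatch closeFuture = new CountDownLatch(1);

    // Blocks like startServer(), which waits on closeFuture().sync().
    void startServer() {
        try {
            closeFuture.await();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }

    // Like CommandLineRunner.run(): start the blocking call on a new thread and return at once.
    Thread run() {
        Thread serverThread = new Thread(this::startServer, "rpc-server");
        serverThread.start();
        return serverThread;
    }

    // Like closing the server channel: releases the blocked thread.
    void close() {
        closeFuture.countDown();
    }

    public static void main(String[] args) throws InterruptedException {
        BlockingStartDemo demo = new BlockingStartDemo();
        Thread t = demo.run();
        System.out.println("run() returned; server thread alive: " + t.isAlive());
        demo.close();
        t.join();
        System.out.println("server thread alive after close: " + t.isAlive());
    }
}
```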

4.2 Register the server information in ZooKeeper by modifying the RpcServer class

The code:

package com.lagou.rpc.provider.server;

import com.lagou.rpc.provider.handler.RpcServerHandler;

import com.lagou.zkutils.GetCuratorClient;

import io.netty.bootstrap.ServerBootstrap;

import io.netty.channel.ChannelFuture;

import io.netty.channel.ChannelInitializer;

import io.netty.channel.ChannelPipeline;

import io.netty.channel.nio.NioEventLoopGroup;

import io.netty.channel.socket.SocketChannel;

import io.netty.channel.socket.nio.NioServerSocketChannel;

import io.netty.handler.codec.string.StringDecoder;

import io.netty.handler.codec.string.StringEncoder;

import org.apache.curator.framework.CuratorFramework;

import org.apache.zookeeper.CreateMode;

import org.springframework.beans.factory.DisposableBean;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.stereotype.Service;

/**

* Netty server startup class

*/

@Service

public class RpcServer implements DisposableBean {

@Autowired

private RpcServerHandler rpcServerHandler;

// Netty needs a bossGroup and a workerGroup; destroy() shuts both down when the container is destroyed

private NioEventLoopGroup bossGroup;

private NioEventLoopGroup workerGroup;

public void startServer(String ip,int port){

try {

// 1. Create thread group

bossGroup = new NioEventLoopGroup(1);

workerGroup = new NioEventLoopGroup();

// 2. Create the server bootstrap

ServerBootstrap serverBootstrap = new ServerBootstrap();

// 3. Set parameters

serverBootstrap.group(bossGroup,workerGroup)

.channel(NioServerSocketChannel.class) // Set channel implementation

.childHandler(new ChannelInitializer<SocketChannel>() {

@Override

protected void initChannel(SocketChannel socketChannel) throws Exception {

ChannelPipeline pipeline = socketChannel.pipeline();

// Add String codecs, because providers and consumers exchange JSON strings

pipeline.addLast(new StringDecoder());

pipeline.addLast(new StringEncoder());

// Add business processing class TODO

pipeline.addLast(rpcServerHandler);

}

});

// 4. Bind the port

ChannelFuture channelFuture = serverBootstrap.bind(ip, port).sync();

System.out.println("------The server was started successfully-------");

// Register this server's information in ZooKeeper

connectZkAndRegister(ip,port);

// 5. Block until the server channel is closed

channelFuture.channel().closeFuture().sync();

} catch (InterruptedException e) {

e.printStackTrace();

}finally {

if (bossGroup != null){

bossGroup.shutdownGracefully();

}

if (workerGroup != null){

workerGroup.shutdownGracefully();

}

}

}

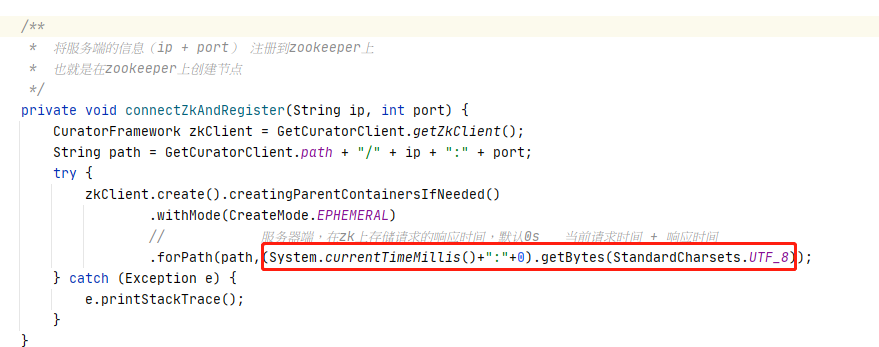

/**

* Register the server's information (ip + port) on zookeeper

* That is, create a node on zookeeper

*/

private void connectZkAndRegister(String ip, int port) {

CuratorFramework zkClient = GetCuratorClient.getZkClient();

String path = GetCuratorClient.path + "/" + ip + ":" + port;

try {

zkClient.create().creatingParentContainersIfNeeded()

.withMode(CreateMode.EPHEMERAL)

// Node data is "<last request time>:<response time>"; the response time starts at 0

.forPath(path,(System.currentTimeMillis()+":"+0).getBytes());

} catch (Exception e) {

e.printStackTrace();

}

}

// DisposableBean callback: runs when the Spring container is destroyed and shuts down the thread groups

@Override

public void destroy() throws Exception {

if (bossGroup != null){

bossGroup.shutdownGracefully();

}

if (workerGroup != null){

workerGroup.shutdownGracefully();

}

}

}

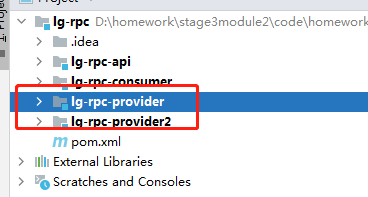
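Each registration therefore has two parts: the node name `ip:port` under `/lg-rpc` (because of the `base` namespace, the full ZooKeeper path is `/base/lg-rpc/ip:port`) and the node data `lastTime:usedTime`, which the server initializes to `<current millis>:0`. A small sketch of building and parsing these strings follows; `RegistryFormat` and its methods are hypothetical helpers, not part of the original project, and the explicit UTF-8 charset is an assumption (the code above uses the platform default).

```java
import java.nio.charset.StandardCharsets;

// Hypothetical helpers for the registry format used in this article:
// node path "/lg-rpc/<ip>:<port>" and node data "<lastTime>:<usedTime>".
class RegistryFormat {
    static final String PARENT = "/lg-rpc";

    // e.g. nodePath("127.0.0.1", 8899) -> "/lg-rpc/127.0.0.1:8899"
    static String nodePath(String ip, int port) {
        return PARENT + "/" + ip + ":" + port;
    }

    // Encode the node data explicitly as UTF-8.
    static byte[] nodeData(long lastTime, long usedTime) {
        return (lastTime + ":" + usedTime).getBytes(StandardCharsets.UTF_8);
    }

    // Decode node data into {lastTime, usedTime}; returns null if missing or malformed.
    static long[] parseData(byte[] data) {
        if (data == null) {
            return null;
        }
        String[] parts = new String(data, StandardCharsets.UTF_8).split(":");
        if (parts.length != 2) {
            return null;
        }
        try {
            return new long[] {Long.parseLong(parts[0]), Long.parseLong(parts[1])};
        } catch (NumberFormatException e) {
            return null;
        }
    }

    public static void main(String[] args) {
        String path = nodePath("127.0.0.1", 8899);
        byte[] initial = nodeData(System.currentTimeMillis(), 0); // what the server writes at startup
        long[] parsed = parseData(initial);
        System.out.println(path + " -> lastTime=" + parsed[0] + ", usedTime=" + parsed[1]);
    }
}
```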

4.3 Note: two servers must be started, so create a second provider submodule by copying the first one, and change the port passed to startServer so the two instances do not conflict.

5. Consumer implementation

Approach: 1. Get the ZooKeeper client instance first

2. Watch for node changes

package com.lagou.rpc.consumer;

import com.lagou.rpc.api.IUserService;

import com.lagou.rpc.consumer.client.RpcClient;

import com.lagou.rpc.consumer.proxy.RpcClientProxy;

import com.lagou.rpc.consumer.serverinfo.ServerInfo;

import com.lagou.zkutils.GetCuratorClient;

import org.apache.curator.framework.CuratorFramework;

import org.apache.curator.framework.recipes.cache.ChildData;

import org.apache.curator.framework.recipes.cache.TreeCache;

import org.apache.curator.framework.recipes.cache.TreeCacheEvent;

import org.apache.curator.framework.recipes.cache.TreeCacheListener;

import java.util.HashMap;

import java.util.Iterator;

import java.util.Map;

import java.util.concurrent.Executors;

import java.util.concurrent.ScheduledExecutorService;

/**

* Test class

*/

public class ClientBootStrap {

// Client-side load balancing:

// a scheduled task resets the response time of servers that have not been called recently to 0,

// and a separate thread selects a server and sends requests

public static ScheduledExecutorService executorService = Executors.newScheduledThreadPool(5);

// Create a map collection to cache (maintain) the relationship between server nodes and rpc clients

public static Map<String, RpcClient> clientMap = new HashMap<>();

// Maps each server node (ip:port) to its connection information

public static Map<String, ServerInfo> serverMap = new HashMap<>();

public static void main(String[] args) throws Exception {

// The server and client communicate through the interface

// IUserService userService = (IUserService) RpcClientProxy.createProxy(IUserService.class);

// User user = userService.getById(2);

// System.out.println(user);

//Listen for node changes in the specified path on zk

// 1. Get the zookeeper instance first

CuratorFramework zkClient = GetCuratorClient.getZkClient();

// 2. Watch node changes, using this zkClient on the parent node /lg-rpc

// A child node being added or removed then means a server has registered with ZooKeeper or gone offline

watchNode(zkClient,GetCuratorClient.path);

// Assignment 1: keep the main method from exiting by sleeping indefinitely

Thread.sleep(Integer.MAX_VALUE);

}

/**

* Monitor changes to the zookeeper node

*/

private static void watchNode(CuratorFramework zkClient, String path) throws Exception {

TreeCache treeCache = new TreeCache(zkClient, path);

treeCache.getListenable().addListener(new TreeCacheListener() {

@Override

public void childEvent(CuratorFramework curatorFramework, TreeCacheEvent treeCacheEvent) throws Exception {

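ChildData data = treeCacheEvent.getData();

// Events such as INITIALIZED or connection-state changes carry no node data

if (data == null){

return;

}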

System.out.println("data.getPath==="+data.getPath());

String str = data.getPath().substring(data.getPath().lastIndexOf("/")+1);

System.out.println("str ==="+str);

TreeCacheEvent.Type type = treeCacheEvent.getType();

if (type == TreeCacheEvent.Type.NODE_ADDED){// If the listening node change type is add

String value = new String(data.getData());

System.out.println("value===="+value);

String[] split = str.split(":");

if (split.length != 2){

return;

}

if (!serverMap.containsKey(str)){

String[] valueSplit = value.split(":");

if (valueSplit.length != 2){

return;

}

RpcClient rpcClient = new RpcClient(split[0], Integer.parseInt(split[1]));

// Key both maps by the node name (ip:port) so the entries can be found again on NODE_REMOVED

clientMap.put(str, rpcClient);

// The connection has been established

IUserService iUserService = (IUserService) RpcClientProxy.createProxy(IUserService.class, rpcClient);

ServerInfo serverInfo = new ServerInfo();

serverInfo.setLastTime(Long.valueOf(valueSplit[0]));

serverInfo.setUsedTime(Long.valueOf(valueSplit[1]));

serverInfo.setiUserService(iUserService);

serverMap.put(str,serverInfo);

}

}else if (type == TreeCacheEvent.Type.NODE_REMOVED){ // Node deletion

System.out.println("Node deletion detected:"+str);

// Remove the offline server from both maps; otherwise a restarted server would never be reconnected, because its key would still be in serverMap

clientMap.remove(str);

serverMap.remove(str);

}

System.out.println("------List of existing connections-----");

Iterator<Map.Entry<String, RpcClient>> iterator = clientMap.entrySet().iterator();

while (iterator.hasNext()){

Map.Entry<String, RpcClient> next = iterator.next();

System.out.println(next.getKey()+":"+next.getValue());

}

}

});

treeCache.start();

}

}
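The listener's bookkeeping can be separated from Curator so the add/remove logic is easy to check. The sketch below keys every map by the node name `ip:port`, so the entry created on NODE_ADDED is exactly the one removed on NODE_REMOVED, and a server that registers again gets a fresh connection. `ProviderTable`, its `EventType` enum, and `FakeConnection` are hypothetical stand-ins for the real maps, `TreeCacheEvent.Type`, and `RpcClient`; a `null` path models events that carry no node data, and paths without `ip:port` (such as the parent `/lg-rpc`) are ignored, as the original listener does.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

// Hypothetical model of the consumer's bookkeeping for registry events (no Curator involved).
class ProviderTable {
    // Stand-in for TreeCacheEvent.Type (only the values used here).
    enum EventType { NODE_ADDED, NODE_REMOVED, INITIALIZED }

    // Stand-in for RpcClient: remembers its target and whether it was closed.
    static class FakeConnection {
        final String host;
        final int port;
        volatile boolean closed;

        FakeConnection(String host, int port) {
            this.host = host;
            this.port = port;
        }

        void close() {
            closed = true;
        }
    }

    // Keyed by node name "ip:port"; concurrent because the listener writes while request threads read.
    final Map<String, FakeConnection> connections = new ConcurrentHashMap<>();

    // path is the full node path (e.g. "/lg-rpc/127.0.0.1:8899"), or null when the event has no node data.
    void onEvent(EventType type, String path) {
        if (path == null) {
            return; // e.g. INITIALIZED
        }
        String name = path.substring(path.lastIndexOf('/') + 1);
        int colon = name.lastIndexOf(':');
        if (colon <= 0) {
            return; // not an "ip:port" node, e.g. the parent node itself
        }
        if (type == EventType.NODE_ADDED) {
            int port = parsePort(name.substring(colon + 1));
            if (port < 0) {
                return;
            }
            String host = name.substring(0, colon);
            connections.computeIfAbsent(name, key -> new FakeConnection(host, port));
        } else if (type == EventType.NODE_REMOVED) {
            FakeConnection old = connections.remove(name);
            if (old != null) {
                old.close(); // disconnect from the offline server
            }
        }
    }

    // Returns the port, or -1 if the text is not a valid number.
    static int parsePort(String text) {
        try {
            return Integer.parseInt(text);
        } catch (NumberFormatException e) {
            return -1;
        }
    }
}
```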

This completes the first part.

--------------------------------------------------------------------------------------------------------------------------------

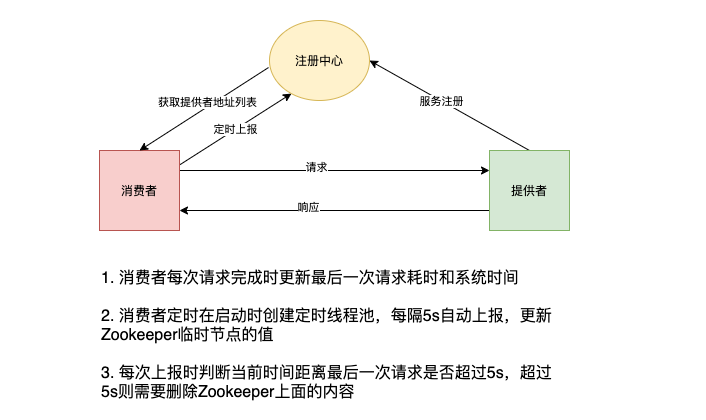

Building on "Programming Question 1", implement a simple ZooKeeper-based load-balancing strategy:

ZooKeeper records each server's last response time, which stays valid for 5 seconds: if a server receives no new request within 5 s, its response time is cleared (treated as invalid), as shown in the figure.

When the client makes a call, it always selects the server with the shortest last response time; if several servers have the same time, one of them is chosen at random. This spreads the load across the servers.
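The selection rule can be stated on its own: take the smallest response time and, if several servers share it, pick one of them at random. The `SimpleTask` class in section 2.2 always takes the first entry after sorting, so ties fall to the map's iteration order rather than a random choice; the sketch below shows one way to add the random tie-break. `Selector.pick` is a hypothetical helper, and the input (a map from `ip:port` to response time) is an assumed format.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Random;

// Hypothetical helper: choose the server with the smallest response time,
// breaking ties uniformly at random among the servers that share it.
class Selector {
    static String pick(Map<String, Long> usedTimeByServer, Random random) {
        if (usedTimeByServer.isEmpty()) {
            return null; // no provider registered
        }
        long best = Collections.min(usedTimeByServer.values());
        List<String> candidates = new ArrayList<>();
        for (Map.Entry<String, Long> e : usedTimeByServer.entrySet()) {
            if (e.getValue() == best) {
                candidates.add(e.getKey());
            }
        }
        return candidates.get(random.nextInt(candidates.size()));
    }

    public static void main(String[] args) {
        Map<String, Long> usedTime = new HashMap<>();
        usedTime.put("127.0.0.1:8898", 0L); // e.g. reset after 5 s without requests
        usedTime.put("127.0.0.1:8899", 0L);
        System.out.println(pick(usedTime, new Random())); // either server
        usedTime.put("127.0.0.1:8899", 15L);
        System.out.println(pick(usedTime, new Random())); // 127.0.0.1:8898
    }
}
```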

--------------------------------------------------------------------------------------------------------------------------------

Implementation:

Server side:

1. When a server creates its ZooKeeper node, it stores the current request time and the service response time as the node's data, so that later requests can be routed to the server with the lowest response time.

2. Load balancing itself happens mainly on the client:

2.1 Encapsulate the server connection information so that each server node can be mapped to its connection details

package com.lagou.rpc.consumer.serverinfo;

import com.lagou.rpc.api.IUserService;

public class ServerInfo {

// Used to encapsulate connection information

// Last execution time

private long lastTime;

// response time

private long usedTime;

private IUserService iUserService;

public long getLastTime() {

return lastTime;

}

public void setLastTime(long lastTime) {

this.lastTime = lastTime;

}

public long getUsedTime() {

return usedTime;

}

public void setUsedTime(long usedTime) {

this.usedTime = usedTime;

}

public IUserService getiUserService() {

return iUserService;

}

public void setiUserService(IUserService iUserService) {

this.iUserService = iUserService;

}

}

2.2 Sort the servers by response time, call the one with the lowest response time, and update that server's last request time and response time on ZooKeeper

package com.lagou.rpc.consumer;

import com.lagou.rpc.api.IUserService;

import com.lagou.rpc.consumer.serverinfo.ServerInfo;

import com.lagou.rpc.pojo.User;

import com.lagou.zkutils.GetCuratorClient;

import org.apache.curator.framework.CuratorFramework;

import java.nio.charset.StandardCharsets;

import java.util.ArrayList;

import java.util.Collections;

import java.util.Comparator;

import java.util.Map;

public class SimpleTask implements Runnable {

/** Approach:

* 1. Get the list of all servers and sort it by response time (usedTime)

* 2. Call the server with the lowest response time, i.e. the first one after sorting

* 3. Record the request's end time and duration in ServerInfo

* 4. Update the last request time and response time on the ZooKeeper node (initialized to "<system time>:0" when the node was created)

*/

private CuratorFramework zkClient;

public SimpleTask(CuratorFramework zkClient) {

this.zkClient = zkClient;

}

@Override

public void run() {

while (true){

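if (ClientBootStrap.serverMap.isEmpty()){

// No service provider (server) is available yet: wait briefly instead of spinning, then retry

try {

Thread.sleep(1000);

} catch (InterruptedException e) {

Thread.currentThread().interrupt();

return;

}

continue;

}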

// Copy the map entries into a list so they can be sorted

// 1. Get the list of service providers

ArrayList<Map.Entry<String, ServerInfo>> entries = new ArrayList<>(ClientBootStrap.serverMap.entrySet());

// Sort the list of servers according to response time

Collections.sort(entries, new Comparator<Map.Entry<String, ServerInfo>>() {

@Override

public int compare(Map.Entry<String, ServerInfo> o1, Map.Entry<String, ServerInfo> o2) {

return Long.compare(o1.getValue().getUsedTime(), o2.getValue().getUsedTime());

}

});

System.out.println("--------Sort complete-------");

for (Map.Entry<String, ServerInfo> entry : entries) {

// An entry represents a server node

System.out.println(entry.getKey()+":"+entry.getValue());

}

// 2. Select the server interaction with the smallest response time, that is, the first server in the sorting

ServerInfo serverInfo = entries.get(0).getValue();

System.out.println("Server used:"+entries.get(0).getKey());

IUserService iUserService = serverInfo.getiUserService();

// Current system time

long startTime = System.currentTimeMillis();

// Execute request

User user = iUserService.getById(1);

System.out.println("user==="+user);

// The system time at which the execution request ended

long endTime = System.currentTimeMillis();

// response time

long usedTime = endTime - startTime;

serverInfo.setLastTime(endTime);

serverInfo.setUsedTime(usedTime);

// Update the last request time and response time on the ZooKeeper node (the server initialized it to "<system time>:0" when it created the node)

try {

zkClient.setData().forPath(GetCuratorClient.path+"/"+entries.get(0).getKey(),

(endTime+":"+usedTime).getBytes(StandardCharsets.UTF_8));

} catch (Exception e) {

e.printStackTrace();

}

}

}

}
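Comparing `long` values with `(int) (a - b)` can report the wrong order once the difference no longer fits in an `int`; `Long.compare` (or `Comparator.comparingLong`) has no such edge case. Millisecond response times will rarely get that large, but the illustration below uses deliberately extreme values to show the effect; `ComparatorDemo` is a hypothetical name.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Comparator;
import java.util.List;

// Illustration only: why (int) (a - b) is a risky comparator for long values.
class ComparatorDemo {
    // Subtraction-based comparison: the long difference is truncated to an int.
    static int bySubtraction(long a, long b) {
        return (int) (a - b);
    }

    // Overflow-safe comparison.
    static int safe(long a, long b) {
        return Long.compare(a, b);
    }

    public static void main(String[] args) {
        long small = 0L;
        long large = 3_000_000_000L; // the difference does not fit in an int
        System.out.println(bySubtraction(small, large)); // 1294967296: claims small > large
        System.out.println(safe(small, large));          // -1: correct order

        // Sorting with an overflow-safe comparator.
        List<Long> usedTimes = new ArrayList<>(Arrays.asList(large, small, 42L));
        usedTimes.sort(Comparator.naturalOrder());
        System.out.println(usedTimes); // [0, 42, 3000000000]
    }
}
```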

2.3 Write a scheduled task: if a server's last request was more than 5 seconds ago, reset its response time to 0

package com.lagou.rpc.consumer;

import com.lagou.zkutils.GetCuratorClient;

import org.apache.curator.framework.CuratorFramework;

import java.util.List;

public class ScheduleTask implements Runnable {

private CuratorFramework zkClient;

public ScheduleTask(CuratorFramework zkClient) {

this.zkClient = zkClient;

}

@Override

public void run() {

try {

// Query all child nodes under /lg-rpc

List<String> stringPaths = zkClient.getChildren().forPath(GetCuratorClient.path);

for (String stringPath : stringPaths) {// A stringPath represents a server node

// Read the data of each server's node, formatted as "<last request time>:<response time>"

byte[] bytes = zkClient.getData().forPath(GetCuratorClient.path + "/" + stringPath);

String data = new String(bytes);

String[] split = data.split(":");

if (split.length != 2){

continue;

}

// Last execution time

long lastTime = Long.parseLong(split[0]);

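if (System.currentTimeMillis() - lastTime > 5000){// Current time - last request time > 5 s

// The node may not be in serverMap yet (or may already have been removed), so guard against null

com.lagou.rpc.consumer.serverinfo.ServerInfo serverInfo = ClientBootStrap.serverMap.get(stringPath);

if (serverInfo != null){

// Reset the response time to 0

serverInfo.setUsedTime(0);

}

}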

}

} catch (Exception e) {

e.printStackTrace();

}

}

}
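The 5-second rule in `ScheduleTask` reduces to one comparison per server: if more than 5000 ms have passed since `lastTime`, the cached `usedTime` is treated as expired and reset to 0, which makes that server attractive again. The pure-Java sketch below takes the current time as a parameter so the rule can be tested without a real clock; `StalenessRule` and the `long[]{lastTime, usedTime}` representation are hypothetical.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical sketch of the 5-second expiry rule, with "now" passed in explicitly.
class StalenessRule {
    static final long EXPIRY_MS = 5000;

    // stats maps "ip:port" -> {lastTime, usedTime}; resets usedTime for expired
    // entries and returns how many entries were reset.
    static int resetExpired(Map<String, long[]> stats, long now) {
        int reset = 0;
        for (long[] s : stats.values()) {
            if (now - s[0] > EXPIRY_MS) {
                s[1] = 0; // the last response time no longer counts
                reset++;
            }
        }
        return reset;
    }

    public static void main(String[] args) {
        Map<String, long[]> stats = new HashMap<>();
        stats.put("127.0.0.1:8898", new long[] {10_000L, 40L}); // last called 6 s before "now"
        stats.put("127.0.0.1:8899", new long[] {14_000L, 25L}); // last called 2 s before "now"
        int n = resetExpired(stats, 16_000L);
        // prints: 1 reset; 8898 usedTime=0, 8899 usedTime=25
        System.out.println(n + " reset; 8898 usedTime=" + stats.get("127.0.0.1:8898")[1]
                + ", 8899 usedTime=" + stats.get("127.0.0.1:8899")[1]);
    }
}
```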

2.4 Write the main startup class: create a scheduled thread pool that runs the scheduled task every 5 seconds, and start a thread that selects a server and sends requests.

package com.lagou.rpc.consumer;

import com.lagou.rpc.api.IUserService;

import com.lagou.rpc.consumer.client.RpcClient;

import com.lagou.rpc.consumer.proxy.RpcClientProxy;

import com.lagou.rpc.consumer.serverinfo.ServerInfo;

import com.lagou.zkutils.GetCuratorClient;

import org.apache.curator.framework.CuratorFramework;

import org.apache.curator.framework.recipes.cache.ChildData;

import org.apache.curator.framework.recipes.cache.TreeCache;

import org.apache.curator.framework.recipes.cache.TreeCacheEvent;

import org.apache.curator.framework.recipes.cache.TreeCacheListener;

import java.util.HashMap;

import java.util.Iterator;

import java.util.Map;

import java.util.concurrent.Executors;

import java.util.concurrent.ScheduledExecutorService;

import java.util.concurrent.TimeUnit;

/**

* Test class

*/

public class ClientBootStrap {

// Client-side load balancing:

// a scheduled task resets the response time of servers that have not been called recently to 0,

// and a separate thread selects a server and sends requests

public static ScheduledExecutorService executorService = Executors.newScheduledThreadPool(5);

// Cache (maintain) the mapping from server node (ip:port) to its RPC client

public static Map<String, RpcClient> clientMap = new java.util.concurrent.ConcurrentHashMap<>();

// Map each server node to its connection information, e.g. 127.0.0.1:8899 -> lastTime (system time of the last request), usedTime (response time)

// ConcurrentHashMap because the cache listener, the request thread and the scheduled task all access serverMap from different threads

public static Map<String, ServerInfo> serverMap = new java.util.concurrent.ConcurrentHashMap<>();

public static void main(String[] args) throws Exception {

// The server and client communicate through the interface

// IUserService userService = (IUserService) RpcClientProxy.createProxy(IUserService.class);

// User user = userService.getById(2);

// System.out.println(user);

//Listen for node changes in the specified path on zk

// 1. Get the zookeeper instance first

CuratorFramework zkClient = GetCuratorClient.getZkClient();

// 2. Watch node changes, using this zkClient on the parent node path

watchNode(zkClient,GetCuratorClient.path);

// 3. Scheduled task. Arguments: the task to run; an initial delay of 5 s; a further 5 s delay after each run finishes before the next one starts; the time unit

// The consumer creates a scheduled thread pool at startup that checks every 5 s and resets the response time of servers not called within the last 5 s

executorService.scheduleWithFixedDelay(new ScheduleTask(zkClient),5,5, TimeUnit.SECONDS);

// 4. Start a thread that selects a server and sends requests

new Thread(new SimpleTask(zkClient)).start();

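// Assignment 1 kept main alive with Thread.sleep; this is no longer needed, because the request thread and the scheduler's threads are non-daemon and keep the JVM running

// Thread.sleep(Integer.MAX_VALUE);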

}

/**

* Monitor changes to the zookeeper node

*/

private static void watchNode(CuratorFramework zkClient, String path) throws Exception {

TreeCache treeCache = new TreeCache(zkClient, path);

treeCache.getListenable().addListener(new TreeCacheListener() {

@Override

public void childEvent(CuratorFramework curatorFramework, TreeCacheEvent treeCacheEvent) throws Exception {

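ChildData data = treeCacheEvent.getData();

// Events such as INITIALIZED or connection-state changes carry no node data

if (data == null){

return;

}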

System.out.println("data.getPath==="+data.getPath());

String str = data.getPath().substring(data.getPath().lastIndexOf("/")+1); // e.g. 127.0.0.1:8899 (one server node)

System.out.println("str ==="+str);

TreeCacheEvent.Type type = treeCacheEvent.getType();

if (type == TreeCacheEvent.Type.NODE_ADDED){// If the listening node change type is add

// Node data written by the server when it created the node, formatted as "<system time>:<response time>"

String value = new String(data.getData());

System.out.println("value===="+value);

String[] split = str.split(":");

if (split.length != 2){

return;

}

if (!serverMap.containsKey(str)){ // If the server node information is not in the container

String[] valueSplit = value.split(":");

if (valueSplit.length != 2){

return;

}

RpcClient rpcClient = new RpcClient(split[0], Integer.parseInt(split[1]));

// Key both maps by the node name (ip:port) so the entries can be found again on NODE_REMOVED

clientMap.put(str, rpcClient);

// The connection has been established

IUserService iUserService = (IUserService) RpcClientProxy.createProxy(IUserService.class, rpcClient);

ServerInfo serverInfo = new ServerInfo();

// Set the system time of the last request

serverInfo.setLastTime(Long.parseLong(valueSplit[0]));

// Set the response time

serverInfo.setUsedTime(Long.parseLong(valueSplit[1]));

serverInfo.setiUserService(iUserService);

// Put the connection information into the container

serverMap.put(str,serverInfo);

}

}else if (type == TreeCacheEvent.Type.NODE_REMOVED){ // Node deletion

System.out.println("Node deletion detected:"+str);

// Remove the offline server from both maps so SimpleTask no longer selects it and a restarted server is reconnected

clientMap.remove(str);

serverMap.remove(str);

}

System.out.println("------List of existing connections-----");

Iterator<Map.Entry<String, RpcClient>> iterator = clientMap.entrySet().iterator();

while (iterator.hasNext()){

Map.Entry<String, RpcClient> next = iterator.next();

System.out.println(next.getKey()+":"+next.getValue());

}

}

});

treeCache.start();

}

}
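`scheduleWithFixedDelay(task, 5, 5, TimeUnit.SECONDS)` waits 5 s before the first run and then 5 s after each run finishes before starting the next, so a slow ZooKeeper read postpones the following check instead of overlapping it (`scheduleAtFixedRate` would aim for a fixed start-to-start period instead). Per the `ScheduledExecutorService` contract, if a run throws, later runs are suppressed, which is why catching exceptions inside `ScheduleTask.run()` keeps the check alive. A scaled-down demo using milliseconds; `FixedDelayDemo` is a hypothetical name.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

// Illustrative, scaled-down version of the scheduling call above (milliseconds instead of seconds).
class FixedDelayDemo {
    // Schedules a counting task with a fixed delay, waits for `wanted` runs (or the timeout),
    // shuts the pool down, and returns the number of runs observed.
    static int countRuns(int wanted, long delayMs, long timeoutMs) {
        ScheduledExecutorService scheduler = Executors.newScheduledThreadPool(1);
        AtomicInteger runs = new AtomicInteger();
        CountDownLatch latch = new CountDownLatch(wanted);
        scheduler.scheduleWithFixedDelay(() -> {
            runs.incrementAndGet();
            latch.countDown();
        }, delayMs, delayMs, TimeUnit.MILLISECONDS);
        try {
            latch.await(timeoutMs, TimeUnit.MILLISECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        } finally {
            scheduler.shutdownNow(); // stop further runs
        }
        return runs.get();
    }

    public static void main(String[] args) {
        System.out.println("runs observed: " + countRuns(3, 50, 5_000));
    }
}
```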

This completes the load-balancing feature.

To observe the effect, debug on the provider side (for example with breakpoints): the run() method of SimpleTask deliberately loops forever and keeps sending requests, which makes testing convenient.

Project address: Download address