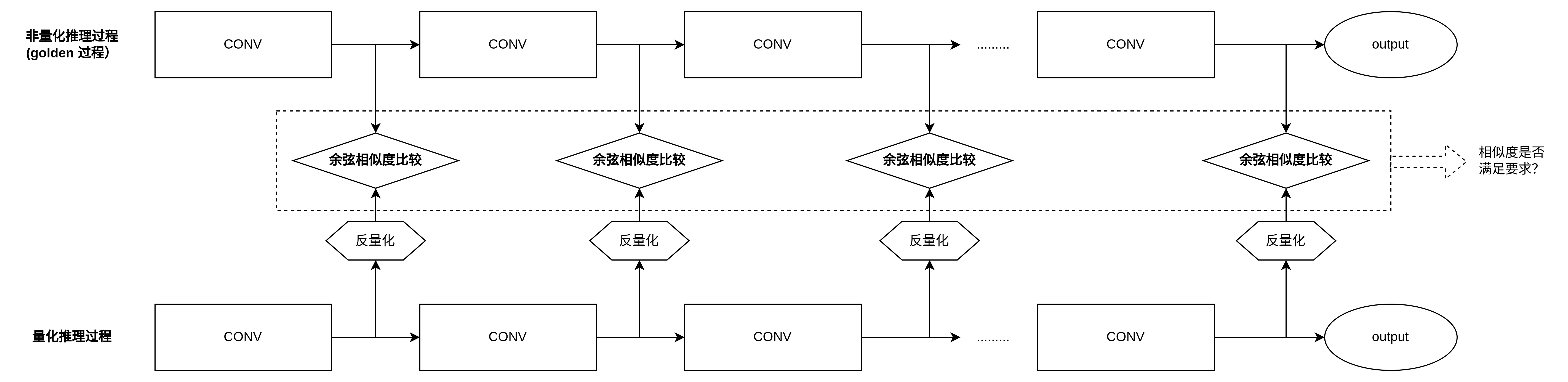

In on-device (edge) inference, each layer usually computes on quantized data rather than on the original float data. Common quantized types include UINT8, INT8, and INT16. Because these types cover a much narrower range than float, quantization inevitably loses some accuracy. To measure that loss, take the output of a given layer from the quantized run, dequantize it, and compare it with the float output of the same layer in the original golden model. The difference is expressed as a similarity, as illustrated in the figure.
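To make the source of the accuracy loss concrete, here is a minimal sketch of a UINT8 round trip. It assumes the common affine scheme `real = (q - zero_point) * scale`, which matches the dequantization used later in this post; the helper names `quantize_u8` and `dequantize_u8` are hypothetical and not part of any tool mentioned here.

```c
#include <stdint.h>

/* Hypothetical helper: affine-quantize a float to UINT8 (assumed scheme). */
uint8_t quantize_u8(float x, float scale, int32_t zero_point)
{
    float scaled = x / scale;
    /* Round to nearest, then shift by the zero point. */
    int32_t q = (int32_t)(scaled >= 0.0f ? scaled + 0.5f : scaled - 0.5f) + zero_point;

    /* Clamp to the UINT8 range; values outside it saturate. */
    if (q < 0)
        q = 0;
    if (q > 255)
        q = 255;
    return (uint8_t)q;
}

/* Hypothetical helper: map a UINT8 value back to float. */
float dequantize_u8(uint8_t q, float scale, int32_t zero_point)
{
    return (float)((int32_t)q - zero_point) * scale;
}
```

With `scale = 0.5` and `zero_point = 128`, the value 1.3 is stored as 131 and comes back as 1.5. That rounding error of 0.2 is the kind of per-element difference the similarity metrics below summarize.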

Similarity is usually measured with cosine similarity or Euclidean distance. In linear algebra, the inner product of two vectors of fixed length grows as their directions align, so the inner product can be taken as a measure of similarity. Dividing it by the product of the two vector lengths gives the cosine of the angle between them, which is the cosine similarity.
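Written out for two tensors flattened into vectors of $n$ elements (the symbols $\mathbf{a}$, $\mathbf{b}$, $\theta$, and $d$ are notation introduced here for illustration), the two metrics are:

$$
\cos\theta = \frac{\mathbf{a}\cdot\mathbf{b}}{\lVert\mathbf{a}\rVert\,\lVert\mathbf{b}\rVert}
= \frac{\sum_{i=1}^{n} a_i b_i}{\sqrt{\sum_{i=1}^{n} a_i^2}\,\sqrt{\sum_{i=1}^{n} b_i^2}},
\qquad
d(\mathbf{a},\mathbf{b}) = \sqrt{\sum_{i=1}^{n} (a_i - b_i)^2}
$$

A cosine similarity close to 1 and a Euclidean distance close to 0 both indicate that the quantized result tracks the float result closely.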

This is shown graphically in the figure below. When vectors i and u point in roughly the same direction (the angle between them is acute), the projection drawn in red is relatively long. When the two vectors are perpendicular, the projection is zero. When the angle is obtuse, the projection is negative, and the difference is largest when the vectors point in opposite directions.
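The three cases can be checked numerically with a small inner-product routine. This is only an illustrative sketch; the function name `dot_product` is an assumption and does not appear in the program that follows.

```c
#include <stddef.h>

/* Inner product of two vectors of length n. */
float dot_product(const float *a, const float *b, size_t n)
{
    float sum = 0.0f;
    size_t i;

    for (i = 0; i < n; i++)
        sum += a[i] * b[i];
    return sum;
}
```

Taking u = (1, 0) as the reference, the vector (1, 1) gives a positive result (acute angle), (0, 1) gives zero (perpendicular), and (-1, 1) gives a negative result (obtuse angle).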

The following example takes tensors produced by two different runs of the same model and shows a C implementation of both statistics.

The code is as follows:

#include <stdio.h>

#include <stdlib.h>

#include <string.h>

#include <stddef.h>

#include <stdint.h>

#include <fcntl.h>

#include <unistd.h>

#include <math.h>

#include <sys/ioctl.h>

#define DBG(fmt, ...) do { printf("%s line %d, "fmt"\n", __func__, __LINE__, ##__VA_ARGS__); } while (0)

static void dump_memory(uint8_t *buf, int32_t len)

{

int i;

printf("\n\rdump file memory:");

for (i = 0; i < len; i ++)

{

if ((i % 16) == 0)

{

printf("\n\r%p: ", buf + i);

}

printf("0x%02x ", buf[i]);

}

printf("\n\r");

return;

}

int get_tensor_from_txt_file(char *file_path, float **buf)

{

int len = 0;

static float *memory = NULL;

static int max_len = 10 * 1024 * 1024;

FILE *fp = NULL;

if(memory == NULL)

{

memory = (float*) malloc(max_len * sizeof(float));

if(memory == NULL)

{

DBG("malloc tensor buffer error.");

exit(-1);

}

}

if((fp = fopen(file_path, "r")) == NULL)

{

DBG("open tensor error.");

exit(-1);

}

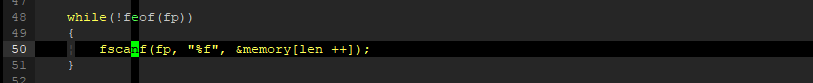

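/* Note: looping on feof() counts one extra element when the file ends with trailing whitespace. */

/* The len < max_len bound keeps the loop from writing past the scratch buffer. */

while(len < max_len && !feof(fp))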

{

fscanf(fp, "%f", &memory[len ++]);

}

*buf = (float*)malloc(len * sizeof(float));

if(len == 0 || *buf == NULL)

{

DBG("read tensor error, len %d, *buf %p", len, (void *)*buf);

exit(-1);

}

memcpy(*buf, memory, len * sizeof(float));

fclose(fp);

return len;

}

/* Euclidean distance between two tensors ("oujilide" is pinyin for "Euclidean"). */

float calculate_ojilide(int tensor_len, float *data0, float *data1)

{

int i;

float sum = 0.00;

for(i = 0; i < tensor_len; i ++)

{

float square = (data0[i]-data1[i]) * (data0[i]-data1[i]);

sum += square;

}

return sqrt(sum);

}

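/* Cosine similarity of two tensors ("yuxianxiangsidu" is pinyin for "cosine similarity"). */

float yuxianxiangsidu(int tensor_len, float *data0, float *data1)

{

int i;

float fenzi = 0.00; /* numerator: inner product of the two tensors */

float fenmu = 0.00; /* denominator: product of the two vector lengths */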

float len_vec0 = 0.00;

float len_vec1 = 0.00;

for(i = 0; i < tensor_len; i ++)

{

fenzi += data0[i]*data1[i];

len_vec0 += data0[i]*data0[i];

len_vec1 += data1[i]*data1[i];

}

len_vec0 = sqrt(len_vec0);

len_vec1 = sqrt(len_vec1);

fenmu = len_vec0 * len_vec1;

/* Avoid dividing by zero when either tensor is all zeros. */

if(fenmu == 0.0f)

{

return 0.0f;

}

return fenzi/fenmu;

}

int main(int argc, char **argv)

{

FILE *file;

DBG("in");

if(argc != 3)

{

DBG("input error, you should use this program like that: program tensor0 tensor1.");

exit(-1);

}

int tensor0_len, tensor1_len;

float *tensor0_dat, *tensor1_dat;

tensor0_len = tensor1_len = 0;

tensor0_dat = tensor1_dat = NULL;

tensor0_len = get_tensor_from_txt_file(argv[1], &tensor0_dat);

tensor1_len = get_tensor_from_txt_file(argv[2], &tensor1_dat);

if(tensor0_len != tensor1_len)

{

DBG("%d:%d the two tensor are mismatch on dimisons, caculate cant continue!", tensor0_len, tensor1_len);

exit(-1);

}

float sum = calculate_ojilide(tensor0_len, tensor0_dat, tensor1_dat);

DBG("oujilide sum = %f", sum);

float similar = yuxianxiangsidu(tensor0_len, tensor0_dat, tensor1_dat);

DBG("cosin similar is = %f", similar);

DBG("out");

return 0;

}

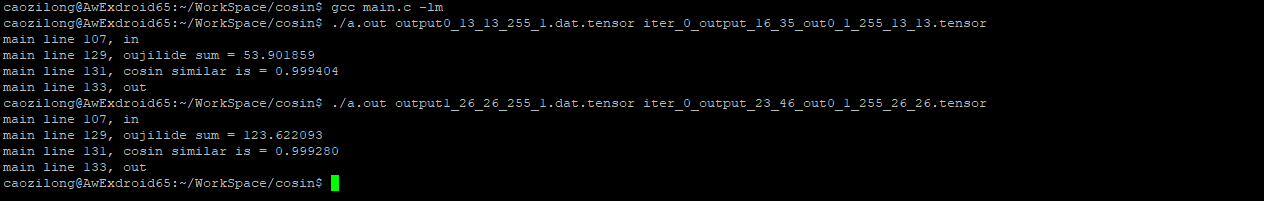

Run the program on pairs of tensors to check their similarity:

The Euclidean distances of the two pairs of tensors are 53.901859 and 123.622093, and the cosine similarities are 0.999404 and 0.999280, which meets the requirements.

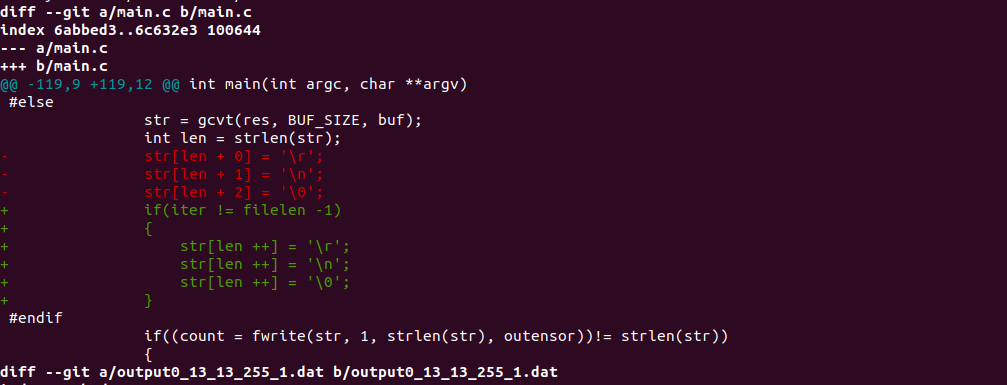

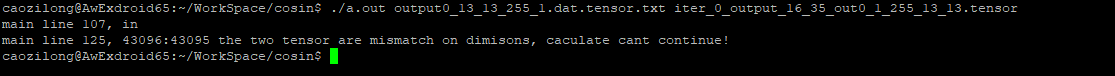

Problems in the process:

At first, when testing with a tensor file exported by the tool from the blog post linked below, the program consistently reported the error shown in the figure below: the two tensors had different element counts, 43096 for the first and 43095 for the second.

However, both files have the same number of lines, 43095. Where does the extra element in the tensor file exported by the tool come from?

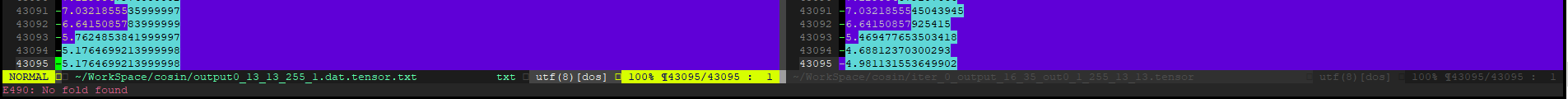

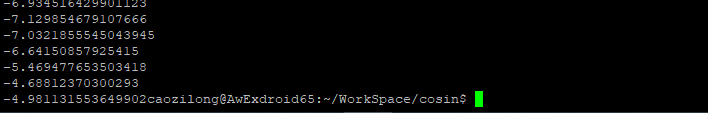

The first clue came from printing the file with cat: afterwards, the bash prompt ends up in a different position:

cat output0_13_13_255_1.dat.tensor.txt

Running cat on a tensor generated by Acuity does not behave this way:

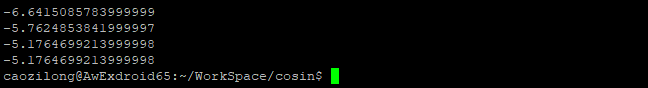

This shows that in a file from the Acuity tools, the last number is followed directly by EOF rather than by a carriage return and line feed.

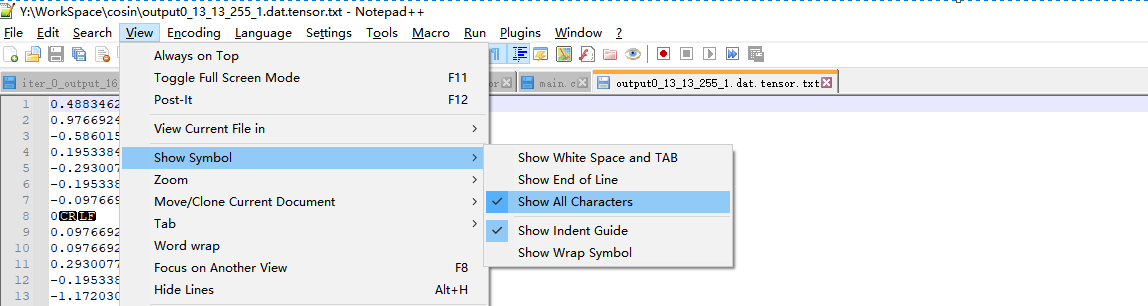

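Another clue comes from notepad. Enable the menu option that shows all characters, including non-printing ones:

With that enabled, you can see a carriage return and line feed after the last number, so EOF falls on line 43096. After the reader consumes the last number, that trailing whitespace is still unread, so feof() is not yet true and the loop counts one more element.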
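The effect can be reproduced in isolation. The sketch below is not the author's code: `count_with_feof` mirrors the reading loop of the comparison program, `count_with_return_check` is an alternative that trusts the return value of `fscanf` instead, and `make_temp_tensor` is a hypothetical helper that stands in for a real tensor file.

```c
#include <stdio.h>

/* Counts values the way the comparison program does: loop until feof(). */
int count_with_feof(FILE *fp)
{
    int len = 0;
    float value;

    while (!feof(fp)) {
        /* The result is ignored, so a failed conversion at EOF is still counted. */
        fscanf(fp, "%f", &value);
        len++;
    }
    return len;
}

/* Counts only the values that fscanf() actually converted. */
int count_with_return_check(FILE *fp)
{
    int len = 0;
    float value;

    while (fscanf(fp, "%f", &value) == 1)
        len++;
    return len;
}

/* Hypothetical helper: writes text to a temporary file and rewinds it. */
FILE *make_temp_tensor(const char *text)
{
    FILE *fp = tmpfile();

    if (fp != NULL) {
        fputs(text, fp);
        rewind(fp);
    }
    return fp;
}
```

For a two-value file that ends in `\r\n`, `count_with_feof` returns 3 while `count_with_return_check` returns 2. When the last value is followed directly by EOF, both return 2. Checking the return value of `fscanf` would therefore make the reader independent of how the file ends.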

A tensor generated by the Acuity tools does not have this problem: EOF comes directly after the last number, so the element count is correct.

Therefore, to solve the problem, modify the program that converts dat to tensor so that the last line ends directly with EOF, with no carriage return or line feed written after it.

#include <stdio.h>

#include <stdlib.h>

#include <string.h>

#include <stddef.h>

#include <stdint.h>

#include <fcntl.h>

#include <unistd.h>

#include <sys/ioctl.h>

#define DBG(fmt, ...) do { printf("%s line %d, "fmt"\n", __func__, __LINE__, ##__VA_ARGS__); } while (0)

static void dump_memory(uint8_t *buf, int32_t len)

{

int i;

printf("\n\rdump file memory:");

for (i = 0; i < len; i ++)

{

if ((i % 16) == 0)

{

printf("\n\r%p: ", buf + i);

}

printf("0x%02x ", buf[i]);

}

printf("\n\r");

return;

}

static double uint8_to_fp32(uint8_t val, int32_t zeropoint, double scale)

{

double result = 0.0f;

result = (val - (uint8_t)zeropoint) * scale;

return result;

}

int main(int argc, char **argv)

{

FILE *file;

double scale;

int zeropoint;

DBG("in");

if(argc != 4)

{

DBG("input error, you should use this program like that: program xxxx.dat scale zeropoint.");

exit(-1);

}

scale = atof(argv[2]);

zeropoint = atoi(argv[3]);

DBG("scale %f, zeropoint %d.", scale, zeropoint);

file = fopen(argv[1], "rb");

if(file == NULL)

{

DBG("fatal error, open file %s failure, please check the file status.", argv[1]);

exit(-1);

}

fseek(file, 0, SEEK_END);

int filelen = ftell(file);

DBG("file %s len %d bytes.", argv[1], filelen);

unsigned char *p = malloc(filelen);

if(p == NULL)

{

DBG("malloc buffer failure for %s len %d.", argv[1], filelen);

exit(-1);

}

memset(p, 0x00, filelen);

fseek(file, 0, SEEK_SET);

if(fread(p, 1, filelen, file) != filelen)

{

DBG("read file failure, size wrong.");

exit(-1);

}

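/* Dump the first and last 32 bytes, or the whole file if it is shorter than that. */

int dumplen = filelen < 32 ? filelen : 32;

dump_memory(p, dumplen);

dump_memory(p + filelen - dumplen, dumplen);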

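char outputname[255];

/* snprintf() bounds the write, so a long input path cannot overflow outputname. */

if(snprintf(outputname, sizeof(outputname), "%s.tensor", argv[1]) >= (int)sizeof(outputname))

{

DBG("input file name %s is too long.", argv[1]);

exit(-1);

}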

DBG("output tensor name %s", outputname);

FILE *outensor = fopen(outputname, "w+");

if(outensor == NULL)

{

DBG("fatal error, create output tensor file %s failure, please check the file status.", outputname);

exit(-1);

}

// Dequantize, convert to text, and store.

#define BUF_SIZE 32

int iter;

double res;

char buf[BUF_SIZE];

int count;

for(iter = 0; iter < filelen; iter ++)

{

char *str;

memset(buf, 0x00, BUF_SIZE);

res = uint8_to_fp32(p[iter], zeropoint, scale);

#if 0

sprintf(buf, "%f\r\n", res);

str = buf;

#else

/* gcvt() is obsolete and takes no buffer size; snprintf() bounds the write to buf. */

int len = snprintf(buf, BUF_SIZE, "%.17g", res);

str = buf;

if(iter != filelen -1)

{

str[len ++] = '\r';

str[len ++] = '\n';

str[len ++] = '\0';

}

#endif

if((count = fwrite(str, 1, strlen(str), outensor))!= strlen(str))

{

DBG("write float to file error, count %d, strlen %zu.", count, strlen(str));

exit(-1);

}

}

fflush(outensor);

fsync(fileno(outensor));

fclose(outensor);

fclose(file);

free(p);

DBG("out");

return 0;

}

Here are the main changes: