Above This paper introduces the process of statistical strategy profit and loss distribution. This paper will use multi process to realize it. stay Previous article In this paper, the main difference of multi process implementation is that the main process needs to obtain the return value of sub processes.

Main code analysis

Create a new source file named data_center_v10.py, see the end of the text for all contents. V10 mainly involves three changes:

Modify profit and loss statistics function

def profit_loss_statistic(stock_codes, hold_days=10):

This function is used for profit and loss distribution statistics. It calculates that the candidate of the current day is True and the position is held_ Income distribution of days, where:

- Parameter stock_codes is the stock code to be analyzed

- Parameter hold_days is the number of days of position

- The return value is the stock DataFrame that meets the buying conditions

As a function called by the sub process, compared with v9 and v10, this function does not output data distribution tables and picture files, but returns the filtered stock data as the return value for the main process.

# Create database engine object

engine = create_mysql_engine()

# Create an empty DataFrame

candidate_df = pd.DataFrame()

# Calculate revenue distribution cycle

for index, code in enumerate(stock_codes):

print('({}/{})Processing{}...'.format(index + 1, len(stock_codes), code))

# Table name of stock data in database

table_name = '{}_{}'.format(code[3:], code[:2])

# Skip if there is no stock data in the database

if table_name not in sqlalchemy.inspect(engine).get_table_names():

continue

# Read specific field data from the database

cols = 'date, open, high, low, close, candidate'

sql_cmd = 'SELECT {} FROM {} ORDER BY date DESC'.format(cols, table_name)

df = pd.read_sql(sql=sql_cmd, con=engine)

# Move the opening price, lowest price and hold on the second day_ The highest price and lowest price data of days

df = shift_i(df, ['open'], 1, suffix='l')

df = shift_till_n(df, ['high', 'low'], hold_days, suffix='l')

# Discard recent hold_days data

df = df.iloc[hold_days: df.shape[0] - g_available_days_limit, :]

# Select the stocks with buying points

df = df[(df['candidate'] > 0) & (df['low_1l'] <= df['close'])]

# Add data to candidate pool

if df.shape[0]:

df['code'] = code

candidate_df = candidate_df.append(df)

if candidate_df.shape[0]:

# Calculate maximum profit

# It cannot be sold on the day of purchase, so the maximum return shall be calculated from the second day

cols = ['high_{}l'.format(x) for x in range(2, hold_days + 1)]

candidate_df['max_high'] = candidate_df[cols].max(axis=1)

candidate_df['max_profit'] = candidate_df['max_high'] / candidate_df[['open_1l', 'close']].min(axis=1) - 1

# Calculate maximum loss

cols = ['low_{}l'.format(x) for x in range(2, hold_days + 1)]

candidate_df['min_low'] = candidate_df[cols].min(axis=1)

candidate_df['max_loss'] = candidate_df['min_low'] / candidate_df[['open_1l', 'close']].min(axis=1) - 1

Statistics of profit and loss. The above code is the same as v9, which can be used for reference v9 analysis content.

return candidate_df

Return the DataFrame containing profit and loss statistics.

New multi process call collection return DataFrame function

def multiprocessing_func_df(func, args):

This function is used to call multiple processes to collect and return the dataframes returned by each child process, where:

- Parameter func is the function to be called by the child process

- The parameter args is a parameter of func. The type is tuple. The 0th element is the number of processes, and the 1st element is the stock code list. If func has other parameters, they will be arranged backward in turn

- The return value is the DataFrame containing the return value of each child process

This function mainly calls multiprocessing_func for multi process calculation. Since many processes are involved in the program to call the function of collecting DataFrame, this function is abstracted for easy use.

results = multiprocessing_func(func, args)

Multiple processes call func to get the list of objects returned by child processes.

df = pd.DataFrame()

Build an empty DataFrame.

for i in results:

df = df.append(i.get())

Collect the return values of each process, obtain the return values of child processes, and summarize them to df. Results is a list constructed by us. When creating sub processes, we apply the results of the process pool through the append method_ The return value of async method is added to the results list. Here, we call the get method for each element in the results list to obtain the return value of func function called by each child process, and finally summarize all results into df.

return df

Returns the DataFrame containing the return value of each child process.

Add multi process profit and loss statistics function

def profit_loss_statistic_mp(stock_codes, process_num=61, hold_days=10):

This function is used for multi process profit and loss distribution statistics. It calculates that the candidate of the current day is True and the position is held_ Income distribution of days, output data distribution tables and picture files, where:

- Parameter stock_codes is the stock code to be analyzed

- Parameter process_num is the number of processes

- Parameter hold_days is the number of days of position

- The return value is null

In the function profile_ loss_ In statistical, we complete the calculation of the sub process, and the candidate stock data is saved in candidate_df and return. In profile_ loss_ statistic_ In MP, we want to collect these candidates_ DF, then spliced, and finally counted the overall profit and loss distribution.

candidate_df = multiprocessing_func_df(profit_loss_statistic, (process_num, stock_codes, hold_days,))

Multi process calculation obtains candidate stock data and calls multiprocessing_func_df function, collect subprocesses and call profit_ loss_ The DataFrame data returned by the statistical function.

# Save income distribution data to excel file

candidate_df[['date', 'code', 'max_profit', 'max_loss']].to_excel(

'profit_loss_{}.xlsx'.format(hold_days), index=False, encoding='utf-8')

# Reset index for later drawing

candidate_df.reset_index(inplace=True)

# Format and plot

fig, ax = plt.subplots(1, 1)

table(ax, np.round(candidate_df[['max_profit', 'max_loss']].describe(), 4), loc='upper right',

colWidths=[0.2, 0.2, 0.2])

candidate_df[['max_profit', 'max_loss']].plot(ax=ax, legend=None)

# Save chart

fig.savefig('profit_loss_{}.png'.format(hold_days))

Output the profit and loss statistics chart. The above code is the same as v9 content, which can be used for reference v9 analysis content.

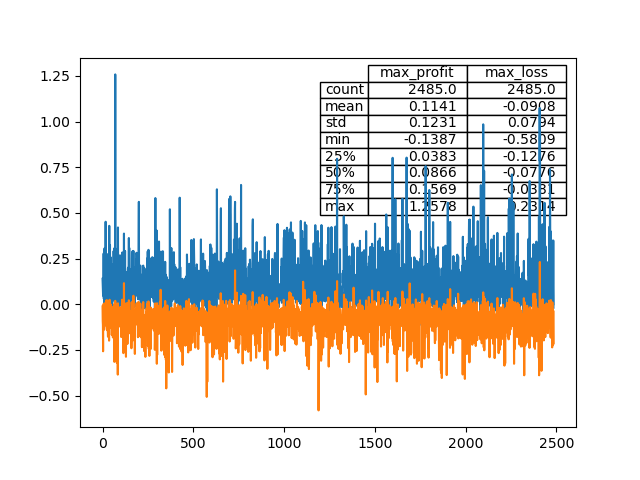

The results of drawing pictures are as follows:

Summary

This paper realizes the profit and loss distribution of multi process computing strategy, using multiprocessing Pool. apply_ Async calculates the child process. The child process has a return value. The main process obtains the return object (non return value) of the child process, and then uses the get method to obtain the value of the return object.

The calculation results obtained in this paper are consistent with Above Similarly, the strategy has a 50% probability of earning more than 8.7%. The stock data meeting the buying conditions are saved in Excel file In, readers can download, analyze and optimize by themselves.

The next article will document the process of updating data on a daily basis.

data_ center_ v10. The full code of Py is as follows:

import baostock as bs

import datetime

import sys

import numpy as np

import pandas as pd

import multiprocessing

import sqlalchemy

import matplotlib.pyplot as plt

from pandas.plotting import table

# Available daily line quantity constraints

g_available_days_limit = 250

# BaoStock daily data field

g_baostock_data_fields = 'date,open,high,low,close,preclose,volume,amount,adjustflag,turn,tradestatus,pctChg,peTTM,pbMRQ, psTTM,pcfNcfTTM,isST'

def create_mysql_engine():

"""

Create database engine object

:return: Newly created database engine object

"""

# Engine parameter information

host = 'localhost'

user = 'root'

passwd = '111111'

port = '3306'

db = 'db_quant'

# Create database engine object

mysql_engine = sqlalchemy.create_engine(

'mysql+pymysql://{0}:{1}@{2}:{3}'.format(user, passwd, host, port),

poolclass=sqlalchemy.pool.NullPool

)

# If database db does not exist_ Quant creates

mysql_engine.execute("CREATE DATABASE IF NOT EXISTS {0} ".format(db))

# Create connection database db_quant's engine object

db_engine = sqlalchemy.create_engine(

'mysql+pymysql://{0}:{1}@{2}:{3}/{4}?charset=utf8'.format(user, passwd, host, port, db),

poolclass=sqlalchemy.pool.NullPool

)

# Return engine object

return db_engine

def get_stock_codes(date=None, update=False):

"""

Gets the of the specified date A Stock code list

If parameter update by False,Indicates that the stock list is read from the database

If the table of stock list does not exist in the database, or update by True,Then download the specified date date List of traded stocks

If parameter date If it is blank, the date of the last trading day will be returned A Stock code list

If parameter date If it is not empty and is a trading day, return date Current day A Stock code list

If parameter date If it is not empty but not a trading day, the non trading day information will be printed and the program will exit

:param date: Date, default to None

:param update: Whether to update the stock list. The default is False

:return: A List of stock codes

"""

# Create database engine object

engine = create_mysql_engine()

# Table name of stock code in database

table_name = 'stock_codes'

# The stock code table does not exist in the database or needs to be updated

if table_name not in sqlalchemy.inspect(engine).get_table_names() or update:

# Log in to biostock

bs.login()

# Query stock data from BaoStock

stock_df = bs.query_all_stock(date).get_data()

# If the length of the acquired data is 0, it means that the date is not a trading day

if 0 == len(stock_df):

# If the parameter date is set, the print message indicates that date is a non trading day

if date is not None:

print('The currently selected date is a non trading day or there is no trading data, please set it date Is the date of a historical trading day')

sys.exit(0)

# If the parameter date is not set, the latest trading day will be found from the history. When the length of stock data obtained is not 0, the latest trading day will be found

delta = 1

while 0 == len(stock_df):

stock_df = bs.query_all_stock(datetime.date.today() - datetime.timedelta(days=delta)).get_data()

delta += 1

# Logout login

bs.logout()

# Screening stock data, the stock codes of Shanghai Stock Exchange and Shenzhen Stock Exchange are sh.600000 and Sz Between 39900

stock_df = stock_df[(stock_df['code'] >= 'sh.600000') & (stock_df['code'] < 'sz.399000')]

# Write stock code to database

stock_df.to_sql(name=table_name, con=engine, if_exists='replace', index=False, index_label=False)

# Return to stock list

return stock_df['code'].tolist()

# Read the stock code list from the database

else:

# sql statement to be executed

sql_cmd = 'SELECT {} FROM {}'.format('code', table_name)

# Read the sql and return the stock list

return pd.read_sql(sql=sql_cmd, con=engine)['code'].tolist()

def create_data(stock_codes, from_date='1990-12-19', to_date=datetime.date.today().strftime('%Y-%m-%d'),

adjustflag='2'):

"""

Download the daily data of the specified stock within the specified date and calculate the expansion factor

:param stock_codes: Stock code of data to be downloaded

:param from_date: Daily line start date

:param to_date: Daily line end date

:param adjustflag: Option 1: Post reinstatement option 2: pre reinstatement option 3: no reinstatement option, default to pre reinstatement option

:return: None

"""

# Create database engine object

engine = create_mysql_engine()

# Download stock cycle

for code in stock_codes:

print('Downloading{}...'.format(code))

# Log in to BaoStock

bs.login()

# Download daily data

out_df = bs.query_history_k_data_plus(code, g_baostock_data_fields, start_date=from_date, end_date=to_date,

frequency='d', adjustflag=adjustflag).get_data()

# Eliminate stop disk data

if out_df.shape[0]:

out_df = out_df[(out_df['volume'] != '0') & (out_df['volume'] != '')]

# If the data is empty, it is not created

if not out_df.shape[0]:

continue

# Delete duplicate data

out_df.drop_duplicates(['date'], inplace=True)

# Daily data is less than g_available_days_limit, do not create

if out_df.shape[0] < g_available_days_limit:

continue

# Convert numerical data to float type for subsequent processing

convert_list = ['open', 'high', 'low', 'close', 'preclose', 'volume', 'amount', 'turn', 'pctChg']

out_df[convert_list] = out_df[convert_list].astype(float)

# Reset index

out_df.reset_index(drop=True, inplace=True)

# Calculate expansion factor

out_df = extend_factor(out_df)

# Write to database

table_name = '{}_{}'.format(code[3:], code[:2])

out_df.to_sql(name=table_name, con=engine, if_exists='replace', index=True, index_label='id')

def get_code_group(process_num, stock_codes):

"""

Get code groups for multi process computing, and each process processes a set of stocks

:param process_num: Number of processes

:param stock_codes: Stock code to be processed

:return: Stock code list after grouping. Each element of the list is a list of stock codes

"""

# Create an empty group

code_group = [[] for i in range(process_num)]

# Allocate shares to each group by the remainder

for index, code in enumerate(stock_codes):

code_group[index % process_num].append(code)

return code_group

def multiprocessing_func(func, args):

"""

Multiprocess call function

:param func: Function name

:param args: func The type is tuple, the 0 th element is the number of processes, and the 1 st element is the stock code list

:return: Contains a list of objects returned by each child process

"""

# Used to save the list of objects returned by each child process

results = []

# Create process pool

with multiprocessing.Pool(processes=args[0]) as pool:

# Multi process asynchronous computing

for codes in get_code_group(args[0], args[1]):

results.append(pool.apply_async(func, args=(codes, *args[2:],)))

# Prevent subsequent tasks from being submitted to the process pool

pool.close()

# Wait for all processes to finish

pool.join()

return results

def create_data_mp(stock_codes, process_num=61,

from_date='1990-12-19', to_date=datetime.date.today().strftime('%Y-%m-%d'), adjustflag='2'):

"""

Use multiple processes to create daily data of specified stocks on a specified date and calculate the expansion factor

:param stock_codes: Stock code of data to be created

:param process_num: Number of processes

:param from_date: Daily line start date

:param to_date: Daily line end date

:param adjustflag: Option 1: Post reinstatement option 2: pre reinstatement option 3: no reinstatement option, default to pre reinstatement option

:return: None

"""

multiprocessing_func(create_data, (process_num, stock_codes, from_date, to_date, adjustflag,))

def extend_factor(df):

"""

Calculate expansion factor

:param df: Expansion factor to be calculated DataFrame

:return: With expansion factor DataFrame

"""

# Use pipe to calculate the daily limit, double God and whether it is a candidate stock

df = df.pipe(zt).pipe(ss, delta_days=30).pipe(candidate)

return df

def zt(df):

"""

Calculate the limit factor

If the limit rises, the factor is True,Otherwise False

The closing price of the current day was 9% higher than the closing price of the previous day.8%And above as the trading judgment standard

:param df: Expansion factor to be calculated DataFrame

:return: With expansion factor DataFrame

"""

df['zt'] = np.where((df['close'].values >= 1.098 * df['preclose'].values), True, False)

return df

def shift_i(df, factor_list, i, fill_value=0, suffix='a'):

"""

Calculate the movement factor for obtaining the front i Later or later i Daily factor

:param df: Expansion factor to be calculated DataFrame

:param factor_list: List of factors to be moved

:param i: Steps moved

:param fill_value: For filling NA The default value is 0

:param suffix: Value is a(ago)When, it means that the historical data obtained by the mobile is used to calculate the index; Value is l(later)When, it means to obtain future data for calculating income

:return: With expansion factor DataFrame

"""

# Select the column that needs to be shifted to form a new DataFrame for shift operation

shift_df = df[factor_list].shift(i, fill_value=fill_value)

# Rename the new DataFrame column

shift_df.rename(columns={x: '{}_{}{}'.format(x, i, suffix) for x in factor_list}, inplace=True)

# Merge the renamed DataFrame into the original DataFrame

df = pd.concat([df, shift_df], axis=1)

return df

def shift_till_n(df, factor_list, n, fill_value=0, suffix='a'):

"""

Calculation range shift factor

Used to get pre/after n The correlation factor within the day is called internally shift_i

:param df: Expansion factor to be calculated DataFrame

:param factor_list: List of factors to be moved

:param n: Move steps range

:param fill_value: For filling NA The default value is 0

:param suffix: Value is a(ago)When, it means that the historical data obtained by the mobile is used to calculate the index; Value is l(later)When, it means to obtain future data for calculating income

:return: With expansion factor DataFrame

"""

for i in range(n):

df = shift_i(df, factor_list, i + 1, fill_value, suffix)

return df

def ss(df, delta_days=30):

"""

Calculate the double God factor, that is, the two trading limits of the interval

If double gods are formed on that day, the factor is True,Otherwise False

:param df: Expansion factor to be calculated DataFrame

:param delta_days: The time between two daily limits cannot exceed this value, otherwise it will not be judged as double God, and the default value is 30

:return: With expansion factor DataFrame

"""

# Move the limit factor to get the near Delta_ The daily limit within days is saved in a temporary DataFrame

temp_df = shift_till_n(df, ['zt'], delta_days, fill_value=False)

# Generate a list for subsequent retrieval from day 2 to delta_ Are there any daily limit days ago

col_list = ['zt_{}a'.format(x) for x in range(2, delta_days + 1)]

# To calculate double gods, three conditions shall be met at the same time:

# 1. Day 2 to delta_days days ago, there was at least one daily limit

# 2. 1 day ago was not the daily limit (otherwise, it was the continuous daily limit, not the interval daily limit)

# 3. The day is the daily limit

df['ss'] = temp_df[col_list].any(axis=1) & ~temp_df['zt_1a'] & temp_df['zt']

return df

def ma(df, n=5, factor='close'):

"""

Calculate mean square factor

:param df: Expansion factor to be calculated DataFrame

:param n: The period of the moving average to be calculated. The 5-day moving average is calculated by default

:param factor: The factor of the moving average to be calculated is the closing price by default

:return: With expansion factor DataFrame

"""

# The name of the moving average, for example, the name of the 5-day moving average of the closing price is ma_5. The name of the 5-day moving average of trading volume is volume_ ma_ five

name = '{}ma_{}'.format('' if 'close' == factor else factor + '_', n)

# Take the factor column of the moving average to be calculated

s = pd.Series(df[factor], name=name, index=df.index)

# Using rolling and mean to calculate the moving average data

s = s.rolling(center=False, window=n).mean()

# Add the moving average data to the original DataFrame

df = df.join(s)

# The average value shall be kept to two decimal places

df[name] = df[name].apply(lambda x: round(x + 0.001, 2))

return df

def mas(df, ma_list, factor='close'):

"""

Calculate multiple moving average factors, internal call ma Calculate a single moving average

:param df: Expansion factor to be calculated DataFrame

:param ma_list: List of periods for which the moving average is to be calculated

:param factor: The factor of the moving average to be calculated is the closing price by default

:return: With expansion factor DataFrame

"""

for i in ma_list:

df = ma(df, i, factor)

return df

def cross_mas(df, ma_list):

"""

Calculate the average crossing factor

If the lowest price of the day is not higher than the moving average price

And the closing price of the day shall not be lower than the moving average price

Then the daily moving average factor value is True,Otherwise False

:param df: Expansion factor to be calculated DataFrame

:param ma_list: Period list of moving average

:return: With expansion factor DataFrame

"""

for i in ma_list:

df['cross_{}'.format(i)] = (df['low'] <= df['ma_{}'.format(i)]) & (

df['ma_{}'.format(i)] <= df['close'])

return df

def candidate(df):

"""

Calculate whether it is a candidate

If the daily line crosses the 5, 10, 20 and 30 day moving average at the same time

And the 30 day moving average is above the 60 day moving average

And form double gods on that day

Then the current day is the candidate, and the factor value is True,Otherwise False

:param df: Expansion factor to be calculated DataFrame

:return: With expansion factor DataFrame

"""

# Moving average period list

ma_list = [5, 10, 20, 30, 60]

# Calculate the factor of the moving average and save it to the temporary DataFrame

temp_df = mas(df, ma_list)

# Calculate the multi thread factor and save it to the temporary DataFrame

temp_df = cross_mas(temp_df, ma_list)

# List of column names of threading factors

column_list = ['cross_{}'.format(x) for x in ma_list[:-1]]

# Calculate whether it is a candidate

df['candidate'] = temp_df[column_list].all(axis=1) & (temp_df['ma_30'] >= temp_df['ma_60']) & df['ss']

return df

def profit_loss_statistic(stock_codes, hold_days=10):

"""

Profit and loss distribution statistics, calculated on the current day candidate by True,Position hold_days Day's income distribution

:param stock_codes: Stock code to be analyzed

:param hold_days: Position days

:return: Select the stocks that meet the buying conditions DataFrame

"""

# Create database engine object

engine = create_mysql_engine()

# Create an empty DataFrame

candidate_df = pd.DataFrame()

# Calculate revenue distribution cycle

for index, code in enumerate(stock_codes):

print('({}/{})Processing{}...'.format(index + 1, len(stock_codes), code))

# Table name of stock data in database

table_name = '{}_{}'.format(code[3:], code[:2])

# Skip if there is no stock data in the database

if table_name not in sqlalchemy.inspect(engine).get_table_names():

continue

# Read specific field data from the database

cols = 'date, open, high, low, close, candidate'

sql_cmd = 'SELECT {} FROM {} ORDER BY date DESC'.format(cols, table_name)

df = pd.read_sql(sql=sql_cmd, con=engine)

# Move the opening price, lowest price and hold on the second day_ The highest price and lowest price data of days

df = shift_i(df, ['open'], 1, suffix='l')

df = shift_till_n(df, ['high', 'low'], hold_days, suffix='l')

# Discard recent hold_days data

df = df.iloc[hold_days: df.shape[0] - g_available_days_limit, :]

# Select the stocks with buying points

df = df[(df['candidate'] > 0) & (df['low_1l'] <= df['close'])]

# Add data to candidate pool

if df.shape[0]:

df['code'] = code

candidate_df = candidate_df.append(df)

if candidate_df.shape[0]:

# Calculate maximum profit

# It cannot be sold on the day of purchase, so the maximum return shall be calculated from the second day

cols = ['high_{}l'.format(x) for x in range(2, hold_days + 1)]

candidate_df['max_high'] = candidate_df[cols].max(axis=1)

candidate_df['max_profit'] = candidate_df['max_high'] / candidate_df[['open_1l', 'close']].min(axis=1) - 1

# Calculate maximum loss

cols = ['low_{}l'.format(x) for x in range(2, hold_days + 1)]

candidate_df['min_low'] = candidate_df[cols].min(axis=1)

candidate_df['max_loss'] = candidate_df['min_low'] / candidate_df[['open_1l', 'close']].min(axis=1) - 1

return candidate_df

def multiprocessing_func_df(func, args):

"""

Multiple processes call functions to collect the information returned by each child process DataFrame

:param func: Function name

:param args: func The type is tuple, the 0 th element is the number of processes, and the 1 st element is the stock code list

:return: Contains the returned value of each child process DataFrame

"""

# Multiple processes call func to get the list of objects returned by child processes

results = multiprocessing_func(func, args)

# Building an empty DataFrame

df = pd.DataFrame()

# Collect the return value of each process

for i in results:

df = df.append(i.get())

return df

def profit_loss_statistic_mp(stock_codes, process_num=61, hold_days=10):

"""

Multi process profit and loss distribution statistics, calculate the current day candidate by True,Position hold_days Day's income distribution

Output data distribution tables and picture files

:param stock_codes: Stock code to be analyzed

:param process_num: Number of processes

:param hold_days: Position days

:return: None

"""

# Multi process calculation to obtain candidate stock data

candidate_df = multiprocessing_func_df(profit_loss_statistic, (process_num, stock_codes, hold_days,))

# Save income distribution data to excel file

candidate_df[['date', 'code', 'max_profit', 'max_loss']].to_excel(

'profit_loss_{}.xlsx'.format(hold_days), index=False, encoding='utf-8')

# Reset index for later drawing

candidate_df.reset_index(inplace=True)

# Format and plot

fig, ax = plt.subplots(1, 1)

table(ax, np.round(candidate_df[['max_profit', 'max_loss']].describe(), 4), loc='upper right',

colWidths=[0.2, 0.2, 0.2])

candidate_df[['max_profit', 'max_loss']].plot(ax=ax, legend=None)

# Save chart

fig.savefig('profit_loss_{}.png'.format(hold_days))

# Display chart

plt.show()

if __name__ == '__main__':

stock_codes = get_stock_codes()

profit_loss_statistic_mp(stock_codes)

Blog content is only used for communication and learning, does not constitute investment suggestions, and is responsible for its own profits and losses!

Personal blog: https://coderx.com.cn/ (priority update)

Latest project code: https://gitee.com/sl/quant_from_scratch

Welcome to forward and leave messages. Wechat group has been established for learning and communication. Group 1 is full and group 2 has been created. Interested readers please scan the code and add wechat!