Go microservice architecture practice - [WeChat official account: Stack Future]


This series focuses on hands-on microservice architecture in the cloud-native space, covering gateways, k8s, etcd, gRPC and related technologies. Along the way it adds service registration and discovery, circuit breaking, degradation, rate limiting and distributed locking as supplementary topics. Toward the end, the series covers distributed tracing frameworks, building a monitoring platform, gray (canary) releases and other technical services. Overall, it spans a wide range of technologies. Take what you need, aim to become familiar with it and apply it, and dig into the source code when you have time. Enjoy!

Part I is complete, and its articles are listed below. Part II starts this week. These articles take a lot of effort to produce, so your support is much appreciated!

Go microservice architecture in practice: table of contents

1. Microservice architecture, Part I

1. Introduction to gRPC

2. gRPC + protobuf + gateway in practice

3. Introduction to etcd

4. Service discovery and registration based on etcd

5. Distributed locks based on etcd in practice

2. Microservice architecture, Part II

1. Introduction to the k8s architecture

2. Container deployment with Pods and Deployments

In k8s, resource objects are typically declared in YAML files. k8s provides the command-line tool kubectl, which talks to the API server to create the corresponding resource objects.

Our project consists of a server, a client, and etcd, which serves as the service registration and discovery center.

Previously we used supervisor to manage the service processes on bare-metal machines, which is simple and direct. Now we will try deploying them with k8s.

1. Steps to create a server pod

- First, to deploy the server in a container we need a Dockerfile. Here is the server's Dockerfile:

```dockerfile
# Build stage: compile the server using the official golang image
FROM golang AS build-env
ADD . /go/src/app
WORKDIR /go/src/app
RUN GOOS=linux GOARCH=386 go build -mod vendor cmd/svr/svr.go

# Runtime stage: copy only the compiled binary into a small alpine image
FROM alpine
COPY --from=build-env /go/src/app/svr /usr/local/bin/svr

# Run the service; -port sets the listening port
CMD [ "svr", "-port", "50009" ]
```

- Build the image with docker build: `docker build -t k8s-grpc-demo -f ./Dockerfile .`

- Write the server's Deployment manifest (server.yaml) using the image just built:

```yaml
apiVersion: apps/v1
kind: Deployment                 # a Deployment manages a set of Pods
metadata:
  name: k8sdemo-deploy           # Deployment name (its Pods are named after it)
  labels:
    app: k8sdemo
spec:
  replicas: 1                    # run one replica
  selector:
    matchLabels:
      app: k8sdemo
  template:
    metadata:
      labels:
        app: k8sdemo             # Pod label; a Service selects Pods by label to load-balance
    spec:
      containers:
        - name: k8sdemo                  # container name
          image: k8s-grpc-demo:latest    # the locally built image
          imagePullPolicy: Never         # never pull; use the local image
          ports:
            - containerPort: 50007       # container port
```

- Create the Deployment (and therefore its pod) with kubectl: `kubectl apply -f server.yaml`. Because no namespace is specified, it is created in the `default` namespace.


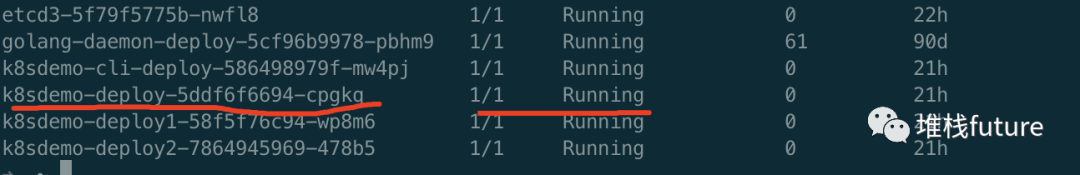

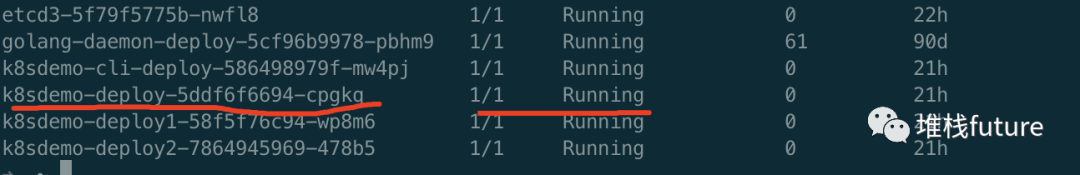

- After creation, check whether the pod is up with `kubectl get pod`.

The server's pod is now running.


To test with several server instances, we make copies of the Dockerfile and server.yaml (Dockerfile1, Dockerfile2, server1.yaml and server2.yaml). Their configuration is not reproduced here; see the GitHub repository.
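The server Dockerfile starts the binary as `svr -port 50009`. The server's flag handling is not shown in this article; as a sketch, assuming it uses Go's standard `flag` package, parsing could look like this (the `parsePort` helper and its default of 50009 are hypothetical):

```go
package main

import (
	"flag"
	"fmt"
	"os"
)

// parsePort reads the -port flag from args. Hypothetical helper: the real
// svr.go may parse its flags differently.
func parsePort(args []string) (int, error) {
	fs := flag.NewFlagSet("svr", flag.ContinueOnError)
	port := fs.Int("port", 50009, "port the gRPC server listens on")
	if err := fs.Parse(args); err != nil {
		return 0, err
	}
	return *port, nil
}

func main() {
	// With CMD [ "svr", "-port", "50009" ], os.Args[1:] is ["-port", "50009"].
	port, err := parsePort(os.Args[1:])
	if err != nil {
		fmt.Fprintln(os.Stderr, err)
		os.Exit(2)
	}
	fmt.Println("listening on port", port)
}
```

Using a `flag.FlagSet` with `ContinueOnError` instead of the global `flag.Parse` keeps the parsing testable: a bad value returns an error rather than exiting the process.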

2. Steps to create the client pod

- Create the client's Dockerfile (Dockerfile3):

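```dockerfile
# Build stage: compile the client
FROM golang AS build-env
ADD . /go/src/app
WORKDIR /go/src/app
RUN GOOS=linux GOARCH=386 go build -mod vendor cmd/cli/cli.go

# Runtime stage: copy only the client binary into alpine
FROM alpine
COPY --from=build-env /go/src/app/cli /usr/local/bin/cli
CMD [ "cli" ]
```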

- Build the image: `docker build -t k8s-grpc-demo3 -f ./Dockerfile3 .`

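- Write the client's Deployment manifest (client.yaml) using the image just built. Note that it reuses the server's `app: k8sdemo` label; the Kubernetes documentation warns that Deployments with overlapping selectors can conflict, so a distinct label (for example `app: k8sdemo-cli`) would be safer: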

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: k8sdemo-cli-deploy       # client Deployment name
  labels:
    app: k8sdemo
spec:
  replicas: 1
  selector:
    matchLabels:
      app: k8sdemo
  template:
    metadata:
      labels:
        app: k8sdemo
    spec:
      containers:
        - name: k8sdemocli
          image: k8s-grpc-demo3:latest   # the image just built
          imagePullPolicy: Never
          ports:
            - containerPort: 50010
```

- Create the Deployment with kubectl: `kubectl apply -f client.yaml`. Because no namespace is specified, it is created in the `default` namespace.

- After creation, check whether the pod is up with `kubectl get pod`.

The client's pod is now running.


3. Steps to create a pod for etcd

Because we use the official etcd image (quay.io/coreos/etcd), we don't need to build it ourselves. Here is the YAML of the etcd Deployment that creates the pod:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: etcd3                    # etcd Deployment name
  labels:
    name: etcd3
spec:
  replicas: 1
  selector:
    matchLabels:
      app: etcd3
  template:
    metadata:
      labels:
        app: etcd3
    spec:
      containers:
        - name: etcd3                          # container name
          image: quay.io/coreos/etcd:latest    # etcd image
          imagePullPolicy: IfNotPresent
          volumeMounts:
            - mountPath: /data                 # data directory mount path
              name: etcd-data
          env:
            - name: host_ip
              valueFrom:
                fieldRef:
                  fieldPath: status.podIP
          command: ["/bin/sh", "-c"]
          # Start etcd. The lines below fold into one shell command string;
          # --name sets this member's name within the etcd cluster.
          args:
            - /usr/local/bin/etcd
              --name etcd3
              --initial-advertise-peer-urls http://0.0.0.0:2380
              --listen-peer-urls http://0.0.0.0:2380
              --listen-client-urls http://0.0.0.0:2379
              --advertise-client-urls http://0.0.0.0:2379
              --initial-cluster-token etcd-cluster-1
              --initial-cluster etcd3=http://0.0.0.0:2380
              --initial-cluster-state new
              --data-dir=/data
      volumes:
        - name: etcd-data
          emptyDir: {}                         # scratch volume that lives as long as the Pod
```

Then create the pod directly with kubectl:

`kubectl apply -f etc.yaml`

Check with `kubectl get pod`: the etcd pod is running.


However, a pod on its own does not give other pods a stable address for service registration and discovery (a pod's IP changes whenever the pod is recreated), so we need a Service that exposes the etcd pod inside the cluster. The etcd Service is defined as follows:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: etcd3          # Service name; other pods reach etcd through this DNS name
spec:
  ports:
    - name: client
      port: 2379       # client port the Service exposes to pods in the cluster
      targetPort: 2379
    - name: etcd3
      port: 2380       # peer (server-to-server) port
      targetPort: 2380
  selector:
    app: etcd3         # selects the etcd pod created above
```
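Inside the cluster, the Service name `etcd3` is resolved by the cluster DNS. As a sketch (the `serviceEndpoint` helper is hypothetical), the short name works from pods in the same namespace, while the fully qualified form follows the Kubernetes pattern `<service>.<namespace>.svc.<cluster-domain>`, where the cluster domain is commonly, but not always, `cluster.local`:

```go
package main

import (
	"fmt"
	"net"
	"strconv"
)

// serviceEndpoint builds an http URL for a Kubernetes Service.
// With an empty namespace it uses the short Service name, which resolves
// only from pods in the same namespace. clusterDomain is usually
// "cluster.local" but is configurable per cluster.
func serviceEndpoint(service, namespace, clusterDomain string, port int) string {
	host := service
	if namespace != "" {
		host = fmt.Sprintf("%s.%s.svc.%s", service, namespace, clusterDomain)
	}
	return "http://" + net.JoinHostPort(host, strconv.Itoa(port))
}

func main() {
	fmt.Println(serviceEndpoint("etcd3", "", "", 2379))                     // http://etcd3:2379
	fmt.Println(serviceEndpoint("etcd3", "default", "cluster.local", 2379)) // http://etcd3.default.svc.cluster.local:2379
}
```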

Check that the Service was created (for example with `kubectl get svc`). Bingo. The server, the client and the etcd registration and discovery center are now all deployed.


Next, we make a few small code changes to get service registration and discovery working.

3. Service registration

Each pod has its own IP address, so before registering the service we need to obtain the pod's IP. The code is as follows:

```go
// GetLocalIP returns the IP of the local eth0 interface.
// See the repository source code for the implementation.
func GetLocalIP() string
```

Then two places need to be changed:

1. The registry (etcd) address changes from localhost to http://etcd3:2379. The reason, briefly: localhost is shared only by containers in the same pod, so different pods cannot reach each other through localhost. A pod that needs to talk to etcd must therefore use the name of the etcd Service (svc). The cluster DNS automatically resolves that name to the Service's IP, which lets the pod reach the etcd registry.

2. The server no longer listens on localhost by default; it listens on the IP obtained from the local interface instead.

The code changes for 1 and 2 are as follows:
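The exact changes are in the repository linked at the end. As a minimal sketch using only the standard library (the names `registryEndpoint` and `listenAddr` are hypothetical), change 1 swaps the registry endpoint and change 2 builds the listen address from an IP instead of localhost:

```go
package main

import (
	"fmt"
	"net"
	"strconv"
)

// registryEndpoint is the etcd Service address used from inside the cluster
// (change 1: previously a localhost address).
const registryEndpoint = "http://etcd3:2379"

// listenAddr returns "host:port" for the server socket (change 2).
// net.JoinHostPort also brackets IPv6 addresses correctly.
func listenAddr(ip string, port int) string {
	return net.JoinHostPort(ip, strconv.Itoa(port))
}

func main() {
	// In the pod, ip would come from GetLocalIP(); 127.0.0.1 is used here so
	// the example also runs outside Kubernetes. Port 0 lets the OS pick a port.
	lis, err := net.Listen("tcp", listenAddr("127.0.0.1", 0))
	if err != nil {
		panic(err)
	}
	defer lis.Close()
	fmt.Println("registry:", registryEndpoint)
	fmt.Println("listening on", lis.Addr())
}
```

What matters most is that the address written to etcd is the pod IP, because that is the address clients will dial.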

4. Client discovery

The client also needs its registry address changed in the same way:

5. Verification

Because the code has changed, we need to rebuild the images and redeploy them using the steps above.

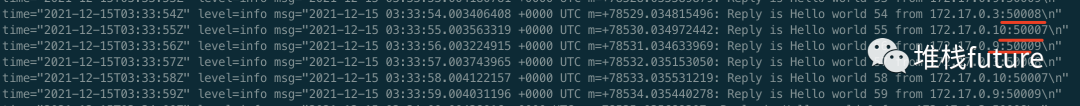

After redeploying and starting the client, the log shows responses from multiple servers, served in round-robin (RR) order. Here is the pod list for the client and servers:

The client logs are as follows:

At this point, our k8s-based service deployment and service registry are set up. Upcoming articles will cover failover, rolling updates, scaling out and more. Feel free to follow, share and like.

GitHub repository:

https://github.com/guojiangli/k8s-grpc-demo

Stack future

Helping coders who feel lost find their way: building with the stack, contributing to the present and benefiting the future.
