TCN from "ABA ABA" to "balabalabala"

- The concept of TCN (why? What problems can be solved)

- TCN's parents (origin)

- Introduction to the principle of TCN

- Code!

1. What is TCN (temporal convolutional network) and what can it do

Main application areas:

Time series forecasting, probabilistic forecasting, time prediction, and traffic forecasting

2. Origin of TCN

P.S. Before studying TCN, you should have a basic understanding of CNNs and RNNs.

- Problems it handles:

TCN is a network architecture for processing time series data. Under certain conditions it performs better than traditional neural networks (RNN, CNN, etc.).

3. Introduction to the principle of TCN

Network structure of TCN

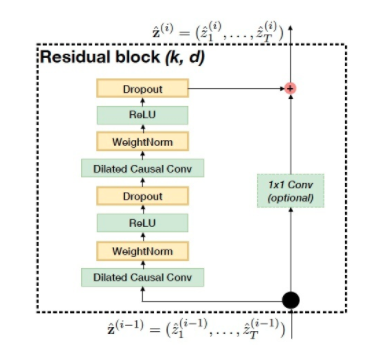

1, The basic building block of TCN is shown in the figure above. It has two branches, the left and the right. First, the left:

Dilated Causal Conv ---> WeightNorm ---> ReLU ---> Dropout ---> Dilated Causal Conv ---> WeightNorm ---> ReLU ---> Dropout

This is clearly the same sub-block applied twice:

(Dilated Causal Conv ---> WeightNorm ---> ReLU ---> Dropout) * 2

OK, let's explain these four components one by one. If you already know them, feel free to skip ahead.

1, Dilated Causal Conv

Dilated causal convolution

Dilated causal convolution can be broken down into three parts: dilation, causality, and convolution.

Convolution is the same operation as in a CNN: the convolution kernel slides over the data;

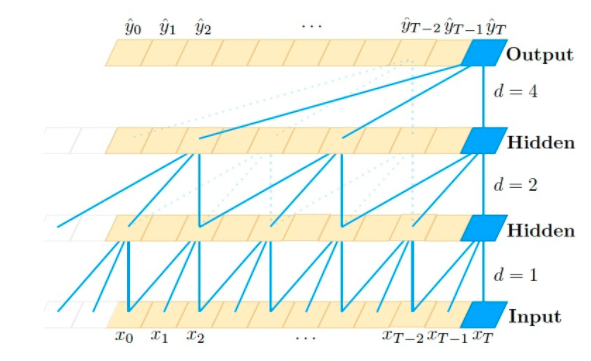

Dilation means that the convolution samples its input at intervals. This resembles the stride in a convolutional neural network, but there is a clear difference: a stride skips output positions, whereas dilation spaces out the input positions that the kernel reads.

Picture Description:

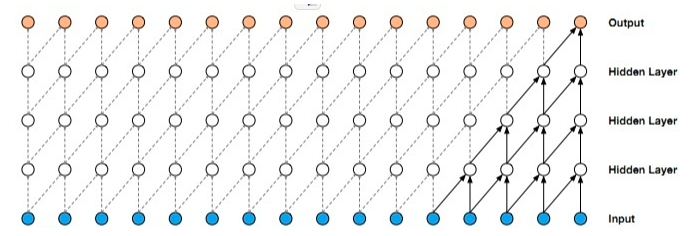

Causality means that the value at time t in layer i depends only on the values at time t and earlier in layer (i-1). Causal convolution therefore never reads future data during training. It is a strictly time-constrained model.

Picture Description:

(P.S. dilation is not applied in this figure)
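To make the three ideas above concrete, here is a minimal pure-Python sketch of a single-channel dilated causal convolution. The function name `dilated_causal_conv1d` and the toy input are illustrative assumptions, not part of the tutorial's code; the actual model below uses `nn.Conv1D` plus `Chomp1d`.

```python
def dilated_causal_conv1d(x, kernel, dilation=1):
    """Single-channel dilated causal convolution (illustrative sketch).

    Output t is sum_j kernel[j] * x[t - (k - 1 - j) * dilation],
    where positions before the start of the sequence count as zero.
    """
    k = len(kernel)
    pad = (k - 1) * dilation
    # Padding only on the left means output t never reads x[t + 1:].
    padded = [0.0] * pad + list(x)
    out = []
    for t in range(len(x)):
        acc = 0.0
        for j in range(k):
            acc += kernel[j] * padded[t + j * dilation]
        out.append(acc)
    return out


# With kernel [1, 1] and dilation 2, each output is x[t - 2] + x[t].
print(dilated_causal_conv1d([1, 2, 3, 4, 5], [1, 1], dilation=2))
# [1.0, 2.0, 4.0, 6.0, 8.0]
```

Changing a later input value leaves all earlier outputs unchanged, which is exactly the causality constraint described above.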

2, WeightNorm

Weight normalization

Normalizes the weight values. If you want to study the normalization process and formula in detail, follow the link to learn more.

Advantages:

1. Low time overhead, so it runs fast!

2. Introduces less noise

3. WeightNorm speeds up training by reparameterizing the weights of a deep network, without introducing any dependence on the minibatch

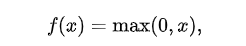

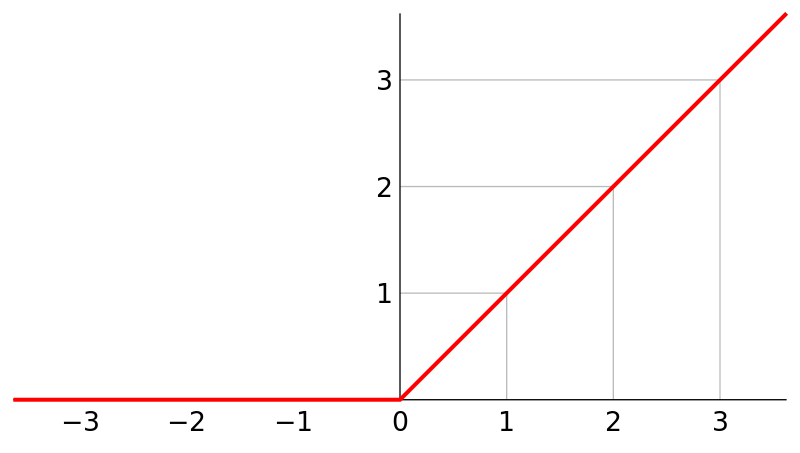
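As a rough illustration of the reparameterization, weight normalization expresses a weight vector as w = g * v / ||v||, so the length g and the direction v are learned separately. The sketch below assumes a single weight vector and uses a hypothetical helper name; `paddle.nn.utils.weight_norm` applies the same idea to a layer's weight tensor.

```python
import math


def weight_norm_vector(v, g):
    """Return w = g * v / ||v|| for one weight vector (illustrative sketch)."""
    norm = math.sqrt(sum(c * c for c in v))
    return [g * c / norm for c in v]


w = weight_norm_vector([3.0, 4.0], g=2.0)
print(w)  # [1.2, 1.6]
# The length of w equals g no matter how long v is.
print(math.sqrt(sum(c * c for c in w)))
```

Because the norm of w is set only by g, no minibatch statistics are needed, which matches the third advantage listed above.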

3, ReLU()

An activation function

Advantages:

1. It speeds up the training of the network

2. It adds nonlinearity to the network and improves the expressive power of the model

3. It mitigates vanishing gradients

4. It makes the network's activations sparse, etc.

Formula: f(x) = max(0, x)

Overview diagram:

4, Dropout()

Dropout means that, during the training of a deep learning network, neural network units are temporarily dropped from the network with a certain probability.

Advantage: it helps prevent overfitting
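A minimal sketch of dropout on a plain list of activations follows. It assumes the "inverted" variant, where the kept units are scaled up by 1 / (1 - p) during training so that nothing needs to change at prediction time; the function name and the `rng` parameter are illustrative, not taken from the tutorial's code.

```python
import random


def dropout(values, p=0.2, training=True, rng=random):
    """Inverted dropout on a list of activations (illustrative sketch)."""
    if not training or p == 0.0:
        # At prediction time every unit is kept unchanged.
        return list(values)
    keep = 1.0 - p
    # Each unit is zeroed with probability p; survivors are scaled by 1 / keep.
    return [v / keep if rng.random() >= p else 0.0 for v in values]


rng = random.Random(0)
print(dropout([1.0, 1.0, 1.0, 1.0, 1.0], p=0.2, rng=rng))
print(dropout([1.0, 2.0, 3.0], p=0.2, training=False))  # [1.0, 2.0, 3.0]
```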

2, Finally, the right branch, the residual connection:

On the right is a 1 * 1 convolution block. It lets the network pass information across layers, and it also makes the input match the shape of the output so that the two can be added.
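The role of the 1 * 1 convolution can be sketched in plain Python. Here a signal is a list of channels, each a list over time, and the 1 * 1 convolution is just a per-time-step linear map across channels. The names `conv1x1` and `residual_block` and the toy `branch` function are assumptions for illustration only.

```python
def conv1x1(x, weight):
    """1x1 convolution: weight[o][i] mixes input channel i into output channel o."""
    steps = len(x[0])
    return [[sum(w * ch[t] for w, ch in zip(row, x)) for t in range(steps)]
            for row in weight]


def residual_block(x, branch, weight=None):
    """Return relu(branch(x) + shortcut(x)) for a [channels][time] signal."""
    out = branch(x)
    # The shortcut is the identity unless the channel count has to change.
    res = x if weight is None else conv1x1(x, weight)
    return [[max(0.0, o + r) for o, r in zip(out_ch, res_ch)]
            for out_ch, res_ch in zip(out, res)]


x = [[1.0, -2.0, 3.0]]  # 1 channel, 3 time steps


def branch(sig):
    # Stand-in for the left branch: maps 1 channel to 2 channels.
    return [[0.5 * v for v in sig[0]], [-1.0 * v for v in sig[0]]]


print(residual_block(x, branch, weight=[[1.0], [1.0]]))
# [[1.5, 0.0, 4.5], [0.0, 0.0, 0.0]]
```

Without the 1 * 1 convolution, the one-channel input could not be added to the two-channel output of the left branch.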

3, Advantages of TCN:

1. Parallelism

2. Vanishing and exploding gradients are largely avoided

3. The receptive field is larger, so more information is learned

4. Code
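The point about the receptive field in the list of advantages can be made concrete with a short calculation. The sketch below assumes the layout used in the code that follows: each level contains two dilated convolutions, and the dilation doubles from one level to the next (1, 2, 4, ...). The function name is hypothetical.

```python
def tcn_receptive_field(kernel_size, num_levels, convs_per_block=2):
    """Number of input steps that one output step can see (illustrative sketch)."""
    rf = 1
    for i in range(num_levels):
        # Each convolution with dilation 2**i adds (kernel_size - 1) * 2**i steps.
        rf += convs_per_block * (kernel_size - 1) * (2 ** i)
    return rf


print(tcn_receptive_field(kernel_size=3, num_levels=1))  # 5
print(tcn_receptive_field(kernel_size=2, num_levels=4))  # 31
```

The receptive field grows exponentially with the number of levels, while the number of layers grows only linearly.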

import os
import sys
import paddle
import paddle.nn as nn
import numpy as np
import pandas as pd
import seaborn as sns
from pylab import rcParams
import matplotlib.pyplot as plt
from matplotlib import rc
import paddle.nn.functional as F
from paddle.nn.utils import weight_norm
from sklearn.preprocessing import MinMaxScaler
from pandas.plotting import register_matplotlib_converters
# TimeSeriesNetwork is defined further down in this script,
# so it does not need to be imported from a local sourceCode module.

class Chomp1d(nn.Layer):
    def __init__(self, chomp_size):
        super(Chomp1d, self).__init__()
        self.chomp_size = chomp_size

    def forward(self, x):
        return x[:, :, :-self.chomp_size]

class TemporalBlock(nn.Layer):
    def __init__(self,
                 n_inputs,
                 n_outputs,
                 kernel_size,
                 stride,
                 dilation,
                 padding,
                 dropout=0.2):
        super(TemporalBlock, self).__init__()
        self.conv1 = weight_norm(
            nn.Conv1D(
                n_inputs,
                n_outputs,
                kernel_size,
                stride=stride,
                padding=padding,
                dilation=dilation))
        # Chomp1d is used to make sure the network is causal.
        # We pad by (k-1)*d on the two sides of the input for convolution,
        # and then use Chomp1d to remove the (k-1)*d output elements on the right.
        self.chomp1 = Chomp1d(padding)
        self.relu1 = nn.ReLU()
        self.dropout1 = nn.Dropout(dropout)
        self.conv2 = weight_norm(
            nn.Conv1D(
                n_outputs,
                n_outputs,
                kernel_size,
                stride=stride,
                padding=padding,
                dilation=dilation))
        self.chomp2 = Chomp1d(padding)
        self.relu2 = nn.ReLU()
        self.dropout2 = nn.Dropout(dropout)
        self.net = nn.Sequential(self.conv1, self.chomp1, self.relu1,
                                 self.dropout1, self.conv2, self.chomp2,
                                 self.relu2, self.dropout2)
        self.downsample = nn.Conv1D(n_inputs, n_outputs,
                                    1) if n_inputs != n_outputs else None
        self.relu = nn.ReLU()
        self.init_weights()

    def init_weights(self):
        self.conv1.weight.set_value(
            paddle.tensor.normal(0.0, 0.01, self.conv1.weight.shape))
        self.conv2.weight.set_value(
            paddle.tensor.normal(0.0, 0.01, self.conv2.weight.shape))
        if self.downsample is not None:
            self.downsample.weight.set_value(
                paddle.tensor.normal(0.0, 0.01, self.downsample.weight.shape))

    def forward(self, x):
        out = self.net(x)
        # 1x1 convolution on the shortcut so the input shape matches the output shape
        res = x if self.downsample is None else self.downsample(x)
        return self.relu(out + res)

class TCNEncoder(nn.Layer):
    def __init__(self, input_size, num_channels, kernel_size=2, dropout=0.2):
        # input_size: number of expected input features per time step
        # num_channels: list with the number of output channels of each level
        # kernel_size: convolution kernel size
        super(TCNEncoder, self).__init__()
        self._input_size = input_size
        self._output_dim = num_channels[-1]
        layers = nn.LayerList()
        num_levels = len(num_channels)
        # print('print num_channels: ', num_channels)
        # print('print num_levels: ',num_levels)
        # exit(0)
        for i in range(num_levels):
            # The dilation doubles at every level: 1, 2, 4, ...
            dilation_size = 2 ** i
            in_channels = input_size if i == 0 else num_channels[i - 1]
            out_channels = num_channels[i]
            layers.append(
                TemporalBlock(
                    in_channels,
                    out_channels,
                    kernel_size,
                    stride=1,
                    dilation=dilation_size,
                    padding=(kernel_size - 1) * dilation_size,
                    dropout=dropout))
        self.network = nn.Sequential(*layers)

    def get_input_dim(self):
        return self._input_size

    def get_output_dim(self):
        return self._output_dim

    def forward(self, inputs):
        # [batch, time, features] -> [batch, features, time] for Conv1D
        inputs_t = inputs.transpose([0, 2, 1])
        # Move time to the first axis and keep only the last time step
        output = self.network(inputs_t).transpose([2, 0, 1])[-1]
        return output

class TimeSeriesNetwork(nn.Layer):
    def __init__(self, input_size, next_k=1, num_channels=[256]):
        super(TimeSeriesNetwork, self).__init__()
        self.last_num_channel = num_channels[-1]
        self.tcn = TCNEncoder(
            input_size=input_size,
            num_channels=num_channels,
            kernel_size=3,
            dropout=0.2
        )
        self.linear = nn.Linear(in_features=self.last_num_channel, out_features=next_k)

    def forward(self, x):
        tcn_out = self.tcn(x)
        y_pred = self.linear(tcn_out)
        return y_pred

'''

I try to portray myself as the hero in the tragedy,

Put all the blame on you,

Make you an evil witch,

become frenzied

But I am a normal person,

Sad and happy,

Wrong and right,

At this point,

We all have a responsibility,

Until now, I don't think I've lost you

Did you tell me I lost you?

'''

def config_mtp():
    sns.set(style='whitegrid', palette='muted', font_scale=1.2)
    HAPPY_COLORS_PALETTE = ["#01BEFE", "#FFDD00", "#FF7D00", "#FF006D", "#93D30C", "#8F00FF"]
    sns.set_palette(sns.color_palette(HAPPY_COLORS_PALETTE))
    rcParams['figure.figsize'] = 14, 10
    register_matplotlib_converters()

def read_data():
    df_all = pd.read_csv('./data/time_series_covid19_confirmed_global.csv')
    # print(df_all.head())
    # We predict the number of cases worldwide, so the longitude and latitude
    # of specific countries are not needed, only the global total on each date.
    df = df_all.iloc[:, 4:]
    daily_cases = df.sum(axis=0)
    daily_cases.index = pd.to_datetime(daily_cases.index)
    # print(daily_cases.head())
    plt.figure(figsize=(5, 5))
    plt.plot(daily_cases)
    plt.title("Cumulative daily cases")
    # plt.show()
    # Take the first-order difference to make the time series more stationary.
    # .iloc[0] selects the first value by position (the index holds dates).
    daily_cases = daily_cases.diff().fillna(daily_cases.iloc[0]).astype(np.int64)
    # print(daily_cases.head())
    plt.figure(figsize=(5, 5))
    plt.plot(daily_cases)
    plt.title("Daily cases")
    plt.xticks(rotation=60)
    plt.show()
    return daily_cases

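def create_sequences(data, seq_length):
    # Each window has seq_length values: the first seq_length - 1 are the
    # model input and the last one is the label.
    xs = []
    ys = []
    for i in range(len(data) - seq_length + 1):
        x = data[i:i + seq_length - 1]
        y = data[i + seq_length - 1]
        xs.append(x)
        ys.append(y)
    return np.array(xs), np.array(ys)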

def preprocess_data(daily_cases):
    TEST_DATA_SIZE, SEQ_LEN = 30, 10
    # TEST_DATA_SIZE is a percentage: the last 30% of the series is used as the test set.
    TEST_DATA_SIZE = int(TEST_DATA_SIZE / 100 * len(daily_cases))
    train_data = daily_cases[:-TEST_DATA_SIZE]
    test_data = daily_cases[-TEST_DATA_SIZE:]
    print("The number of the samples in train set is : %i" % train_data.shape[0])
    print(train_data.shape, test_data.shape)
    # To improve the convergence speed and performance of the model,
    # the data is normalized with scikit-learn. The scaler is fitted on the training set only.
    scaler = MinMaxScaler()
    train_data = scaler.fit_transform(np.expand_dims(train_data, axis=1)).astype('float32')
    test_data = scaler.transform(np.expand_dims(test_data, axis=1)).astype('float32')
    # Build time series windows.
    # The previous SEQ_LEN - 1 = 9 days are used to predict the current day.
    # So that every point in the test set gets a prediction, the last
    # SEQ_LEN - 1 training values are prepended to the test set; they are
    # only used as model input.
    x_train, y_train = create_sequences(train_data, SEQ_LEN)
    test_data = np.concatenate((train_data[-SEQ_LEN + 1:], test_data), axis=0)
    x_test, y_test = create_sequences(test_data, SEQ_LEN)
    # Try output
    # print("The shape of x_train is: %s"%str(x_train.shape))
    # print("The shape of y_train is: %s"%str(y_train.shape))
    # print("The shape of x_test is: %s"%str(x_test.shape))
    # print("The shape of y_test is: %s"%str(y_test.shape))
    return x_train, y_train, x_test, y_test, scaler

# After the data set is processed, it is wrapped in CovidDataset for model training and prediction.
class CovidDataset(paddle.io.Dataset):
    def __init__(self, feature, label):
        self.feature = feature
        self.label = label
        super(CovidDataset, self).__init__()

    def __len__(self):
        return len(self.label)

    def __getitem__(self, index):
        return [self.feature[index], self.label[index]]

def parameter(network, lr=1e-2):
    # Wrap the network in paddle.Model and attach the optimizer and the loss
    model = paddle.Model(network)
    optimizer = paddle.optimizer.Adam(
        learning_rate=lr, parameters=model.parameters())  # optimizer
    loss = paddle.nn.MSELoss(reduction='sum')
    model.prepare(optimizer, loss)  # Configure the model before running
    return model

config_mtp()
data = read_data()
x_train, y_train, x_test, y_test, scaler = preprocess_data(data)
train_dataset = CovidDataset(x_train, y_train)
test_dataset = CovidDataset(x_test, y_test)
network = TimeSeriesNetwork(input_size=1)
# Parameter configuration
LR = 1e-2
model = parameter(network, lr=LR)

# train
USE_GPU = False
TRAIN_EPOCH = 100
LOG_FREQ = 20
SAVE_DIR = os.path.join(os.getcwd(), "save_dir")
SAVE_FREQ = 20
if USE_GPU:
    paddle.set_device("gpu")
else:
    paddle.set_device("cpu")
model.fit(train_dataset,
          batch_size=32,
          drop_last=True,
          epochs=TRAIN_EPOCH,
          log_freq=LOG_FREQ,
          save_dir=SAVE_DIR,
          save_freq=SAVE_FREQ,
          verbose=1  # Verbosity mode: 0 = silent, 1 = progress bar, 2 = one line per epoch. Default: 2.
          )

# forecast
preds = model.predict(
    test_data=test_dataset
)
# Post-processing: convert the normalized data back to the original scale,
# so the true values can be compared with the predicted values.
# The scaler was fitted on a single column, so the data is reshaped to (n, 1).
true_cases = scaler.inverse_transform(
    y_test.reshape(-1, 1)
).flatten()
predicted_cases = scaler.inverse_transform(
    np.array(preds).reshape(-1, 1)
).flatten()
print(true_cases.shape, predicted_cases.shape)
# print (type(data))
# print(data[1:3])
# print (len(data), len(data))
# print(data.index[:len(data)])
# Root mean squared error on the original scale
mse_loss = paddle.nn.MSELoss(reduction='mean')
print(paddle.sqrt(mse_loss(paddle.to_tensor(true_cases), paddle.to_tensor(predicted_cases))))
print(true_cases, predicted_cases)
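The windowing done by `create_sequences`, and the reason the tail of the training data is prepended to the test data, may be easier to see on a toy series. The sketch below mirrors the same slicing on plain Python lists; `create_windows` and the numbers are made up for illustration.

```python
def create_windows(data, seq_length):
    """Same slicing as create_sequences, on plain lists (illustrative sketch)."""
    xs, ys = [], []
    for i in range(len(data) - seq_length + 1):
        xs.append(data[i:i + seq_length - 1])  # seq_length - 1 inputs
        ys.append(data[i + seq_length - 1])    # the value that follows them
    return xs, ys


xs, ys = create_windows([10, 20, 30, 40, 50], seq_length=3)
print(xs)  # [[10, 20], [20, 30], [30, 40]]
print(ys)  # [30, 40, 50]

# Prepending the last seq_length - 1 training values gives one window,
# and therefore one prediction, for every test point.
train, test = [1, 2, 3, 4], [5, 6]
_, test_labels = create_windows(train[-2:] + test, seq_length=3)
print(test_labels)  # [5, 6]
```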

If you need the data, please leave a comment below, or send me a private message.

Don't forget to like, comment, and bookmark. It really means a lot to me~