This article follows up on the previous one, "Terminal factory automation operation and maintenance scheme": https://blog.csdn.net/yyz_1987/article/details/118358038

Using the terminal status monitoring service and a remote log-collection command as examples, this post records a simple use of go-zero microservices. The end result is a low-cost background cloud service that monitors the status of all of the factory's terminal devices and can later push alarms.

The scheme is simple in principle but not easy in practice. The hard part is handling concurrent reports from tens of thousands of devices across the country every ten minutes. Assume 100,000 terminals. Unlike most systems, which read more than they write, this monitoring scenario writes far more than it reads. Can a single MySQL database absorb 100,000 records written at roughly the same time?
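To put numbers on that question, here is a minimal back-of-the-envelope sketch, assuming 100,000 terminals that each send one report per 10-minute cycle. The 10-second burst window is a hypothetical worst case chosen only for illustration, and insertRate is an illustrative helper, not part of the project.

```go
package main

import "fmt"

// insertRate returns the average number of rows per second the database must
// absorb when `terminals` devices each send one report within `windowSec` seconds.
func insertRate(terminals, windowSec int) float64 {
	return float64(terminals) / float64(windowSec)
}

func main() {
	const terminals = 100000

	// Reports spread evenly over the 10-minute (600 s) cycle.
	fmt.Printf("evenly spread: %.1f rows/s\n", insertRate(terminals, 600)) // 166.7

	// Hypothetical worst case: every terminal reports within the same 10 s.
	fmt.Printf("10 s burst:    %.1f rows/s\n", insertRate(terminals, 10)) // 10000.0
}
```

The average works out to about 167 rows per second; what matters is the peak when reports cluster, and that peak is what the queue in front of MySQL has to smooth out.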

This calls for load balancing at the API gateway and multiple deployed instances of the same microservice. Records should first go into a persistent cache queue and only then be written to MySQL. These pieces are indispensable.

Experienced members of a Golang discussion group suggested putting a message queue such as Kafka in front of the database to reduce write pressure. Kafka is somewhat heavyweight, though, so it is set aside for now. Others suggested hardware F5 load balancing, or Keepalived or LVS. For the time being, load is spread through DNS resolution instead, so those options are not considered yet. When the load really reaches that scale, or the problem has to be solved, there will always be a way.

Here is the preliminary implementation:

Create a new directory for the Go backend project code, named monitor.

Environment preparation

Install MySQL, Redis and etcd on the development machine or server.

Download the plug-in tools: goctl, protoc.exe and protoc-gen-go.exe.

Implementation of API gateway layer

Following goctl's code-generation workflow, first define the fields the terminal submits:

statusUpload.api

```
type (
	// Content of terminal status report
	StatusUploadReq {
		Sn     string `json:"sn"`     // Equipment unique number
		Pos    string `json:"pos"`    // Terminal number
		City   string `json:"city"`   // City code
		Id     string `json:"id"`     // Terminal type
		Unum1  uint   `json:"unum1"`  // Number of records not transmitted -- bus
		Unum2  uint   `json:"unum2"`  // Number of records not transmitted -- third party
		Ndate  string `json:"ndate"`  // Current date
		Ntime  string `json:"ntime"`  // Current time
		Amount uint   `json:"amount"` // Total amount on duty
		Count  uint   `json:"count"`  // Number of people on duty
		Line   uint   `json:"line"`   // Line number
		Stime  string `json:"stime"`  // Startup time
		Ctime  string `json:"ctime"`  // Shutdown time
		Tenant uint   `json:"tenant"` // Tenant ID
	}

	// Response content
	StatusUploadResp {
		Code int    `json:"code"`
		Msg  string `json:"msg"`
		Cmd  int    `json:"cmd"` // Command word sent back to control the terminal
	}
)

service open-api {
	@doc(
		summary: Open api function
		desc: >statusUpload Terminal status reporting
	)
	@server(
		handler: statusUploadHandler
		folder: open
	)
	post /open/statusUpload(StatusUploadReq) returns(StatusUploadResp)
}
```

Next, let goctl generate the gateway layer code directly:

```shell
goctl api go -api statusUpload.api -dir .
```

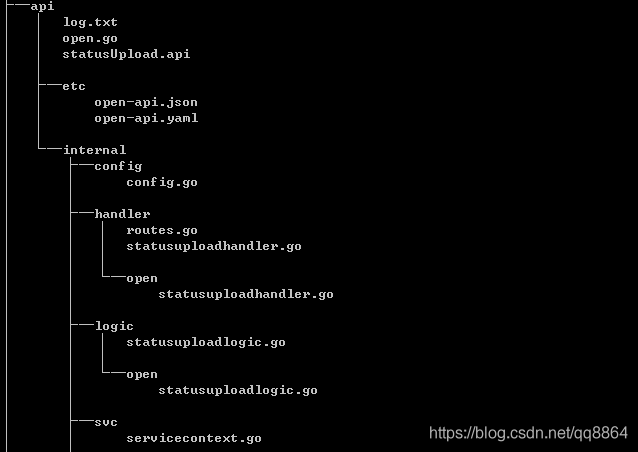

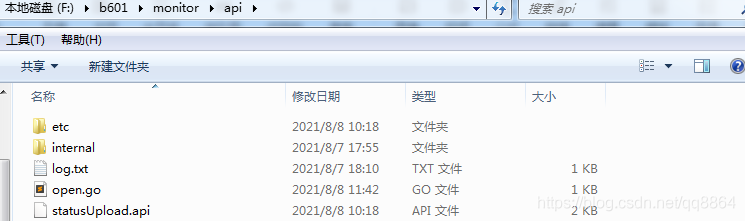

The directory structure of generated code is as follows:

Next, run it:

```shell
go run open.go
```

The gateway layer starts successfully and listens on port 8888, as configured in etc/open-api.yaml.

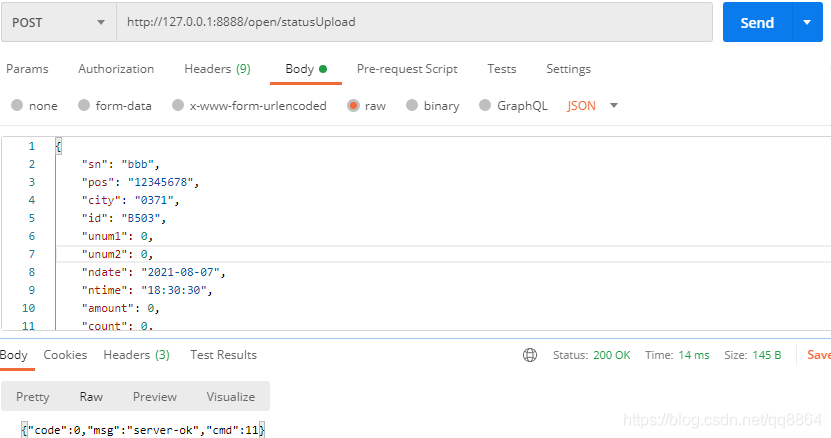

Test it with Postman or curl:

```shell
curl http://127.0.0.1:8888/open/statusUpload -X POST -H "Content-Type: application/json" -d @status.json
```

Contents of status.json:

```json
{
  "sn": "1C2EB08D",
  "pos": "12345678",
  "city": "0371",
  "id": "B503",
  "unum1": 0,
  "unum2": 0,
  "ndate": "2021-08-07",
  "ntime": "18:30:30",
  "amount": 0,
  "count": 0,
  "line": 101,
  "stime": "05:01:01",
  "ctime": "18:30:20",
  "tenant": 0
}
```

RPC server implementation

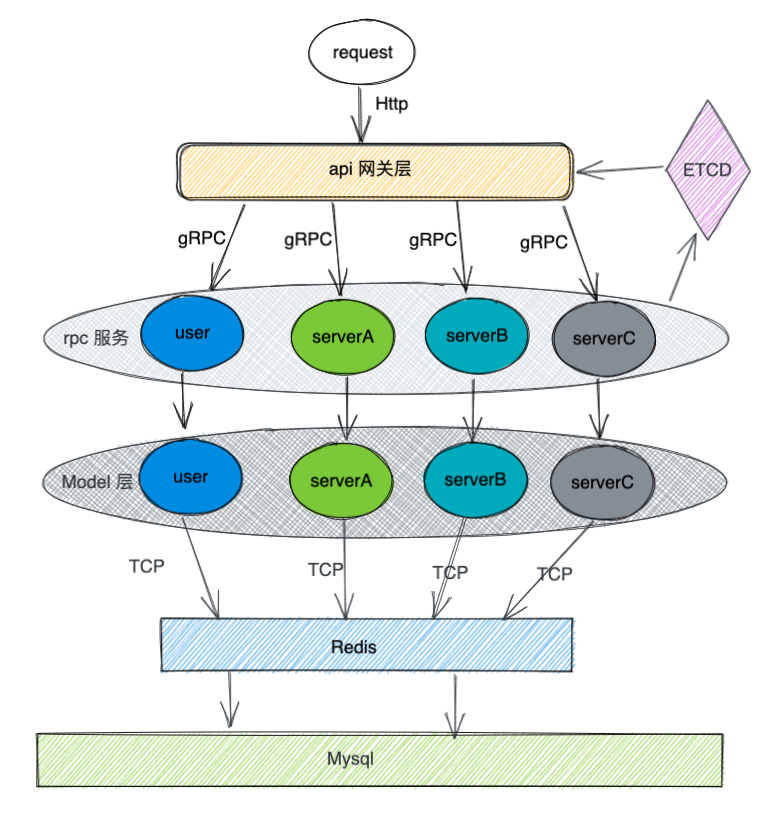

Next, turn it into a microservice, with the gateway calling the service's interface over RPC. The overall structure is as follows:

etcd must be installed beforehand, along with plug-in tools such as protoc.exe and protoc-gen-go.exe. Put the tools in the bin directory of your Go installation or GOPATH.

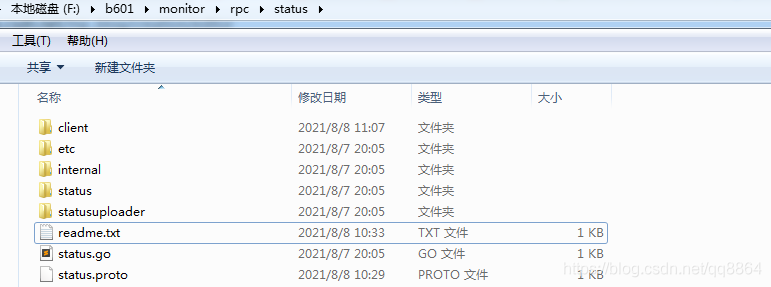

Create an rpc folder under the project directory, and inside it a directory for the microservice code, named status here.

Define the proto file, status.proto, as follows:

```protobuf
syntax = "proto3";

package status;

message statusUploadReq {
  string sn = 1;
  string pos = 2;
  string city = 3;
  string id = 4;
  uint32 unum1 = 5;
  uint32 unum2 = 6;
  string ndate = 7;
  string ntime = 8;
  uint32 amount = 9;
  uint32 count = 10;
  uint32 line = 11;
  string stime = 12;
  string ctime = 13;
  uint32 tenant = 14;
}

message statusUploadResp {
  int32 code = 1;
  string msg = 2;
  int32 cmd = 3;
}

service statusUploader {
  rpc statusUpload(statusUploadReq) returns(statusUploadResp);
}
```

Then goctl generates the code automatically, which is very convenient:

```shell
goctl rpc proto -src=status.proto -dir .
```

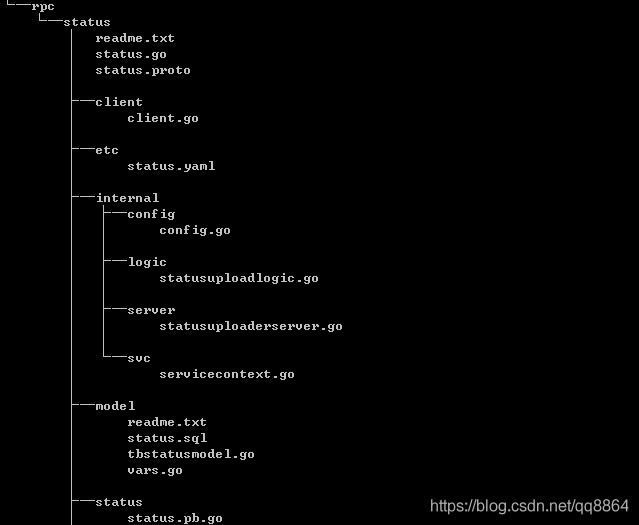

The generated directory structure is as follows:

The generated code does not include the client folder shown above. That folder was created by hand to test the RPC service on its own, with a small client demo that calls it. The model folder was also created manually; it contains the database access code.

The generated entry file of the RPC server, status.go:

```go
package main

import (
	"flag"
	"fmt"

	"monitor/rpc/status/internal/config"
	"monitor/rpc/status/internal/server"
	"monitor/rpc/status/internal/svc"
	"monitor/rpc/status/status"

	"github.com/tal-tech/go-zero/core/conf"
	"github.com/tal-tech/go-zero/zrpc"
	"google.golang.org/grpc"
)

var configFile = flag.String("f", "etc/status.yaml", "the config file")

func main() {
	flag.Parse()

	var c config.Config
	conf.MustLoad(*configFile, &c)
	ctx := svc.NewServiceContext(c)
	srv := server.NewStatusUploaderServer(ctx)

	s := zrpc.MustNewServer(c.RpcServerConf, func(grpcServer *grpc.Server) {
		status.RegisterStatusUploaderServer(grpcServer, srv)
	})
	defer s.Stop()

	fmt.Printf("Starting rpc server at %s...\n", c.ListenOn)
	s.Start()
}
```

With etcd running, run go run status.go and the server starts successfully.

RPC client test

To verify that the RPC server works, implement a zrpc client in the client folder and test with it.

client.go is as follows:

```go
package main

import (
	"context"
	"fmt"
	"log"

	"github.com/tal-tech/go-zero/core/discov"
	"github.com/tal-tech/go-zero/zrpc"

	pb "monitor/rpc/status/status"
)

func main() {
	client := zrpc.MustNewClient(zrpc.RpcClientConf{
		Etcd: discov.EtcdConf{
			Hosts: []string{"127.0.0.1:2379"},
			Key:   "status.rpc",
		},
	})

	sclient := pb.NewStatusUploaderClient(client.Conn())
	reply, err := sclient.StatusUpload(context.Background(), &pb.StatusUploadReq{Sn: "test rpc", Pos: "go-zero"})
	if err != nil {
		log.Fatal(err)
	}

	fmt.Println(reply.Msg)
}
```

If the service is working, the client prints the message returned by the server.

Switching the gateway layer to microservice calls

Converting the gateway layer to call the microservice takes only a few changes:

Step 1:

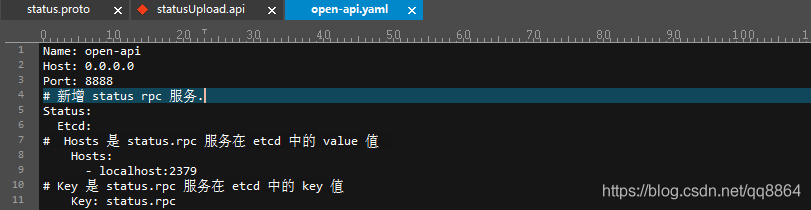

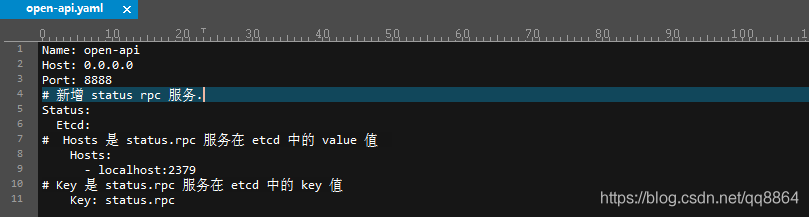

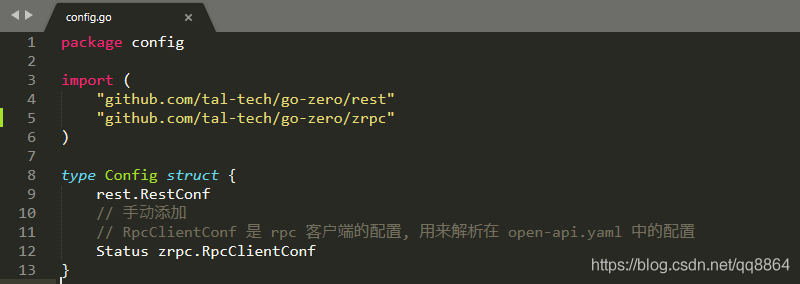

Changes to config.go under api\internal\config and to open-api.yaml under api\etc:

open-api.yaml gains etcd settings so that the gateway can connect to the etcd registry and discover the corresponding service.

Note that the Status field name in the Config struct must match the key in the configuration file exactly. If there are several microservices, list them one after another, for example:

```yaml
Status:
  Etcd:
    Hosts:
      - localhost:2379
    Key: status.rpc
Expander:
  Etcd:
    Hosts:
      - localhost:2379
    Key: expand.rpc
```

with the matching fields in config.go:

```go
type Config struct {
	rest.RestConf
	Status   zrpc.RpcClientConf // Manual code
	Expander zrpc.RpcClientConf // Manual code
}
```

Step 2:

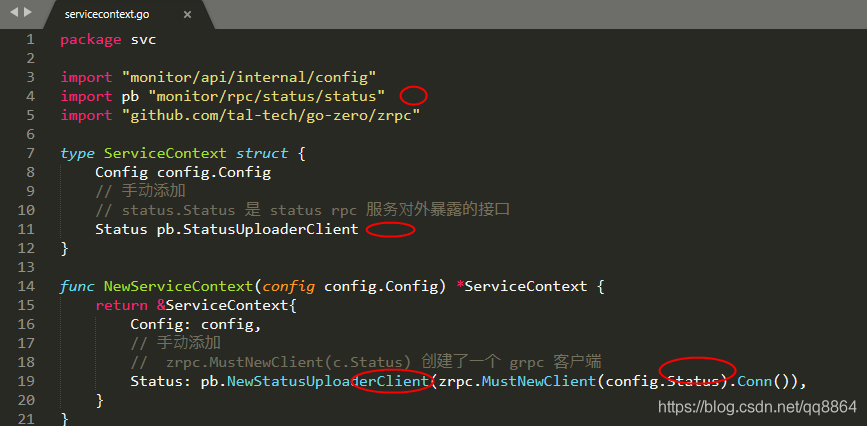

Changes to servicecontext.go under api\internal\svc:

Step 3:

Changes to statusuploadlogic.go under api\internal\logic:

At this point, the API gateway layer conversion is complete. You can simulate a request to the gateway endpoint:

```shell
curl http://127.0.0.1:8888/open/statusUpload -X POST -H "Content-Type: application/json" -d @status.json
```

Define the database table structure and generate CRUD+cache code

- Create the rpc/model directory under the root of the monitor project: mkdir -p rpc/model

- In the rpc/model directory, write status.sql, the SQL file that creates the tb_status table:

```sql
CREATE TABLE `tb_status` (
  `id` INT UNSIGNED AUTO_INCREMENT,
  `sn` VARCHAR(32) NOT NULL COMMENT 'Equipment unique number',
  `posno` VARCHAR(32) COMMENT 'Terminal number',
  `city` VARCHAR(16) COMMENT 'City Code',
  `tyid` VARCHAR(16) COMMENT 'Equipment type',
  `unum1` INT COMMENT 'Number of records not transmitted--bus',
  `unum2` INT COMMENT 'Number of records not transmitted--Third party',
  `ndate` DATE COMMENT 'current date',
  `ntime` TIME COMMENT 'current time',
  `amount` INT COMMENT 'Total on duty',
  `count` INT COMMENT 'Number of people on duty',
  `line` INT COMMENT 'line number',
  `stime` TIME COMMENT 'Startup time',
  `ctime` TIME COMMENT 'Shutdown time',
  `tenant` INT COMMENT 'Tenant number',
  PRIMARY KEY(`id`)
) ENGINE=INNODB DEFAULT CHARSET=utf8mb4;
```

- Create a database named monitor, switch to it, and create the table:

```sql
create database monitor;
use monitor;
source status.sql;
```

- Run the following command in the rpc/model directory to generate the CRUD+cache code; -c enables the Redis cache:

```shell
goctl model mysql ddl -c -src status.sql -dir .
```

You can also use the datasource subcommand instead of ddl to generate the code directly from a live database connection.

The generated file structure is as follows:

```
rpc/model
├── status.sql
├── tbstatusmodel.go   // CRUD+cache code
└── vars.go            // Defines constants and variables
```
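The -c flag makes the generated model read through Redis. In go-zero's sqlc package this follows a cache-aside pattern: FindOne checks the cache key (prefix cache::tbStatus:id:) first and queries MySQL only on a miss, then fills the cache; Update and Delete run the SQL and then delete the cached key; Insert uses ExecNoCache and does not touch the cache. The sketch below is a simplified stand-in with maps in place of Redis and MySQL. The real implementation adds details such as expiry that are omitted here, and cachedStore is a hypothetical name.

```go
package main

import (
	"errors"
	"fmt"
)

var errNotFound = errors.New("not found")

// cachedStore illustrates cache-aside with in-memory maps standing in for
// Redis (cache) and MySQL (db). dbReads counts how often the "database" is hit.
type cachedStore struct {
	cache   map[string]string
	db      map[int64]string
	dbReads int
}

func cacheKey(id int64) string {
	return fmt.Sprintf("cache::tbStatus:id:%v", id) // same prefix as the generated code
}

// FindOne serves from the cache when possible and fills it on a miss.
func (s *cachedStore) FindOne(id int64) (string, error) {
	key := cacheKey(id)
	if v, ok := s.cache[key]; ok {
		return v, nil // cache hit: no database access
	}
	s.dbReads++
	v, ok := s.db[id]
	if !ok {
		return "", errNotFound
	}
	s.cache[key] = v // fill the cache for the next reader
	return v, nil
}

// Update writes the database first, then invalidates the cached entry.
func (s *cachedStore) Update(id int64, v string) {
	s.db[id] = v
	delete(s.cache, cacheKey(id))
}

func main() {
	s := &cachedStore{cache: map[string]string{}, db: map[int64]string{1: "1C2EB08D"}}
	s.FindOne(1)            // miss: reads the database
	s.FindOne(1)            // hit: served from the cache
	s.Update(1, "1C2EB08E") // write, then drop the cache entry
	v, _ := s.FindOne(1)    // miss again: picks up the new value
	fmt.Println(v, s.dbReads) // 1C2EB08E 2
}
```

For this write-heavy service the cache mainly helps later reads by id; the report inserts themselves go straight to MySQL.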

The generated tbstatusmodel.go:

```go
package model

import (
	"database/sql"
	"fmt"
	"strings"

	"github.com/tal-tech/go-zero/core/stores/cache"
	"github.com/tal-tech/go-zero/core/stores/sqlc"
	"github.com/tal-tech/go-zero/core/stores/sqlx"
	"github.com/tal-tech/go-zero/core/stringx"
	"github.com/tal-tech/go-zero/tools/goctl/model/sql/builderx"
)

var (
	tbStatusFieldNames          = builderx.RawFieldNames(&TbStatus{})
	tbStatusRows                = strings.Join(tbStatusFieldNames, ",")
	tbStatusRowsExpectAutoSet   = strings.Join(stringx.Remove(tbStatusFieldNames, "`id`", "`create_time`", "`update_time`"), ",")
	tbStatusRowsWithPlaceHolder = strings.Join(stringx.Remove(tbStatusFieldNames, "`id`", "`create_time`", "`update_time`"), "=?,") + "=?"

	cacheTbStatusIdPrefix = "cache::tbStatus:id:"
)

type (
	TbStatusModel interface {
		Insert(data TbStatus) (sql.Result, error)
		FindOne(id int64) (*TbStatus, error)
		Update(data TbStatus) error
		Delete(id int64) error
	}

	defaultTbStatusModel struct {
		sqlc.CachedConn
		table string
	}

	TbStatus struct {
		Id     int64          `db:"id"`
		Sn     sql.NullString `db:"sn"`     // Equipment unique number
		Posno  sql.NullString `db:"posno"`  // Terminal number
		City   sql.NullString `db:"city"`   // City Code
		Tyid   sql.NullString `db:"tyid"`   // Equipment type
		Unum1  sql.NullInt64  `db:"unum1"`  // Number of records not transmitted -- bus
		Unum2  sql.NullInt64  `db:"unum2"`  // Number of records not transmitted -- Third Party
		Ndate  sql.NullTime   `db:"ndate"`  // current date
		Ntime  sql.NullString `db:"ntime"`  // current time
		Amount sql.NullInt64  `db:"amount"` // Total on duty
		Count  sql.NullInt64  `db:"count"`  // Number of people on duty
		Line   sql.NullInt64  `db:"line"`   // line number
		Stime  sql.NullString `db:"stime"`  // Startup time
		Ctime  sql.NullString `db:"ctime"`  // Shutdown time
		Tenant sql.NullInt64  `db:"tenant"` // Tenant number
	}
)

func NewTbStatusModel(conn sqlx.SqlConn, c cache.CacheConf) TbStatusModel {
	return &defaultTbStatusModel{
		CachedConn: sqlc.NewConn(conn, c),
		table:      "`tb_status`",
	}
}

func (m *defaultTbStatusModel) Insert(data TbStatus) (sql.Result, error) {
	query := fmt.Sprintf("insert into %s (%s) values (?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?)", m.table, tbStatusRowsExpectAutoSet)
	ret, err := m.ExecNoCache(query, data.Sn, data.Posno, data.City, data.Tyid, data.Unum1, data.Unum2, data.Ndate, data.Ntime, data.Amount, data.Count, data.Line, data.Stime, data.Ctime, data.Tenant)
	return ret, err
}

func (m *defaultTbStatusModel) FindOne(id int64) (*TbStatus, error) {
	tbStatusIdKey := fmt.Sprintf("%s%v", cacheTbStatusIdPrefix, id)
	var resp TbStatus
	err := m.QueryRow(&resp, tbStatusIdKey, func(conn sqlx.SqlConn, v interface{}) error {
		query := fmt.Sprintf("select %s from %s where `id` = ? limit 1", tbStatusRows, m.table)
		return conn.QueryRow(v, query, id)
	})
	switch err {
	case nil:
		return &resp, nil
	case sqlc.ErrNotFound:
		return nil, ErrNotFound
	default:
		return nil, err
	}
}

func (m *defaultTbStatusModel) Update(data TbStatus) error {
	tbStatusIdKey := fmt.Sprintf("%s%v", cacheTbStatusIdPrefix, data.Id)
	_, err := m.Exec(func(conn sqlx.SqlConn) (result sql.Result, err error) {
		query := fmt.Sprintf("update %s set %s where `id` = ?", m.table, tbStatusRowsWithPlaceHolder)
		return conn.Exec(query, data.Sn, data.Posno, data.City, data.Tyid, data.Unum1, data.Unum2, data.Ndate, data.Ntime, data.Amount, data.Count, data.Line, data.Stime, data.Ctime, data.Tenant, data.Id)
	}, tbStatusIdKey)
	return err
}

func (m *defaultTbStatusModel) Delete(id int64) error {
	tbStatusIdKey := fmt.Sprintf("%s%v", cacheTbStatusIdPrefix, id)
	_, err := m.Exec(func(conn sqlx.SqlConn) (result sql.Result, err error) {
		query := fmt.Sprintf("delete from %s where `id` = ?", m.table)
		return conn.Exec(query, id)
	}, tbStatusIdKey)
	return err
}

func (m *defaultTbStatusModel) formatPrimary(primary interface{}) string {
	return fmt.Sprintf("%s%v", cacheTbStatusIdPrefix, primary)
}

func (m *defaultTbStatusModel) queryPrimary(conn sqlx.SqlConn, v, primary interface{}) error {
	query := fmt.Sprintf("select %s from %s where `id` = ? limit 1", tbStatusRows, m.table)
	return conn.QueryRow(v, query, primary)
}
```

Modify the monitor/rpc/status RPC code to call the CRUD+cache code

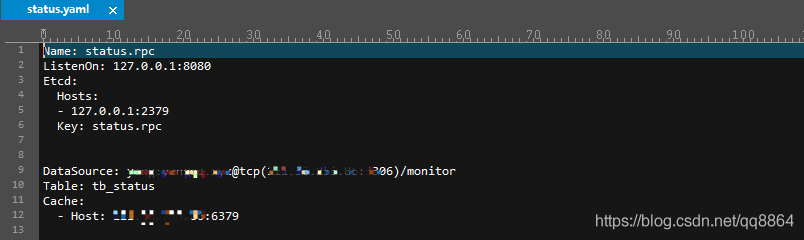

- Modify rpc/status/etc/status.yaml and add the following:

- Modify rpc/status/internal/config/config.go as follows:

```go
package config

import (
	"github.com/tal-tech/go-zero/core/stores/cache" // Manual code
	"github.com/tal-tech/go-zero/zrpc"
)

type Config struct {
	zrpc.RpcServerConf
	DataSource string          // Manual code
	Cache      cache.CacheConf // Manual code
}
```

This adds the MySQL and Redis cache configuration.

- Modify rpc/status/internal/svc/servicecontext.go as follows (note the sqlx import needed for sqlx.NewMysql):

```go
package svc

import (
	"monitor/rpc/status/internal/config"

	// Manual code
	"github.com/tal-tech/go-zero/core/stores/sqlx"
	"monitor/rpc/status/model"
)

type ServiceContext struct {
	Config config.Config
	Model  model.TbStatusModel // Manual code
}

func NewServiceContext(c config.Config) *ServiceContext {
	return &ServiceContext{
		Config: c,
		Model:  model.NewTbStatusModel(sqlx.NewMysql(c.DataSource), c.Cache), // Manual code
	}
}
```

- Modify rpc/status/internal/logic/statusuploadlogic.go as follows:

```go
package logic

import (
	"context"
	"database/sql" // Manual code
	"time"         // Manual code

	"monitor/rpc/status/internal/svc"
	"monitor/rpc/status/model" // Manual code
	"monitor/rpc/status/status"

	"github.com/tal-tech/go-zero/core/logx"
)

type StatusUploadLogic struct {
	ctx    context.Context
	svcCtx *svc.ServiceContext
	logx.Logger
	model model.TbStatusModel // Manual code
}

func NewStatusUploadLogic(ctx context.Context, svcCtx *svc.ServiceContext) *StatusUploadLogic {
	return &StatusUploadLogic{
		ctx:    ctx,
		svcCtx: svcCtx,
		Logger: logx.WithContext(ctx),
		model:  svcCtx.Model, // Manual code
	}
}

func (l *StatusUploadLogic) StatusUpload(in *status.StatusUploadReq) (*status.StatusUploadResp, error) {
	// Manual code starts here: insert the report into the database.
	// An unparsable ndate is stored as NULL instead of the zero time.
	t, perr := time.Parse("2006-01-02", in.Ndate)

	_, err := l.model.Insert(model.TbStatus{
		Sn:    sql.NullString{String: in.Sn, Valid: true},
		Posno: sql.NullString{String: in.Pos, Valid: true},
		City:  sql.NullString{String: in.City, Valid: true},
		Tyid:  sql.NullString{String: in.Id, Valid: true},
		Ndate: sql.NullTime{Time: t, Valid: perr == nil},
		Ntime: sql.NullString{String: in.Ntime, Valid: true},
	})
	if err != nil {
		return nil, err
	}

	return &status.StatusUploadResp{Code: 0, Msg: "server resp,insert data ok", Cmd: 1}, nil
}
```

Note sql.NullString and sql.NullTime: if the second field (Valid) is false, NULL is inserted into the database instead of the value.

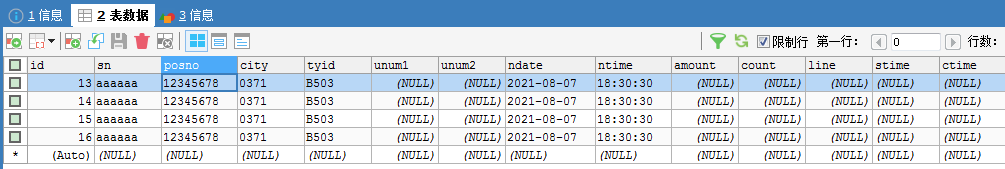

Finally, a test confirms that the data is stored successfully.
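Since the original concern was write pressure, one follow-up: the generated Insert writes one row per call. When reports are drained from a queue in batches, a single multi-row INSERT can write the whole batch in one round trip. Below is a hypothetical helper, not part of goctl's output, that builds such a statement and its flattened argument list.

```go
package main

import (
	"fmt"
	"strings"
)

// buildBatchInsert returns one INSERT statement covering all rows, plus the
// flattened argument list in row order. Table and column names must come
// from trusted code, never from request data, because they are not escaped.
func buildBatchInsert(table string, cols []string, rows [][]interface{}) (string, []interface{}, error) {
	if len(cols) == 0 || len(rows) == 0 {
		return "", nil, fmt.Errorf("need at least one column and one row")
	}
	group := "(" + strings.TrimSuffix(strings.Repeat("?, ", len(cols)), ", ") + ")"
	groups := make([]string, len(rows))
	args := make([]interface{}, 0, len(rows)*len(cols))
	for i, r := range rows {
		if len(r) != len(cols) {
			return "", nil, fmt.Errorf("row %d has %d values, want %d", i, len(r), len(cols))
		}
		groups[i] = group
		args = append(args, r...)
	}
	query := fmt.Sprintf("insert into %s (%s) values %s",
		table, strings.Join(cols, ","), strings.Join(groups, ", "))
	return query, args, nil
}

func main() {
	q, args, err := buildBatchInsert("`tb_status`", []string{"`sn`", "`posno`"}, [][]interface{}{
		{"1C2EB08D", "12345678"},
		{"1C2EB08E", "12345679"},
	})
	if err != nil {
		panic(err)
	}
	fmt.Println(q)         // insert into `tb_status` (`sn`,`posno`) values (?, ?), (?, ?)
	fmt.Println(len(args)) // 4
}
```

The query and args could then go through the Exec method of the sqlx connection already used by the generated model. Keep batches moderate, since the whole statement has to fit within MySQL's max_allowed_packet.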