The original text is reproduced from "Liu Yue's technology blog" https://v3u.cn/a_id_203

The main advantage of Docker containers is portability. A service and its dependencies can be bundled into a single container that is independent of the host's Linux kernel version, distribution, and deployment model. That container can be moved to any other host running Docker and run there without compatibility problems. A typical microservice architecture packages each service in its own container. Containerized microservices achieve higher workload density on a given amount of infrastructure. However, the total number of containers that must be created, monitored, and destroyed across the production environment grows sharply, and that significantly increases the complexity of managing a container-based environment.

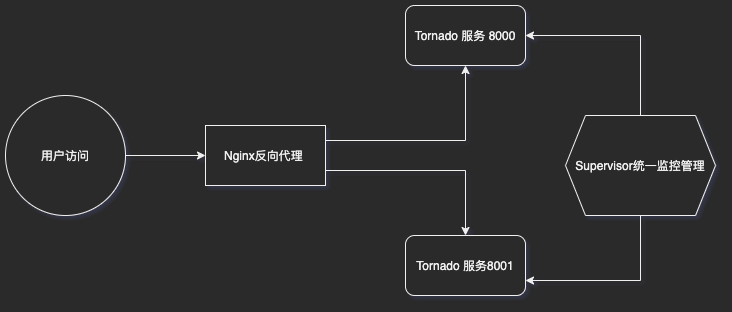

Therefore, this time we take a different approach. We integrate the Tornado service, the Nginx server, and Supervisor, the process manager that monitors them, into a single container to maximize its portability.

For the Docker installation process, see my earlier article: One inch of downtime, one inch of blood, 100000 containers, 100000 soldiers | build Gunicorn+Flask high availability Web Cluster Based on Kubernetes(k8s) under Win10/Mac system

The overall architecture inside the container is shown in the figure:

First, create the project directory mytornado:

```shell
mkdir mytornado
```

Here we use Tornado 6.2, the well-known non-blocking asynchronous framework. Create the service entry file main.py:

```python
import tornado.httpserver
import tornado.ioloop
import tornado.options
import tornado.web
from tornado.options import define, options

# Listening port; override it on the command line, e.g. --port=8001
define('port', default=8000, help='default port', type=int)


class IndexHandler(tornado.web.RequestHandler):
    def get(self):
        self.write("Hello, Tornado")


def make_app():
    return tornado.web.Application([
        (r"/", IndexHandler),
    ])


if __name__ == "__main__":
    tornado.options.parse_command_line()
    app = make_app()
    http_server = tornado.httpserver.HTTPServer(app)
    http_server.listen(options.port)
    # IOLoop.current() replaces the deprecated IOLoop.instance() alias
    tornado.ioloop.IOLoop.current().start()
```

Here the listening port is passed as a command-line argument. As a result, the same script can be started as several processes, each on its own port.
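The following sketch shows why one script can serve several processes. It is a minimal stand-in for the `define('port', ...)` / `parse_command_line()` pair, written with the standard library's `argparse` so it runs without Tornado installed. `parse_port` is a hypothetical helper for illustration only. In main.py, `tornado.options` does the actual parsing.

```python
import argparse
from typing import List, Optional


def parse_port(argv: Optional[List[str]] = None, default: int = 8000) -> int:
    """Return the --port value from argv, falling back to the default (8000, as in main.py)."""
    parser = argparse.ArgumentParser(description="Tornado worker (sketch)")
    parser.add_argument("--port", type=int, default=default, help="port to listen on")
    # parse_known_args ignores unrelated flags instead of exiting with an error
    args, _unknown = parser.parse_known_args(argv)
    return args.port


if __name__ == "__main__":
    # Each Supervisor program entry passes a different --port, e.g. 8000 and 8001
    for argv in ([], ["--port=8001"]):
        print(argv, "->", parse_port(argv))
```

With this design, Supervisor only has to start the same command twice, once with `--port=8000` and once with `--port=8001`.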

Next, create the project dependency file requirements.txt:

```
tornado==6.2
```

Next, create the Nginx configuration file tornado.conf:

```nginx
upstream mytornado {
    server 127.0.0.1:8000;
    server 127.0.0.1:8001;
}

server {
    listen 80;

    location / {
        proxy_pass http://mytornado;
        proxy_set_header Host $host;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
    }
}
```

Here Nginx listens on port 80 and reverse-proxies requests to ports 8000 and 8001 on the local machine. It uses the default load-balancing scheme, round-robin (polling). If you have other requirements, you can switch to one of the following schemes:

1. Polling (round-robin, default)

Requests are assigned to the back-end servers one by one, in turn. If a back-end server goes down, it is removed from the rotation automatically.

```nginx
upstream backserver {
    server 192.168.0.14;
    server 192.168.0.15;
}
```
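Round-robin can be modeled in a few lines of Python. The sketch below assumes a hypothetical `RoundRobin` class. It hands out servers in turn and skips any that are marked down. It is a simplified model: Nginx's real implementation also tracks failures through the `max_fails` and `fail_timeout` server parameters, which are not reproduced here.

```python
from typing import List, Set


class RoundRobin:
    """Hand out back-end servers one after another, skipping any marked down."""

    def __init__(self, servers: List[str]) -> None:
        self.servers = list(servers)
        self.down: Set[str] = set()
        self._next = 0

    def pick(self) -> str:
        # Try each server at most once per call, so a fully-down pool raises
        for _ in range(len(self.servers)):
            server = self.servers[self._next]
            self._next = (self._next + 1) % len(self.servers)
            if server not in self.down:
                return server
        raise RuntimeError("no back-end server available")


lb = RoundRobin(["192.168.0.14", "192.168.0.15"])
print([lb.pick() for _ in range(4)])
# ['192.168.0.14', '192.168.0.15', '192.168.0.14', '192.168.0.15']
lb.down.add("192.168.0.15")  # simulate a failed back end
print([lb.pick() for _ in range(2)])
# ['192.168.0.14', '192.168.0.14']
```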

2. weight

This scheme sets a polling weight for each server, and each server's share of requests is proportional to its weight. Use it when the back-end servers have uneven performance.

```nginx
upstream backserver {
    server 192.168.0.14 weight=3;
    server 192.168.0.15 weight=7;
}
```
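Nginx distributes weighted traffic with a "smooth" weighted round-robin. This interleaves the servers instead of sending long runs of consecutive requests to the heavier one. The sketch below reproduces that idea in simplified form, without any failure handling. `smooth_weighted_rr` is a hypothetical name. With weights 3 and 7, ten consecutive requests split 3:7.

```python
from typing import Dict, List


def smooth_weighted_rr(weights: Dict[str, int], n: int) -> List[str]:
    """Pick n servers using smooth weighted round-robin (simplified, no failure handling)."""
    current = {server: 0 for server in weights}
    total = sum(weights.values())
    picks = []
    for _ in range(n):
        # Every server gains its weight; the highest running score wins this request
        for server, weight in weights.items():
            current[server] += weight
        chosen = max(current, key=current.get)  # ties go to the first server listed
        # The winner pays back the total, which keeps the rotation interleaved
        current[chosen] -= total
        picks.append(chosen)
    return picks


picks = smooth_weighted_rr({"192.168.0.14": 3, "192.168.0.15": 7}, 10)
print(picks.count("192.168.0.14"), picks.count("192.168.0.15"))  # 3 7
```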

3. ip_hash (IP binding)

The methods above have a problem in a load-balanced system. A user who logs in on one server may be routed to a different server in the cluster on the next request, and then the login information held by the first server is lost. That is clearly unacceptable.

The ip_hash directive solves this problem. Each request is assigned according to a hash of the client IP address, so each visitor consistently reaches the same back-end server. This solves the session problem.

```nginx
upstream backserver {
    ip_hash;
    server 192.168.0.14:88;
    server 192.168.0.15:80;
}
```
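According to the Nginx documentation, ip_hash keys on the first three octets of an IPv4 client address, or on the whole IPv6 address. The sketch below mirrors that choice of key. However, it uses `zlib.crc32` as a stand-in hash, which is not the function Nginx uses. `ip_hash_pick` is a hypothetical helper. The point is that the mapping is deterministic, so a returning client lands on the same back end.

```python
import ipaddress
import zlib
from typing import List


def ip_hash_pick(client_ip: str, servers: List[str]) -> str:
    """Map a client IP to a fixed back end (simplified stand-in for Nginx's ip_hash)."""
    addr = ipaddress.ip_address(client_ip)
    # Like Nginx, key IPv4 on the first three octets only,
    # so clients in the same /24 network share a back end
    key = addr.packed[:3] if addr.version == 4 else addr.packed
    return servers[zlib.crc32(key) % len(servers)]


servers = ["192.168.0.14:88", "192.168.0.15:80"]
for ip in ["10.0.0.7", "10.0.0.7", "10.0.0.200", "172.16.5.9"]:
    print(ip, "->", ip_hash_pick(ip, servers))
```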

4. fair (third-party module)

Requests are assigned according to each back-end server's response time, and servers with shorter response times are preferred.

```nginx
upstream backserver {
    server server1;
    server server2;
    fair;
}
```
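The idea of preferring faster back ends can be sketched as follows. This is not the third-party fair module's actual algorithm. It is a simplified assumption: the sketch keeps an exponentially weighted moving average of observed response times per server and routes each request to the lowest. `FairPicker` and its `alpha` smoothing factor are hypothetical.

```python
from typing import Dict, List


class FairPicker:
    """Route each request to the back end with the lowest smoothed response time."""

    def __init__(self, servers: List[str], alpha: float = 0.5) -> None:
        self.alpha = alpha  # weight given to the newest measurement
        # Start at 0.0 so an unmeasured server wins over any measured one
        self.avg: Dict[str, float] = {s: 0.0 for s in servers}

    def pick(self) -> str:
        return min(self.avg, key=self.avg.get)

    def record(self, server: str, seconds: float) -> None:
        # Exponentially weighted moving average of observed response times
        self.avg[server] = self.alpha * seconds + (1 - self.alpha) * self.avg[server]


picker = FairPicker(["server1", "server2"])
picker.record("server1", 0.30)
picker.record("server2", 0.05)
print(picker.pick())  # server2: lower average response time
```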

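5. url_hash (third-party module)

Requests are assigned by a hash of the requested URL, so each URL is always directed to the same back-end server. This is most effective when the back-end servers are caches.

```nginx
upstream backserver {
    server squid1:3128;
    server squid2:3128;
    hash $request_uri;
    hash_method crc32;
}
```

Note that `hash_method` belongs to the third-party module. Since Nginx 1.7.2, a `hash` directive is built into the stock upstream module (for example `hash $request_uri;`), and it does not accept `hash_method`.

Next, write the Supervisor configuration file supervisord.conf: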

```ini
[supervisord]
nodaemon=true
loglevel=info

[program:nginx]
command=/usr/sbin/nginx

[group:tornadoes]
programs=tornado-8000,tornado-8001

[program:tornado-8000]
command=python3.8 /root/mytornado/main.py --port=8000
# Working directory
directory=/root/mytornado
# Restart automatically when the process exits
autorestart=true
# Start the program when supervisord starts
autostart=true
# Log file (stderr is merged into it)
stdout_logfile=/var/log/tornado-8000.log
redirect_stderr=true

[program:tornado-8001]
command=python3.8 /root/mytornado/main.py --port=8001
# Working directory
directory=/root/mytornado
# Restart automatically when the process exits
autorestart=true
# Start the program when supervisord starts
autostart=true
# Log file (stderr is merged into it)
stdout_logfile=/var/log/tornado-8001.log
redirect_stderr=true
```

Supervisor is a tool for monitoring and managing processes on Unix-like systems. It was first released in 2004 and consists of two components, supervisord and supervisorctl. supervisord is the server. It starts the configured programs, responds to commands sent by supervisorctl, and restarts child processes that exit. supervisorctl is the client. It provides a command-line interface for sending commands to supervisord. Commonly used commands include start, stop, remove, update, and so on.
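The core of what supervisord does for `autorestart=true` can be approximated with a small loop: start the child, wait for it to exit, and start it again. This is a simplified sketch with a hypothetical `supervise` function. Real supervisord additionally tracks startup failures (`startsecs`, `startretries`), manages process groups, and serves supervisorctl. The `max_restarts` limit here exists only so that the demo terminates.

```python
import subprocess
import sys
import time
from typing import List


def supervise(cmd: List[str], max_restarts: int = 3, backoff: float = 0.1) -> int:
    """Run cmd and restart it whenever it exits (simplified autorestart=true).

    Returns the number of restarts performed before giving up.
    """
    restarts = 0
    while True:
        proc = subprocess.Popen(cmd)
        code = proc.wait()  # block until the child exits
        print(f"pid {proc.pid} exited with code {code}")
        if restarts >= max_restarts:
            return restarts
        restarts += 1
        time.sleep(backoff)  # brief pause so a crash loop does not spin the CPU


if __name__ == "__main__":
    # A child that exits immediately with an error, standing in for a crashed Tornado worker
    crashing = [sys.executable, "-c", "import sys; sys.exit(1)"]
    print("restarts:", supervise(crashing))
```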

Here we mainly use Supervisor to monitor and manage the Tornado services. The project directory is /root/mytornado/.

Two Tornado processes are configured, listening on ports 8000 and 8001 to match the upstream servers in the Nginx configuration.
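The Supervisor ports and the Nginx upstream ports must match. Scaling to more workers therefore means editing both files in step. A small generator can keep them consistent. `supervisor_programs` and `nginx_upstream` are hypothetical helpers, not part of the project. The generated sections use `stdout_logfile`, which is Supervisor's option for a program's log path.

```python
from typing import List


def supervisor_programs(ports: List[int], workdir: str = "/root/mytornado") -> str:
    """Render the tornadoes group plus one [program:tornado-PORT] section per port."""
    names = ",".join(f"tornado-{p}" for p in ports)
    sections = [f"[group:tornadoes]\nprograms={names}\n"]
    for port in ports:
        sections.append(
            f"[program:tornado-{port}]\n"
            f"command=python3.8 {workdir}/main.py --port={port}\n"
            f"directory={workdir}\n"
            "autorestart=true\n"
            "autostart=true\n"
            f"stdout_logfile=/var/log/tornado-{port}.log\n"
            "redirect_stderr=true\n"
        )
    return "\n".join(sections)


def nginx_upstream(ports: List[int], name: str = "mytornado") -> str:
    """Render the matching Nginx upstream block so both files list the same ports."""
    servers = "".join(f"    server 127.0.0.1:{p};\n" for p in ports)
    return f"upstream {name} {{\n{servers}}}\n"


if __name__ == "__main__":
    ports = [8000, 8001, 8002]
    print(supervisor_programs(ports))
    print(nginx_upstream(ports))
```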

Finally, write the container configuration file Dockerfile:

```dockerfile
FROM yankovg/python3.8.2-ubuntu18.04

RUN sed -i "s@/archive.ubuntu.com/@/mirrors.163.com/@g" /etc/apt/sources.list \
    && rm -rf /var/lib/apt/lists/* \
    && apt-get update --fix-missing -o Acquire::http::No-Cache=True

RUN apt install -y nginx supervisor pngquant

# application
RUN mkdir /root/mytornado
WORKDIR /root/mytornado
COPY main.py /root/mytornado/
COPY requirements.txt /root/mytornado/
RUN pip install -r requirements.txt -i https://mirrors.aliyun.com/pypi/simple/

# nginx
RUN rm /etc/nginx/sites-enabled/default
COPY tornado.conf /etc/nginx/sites-available/
RUN ln -s /etc/nginx/sites-available/tornado.conf /etc/nginx/sites-enabled/tornado.conf
RUN echo "daemon off;" >> /etc/nginx/nginx.conf

# supervisord
RUN mkdir -p /var/log/supervisor
COPY supervisord.conf /etc/supervisor/conf.d/supervisord.conf

# run
CMD ["/usr/bin/supervisord"]
```

The base image is Ubuntu 18.04 with Python 3.8.2 preinstalled, which is relatively small and easy to extend. After switching apt to a mirror source and refreshing the package index, we install Nginx and Supervisor.

Then, create the project directory /root/mytornado inside the container, matching the path used in the Supervisor configuration file.

Next, copy main.py and requirements.txt into the container and run pip install -r requirements.txt against the Aliyun PyPI mirror to install all project dependencies.

Finally, copy tornado.conf and supervisord.conf to their configuration paths. The appended `daemon off;` line keeps Nginx in the foreground so that Supervisor can manage it. supervisord itself runs as the container command, and it in turn starts Nginx and both Tornado processes.

Once these files are written, run the following command in the project root directory to build the image:

```shell
docker build -t 'mytornado' .
```

The first build takes a while because the base image has to be downloaded:

```
liuyue:docker_tornado liuyue$ docker build -t mytornado .
[+] Building 16.2s (19/19) FINISHED
 => [internal] load build definition from Dockerfile 0.1s
 => => transferring dockerfile: 37B 0.0s
 => [internal] load .dockerignore 0.0s
 => => transferring context: 2B 0.0s
 => [internal] load metadata for docker.io/yankovg/python3.8.2-ubuntu18.04:latest 15.9s
 => [internal] load build context 0.0s
 => => transferring context: 132B 0.0s
 => [ 1/14] FROM docker.io/yankovg/python3.8.2-ubuntu18.04@sha256:811ad1ba536c1bd2854a42b5d6655fa9609dce1370a6b6d48087b3073c8f5fce 0.0s
 => CACHED [ 2/14] RUN sed -i "s@/archive.ubuntu.com/@/mirrors.163.com/@g" /etc/apt/sources.list && rm -rf /var/lib/apt/lists/* 0.0s
 => CACHED [ 3/14] RUN apt install -y nginx supervisor pngquant 0.0s
 => CACHED [ 4/14] RUN mkdir /root/mytornado 0.0s
 => CACHED [ 5/14] WORKDIR /root/mytornado 0.0s
 => CACHED [ 6/14] COPY main.py /root/mytornado/ 0.0s
 => CACHED [ 7/14] COPY requirements.txt /root/mytornado/ 0.0s
 => CACHED [ 8/14] RUN pip install -r requirements.txt -i https://mirrors.aliyun.com/pypi/simple/ 0.0s
 => CACHED [ 9/14] RUN rm /etc/nginx/sites-enabled/default 0.0s
 => CACHED [10/14] COPY tornado.conf /etc/nginx/sites-available/ 0.0s
 => CACHED [11/14] RUN ln -s /etc/nginx/sites-available/tornado.conf /etc/nginx/sites-enabled/tornado.conf 0.0s
 => CACHED [12/14] RUN echo "daemon off;" >> /etc/nginx/nginx.conf 0.0s
 => CACHED [13/14] RUN mkdir -p /var/log/supervisor 0.0s
 => CACHED [14/14] COPY supervisord.conf /etc/supervisor/conf.d/supervisord.conf 0.0s
 => exporting to image 0.0s
 => => exporting layers 0.0s
 => => writing image sha256:2dd8f260882873b587225d81f7af98e1995032448ff3d51cd5746244c249f751 0.0s
 => => naming to docker.io/library/mytornado 0.0s
```

After the build succeeds, run the following command to view the image information:

```shell
docker images
```

You can see that the image is smaller than 1 GB:

```
liuyue:docker_tornado liuyue$ docker images
REPOSITORY   TAG      IMAGE ID       CREATED       SIZE
mytornado    latest   2dd8f2608828   4 hours ago   828MB
```

Next, let's start the container:

```shell
docker run -d -p 80:80 mytornado
```

The -p 80:80 option maps port 80 inside the container to port 80 on the host.

Check the running containers:

```shell
docker ps
```

The container is up and running:

```
docker ps
CONTAINER ID   IMAGE       COMMAND                  CREATED         STATUS         PORTS                               NAMES
60e071ba2a36   mytornado   "/usr/bin/supervisord"   6 seconds ago   Up 2 seconds   0.0.0.0:80->80/tcp, :::80->80/tcp   frosty_lamport
```

Now open http://127.0.0.1 in a browser. The service responds normally.
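The browser check can also be scripted, which is handy after a rebuild. This minimal sketch uses only the standard library. It assumes the container is running with `-p 80:80`, as above, so the default URL `http://127.0.0.1/` is an assumption tied to that mapping. `fetch` is a hypothetical helper. `urlopen` raises an exception on connection errors and on HTTP error statuses, so a successful return already means the proxy and at least one Tornado worker answered.

```python
import urllib.request


def fetch(url: str = "http://127.0.0.1/", timeout: float = 5.0) -> str:
    """GET url and return the decoded body; raises on connection errors or HTTP error statuses."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return resp.read().decode("utf-8")
```

While the container is running, `fetch()` should return the `"Hello, Tornado"` text written by `IndexHandler`.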

You can also open a shell inside the container using its container ID:

```
liuyue:docker_tornado liuyue$ docker exec -it 60e071ba2a36 /bin/sh
#
```

Inside the container, we can see all the files of the project:

```
# pwd
/root/mytornado
# ls
main.py  requirements.txt
```

More importantly, Supervisor can be used to manage the running Tornado processes.

View all processes:

```shell
supervisorctl status
```

According to the configuration file, there are three services running in our container:

```
# supervisorctl status
nginx                    RUNNING   pid 10, uptime 0:54:28
tornadoes:tornado-8000   RUNNING   pid 11, uptime 0:54:28
tornadoes:tornado-8001   RUNNING   pid 12, uptime 0:54:28
```
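For scripted health checks, the `supervisorctl status` output shown here can be parsed line by line. The regular expression below is a sketch based only on the "name STATE description" layout visible in this article. It is not a documented format guarantee. `parse_status` is a hypothetical helper. The sample text reuses lines from this article, including the STOPPED state that appears after a worker is stopped.

```python
import re
from typing import Dict, Optional

# "name STATE description", e.g. "nginx RUNNING pid 10, uptime 0:54:28"
_LINE = re.compile(r"^(?P<name>\S+)\s+(?P<state>[A-Z]+)\s*(?P<desc>.*)$")


def parse_status(output: str) -> Dict[str, Dict[str, Optional[object]]]:
    """Turn `supervisorctl status` output into {name: {"state", "pid"}}; pid is None if absent."""
    result: Dict[str, Dict[str, Optional[object]]] = {}
    for line in output.splitlines():
        m = _LINE.match(line.strip())
        if not m:
            continue  # skip blank or unrecognised lines
        pid_match = re.search(r"pid (\d+)", m.group("desc"))
        result[m.group("name")] = {
            "state": m.group("state"),
            "pid": int(pid_match.group(1)) if pid_match else None,
        }
    return result


sample = """\
nginx                    RUNNING   pid 10, uptime 0:54:28
tornadoes:tornado-8000   RUNNING   pid 11, uptime 0:54:28
tornadoes:tornado-8001   STOPPED   Dec 28 08:47 AM
"""
status = parse_status(sample)
not_running = [name for name, info in status.items() if info["state"] != "RUNNING"]
print(not_running)  # ['tornadoes:tornado-8001']
```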

Stop a service by its name:

```
# supervisorctl stop tornadoes:tornado-8001
tornadoes:tornado-8001: stopped
# supervisorctl status
nginx                    RUNNING   pid 10, uptime 0:55:52
tornadoes:tornado-8000   RUNNING   pid 11, uptime 0:55:52
tornadoes:tornado-8001   STOPPED   Dec 28 08:47 AM
```

Start it again:

```
# supervisorctl start tornadoes:tornado-8001
tornadoes:tornado-8001: started
# supervisorctl status
nginx                    RUNNING   pid 10, uptime 0:57:09
tornadoes:tornado-8000   RUNNING   pid 11, uptime 0:57:09
tornadoes:tornado-8001   RUNNING   pid 34, uptime 0:00:08
```

If a service process terminates unexpectedly, Supervisor automatically brings it back up. In the output below, the worker killed as pid 34 comes back as pid 44:

```
# ps -aux | grep python
root   1  0.0  0.1  55744 20956 ?     Ss  07:58 0:01 /usr/bin/python /usr/bin/supervisord
root  11  0.0  0.1 102148 22832 ?     S   07:58 0:00 python3.8 /root/mytornado/main.py --port=8000
root  34  0.0  0.1 102148 22556 ?     S   08:48 0:00 python3.8 /root/mytornado/main.py --port=8001
root  43  0.0  0.0  11468  1060 pts/0 S+  08:51 0:00 grep python
# kill -9 34
# supervisorctl status
nginx                    RUNNING   pid 10, uptime 1:00:27
tornadoes:tornado-8000   RUNNING   pid 11, uptime 1:00:27
tornadoes:tornado-8001   RUNNING   pid 44, uptime 0:00:16
```

If you like, you can also push the built image to Docker Hub, so that you can pull it whenever you need it instead of rebuilding it each time. I have already pushed this image, so you can pull it directly:

```shell
docker pull zcxey2911/mytornado:latest
```

For details on working with Docker Hub, see my earlier article on using Docker Hub on CentOS 7.7 to deploy a standalone Gunicorn + Flask architecture behind an Nginx reverse proxy.

Conclusion: Docker container technology does eliminate the environmental differences between online and offline environments, and it keeps the environment consistent and standardized throughout the service life cycle. Developers build a standard development environment from images. After development, they ship images that encapsulate the complete environment together with the application. Testers and operations staff can then deploy those images directly for testing and release, which greatly simplifies continuous integration, testing, and publishing.

However, we also have to face a current drawback of container technology: performance loss. Docker containers add almost no overhead to CPU and memory usage, but they do affect I/O and interaction with the OS. This overhead takes the form of extra cycles per I/O operation, so small I/O operations suffer much more than large ones. It limits throughput by increasing I/O latency and leaving fewer CPU cycles for useful work. Perhaps this drawback will gradually be resolved as kernel technology improves.

Finally, here is the project address to share with everyone: https://github.com/zcxey2911/...\_Tornado6\_Supervisor\_Python3.8
