1. KNN

Algorithm principle:

- k elements are randomly selected from D as the respective centers of k clusters;

- Calculate the dissimilarity of the remaining elements to the center of k clusters, and classify these elements into the cluster with the lowest dissimilarity;

- According to the clustering results, the respective centers of k clusters are recalculated by taking the arithmetic mean of the respective dimensions of all elements in the cluster.

- Re cluster all elements in D according to the new center.

- Repeat step 4 until the clustering results do not change.

- Output results

# Calculate the Euclidean distance to the center def Distance(train, center, k): dist1 = [] for data in train: diff = np.tile(data, (k,1)) - center squaredDiff = diff ** 2 squaredDist = np.sum(squaredDiff, axis=1) distance = squaredDist ** 0.5 dist1.append(distance) dist = np.array(dist1) return dist # Cluster allocation def classify(train, center, k): dist = Distance(train, center, k) # Calculate the distance from the original center minDistIndices = np.argmin(dist, axis=1) # Clustering newcenter = pd.DataFrame(train).groupby(minDistIndices).mean() # Calculate the arithmetic average of all elements in the cluster newcenter = newcenter.values # Update Center changed = newcenter - center return changed, newcenter def kmeans(train, k): center = random.sample(train, k) print('center: %s' % center) col = ['black', 'black', 'blue', 'blue'] for i in range(len(train)): plt.scatter(train[i][0], train[i][1], marker='o', color=col[i], s=40, label='origin') for j in range(len(center)): plt.scatter(center[j][0], center[j][1], marker='x', color='red', s=50, label='center') plt.show() changed, newcenter = classify(train, center, k) while np.any(changed != 0): # Until the center does not change changed, newcenter = classify(train, newcenter, k) center = sorted(newcenter.tolist()) classes = [] dist = Distance(train, center, k) minDistIndices = np.argmin(dist, axis=1) for i in range(k): classes.append([]) for i, j in enumerate(minDistIndices): # Encrypt() traverses both indexes and elements classes[j].append(train[i]) return center, classes

2. PCA

The main function of principal component analysis is to exchange accuracy for speed, reduce the number of attributes and improve the operation speed of the algorithm

Select attribute indicators as the contribution rate of each indicator to the sum of overall variance

Calculation principle:

1. Decentralization

2. Calculate covariance matrix

3. Solve eigenvalue and eigenvalue vector

4. Sort the eigenvalues from large to small, select the largest k, and then form the corresponding eigenvectors into the eigenvector matrix

5. Inverse transformation to the original vector space

def pca(data, n_dim):

data = data - np.mean(data, axis=0, keepdims=True)

XTX = np.dot(data.T, data)

eig_values, eig_vector = np.linalg.eig(XTX) #Eigenvalue eigenvector

indexs_ = np.argsort(-eig_values)[:n_dim]

picked_eig_vector = eig_vector[:, indexs_]

data_ndim = np.dot(data, picked_eig_vector) #Coordinate representation

return data_ndim, picked_eig_vector3. Drug data testing

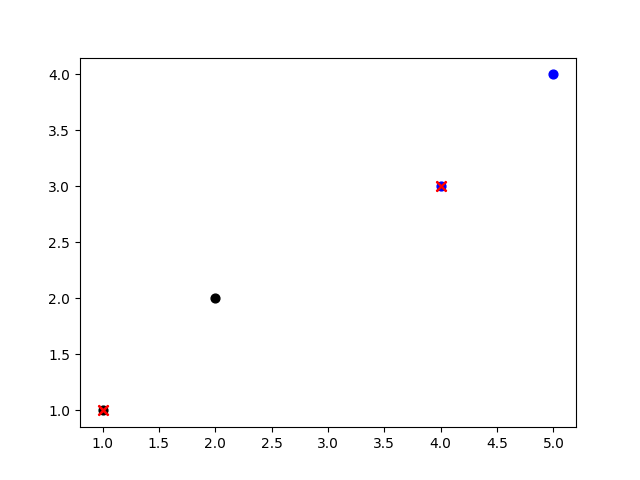

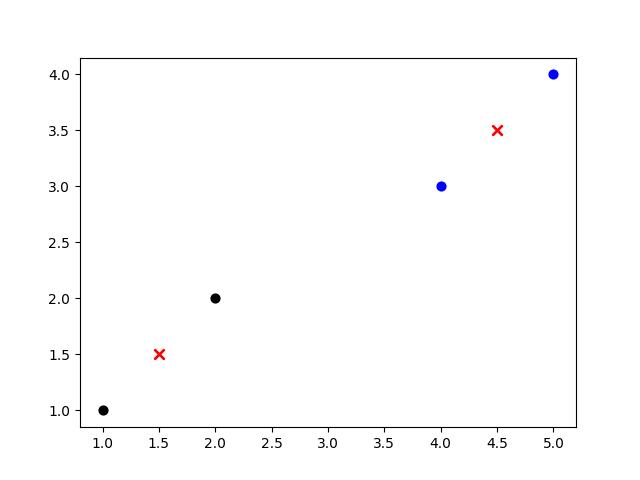

The data is [(1, 1), (2, 2), (4, 3), (5, 4)]

The requirements are divided into two categories

train = [(1, 1),(2, 2),(4, 3),(5,4)]

center, classes = kmeans(train, 2)

print('center: %s' % center)

print('classes: %s' % classes)

col = ['black', 'black', 'blue', 'blue']

for i in range(len(train)):

plt.scatter(train[i][0], train[i][1], marker='o', color=col[i], s=40, label='row')

for j in range(len(center)):

plt.scatter(center[j][0], center[j][1], marker='x', color='red', s=50, label='center')

plt.show()result:

4. Handwritten character recognition

The data set adopts sklearn's own digital data set, which contains 1797 samples with 0-9 numbers, and each sample is an 8 * 8 gray image

Requirement 1: realize PCA+KNN by using sklearn

data = load_digits().data

labels = load_digits().target

pca = PCA(n_components=15)

data_new = pca.fit_transform(data)

Xtrain, Xtest, Ytrain, Ytest = train_test_split(data_new, labels, test_size=0.3, random_state=10)

clf = KNeighborsClassifier(n_neighbors=3, weights='uniform',

algorithm='auto', leaf_size=30,

p=2, metric='minkowski', metric_params=None,

n_jobs=None, )

# train

clf.fit(Xtrain, Ytrain)

print(clf.score(Xtest, Ytest))The number of retained attributes of pca is 15, and the total variance contribution rate has exceeded 98%

result:

0.9833333333333333

Requirement 2: realize handwritten PCA+KNN

# KNN.py

def knn(train, label, k):

n = len(train)

classes = zeros(n)

center = random.sample(train, k)

changed, newcenter = classify(train, center, k)

while np.any(changed != 0):

changed, newcenter = classify(train, newcenter, k)

center = sorted(newcenter.tolist())

dist = Distance(train, center, k) # Call Euler distance

minDistIndices = np.argmin(dist, axis=1)

for i, j in enumerate(minDistIndices):

classes[i] = j # Class sequence number corresponding to each value

dic = {0:0, 1:0, 2:0, 3:0, 4:0, 5:0, 6:0, 7:0, 8:0, 9:0} # Label corresponding to class serial number

vote = []

for i in range(k):

vote.append(zeros(k))

for i in classes:

vote[classes[i]][label[i]] += 1 # Count the number of each label in each class

for i in range(k):

index = vote[i].index(max(vote[i]))

dic[i] = index # Take the most tags in each class as the corresponding tags of the current class

n_wrong = 0

for i in classes:

if label[i] != dic[classes[i]]:

n_wrong += 1 # Statistical classification error

acc = 1 - n_wrong/n

return acc # Return acc

# PCA.py

def PCA_KNN_hand():

data = load_digits().data

print(data.shape)

labels = load_digits().target

data_15d, picked_eig_vector = pca(data, 15)

Xtrain, Xtest, Ytrain, Ytest = train_test_split(data_15d, labels, test_size=0.3, random_state=10)

acc = KNN.knn(list(Xtrain), list(Ytrain), 10)

print(acc)The knn function (in KNN.py) is rewritten because the original knn function only implements clustering, but it does not involve the label of each category. The voting system is adopted here. After counting the number of tags in each category, the tag with the highest number of votes is taken as the tag value of the current category

result:

0.9124900556881463