Preface

This environment is installed using kubeasz. I currently simulate it with nine virtual machines; memory and CPU can be allocated as your situation allows.

Project address: https://github.com/easzlab/kubeasz/

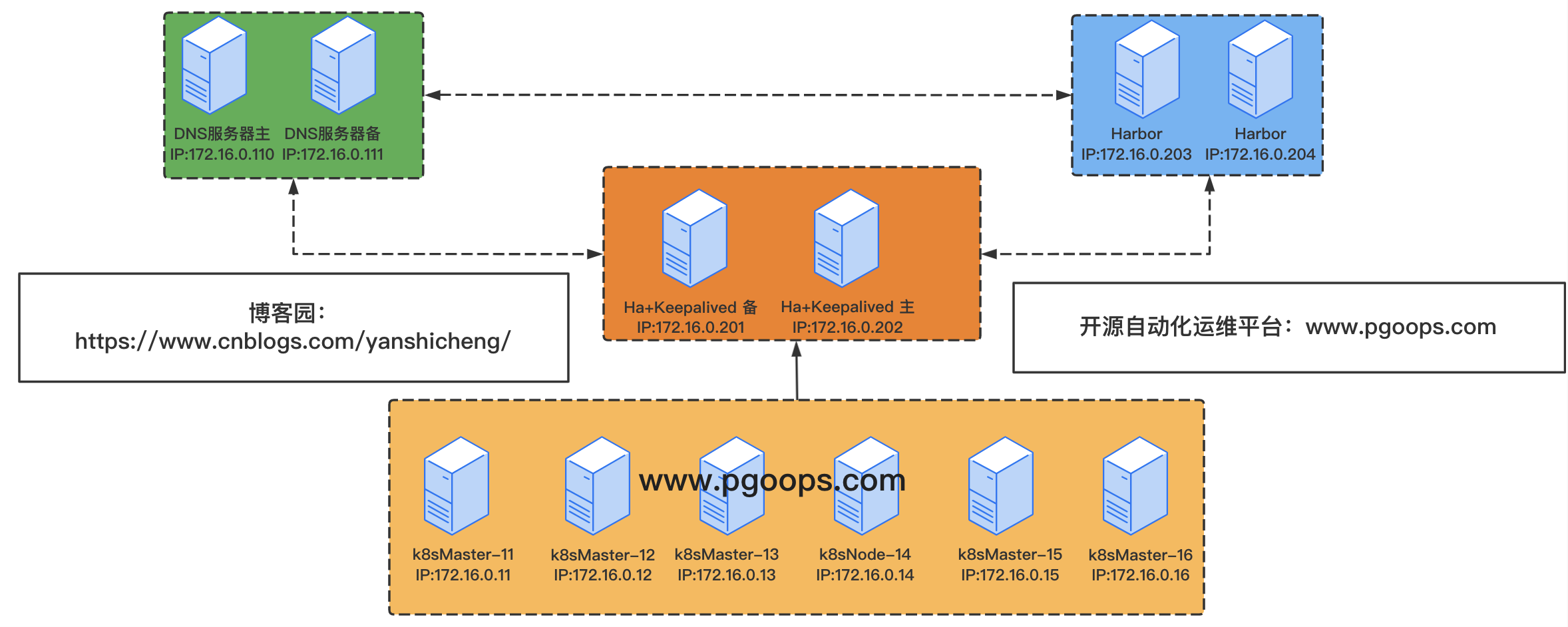

Address and role assignments

| Serial number | Role | IP | Hostname | System | Kernel version | Remarks |
|---|---|---|---|---|---|---|
| 1 | DNS server | 172.16.0.110 | dns-101.pgoops.com | CentOS 7.9.2009 | 3.10.0-1160 | |
| 2 | k8sMaster-11 | 172.16.0.11 | k8smaster-11.pgoops.com | Ubuntu 20.04 | 5.4.0-92-generic | |
| 3 | k8sMaster-12 | 172.16.0.12 | k8smaster-12.pgoops.com | Ubuntu 20.04 | 5.4.0-92-generic | |
| 4 | k8sMaster-13 | 172.16.0.13 | k8smaster-13.pgoops.com | Ubuntu 20.04 | 5.4.0-92-generic | |
| 5 | k8sNode-14 | 172.16.0.14 | k8snode-14.pgoops.com | Ubuntu 20.04 | 5.4.0-92-generic | |
| 6 | k8sNode-15 | 172.16.0.15 | k8snode-15.pgoops.com | Ubuntu 20.04 | 5.4.0-92-generic | |
| 7 | k8sNode-16 | 172.16.0.16 | k8snode-16.pgoops.com | Ubuntu 20.04 | 5.4.0-92-generic | |
| 8 | HAProxy + keepalived | 172.16.0.202 | ha-202.pgoops.com | Ubuntu 20.04 | 5.4.0-92-generic | |
| | Harbor VIP | 172.16.0.180 | | | | |
| | k8s-api-server VIP | 172.16.0.200 | | | | |
| 9 | Harbor | 172.16.0.203 | harbor-203.pgoops.com | Ubuntu 20.04 | 5.4.0-92-generic | |

Experimental topology

Preparation

The following initialization steps are covered in another article of mine:

https://www.cnblogs.com/yanshicheng/p/15746537.html

- Configure the Alibaba Cloud package mirror

- Kernel optimization

- Resource limit optimization

- Install docker

docker only needs to be installed on 172.16.0.11 through 172.16.0.16 and on 172.16.0.203.

Initialize the installation environment

Deploy DNS on 172.16.0.110

The installation and deployment steps are omitted here; see my other blog post.

Installation documentation: https://www.cnblogs.com/yanshicheng/p/12890515.html

The configuration file is as follows:

[root@dns-101 ~]# tail -n 13 /var/named/chroot/etc/pgoops.com.zone

k8smaster-11 A 172.16.0.11

k8smaster-12 A 172.16.0.12

k8smaster-13 A 172.16.0.13

k8snode-14 A 172.16.0.14

k8snode-15 A 172.16.0.15

k8snode-16 A 172.16.0.16

ha-201 A 172.16.0.201

ha-202 A 172.16.0.202

harbor-203 A 172.16.0.203

harbor A 172.16.0.180 # harbor vip

k8s-api-server A 172.16.0.200 # k8s api server

k8s-api-servers A 172.16.0.200

Reload DNS

[root@dns-101 ~]# rndc reload

WARNING: key file (/etc/rndc.key) exists, but using default configuration file (/etc/rndc.conf)

server reload successful

Deploy Harbor on 172.16.0.203

Installation documentation: https://www.cnblogs.com/yanshicheng/p/15756591.html

The configuration file is as follows:

root@harbor-203:~# head -n 20 /usr/local/harbor/harbor.yml

hostname: harbor203.pgoops.com

http:

  port: 80

https:

  port: 443

  certificate: /usr/local/harbor/certs/harbor203.pgoops.com.crt

  private_key: /usr/local/harbor/certs/harbor203.pgoops.com.key

Deploy HAProxy + keepalived on 172.16.0.202

Add the kernel parameter: net.ipv4.ip_nonlocal_bind = 1
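This parameter lets a process such as HAProxy bind to an address that is not currently assigned to the host, for example a VIP that keepalived has not brought up yet. A minimal sketch of making it persistent through a sysctl drop-in file follows; the file name `99-nonlocal-bind.conf` is an assumption, not part of the original setup.

```shell
# Write the kernel parameter to a sysctl drop-in file.
# $1: path of the drop-in file (the name used below is an assumption).
write_nonlocal_bind_conf() {
    printf 'net.ipv4.ip_nonlocal_bind = 1\n' > "$1"
}

# On the HA host, as root:
#   write_nonlocal_bind_conf /etc/sysctl.d/99-nonlocal-bind.conf
#   sysctl --system    # reload all sysctl configuration files
```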

keepalived

Installation:

apt install keepalived -y

The configuration file is as follows:

# Adjust the IP addresses and interface name in the configuration file, then start the service

tee /etc/keepalived/keepalived.conf << 'EOF'

! Configuration File for keepalived

global_defs {

notification_email {

root@localhost

}

router_id Keepalived_202

vrrp_skip_check_adv_addr

vrrp_mcast_group4 224.0.0.18

}

vrrp_instance Harbor {

state BACKUP

interface ens32

virtual_router_id 100

nopreempt

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 123456

}

unicast_src_ip 172.16.0.202 # Local source address for unicast advertisements

unicast_peer {

172.16.0.202 # Peer address that receives the unicast advertisements (normally the other keepalived node)

}

virtual_ipaddress {

172.16.0.180/24 dev ens32 label ens32:2 # VIP used by harbor

172.16.0.200/24 dev ens32 label ens32:1 # VIP used by k8s API server

}

track_interface {

ens32

ens33

}

}

EOF

Start keepalived

systemctl enable --now keepalived
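Once keepalived is running, both VIPs should be assigned to `ens32`. A small sketch for checking this follows; the helper name `has_vip` is hypothetical, and it only inspects the one-line-per-address output of `ip -4 -o addr show`.

```shell
# Succeeds if the IPv4 address in $1 appears in `ip -4 -o addr show` output read from stdin.
has_vip() {
    grep -qF "inet $1/"
}

# On the HA host:
#   ip -4 -o addr show dev ens32 | has_vip 172.16.0.180 && echo "Harbor VIP present"
#   ip -4 -o addr show dev ens32 | has_vip 172.16.0.200 && echo "k8s API VIP present"
```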

haproxy

Install software

apt install haproxy -y

# Append the following to /etc/haproxy/haproxy.cfg

# Harbor proxy

listen harbor

  bind 172.16.0.180:443 # Harbor VIP

  mode tcp

  balance source

  server harbor1 harbor203.pgoops.com:443 weight 10 check inter 3s fall 3 rise 5 # Backend address

  #server harbor2 harbor204.pgoops.com:443 weight 10 check inter 3s fall 3 rise 5

# k8s API proxy

listen k8s

  bind 172.16.0.200:6443 # VIP for the k8s API

  mode tcp

  # k8s master addresses

  server k8s1 172.16.0.11:6443 weight 10 check inter 3s fall 3 rise 5

  server k8s2 172.16.0.12:6443 weight 10 check inter 3s fall 3 rise 5

  server k8s3 172.16.0.13:6443 weight 10 check inter 3s fall 3 rise 5

Start haproxy

systemctl enable --now haproxy
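Before relying on the proxy, it can help to check the configuration syntax and confirm that HAProxy is listening on both VIP sockets. The sketch below is one way to do that; the helper name `is_listening` is hypothetical, and it assumes the usual `ss -lnt` column layout in which the fourth field is the local address and port.

```shell
# Succeeds if `ss -lnt` output on stdin has a listener on exactly the address:port in $1.
is_listening() {
    awk -v want="$1" '$4 == want { found = 1 } END { exit !found }'
}

# On the HA host:
#   haproxy -c -f /etc/haproxy/haproxy.cfg     # check the configuration file only
#   ss -lnt | is_listening 172.16.0.180:443  && echo "Harbor listener up"
#   ss -lnt | is_listening 172.16.0.200:6443 && echo "k8s API listener up"
```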

Deploy k8s

For deploying k8s clusters with kubeasz, I recommend reading the official documentation directly.

Key points:

- Whichever machine kubeasz is deployed on needs ansible installed, and it needs passwordless (key-based) SSH access to all k8s master and node machines. Those steps are skipped here.
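The passwordless SSH setup skipped above could look roughly like the following sketch. The host list is an assumption taken from the role table (masters .11 to .13, nodes .14 to .16), the key path is the OpenSSH default, and `distribute_key` with its `DRY_RUN` switch is a hypothetical helper.

```shell
# Hosts from the role table: masters .11-.13 and nodes .14-.16 (assumption; adjust as needed).
HOSTS="172.16.0.11 172.16.0.12 172.16.0.13 172.16.0.14 172.16.0.15 172.16.0.16"

# Copy the public key to every host, stopping at the first failure.
# With DRY_RUN=1 the commands are only printed.
distribute_key() {
    for host in $HOSTS; do
        ${DRY_RUN:+echo} ssh-copy-id -i "$HOME/.ssh/id_rsa.pub" "root@$host" || return 1
    done
}

# On the deployment machine:
#   ssh-keygen -t rsa -b 4096 -N '' -f "$HOME/.ssh/id_rsa"   # key pair without passphrase
#   distribute_key
```

`ssh-copy-id` prompts for each host's root password once; afterwards ansible can log in with the key.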

Download script

export release=3.1.1

wget https://github.com/easzlab/kubeasz/releases/download/${release}/ezdown

chmod +x ./ezdown

Use the script to download the required packages

Edit the script to change component versions such as docker and k8s.

Downloaded files are stored in /etc/kubeasz/

cat ezdown

DOCKER_VER=20.10.8

KUBEASZ_VER=3.1.1

K8S_BIN_VER=v1.22.2

EXT_BIN_VER=0.9.5

SYS_PKG_VER=0.4.1

HARBOR_VER=v2.1.3

REGISTRY_MIRROR=CN

....

# Download

./ezdown -D

Create cluster

root@k8smaster-11:~# cd /etc/kubeasz/

### Create a cluster deployment instance

root@k8smaster-11:/etc/kubeasz# ./ezctl new k8s-cluster1

Modify the cluster hosts file

root@k8smaster-11:/etc/kubeasz/clusters/k8s-cluster1# pwd

/etc/kubeasz/clusters/k8s-cluster1

root@k8smaster-11:/etc/kubeasz/clusters/k8s-cluster1# cat hosts

# etcd node host

[etcd]

172.16.0.11

172.16.0.12

172.16.0.13

# K8s master node host

[kube_master]

172.16.0.11

172.16.0.12

# K8s node host

[kube_node]

172.16.0.14

172.16.0.15

# Harbor was installed manually, so it is not installed here

# 'NEW_INSTALL': 'true' to install a harbor server; 'false' to integrate with existed one

[harbor]

#172.16.0.8 NEW_INSTALL=false

# Load balancer host. The lb is not installed by the script, but the values below are used in the generated configuration, which points at the VIP

# EX_APISERVER_VIP: use the IP address if the VIP has no DNS name

# EX_APISERVER_PORT: the port exposed by the HA proxy, not the k8s API port itself

[ex_lb]

172.16.0.202 LB_ROLE=master EX_APISERVER_VIP=k8s-api-servers.pgoops.com EX_APISERVER_PORT=6443

#172.16.0.7 LB_ROLE=master EX_APISERVER_VIP=172.16.0.250 EX_APISERVER_PORT=8443

# Time synchronization. I already have a time synchronization server, so I won't do it here

[chrony]

#172.16.0.1

[all:vars]

# --------- Main Variables ---------------

# apiservers port

SECURE_PORT="6443"

# Container runtime for the cluster: docker, containerd

CONTAINER_RUNTIME="docker"

# Cluster network components: calico, flannel, kube-router, cilium, kube-ovn

CLUSTER_NETWORK="calico"

# Service proxy mode of kube-proxy: 'iptables' or 'ipvs'

PROXY_MODE="ipvs"

# K8S Service address segment

SERVICE_CIDR="10.100.0.0/16"

# Cluster container address segment

CLUSTER_CIDR="172.100.0.0/16"

# NodePort port range

NODE_PORT_RANGE="30000-40000"

# Cluster DNS Domain

CLUSTER_DNS_DOMAIN="cluster.local"

# -------- Additional Variables (don't change the default value right now) ---

# Binary command storage path

bin_dir="/usr/bin"

# Deploy Directory (kubeasz workspace)

base_dir="/etc/kubeasz"

# Directory for a specific cluster

cluster_dir="{{ base_dir }}/clusters/k8s-cluster1"

# CA and other components cert/key Directory

ca_dir="/etc/kubernetes/ssl"

Modify cluster config file

Main settings changed:

- ETCD_DATA_DIR: etcd storage directory

- MASTER_CERT_HOSTS: extra IPs and domain names added to the master node certificate

- MAX_PODS: maximum number of pods per node

- dns_install: automatic CoreDNS installation turned off; installed manually

- dashboard_install: automatic dashboard installation turned off; installed manually

- ingress_install: automatic ingress installation turned off; installed manually
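MAX_PODS is worth comparing with NODE_CIDR_LEN from the same file. As a rough sketch, and assuming the per-node pod subnet is what bounds pod IP addresses (this depends on the network plugin and its IPAM), a subnet with prefix length N holds 2^(32-N) addresses. The helper name `subnet_size` is hypothetical.

```shell
# Number of IPv4 addresses in a subnet with the given prefix length.
subnet_size() {
    echo $(( 1 << (32 - $1) ))
}

subnet_size 24   # prints 256, which is below MAX_PODS: 400
```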

root@k8smaster-11:/etc/kubeasz/clusters/k8s-cluster1# cat config.yml

############################

# prepare

############################

# Optional offline installation system software package (offline|online)

INSTALL_SOURCE: "online"

# Optional OS security hardening: github.com/dev-sec/ansible-collection-hardening

OS_HARDEN: false

# Set the time source server [important: the machine time in the cluster must be synchronized]

ntp_servers:

- "ntp1.aliyun.com"

- "time1.cloud.tencent.com"

- "0.cn.pool.ntp.org"

# Set the network segments that allow internal time synchronization, such as "10.0.0.0/8". All are allowed by default

local_network: "0.0.0.0/0"

############################

# role:deploy

############################

# default: ca will expire in 100 years

# default: certs issued by the ca will expire in 50 years

# Validity period of certificate issuance

CA_EXPIRY: "876000h"

CERT_EXPIRY: "438000h"

# kubeconfig configuration parameters

CLUSTER_NAME: "cluster1"

CONTEXT_NAME: "context-{{ CLUSTER_NAME }}"

############################

# role:etcd

############################

# Setting different wal directories can avoid disk io competition and improve performance

ETCD_DATA_DIR: "/data/etcd"

ETCD_WAL_DIR: ""

############################

# role:runtime [containerd,docker]

############################

# ------------------------------------------- containerd

# [.] enable container registry mirrors

ENABLE_MIRROR_REGISTRY: true

# [containerd] base container image

SANDBOX_IMAGE: "easzlab/pause-amd64:3.5"

# [containerd] container persistent storage directory

CONTAINERD_STORAGE_DIR: "/var/lib/containerd"

# ------------------------------------------- docker

# [docker] container storage directory

DOCKER_STORAGE_DIR: "/var/lib/docker"

# [docker] enable the RESTful remote API

ENABLE_REMOTE_API: false

# [docker] trusted HTTP repository

INSECURE_REG: '["127.0.0.1/8", "harbor.pgoops.com"]'

############################

# role:kube-master

############################

# k8s cluster master node certificate configuration, you can add multiple ip and domain names (such as adding public ip and domain names)

MASTER_CERT_HOSTS:

- "10.1.1.1"

- "k8s.test.io"

- "www.pgoops.com"

- "test.pgoops.com"

- "test1.pgoops.com"

#- "www.test.com"

# Mask length of the pod subnet on each node (determines the maximum number of pod IP addresses each node can allocate)

# If flannel uses the --kube-subnet-mgr parameter, it reads this setting and assigns a pod subnet to each node

# https://github.com/coreos/flannel/issues/847

NODE_CIDR_LEN: 24

############################

# role:kube-node

############################

# Kubelet root directory

KUBELET_ROOT_DIR: "/var/lib/kubelet"

# Maximum number of pods per node

MAX_PODS: 400

# Configure the resources reserved for kube components (kubelet, kube-proxy, dockerd, etc.)

# See templates/kubelet-config.yaml.j2 for the values

KUBE_RESERVED_ENABLED: "no"

# Upstream k8s advises against enabling system-reserved casually, unless long-term monitoring has shown how much the system actually uses;

# the reservation also needs to grow as system uptime increases. See templates/kubelet-config.yaml.j2 for the values

# The system reservation values assume a 4c/8g virtual machine with a minimal set of system services. On high-performance physical machines the reservation can be increased

# In addition, apiserver and other components briefly use a lot of resources during cluster installation, so reserving at least 1g of memory is recommended

SYS_RESERVED_ENABLED: "no"

# haproxy balance mode

BALANCE_ALG: "roundrobin"

############################

# role:network [flannel,calico,cilium,kube-ovn,kube-router]

############################

# ------------------------------------------- flannel

# [flannel] set the flannel backend: "host-gw", "vxlan", etc.

FLANNEL_BACKEND: "vxlan"

DIRECT_ROUTING: false

# [flannel] flanneld_image: "quay.io/coreos/flannel:v0.10.0-amd64"

flannelVer: "v0.13.0-amd64"

flanneld_image: "easzlab/flannel:{{ flannelVer }}"

# [flannel] offline image tar package

flannel_offline: "flannel_{{ flannelVer }}.tar"

# ------------------------------------------- calico

# [calico] setting CALICO_IPV4POOL_IPIP="off" can improve network performance; see docs/setup/calico.md for the prerequisites

CALICO_IPV4POOL_IPIP: "Always"

# [calico] set the host IP used by calico node. bgp neighbors are established through this address, which can be specified manually or found automatically

IP_AUTODETECTION_METHOD: "can-reach={{ groups['kube_master'][0] }}"

# [calico] set calico network backend: brid, vxlan, none

CALICO_NETWORKING_BACKEND: "brid"

# [calico] update supports calico version: [v3.3.x] [v3.4.x] [v3.8.x] [v3.15.x]

calico_ver: "v3.19.2"

# [calico]calico major version

calico_ver_main: "{{ calico_ver.split('.')[0] }}.{{ calico_ver.split('.')[1] }}"

# [calico] offline image tar package

calico_offline: "calico_{{ calico_ver }}.tar"

# ------------------------------------------- cilium

# [cilium] number of etcd cluster nodes created by CILIUM_ETCD_OPERATOR: 1,3,5,7

ETCD_CLUSTER_SIZE: 1

# [cilium] image version

cilium_ver: "v1.4.1"

# [cilium] offline image tar package

cilium_offline: "cilium_{{ cilium_ver }}.tar"

# ------------------------------------------- kube-ovn

# [kube-ovn] select the OVN DB and OVN Control Plane node; defaults to the first master node

OVN_DB_NODE: "{{ groups['kube_master'][0] }}"

# [kube-ovn] offline image tar package

kube_ovn_ver: "v1.5.3"

kube_ovn_offline: "kube_ovn_{{ kube_ovn_ver }}.tar"

# ------------------------------------------- kube-router

# [kube-router] public clouds impose restrictions and generally need ipinip always on; in your own environment this can be set to "subnet"

OVERLAY_TYPE: "full"

# [kube-router] NetworkPolicy support switch

FIREWALL_ENABLE: "true"

# [kube-router] kube-router image version

kube_router_ver: "v0.3.1"

busybox_ver: "1.28.4"

# [kube-router] kube-router offline image tar package

kuberouter_offline: "kube-router_{{ kube_router_ver }}.tar"

busybox_offline: "busybox_{{ busybox_ver }}.tar"

############################

# role:cluster-addon

############################

# coredns automatic installation

dns_install: "no"

corednsVer: "1.8.4"

ENABLE_LOCAL_DNS_CACHE: true

dnsNodeCacheVer: "1.17.0"

# Set local dns cache address

LOCAL_DNS_CACHE: "169.254.20.10"

# Automatic installation of metric server

metricsserver_install: "no"

metricsVer: "v0.5.0"

# dashboard automatic installation

dashboard_install: "no"

dashboardVer: "v2.3.1"

dashboardMetricsScraperVer: "v1.0.6"

# ingress auto install

ingress_install: "no"

ingress_backend: "traefik"

traefik_chart_ver: "9.12.3"

# prometheus automatic installation

prom_install: "no"

prom_namespace: "monitor"

prom_chart_ver: "12.10.6"

# NFS provisioner automatic installation

nfs_provisioner_install: "no"

nfs_provisioner_namespace: "kube-system"

nfs_provisioner_ver: "v4.0.1"

nfs_storage_class: "managed-nfs-storage"

nfs_server: "192.168.1.10"

nfs_path: "/data/nfs"

############################

# role:harbor

############################

# harbor version, full version number

HARBOR_VER: "v2.1.3"

HARBOR_DOMAIN: "harbor.yourdomain.com"

HARBOR_TLS_PORT: 8443

# if set 'false', you need to put certs named harbor.pem and harbor-key.pem in directory 'down'

HARBOR_SELF_SIGNED_CERT: true

# install extra component

HARBOR_WITH_NOTARY: false

HARBOR_WITH_TRIVY: false

HARBOR_WITH_CLAIR: false

HARBOR_WITH_CHARTMUSEUM: true
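The CA_EXPIRY and CERT_EXPIRY values in config.yml are given in hours, and the comments beside them state 100 and 50 years. A quick arithmetic check, assuming 365-day years (the helper name `hours_to_years` is hypothetical):

```shell
# Convert a duration in hours to whole 365-day years.
hours_to_years() {
    echo $(( $1 / 24 / 365 ))
}

hours_to_years 876000   # CA_EXPIRY   -> prints 100
hours_to_years 438000   # CERT_EXPIRY -> prints 50
```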

Install cluster

Installation command:

root@k8smaster-12:/etc/kubeasz# ./ezctl setup --help

Usage: ezctl setup <cluster> <step>

available steps:

01 prepare system initialization (required)

02 etcd install etcd (required unless etcd was installed manually)

03 container-runtime install the container runtime (skipped here because it was installed manually)

04 kube-master install k8s-master (required)

05 kube-node install k8s-node (required)

06 network install network components (required)

07 cluster-addon to setup other useful plugins

90 all to run 01~07 all at once

10 ex-lb install lb (not needed here)

11 harbor install harbor (not needed here)

Because the lb was installed manually, the corresponding host groups need to be removed from the system initialization playbook

vim playbooks/01.prepare.yml

# [optional] to synchronize system time of nodes with 'chrony'

- hosts:

  - kube_master

  - kube_node

  - etcd

  - ex_lb # Delete

  - chrony # Delete

Run the cluster installation

#### 01 System initialization

root@k8smaster-11:/etc/kubeasz# ./ezctl setup k8s-cluster1 01

### Deploy etcd

root@k8smaster-11:/etc/kubeasz# ./ezctl setup k8s-cluster1 02

### Deploy k8s master

root@k8smaster-11:/etc/kubeasz# ./ezctl setup k8s-cluster1 04

### Deploy k8s node

root@k8smaster-11:/etc/kubeasz# ./ezctl setup k8s-cluster1 05

### Deploy network

root@k8smaster-11:/etc/kubeasz# ./ezctl setup k8s-cluster1 06
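The same steps can also be run in one pass that stops at the first failure. This is only a sketch: `run_steps` and its `DRY_RUN` switch are hypothetical helpers, and step 03 is left out because docker was installed manually in this walkthrough.

```shell
# Steps used in this walkthrough; 03 (container runtime) is skipped on purpose.
STEPS="01 02 04 05 06"

# Run each step in order for the cluster named in $1 and stop at the first failure.
# With DRY_RUN=1 the commands are only printed.
run_steps() {
    cluster="$1"
    for step in $STEPS; do
        ${DRY_RUN:+echo} ./ezctl setup "$cluster" "$step" || return 1
    done
}

# From /etc/kubeasz:
#   run_steps k8s-cluster1
```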

Cluster validation

etcd cluster verification

root@k8smaster-11:/etc/kubeasz# export NODE_IPS="172.16.0.11 172.16.0.12 172.16.0.13"

root@k8smaster-11:/etc/kubeasz# for ip in ${NODE_IPS}; do ETCDCTL_API=3 /usr/bin/etcdctl --endpoints=https://${ip}:2379 --cacert=/etc/kubernetes/ssl/ca.pem --cert=/etc/kubernetes/ssl/etcd.pem --key=/etc/kubernetes/ssl/etcd-key.pem endpoint health; done

https://172.16.0.11:2379 is healthy: successfully committed proposal: took = 15.050165ms

https://172.16.0.12:2379 is healthy: successfully committed proposal: took = 13.827469ms

https://172.16.0.13:2379 is healthy: successfully committed proposal: took = 12.144873ms
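For a scripted check, the health lines can be counted instead of read by eye. A minimal sketch, with a hypothetical helper name `count_healthy`; it only matches the "is healthy" text shown in the output above.

```shell
# Count endpoints reported healthy in `etcdctl endpoint health` output read from stdin.
count_healthy() {
    grep -c 'is healthy'
}

# With NODE_IPS set as above, wrap the same loop and redirect stderr as well,
# in case the etcdctl version in use prints the result there:
#   { for ip in ${NODE_IPS}; do ETCDCTL_API=3 /usr/bin/etcdctl --endpoints=https://${ip}:2379 \
#       --cacert=/etc/kubernetes/ssl/ca.pem --cert=/etc/kubernetes/ssl/etcd.pem \
#       --key=/etc/kubernetes/ssl/etcd-key.pem endpoint health; done; } 2>&1 | count_healthy
```

A result equal to the number of etcd nodes (3 here) means every member answered.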

Calico network verification

root@k8smaster-11:/etc/kubeasz# calicoctl node status

Calico process is running.

IPv4 BGP status

+--------------+-------------------+-------+----------+-------------+
| PEER ADDRESS | PEER TYPE         | STATE | SINCE    | INFO        |
+--------------+-------------------+-------+----------+-------------+
| 172.16.0.12  | node-to-node mesh | up    | 13:08:39 | Established |
| 172.16.0.14  | node-to-node mesh | up    | 13:08:39 | Established |
| 172.16.0.15  | node-to-node mesh | up    | 13:08:41 | Established |
+--------------+-------------------+-------+----------+-------------+

Cluster validation

root@k8smaster-11:/etc/kubeasz# kubectl get pods -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system calico-kube-controllers-59df8b6856-wbwn8 1/1 Running 0 75s

kube-system calico-node-5tqdb 1/1 Running 0 75s

kube-system calico-node-8gwb6 1/1 Running 0 75s

kube-system calico-node-v9tlq 1/1 Running 0 75s

kube-system calico-node-w6j4k 1/1 Running 0 75s

#### Run a container to test the cluster

root@k8smaster-11:/etc/kubeasz# kubectl run net-test3 --image=harbor.pgoops.com/base/alpine:v1 sleep 60000

root@k8smaster-11:/etc/kubeasz# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

net-test1 1/1 Running 0 36s 172.100.248.193 172.16.0.15 <none> <none>

net-test2 1/1 Running 0 30s 172.100.248.194 172.16.0.15 <none> <none>

net-test3 1/1 Running 0 5s 172.100.229.129 172.16.0.14 <none> <none>

#### Test the container's internal and external network connectivity

root@k8smaster-11:/etc/kubeasz# kubectl exec -it net-test3 sh

kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.

/ # ping 172.100.248.193

PING 172.100.248.193 (172.100.248.193): 56 data bytes

64 bytes from 172.100.248.193: seq=0 ttl=62 time=0.992 ms

64 bytes from 172.100.248.193: seq=1 ttl=62 time=0.792 ms

^C

--- 172.100.248.193 ping statistics ---

2 packets transmitted, 2 packets received, 0% packet loss

round-trip min/avg/max = 0.792/0.892/0.992 ms

/ # ping 114.114.114.114

PING 114.114.114.114 (114.114.114.114): 56 data bytes

64 bytes from 114.114.114.114: seq=0 ttl=127 time=32.942 ms

64 bytes from 114.114.114.114: seq=1 ttl=127 time=33.414 ms

^C

--- 114.114.114.114 ping statistics ---

2 packets transmitted, 2 packets received, 0% packet loss

round-trip min/avg/max = 32.942/33.178/33.414 ms
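On a larger cluster it is easier to list only the pods that are not healthy. A small sketch, assuming the six-column layout of `kubectl get pods -A` shown above (STATUS is the fourth field); `not_running` is a hypothetical helper name.

```shell
# Print "namespace/name status" for pods whose STATUS is neither Running nor Completed.
# Expects `kubectl get pods -A --no-headers` output on stdin.
not_running() {
    awk '$4 != "Running" && $4 != "Completed" { print $1 "/" $2, $4 }'
}

# On a master node:
#   kubectl get pods -A --no-headers | not_running
```

Empty output means every pod is Running or Completed.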