Hello, I'm Brother Manon Feige. Thank you for reading this article.

Preface

The previous article, "Quick start to the Scrapy framework, taking Qiushibaike (the Embarrassing Encyclopedia) as an example" [advanced introduction to Python crawlers] (16), briefly introduced how to crawl with the Scrapy framework. However, it only covered crawling a single page; saving the data and crawling multiple pages were left out. This article therefore covers data storage and multi-page crawling.

Return data

    import scrapy


    class SpiderQsbkSpider(scrapy.Spider):
        # Name that identifies the spider
        name = 'spider_qsbk'
        # Domains the spider is allowed to crawl
        allowed_domains = ['qiushibaike.com']
        # Start page
        start_urls = ['https://www.qiushibaike.com/text/page/1/']

        def parse(self, response):
            print(type(response))
            # SelectorList
            div_list = response.xpath('//div[@class="article block untagged mb15 typs_hot"]')
            print(type(div_list))
            for div in div_list:
                # Selector
                author = div.xpath('.//h2/text()').get().strip()
                print(author)
                content = div.xpath('.//div[@class="content"]//text()').getall()
                content = "".join(content).strip()
                duanzi = {'author': author, 'content': content}
                yield duanzi

The key part is the following two lines. They put the author and content into a dictionary and hand it back with yield, which makes parse a generator.

    duanzi = {'author': author, 'content': content}
    yield duanzi

This is roughly equivalent to collecting every item in a list and returning the list at the end:

    items = []
    items.append(duanzi)
    return items
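To make the comparison concrete, here is a minimal sketch in plain Python, without Scrapy. The function names parse_with_yield and parse_with_list and the sample rows are made up for illustration. Both versions produce the same items; the generator simply hands them over one at a time instead of building the whole list first.

```python
# Hypothetical sample data standing in for the parsed page.
ROWS = [("alice", "first joke"), ("bob", "second joke")]


def parse_with_yield(rows):
    """Yield one dictionary per row, one at a time."""
    for author, content in rows:
        yield {"author": author, "content": content}


def parse_with_list(rows):
    """Collect every dictionary in a list and return it at the end."""
    items = []
    for author, content in rows:
        items.append({"author": author, "content": content})
    return items


# Consuming the generator gives the same items as the list version.
same = list(parse_with_yield(ROWS)) == parse_with_list(ROWS)
print(same)
```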

Data storage

In the Scrapy framework, the code that stores data lives in pipelines.py; that is, the pipelines receive the items returned by the spider.

QsbkPipeline

The QsbkPipeline class has three methods:

- open_spider(self, spider): executed when the crawler is opened.

- process_item(self, item, spider): called when an item is passed by the crawler.

- close_spider(self, spider): called when the crawler is closed.

To activate the pipeline, set ITEM_PIPELINES in settings.py.
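As a sketch, the entry in settings.py could look like the following. The module path qsbk.pipelines is an assumption, since the project name is not given in this article; replace it with your own project package. The number (0 to 1000 by convention) sets the order in which pipelines run, lower values first.

```python
# settings.py (fragment)
# "qsbk" is a hypothetical project package name; use your own.
ITEM_PIPELINES = {
    "qsbk.pipelines.QsbkPipeline": 300,
}
```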

    import json


    class QsbkPipeline:
        def __init__(self):
            # Open the output file
            self.fp = open('duanzi.json', 'w', encoding='utf-8')

        def open_spider(self, spider):
            print('The spider is starting.....')

        def process_item(self, item, spider):
            item_json = json.dumps(item, ensure_ascii=False)
            self.fp.write(item_json + '\n')
            return item

        def close_spider(self, spider):
            self.fp.close()
            print('The spider has finished.....')

The constructor opens a duanzi.json file, which stores the items passed by the spider. The json.dumps method converts a dictionary into a JSON string. self.fp.write(item_json + '\n') appends a newline after each JSON string that is written.
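The serialization step can be tried on its own, without running a crawl. The sketch below uses an in-memory io.StringIO buffer as a stand-in for the duanzi.json file, and the two sample items are made up for illustration.

```python
import io
import json

# An in-memory text buffer stands in for the duanzi.json file.
fp = io.StringIO()

# Hypothetical items; the non-ASCII name shows the effect of ensure_ascii=False.
items = [
    {"author": "张三", "content": "first joke"},
    {"author": "bob", "content": "second joke"},
]

for item in items:
    # ensure_ascii=False keeps Chinese characters readable
    # instead of turning them into \uXXXX escapes.
    item_json = json.dumps(item, ensure_ascii=False)
    fp.write(item_json + "\n")

# One JSON string per line.
lines = fp.getvalue().splitlines()
print(lines[0])
```

With the default ensure_ascii=True, the same author name would be written as escape sequences, which is harder to read when inspecting the file by hand.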

Data transmission optimization - Item as data model

The item returned by the spider above is a dictionary, which is not the approach Scrapy recommends. The recommended approach is to encapsulate the data in a scrapy.Item subclass. The item class in this example is QsbkItem.

    import scrapy


    class QsbkItem(scrapy.Item):
        # define the fields for your item here like:
        # name = scrapy.Field()
        author = scrapy.Field()
        content = scrapy.Field()

Then, in the spider SpiderQsbkSpider, the extracted data is wrapped in a QsbkItem object and yielded.

    item = QsbkItem(author=author, content=content)
    # Return as generator
    yield item

The advantage of this approach is that it standardizes the data structure of the item, and the code also looks more concise.

In QsbkPipeline, only a small change is needed when serializing the data: item_json = json.dumps(dict(item), ensure_ascii=False).

Data storage optimization - use Scrapy's exporter classes

Using the JsonItemExporter class

First, use the JsonItemExporter class. The exporter writes bytes, so the file passed to it must be opened in binary mode, here wb. The encoding is set to utf-8, and ensure_ascii=False keeps non-ASCII characters from being escaped.

    from scrapy.exporters import JsonItemExporter


    class QsbkPipeline:
        def __init__(self):
            # Open the output file in binary mode
            self.fp = open('duanzi.json', 'wb')
            self.exporter = JsonItemExporter(self.fp, encoding='utf-8', ensure_ascii=False)
            self.exporter.start_exporting()

        def open_spider(self, spider):
            print('The spider is starting.....')

        def process_item(self, item, spider):
            self.exporter.export_item(item)
            return item

        def close_spider(self, spider):
            self.exporter.finish_exporting()
            self.fp.close()
            print('The spider has finished.....')

self.exporter.start_exporting() must be called before any item is exported, and self.exporter.finish_exporting() must be called after the spider finishes to complete the export.

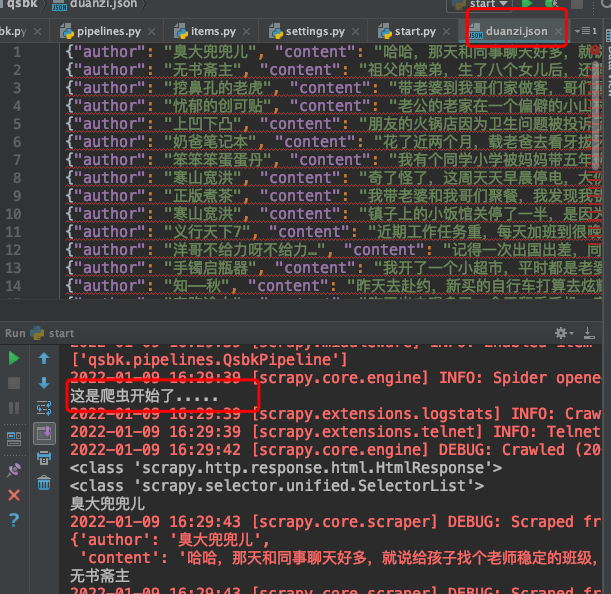

After running, the result is:

This exporter writes every item into one JSON array: start_exporting opens the array, each call to export_item adds an item, and finish_exporting closes it. The file is a valid JSON document only after finish_exporting has run, and with a lot of data, reading such a file back usually means parsing the whole array at once, which consumes more memory. There is a better way.

Using the JsonLinesItemExporter class

JsonLinesItemExporter writes each item to the file as its own line every time export_item is called. There is no need to call the start_exporting and finish_exporting methods.

    from scrapy.exporters import JsonLinesItemExporter


    class QsbkPipeline:
        def __init__(self):
            # Open the output file in binary mode
            self.fp = open('duanzi.json', 'wb')
            self.exporter = JsonLinesItemExporter(self.fp, encoding='utf-8', ensure_ascii=False)

        def open_spider(self, spider):
            print('The spider is starting.....')

        def process_item(self, item, spider):
            self.exporter.export_item(item)
            return item

        def close_spider(self, spider):
            self.fp.close()
            print('The spider has finished.....')

Summary

When saving JSON data, the JsonItemExporter and JsonLinesItemExporter classes make the job easier.

- JsonItemExporter: writes all items into a single JSON array. The advantage is that the finished file is one valid JSON document. The disadvantage is that the file is only valid once finish_exporting has been called, and with a large amount of data, loading it back usually means parsing the whole array at once, which consumes more memory.

- JsonLinesItemExporter: writes one item per line each time export_item is called. The disadvantage is that each dictionary is on its own line, so the file as a whole is not a valid JSON document. The advantage is that every item is written out as soon as it is processed, so memory use stays low and the data is relatively safe.
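The difference between the two output formats can be seen without Scrapy. The sketch below uses only the standard json module as a stand-in for the two exporters (it does not call them), and the sample items are made up for illustration.

```python
import json

# Hypothetical items standing in for what the spider yields.
items = [
    {"author": "alice", "content": "first joke"},
    {"author": "bob", "content": "second joke"},
]

# JSON array format: one document that contains all items.
array_text = json.dumps(items, ensure_ascii=False)

# JSON Lines format: one JSON object per line.
lines_text = "".join(
    json.dumps(item, ensure_ascii=False) + "\n" for item in items
)

# The array has to be parsed as a whole.
from_array = json.loads(array_text)

# JSON Lines can be parsed one line at a time, so a reader
# never needs more than a single item in memory.
from_lines = [json.loads(line) for line in lines_text.splitlines()]

print(from_array == from_lines)
```

Both formats carry the same data. The choice mainly affects how the file is read back later: as one document, or line by line.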

Of course, the Scrapy framework also provides us with XmlItemExporter, CsvItemExporter and other export classes.

Crawl multiple pages

Crawling and storing the data of a single page is done; the next step is to crawl multiple pages. To do this, the spider needs to find the link to the next page on the current page and repeat until it reaches the last page.

    import scrapy

    # QsbkItem is the item class defined earlier in the project's items.py


    class SpiderQsbkSpider(scrapy.Spider):
        base_domain = 'https://www.qiushibaike.com'
        # Name that identifies the spider
        name = 'spider_qsbk'
        # Domains the spider is allowed to crawl
        allowed_domains = ['qiushibaike.com']
        # Start page
        start_urls = ['https://www.qiushibaike.com/text/page/1/']

        def parse(self, response):
            print(type(response))
            # SelectorList
            div_list = response.xpath('//div[@class="article block untagged mb15 typs_hot"]')
            print(type(div_list))
            for div in div_list:
                # Selector
                author = div.xpath('.//h2/text()').get().strip()
                print(author)
                content = div.xpath('.//div[@class="content"]//text()').getall()
                content = "".join(content).strip()
                item = QsbkItem(author=author, content=content)
                # Return as generator
                yield item
            # Get the link to the next page from the current page
            next_url = response.xpath('//ul[@class="pagination"]/li[last()]/a/@href').get()
            if not next_url:
                return
            else:
                yield scrapy.Request(self.base_domain + next_url, callback=self.parse)

Here we mainly look at the following code.

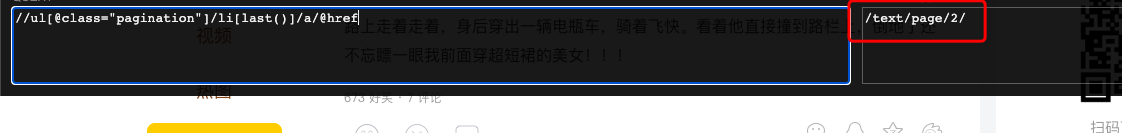

    next_url = response.xpath('//ul[@class="pagination"]/li[last()]/a/@href').get()
    if not next_url:
        return
    else:
        yield scrapy.Request(self.base_domain + next_url, callback=self.parse)

The next-page link that the spider extracts is /text/page/2/, so the domain name has to be prepended to form a complete address.

The spider keeps requesting pages by yielding scrapy.Request(self.base_domain + next_url, callback=self.parse). The callback parameter specifies the method that handles the response.
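String concatenation works here because the extracted link starts at the site root. A slightly more general way to build the full address is the standard library's urllib.parse.urljoin, sketched below with the URLs from this article. Inside a spider, response.urljoin(next_url) does the same against the URL of the current page.

```python
from urllib.parse import urljoin

# URL of the page currently being parsed (the start URL of the spider).
current_url = "https://www.qiushibaike.com/text/page/1/"
# Relative link extracted from the pagination element.
next_url = "/text/page/2/"

# Resolve the relative link against the current page URL.
full_url = urljoin(current_url, next_url)
print(full_url)  # https://www.qiushibaike.com/text/page/2/
```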

You also need to set DOWNLOAD_DELAY = 1 in settings.py, which sets the download interval to 1 second.
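As a sketch, the corresponding line in settings.py would look like this:

```python
# settings.py (fragment)
# Wait about 1 second between consecutive requests to the same site.
DOWNLOAD_DELAY = 1
```

Scrapy randomizes the actual wait by default (the RANDOMIZE_DOWNLOAD_DELAY setting), so the pause between requests varies around this value rather than being exactly 1 second.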

Summary

This article briefly introduced how to store the data crawled from Qiushibaike and how to crawl multiple pages with the Scrapy framework.
