Overview

- Starting with Kubernetes 1.8, resource usage metrics (such as container CPU and memory usage) are obtained through the Metrics API, and Metrics Server replaces Heapster. Metrics Server implements the Resource Metrics API and acts as an aggregator of cluster-wide resource usage data.

- Metrics Server collects metrics from the Summary API exposed by the kubelet on each node.

- Kubernetes Metrics Server reference documentation: https://github.com/kubernetes-incubator/metrics-server

- Metrics and namespaces are covered together in this post because the metrics commands take a namespace as an argument, which is hard to follow without knowing what a namespace is.

Metrics Server

Download the image and package and upload them to the server

You can download the latest version from the Internet yourself, or use the version I downloaded [usage is the same]

k8s_Metrics Server package (rar)

After downloading, upload it to the host. There are two files in total [the image and the package]

[root@master k8s]# ls | grep met
metrics-img.tar
metrics-server-v0.3.6.tar.gz
[root@master k8s]#

Load the image [every node]

First, copy the image to every server [master and worker nodes]

Then load it into Docker with docker load [this must be done on every node]

[root@master k8s]# ls | grep met
metrics-img.tar
metrics-server-v0.3.6.tar.gz
[root@master k8s]#
[root@master k8s]# scp metrics-img.tar node1:~
root@node1's password:
metrics-img.tar                     100%   39MB  21.3MB/s   00:01
[root@master k8s]# scp metrics-img.tar node2:~
root@node2's password:
metrics-img.tar                     100%   39MB  21.3MB/s   00:01
[root@master k8s]#
[root@master k8s]# docker load -i metrics-img.tar
932da5156413: Loading layer  3.062MB/3.062MB
7bf3709d22bb: Loading layer  38.13MB/38.13MB
Loaded image: k8s.gcr.io/metrics-server-amd64:v0.3.6
[root@master k8s]#
[root@master k8s]# docker images |grep metri
k8s.gcr.io/metrics-server-amd64   v0.3.6   9dd718864ce6   21 months ago   39.9MB
[root@master k8s]#
# Don't forget to load the image on the worker nodes too
[root@node1 ~]# docker load -i metrics-img.tar
932da5156413: Loading layer  3.062MB/3.062MB
7bf3709d22bb: Loading layer  38.13MB/38.13MB
Loaded image: k8s.gcr.io/metrics-server-amd64:v0.3.6
[root@node1 ~]#
[root@node1 ~]# docker images |grep metri
k8s.gcr.io/metrics-server-amd64   v0.3.6   9dd718864ce6   21 months ago   39.9MB
[root@node1 ~]#

Install the package [master node]

- Run the commands below in order.

[root@master k8s]# tar zxvf metrics-server-v0.3.6.tar.gz
...
[root@master k8s]# cd kubernetes-sigs-metrics-server-d1f4f6f/
[root@master kubernetes-sigs-metrics-server-d1f4f6f]# ls
cmd                 deploy      hack      OWNERS          README.md          version
code-of-conduct.md  Gopkg.lock  LICENSE   OWNERS_ALIASES  SECURITY_CONTACTS
CONTRIBUTING.md     Gopkg.toml  Makefile  pkg             vendor
[root@master kubernetes-sigs-metrics-server-d1f4f6f]# cd deploy/
[root@master deploy]# ls
1.7  1.8+  docker  minikube
[root@master deploy]# cd 1.8+/
[root@master 1.8+]# ls
aggregated-metrics-reader.yaml  metrics-apiservice.yaml         resource-reader.yaml
auth-delegator.yaml             metrics-server-deployment.yaml
auth-reader.yaml                metrics-server-service.yaml
[root@master 1.8+]#
# Note that you should now be in this path
[root@master 1.8+]# pwd
/k8s/kubernetes-sigs-metrics-server-d1f4f6f/deploy/1.8+
[root@master 1.8+]#

Modify the configuration file

[root@master 1.8+]# pwd
/k8s/kubernetes-sigs-metrics-server-d1f4f6f/deploy/1.8+
[root@master 1.8+]#
[root@master 1.8+]# vim metrics-server-deployment.yaml
# Using the line numbers below: on line 33, change the value of imagePullPolicy to IfNotPresent
# Lines 34-38 are new; copy them in as shown
# Note: --kubelet-insecure-tls skips verification of the kubelet certificate, which is acceptable in a test cluster like this one
...
33         imagePullPolicy: IfNotPresent
34         command:
35         - /metrics-server
36         - --metric-resolution=30s
37         - --kubelet-insecure-tls
38         - --kubelet-preferred-address-types=InternalIP
...
# After the modification, the file looks like this
[root@master 1.8+]# cat metrics-server-deployment.yaml | grep -A 6 imagePullPolicy
        imagePullPolicy: IfNotPresent
        command:
        - /metrics-server
        - --metric-resolution=30s
        - --kubelet-insecure-tls
        - --kubelet-preferred-address-types=InternalIP
        volumeMounts:
[root@master 1.8+]#
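Before applying the file, it can help to confirm that none of the edits were missed. The following is only a sketch: the function name check_deployment is my own invention, and it does nothing more than search the file for the literal strings from the step above.

```shell
# check_deployment FILE
# Verifies that FILE contains the edits described above and reports
# anything that is missing. Returns 0 when every string is present.
check_deployment() {
  file="$1"
  missing=0
  for want in \
    'imagePullPolicy: IfNotPresent' \
    '--metric-resolution=30s' \
    '--kubelet-insecure-tls' \
    '--kubelet-preferred-address-types=InternalIP'
  do
    # -F matches literally; -- stops option parsing so "--flag" is a pattern
    if ! grep -qF -- "$want" "$file"; then
      echo "missing: $want" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Example (run inside deploy/1.8+):
#   check_deployment metrics-server-deployment.yaml && echo "ready to apply"
```

This only checks that the strings exist somewhere in the file; it does not validate YAML indentation.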

Apply the modified configuration

Run the following command [the . means: apply every configuration file in the current directory]

[root@master 1.8+]# pwd
/k8s/kubernetes-sigs-metrics-server-d1f4f6f/deploy/1.8+
[root@master 1.8+]#
[root@master 1.8+]# kubectl apply -f .
clusterrole.rbac.authorization.k8s.io/system:aggregated-metrics-reader created
Warning: rbac.authorization.k8s.io/v1beta1 ClusterRoleBinding is deprecated in v1.17+, unavailable in v1.22+; use rbac.authorization.k8s.io/v1 ClusterRoleBinding
clusterrolebinding.rbac.authorization.k8s.io/metrics-server:system:auth-delegator created
Warning: rbac.authorization.k8s.io/v1beta1 RoleBinding is deprecated in v1.17+, unavailable in v1.22+; use rbac.authorization.k8s.io/v1 RoleBinding
rolebinding.rbac.authorization.k8s.io/metrics-server-auth-reader created
Warning: apiregistration.k8s.io/v1beta1 APIService is deprecated in v1.19+, unavailable in v1.22+; use apiregistration.k8s.io/v1 APIService
apiservice.apiregistration.k8s.io/v1beta1.metrics.k8s.io created
serviceaccount/metrics-server created
deployment.apps/metrics-server created
service/metrics-server created
clusterrole.rbac.authorization.k8s.io/system:metrics-server created
clusterrolebinding.rbac.authorization.k8s.io/system:metrics-server created
[root@master 1.8+]#

Rename the directory

This step is optional. It does no harm and just gives the directory a tidier name.

[root@master 1.8+]# pwd
/k8s/kubernetes-sigs-metrics-server-d1f4f6f/deploy/1.8+
[root@master 1.8+]#
[root@master 1.8+]# cd /k8s
[root@master k8s]# ls | grep kubernetes-sigs-metrics-server-d1f4f6f/
[root@master k8s]# ls | grep kubernetes-sigs-metr
kubernetes-sigs-metrics-server-d1f4f6f
[root@master k8s]#
[root@master k8s]# mv kubernetes-sigs-metrics-server-d1f4f6f/ metric
[root@master k8s]#

At this point, the metrics configuration is complete.

Check the Metrics Server status

You can now see that the metrics-server pod is in the Running state

Command: kubectl get pods -n kube-system

[root@master k8s]# kubectl get ns
NAME              STATUS   AGE
default           Active   4d3h
kube-node-lease   Active   4d3h
kube-public       Active   4d3h
kube-system       Active   4d3h
[root@master k8s]# kubectl get pods -n kube-system | tail -n 2
kube-scheduler-master             1/1   Running   12   4d3h
metrics-server-644c7f4f6d-xb9bz   1/1   Running   0    6m39s
[root@master k8s]#

- The metrics API is now also listed among the API versions

[root@master ~]# kubectl api-versions | grep me
metrics.k8s.io/v1beta1
[root@master ~]#

Test

View node and pod metrics

Nodes: kubectl top nodes

Pods: kubectl top pod --all-namespaces

[root@master ~]# kubectl top nodes
W0706 16:03:01.500535 125671 top_node.go:119] Using json format to get metrics. Next release will switch to protocol-buffers, switch early by passing --use-protocol-buffers flag
NAME     CPU(cores)   CPU%   MEMORY(bytes)   MEMORY%
master   319m         7%     1976Mi          53%
node1    132m         3%     856Mi           23%
node2    141m         3%     841Mi           22%
[root@master ~]#
[root@master ~]# kubectl top pods -n kube-system
W0706 16:03:03.934225 125697 top_pod.go:140] Using json format to get metrics. Next release will switch to protocol-buffers, switch early by passing --use-protocol-buffers flag
NAME                                       CPU(cores)   MEMORY(bytes)
calico-kube-controllers-78d6f96c7b-p4svs   4m           32Mi
calico-node-cc4fc                          40m          134Mi
calico-node-stdfj                          40m          138Mi
calico-node-zhhz7                          58m          132Mi
coredns-545d6fc579-6kb9x                   3m           28Mi
coredns-545d6fc579-v74hg                   3m           19Mi
etcd-master                                18m          271Mi
kube-apiserver-master                      66m          387Mi
kube-controller-manager-master             21m          77Mi
kube-proxy-45qgd                           1m           25Mi
kube-proxy-fdhpw                           1m           35Mi
kube-proxy-zf6nt                           1m           25Mi
kube-scheduler-master                      4m           35Mi
metrics-server-bcfb98c76-w87q9             2m           13Mi
[root@master ~]#

Suppressing the top warning

By default the output starts with a warning line. To get rid of it, append the flag --use-protocol-buffers to the command [the warning itself mentions this flag at the end]

[root@master ~]# kubectl top nodes
W0706 16:45:18.630117 42684 top_node.go:119] Using json format to get metrics. Next release will switch to protocol-buffers, switch early by passing --use-protocol-buffers flag
NAME     CPU(cores)   CPU%   MEMORY(bytes)   MEMORY%
master   317m         7%     1979Mi          53%
node1    140m         3%     849Mi           23%
node2    138m         3%     842Mi           22%
[root@master ~]#
[root@master ~]# kubectl top nodes --use-protocol-buffers
NAME     CPU(cores)   CPU%   MEMORY(bytes)   MEMORY%
master   324m         8%     1979Mi          53%
node1    140m         3%     850Mi           23%
node2    139m         3%     841Mi           22%
[root@master ~]#

What the m unit means in top

- One core is divided into 1000 millicores, and one millicore is written 1m. The 324m under CPU(cores) below means 324 millicores.

[root@master ~]# kubectl top nodes --use-protocol-buffers
NAME     CPU(cores)   CPU%   MEMORY(bytes)   MEMORY%
master   324m         8%     1979Mi          53%
node1    140m         3%     850Mi           23%
node2    139m         3%     841Mi           22%
[root@master ~]#

- To work out the percentage, you first need to know how many CPUs the machine has; the lscpu command shows this. Each CPU is 1000 millicores, so the total is the CPU count times 1000. For the master above, which uses 324m on 4 CPUs, the utilization is:

324/4000*100=8.1%

[root@master ~]# lscpu | grep CPU\(
CPU(s):                4
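The arithmetic above can be wrapped in a small shell helper. This is only a sketch: the function name cpu_percent is hypothetical, and it assumes awk is installed on the host.

```shell
# cpu_percent MILLICORES CPUS
# Converts a millicore reading such as "324m" into a percentage of the
# total capacity, counting every CPU as 1000 millicores.
cpu_percent() {
  # ${1%m} strips the trailing "m" if there is one
  awk -v used="${1%m}" -v cpus="$2" \
    'BEGIN { printf "%.1f\n", used / (cpus * 1000) * 100 }'
}

# The master node from the example: 324m used on 4 CPUs
cpu_percent 324m 4
```

On the node itself, the CPU count can be taken from lscpu as shown above, so the second argument does not have to be typed by hand.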

- You may ask: the nodes output already has a CPU% column, so why calculate it by hand?

True, node utilization is already shown, but the pod output has no percentage column, so for pods you have to calculate it yourself. The important thing is to know what m means.

[root@master ~]# kubectl top pods -n kube-system
W0706 16:53:45.335507 52024 top_pod.go:140] Using json format to get metrics. Next release will switch to protocol-buffers, switch early by passing --use-protocol-buffers flag
NAME                                       CPU(cores)   MEMORY(bytes)
calico-kube-controllers-78d6f96c7b-p4svs   4m           32Mi
calico-node-cc4fc                          41m          134Mi
calico-node-stdfj                          56m          137Mi
calico-node-zhhz7                          55m          133Mi
coredns-545d6fc579-6kb9x                   4m           27Mi
coredns-545d6fc579-v74hg                   4m           20Mi
etcd-master                                18m          269Mi
kube-apiserver-master                      69m          383Mi
kube-controller-manager-master             20m          77Mi
kube-proxy-45qgd                           1m           25Mi
kube-proxy-fdhpw                           1m           34Mi
kube-proxy-zf6nt                           1m           26Mi
kube-scheduler-master                      4m           35Mi
metrics-server-bcfb98c76-w87q9             1m           13Mi
[root@master ~]#
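Since the pod output has no percentage column, one way to add it is to post-process the table with awk. This is a sketch under my own assumptions: the function name pod_cpu_percent is hypothetical, the CPU count is passed in by hand, and the percentage is relative to a single node's capacity.

```shell
# pod_cpu_percent CPUS
# Reads `kubectl top pods` output on stdin and appends a CPU% column,
# computed against CPUS cores (1 core = 1000m).
pod_cpu_percent() {
  awk -v cpus="$1" '
    NR == 1 { print $0, "CPU%"; next }     # header row: add the new column name
    {
      m = $2
      sub(/m$/, "", m)                     # "69m" -> 69
      printf "%s %s %s %.1f%%\n", $1, $2, $3, m * 100 / (cpus * 1000)
    }'
}

# Two sample rows copied from the output above. In practice, pipe in
#   kubectl top pods -n kube-system --use-protocol-buffers
pod_cpu_percent 4 <<'EOF'
NAME CPU(cores) MEMORY(bytes)
kube-apiserver-master 69m 383Mi
kube-controller-manager-master 20m 77Mi
EOF
```

The output columns are separated by single spaces rather than aligned, which keeps the sketch short.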

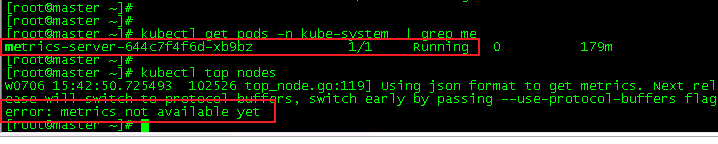

top error handling

Sometimes all Metrics Server components show a normal status, yet running top still reports an error.

This is because the modified configuration takes time to take effect and has not finished yet. Just wait a while [provided no step above was skipped and the configuration file was modified correctly].

If it still fails after a long wait, put the metrics tar package in the /root directory on the master node and start again. [On a VMware virtual machine, first confirm that the CPU of the physical host is not fully loaded: check in the resource manager whether utilization is at 100%.]
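Rather than re-running top by hand while waiting, the wait can be scripted. The helper below is a generic sketch: retry is a hypothetical name of my own, and the attempt count and delay in the example are arbitrary.

```shell
# retry ATTEMPTS DELAY_SECONDS COMMAND [ARGS...]
# Runs COMMAND until it succeeds, sleeping DELAY_SECONDS between tries.
# Returns 0 on the first success and 1 if every attempt fails.
retry() {
  attempts="$1"
  delay="$2"
  shift 2
  i=1
  while [ "$i" -le "$attempts" ]; do
    if "$@"; then
      return 0
    fi
    if [ "$i" -lt "$attempts" ]; then
      echo "attempt $i/$attempts failed, retrying in ${delay}s" >&2
      sleep "$delay"
    fi
    i=$((i + 1))
  done
  return 1
}

# Example: try for up to about five minutes before giving up
#   retry 20 15 kubectl top nodes --use-protocol-buffers
```

If the command still fails after the last attempt, fall back to the checks described above.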

Namespace

Overview

- Kubernetes supports multiple virtual clusters backed by the same physical cluster. These virtual clusters are called namespaces.

- In a Kubernetes cluster, you can use namespaces to create multiple "virtual clusters". These namespaces can be completely isolated from one another, or, with some configuration, services in one namespace can access services in other namespaces. When we deployed a Kubernetes 1.6 cluster on CentOS, we used several services that span namespaces. For example, Traefik ingress and the services in the kube-system namespace serve the whole cluster. This is achieved by defining cluster-level roles through RBAC.

View Namespace

- View a single namespace: kubectl get namespace <name> [rarely used]

- View all

- Method 1: kubectl get ns [abbreviation]

- Method 2: kubectl get namespaces

[root@master ~]# kubectl get ns
NAME              STATUS   AGE
default           Active   4d8h
kube-node-lease   Active   4d8h
kube-public       Active   4d8h
kube-system       Active   4d8h
ns1               Active   39m
[root@master ~]# kubectl get namespaces
NAME              STATUS   AGE
default           Active   4d8h
kube-node-lease   Active   4d8h
kube-public       Active   4d8h
kube-system       Active   4d8h
ns1               Active   39m
[root@master ~]#

- Kubernetes starts with three initial namespaces [recent versions, including the cluster shown above, also create kube-node-lease, which holds the node heartbeat Lease objects]:

- default: the default namespace for objects that have no other namespace

- kube-system: the namespace for objects created by the Kubernetes system

- kube-public: this namespace is created automatically and is readable by all users, including unauthenticated users. It is mostly reserved for cluster use, for resources that should be visible and publicly readable across the whole cluster. The public aspect of this namespace is only a convention, not a requirement.

- A namespace can be in one of two phases:

- Active: the namespace is in use.

- Terminating: the namespace is being deleted and cannot be used for new objects.

View the labels of each namespace

Command: kubectl get namespaces --show-labels

[root@master ~]# kubectl get namespaces --show-labels
NAME              STATUS   AGE    LABELS
ccx               Active   8m2s   kubernetes.io/metadata.name=ccx
ccxhero           Active   73s    kubernetes.io/metadata.name=ccxhero,name=ccxhero
default           Active   4d8h   kubernetes.io/metadata.name=default
kube-node-lease   Active   4d8h   kubernetes.io/metadata.name=kube-node-lease
kube-public       Active   4d8h   kubernetes.io/metadata.name=kube-public
kube-system       Active   4d8h   kubernetes.io/metadata.name=kube-system
ns1               Active   60m    kubernetes.io/metadata.name=ns1

View the current default namespace

Command: kubectl config get-contexts

[root@master ~]# kubectl config get-contexts
CURRENT   NAME                          CLUSTER      AUTHINFO           NAMESPACE
*         kubernetes-admin@kubernetes   kubernetes   kubernetes-admin   default
[root@master ~]#

View details of a single namespace

Command: kubectl describe namespaces <name>

[root@master ~]# kubectl describe namespaces default
Name:         default
Labels:       kubernetes.io/metadata.name=default
Annotations:  <none>
Status:       Active

No resource quota.

No LimitRange resource.
[root@master ~]# kubectl describe namespaces kube-system
Name:         kube-system
Labels:       kubernetes.io/metadata.name=kube-system
Annotations:  <none>
Status:       Active

No resource quota.

No LimitRange resource.
[root@master ~]#

Create namespace

Method 1: create from a file

- Create a file named my-namespace.yaml [any path and any file name will do; only the .yaml suffix is fixed] with the following contents:

The name is your own choice; apiVersion must be v1 for a Namespace.

The result is the same as creating the namespace by command.

[root@master ~]# cat my-namespace.yaml
apiVersion: v1
kind: Namespace
metadata:
  name: ccx
[root@master ~]#

- A file is more useful when you also want to set labels. That is done as follows.

[root@master ~]# cat my-namespace.yaml
{
  "apiVersion": "v1",
  "kind": "Namespace",
  "metadata": {
    "name": "ccxhero",
    "labels": {
      "name": "ccxhero"
    }
  }
}
[root@master ~]#
[root@master ~]# kubectl create -f my-namespace.yaml
namespace/ccxhero created
[root@master ~]# kubectl get ns
NAME              STATUS   AGE
ccx               Active   7m2s
ccxhero           Active   13s
default           Active   4d8h
kube-node-lease   Active   4d8h
kube-public       Active   4d8h
kube-system       Active   4d8h
ns1               Active   59m

- View the corresponding labels.

[root@master ~]# kubectl get namespaces --show-labels
NAME              STATUS   AGE    LABELS
ccx               Active   8m2s   kubernetes.io/metadata.name=ccx
ccxhero           Active   73s    kubernetes.io/metadata.name=ccxhero,name=ccxhero
default           Active   4d8h   kubernetes.io/metadata.name=default
kube-node-lease   Active   4d8h   kubernetes.io/metadata.name=kube-node-lease
kube-public       Active   4d8h   kubernetes.io/metadata.name=kube-public
kube-system       Active   4d8h   kubernetes.io/metadata.name=kube-system
ns1               Active   60m    kubernetes.io/metadata.name=ns1
[root@master ~]#

- Either file is turned into a namespace with a single command [the output below is from the first file, which creates ccx]

[root@master ~]# kubectl apply -f ./my-namespace.yaml
namespace/ccx created
[root@master ~]#
[root@master ~]# kubectl get ns
NAME              STATUS   AGE
ccx               Active   9s
default           Active   4d8h
kube-node-lease   Active   4d8h
kube-public       Active   4d8h
kube-system       Active   4d8h
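If you create labelled namespaces often, the JSON manifest shown earlier can be generated instead of written by hand. The following is a sketch only: namespace_manifest is a hypothetical helper name, and it reuses the namespace name as the value of the name label, as in the ccxhero example.

```shell
# namespace_manifest NAME
# Prints a Namespace manifest in JSON whose "name" label equals NAME.
namespace_manifest() {
  cat <<EOF
{
  "apiVersion": "v1",
  "kind": "Namespace",
  "metadata": {
    "name": "$1",
    "labels": {
      "name": "$1"
    }
  }
}
EOF
}

# Print the manifest for inspection
namespace_manifest ccxhero

# To create the namespace directly, pipe the manifest to kubectl:
#   namespace_manifest ccxhero | kubectl apply -f -
```

The helper does no validation, so the name passed in must already be a valid namespace name.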

Method 2: create by command

- Syntax:

kubectl create namespace <namespace-name>

- For example, create a namespace named ccx1

[root@master ~]# kubectl create namespace ccx1
namespace/ccx1 created
[root@master ~]#
[root@master ~]# kubectl get ns
NAME      STATUS   AGE
ccx       Active   2m13s
ccx1      Active   7s
default   Active   4d8h

- Note that -f does not force creation. It means "read the object from a file" (as in Method 1), so the following is not a valid way to create ccx1:

kubectl create -f namespace ccx1

Delete Namespace

- Note: delete namespaces with care. Before deleting, run kubectl get pods -n <namespace> on the namespace in question and confirm that it contains no pods, or that the pods in it are no longer needed. Deleting a namespace also deletes the pods in it, and they cannot be recovered.

- Syntax:

kubectl delete namespaces <insert-some-namespace-name>

- For example, delete the ccx1 namespace

[root@master ~]#
[root@master ~]# kubectl delete namespaces ccx1
namespace "ccx1" deleted
[root@master ~]#
[root@master ~]# kubectl get ns
NAME      STATUS   AGE
ccx       Active   3m44s
default   Active   4d8h
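The "check for pods first" advice can be built into a small wrapper so that it is never skipped. This is a sketch under my own assumptions: delete_ns_if_empty is a hypothetical name, and it looks only at pods, not at other resources in the namespace.

```shell
# delete_ns_if_empty NAMESPACE
# Deletes NAMESPACE only when it contains no pods. Otherwise it prints
# the pod count and leaves the namespace alone.
delete_ns_if_empty() {
  ns="$1"
  # --no-headers prints one line per pod. The "No resources found" notice
  # goes to stderr, so an empty namespace produces no stdout lines.
  pods="$(kubectl get pods -n "$ns" --no-headers 2>/dev/null | wc -l | tr -d ' ')"
  if [ "$pods" -gt 0 ]; then
    echo "namespace $ns still has $pods pod(s); not deleting" >&2
    return 1
  fi
  kubectl delete namespace "$ns"
}

# Example:
#   delete_ns_if_empty ccx1
```

When the function refuses, inspect the pods manually and decide whether they are really unnecessary before deleting.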

Deploy an application in a specified namespace

Overview

- The following is based on the official English documentation, which I translated and followed: Deployment process

Syntax

kubectl create deployment <deployment-name> --image=<image-name> -n=<namespace> --replicas=<number-of-replicas>

# View the available images
[root@master ~]# docker images | grep ng
nginx   latest   d1a364dc548d   6 weeks ago   133MB
[root@master ~]#
# View the namespaces
[root@master ~]# kubectl get ns
NAME              STATUS   AGE
ccx               Active   15h
ccxhero           Active   15h
default           Active   5d
kube-node-lease   Active   5d
kube-public       Active   5d
kube-system       Active   5d
ns1               Active   16h
[root@master ~]#
# Number of replicas
--replicas= is optional and defaults to 1. With --replicas=2, two pods are created.

Example

- For example, create an nginx deployment with 2 replicas in the ccx namespace

# Empty before creation
[root@master ~]# kubectl get pod -n ccx
No resources found in ccx namespace.
[root@master ~]#
[root@master ~]# kubectl create deployment nginx-test --image=nginx -n=ccx --replicas=2
deployment.apps/nginx-test created
[root@master ~]#

- After the deployment is created, the result is as follows:

Because I specified 2 replicas, 2 pods are generated

[root@master ~]# kubectl get pod -n ccx
NAME                          READY   STATUS             RESTARTS   AGE
nginx-test-795d659f45-j9m9b   0/1     ImagePullBackOff   0          6s
nginx-test-795d659f45-txf8l   0/1     ImagePullBackOff   0          6s
[root@master ~]#

Handling a pod in the ImagePullBackOff status

- As you can see, the STATUS of the two pods created above is not Running but ImagePullBackOff. Here is how to deal with this error.

- Procedure

- 1. View the NAME of the pods whose status is ImagePullBackOff in this namespace

kubectl get pods -n ccx [ccx is the namespace name]

- 2. View the details of that pod

kubectl describe pod -n ccx nginx-test-795d659f45-j9m9b [ccx is followed by the NAME obtained in step 1]

- The nginx-test deployment I created has failed. The troubleshooting steps are shown below.

The second step prints all the information about the pod, and the cause of the error appears near the end, under Events. Causes differ from case to case, so act on whatever is reported. In my case the host is on an intranet with no external network access and no image registry mirror configured, so the image cannot be pulled. I could copy the image name from the error below, run docker pull on a machine with Internet access, and then import the image here, but I won't demonstrate that. What matters is knowing the troubleshooting procedure.

If your machine is connected to the Internet, the easiest fix is to configure the Alibaba Cloud (Aliyun) registry mirror and then run systemctl daemon-reload and systemctl restart docker [the docker category of my blog describes how to configure the mirror]

[root@master ~]# kubectl get pods -n ccx

NAME READY STATUS RESTARTS AGE

nginx-test-795d659f45-j9m9b 0/1 ImagePullBackOff 0 34m

nginx-test-795d659f45-txf8l 0/1 ImagePullBackOff 0 34m

[root@master ~]#

[root@master ~]# kubectl describe pod -n ccx nginx-test-795d659f45-j9m9b

Name: nginx-test-795d659f45-j9m9b

Namespace: ccx

Priority: 0

Node: node2/192.168.59.144

Start Time: Wed, 07 Jul 2021 09:48:15 +0800

Labels: app=nginx-test

pod-template-hash=795d659f45

Annotations: cni.projectcalico.org/podIP: 10.244.104.2/32

cni.projectcalico.org/podIPs: 10.244.104.2/32

Status: Pending

IP: 10.244.104.2

IPs:

IP: 10.244.104.2

Controlled By: ReplicaSet/nginx-test-795d659f45

Containers:

nginx:

Container ID:

Image: nginx

Image ID:

Port: <none>

Host Port: <none>

State: Waiting

Reason: ImagePullBackOff

Ready: False

Restart Count: 0

Environment: <none>

Mounts:

/var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-vmhjt (ro)

Conditions:

Type Status

Initialized True

Ready False

ContainersReady False

PodScheduled True

Volumes:

kube-api-access-vmhjt:

Type: Projected (a volume that contains injected data from multiple sources)

TokenExpirationSeconds: 3607

ConfigMapName: kube-root-ca.crt

ConfigMapOptional: <nil>

DownwardAPI: true

QoS Class: BestEffort

Node-Selectors: <none>

Tolerations: node.kubernetes.io/not-ready:NoExecute op=Exists for 300s

node.kubernetes.io/unreachable:NoExecute op=Exists for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 34m default-scheduler Successfully assigned ccx/nginx-test-795d659f45-j9m9b to node2

Warning Failed 34m kubelet Failed to pull image "nginx": rpc error: code = Unknown desc = Error response from daemon: Get https://registry-1.docker.io/v2/: dial tcp: lookup registry-1.docker.io on [::1]:53: read udp [::1]:55518->[::1]:53: read: connection refused

Warning Failed 34m kubelet Failed to pull image "nginx": rpc error: code = Unknown desc = Error response from daemon: Get https://registry-1.docker.io/v2/: dial tcp: lookup registry-1.docker.io on [::1]:53: read udp [::1]:39653->[::1]:53: read: connection refused

Warning Failed 33m kubelet Failed to pull image "nginx": rpc error: code = Unknown desc = Error response from daemon: Get https://registry-1.docker.io/v2/: dial tcp: lookup registry-1.docker.io on [::1]:53: read udp [::1]:40238->[::1]:53: read: connection refused

Warning Failed 33m (x6 over 34m) kubelet Error: ImagePullBackOff

Warning Failed 32m (x4 over 34m) kubelet Error: ErrImagePull

Normal Pulling 32m (x4 over 34m) kubelet Pulling image "nginx"

Warning Failed 32m kubelet Failed to pull image "nginx": rpc error: code = Unknown desc = Error response from daemon: Get https://registry-1.docker.io/v2/: dial tcp: lookup registry-1.docker.io on [::1]:53: read udp [::1]:37451->[::1]:53: read: connection refused

Normal BackOff 4m16s (x132 over 34m) kubelet Back-off pulling image "nginx"

[root@master ~]#
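Step 1 of the procedure above can be narrowed down to just the failing pods with a small filter. This is a sketch: pull_failures is a hypothetical helper name, and it assumes the default column layout of kubectl get pods, with STATUS in the third column.

```shell
# pull_failures
# Reads `kubectl get pods` output on stdin and prints the NAME of every
# pod whose STATUS is ImagePullBackOff or ErrImagePull.
pull_failures() {
  awk 'NR > 1 && ($3 == "ImagePullBackOff" || $3 == "ErrImagePull") { print $1 }'
}

# Sample rows taken from the output above. In practice use
#   kubectl get pods -n ccx | pull_failures
pull_failures <<'EOF'
NAME                          READY   STATUS             RESTARTS   AGE
nginx-test-795d659f45-j9m9b   0/1     ImagePullBackOff   0          34m
nginx-test-795d659f45-txf8l   0/1     ImagePullBackOff   0          34m
EOF
```

Each name printed can then be passed to kubectl describe pod -n ccx as in step 2.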

Using the kubens tool

Download and copy to the bin directory

- Download address:

kubens - namespace management tool

- Upload the extracted script to the host, add execute (x) permission and move it to the /bin/ directory

[root@master k8s]# ls| grep kubens
kubens
[root@master k8s]# chmod +x kubens
[root@master k8s]# mv kubens /bin/
[root@master k8s]#

Usage

kubens: lists namespaces in the current context

Normally this lists all your namespaces, with the current one highlighted, but for some reason nothing is displayed on my machine.

[root@master ~]# kubens
[root@master ~]#

- The command used inside the script does return the values when run on its own

So it doesn't matter; kubens can still be used normally.

[root@master ~]# kubectl get namespaces -o=jsonpath='{range .items[*].metadata.name}{@}{"\n"}{end}'

}ccx

ccxhero

default

kube-node-lease

kube-public

kube-system

ns1

[root@master ~]# }

- The default namespace and the list of all namespaces can be viewed with these commands

[root@master ~]# kubectl config get-contexts
CURRENT   NAME                          CLUSTER      AUTHINFO           NAMESPACE
*         kubernetes-admin@kubernetes   kubernetes   kubernetes-admin   default
[root@master ~]#
[root@master ~]# kubectl get ns
NAME              STATUS   AGE
default           Active   4d8h
kube-node-lease   Active   4d8h
kube-public       Active   4d8h
kube-system       Active   4d8h
ns1               Active   26m

kubens <name>: change the active namespace of the current context

- While in the default namespace, kubectl get pods does not show the pods in kube-system. After switching to kube-system with kubens, all of its pods can be listed directly

[root@master ~]# kubectl config get-contexts
CURRENT   NAME                          CLUSTER      AUTHINFO           NAMESPACE
*         kubernetes-admin@kubernetes   kubernetes   kubernetes-admin   default
[root@master ~]#
[root@master ~]# kubectl get pods
No resources found in default namespace.
[root@master ~]#
[root@master ~]# kubens kube-system
Context "kubernetes-admin@kubernetes" modified.
Active namespace is "kube-system".
[root@master ~]#
[root@master ~]# kubectl get pods
NAME                                       READY   STATUS    RESTARTS   AGE
calico-kube-controllers-78d6f96c7b-p4svs   1/1     Running   0          4d7h
calico-node-cc4fc                          1/1     Running   18         4d6h
calico-node-stdfj                          1/1     Running   20         4d7h
calico-node-zhhz7                          1/1     Running   1          4d7h
coredns-545d6fc579-6kb9x                   1/1     Running   0          4d8h
coredns-545d6fc579-v74hg                   1/1     Running   0          4d8h
etcd-master                                1/1     Running   1          4d8h
kube-apiserver-master                      1/1     Running   1          4d8h
kube-controller-manager-master             1/1     Running   11         4d8h
kube-proxy-45qgd                           1/1     Running   1          4d6h
kube-proxy-fdhpw                           1/1     Running   1          4d8h
kube-proxy-zf6nt                           1/1     Running   1          4d7h
kube-scheduler-master                      1/1     Running   12         4d8h
metrics-server-bcfb98c76-w87q9             1/1     Running   0          123m
[root@master ~]#

kubens -: switch to the previous namespace in this context

- I switched to the kube-system namespace above. Running kubens - takes me back to the previous namespace

[root@master ~]# kubens -
Context "kubernetes-admin@kubernetes" modified.
Active namespace is "default".
[root@master ~]#
[root@master ~]# kubectl get pods
No resources found in default namespace.
[root@master ~]#

You can see that after executing the command, you are back in the default namespace.
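If kubens is not available on a host, the same switch can be made with kubectl alone. The two wrappers below are a sketch: use_ns and current_ns are hypothetical names of my own, and they do not reproduce the "kubens -" behaviour of remembering the previous namespace.

```shell
# use_ns NAMESPACE
# Sets the default namespace of the current kubectl context, which has
# the same effect as `kubens NAMESPACE`.
use_ns() {
  kubectl config set-context --current --namespace="$1"
}

# current_ns
# Prints the namespace of the current context, or "default" when the
# context has no namespace set.
current_ns() {
  ns="$(kubectl config view --minify --output 'jsonpath={..namespace}')"
  echo "${ns:-default}"
}

# Example:
#   use_ns kube-system
#   current_ns
```

Both functions only edit or read the local kubeconfig; nothing is changed in the cluster itself.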