This walkthrough sets up a Kafka environment on Linux (CentOS 7) using Docker and Docker Compose.

1. Basic environment

1.1. yum source configuration

Use the Alibaba Cloud (Aliyun) mirror as the yum source

cd /etc/yum.repos.d
rename .repo .repo.bak *.repo
# Download the Aliyun repo file with either wget or curl (one of the two is enough)
wget -O /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo
curl -o /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo
yum clean all
yum makecache
yum repolist
yum update

Install basic tools

############ Installation of basic tools ############
yum -y install wget telnet bind-utils vim net-tools lrzsz

1.2. Modify system settings

Modify host name

Note that the hostname must not be localhost and must not contain underscores, dots, or uppercase letters. The command syntax is:

sudo hostnamectl set-hostname test
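The hostname rules above can be checked before calling hostnamectl. The following is only a minimal sketch; the function name is_valid_hostname is hypothetical and it checks nothing beyond the rules stated in this section.

```shell
# Hypothetical helper: returns 0 if the name satisfies the rules stated above.
is_valid_hostname() {
  name="$1"
  # Reject empty names and the literal "localhost"
  [ -n "$name" ] || return 1
  [ "$name" != "localhost" ] || return 1
  # Reject underscores, dots, and uppercase letters
  case "$name" in
    *_*|*.*|*[[:upper:]]*) return 1 ;;
  esac
  return 0
}
```

For example, `is_valid_hostname test && sudo hostnamectl set-hostname test` only changes the hostname when the name passes the check.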

Modify system parameters

############ limits ############
# Raise the limits on processes and open file handles
sed -i 's/4096/1000000/g' /etc/security/limits.d/20-nproc.conf
cat <<EOF >> /etc/security/limits.d/20-all-users.conf
* soft nproc 1000000
* hard nproc 1000000
* soft nofile 1000000
* hard nofile 1000000
EOF

############ Kernel parameters ############
cat <<'EOF' > /etc/sysctl.d/50-custom.conf
# Disable IPv6
net.ipv6.conf.all.disable_ipv6 = 1
net.ipv6.conf.default.disable_ipv6 = 1
net.ipv6.conf.lo.disable_ipv6 = 1
# Maximum number of processes
kernel.pid_max = 1048576
# Number of VMAs (virtual memory areas) that a process can own
vm.max_map_count = 262144
# Disable TCP slow start after idle, so the congestion window is not reset after a connection has been idle for a while
net.ipv4.tcp_slow_start_after_idle = 0
# Maximum number of connection-tracking table entries; resolves the 'nf_conntrack: table full, dropping packet' problem
net.netfilter.nf_conntrack_max = 2097152
# Established connections that stay inactive for this many seconds are removed from the connection-tracking table
net.netfilter.nf_conntrack_tcp_timeout_established = 1200
EOF

############ Disable SELinux ############
# /etc/sysconfig/selinux is a symlink to this file; sed -i on the symlink would replace the link instead of editing the target
sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config
setenforce 0

############ Turn off the firewall ############
systemctl disable firewalld
systemctl stop firewalld

############ Time synchronization (for setting up the server, see my other articles) ############
systemctl enable chronyd.service
systemctl restart chronyd.service
systemctl status chronyd.service
# View the time synchronization sources
chronyc sources -v
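Once the kernel parameters have been loaded (for example after a reboot or `sysctl --system`), it can help to confirm that a value is really in effect. The sketch below reads the value from /proc/sys; the function name sysctl_matches and its optional third argument (an alternative root directory, mainly useful for dry runs) are assumptions made for illustration.

```shell
# Hypothetical helper: compare a kernel parameter with an expected value.
# Arguments: key (e.g. vm.max_map_count), expected value, root dir (default /proc/sys).
# Returns 0 on match, 1 on mismatch, 2 if the parameter cannot be read.
sysctl_matches() {
  key="$1"
  expected="$2"
  root="${3:-/proc/sys}"
  # A key such as vm.max_map_count lives at <root>/vm/max_map_count
  path="$root/$(printf '%s' "$key" | tr '.' '/')"
  [ -r "$path" ] || return 2
  actual="$(cat "$path")"
  [ "$actual" = "$expected" ]
}
```

For example, `sysctl_matches vm.max_map_count 262144 && echo "vm.max_map_count ok"`. A return code of 2 for the net.netfilter keys usually just means the conntrack module is not loaded yet.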

1.3. docker installation

1. Docker requires a CentOS kernel version higher than 3.10. Check the prerequisites to verify that your CentOS version supports Docker. Use the uname -r command to view your current kernel version.

$ uname -r

2. Log in to CentOS with root permission and make sure the yum packages are updated to the latest version.

$ sudo yum update

3. Check whether Docker is already present on the system.

$ ps -ef | grep docker

4. If Docker is installed, uninstall it first.

$ yum remove docker-*

5. Install the yum repository management tools (yum-utils contains yum-config-manager).

$ yum install -y yum-utils

6. Add the Aliyun docker-ce repository.

$ cd /etc/yum.repos.d/
$ yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

7. List the available docker-ce versions.

$ yum list docker-ce --showduplicates | sort -r

8. Install the specified version of docker-ce, docker-ce-cli, and containerd.io.

$ yum install docker-ce-19.03.5-3.el7 docker-ce-cli-19.03.5-3.el7 containerd.io-1.2.10-3.2.el7

9. Turn off the firewall.

$ systemctl status firewalld     # view the firewall status
$ systemctl disable firewalld    # turn off the firewall

10. Start Docker.

$ systemctl start docker

11. Enable Docker at boot.

$ systemctl enable docker

1.4. Docker compose installation

The first option is a direct installation

curl -L https://get.daocloud.io/docker/compose/releases/download/1.29.2/docker-compose-`uname -s`-`uname -m` > /usr/local/bin/docker-compose
chmod +x /usr/local/bin/docker-compose
docker-compose --version

The version number in the URL (1.29.2 here) can be changed as needed.
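Since only the version number changes between installs, the download URL can be assembled by a small function. This is a sketch: compose_url and the COMPOSE_BASE override are hypothetical names, and the default base URL is simply the one used in the command above.

```shell
# Hypothetical helper: print the download URL for a given Compose version.
# Arguments: version, kernel name (default: uname -s), machine (default: uname -m).
compose_url() {
  version="$1"
  kernel="${2:-$(uname -s)}"
  machine="${3:-$(uname -m)}"
  # Base URL taken from the command above; set COMPOSE_BASE to use another mirror
  base="${COMPOSE_BASE:-https://get.daocloud.io/docker/compose/releases/download}"
  printf '%s/%s/docker-compose-%s-%s\n' "$base" "$version" "$kernel" "$machine"
}
```

A possible use is `curl -L "$(compose_url 1.29.2)" -o /usr/local/bin/docker-compose`, followed by the same chmod step as before.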

The second option is an offline installation

Download the file manually from GitHub: https://github.com/docker/compose/releases/tag/1.25.0-rc4

Upload the file to the /usr/local/bin/ directory, rename it docker-compose, and modify the file permissions:

$ chmod +x /usr/local/bin/docker-compose
$ docker-compose -v

1.5. docker configuration

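Configure a registry mirror

The configuration goes in the /etc/docker/daemon.json file (if it does not exist, create it yourself)

#Alibaba Cloud related configuration

Either open the file with vi /etc/docker/daemon.json and add the JSON content, or write it directly with the following commands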

sudo mkdir -p /etc/docker
sudo tee /etc/docker/daemon.json <<-'EOF'
{
  "registry-mirrors": ["https://39kjotb7.mirror.aliyuncs.com"]
}
EOF
sudo systemctl daemon-reload
sudo systemctl restart docker

2. Create the Kafka service with docker compose

2.1. The docker compose file

Kafka relies on ZooKeeper. We are not building a cluster here, so a single machine is enough. AKHQ is a web-based management tool; it is shown in the screenshots once the environment is up.

version: '3.8'
services:
  zookeeper:
    image: zookeeper:3.4
    container_name: zookeeper
    restart: always
    ports:
      - 21181:2181
    environment:
      TZ: Asia/Shanghai
  kafka:
    image: wurstmeister/kafka:2.13-2.6.0
    container_name: kafka
    restart: 'always'
    ports:
      - 9092:9092
    environment:
      KAFKA_LISTENERS: PLAINTEXT://:9092
      KAFKA_ZOOKEEPER_CONNECT: zookeeper:2181
      TZ: Asia/Shanghai
    volumes:
      - /var/run/docker.sock:/var/run/docker.sock
    depends_on:
      - zookeeper
  akhq:
    image: tchiotludo/akhq
    container_name: akhq
    ports:
      - "10080:8080"
    environment:
      AKHQ_CONFIGURATION: |
        akhq:
          connections:
            docker-kafka-server:
              properties:
                bootstrap.servers: "kafka:9092"

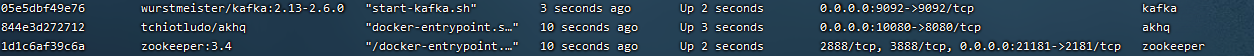

Let's now show our own tests

adopt

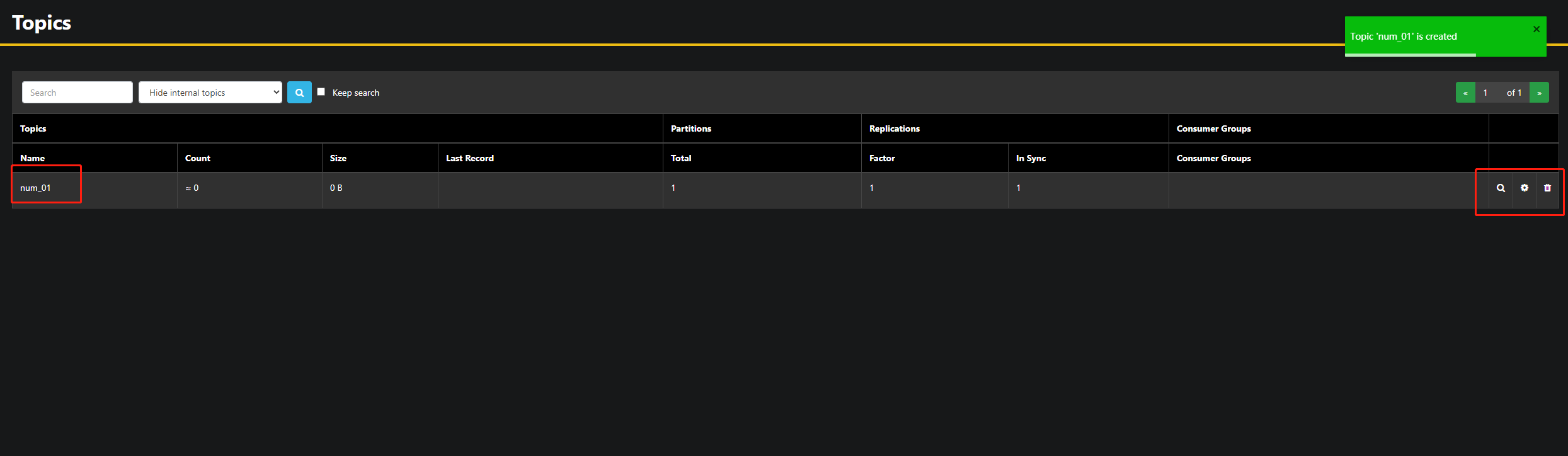

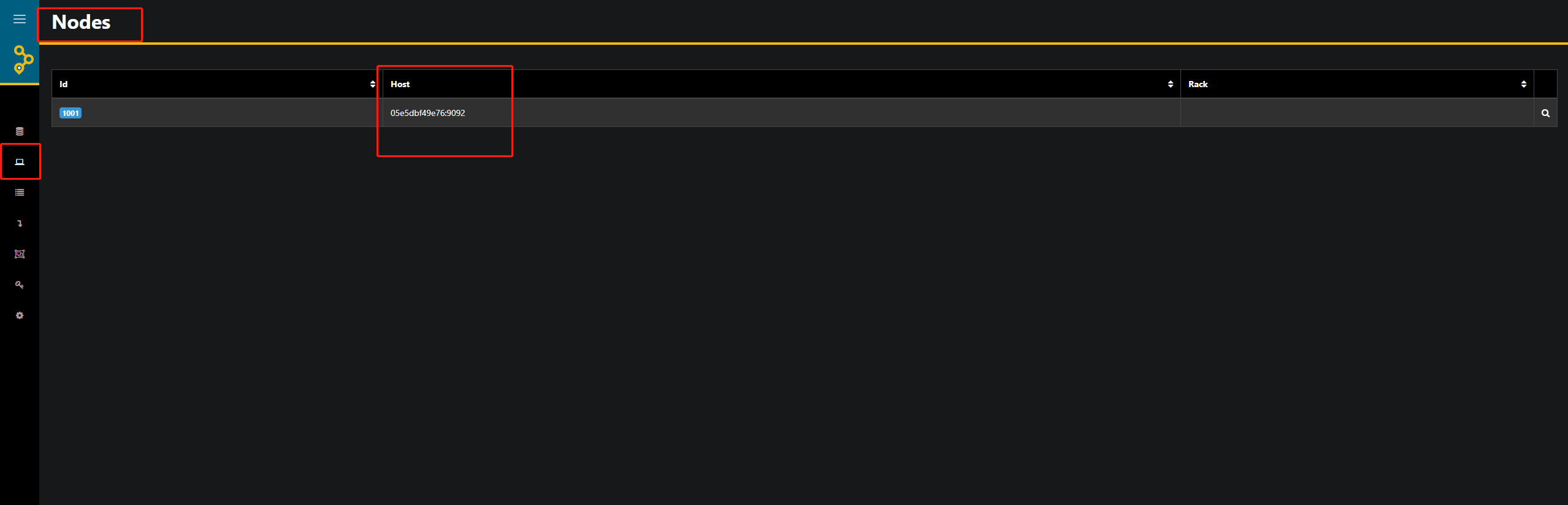

http://ip:10080/ui/docker -kafka server / topic. You can access kafka through the browser, as shown below

Select the part in the red box and try more. The create topic button at the bottom can create your own topic

I create a demo, and then the whole environment is built

2.3. Problems

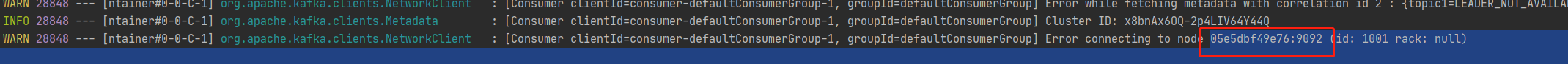

Look at my screenshot. If a Spring Boot project connects to this Kafka through its properties configuration file, you will find that filling in ip:port does not take effect. The Kafka running in the Docker container registers itself with ZooKeeper, and the address it exposes is the container's address rather than the host's. Now let's solve this problem.

The problem I ran into

The solution found through Baidu

-e KAFKA_ADVERTISED_LISTENERS=PLAINTEXT://172.16.0.13:9092 registers Kafka's address and port with ZooKeeper. For remote access, it should be changed to the external network IP; otherwise, for example, a Java program will fail to connect.

First the principle, then the solution.

3. Introduction to Kafka listeners and advertised.listeners

3.1 Introduction and differences

Deploying a Kafka cluster inside the company's intranet only requires listeners, so at first I had to look up what advertised.listeners does. Because I didn't have enough experience, I never fully understood it. Later, I found that it plays an important role in Docker deployments and cloud host (ECS) deployments, where the internal and external networks need to be distinguished.

Let's first look at the text description:

- listeners: the listeners themselves, that is, the addresses the broker binds to. Each entry tells connecting clients which protocol, host name, and port to use to access the Kafka service.

- advertised.listeners: the same name with "advertised" in front. Advertised means declared or published, so this is the set of listeners that the broker publishes to the outside.

For instance:

listeners: INSIDE://172.17.0.10:9092,OUTSIDE://172.17.0.10:9094
advertised_listeners: INSIDE://172.17.0.10:9092,OUTSIDE://<public IP>:<port>
kafka_listener_security_protocol_map: "INSIDE:SASL_PLAINTEXT,OUTSIDE:SASL_PLAINTEXT"
kafka_inter_broker_listener_name: "INSIDE"

The advertised_listeners are registered in ZooKeeper.

When we request a connection to 172.17.0.10:9092, the Kafka server finds the INSIDE listener among the listeners registered in ZooKeeper, and then finds the corresponding communication IP and port through listeners.

Similarly, when we request a connection to <public IP>:<port>, the Kafka server finds the OUTSIDE listener among the listeners registered in ZooKeeper, and then finds the corresponding communication IP and port, 172.17.0.10:9094, through listeners.

Summary: advertised_listeners are the service addresses exposed to the outside world. listeners are the addresses actually used to establish connections.
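To make the name://host:port structure of these values concrete, here is a small sketch that splits a listeners string into its parts. The function name parse_listeners is hypothetical, and it assumes entries are separated by commas without spaces, as in the example above.

```shell
# Hypothetical helper: split a listeners string into "name host port" lines.
parse_listeners() {
  # Listeners are comma-separated; put each entry on its own line
  printf '%s\n' "$1" | tr ',' '\n' | while IFS= read -r entry; do
    [ -n "$entry" ] || continue
    name="${entry%%://*}"      # text before "://"
    hostport="${entry#*://}"   # text after "://"
    host="${hostport%:*}"      # everything before the last ":"
    port="${hostport##*:}"     # everything after the last ":"
    # An empty host (as in PLAINTEXT://:9092) means no specific bind address
    printf '%s %s %s\n' "$name" "${host:-(any)}" "$port"
  done
}
```

Running it on both the listeners and the advertised_listeners value makes it easy to see which listener names share a port internally but are published under different addresses.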

Scenarios

Intranet only

For example, a Kafka cluster built by the company is used only by services inside the intranet. In this case, you only need listeners

listeners: <protocol name>://<intranet IP>:<port>

For example:

listeners: SASL_PLAINTEXT://192.168.0.4:9092

Internal and external networks

When deploying Kafka clusters in Docker or on cloud hosts such as Alibaba Cloud, advertised_listeners is required.

Take Docker as an example:

listeners: INSIDE://0.0.0.0:9092,OUTSIDE://0.0.0.0:9094
advertised_listeners: INSIDE://localhost:9092,OUTSIDE://<host IP>:<host exposed port>
kafka_listener_security_protocol_map: "INSIDE:SASL_PLAINTEXT,OUTSIDE:SASL_PLAINTEXT"
kafka_inter_broker_listener_name: "INSIDE"

4. Problem solving

The docker compose file remains unchanged

Besides the problems in my own notes, the other problems I found by searching are essentially the same as the ones I encountered.

Two problems encountered when using Kafka:

- 1. Caused by: java.nio.channels.UnresolvedAddressException: null
- 2. org.apache.kafka.common.errors.TimeoutException: Expiring 1 record(s) for t2-0: 30042 ms has passed since batch creation plus linger time

The following is someone else's solution, which I have tested and found to work

The two errors have the same cause.

4.1 Reproducing the problem

The Java producer sends a message to the Kafka cluster, and the error is as follows:

2018-06-03 00:10:02.071 INFO 80 --- [nio-8080-exec-1] o.a.kafka.common.utils.AppInfoParser : Kafka version : 0.10.2.0
2018-06-03 00:10:02.071 INFO 80 --- [nio-8080-exec-1] o.a.kafka.common.utils.AppInfoParser : Kafka commitId : 576d93a8dc0cf421
2018-06-03 00:10:32.253 ERROR 80 --- [ad | producer-1] o.s.k.support.LoggingProducerListener : Exception thrown when sending a message with key='test1' and payload='hello122' to topic t2:
org.apache.kafka.common.errors.TimeoutException: Expiring 1 record(s) for t2-0: 30042 ms has passed since batch creation plus linger time

- Clearly the connection to the Kafka cluster timed out, but the message does not reveal the specific reason.

4.2 Troubleshooting

- Confirm that the Kafka cluster has been started, including both ZooKeeper and Kafka. You can use the command

ps -ef | grep java

- After checking, both ZooKeeper and the Kafka cluster had started successfully

- Determine whether the Kafka configuration of the producer program is incorrect.

The Spring Boot configuration is as follows

spring.kafka.bootstrap-servers=39.108.61.252:9092
spring.kafka.consumer.group-id=springboot-group1
spring.kafka.consumer.auto-offset-reset=earliest

- The configuration is correct

- Determine whether the firewall or security group of the machine hosting the Kafka cluster has opened the relevant ports.

On Windows, use the telnet tool to check whether the port is open and being listened on

Open a cmd command line and run

telnet 39.108.61.252 9092

- The connection succeeds, which indicates that the problem is on the side of the program.
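On a Linux machine without telnet, roughly the same port check can be done from the shell. This is a sketch under a few assumptions: bash is installed (the /dev/tcp path is a bash feature), timeout from GNU coreutils is available, and the function name port_open is hypothetical.

```shell
# Hypothetical helper: returns 0 if a TCP connection to host:port succeeds within 3 seconds.
port_open() {
  host="$1"
  port="$2"
  # /dev/tcp is a bash feature, so the probe runs in an explicit bash child process;
  # timeout bounds the wait when a firewall silently drops the packets
  timeout 3 bash -c 'exec 3<>"/dev/tcp/$0/$1"' "$host" "$port" 2>/dev/null
}
```

For example, `port_open 39.108.61.252 9092 && echo "port reachable"`. As with telnet, success only shows that the port is reachable, not that the address advertised by the broker can be resolved.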

- Switch the log level to debug to see the error details

The Spring Boot setting is as follows:

logging.level.root=debug

Then restart the application

The log now keeps printing errors continuously

As follows:

2018-06-03 00:22:37.703 DEBUG 5972 --- [ t1-0-C-1] org.apache.kafka.clients.NetworkClient : Initialize connection to node 0 for sending metadata request

2018-06-03 00:22:37.703 DEBUG 5972 --- [ t1-0-C-1] org.apache.kafka.clients.NetworkClient : Initiating connection to node 0 at izwz9c79fdwp9sb65vpyk3z:9092.

2018-06-03 00:22:37.703 DEBUG 5972 --- [ t1-0-C-1] org.apache.kafka.clients.NetworkClient : Error connecting to node 0 at izwz9c79fdwp9sb65vpyk3z:9092:

java.io.IOException: Can't resolve address: izwz9c79fdwp9sb65vpyk3z:9092

at org.apache.kafka.common.network.Selector.connect(Selector.java:182) ~[kafka-clients-0.10.2.0.jar:na]

at org.apache.kafka.clients.NetworkClient.initiateConnect(NetworkClient.java:629) [kafka-clients-0.10.2.0.jar:na]

at org.apache.kafka.clients.NetworkClient.access$600(NetworkClient.java:57) [kafka-clients-0.10.2.0.jar:na]

at org.apache.kafka.clients.NetworkClient$DefaultMetadataUpdater.maybeUpdate(NetworkClient.java:768) [kafka-clients-0.10.2.0.jar:na]

at org.apache.kafka.clients.NetworkClient$DefaultMetadataUpdater.maybeUpdate(NetworkClient.java:684) [kafka-clients-0.10.2.0.jar:na]

at org.apache.kafka.clients.NetworkClient.poll(NetworkClient.java:347) [kafka-clients-0.10.2.0.jar:na]

at org.apache.kafka.clients.consumer.internals.ConsumerNetworkClient.poll(ConsumerNetworkClient.java:203) [kafka-clients-0.10.2.0.jar:na]

at org.apache.kafka.clients.consumer.internals.ConsumerNetworkClient.awaitMetadataUpdate(ConsumerNetworkClient.java:138) [kafka-clients-0.10.2.0.jar:na]

at org.apache.kafka.clients.consumer.internals.AbstractCoordinator.ensureCoordinatorReady(AbstractCoordinator.java:216) [kafka-clients-0.10.2.0.jar:na]

at org.apache.kafka.clients.consumer.internals.AbstractCoordinator.ensureCoordinatorReady(AbstractCoordinator.java:193) [kafka-clients-0.10.2.0.jar:na]

at org.apache.kafka.clients.consumer.internals.ConsumerCoordinator.poll(ConsumerCoordinator.java:275) [kafka-clients-0.10.2.0.jar:na]

at org.apache.kafka.clients.consumer.KafkaConsumer.pollOnce(KafkaConsumer.java:1030) [kafka-clients-0.10.2.0.jar:na]

at org.apache.kafka.clients.consumer.KafkaConsumer.poll(KafkaConsumer.java:995) [kafka-clients-0.10.2.0.jar:na]

at org.springframework.kafka.listener.KafkaMessageListenerContainer$ListenerConsumer.run(KafkaMessageListenerContainer.java:558) [spring-kafka-1.2.2.RELEASE.jar:na]

at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511) [na:1.8.0_144]

at java.util.concurrent.FutureTask.run(FutureTask.java:266) [na:1.8.0_144]

at java.lang.Thread.run(Thread.java:748) [na:1.8.0_144]

Caused by: java.nio.channels.UnresolvedAddressException: null

at sun.nio.ch.Net.checkAddress(Net.java:101) ~[na:1.8.0_144]

at sun.nio.ch.SocketChannelImpl.connect(SocketChannelImpl.java:622) ~[na:1.8.0_144]

at org.apache.kafka.common.network.Selector.connect(Selector.java:179) ~[kafka-clients-0.10.2.0.jar:na]

... 17 common frames omitted

- It can be seen that the Kafka server address cannot be resolved when the socket is established

You can see from the log that the address being resolved is izwz9c79fdwp9sb65vpyk3z

This address is the instance name of the remote server (an Alibaba Cloud server). What you configured is clearly an IP, but internally the program obtains the host name (alias) that the broker advertises. If this alias is not mapped to the IP on the producer's machine, it cannot be resolved to the corresponding IP, so the connection fails with an error.
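When the debug log is long, the addresses the client actually tries to connect to can be pulled out with a short filter. This is a sketch that assumes the "Initiating connection to node N at host:port." message format of the 0.10.2 client shown in the log above; the function name advertised_from_log is hypothetical, and other client versions may word the message differently.

```shell
# Hypothetical helper: read a client debug log on stdin and print each distinct
# host:port that the client tried to connect to.
advertised_from_log() {
  # Keep only the host:port token that follows "Initiating connection to node N at"
  sed -n 's/.*Initiating connection to node [0-9-]* at \([^ ]*:[0-9][0-9]*\).*/\1/p' | sort -u
}
```

For example, `advertised_from_log < app.log` (app.log being a placeholder for your own log file). If the output shows a host name instead of the IP you configured, that name is what the client machine must be able to resolve.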

4.3 Solution

On Windows, add a hosts mapping in

C:\Windows\System32\drivers\etc\hosts

39.108.61.252 izwz9c79fdwp9sb65vpyk3z
127.0.0.1 localhost

- On Linux, edit the hosts file in the same way

vi /etc/hosts
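After editing the hosts file, a quick check can confirm that the mapping is really there. The sketch below only inspects the file; the function name hosts_has_entry is hypothetical, and the second argument is optional and defaults to /etc/hosts.

```shell
# Hypothetical helper: returns 0 if the hosts file maps the given name to some address.
# Arguments: host name, hosts file (default: /etc/hosts).
hosts_has_entry() {
  name="$1"
  file="${2:-/etc/hosts}"
  awk -v n="$name" '
    /^[[:space:]]*#/ { next }                  # skip comment lines
    {
      for (i = 2; i <= NF; i++) {
        if ($i ~ /^#/) break                   # ignore trailing comments
        if (tolower($i) == tolower(n)) found = 1
      }
    }
    END { exit(found ? 0 : 1) }
  ' "$file"
}
```

For example, `hosts_has_entry izwz9c79fdwp9sb65vpyk3z || echo "mapping missing"`.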

Now restart the producer application

The log is as follows. After a successful startup, the client keeps sending heartbeats and committing offsets in the background, so the debug log scrolls continuously. At this point you can change the log level back to info

2018-06-03 00:29:46.543 DEBUG 12772 --- [ t2-0-C-1] o.a.k.c.c.internals.ConsumerCoordinator : Group springboot-group1 committed offset 10 for partition t2-0

2018-06-03 00:29:46.543 DEBUG 12772 --- [ t2-0-C-1] o.a.k.c.c.internals.ConsumerCoordinator : Completed auto-commit of offsets {t2-0=OffsetAndMetadata{offset=10, metadata=''}} for group springboot-group1

2018-06-03 00:29:46.563 DEBUG 12772 --- [ t1-0-C-1] o.a.k.c.c.internals.ConsumerCoordinator : Group springboot-group1 committed offset 0 for partition t1-0

2018-06-03 00:29:46.563 DEBUG 12772 --- [ t1-0-C-1] o.a.k.c.c.internals.ConsumerCoordinator : Completed auto-commit of offsets {t1-0=OffsetAndMetadata{offset=0, metadata=''}} for group springboot-group1

2018-06-03 00:29:46.672 DEBUG 12772 --- [ t2-0-C-1] o.a.k.c.consumer.internals.Fetcher : Sending fetch for partitions [t2-0] to broker izwz9c79fdwp9sb65vpyk3z:9092 (id: 0 rack: null)

2018-06-03 00:29:46.867 DEBUG 12772 --- [ t1-0-C-1] essageListenerContainer$ListenerConsumer : Received: 0 records

2018-06-03 00:29:46.872 DEBUG 12772 --- [ t2-0-C-1] essageListenerContainer$ListenerConsumer : Received: 0 records

Producing data now succeeds!

Finally, here is an alternative solution. Instead of configuring the hosts file, you only need to register a public domain name, point it at the server through DNS, and set it in the docker compose file:

  kafka:
    image: wurstmeister/kafka:2.13-2.6.0
    container_name: kafka
    restart: 'always'
    ports:
      - 9092:9092
    environment:
      KAFKA_ADVERTISED_LISTENERS: PLAINTEXT://ilovechina.henanjiayou.com:9092
      KAFKA_LISTENERS: PLAINTEXT://:9092
      KAFKA_ZOOKEEPER_CONNECT: zookeeper:2181
      TZ: Asia/Shanghai
    volumes:
      - /var/run/docker.sock:/var/run/docker.sock
    depends_on:
      - zookeeper

ilovechina.henanjiayou.com is a public domain name that I registered personally