Netty is a high-performance, asynchronous, event-driven NIO framework built on the API provided by Java NIO. It supports TCP, UDP, and file transfer. All of Netty's IO operations are asynchronous and non-blocking. Through the Future-Listener mechanism, users can obtain the result of an IO operation either by querying it actively or by being notified when it completes.

1, Reasons for Netty's high performance

In IO programming, when multiple client requests must be handled at the same time, either multithreading or IO multiplexing can be used. IO multiplexing funnels the blocking of multiple IO operations into a single select call, so that a single thread can serve multiple client requests at once. Compared with the traditional multi-thread / multi-process model, the biggest advantage of IO multiplexing is its low system overhead: the system does not need to create additional processes or threads, nor maintain them, which reduces maintenance work and saves system resources.

Corresponding to Socket class and ServerSocket class, NIO also provides two different Socket channel implementations, SocketChannel and ServerSocketChannel.

1.1 multiplex communication mode

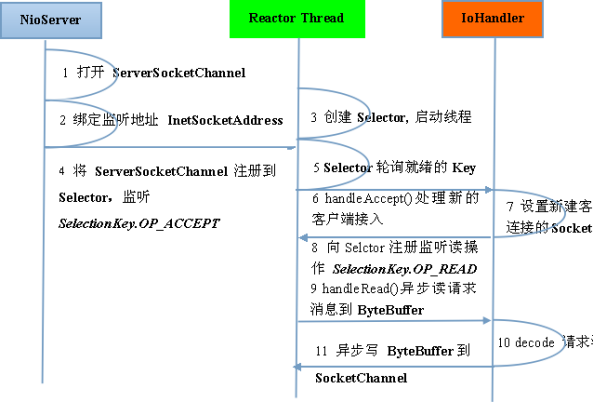

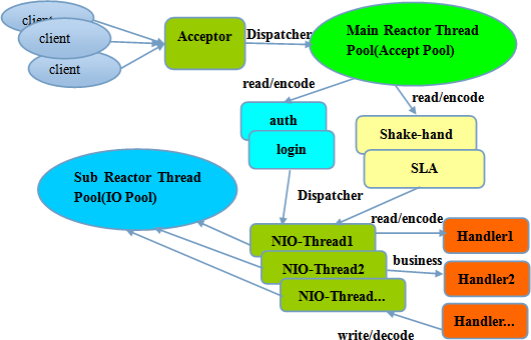

Netty architecture is designed and implemented according to Reactor mode, and its server communication sequence diagram is as follows:

Reactor pattern design

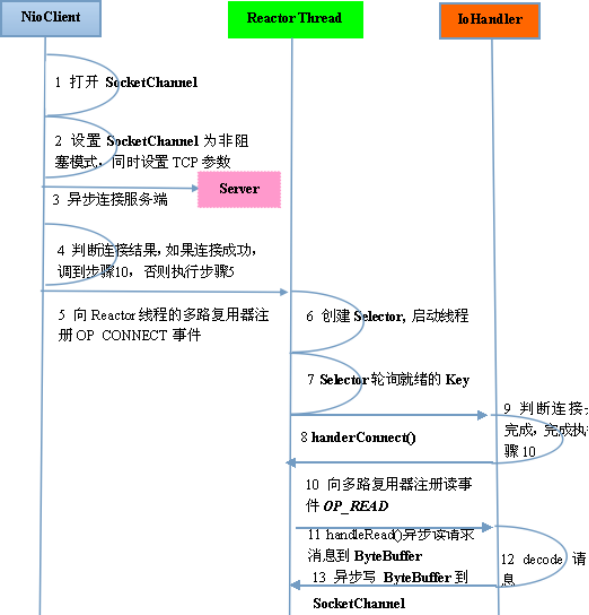

The client communication sequence diagram is as follows:

Client communication

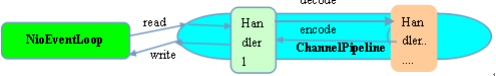

Netty's IO thread, NioEventLoop, can process hundreds of client channels simultaneously because it aggregates the multiplexer Selector. Because read and write operations are non-blocking, the IO thread runs far more efficiently and is not suspended by frequent IO blocking.
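The multiplexing idea can be sketched with the plain JDK, without Netty. The example below is only an illustration under stated assumptions: the class name `SelectorSketch` is hypothetical, and in-process `Pipe` channels stand in for client sockets so that the example runs without a network. One thread and one `Selector` read from several channels.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.ByteBuffer;
import java.nio.channels.Pipe;
import java.nio.channels.SelectionKey;
import java.nio.channels.Selector;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.Iterator;
import java.util.List;

// Hypothetical sketch: one thread, one Selector, several channels.
final class SelectorSketch {

    /** Reads one message from each of n channels using a single thread and a single Selector. */
    static List<String> readAll(int n) {
        List<String> received = new ArrayList<>();
        List<Pipe> pipes = new ArrayList<>();
        try (Selector selector = Selector.open()) {
            for (int i = 0; i < n; i++) {
                Pipe pipe = Pipe.open();
                pipes.add(pipe);
                // A channel must be non-blocking before it can be registered.
                pipe.source().configureBlocking(false);
                pipe.source().register(selector, SelectionKey.OP_READ, "channel-" + i);
                // Simulate a peer sending data on this channel.
                pipe.sink().write(ByteBuffer.wrap(("hello-" + i).getBytes(StandardCharsets.UTF_8)));
            }
            ByteBuffer buffer = ByteBuffer.allocate(64);
            while (received.size() < n) {
                selector.select(); // blocks until at least one channel is readable
                Iterator<SelectionKey> keys = selector.selectedKeys().iterator();
                while (keys.hasNext()) {
                    SelectionKey key = keys.next();
                    keys.remove();
                    if (!key.isReadable()) {
                        continue;
                    }
                    buffer.clear();
                    int read = ((Pipe.SourceChannel) key.channel()).read(buffer);
                    if (read > 0) {
                        buffer.flip();
                        received.add(key.attachment() + ":" + StandardCharsets.UTF_8.decode(buffer));
                    }
                }
            }
            return received;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        } finally {
            for (Pipe pipe : pipes) {
                try {
                    pipe.sink().close();
                    pipe.source().close();
                } catch (IOException ignored) {
                    // best-effort cleanup in a sketch
                }
            }
        }
    }

    public static void main(String[] args) {
        System.out.println(readAll(3));
    }
}
```

The order in which the channels are reported is not guaranteed, which is why the attachment is used to tell them apart.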

1.2 asynchronous communication NIO

Because Netty adopts an asynchronous communication mode, one IO thread can handle N client connections and their read and write operations concurrently. This fundamentally solves the problem of the traditional synchronous blocking IO model, which ties one thread to each connection, and it greatly improves the performance, elastic scalability, and reliability of the architecture.

1.3 zero copy (DIRECT BUFFERS use off heap direct memory)

- Netty receives and sends data with direct buffers (DIRECT BUFFERS), using off-heap direct memory for Socket reads and writes, so no second copy of the byte buffer is needed. If traditional heap buffers (HEAP BUFFERS) are used for Socket reads and writes, the JVM first copies the heap buffer into direct memory and then writes it to the Socket. Compared with off-heap direct memory, sending a message costs one extra memory copy of the buffer.

- Netty provides a composite Buffer object that aggregates multiple ByteBuffer objects. Users can operate on the composite Buffer as easily as on a single Buffer, which avoids the traditional approach of merging several small buffers into one large Buffer through memory copies.

- Netty's file transfer uses the transferTo method, which sends the data in the file buffer directly to the target Channel and avoids the memory copies caused by the traditional approach of writing in a loop.
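Two of the points above can be tried with the JDK alone. The following is a minimal sketch, not Netty's implementation: the class name `ZeroCopySketch` and the temp-file setup are assumptions made for illustration. It allocates a direct buffer and moves a file's bytes with `FileChannel.transferTo` instead of a read/write loop through a user-space buffer.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.ByteBuffer;
import java.nio.channels.FileChannel;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;
import java.util.Arrays;

// Hypothetical sketch of direct buffers and transferTo using only the JDK.
final class ZeroCopySketch {

    /** Copies source to target with transferTo and returns the number of bytes moved. */
    static long transfer(Path source, Path target) {
        try (FileChannel in = FileChannel.open(source, StandardOpenOption.READ);
             FileChannel out = FileChannel.open(target, StandardOpenOption.WRITE)) {
            long position = 0;
            long size = in.size();
            // transferTo may move fewer bytes than requested, so loop until done.
            while (position < size) {
                position += in.transferTo(position, size - position, out);
            }
            return position;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    /** Round-trips a small temporary file and reports whether the copy matches the original. */
    static boolean demo() {
        try {
            Path source = Files.createTempFile("zero-copy-src", ".bin");
            Path target = Files.createTempFile("zero-copy-dst", ".bin");
            try {
                byte[] payload = "hello netty".getBytes(StandardCharsets.UTF_8);
                Files.write(source, payload);
                long moved = transfer(source, target);
                return moved == payload.length
                        && Arrays.equals(payload, Files.readAllBytes(target));
            } finally {
                Files.deleteIfExists(source);
                Files.deleteIfExists(target);
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        // A direct buffer is allocated outside the Java heap.
        ByteBuffer direct = ByteBuffer.allocateDirect(1024);
        System.out.println("direct=" + direct.isDirect() + ", copied=" + demo());
    }
}
```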

1.4 memory pool (buffer reuse mechanism based on memory pool)

With the development of the JVM and just-in-time (JIT) compilation, allocating and reclaiming objects has become a very lightweight task. Buffers are a little different, however: allocating and reclaiming off-heap direct memory in particular is a time-consuming operation. To reuse buffers as much as possible, Netty provides a buffer reuse mechanism based on a memory pool.
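The reuse idea can be illustrated with a deliberately simple pool. This is a sketch under assumptions, not Netty's allocator, which is considerably more sophisticated. The class `DirectBufferPool` and its fixed buffer size are hypothetical.

```java
import java.nio.ByteBuffer;
import java.util.concurrent.ConcurrentLinkedQueue;

// Hypothetical sketch: a fixed-size pool that hands out and takes back direct buffers.
final class DirectBufferPool {
    private final ConcurrentLinkedQueue<ByteBuffer> free = new ConcurrentLinkedQueue<>();
    private final int bufferSize;

    DirectBufferPool(int bufferSize) {
        this.bufferSize = bufferSize;
    }

    /** Returns a pooled buffer if one is free, otherwise allocates a new direct buffer. */
    ByteBuffer acquire() {
        ByteBuffer buffer = free.poll();
        return buffer != null ? buffer : ByteBuffer.allocateDirect(bufferSize);
    }

    /** Resets the buffer and puts it back so that a later acquire() can reuse it. */
    void release(ByteBuffer buffer) {
        buffer.clear();
        free.offer(buffer);
    }

    public static void main(String[] args) {
        DirectBufferPool pool = new DirectBufferPool(4096);
        ByteBuffer first = pool.acquire();
        pool.release(first);
        ByteBuffer second = pool.acquire();
        // The second acquire() reuses the buffer instead of allocating again.
        System.out.println("reused=" + (first == second));
    }
}
```

The expensive `allocateDirect` call happens only when the pool is empty. Every later request is served from the queue.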

1.5. Efficient Reactor thread model

There are three commonly used Reactor thread models: Reactor single thread model, Reactor multi thread model and master-slave Reactor multi thread model.

1.5.1. Reactor single thread model

The Reactor single thread model means that all IO operations are completed on the same NIO thread. The responsibilities of the NIO thread are as follows:

1) As the NIO server, it receives the TCP connection of the client;

2) As a NIO client, initiate a TCP connection to the server;

3) Read the request or response message of the communication peer

4) Send a message request or response message to the communication counterpart.

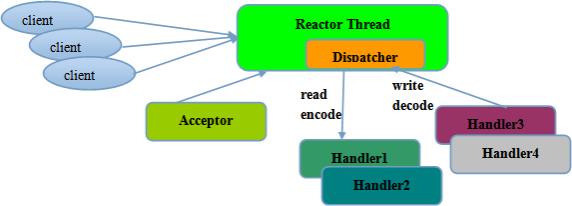

Reactor single thread model

Since the Reactor pattern uses asynchronous non-blocking IO, no IO operation blocks, so in theory one thread can handle all IO-related operations on its own. From an architectural point of view, a single NIO thread can indeed fulfill these responsibilities. For example, the Acceptor receives the TCP connection request from the client. After the link is established, the corresponding ByteBuffer is dispatched to the specified Handler for message decoding, and the user Handler can send messages to the client through the NIO thread.

1.5.2. Reactor multithreading model

The biggest difference between the Reactor multithreading model and the single thread model is that a group of NIO threads handles the IO operations. A dedicated NIO thread, the Acceptor thread, listens on the server port and receives TCP connection requests from clients. A NIO thread pool is responsible for network IO operations such as reads and writes. The pool can be implemented with the standard JDK thread pool and contains a task queue and N available threads, which are responsible for reading, decoding, encoding, and sending messages.

Reactor multithreading model

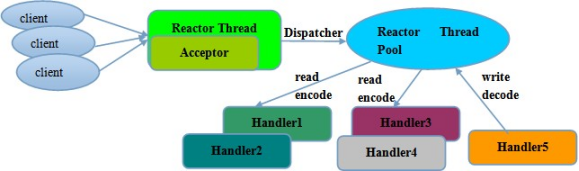

1.5.3. Master slave Reactor multithreading model

The server no longer uses a single NIO thread to accept client connections, but an independent NIO thread pool. After the Acceptor receives a TCP connection request from a client and finishes processing it (including access authentication and so on), it registers the newly created SocketChannel with an IO thread in the IO thread pool (the sub reactor thread pool), and that thread is responsible for reading, writing, encoding, and decoding on the SocketChannel. The Acceptor thread pool is used only for client login, handshake, and security authentication. Once the link is established, it is registered with an IO thread of the backend subReactor thread pool, which handles all subsequent IO operations.

Master slave Reactor multithreading model

1.6 lock free design and thread binding

Netty adopts a serial, lock-free design: operations are performed serially within an IO thread, which avoids the performance degradation caused by multi-thread contention. On the surface, a serialized design seems to have low CPU utilization and insufficient concurrency. However, by tuning the thread parameters of the NIO thread pool, several serialized threads can be started and run in parallel. This locally lock-free serial design performs better than a model with one queue and multiple worker threads.

No lock

After Netty's NioEventLoop reads the message, it directly calls the fireChannelRead(Object msg) of ChannelPipeline. As long as the user does not actively switch threads, NioEventLoop will always call the user's Handler without thread switching. This serialization method avoids lock competition caused by multi-threaded operation and is optimal from the perspective of performance.
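The serial design can be sketched with JDK executors. This is a rough analogy rather than Netty's code. The class `SerialLoopSketch`, the channel id, and the counter are assumptions for illustration. Each channel is always mapped to the same single-threaded loop, so per-channel state needs no lock.

```java
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

// Hypothetical sketch: several single-threaded loops, each channel pinned to one of them.
final class SerialLoopSketch {
    private final ExecutorService[] loops;

    SerialLoopSketch(int threads) {
        loops = new ExecutorService[threads];
        for (int i = 0; i < threads; i++) {
            loops[i] = Executors.newSingleThreadExecutor();
        }
    }

    /** A channel id always maps to the same loop, so its tasks never run concurrently. */
    ExecutorService loopFor(int channelId) {
        return loops[Math.floorMod(channelId, loops.length)];
    }

    void shutdown() {
        for (ExecutorService loop : loops) {
            loop.shutdown();
        }
    }

    /** Increments an unsynchronized counter from tasks that all run on one loop thread. */
    static int demo(int increments) {
        SerialLoopSketch group = new SerialLoopSketch(2);
        int[] counter = new int[1]; // plain state, touched only by the loop thread
        try {
            ExecutorService loop = group.loopFor(7);
            for (int i = 0; i < increments; i++) {
                loop.execute(() -> counter[0]++);
            }
            // Tasks run in submission order, so this read sees every increment.
            return loop.submit(() -> counter[0]).get();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        } catch (ExecutionException e) {
            throw new IllegalStateException(e);
        } finally {
            group.shutdown();
        }
    }

    public static void main(String[] args) {
        System.out.println(demo(1000));
    }
}
```

Parallelism comes from running several such loops side by side, not from sharing one channel's state between threads.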

1.7. High performance serialization framework

Netty provides support for Google Protobuf by default. By extending netty's codec interface, users can implement other high-performance serialization frameworks, such as Thrift's compressed binary codec framework.

- SO_RCVBUF and SO_SNDBUF: the generally recommended value is 128K or 256K.

- SO_TCPNODELAY (named TCP_NODELAY in the socket API): the Nagle algorithm automatically joins the small packets in the buffer into larger packets, so that a large number of small packets does not congest the network, which improves the efficiency of network applications. However, for delay-sensitive application scenarios, this optimization algorithm needs to be turned off.

- Soft interrupt hash value and CPU binding: after RPS is enabled, soft interrupts can be distributed to improve network throughput. RPS calculates a hash value from the source address, destination address, and source and destination ports of the packet, and then selects the CPU that runs the soft interrupt according to the hash value. Seen from the upper layer, each connection is bound to a CPU, and the hash value balances soft interrupts across multiple CPUs, which improves parallel network processing performance.
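The buffer-size and Nagle settings from the list above can be applied with the plain JDK socket API. The sketch below is an assumption-laden illustration: the class name `SocketOptionsSketch` is hypothetical and the channel is never connected. In Netty the same options are set through `ChannelOption` on the bootstrap.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.net.StandardSocketOptions;
import java.nio.channels.SocketChannel;

// Hypothetical sketch: setting TCP options on an unconnected JDK SocketChannel.
final class SocketOptionsSketch {

    /** Applies the options and returns whether TCP_NODELAY is reported as enabled. */
    static boolean configure(int bufferBytes) {
        try (SocketChannel channel = SocketChannel.open()) {
            // The operating system may round or cap the requested buffer sizes.
            channel.setOption(StandardSocketOptions.SO_RCVBUF, bufferBytes);
            channel.setOption(StandardSocketOptions.SO_SNDBUF, bufferBytes);
            // Disables the Nagle algorithm for latency-sensitive traffic.
            channel.setOption(StandardSocketOptions.TCP_NODELAY, true);
            return channel.getOption(StandardSocketOptions.TCP_NODELAY);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println("TCP_NODELAY=" + configure(128 * 1024));
    }
}
```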

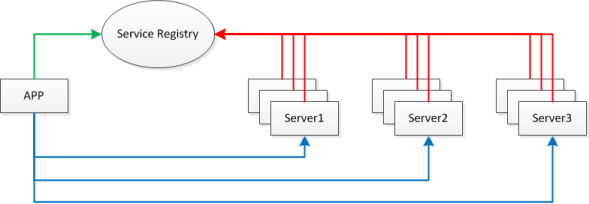

2, Netty and RPC

RPC (Remote Procedure Call) means calling a service on a remote computer as if it were a local service. RPC decouples systems well. For example, WebService is an RPC based on the HTTP protocol. The overall framework of such an RPC is as follows:

RPC overall framework

2.1 key technologies

- Service publishing and subscription: the server uses Zookeeper to register the service address, and the client obtains the available service address from Zookeeper.

- Communication: use Netty as the communication framework.

- Spring: use Spring to configure services, load beans, and scan annotations.

- Dynamic proxy: clients use the proxy pattern to invoke services transparently.

- Message encoding and decoding: serialize and deserialize messages using Protostuff.

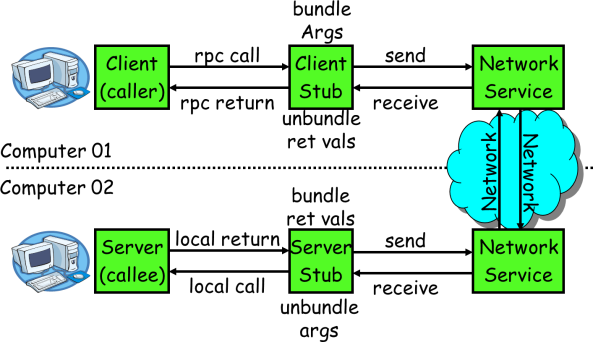

2.2 core process

1. The service consumer (client) invokes the service as if it were a local call;

2. After receiving the call, the client stub assembles the method, parameters, and so on into a message body that can be transmitted over the network;

3. The client stub finds the service address and sends the message to the server;

4. The server stub decodes the message after receiving it;

5. The server stub calls the local service according to the decoding result;

6. The local service executes and returns the result to the server stub;

7. The server stub packages the returned result into a message and sends it to the consumer;

8. The client stub receives the message and decodes it;

9. The service consumer gets the final result.

The goal of RPC is to encapsulate steps 2 to 8 so that these details are transparent to the user. Java generally uses dynamic proxies to implement remote calls.
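The stub idea can be sketched with `java.lang.reflect.Proxy`. Everything in this example is hypothetical (`StubSketch`, `Calculator`, `CalculatorImpl`), and the network hop is replaced by a direct method call so the sketch stays runnable. A real client stub would encode the call and send it to the server at that point.

```java
import java.lang.reflect.InvocationHandler;
import java.lang.reflect.Method;
import java.lang.reflect.Proxy;

// Hypothetical sketch: client and server stubs connected in-process instead of over a network.
final class StubSketch {

    /** Hypothetical service interface shared by client and server. */
    interface Calculator {
        int add(int a, int b);
    }

    /** Hypothetical server-side implementation. */
    static final class CalculatorImpl implements Calculator {
        @Override
        public int add(int a, int b) {
            return a + b;
        }
    }

    /** Server stub: finds the method by name and parameter types, then invokes the local service. */
    static Object serverStub(Object service, String methodName, Class<?>[] types, Object[] args)
            throws Exception {
        Method method = service.getClass().getMethod(methodName, types);
        return method.invoke(service, args);
    }

    /** Client stub: a dynamic proxy that turns each call into the data a request would carry. */
    @SuppressWarnings("unchecked")
    static <T> T clientStub(Class<T> interfaceClass, Object remoteService) {
        InvocationHandler handler = (proxy, method, args) ->
                // A real client would serialize these values and send them to the server here.
                serverStub(remoteService, method.getName(), method.getParameterTypes(), args);
        return (T) Proxy.newProxyInstance(
                interfaceClass.getClassLoader(), new Class<?>[]{interfaceClass}, handler);
    }

    public static void main(String[] args) {
        Calculator calculator = clientStub(Calculator.class, new CalculatorImpl());
        // The caller sees an ordinary local method call.
        System.out.println(calculator.add(2, 3));
    }
}
```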

RPC call process

2.2.1 message encoding and decoding

Message data structure (interface name + method name + parameter type and parameter value + timeout + requestID)

The request message structure of the client generally needs to include the following contents:

- Interface name: in our example, the interface name is "HelloWorldService". If it is not transmitted, the server will not know which interface to call;

- Method name: an interface may contain many methods. If the method name is not passed, the server will not know which method to call;

- Parameter types and parameter values: there are many parameter types, such as bool, int, long, double, string, map, list, and even struct (class), each with its corresponding parameter value;

- Timeout;

- requestID: uniquely identifies a request. The use of requestID is described in detail in the following section.

The message returned by the server generally includes the following contents: return value + status code + requestID.
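The fields listed above could be carried by a pair of classes like the following. This is a hypothetical sketch: the names `RpcMessageSketch`, `Request`, and `Response` are assumptions, and JDK serialization is used only as a stand-in codec to keep the example self-contained. It is not the Protostuff encoding mentioned earlier.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.ObjectInputStream;
import java.io.ObjectOutputStream;
import java.io.Serializable;

// Hypothetical sketch of the request and response message structure.
final class RpcMessageSketch {

    static final class Request implements Serializable {
        private static final long serialVersionUID = 1L;
        final long requestId;            // unique per socket connection
        final String interfaceName;      // which interface to call
        final String methodName;         // which method of that interface
        final Class<?>[] parameterTypes; // needed to resolve overloaded methods
        final Object[] parameters;       // the parameter values
        final long timeoutMillis;        // how long the caller is willing to wait

        Request(long requestId, String interfaceName, String methodName,
                Class<?>[] parameterTypes, Object[] parameters, long timeoutMillis) {
            this.requestId = requestId;
            this.interfaceName = interfaceName;
            this.methodName = methodName;
            this.parameterTypes = parameterTypes;
            this.parameters = parameters;
            this.timeoutMillis = timeoutMillis;
        }
    }

    static final class Response implements Serializable {
        private static final long serialVersionUID = 1L;
        final long requestId;     // echoes the requestId of the matching request
        final int statusCode;
        final Object returnValue;

        Response(long requestId, int statusCode, Object returnValue) {
            this.requestId = requestId;
            this.statusCode = statusCode;
            this.returnValue = returnValue;
        }
    }

    /** Encodes a message into bytes with JDK serialization (stand-in codec). */
    static byte[] encode(Serializable message) {
        try (ByteArrayOutputStream bytes = new ByteArrayOutputStream();
             ObjectOutputStream out = new ObjectOutputStream(bytes)) {
            out.writeObject(message);
            out.flush();
            return bytes.toByteArray();
        } catch (IOException e) {
            throw new IllegalStateException(e);
        }
    }

    /** Decodes bytes produced by encode(). */
    static Object decode(byte[] data) {
        try (ObjectInputStream in = new ObjectInputStream(new ByteArrayInputStream(data))) {
            return in.readObject();
        } catch (IOException | ClassNotFoundException e) {
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        Request request = new Request(1L, "HelloWorldService", "sayHello",
                new Class<?>[]{String.class}, new Object[]{"hi"}, 3000L);
        Request copy = (Request) decode(encode(request));
        System.out.println(copy.interfaceName + "#" + copy.methodName + " id=" + copy.requestId);
    }
}
```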

2.2.2 communication process

Core problems (thread pause, message disorder)

If you use Netty, you usually call the channel.writeAndFlush() method to send the binary message. Once this method has been called, the remote call as a whole (from sending the request to receiving the result) is asynchronous: the current thread can continue executing right after sending the request, while the result is sent back to the client as a message only after the server has finished processing. Two problems therefore arise:

- How can the current thread be made to "pause" and continue only after the result comes back?

- If multiple threads make remote method calls at the same time, many messages from both sides travel over the socket connection established between the client and the server, and their order may be arbitrary. After processing, the server sends the result message to the client, and the client receives many messages. How does it know which result belongs to the call made by which thread? For example, thread A and thread B send requests requestA and requestB to the client socket at the same time. The socket sends requestB and requestA to the server one after the other, and the server may return the responses in an order that differs from the order in which the requests arrived. We need a mechanism to ensure that responseA is delivered to ThreadA and responseB is delivered to ThreadB.

2.2.3 communication process

- Before each call to the remote interface through the socket, the client thread generates a unique ID, the requestID (it must be unique within a socket connection). Generally, an AtomicLong counting up from 0 is used to generate the unique ID;

- Store the callback object that handles the result in a global ConcurrentHashMap: put(requestID, callback);

- After a thread has called channel.writeAndFlush() to send the message, it calls the callback's get() method to try to obtain the remote result. Inside get(), synchronized is used to acquire the lock on the callback object, and the method checks whether the result has already arrived. If not, it calls the callback's wait() method, which releases the lock on the callback and puts the current thread into the waiting state.

- After receiving and processing the request, the server sends the response (which includes the original requestID) to the client. The thread that listens for messages on the client socket connection receives the message, parses the result, and extracts the requestID. It then looks up the callback object in the ConcurrentHashMap by requestID, uses synchronized to acquire the lock on the callback, stores the call result in the callback object, and calls callback.notifyAll() to wake up the waiting thread.
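The four steps above can be sketched as follows. The class names `PendingCalls` and `Callback`, the timeout handling, and the simulated IO thread are assumptions for illustration. No real socket is involved, and responses are delivered in reverse order on purpose.

```java
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.AtomicLong;

// Hypothetical sketch: matching responses to waiting callers by requestID.
final class PendingCalls {

    /** Holds the result of one call. The calling thread waits on this object. */
    static final class Callback {
        private Object result;
        private boolean done;

        synchronized Object get(long timeoutMillis) {
            long deadline = System.currentTimeMillis() + timeoutMillis;
            try {
                while (!done) { // the loop guards against spurious wake-ups
                    long remaining = deadline - System.currentTimeMillis();
                    if (remaining <= 0) {
                        throw new IllegalStateException("Timed out waiting for the response");
                    }
                    wait(remaining); // releases the lock on this callback while waiting
                }
                return result;
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            }
        }

        synchronized void complete(Object value) {
            result = value;
            done = true;
            notifyAll(); // wakes up the thread blocked in get()
        }
    }

    private final AtomicLong nextId = new AtomicLong(0);
    private final ConcurrentHashMap<Long, Callback> pending = new ConcurrentHashMap<>();

    /** Steps 1 and 2: generate a requestID and remember the callback under it. */
    long register(Callback callback) {
        long requestId = nextId.getAndIncrement();
        pending.put(requestId, callback);
        return requestId;
    }

    /** Step 4: called by the thread that reads responses from the socket. */
    void onResponse(long requestId, Object result) {
        Callback callback = pending.remove(requestId);
        if (callback != null) {
            callback.complete(result);
        }
    }

    /** Two calls whose responses arrive in reverse order still reach the right caller. */
    static String demo() {
        PendingCalls calls = new PendingCalls();
        Callback a = new Callback();
        Callback b = new Callback();
        long idA = calls.register(a);
        long idB = calls.register(b);
        Thread ioThread = new Thread(() -> {
            calls.onResponse(idB, "responseB");
            calls.onResponse(idA, "responseA");
        });
        ioThread.start();
        // Step 3: each caller blocks in get() until its own response arrives.
        return "A=" + a.get(1000) + ",B=" + b.get(1000);
    }

    public static void main(String[] args) {
        System.out.println(demo());
    }
}
```

A `CompletableFuture` per requestID would express the same idea with less code. The sketch uses wait() and notifyAll() only because that is the mechanism the text describes.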

3, Handwritten Dubbo framework based on Netty

3.1 implementation scheme

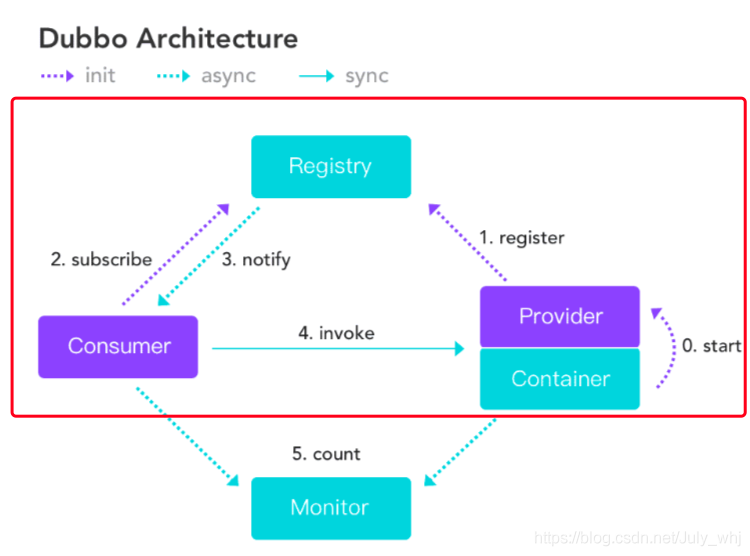

See the Dubbo architecture diagram on the official website:

Dubbo architecture diagram

1. First, the service provider registers its URL with the Registry through register.

2. The client (Consumer) obtains the registration information of the service to be called from the registry, such as the interface name and URL address.

3. The registry returns the URL address to the Consumer. Using the obtained URL address, the client invokes the service implementation through the reflection-based invoke mechanism.
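Before bringing in Zookeeper, the register and lookup steps can be pictured with an in-memory map. This is only a sketch under assumptions: the class `InMemoryRegistry` and its method names are hypothetical and stand in for the registry described above.

```java
import java.util.Collections;
import java.util.List;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.concurrent.ThreadLocalRandom;

// Hypothetical sketch: service name -> list of provider addresses, kept in memory.
final class InMemoryRegistry {
    private final Map<String, List<String>> providers = new ConcurrentHashMap<>();

    /** Step 1: a provider registers the address under its service name. */
    void register(String serviceName, String address) {
        providers.computeIfAbsent(serviceName, name -> new CopyOnWriteArrayList<>()).add(address);
    }

    /** Step 2: a consumer asks for all addresses of a service. */
    List<String> lookup(String serviceName) {
        return providers.getOrDefault(serviceName, Collections.<String>emptyList());
    }

    /** Step 3: the consumer picks one address to call, here at random. */
    String pickOne(String serviceName) {
        List<String> addresses = lookup(serviceName);
        if (addresses.isEmpty()) {
            throw new IllegalStateException("No provider registered for " + serviceName);
        }
        return addresses.get(ThreadLocalRandom.current().nextInt(addresses.size()));
    }

    public static void main(String[] args) {
        InMemoryRegistry registry = new InMemoryRegistry();
        registry.register("cn.org.july.test", "127.0.0.1:9090");
        System.out.println(registry.pickOne("cn.org.july.test"));
    }
}
```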

3.2. Overall project structure information

    |-- netty-to-dubbo
        |-- netty-dubbo-api
            |-- cn.org.july.netty.dubbo.api
                |-- IService : externally exposed service interface
                |-- RpcRequest : service request object bean
        |-- netty-dubbo-common
            |-- cn.org.july.netty.dubbo.annotation
                |-- RpcAnnotation : annotation that identifies a service interface
        |-- netty-dubbo-server
            |-- cn.org.july.netty.dubbo.registry
                |-- IRegisterCenter : service registration interface
                |-- RegisterCenterImpl : service registration implementation class
                |-- ZkConfig : ZK configuration file
            |-- cn.org.july.netty.dubbo.rpc
                |-- NettyRpcServer : RPC communication service based on Netty
                |-- RpcServerHandler : RPC service processing flow
            |-- cn.org.july.netty.dubbo.service
                |-- ServiceImpl : implementation class of the IService interface
        |-- netty-dubbo-client
            |-- cn.org.july.netty.dubbo.loadbalance
                |-- LoadBalance : load balancing interface
                |-- RandomLoadBalance : load balancing implementation that picks a service provider at random
            |-- cn.org.july.netty.dubbo.proxy
                |-- RpcClientProxy : Netty client communication component
                |-- RpcProxyHandler : Netty handler for messages exchanged with the server
            |-- cn.org.july.netty.dubbo.registry
                |-- IServiceDiscover : interface for obtaining registered services from the registry
                |-- ServiceDiscoverImpl : implementation class of IServiceDiscover
                |-- ZkConfig : ZK configuration file

3.3. Service Provider side

3.3.1. Implement the `IService` interface

First, let's look at the content of the IService interface:

    package cn.org.july.netty.dubbo.api;

    /**
     * @author july_whj
     */
    public interface IService {

        /**
         * Computes the sum of two integers.
         */
        int add(int a, int b);

        /**
         * @param msg the message to send
         */
        String sayHello(String msg);
    }

We write ServiceImpl to implement the two methods of the interface above.

    package cn.org.july.netty.dubbo.service;

    import cn.org.july.netty.dubbo.annotation.RpcAnnotation;
    import cn.org.july.netty.dubbo.api.IService;

    /**
     * @author july_whj
     */
    @RpcAnnotation(IService.class)
    public class ServiceImpl implements IService {

        @Override
        public int add(int a, int b) {
            return a + b;
        }

        @Override
        public String sayHello(String msg) {
            System.out.println("rpc say :" + msg);
            return "rpc say: " + msg;
        }
    }

The implementation of this class is relatively simple without much processing. The following analysis is about service registration.

3.3.2. Service registration to ZK

First, we define an interface, `IRegisterCenter`, which declares a `registry` method that performs service registration. Service registration needs to register the name and address of the service in the registry. We define the interface as follows:

    package cn.org.july.netty.dubbo.registry;

    /**
     * @author july_whj
     */
    public interface IRegisterCenter {

        /**
         * Service registration
         *
         * @param serverName     Service name (implementation method path)
         * @param serviceAddress Service address
         */
        void registry(String serverName, String serviceAddress);
    }

Second, we use ZooKeeper as the service registry. The ZK client library is introduced in the `netty-dubbo-server` module. The dependencies in the pom file are as follows:

    <dependency>
        <groupId>org.apache.curator</groupId>
        <artifactId>curator-recipes</artifactId>
        <version>2.5.0</version>
    </dependency>
    <dependency>
        <groupId>org.apache.curator</groupId>
        <artifactId>curator-framework</artifactId>
        <version>2.5.0</version>
    </dependency>

Note: the version used here is 2.5.0, and the zk version I use,

Third, implement the interface by writing the implementation class `RegisterCenterImpl`.

    package cn.org.july.netty.dubbo.registry;

    import org.apache.curator.framework.CuratorFramework;
    import org.apache.curator.framework.CuratorFrameworkFactory;
    import org.apache.curator.retry.ExponentialBackoffRetry;
    import org.apache.zookeeper.CreateMode;

    /**
     * @author july_whj
     */
    public class RegisterCenterImpl implements IRegisterCenter {

        private CuratorFramework curatorFramework;

        // Instance initializer: build the Curator client and connect to ZK.
        {
            curatorFramework = CuratorFrameworkFactory.builder()
                    .connectString(ZkConfig.addr).sessionTimeoutMs(4000)
                    .retryPolicy(new ExponentialBackoffRetry(1000, 10)).build();
            curatorFramework.start();
        }

        @Override
        public void registry(String serverName, String serviceAddress) {
            String serverPath = ZkConfig.ZK_REGISTER_PATH.concat("/").concat(serverName);
            try {
                // The service-name node is persistent and is created only if it is missing.
                if (curatorFramework.checkExists().forPath(serverPath) == null) {
                    curatorFramework.create().creatingParentsIfNeeded()
                            .withMode(CreateMode.PERSISTENT).forPath(serverPath, "0".getBytes());
                }
                // The address node is ephemeral: it disappears when this ZK session ends.
                String addr = serverPath.concat("/").concat(serviceAddress);
                String rsNode = curatorFramework.create().withMode(CreateMode.EPHEMERAL)
                        .forPath(addr, "0".getBytes());
                System.out.println("Service registration succeeded," + rsNode);
            } catch (Exception e) {
                e.printStackTrace();
            }
        }
    }

Let's analyze the above code:

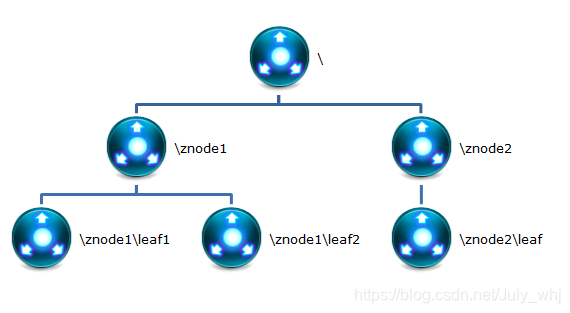

We define a `CuratorFramework` object, instantiate it in an initializer block, and call `curatorFramework.start();` to connect to the ZK address configured in ZkConfig. To use ZK as a registry, we need to understand its storage structure. The structure of the ZooKeeper namespace is very similar to that of a file system: a name is a sequence of path elements separated by a slash (/), and each node in ZooKeeper is uniquely identified by its path.

Now let's analyze the registry method. First, it obtains the root node for registered data from ZkConfig and joins it with the service name, then checks whether that path exists. If not, it creates the service-name path in PERSISTENT mode. We use a persistent node here because the service name does not change dynamically, so there is no need to watch it for changes. A service may have multiple addresses, and they may change, so we create the service's address nodes in EPHEMERAL mode.

To register `ServiceImpl` with ZK, we first obtain the service name and the address of the service implementation, and then register both with ZK. Let's take a look at `RegTest`, the test class for service registration.

    import cn.org.july.netty.dubbo.registry.IRegisterCenter;
    import cn.org.july.netty.dubbo.registry.RegisterCenterImpl;

    import java.io.IOException;

    public class RegTest {
        public static void main(String[] args) throws IOException {
            IRegisterCenter registerCenter = new RegisterCenterImpl();
            registerCenter.registry("cn.org.july.test", "127.0.0.1:9090");
            System.in.read();
        }
    }

This registers the `cn.org.july.test` service and its implementation address 127.0.0.1:9090 with ZK.

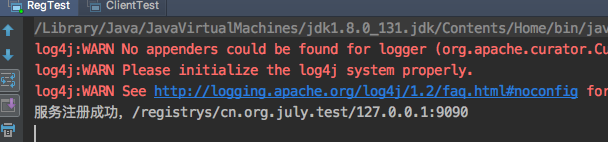

Looking at the execution result, the server reports that the registration succeeded. Checking the ZK server then shows that the data is present, so the registration was successful.

3.3.3 implementation of NettyRpcServer

We are going to publish the `ServiceImpl` service to ZK and listen on a port through Netty. Let's take a look at the implementation first:

    package cn.org.july.netty.dubbo.rpc;

    import cn.org.july.netty.dubbo.annotation.RpcAnnotation;
    import cn.org.july.netty.dubbo.registry.IRegisterCenter;
    import io.netty.bootstrap.ServerBootstrap;
    import io.netty.channel.*;
    import io.netty.channel.nio.NioEventLoopGroup;
    import io.netty.channel.socket.nio.NioServerSocketChannel;
    import io.netty.handler.codec.serialization.ClassResolvers;
    import io.netty.handler.codec.serialization.ObjectDecoder;
    import io.netty.handler.codec.serialization.ObjectEncoder;

    import java.util.HashMap;
    import java.util.Map;

    /**
     * @author july_whj
     */
    public class NettyRpcServer {

        private IRegisterCenter registerCenter;
        private String serviceAddress;
        private Map<String, Object> handlerMap = new HashMap<>(16);

        public NettyRpcServer(IRegisterCenter registerCenter, String serviceAddress) {
            this.registerCenter = registerCenter;
            this.serviceAddress = serviceAddress;
        }

        /**
         * Publishing services
         */
        public void publisher() {
            // Register every bound service with the registry.
            for (String serviceName : handlerMap.keySet()) {
                registerCenter.registry(serviceName, serviceAddress);
            }
            EventLoopGroup bossGroup = new NioEventLoopGroup();
            EventLoopGroup workerGroup = new NioEventLoopGroup();
            try {
                // Start the netty service
                ServerBootstrap bootstrap = new ServerBootstrap();
                bootstrap.group(bossGroup, workerGroup).channel(NioServerSocketChannel.class);
                bootstrap.childHandler(new ChannelInitializer<Channel>() {
                    @Override
                    protected void initChannel(Channel channel) throws Exception {
                        ChannelPipeline channelPipeline = channel.pipeline();
                        channelPipeline.addLast(new ObjectDecoder(1024 * 1024, ClassResolvers.weakCachingConcurrentResolver(this.getClass().getClassLoader())));
                        channelPipeline.addLast(new ObjectEncoder());
                        channelPipeline.addLast(new RpcServerHandler(handlerMap));
                    }
                }).option(ChannelOption.SO_BACKLOG, 128).childOption(ChannelOption.SO_KEEPALIVE, true);
                String[] addr = serviceAddress.split(":");
                String ip = addr[0];
                int port = Integer.parseInt(addr[1]);
                ChannelFuture future = bootstrap.bind(ip, port).sync();
                System.out.println("Service started successfully.");
                // Block until the server channel is closed.
                future.channel().closeFuture().sync();
            } catch (InterruptedException e) {
                e.printStackTrace();
            } finally {
                // Release the event loop threads when the server stops.
                bossGroup.shutdownGracefully();
                workerGroup.shutdownGracefully();
            }
        }

        /**
         * Binds service implementation objects.
         *
         * @param services Object implementation class
         */
        public void bind(Object... services) {
            // The service name is read from the annotation on each implementation class,
            // and the implementation object is put into the map under that name.
            for (Object service : services) {
                RpcAnnotation annotation = service.getClass().getAnnotation(RpcAnnotation.class);
                String serviceName = annotation.value().getName();
                handlerMap.put(serviceName, service);
            }
        }
    }

Let's analyze the code above:

The bind method reads the `RpcAnnotation` annotation of each service provider to obtain the service name, and puts the service name and the service implementation object into handlerMap. The publisher method iterates over the services in handlerMap and registers each one with the ZK registry through `registerCenter.registry(serviceName, serviceAddress)`, which completes service registration. The remaining code creates two Netty thread pools, starts the Netty service, and configures object serialization and the RpcServerHandler on the channelPipeline. We won't go into more detail here. Let's look at the implementation of `RpcServerHandler`.

    package cn.org.july.netty.dubbo.rpc;

    import cn.org.july.netty.dubbo.api.RpcRequest;
    import io.netty.channel.ChannelHandlerContext;
    import io.netty.channel.ChannelInboundHandlerAdapter;

    import java.lang.reflect.Method;
    import java.util.Map;

    public class RpcServerHandler extends ChannelInboundHandlerAdapter {

        private final Map<String, Object> handlerMap;

        public RpcServerHandler(Map<String, Object> handlerMap) {
            this.handlerMap = handlerMap;
        }

        @Override
        public void channelActive(ChannelHandlerContext ctx) {
            System.out.println("channelActive:" + ctx.channel().remoteAddress());
        }

        @Override
        public void channelRead(ChannelHandlerContext ctx, Object msg) throws Exception {
            System.out.println("The server received the message:" + msg);
            RpcRequest rpcRequest = (RpcRequest) msg;
            // Fallback reply when no implementation is registered. It must be Serializable
            // so that ObjectEncoder can write it (a plain new Object() is not).
            Object result = "No service registered for " + rpcRequest.getClassName();
            if (handlerMap.containsKey(rpcRequest.getClassName())) {
                // Look up the registered implementation and invoke the requested method via reflection.
                Object clazz = handlerMap.get(rpcRequest.getClassName());
                Method method = clazz.getClass().getMethod(rpcRequest.getMethodName(), rpcRequest.getTypes());
                result = method.invoke(clazz, rpcRequest.getParams());
            }
            ctx.write(result);
            ctx.flush();
            ctx.close();
        }
    }

Here the `channelRead` method is overridden to receive the `RpcRequest` object sent by the client. It then checks whether handlerMap contains the implementation class requested by the client. If so, it uses reflection to look up the method on the server-side implementation, calls it with `invoke`, and returns the `result` object to the client through `ctx.write(result);`.

3.3.4. Write the service startup class `ServerTest`

    import cn.org.july.netty.dubbo.api.IService;
    import cn.org.july.netty.dubbo.registry.IRegisterCenter;
    import cn.org.july.netty.dubbo.registry.RegisterCenterImpl;
    import cn.org.july.netty.dubbo.rpc.NettyRpcServer;
    import cn.org.july.netty.dubbo.service.ServiceImpl;

    /**
     * Created with IntelliJ IDEA.
     * User: wanghongjie
     * Date: 2019/5/3 - 23:03
     * <p>
     * Description:
     */
    public class ServerTest {
        public static void main(String[] args) {
            IService service = new ServiceImpl();
            IRegisterCenter registerCenter = new RegisterCenterImpl();
            NettyRpcServer rpcServer = new NettyRpcServer(registerCenter, "127.0.0.1:8080");
            rpcServer.bind(service);
            rpcServer.publisher();
        }
    }

Start the netty service, bind the service implementation class service to the handlerMap through the bind method, publish the service and service implementation address to zk through the publisher method, start the netty service, and listen to port 8080.

3.4. Implementing the service consumer

As service consumers, we must first connect to the ZK registry to obtain the address of the service implementation, and watch for changes so that we always have the latest address information. The service is then called through remote invocation. If there are multiple service implementations, the client needs load balancing to select a service address.

3.4.1 implementation of load balancing

Define the `LoadBalance` interface.

    package cn.org.july.netty.dubbo.loadbalance;

    import java.util.List;

    public interface LoadBalance {
        String select(List<String> repos);
    }

The interface declares a `select` method for choosing an address.

The LoadBalance interface is implemented by RandomLoadBalance which, as the name suggests, picks an address at random.

    package cn.org.july.netty.dubbo.loadbalance;

    import java.util.List;
    import java.util.Random;

    public class RandomLoadBalance implements LoadBalance {

        // One shared generator instead of a new Random per call.
        private final Random random = new Random();

        @Override
        public String select(List<String> repos) {
            int len = repos.size();
            if (len == 0) {
                throw new RuntimeException("No registered services were found.");
            }
            return repos.get(random.nextInt(len));
        }
    }

3.4.2 obtain the service registration information of the registration center

Define the `IServiceDiscover` interface, which declares a `discover` method for service discovery.

    package cn.org.july.netty.dubbo.registry;

    public interface IServiceDiscover {
        String discover(String serviceName);
    }

The IServiceDiscover interface is implemented through ServiceDiscoverImpl.

    package cn.org.july.netty.dubbo.registry;

    import cn.org.july.netty.dubbo.loadbalance.LoadBalance;
    import cn.org.july.netty.dubbo.loadbalance.RandomLoadBalance;
    import org.apache.curator.framework.CuratorFramework;
    import org.apache.curator.framework.CuratorFrameworkFactory;
    import org.apache.curator.framework.recipes.cache.PathChildrenCache;
    import org.apache.curator.framework.recipes.cache.PathChildrenCacheEvent;
    import org.apache.curator.framework.recipes.cache.PathChildrenCacheListener;
    import org.apache.curator.retry.ExponentialBackoffRetry;

    import java.util.ArrayList;
    import java.util.List;
    import java.util.Set;
    import java.util.concurrent.ConcurrentHashMap;

    /**
     * @author july_whj
     */
    public class ServiceDiscoverImpl implements IServiceDiscover {

        // Written by the Curator listener thread and read by callers, hence volatile.
        private volatile List<String> repos = new ArrayList<String>();
        // Paths that already have a watch, so discover() does not start a new cache on every call.
        private final Set<String> watchedPaths = ConcurrentHashMap.newKeySet();
        private CuratorFramework curatorFramework;

        public ServiceDiscoverImpl() {
            curatorFramework = CuratorFrameworkFactory.builder()
                    .connectString(ZkConfig.addr).sessionTimeoutMs(4000)
                    .retryPolicy(new ExponentialBackoffRetry(1000, 10))
                    .build();
            curatorFramework.start();
        }

        @Override
        public String discover(String serviceName) {
            String path = ZkConfig.ZK_REGISTER_PATH.concat("/").concat(serviceName);
            try {
                repos = curatorFramework.getChildren().forPath(path);
            } catch (Exception e) {
                e.printStackTrace();
            }
            if (watchedPaths.add(path)) {
                registerWatch(path);
            }
            LoadBalance loadBalance = new RandomLoadBalance();
            return loadBalance.select(repos);
        }

        /**
         * Listen for ZK node content refresh
         *
         * @param path the ZK path to watch
         */
        private void registerWatch(final String path) {
            PathChildrenCache childrenCache = new PathChildrenCache(curatorFramework, path, true);
            PathChildrenCacheListener childrenCacheListener = new PathChildrenCacheListener() {
                @Override
                public void childEvent(CuratorFramework curatorFramework, PathChildrenCacheEvent pathChildrenCacheEvent) throws Exception {
                    // Refresh the address list whenever a child node changes.
                    repos = curatorFramework.getChildren().forPath(path);
                }
            };
            childrenCache.getListenable().addListener(childrenCacheListener);
            try {
                childrenCache.start();
            } catch (Exception e) {
                e.printStackTrace();
            }
        }
    }

As with service registration, we define a `CuratorFramework` object and connect to ZK by calling `curatorFramework.start();`.

Once the connection succeeds, the addresses of the service are obtained by appending the service name to the root node registered in ZK.

The addresses obtained this way may already be out of date, so we need to watch the ZK node for changes. Calling the `registerWatch` method monitors the node's children and refreshes the address list when they change. Finally, `LoadBalance` randomly selects one address from the list to serve the call.
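
The random selection step can be sketched on its own. This is a minimal sketch, not the article's `RandomLoadBalance`: the class `RandomAddressSelector` and both of its methods are hypothetical names, and the `host:port` address format is assumed from the way the client code later splits the address.

```java
import java.util.Arrays;
import java.util.List;
import java.util.concurrent.ThreadLocalRandom;

// Hypothetical stand-in for a random load balancer; names are illustrative only.
final class RandomAddressSelector {

    // Returns one address chosen uniformly at random, or null when none are registered.
    static String select(List<String> addresses) {
        if (addresses == null || addresses.isEmpty()) {
            return null;
        }
        int index = ThreadLocalRandom.current().nextInt(addresses.size());
        return addresses.get(index);
    }

    // Splits "host:port" into its two parts; the format is an assumption of this sketch.
    static String[] splitHostPort(String address) {
        int colon = address.lastIndexOf(':');
        if (colon <= 0 || colon == address.length() - 1) {
            throw new IllegalArgumentException("Expected host:port but got: " + address);
        }
        return new String[] {address.substring(0, colon), address.substring(colon + 1)};
    }

    public static void main(String[] args) {
        List<String> repos = Arrays.asList("127.0.0.1:8080", "127.0.0.1:8081");
        String chosen = select(repos);
        String[] parts = splitHostPort(chosen);
        System.out.println("host=" + parts[0] + ", port=" + Integer.parseInt(parts[1]));
    }
}
```

Returning `null` for an empty list is one possible choice; a caller may prefer an exception so that a missing provider is reported before any connection attempt.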

3.4.3 client implements RPC remote calls through netty

Define the client implementation class RpcClientProxy

    package cn.org.july.netty.dubbo.proxy;

    import cn.org.july.netty.dubbo.api.RpcRequest;
    import cn.org.july.netty.dubbo.registry.IServiceDiscover;
    import io.netty.bootstrap.Bootstrap;
    import io.netty.channel.*;
    import io.netty.channel.nio.NioEventLoopGroup;
    import io.netty.channel.socket.SocketChannel;
    import io.netty.channel.socket.nio.NioSocketChannel;
    import io.netty.handler.codec.LengthFieldBasedFrameDecoder;
    import io.netty.handler.codec.LengthFieldPrepender;
    import io.netty.handler.codec.serialization.ClassResolvers;
    import io.netty.handler.codec.serialization.ObjectDecoder;
    import io.netty.handler.codec.serialization.ObjectEncoder;

    import java.lang.reflect.InvocationHandler;
    import java.lang.reflect.Method;
    import java.lang.reflect.Proxy;

    /**
     * Created with IntelliJ IDEA.
     * User: wanghongjie
     * Date: 2019/5/3 - 23:08
     * <p>
     * Description:
     */
    public class RpcClientProxy {

        private IServiceDiscover serviceDiscover;

        public RpcClientProxy(IServiceDiscover serviceDiscover) {
            this.serviceDiscover = serviceDiscover;
        }

        @SuppressWarnings("unchecked")
        public <T> T create(final Class<T> interfaceClass) {
            return (T) Proxy.newProxyInstance(interfaceClass.getClassLoader(),
                    new Class<?>[]{interfaceClass}, new InvocationHandler() {
                        // Encapsulate the RpcRequest object, then send it to the server through netty
                        @Override
                        public Object invoke(Object proxy, Method method, Object[] args) throws Throwable {
                            RpcRequest rpcRequest = new RpcRequest();
                            rpcRequest.setClassName(method.getDeclaringClass().getName());
                            rpcRequest.setMethodName(method.getName());
                            rpcRequest.setTypes(method.getParameterTypes());
                            rpcRequest.setParams(args);
                            // Service discovery, zk communication
                            String serviceName = interfaceClass.getName();
                            // Get the address of the service implementation
                            String serviceAddress = serviceDiscover.discover(serviceName);
                            System.out.println("Server implementation address:" + serviceAddress);
                            // Resolve ip and port
                            String[] arrs = serviceAddress.split(":");
                            String host = arrs[0];
                            int port = Integer.parseInt(arrs[1]);
                            System.out.println("Service implementation ip:" + host);
                            System.out.println("Service implementation port:" + port);
                            final RpcProxyHandler rpcProxyHandler = new RpcProxyHandler();
                            // Connect and send data through netty
                            EventLoopGroup group = new NioEventLoopGroup();
                            try {
                                Bootstrap bootstrap = new Bootstrap();
                                bootstrap.group(group).channel(NioSocketChannel.class).option(ChannelOption.TCP_NODELAY, true)
                                        .handler(new ChannelInitializer<SocketChannel>() {
                                            @Override
                                            protected void initChannel(SocketChannel socketChannel) throws Exception {
                                                ChannelPipeline channelPipeline = socketChannel.pipeline();
                                                channelPipeline.addLast(new ObjectDecoder(1024 * 1024, ClassResolvers.weakCachingConcurrentResolver(this.getClass().getClassLoader())));
                                                channelPipeline.addLast(new ObjectEncoder());
                                                // Handler that receives the server's response
                                                channelPipeline.addLast(rpcProxyHandler);
                                            }
                                        });
                                ChannelFuture future = bootstrap.connect(host, port).sync();
                                // Write the encapsulated request object to the channel
                                future.channel().writeAndFlush(rpcRequest);
                                // Block until the channel is closed
                                future.channel().closeFuture().sync();
                            } catch (Exception e) {
                                // Do not swallow connection or I/O failures silently
                                e.printStackTrace();
                            } finally {
                                group.shutdownGracefully();
                            }
                            return rpcProxyHandler.getResponse();
                        }
                    });
        }
    }
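
The dynamic proxy step inside `create` can be tried without Netty or ZK. The following is a minimal sketch rather than the article's client: `GreetingService` and `ProxySketch` are hypothetical names, and instead of sending an `RpcRequest` the handler simply returns a text description of the call it intercepted.

```java
import java.lang.reflect.InvocationHandler;
import java.lang.reflect.Method;
import java.lang.reflect.Proxy;
import java.util.Arrays;

// Hypothetical service interface used only for this sketch.
interface GreetingService {
    String sayHello(String name);
}

final class ProxySketch {

    // Creates a proxy whose calls are described as text instead of being sent over the network.
    @SuppressWarnings("unchecked")
    static <T> T create(final Class<T> interfaceClass) {
        InvocationHandler handler = new InvocationHandler() {
            @Override
            public Object invoke(Object proxy, Method method, Object[] args) {
                // The same metadata that the article copies into RpcRequest.
                String className = method.getDeclaringClass().getName();
                String methodName = method.getName();
                String params = Arrays.toString(args);
                // A real client would send the request here and return the server's reply.
                return className + "#" + methodName + params;
            }
        };
        return (T) Proxy.newProxyInstance(
                interfaceClass.getClassLoader(), new Class<?>[] {interfaceClass}, handler);
    }

    public static void main(String[] args) {
        GreetingService service = create(GreetingService.class);
        // Prints the declaring interface, the method name and the arguments of the call.
        System.out.println(service.sayHello("netty"));
    }
}
```

Because every call on the proxy is routed through `invoke`, the caller only ever sees the interface; this is what lets the real client hide service discovery and the network round trip behind an ordinary method call.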

Let's look at the `create` method. It calls the dynamic proxy method `Proxy.newProxyInstance`, passing in the class loader obtained from the interface through `getClassLoader` together with the interface to be called, and implements the `invoke` method. Inside `invoke`, an `RpcRequest` object is defined to encapsulate the request parameters. The service name is obtained from the `interfaceClass` object, and the `discover` method is called to obtain the address of the service provider. Netty connects to the service with this address and sends the `RpcRequest` object to the server. The server parses the object, obtains the interface, the request parameters and other information, executes the method, and returns the result to the client, where the `RpcProxyHandler` object receives it. The `RpcProxyHandler` code is implemented as follows:

    package cn.org.july.netty.dubbo.proxy;

    import io.netty.channel.ChannelHandlerContext;
    import io.netty.channel.ChannelInboundHandlerAdapter;

    /**
     * Created with IntelliJ IDEA.
     * User: wanghongjie
     * Date: 2019/5/3 - 23:21
     * <p>
     * Description:
     */
    public class RpcProxyHandler extends ChannelInboundHandlerAdapter {

        private Object response;

        public Object getResponse() {
            return response;
        }

        @Override
        public void channelRead(ChannelHandlerContext ctx, Object msg) throws Exception {
            // Store the content returned by the server
            response = msg;
        }
    }

We override the `channelRead` method to obtain the result `msg` returned by the server, assign `msg` to `response`, and expose it through `getResponse`.
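
The handler stores the reply in a plain field, so the caller depends on the channel being closed before it reads `getResponse`. An alternative hand-off between the I/O thread and the calling thread can be sketched with a `CompletableFuture`. This is only a sketch under stated assumptions: `ResponseHolder` and its methods are hypothetical names, plain threads stand in for Netty's event loop, and in a real handler `complete` would be called from `channelRead`.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.TimeUnit;

// Hypothetical holder; in a Netty handler, complete() would be called from channelRead.
final class ResponseHolder {

    private final CompletableFuture<Object> response = new CompletableFuture<Object>();

    // Called by the I/O thread when the reply arrives.
    void complete(Object msg) {
        response.complete(msg);
    }

    // Called by the I/O thread when the call fails.
    void fail(Throwable cause) {
        response.completeExceptionally(cause);
    }

    // Called by the caller thread; blocks until a reply, a failure, or the timeout.
    Object await(long timeout, TimeUnit unit) throws Exception {
        return response.get(timeout, unit);
    }

    public static void main(String[] args) throws Exception {
        final ResponseHolder holder = new ResponseHolder();
        // A plain thread plays the role of the I/O thread in this sketch.
        Thread ioThread = new Thread(new Runnable() {
            @Override
            public void run() {
                holder.complete("Hello netty");
            }
        });
        ioThread.start();
        System.out.println(holder.await(1, TimeUnit.SECONDS));
    }
}
```

With a timeout on `await`, a server that never replies produces a `TimeoutException` on the caller side instead of blocking the call indefinitely.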

3.4.4 client test

    import cn.org.july.netty.dubbo.api.IService;
    import cn.org.july.netty.dubbo.proxy.RpcClientProxy;
    import cn.org.july.netty.dubbo.registry.IServiceDiscover;
    import cn.org.july.netty.dubbo.registry.ServiceDiscoverImpl;

    /**
     * Created with IntelliJ IDEA.
     * User: wanghongjie
     * Date: 2019/5/3 - 23:06
     * <p>
     * Description:
     */
    public class ClientTest {

        public static void main(String[] args) {
            IServiceDiscover serviceDiscover = new ServiceDiscoverImpl();
            RpcClientProxy rpcClientProxy = new RpcClientProxy(serviceDiscover);
            IService iService = rpcClientProxy.create(IService.class);
            System.out.println(iService.sayHello("netty-to-dubbo"));
            System.out.println(iService.sayHello("Hello"));
            System.out.println(iService.sayHello("It's a success. I'm glad"));
            System.out.println(iService.add(10, 4));
        }
    }

Let's look at the result of running it.

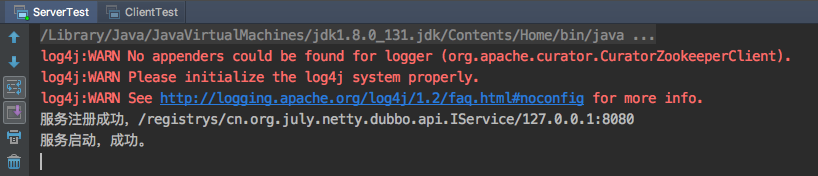

Server startup:

Server start

Client call:

Client call

Remote call completed.